- Casey

FiberMall

Answered on 6:59 am

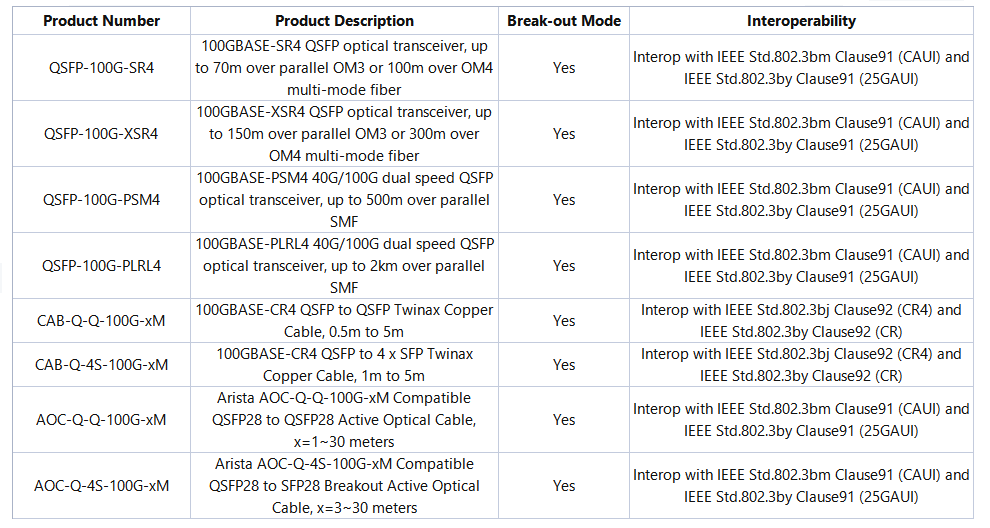

Several Arista 100G transceivers and cables support breakout mode, in which a single 100G port is split into four independent 25G channels. These include:

- QSFP-100G-SR4: 100GBASE-SR4 QSFP optical transceiver, up to 70m over parallel OM3 or 100m over OM4 multi-mode fiber

- QSFP-100G-XSR4: 100GBASE-XSR4 QSFP optical transceiver, up to 150m over parallel OM3 or 300m over OM4 multi-mode fiber

- QSFP-100G-PSM4: 100GBASE-PSM4 40G/100G dual speed QSFP optical transceiver, up to 500m over parallel SMF

- QSFP-100G-PLRL4: 100GBASE-PLRL4 40G/100G dual speed QSFP optical transceiver, up to 2km over parallel SMF

- CAB-Q-Q-100G-xM: 100GBASE-CR4 QSFP to QSFP Twinax Copper Cable, 0.5m to 5m

- CAB-Q-4S-100G-xM: 100GBASE-CR4 QSFP to 4 x 25GbE SFP Twinax Copper Cable, 1m to 5m

- AOC-Q-Q-100G-xM: 100GbE QSFP to QSFP Active Optical Cable, 1m to 30m

- AOC-Q-4S-100G-xM: Arista-compatible QSFP28 to 4 x 25G SFP28 Breakout Active Optical Cable, 3m to 30m
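To make the reach figures and the 4 x 25G breakout arithmetic concrete, here is a minimal Python sketch. It copies the reach and length ranges from the list above into a small table, filters parts by media type and link length, and splits one 100G port into four 25G lanes. The media labels, the `BreakoutPart`, `candidates`, and `breakout_interfaces` names, and the `Ethernet<port>/<lane>` interface naming (modeled on Arista EOS conventions) are assumptions made for illustration. The sketch does not encode the interoperability table and is no substitute for the vendor's compatibility matrix.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class BreakoutPart:
    name: str            # part number as listed ("xM" = cable length placeholder)
    media: str           # hypothetical label: "OM3", "OM4", "SMF", "DAC" or "AOC"
    max_reach_m: float   # maximum rated reach / cable length from the list
    min_reach_m: float = 0.0  # shortest available cable length (0 for optics)

# Figures copied from the list above; SR4 and XSR4 get one row per fiber grade.
CATALOG = [
    BreakoutPart("QSFP-100G-SR4", "OM3", 70),
    BreakoutPart("QSFP-100G-SR4", "OM4", 100),
    BreakoutPart("QSFP-100G-XSR4", "OM3", 150),
    BreakoutPart("QSFP-100G-XSR4", "OM4", 300),
    BreakoutPart("QSFP-100G-PSM4", "SMF", 500),
    BreakoutPart("QSFP-100G-PLRL4", "SMF", 2000),
    BreakoutPart("CAB-Q-Q-100G-xM", "DAC", 5, 0.5),
    BreakoutPart("CAB-Q-4S-100G-xM", "DAC", 5, 1),
    BreakoutPart("AOC-Q-Q-100G-xM", "AOC", 30, 1),
    BreakoutPart("AOC-Q-4S-100G-xM", "AOC", 30, 3),
]

def candidates(media: str, distance_m: float) -> list[str]:
    """Return part names whose rated range on `media` covers `distance_m`."""
    return [
        p.name
        for p in CATALOG
        if p.media == media and p.min_reach_m <= distance_m <= p.max_reach_m
    ]

LANES = 4            # breakout mode: four channels per 100G port
LANE_RATE_GBPS = 25  # each channel runs at 25G

def breakout_interfaces(port: int) -> list[tuple[str, int]]:
    """Split one 100G port into four 25G lanes.

    The "Ethernet<port>/<lane>" naming is an assumption modeled on
    Arista EOS; check your platform's documentation.
    """
    return [(f"Ethernet{port}/{lane}", LANE_RATE_GBPS) for lane in range(1, LANES + 1)]
```

For example, `candidates("OM4", 120)` returns only `QSFP-100G-XSR4`, because 120 m exceeds the 100 m OM4 reach of `QSFP-100G-SR4`, and the four lanes from `breakout_interfaces` add up to the original 100G.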

These transceivers and cables interoperate with the relevant industry standards when used in breakout mode, as shown in the table below:
