Although OpenAI’s o1 introduced reinforcement learning (RL) as a paradigm for reasoning models, it did not reach a mainstream audience, for various reasons. DeepSeek R1 solved the RL puzzle and pushed the entire industry into a new paradigm, truly entering the second half of the race for intelligence. The market has already discussed at length how to characterize DeepSeek. The next discussion worth having is how the AI race should be played from here.

Has DeepSeek surpassed OpenAI?

There is no doubt that DeepSeek has surpassed Meta’s Llama, but it is still far behind first-tier players such as OpenAI, Anthropic, and Google. For example, Gemini 2.0 Flash is powerful, fully multimodal, and costs less than DeepSeek. The outside world underestimates the capabilities of the first-tier players that Gemini 2.0 represents; they simply have not been open-sourced, so they have not caused the same sensation.

DeepSeek is exciting, but it cannot be called a paradigm-level innovation. A more accurate description is that it open-sourced the paradigm that OpenAI’s o1 had kept half-hidden, driving its adoption across the entire ecosystem to a very high level.

From first principles, it is difficult to surpass the first-tier model makers within the current generation of Transformer architectures, and it is equally difficult to overtake them on the same path. Today, we look forward to someone exploring the next generation of intelligence architectures and paradigms.

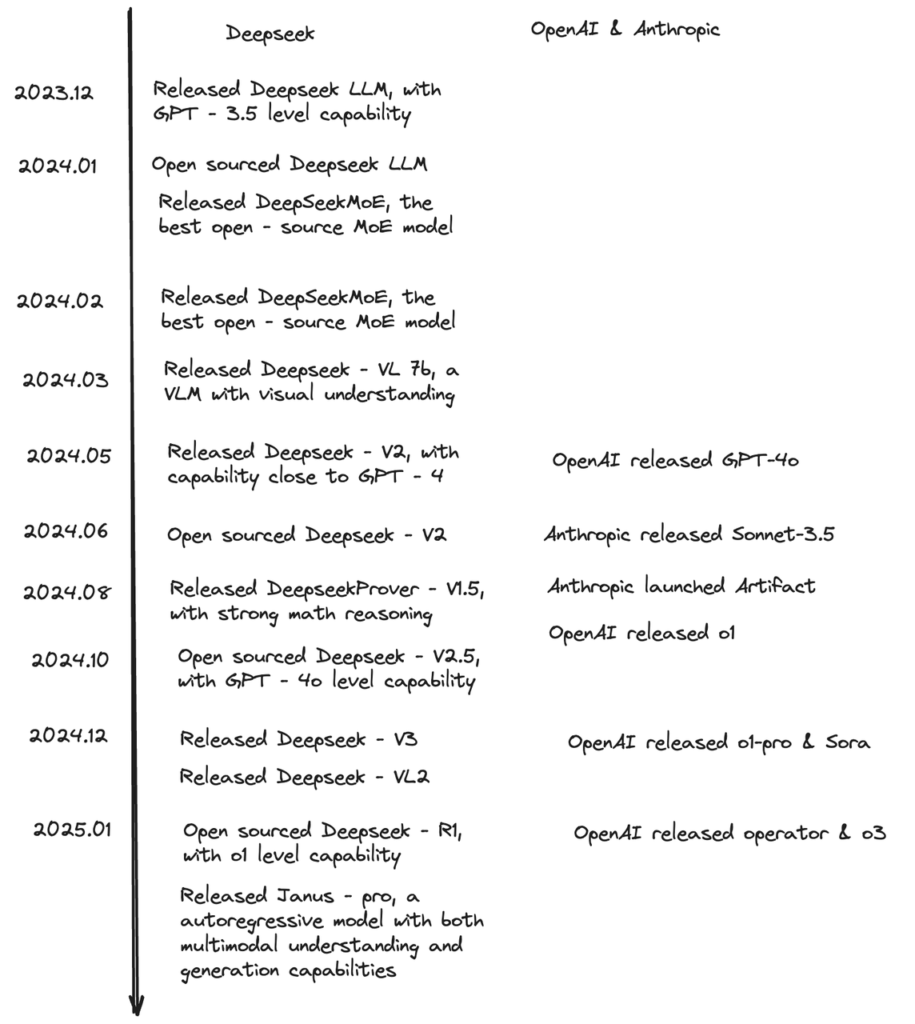

DeepSeek caught up with OpenAI and Anthropic in one year

Does DeepSeek open a new paradigm?

As mentioned earlier, strictly speaking, DeepSeek did not invent a new paradigm.

But DeepSeek’s significance lies in helping the new paradigm of RL and test-time compute gain wider adoption. If OpenAI’s initial release of o1 posed a riddle to the industry, DeepSeek was the first to solve it publicly.

Before DeepSeek released R1 and R1-Zero, only a small number of people in the industry were working on RL and reasoning models. DeepSeek gave everyone a roadmap and convinced the industry that this approach really can improve intelligence. This has greatly boosted confidence and drawn more AI researchers toward research on the new paradigm.

Algorithmic innovation happens only when talented people enter the field, and more computing resources are invested only when open source is in close pursuit. After DeepSeek, OpenAI, which had not originally planned to release new models, released o3-mini, plans to follow with o3, and is also considering open-source models. Anthropic and Google will also accelerate their RL research. DeepSeek has sped up the industry’s move to the new paradigm, and small and medium-sized teams can now try RL in different domains as well.

In addition, better reasoning models will make agents easier to put into practice. AI researchers are now more confident about researching and exploring agents. It can therefore also be said that DeepSeek’s open-source reasoning model has pushed the industry to explore agents further.

So although DeepSeek did not invent a new paradigm, it pushed the entire industry into a new paradigm.

How does Anthropic’s technology approach differ from R1?

From Dario’s interview, we can see that Anthropic’s understanding of R1 and reasoning models differs somewhat from the thinking behind OpenAI’s o-series. Dario thinks that the base model and the reasoning model should form a continuous spectrum rather than a separate model series, as at OpenAI. If you only build an o-series, you will soon hit the ceiling.

I have always wondered why Sonnet 3.5’s coding, reasoning, and agentic capabilities improved so much all of a sudden, but 4o has never caught up?

Anthropic did a lot of RL work at the pre-training, base-model stage. The core idea is to improve the base model; otherwise, relying solely on RL to improve the reasoning model may quickly exhaust all the available gains.

The sensation DeepSeek caused was inevitable, but also accidental

From a technical perspective, DeepSeek has the following highlights:

- Open source: Open source matters a great deal. After OpenAI went closed-source starting with GPT-3, the top three companies stopped disclosing technical details, leaving the open-source niche vacant. Meta and Mistral did not manage to fill it. DeepSeek’s surprise move met little resistance in the open-source field.

If we give the sensation 100 points, 30 go to the improvement in intelligence and 70 to open source. Llama was also open source but never caused such a sensation, which shows that Llama’s level of intelligence was not enough.

- Cheap: “Your margin is my opportunity” rings truer than ever.

- Web search + public CoT: Each of these gives users a better experience. DeepSeek played both cards at once, which amounts to a winning hand. The experience it gives consumer users is completely different from that of other chatbots. In particular, CoT transparency makes the model’s thinking process public. Transparency makes users trust AI more and helps it reach a mainstream audience. DeepSeek’s emergence should have hit Perplexity hard, but DeepSeek’s servers were unstable, and the Perplexity team responded quickly by offering R1 itself, which in turn absorbed a large share of DeepSeek R1’s overflow users.

- RL generalization: Although OpenAI’s o1 was the first to bring RL-trained reasoning to the public, adoption stayed low because OpenAI kept much of its approach half-hidden. DeepSeek R1 has greatly advanced the reasoning-model paradigm and greatly improved its acceptance across the ecosystem.

DeepSeek’s investment in technological exploration is the inevitable part: it is what makes this achievement worthy of more attention and discussion. The timing of the DeepSeek R1 launch is what makes the sensation accidental:

In the past, the United States has always claimed to be far ahead in basic technology research, but DeepSeek is homegrown in China, which is a highlight in itself. Along the way, many American technology giants began promoting the argument that DeepSeek challenges the position of the United States as the technology leader. DeepSeek was passively drawn into a war of public opinion;

Before the release of DeepSeek R1, the OpenAI Stargate announcement had just begun to draw attention. The contrast between that huge investment and the efficiency with which the DeepSeek team produced intelligence was too stark not to attract attention and discussion;

DeepSeek sent Nvidia’s stock price plummeting, which further inflamed public discussion. The team certainly did not expect to become the first black swan in the US stock market in 2025;

The Spring Festival is a proving ground for products. In the mobile Internet era, many super apps took off during the Spring Festival, and the AI era is no exception. DeepSeek R1 was released just before the Spring Festival. What surprised the public was its text creation ability, rather than the coding and math skills emphasized during training. Cultural and creative content is more relatable and therefore more likely to go viral.

Who is hurt? Who benefits?

The players in this arena can be divided into three categories: ToC, To Developer and To Enterprise (to Government):

- ToC: Chatbots are definitely hit hardest, with DeepSeek taking away mindshare and brand attention, and ChatGPT is no exception;

- To Developer: The impact on developers is very limited. Some users have commented after trying R1 that it is not as good as Sonnet, and Cursor’s team has also said that Sonnet still performs better. As a result, a high proportion of users still choose Sonnet, and there has been no large-scale migration.

- To Enterprise and To Government: This business is built on trust and an understanding of customer needs. Large organizations weigh very complex interests when making decisions, so they do not migrate as easily as consumer users.

Let’s think about this from another perspective: closed source, open source, and computing power:

In the short term, people will think that closed-source OpenAI/Anthropic/Google will be more impacted:

- The technology’s mystique has been open-sourced away, and the mystique premium, the most important ingredient of the AI hype, has been punctured;

- More realistically, the market believes that some of these closed-source companies’ potential customers and market size have been taken away, and that the payback period on GPU investment has lengthened;

- As the leader, OpenAI suffers the most. Its earlier hope of keeping its technology secret and closed in order to earn a larger technology premium is unlikely to come true.

But in the medium and long term, companies with abundant GPU resources will still benefit. On the one hand, a second-tier player such as Meta can quickly adopt the new methods and make its Capex more efficient, so Meta may be a big beneficiary. On the other hand, improving intelligence requires more exploration. DeepSeek’s open-sourcing has brought everyone to the same level, and pushing into new territory requires 10 times the GPU investment or even more.

From first principles, the AI industry, whether it is developing intelligence or applying it, is physically bound to consume massive amounts of computing power. This is determined by basic laws and cannot be avoided entirely through technical optimization.

Therefore, whether for exploring intelligence or applying it, demand for computing power will explode in the medium and long term, even if there are doubts in the short term. This also explains why Musk, reasoning from first principles, has xAI keep expanding its cluster. The deeper logic behind xAI and Stargate may be the same. Amazon and other cloud vendors have announced plans to raise their Capex guidance.

Suppose that talent and understanding in AI research are on a par around the world. Will more GPUs then allow more experimental exploration? In the end, it may come back to a competition in compute.

DeepSeek has no commercial ambitions and focuses on exploring AGI. Its open-source move is of great significance for advancing AGI: it intensifies competition and promotes openness, and to some extent it has a catfish effect, stirring the whole field into action.

Can Distillation surpass SOTA?

One detail remains uncertain. If DeepSeek used a large amount of distilled CoT data in the pre-training stage, today’s results would not be as amazing, because they would still rest on the basic intelligence obtained by the first-tier giants, which was then open-sourced. But if the pre-training stage did not use a large amount of distilled data, it would be amazing for DeepSeek to have achieved today’s results by pre-training from scratch.

In addition, distillation is unlikely to surpass SOTA at the base-model level. But DeepSeek R1 is very strong. My guess is that the reward model does a very good job. If the R1-Zero path proves reliable, it has a chance of surpassing SOTA.

No Moat!

Google once said of OpenAI: no moat! The phrase applies here as well.

The mass migration of chatbot users has taught the market an important lesson: intelligence is progressing faster than people imagine, and a product tied to one stage of that progress can hardly form an absolute barrier.

Whether it is ChatGPT, Sonnet, or Perplexity, which have only just built mindshare and reputation, or developer tools such as Cursor and Windsurf, users show no loyalty to the “previous generation” of smart products once smarter ones are available. Today, it is difficult to build a moat at either the model layer or the application layer.

DeepSeek has also verified one thing this time: the model is the application. DeepSeek made no innovation in product form; its core is intelligence plus open source. I can’t help but wonder: in the AI era, does every innovation in products and business models matter less than innovation in intelligence?

Should DeepSeek take on the chatbot traffic and build on it?

It is clear from the DeepSeek team’s response that DeepSeek has not yet figured out how to use this wave of traffic.

The essence of the question of whether or not to accept and actively operate this traffic is: can a great commercial company and a great research lab co-exist in the same organization?

This is a major test of how energy and resources are allocated, of organizational capability, and of strategic choice. A large company such as ByteDance or Meta would instinctively take the traffic on, and would have the organizational foundation to do so. As a research lab, however, DeepSeek must be under great pressure handling this much traffic.

But at the same time, we should also ask: is this wave of chatbot traffic only temporary? Is the chatbot part of the main line of future intelligence exploration? It seems that each stage of intelligence has a corresponding product form, and the chatbot is just one of the early forms to be unlocked.

For DeepSeek, looking three to five years ahead, would declining to take on the chatbot traffic today turn out to be a missed opportunity? What if a scale effect emerges one day? And if AGI is finally realized, what product form will carry it?

Where will the next Aha moment of AI breakthrough come from?

On the one hand, the next-generation models of the first tier are critical, but today we are at the limit of the Transformer, and it is uncertain whether the first tier can produce a model that delivers a generational improvement. If OpenAI, Anthropic, and Google respond with models that are only 30-50% better, that may not be enough to save the situation, given that the resource gap between the two sides is 10-30 times.

On the other hand, putting agents into practice is critical, because agents have to perform long-horizon, multi-step reasoning. If a model is 5-10% better, its lead will be magnified many times over. OpenAI, Anthropic, and Google must therefore, on the one hand, ship agent products as a full-stack integration of model plus agent product, much like Windows plus Office. On the other hand, they must also show more powerful models, such as the next-generation models represented by the full version of o3 and Sonnet 4 / 3.5 Opus.
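
The claim that a small per-step edge is magnified over a multi-step agent task can be illustrated with a rough sketch. It assumes, purely for illustration, that a task is a chain of independent steps that must all succeed, so the task-level success rate is the per-step rate raised to the number of steps. The function names and the numbers (0.90 versus 0.95 over 20 steps) are hypothetical and do not come from any benchmark.

```python
def task_success_rate(step_success: float, num_steps: int) -> float:
    """Chance that every step succeeds, assuming independent steps.

    Illustrative assumption: real agent steps are correlated and
    agents can sometimes recover from a failed step.
    """
    if not 0.0 <= step_success <= 1.0:
        raise ValueError("step_success must be between 0 and 1")
    if num_steps < 0:
        raise ValueError("num_steps must be non-negative")
    return step_success ** num_steps


def advantage_ratio(base: float, improved: float, num_steps: int) -> float:
    """How many times more often the improved model completes the whole task."""
    return task_success_rate(improved, num_steps) / task_success_rate(base, num_steps)


if __name__ == "__main__":
    # Hypothetical per-step reliabilities: the second is about 5.6% better.
    base, improved = 0.90, 0.95
    for steps in (1, 5, 10, 20):
        print(
            f"{steps:>2} steps: "
            f"base={task_success_rate(base, steps):.3f} "
            f"improved={task_success_rate(improved, steps):.3f} "
            f"ratio={advantage_ratio(base, improved, steps):.2f}x"
        )
```

Under these assumptions, a model that is about 5.6% more reliable per step completes a 20-step task roughly three times as often (about 36% versus 12%). The independence assumption is a simplification, so the sketch shows only the direction of the effect, not its real size.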

Amid technological uncertainty, talented AI researchers are the most valuable asset. Any organization that wants to explore AGI must commit resources to a bolder bet on the next paradigm, especially now that models have reached an equilibrium in the pre-training stage. Exploring the next Aha moment of emergent intelligence takes both strong talent and ample resources.

Finally, I hope technology has no borders.