InfiniBand, the native network technology for RDMA (Remote Direct Memory Access), is favored by many customers. RoCE (RDMA over Converged Ethernet) also supports the RDMA protocol, running it over lossless Ethernet. So what unique advantages does InfiniBand offer compared with RoCE?

The “original believers” of traditional SDN: Make the network efficient and simple

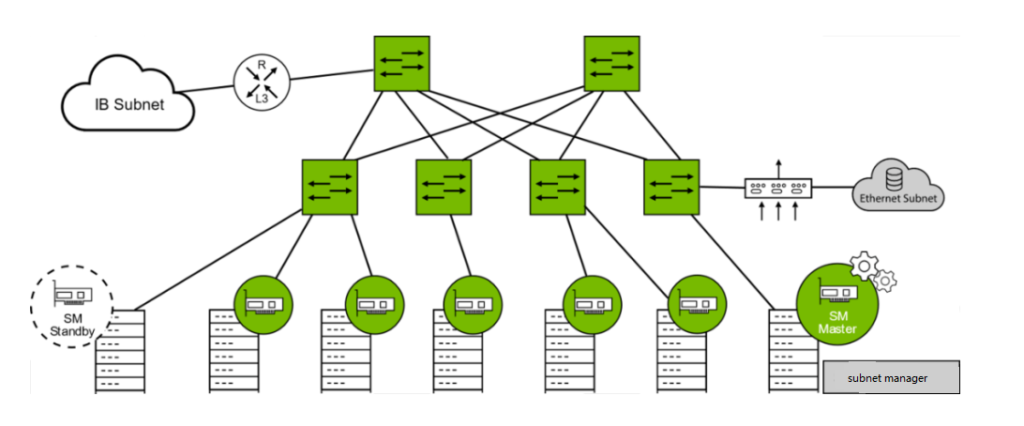

InfiniBand is the first network architecture designed natively around SDN principles. Each subnet is managed by a subnet manager, which acts as the SDN controller. Unlike traditional Ethernet (including RoCE lossless Ethernet), InfiniBand switches do not run any routing protocols: the forwarding tables for the entire network are calculated and distributed by the centralized subnet manager. Beyond forwarding tables, the subnet manager also manages configuration within the InfiniBand subnet, such as partitioning (zoning) and QoS. Because an InfiniBand network does not rely on broadcast mechanisms such as ARP to learn its forwarding tables, it avoids broadcast storms and the bandwidth they waste.
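To make "centrally computed forwarding tables" concrete, here is a minimal Python sketch of what a subnet manager does conceptually: assign a LID to every node, then compute for each switch a linear forwarding table that maps every destination LID to an output port. The topology, the node names, the "S"/"H" naming convention, and the breadth-first (minimum-hop) path choice are all illustrative assumptions; real subnet managers such as OpenSM offer several routing engines and program switches through subnet management packets, none of which is modeled here.

```python
from collections import deque

# Hypothetical toy fabric: node -> {local port number: neighbour node}.
# By convention in this sketch, "S*" nodes are switches and "H*" are hosts.
TOPOLOGY = {
    "S1": {1: "H1", 2: "S2", 3: "S3"},
    "S2": {1: "H2", 2: "S1", 3: "S3"},
    "S3": {1: "H3", 2: "S1", 3: "S2"},
    "H1": {1: "S1"},
    "H2": {1: "S2"},
    "H3": {1: "S3"},
}


def is_switch(node):
    return node.startswith("S")


def assign_lids(topology):
    """Give every node a unicast LID, starting at 1 (LID 0 is reserved)."""
    return {node: lid for lid, node in enumerate(sorted(topology), start=1)}


def compute_lfts(topology, lids):
    """Return {switch: {destination LID: output port}} using min-hop BFS."""
    lfts = {}
    for switch in filter(is_switch, topology):
        first_port = {}            # node -> port on `switch` that leads to it
        queue = deque()
        for port, neighbour in topology[switch].items():
            if neighbour not in first_port:
                first_port[neighbour] = port
                queue.append(neighbour)
        seen = {switch, *first_port}
        while queue:
            node = queue.popleft()
            if not is_switch(node):
                continue           # hosts are endpoints, not transit hops
            for neighbour in topology[node].values():
                if neighbour not in seen:
                    seen.add(neighbour)
                    # Reached via `node`, so reuse the port that reaches `node`.
                    first_port[neighbour] = first_port[node]
                    queue.append(neighbour)
        lfts[switch] = {lids[node]: port for node, port in first_port.items()}
    return lfts


lids = assign_lids(TOPOLOGY)
lfts = compute_lfts(TOPOLOGY, lids)
print(lfts["S1"])
```

In this toy fabric, S1 sends traffic for H2 out of port 2 (towards S2) and traffic for H3 out of port 3, and no switch had to learn anything from its neighbours: every table was computed in one place.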

Traditional Ethernet (including RoCE lossless Ethernet) can also be deployed with an SDN controller. However, to avoid becoming makers of commodity "white-box" hardware, network vendors have moved away from the original OpenFlow concept of flow-table forwarding and adopted NETCONF + VXLAN + EVPN solutions instead. The SDN controller has become little more than an advanced network management system that distributes control policies, while forwarding still depends on learning between devices (MAC table learning, ARP table learning, routing table learning, and so on). As a result, RoCE lossless Ethernet loses the efficient, simple networking that InfiniBand offers.

InfiniBand’s efficient and simple networking

An everyday analogy illustrates the difference:

Think of the InfiniBand network as high-speed rail travel. The entire journey is managed and scheduled by a dispatcher (the subnet manager). Passengers (network traffic) do not need to learn or look up routes to their destinations; they simply board the train with the scheduled train number (the forwarding table). The whole journey is efficient and smooth, with no redundant announcements or last-minute route changes, so passengers travel quickly and reliably.

Driving yourself, by contrast, represents traditional Ethernet and RoCE lossless Ethernet. Even with a navigation system (the SDN controller), the driver (the network device) must still make real-time judgments and adjust direction based on road conditions (inter-device learning). Along the way, the driver may check the map repeatedly (broadcast mechanisms), wait at traffic lights (wasted bandwidth), or take detours to avoid congestion (complex network configuration), which makes the trip comparatively inefficient.

Proactive credit-based congestion avoidance: a natively lossless network

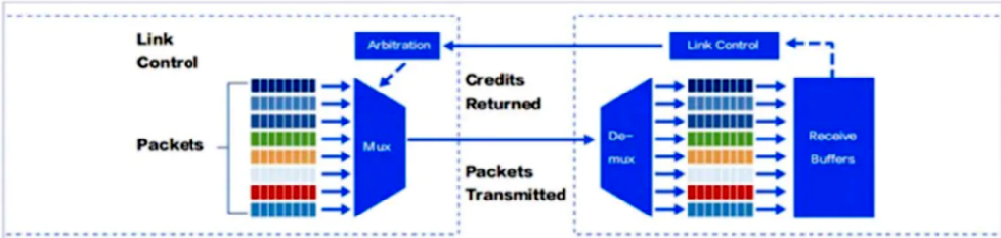

An InfiniBand network uses a credit-based mechanism to prevent buffer overflow and packet loss at the source: the sender transmits packets only after confirming that the receiver has enough credit to accept them.

The mechanism works as follows. Each receiving port on an InfiniBand link has a predetermined buffer for incoming packets, tracked separately for each virtual lane. Before transmitting, the sender checks the receiver's available credit; a credit can be understood as buffer space the receiver currently has free. The sender decides whether to transmit based on this credit value. If the receiver's credit is insufficient, the sender waits until the receiver frees buffer space and reports new credits.

Once the receiver has forwarded packets onward, it releases the buffers they occupied and keeps reporting its currently available buffer space to the sender. The sender therefore always knows the receiver's buffer state and can pace its transmissions accordingly. This link-level flow control ensures the sender never sends more than the receiver can hold, preventing buffer overflow and packet loss.
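The credit loop described above can be sketched as a small simulation. This is a toy model under simplifying assumptions: one credit stands for one packet-sized buffer slot (the real protocol counts fixed-size blocks of buffer space per virtual lane), credit updates reach the sender instantly, and the `Sender`, `Receiver`, and `simulate` names are hypothetical. The property it demonstrates is that the sender stalls rather than overrunning the receiver, so the buffer never overflows.

```python
from collections import deque


class Receiver:
    """Receive side of one link: a fixed-size buffer of packet slots."""

    def __init__(self, buffer_slots):
        self.capacity = buffer_slots
        self.buffer = deque()

    def accept(self, packet):
        # If the sender honours credits, this assertion can never fail.
        assert len(self.buffer) < self.capacity, "buffer overflow"
        self.buffer.append(packet)

    def forward(self, max_packets):
        """Forward up to max_packets downstream; return the slots freed."""
        freed = 0
        while self.buffer and freed < max_packets:
            self.buffer.popleft()
            freed += 1
        return freed


class Sender:
    """Transmit side: may only send while it holds credits."""

    def __init__(self, initial_credits):
        # Initial credits equal the receiver's free buffer space at link-up.
        self.credits = initial_credits

    def try_send(self, packet, receiver):
        if self.credits == 0:
            return False          # no credit: wait instead of sending
        self.credits -= 1
        receiver.accept(packet)
        return True

    def credit_update(self, freed):
        # The receiver reports newly freed buffer space as fresh credits.
        self.credits += freed


def simulate(num_packets, buffer_slots, drain_per_tick):
    """Return (delivered, ticks_with_sender_stalled, total_ticks)."""
    rx = Receiver(buffer_slots)
    tx = Sender(initial_credits=buffer_slots)
    pending = deque(range(num_packets))
    delivered = stalled = ticks = 0
    while pending or rx.buffer:
        ticks += 1
        # Send as many packets as the current credits allow.
        while pending and tx.try_send(pending[0], rx):
            pending.popleft()
        if pending:
            stalled += 1          # sender had data but no credit left
        freed = rx.forward(drain_per_tick)
        delivered += freed
        tx.credit_update(freed)
    return delivered, stalled, ticks


print(simulate(10, 4, 1))
```

Pushing 10 packets through a 4-slot buffer that drains one packet per tick, `simulate(10, 4, 1)` returns `(10, 6, 10)`: the sender is held back on six ticks, yet every packet is delivered and the overflow assertion never fires.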

The advantage of this credit-based approach is efficient, reliable flow control. Because transmission is continuously matched to the buffer space actually available, InfiniBand networks keep data flowing smoothly without congestion-induced packet loss or the performance degradation it causes. The mechanism also makes network behavior more predictable and stable, letting applications use network resources more efficiently.

In short, InfiniBand uses credit-based, link-level flow control to prevent buffer overflow and packet loss at the source, whereas RoCE lossless Ethernet uses a "post-event" (reactive) congestion management mechanism. RoCE does not negotiate resources with the receiver before sending; it simply forwards packets. Only when a receiving switch port's buffer becomes congested (or is about to) are congestion management messages sent through the PFC and ECN protocols, prompting the upstream switch and the sending network card to slow down or pause transmission. This reactive approach can mitigate congestion to a degree, but it cannot completely rule out packet loss and network instability.
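For contrast, the reactive behavior of PFC can be sketched with a toy queue model. Everything here is a hypothetical simplification: sizes and rates are abstract units per tick, the XOFF/XON thresholds and `reaction_delay` (how long a pause or resume frame takes to take effect upstream) are made-up parameters, and ECN is not modeled at all. The sketch shows why reactive pausing depends on headroom: data already in flight when the pause is triggered must fit in the space above the XOFF threshold, or it is dropped. Real RoCE deployments size PFC headroom to cover this, so the example illustrates the dependency rather than typical behavior.

```python
def pfc_simulate(capacity, xoff, xon, arrival_rate, drain_rate,
                 reaction_delay, ticks):
    """Toy model of reactive, PFC-style pause on one ingress queue.

    Returns (units_dropped, peak_occupancy).
    """
    occupancy = 0
    dropped = 0
    peak = 0
    upstream_paused = False   # pause state actually in effect at the sender
    pause_requested = False   # pause state the switch last signalled
    in_flight = []            # (tick the signal takes effect, paused?)

    for t in range(ticks):
        # Pause/resume frames only take effect after reaction_delay ticks.
        for effective_at, state in in_flight:
            if effective_at == t:
                upstream_paused = state
        in_flight = [s for s in in_flight if s[0] != t]

        # Arrivals: whatever does not fit in the buffer is dropped.
        if not upstream_paused:
            accepted = min(arrival_rate, capacity - occupancy)
            dropped += arrival_rate - accepted
            occupancy += accepted
        peak = max(peak, occupancy)

        # Egress drains the queue.
        occupancy = max(0, occupancy - drain_rate)

        # Reactive thresholds: signal only once the queue has already filled.
        if occupancy >= xoff and not pause_requested:
            pause_requested = True
            in_flight.append((t + reaction_delay, True))
        elif occupancy <= xon and pause_requested:
            pause_requested = False
            in_flight.append((t + reaction_delay, False))

    return dropped, peak


print(pfc_simulate(100, 80, 40, 10, 5, 2, 30))
print(pfc_simulate(100, 80, 40, 10, 5, 6, 30))
```

With 20 units of headroom (capacity 100, XOFF at 80), a 2-tick reaction delay is absorbed and the first call returns `(0, 90)`; with a 6-tick delay the in-flight data no longer fits and the second call returns `(10, 100)`.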

Schematic diagram of lossless data transmission in an InfiniBand network

Here is another analogy:

The credit-based mechanism of the InfiniBand network is like a restaurant that takes table reservations by phone. Before going out to eat, you call ahead to make sure a table is available, so you never arrive only to find there are no seats. This protects the dining experience and avoids wasted trips and frustration.

Queuing after arriving at the restaurant is like the "post-event" congestion management of RoCE lossless Ethernet: guests without a reservation can only wait and see. The restaurant may take steps to ease the crowding, but it still risks running out of tables and losing customers. Likewise, reactive congestion management can handle the situation to a degree, but it cannot completely prevent dissatisfaction and loss.

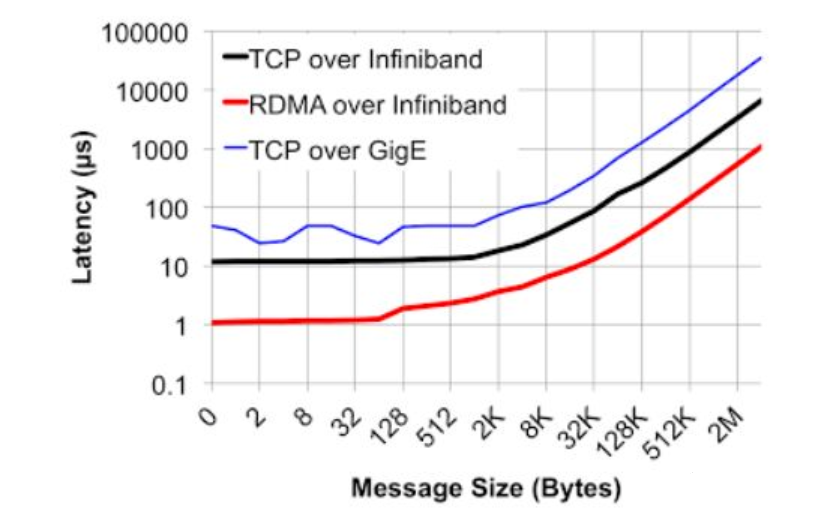

Cut-through forwarding mode: allowing the network to achieve lower latency

Ethernet (including RoCE lossless Ethernet) uses store-and-forward mode by default: the switch must receive the entire packet into its buffer and check its destination address and integrity before forwarding it. Because the switch cannot start transmitting until the last bit has arrived, this adds latency at every hop, and the delay grows with packet size and with the number of packets being processed.

Cut-through forwarding, by contrast, reads only the packet header: as soon as the switch has enough of the header to determine the destination port, it starts forwarding the packet. This significantly reduces the time packets spend inside the switch and therefore the transmission delay.
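Much of the difference between the two modes comes from how much of the packet must arrive before forwarding can begin. The arithmetic below is a simplified sketch: it counts only serialization time (bits on the wire divided by link rate) and ignores lookup, queuing, and pipeline delays. The 4096-byte packet, the 64-byte "header needed for a decision", and the 100 Gb/s link rate are illustrative assumptions, not figures from either technology's specification.

```python
def serialization_ns(num_bytes, link_gbps):
    """Time to clock num_bytes onto a link, in nanoseconds.

    1 Gbit/s is 1 bit per nanosecond, so bits / Gbit/s gives ns directly.
    """
    return num_bytes * 8 / link_gbps


def wait_before_forwarding_ns(packet_bytes, header_bytes, link_gbps, mode):
    """Serialization wait before a switch can start forwarding a packet."""
    if mode == "store-and-forward":
        # The whole packet must be buffered (and checked) first.
        return serialization_ns(packet_bytes, link_gbps)
    if mode == "cut-through":
        # Only the bytes needed for the forwarding decision.
        return serialization_ns(header_bytes, link_gbps)
    raise ValueError(f"unknown mode: {mode}")


for mode in ("store-and-forward", "cut-through"):
    wait = wait_before_forwarding_ns(4096, 64, 100, mode)
    print(f"{mode:>17}: {wait:.2f} ns per hop")
```

Under these assumptions the store-and-forward switch waits 327.68 ns per hop before it can start sending, versus 5.12 ns for cut-through, and the store-and-forward cost is paid again at every hop.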

InfiniBand switches use cut-through forwarding, which keeps message forwarding very simple: a switch needs only the 16-bit destination LID (Local Identifier, assigned by the subnet manager) to find the forwarding path, which brings forwarding delay below 100 nanoseconds. Ethernet switches typically use MAC table lookups and store-and-forward processing. Because they must also handle many complex services, such as IP, MPLS, and QinQ, their processing takes longer, possibly several microseconds or more. Even Ethernet switches that use cut-through forwarding may still have forwarding delays above 200 nanoseconds.
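Because the forwarding decision depends only on the destination LID, the lookup can be pictured as a single array index. The sketch below decodes the 8-byte Local Route Header (LRH), in which the 16-bit DLID occupies bytes 2 and 3, and indexes a linear forwarding table with it. It is a software illustration, not a model of switch hardware; the field positions follow the LRH layout (VL, LVer, SL, LNH, DLID, packet length, SLID) and are worth checking against the InfiniBand Architecture Specification, while the table contents, the `NO_ROUTE` marker, and the helper names are hypothetical.

```python
import struct

NO_ROUTE = 0xFF            # hypothetical "no route" marker for this sketch
MAX_UNICAST_LID = 0xBFFF   # unicast LIDs are 0x0001-0xBFFF


def parse_lrh(header: bytes) -> dict:
    """Decode the 8-byte InfiniBand Local Route Header (big-endian)."""
    if len(header) < 8:
        raise ValueError("the LRH is 8 bytes long")
    b0, b1, dlid, len_word, slid = struct.unpack(">BBHHH", header[:8])
    return {
        "vl": b0 >> 4,                 # virtual lane
        "lver": b0 & 0x0F,             # link version
        "sl": b1 >> 4,                 # service level
        "lnh": b1 & 0x03,              # link next header
        "dlid": dlid,                  # destination LID
        "pkt_len": len_word & 0x07FF,  # packet length in 4-byte words
        "slid": slid,                  # source LID
    }


def output_port(header: bytes, lft: list) -> int:
    """Forwarding decision: one table index on the destination LID."""
    dlid = parse_lrh(header)["dlid"]
    # Multicast and permissive LIDs are outside this sketch's scope.
    return lft[dlid] if dlid <= MAX_UNICAST_LID else NO_ROUTE


# Hypothetical table: every unicast LID unrouted except LID 5 -> port 3.
lft = [NO_ROUTE] * (MAX_UNICAST_LID + 1)
lft[5] = 3

# VL 0, SL 0, LNH 2, DLID 5, length 16 words, SLID 1.
header = bytes([0x00, 0x02, 0x00, 0x05, 0x00, 0x10, 0x00, 0x01])
print(parse_lrh(header)["dlid"], output_port(header, lft))
```

Only the first four bytes of the header matter for the decision here, which is why a cut-through switch can start forwarding almost as soon as the packet begins to arrive.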

Forwarding delay

Here is one more analogy:

Ethernet handles packets the way a post office handles fragile items: the postal worker must carefully receive the whole package and check that it is undamaged before sending it on to its destination. This inspection takes time, which adds delay.

InfiniBand switches handle packets more like ordinary mail: the postal worker glances at the address and sends the package on immediately, without waiting to inspect the whole thing. This is much faster and greatly reduces the time the package spends in the post office, cutting transmission delay.
