Introduction

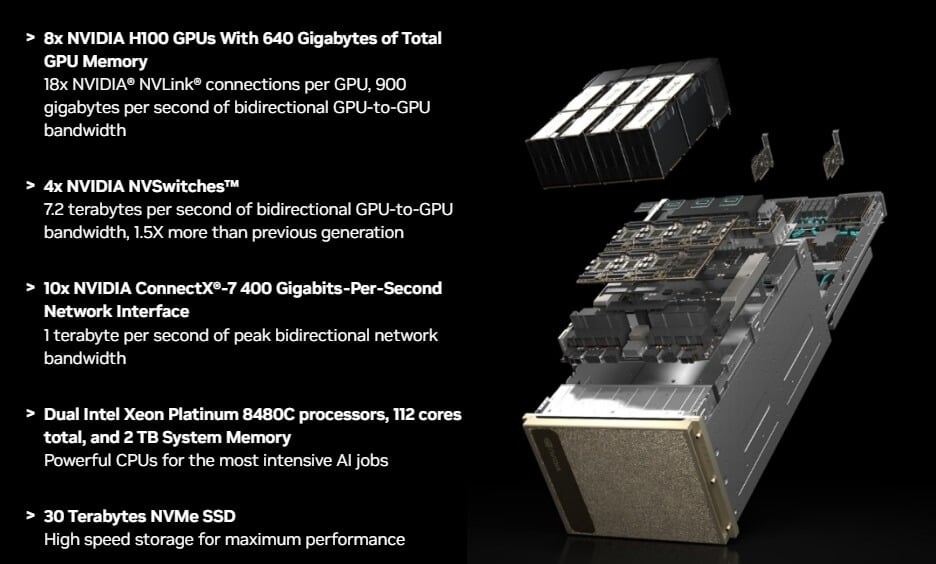

When working with NVIDIA’s H100 SXM servers, you will often see a configuration that includes two BlueField-3 DPUs (abbreviated BFD-3 here). This raises questions, since the system already comes with eight ConnectX-7 (CX-7) 400G network cards. What are the fundamental differences between BFD-3 and CX-7, and what role does each play? And why does the BFD-3 have its own BMC port when the server’s motherboard already includes one?

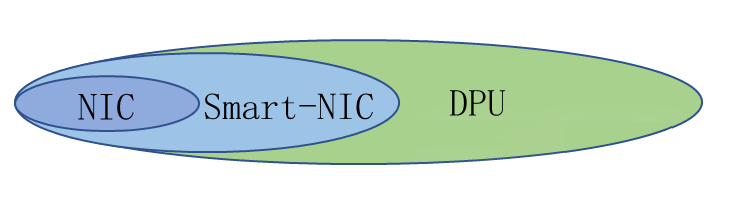

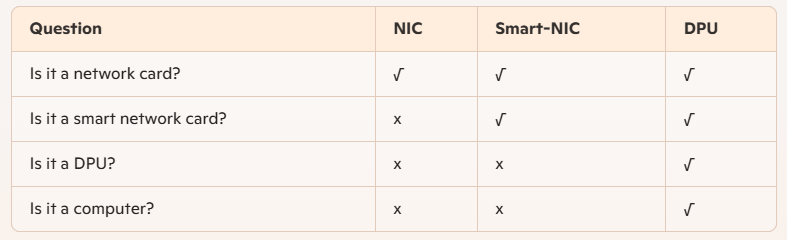

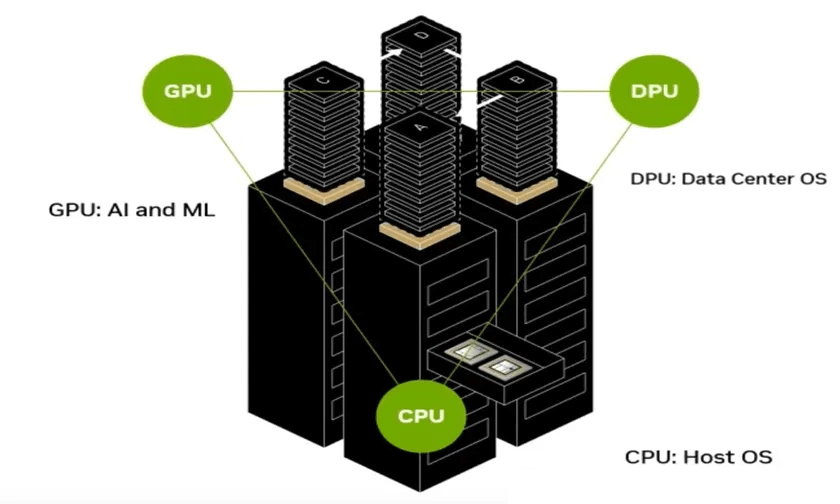

Logical Relationship Between NIC, Smart NIC, and DPU

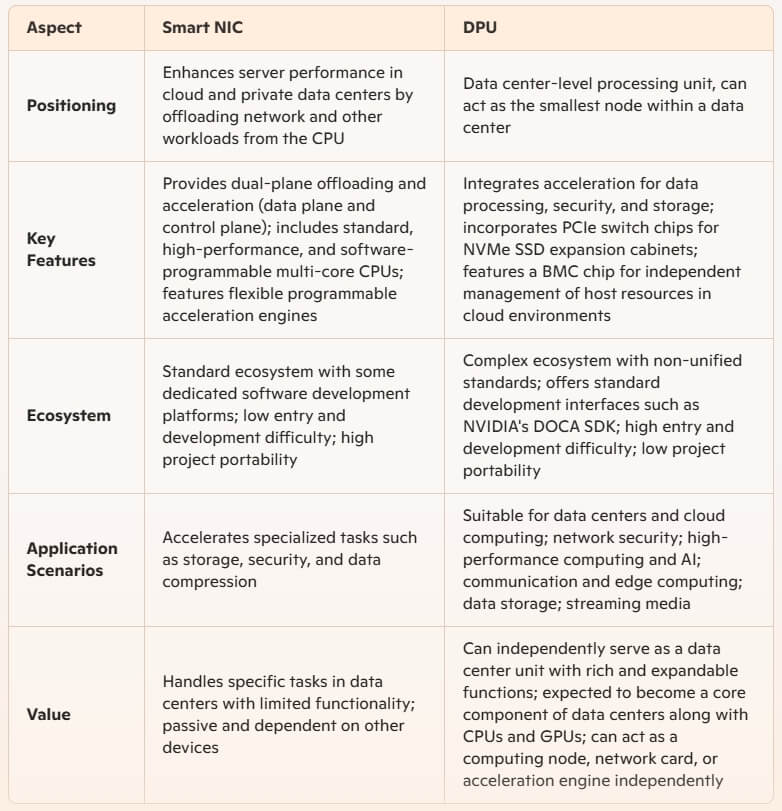

To understand their distinctions, let’s compare the following points (personal views for reference):

Reasons for the Emergence of Smart NICs and DPUs

Era of Traditional NICs

In traditional data centers, the CPU was the absolute core. However, as Moore’s Law slowed, growth in CPU computing power could no longer keep up with the explosion in data, and the CPU became a bottleneck. Offloading part of the CPU’s workload onto network adapters (network interface cards) became necessary, which drove the rapid development of smart NICs.

Era of Smart NICs (First Generation)

The first generation of smart NICs focused primarily on offloading data-plane tasks. Examples include OVS fast-path hardware offloading, RDMA hardware offloading based on RoCE v1 and v2, hardware offloading of lossless-network features (PFC, Priority Flow Control; ECN, Explicit Congestion Notification; ETS, Enhanced Transmission Selection), NVMe-oF hardware offloading in the storage domain, and data-plane offloading for secure transmission.

Era of DPU Smart NICs (Second Generation)

DPUs (Data Processing Units) emerged to address three main issues in data centers:

- Between Nodes: Low efficiency of server data exchange and unreliable data transmission.

- Within Nodes: Inefficient data center model execution, low I/O switch efficiency, and inflexible server architecture.

- Network Systems: Insecure networks.

Simplified Explanation: Why DPUs Are Superior to Smart NICs

NVIDIA defines a DPU-based SmartNIC as a network interface card that offloads tasks the system CPU would normally handle. Using its onboard processor, a DPU-based SmartNIC can perform any combination of encryption/decryption, firewall, TCP/IP, and HTTP processing. In short, where a first-generation smart NIC accelerates specific data-plane functions, a DPU also carries its own CPU, so it can run tasks such as network security independently of the host.
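The three device classes described above can be summarized in a small lookup model. This is only an illustrative sketch: the task names, the `Device` enum, and the `runs_on` helper are hypothetical labels made up for this example, and it assumes each generation keeps the offloads of the previous one. It also simplifies the traditional NIC to "no offload at all".

```python
from enum import Enum


class Device(Enum):
    NIC = "traditional NIC"
    SMART_NIC = "first-generation SmartNIC"
    DPU = "DPU-based SmartNIC"


# Data-plane offloads the article attributes to first-generation SmartNICs.
# The string labels are hypothetical names chosen for this sketch.
DATA_PLANE_OFFLOADS = frozenset({
    "ovs_fastpath",
    "rdma_roce",
    "lossless_pfc_ecn_ets",
    "nvme_of",
    "data_plane_crypto",
})

# Tasks the article says a DPU can run on its onboard processor.
ONBOARD_CPU_TASKS = frozenset({"encryption", "firewall", "tcp_ip", "http"})

# Assumption: a DPU is a superset of a first-generation SmartNIC.
# Simplification: the traditional NIC is modeled with no offloads.
CAPABILITIES = {
    Device.NIC: frozenset(),
    Device.SMART_NIC: DATA_PLANE_OFFLOADS,
    Device.DPU: DATA_PLANE_OFFLOADS | ONBOARD_CPU_TASKS,
}


def runs_on(task: str, device: Device) -> str:
    """Return where `task` executes in a server fitted with `device`."""
    if task in CAPABILITIES[device]:
        return device.value
    return "host CPU"


if __name__ == "__main__":
    for device in Device:
        print(f"firewall with {device.value}: runs on {runs_on('firewall', device)}")
```

In this model, a firewall stays on the host CPU with either a traditional NIC or a first-generation SmartNIC and moves off the host only once a DPU is present, which is the distinction this section draws.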

Overview of NVIDIA BlueField-3 DPU

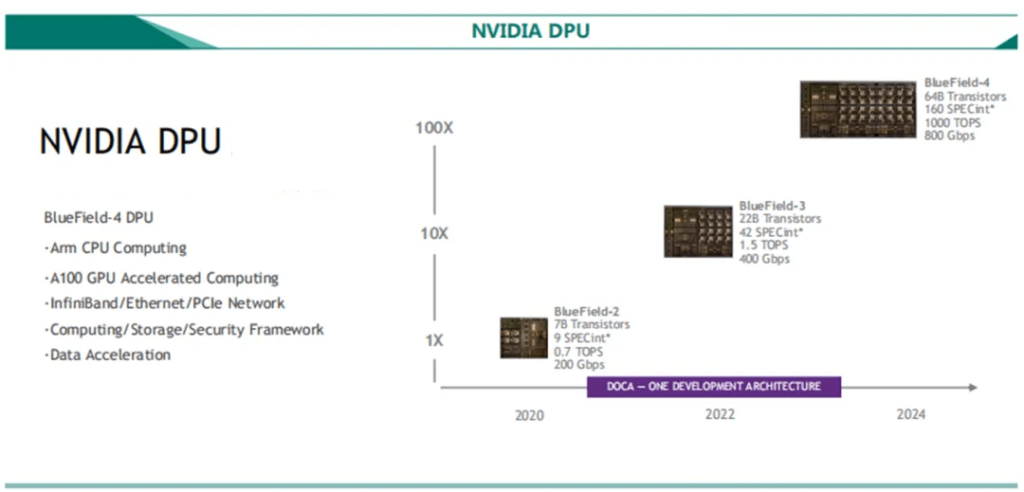

To address the shift in data center architecture driven by hyperscale cloud technology, NVIDIA introduced the BlueField DPU series. These new processors are designed specifically for data center infrastructure software, offloading and accelerating the massive computational workloads generated by virtualization, networking, storage, security, and other cloud-native AI services.

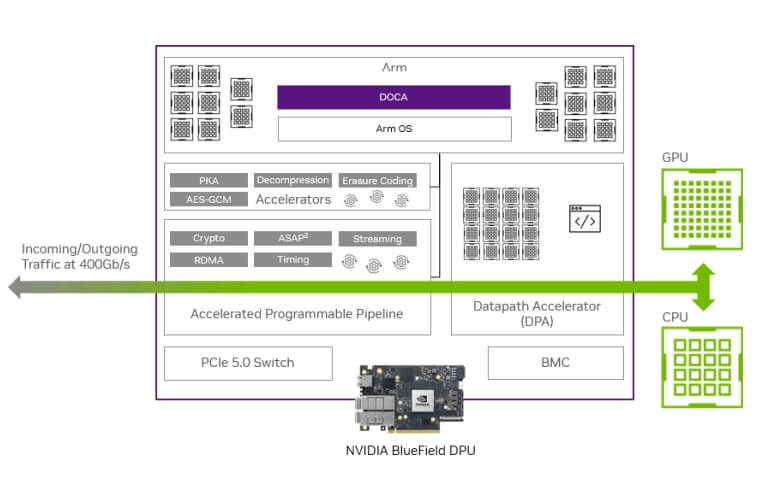

System Layout of NVIDIA BlueField-3 DPU

BlueField-3 functions as an “independent node” integrated into the server’s PCIe path:

- Arm cores + OS: The DPU runs its own operating system on onboard Arm cores, so it can take over various tasks originally handled by the host OS.

- Integrated Accelerators: Improve efficiency in data processing, security, and storage.

- PCIe Switch Chip: Can be used in NVMe SSD expansion cabinets.

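- BMC Chip: Allows the DPU to be managed independently of the host, so that in a cloud environment resources originally controlled by the host can be managed separately. This is why the DPU has its own BMC port in addition to the one on the motherboard.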

Recommended Use Cases for BlueField-3

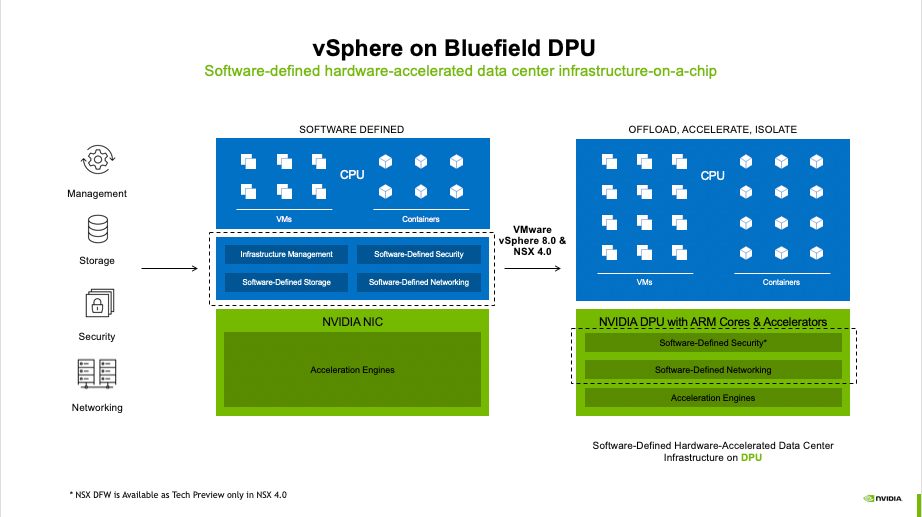

The NVIDIA® BlueField®-3 DPU is NVIDIA’s third-generation infrastructure computing platform, enabling enterprises to build software-defined, hardware-accelerated IT infrastructure from the cloud to core data centers and edge environments. With 400Gb/s Ethernet or NDR 400Gb/s InfiniBand connectivity, the BlueField-3 DPU can offload, accelerate, and isolate software-defined networking, storage, security, and management functions, improving data center performance, efficiency, and security.
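To put the 400Gb/s figure in perspective, the short calculation below converts the line rate to bytes per second and totals the eight CX-7 cards mentioned in the introduction. As a rough sanity check, it also compares one 400G port against the raw bandwidth of a PCIe x16 link. The PCIe part is an assumption added for illustration (the article does not state the link generation or width), and it ignores protocol overhead beyond 128b/130b line encoding.

```python
PORT_RATE_GBPS = 400   # per-port line rate quoted in the article
CX7_CARDS = 8          # number of CX-7 cards in the H100 SXM server


def gbps_to_gigabytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second."""
    return gbps / 8


def pcie_raw_gbps(gigatransfers_per_lane: float, lanes: int) -> float:
    """Raw one-direction PCIe bandwidth with 128b/130b encoding (Gen3 and later)."""
    return gigatransfers_per_lane * lanes * 128 / 130


per_port_gbytes = gbps_to_gigabytes_per_s(PORT_RATE_GBPS)
aggregate_gbps = PORT_RATE_GBPS * CX7_CARDS

# Assumption: the adapters sit on x16 links; Gen5 is 32 GT/s per lane, Gen4 is 16 GT/s.
gen5_x16_gbps = pcie_raw_gbps(32, 16)
gen4_x16_gbps = pcie_raw_gbps(16, 16)

if __name__ == "__main__":
    print(f"One 400G port: {per_port_gbytes:.0f} GB/s")
    print(f"Eight CX-7 ports: {aggregate_gbps} Gb/s aggregate")
    print(f"PCIe Gen5 x16 raw: {gen5_x16_gbps:.1f} Gb/s")
    print(f"PCIe Gen4 x16 raw: {gen4_x16_gbps:.1f} Gb/s")
```

Under these assumptions, a Gen5 x16 link has headroom for a single 400G port while a Gen4 x16 link does not, which gives a sense of how tightly adapters of this class are coupled to the server's PCIe layout.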

Example Application of BlueField-3 in VMware Private Cloud

NVIDIA DPU Roadmap

By understanding the capabilities and applications of the BlueField-3 DPU, enterprises can effectively leverage this technology to meet the demands of modern data centers and ensure robust, scalable, and secure infrastructure.