The NVIDIA DGX H100 system, originally designed to connect 256 NVIDIA H100 GPUs over NVLink (NVL256), struggled to gain commercial adoption. Industry discussions suggest that the main obstacle was cost. The design relied heavily on optical fiber for GPU connections, pushing its Bill of Materials (BoM) cost well beyond what was economically reasonable compared with the standard NVL8 configuration.

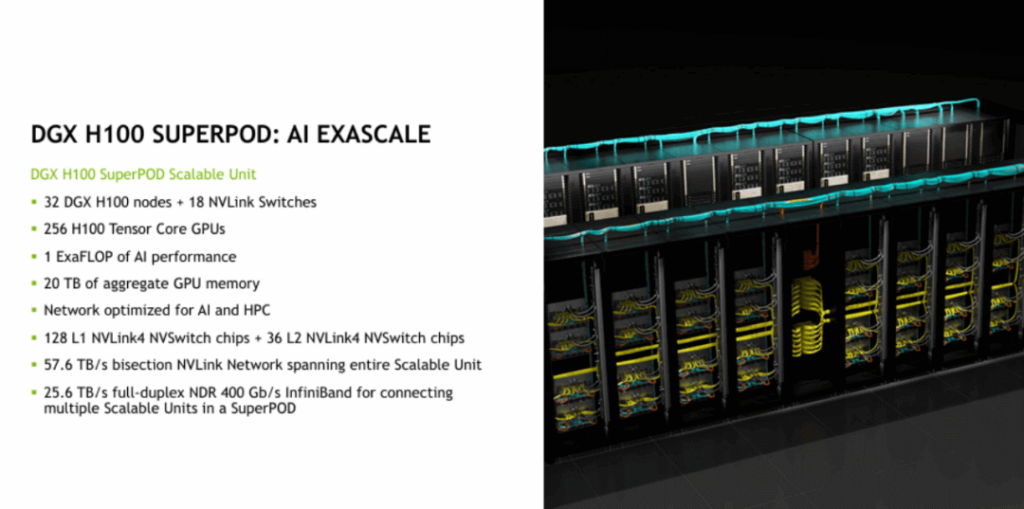

Although NVIDIA claims that the expanded NVL256 could deliver up to 2x the throughput for 400B-parameter MoE training, some large customers remain skeptical. NDR InfiniBand provides 400 Gbit/s per port and NVLink4 reaches a theoretical 450 GB/s per direction, but the NVL256 design, with 128 L1 NVSwitches and 36 external L2 NVSwitches, creates a 2:1 blocking ratio. As a result, each server can use only half of its NVLink bandwidth to reach another server. NVIDIA relies on NVLink SHARP (in-network reduction) to optimize traffic and achieve equivalent all-to-all bandwidth.
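The bandwidth figures above mix units (Gbit/s for InfiniBand, GB/s for NVLink). The minimal sketch below normalizes them and shows what a 2:1 blocking ratio leaves for server-to-server traffic. It assumes 8 bits per byte, treats 450 GB/s as per-GPU, per-direction NVLink4 bandwidth, and assumes cross-server traffic is limited only by the oversubscribed tier. The helper names are hypothetical.

```python
def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert Gbit/s to GB/s (8 bits per byte)."""
    return gbit_per_s / 8

def effective_bandwidth(bandwidth: float, blocking_ratio: float) -> float:
    """Bandwidth left for inter-server traffic under an N:1 blocking ratio.

    Assumption: downlink:uplink = blocking_ratio:1 and nothing else limits traffic.
    """
    return bandwidth / blocking_ratio

ndr_ib = gbit_to_gbyte(400)    # one NDR InfiniBand port: 400 Gbit/s -> 50 GB/s
nvlink4 = 450.0                # NVLink4, GB/s per direction (theoretical)
nvlink4_cross_server = effective_bandwidth(nvlink4, 2)  # 2:1 blocking -> 225 GB/s

print(f"NDR IB port:                  {ndr_ib:.0f} GB/s")
print(f"NVLink4 within a server:      {nvlink4:.0f} GB/s")
print(f"NVLink4 cross-server (2:1):   {nvlink4_cross_server:.0f} GB/s")
print(f"Cross-server NVLink vs NDR:   {nvlink4_cross_server / ndr_ib:.1f}x")
```

Under these assumptions, halving still leaves 225 GB/s per GPU, about 4.5x a single NDR port.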

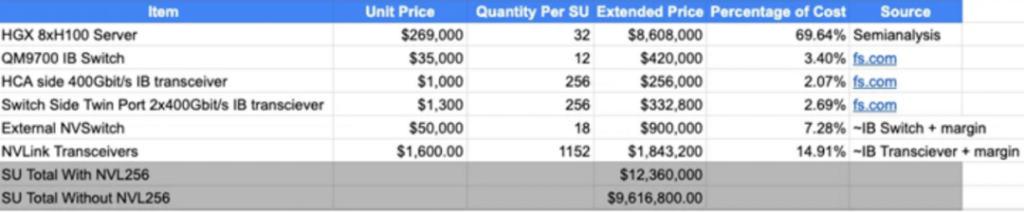

An analysis of the H100 NVL256 Bill of Materials (BoM) following the Hot Chips 34 conference found that expanding to NVL256 increased the BoM cost by approximately 30% per scalable unit (SU). As the system scales beyond 2048 H100 GPUs, the transition from a two-layer to a three-layer InfiniBand topology slightly reduces the percentage of InfiniBand costs.

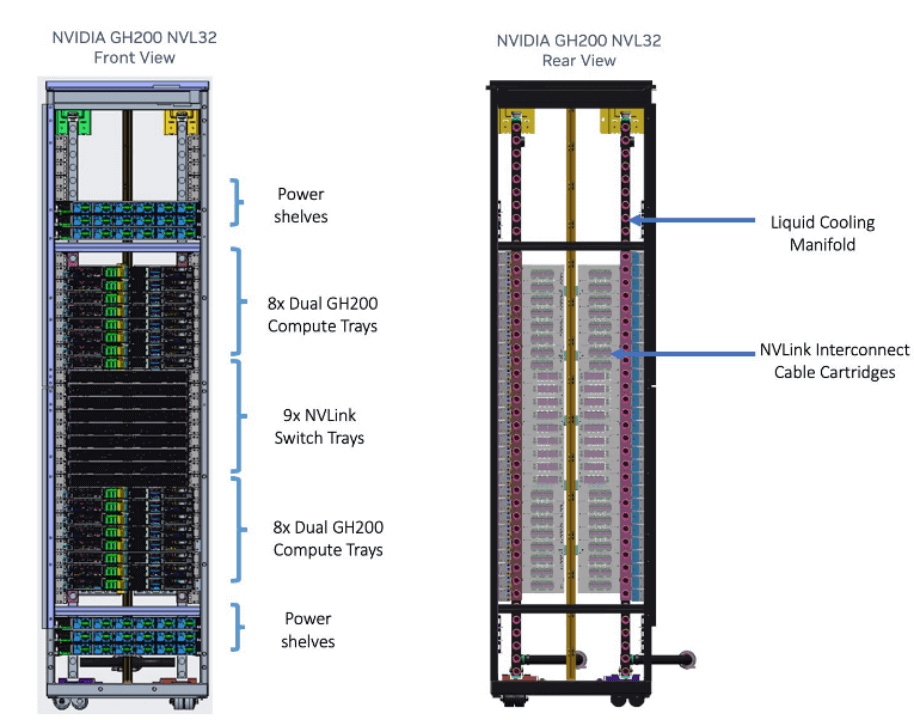

NVIDIA redesigned the NVL256 into the NVL32, which uses a copper backplane spine similar to that of its NVL36/NVL72 Blackwell design. AWS has agreed to purchase 16k GH200 superchips in the NVL32 configuration for its Project Ceiba initiative. The redesigned NVL32 is estimated to carry a BoM cost premium of about 10% over a standard HGX H100 system. For large workloads, NVIDIA claims that a 16k-GH200 NVL32 cluster will be 1.7x faster on GPT-3 175B and 2x faster on 500B-parameter LLM inference than a 16k-H100 cluster. These attractive performance-to-cost ratios are pushing more customers toward NVIDIA’s new design.
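The performance-to-cost argument can be made concrete by dividing each claimed speedup by the relative BoM cost. This is a minimal sketch that assumes the 16k-H100 cluster is the baseline (speedup 1.0, cost 1.0) and that the roughly 10% premium applies uniformly to the whole NVL32 cluster. Both are simplifications, and `perf_per_cost` is a hypothetical helper.

```python
def perf_per_cost(speedup: float, relative_cost: float) -> float:
    """Relative performance per unit cost versus a (1.0, 1.0) baseline."""
    return speedup / relative_cost

NVL32_RELATIVE_COST = 1.10  # ~10% BoM premium over HGX H100 (estimate from the text)

for workload, speedup in [("GPT-3 175B", 1.7), ("500B LLM inference", 2.0)]:
    ratio = perf_per_cost(speedup, NVL32_RELATIVE_COST)
    print(f"{workload}: {ratio:.2f}x performance per unit cost vs. 16k H100")
```

With these inputs, the claimed 1.7x and 2x speedups work out to roughly 1.55x and 1.82x performance per unit cost.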

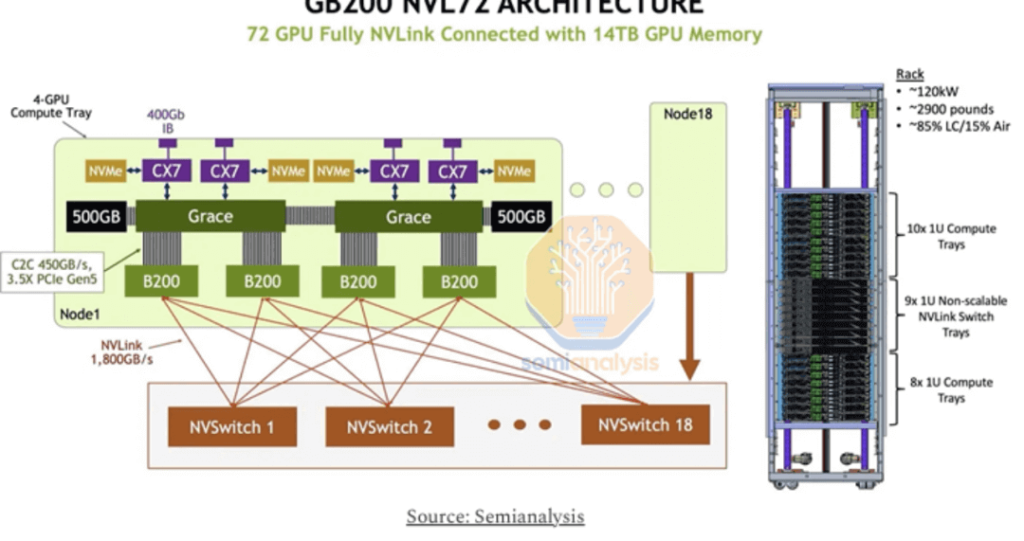

With the upcoming GB200 NVL72, NVIDIA has applied the lessons of the failed H100 NVL256. It now uses copper cabling, referred to as the “NVLink spine,” to address cost concerns. This change is expected to reduce the cost of goods sold (COGS) and pave the way for GB200 NVL72’s success. By adopting copper rather than optics, NVL72 is estimated to cut these costs by roughly 6x per rack and to save around 20 kW of power per GB200 NVL72 rack and 10 kW per GB200 NVL32 rack. Unlike H100 NVL256, GB200 NVL72 will not place any NVLink switches inside the compute nodes; instead, it will use a flat, rail-optimized topology with 18 NVLink switches for every 72 GB200 GPUs. Because all connections stay within the same rack, the longest span is only 19U (about 0.84 meters), which is feasible with active copper cables.
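The 19U distance can be converted using the EIA-310 rack unit, where 1U = 1.75 in (44.45 mm). The sketch below does that conversion and also derives the GPU-to-switch ratio from the counts given for NVL72. It measures straight vertical distance only and ignores cable routing slack, so actual cable lengths would be somewhat longer.

```python
RACK_UNIT_MM = 44.45  # 1U = 1.75 in = 44.45 mm (EIA-310)

def rack_units_to_m(units: float) -> float:
    """Straight vertical distance spanned by `units` rack units, in meters."""
    return units * RACK_UNIT_MM / 1000

farthest_m = rack_units_to_m(19)
print(f"19U is about {farthest_m:.2f} m (straight line, no routing slack)")

gpus, nvlink_switches = 72, 18
print(f"GPUs per NVLink switch: {gpus // nvlink_switches}")
```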

According to SemiAnalysis, NVIDIA says its design can connect up to 576 GB200 GPUs in a single NVLink domain, which may require an additional layer of NVLink switches. NVIDIA is expected to keep a 2:1 blocking ratio, using 144 L1 NVLink switches and 36 L2 NVLink switches in each GB200 NVL576 SU. Alternatively, it may adopt a more aggressive 4:1 blocking ratio with only 18 L2 NVLink switches. In either case, NVIDIA will continue to use optical OSFP transceivers to extend connections from each rack’s L1 NVLink switches to the L2 NVLink switches.
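The two NVL576 options trade L2 switch count for oversubscription. This minimal sketch assumes that every L2 switch contributes the same uplink bandwidth, so the blocking ratio scales inversely with the number of L2 switches. It is calibrated on the 36-switch, 2:1 configuration. `blocking_ratio` is a hypothetical helper, not an NVIDIA tool.

```python
GPUS_PER_RACK = 72
L1_SWITCHES_PER_RACK = 18

# Calibration point from the text: 36 L2 switches -> 2:1 blocking.
BASELINE_L2, BASELINE_RATIO = 36, 2.0

def blocking_ratio(l2_switches: int) -> float:
    """Downlink:uplink ratio, assuming uplink bandwidth is proportional to L2 count."""
    return BASELINE_RATIO * BASELINE_L2 / l2_switches

racks = 576 // GPUS_PER_RACK               # 8 NVL72 racks
l1_total = racks * L1_SWITCHES_PER_RACK    # 144 L1 switches
print(f"{racks} racks, {l1_total} L1 NVLink switches")
for l2 in (36, 18):
    print(f"{l2} L2 switches -> {blocking_ratio(l2):g}:1 blocking")
```

Halving the L2 tier from 36 to 18 switches doubles the oversubscription from 2:1 to 4:1 under this proportional model.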

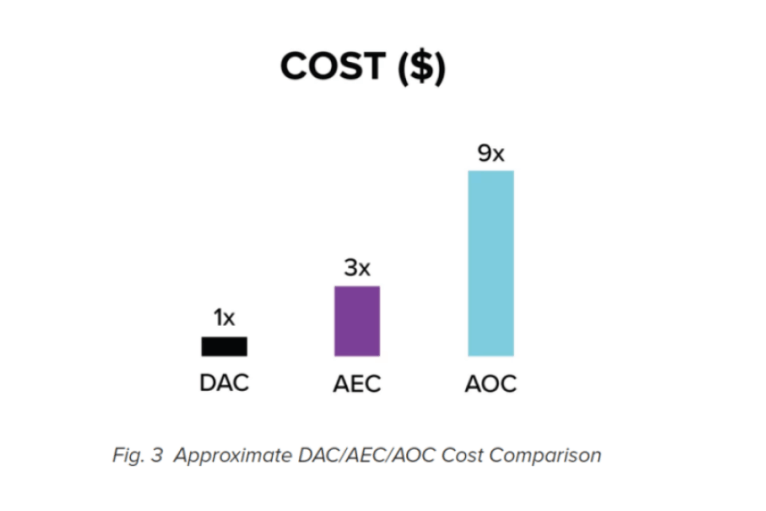

Rumors suggest that NVL36 and NVL72 account for over 20% of NVIDIA Blackwell deliveries. It remains an open question, however, whether large customers will choose the more expensive NVL576, since scaling to NVL576 adds optical component costs. NVIDIA appears to have taken the NVL256 lesson to heart and recognizes that copper interconnects cost significantly less than fiber optics.

According to semiconductor industry expert Doug O’Laughlin, copper interconnects will dominate at rack scale, extracting as much value from copper as possible before the transition to optics. In his view, the new Moore’s Law is about packing the most compute power into a rack, and an NVLink domain over passive copper is the new benchmark for success, which makes GB200 NVL72 racks a sensible choice over B200s.

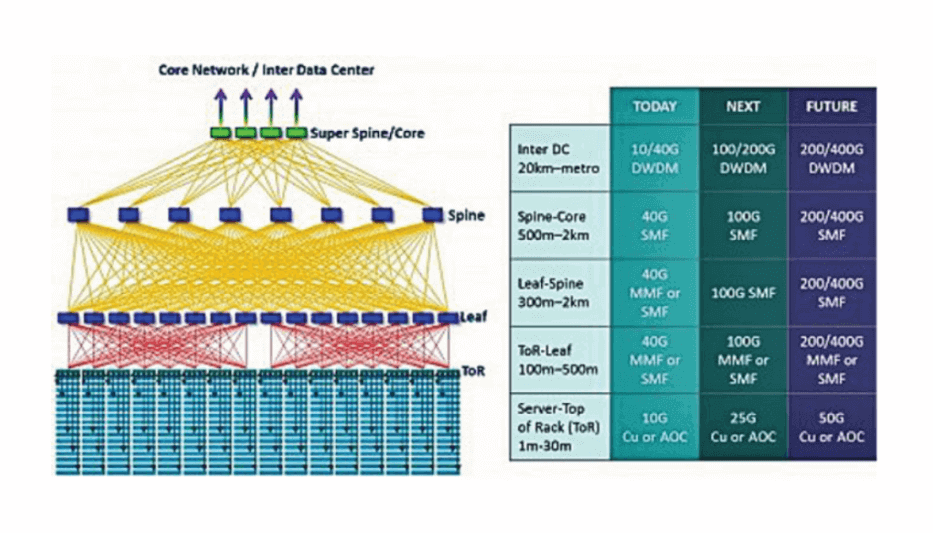

From an industry perspective, copper interconnects have clear advantages over short distances. They play a crucial role in high-speed data center interconnects, offering low heat dissipation, low power consumption, and cost-effectiveness. As SerDes rates progress from 56G and 112G to 224G, single-port rates are expected to reach 1.6T over 8 lanes, significantly reducing the cost of high-speed transmission. To offset copper’s signal loss at high speeds, active electrical cables (AEC) and active copper cables (ACC) extend reach with built-in signal-conditioning chips (retimers in AECs, redrivers in ACCs), while copper cable module manufacturing processes continue to evolve.
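The 1.6T figure follows from lane count times per-lane rate. This minimal sketch assumes that the nominal 56G, 112G, and 224G SerDes rates carry net payloads of 50G, 100G, and 200G per lane, respectively, after encoding and FEC overhead. That matches how port speeds are conventionally labeled.

```python
# Nominal SerDes rate (Gb/s) -> approximate net payload per lane (Gb/s).
# Assumption: payloads follow the usual port-speed labels (50G/100G/200G per lane).
SERDES_PAYLOAD_GBPS = {56: 50, 112: 100, 224: 200}

def port_rate_gbps(serdes_gbps: int, lanes: int = 8) -> int:
    """Aggregate port payload rate for a SerDes generation and lane count."""
    return SERDES_PAYLOAD_GBPS[serdes_gbps] * lanes

for serdes in SERDES_PAYLOAD_GBPS:
    rate = port_rate_gbps(serdes)
    label = f"{rate / 1000:g}T" if rate >= 1000 else f"{rate}G"
    print(f"{serdes}G SerDes x 8 lanes -> {label} port")
```

Each SerDes generation doubles the per-lane payload, so an 8-lane port steps from 400G to 800G to 1.6T.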

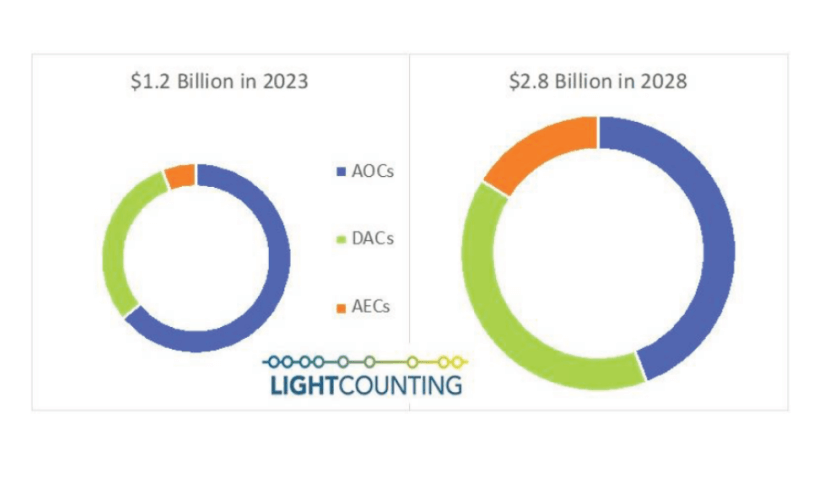

According to LightCounting, the global markets for passive direct-attach cables (DAC) and active optical cables (AOC) are projected to grow at compound annual growth rates (CAGR) of 25% and 45%, respectively.
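Compound growth rates are easy to underestimate. The short sketch below shows what 25% and 45% CAGRs imply for market size relative to a starting year. The five-year horizon is an arbitrary illustration because the forecast window is not stated here.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Market size after `years`, relative to the starting year, at a constant CAGR."""
    return (1 + cagr) ** years

HORIZON_YEARS = 5  # illustrative horizon, not taken from the forecast

for label, cagr in [("DAC", 0.25), ("AOC", 0.45)]:
    print(f"{label}: {growth_multiple(cagr, HORIZON_YEARS):.1f}x after {HORIZON_YEARS} years")
```

Over that illustrative horizon, the DAC market would roughly triple (3.1x) and the AOC market would grow about 6.4x.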

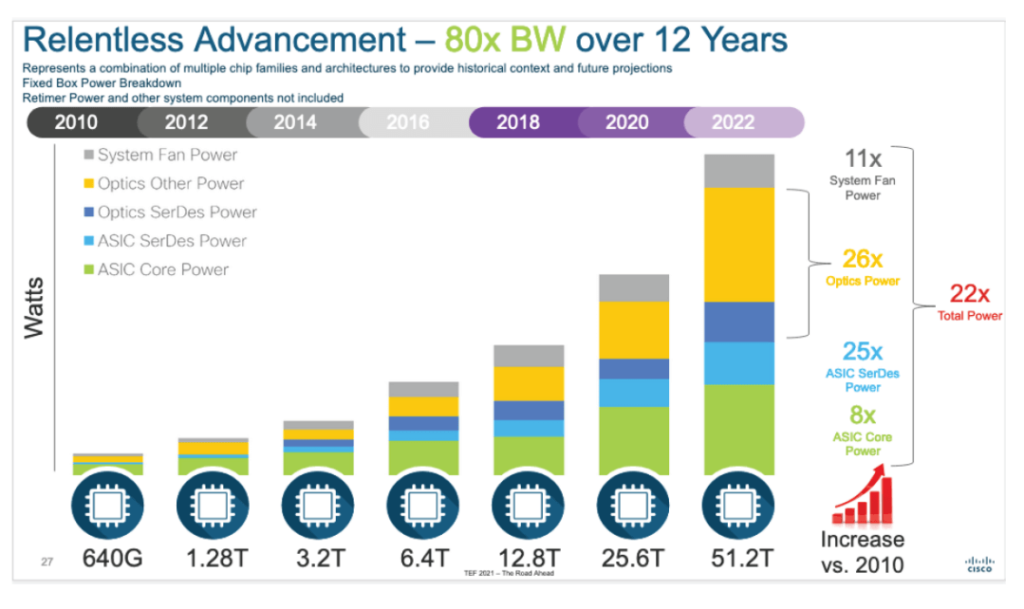

Between 2010 and 2022, switch chip bandwidth capacity grew 80-fold, from 640 Gbps to 51.2 Tbps, driving up overall system power consumption. Notably, optical component power consumption alone increased by a factor of 26.
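The ratio of the two bandwidth endpoints can be checked directly. Setting it next to the 26-fold rise in optical power gives a rough sense of how optics energy per bit changed. This assumes the optics power figure covers the same 2010 to 2022 span and the same class of switch systems, which the text does not state explicitly.

```python
start_bw_gbps = 640          # 2010 switch chip
end_bw_gbps = 51_200         # 2022 switch chip (51.2 Tbps)
optics_power_growth = 26     # optical power increase over the period (from the text)

bandwidth_growth = end_bw_gbps / start_bw_gbps              # 80.0
optics_energy_per_bit = optics_power_growth / bandwidth_growth

print(f"Bandwidth growth: {bandwidth_growth:.0f}x")
print(f"Optics energy per bit: {optics_energy_per_bit:.3f}x of the 2010 level")
```

On these assumptions, optics became roughly three times more efficient per bit, but not nearly fast enough to keep total optical power flat.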

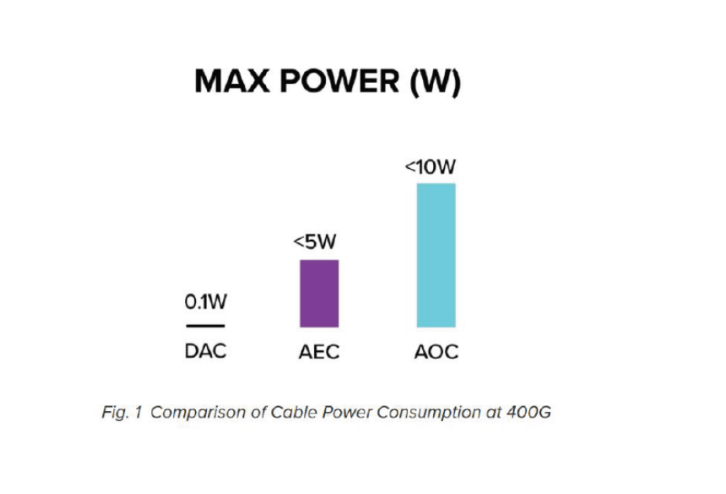

Because copper cable interconnects require no optical-electrical conversion, they consume little power. Current passive direct-attach copper cables (DAC) draw less than 0.1 W, which is negligible, while active electrical cables (AEC) stay within 5 W, helping to reduce the overall power consumption of computing clusters.
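To see how per-cable power adds up at cluster scale, the sketch below totals worst-case cable power for a given number of links. It uses the upper bounds quoted above: 0.1 W per DAC and 5 W per AEC. The 10,000-link count is a hypothetical example, not a figure for any specific system.

```python
# Upper-bound power per cable, in watts (figures from the text).
CABLE_POWER_W = {"DAC": 0.1, "AEC": 5.0}

def cable_power_kw(cable_type: str, links: int) -> float:
    """Worst-case total cable power in kW for `links` cables of one type."""
    return CABLE_POWER_W[cable_type] * links / 1000

LINKS = 10_000  # hypothetical cluster link count
for cable in CABLE_POWER_W:
    print(f"{LINKS} x {cable}: at most {cable_power_kw(cable, LINKS):.1f} kW")
```

Even at 10,000 links, the passive DAC budget stays around 1 kW, while the AEC upper bound reaches 50 kW.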

Within the distance copper can carry high-speed signals, copper cables cost less than fiber optic connections. Copper cable modules also offer extremely low-latency electrical signal transmission over short distances and maintain high reliability, avoiding some of the signal-loss risks (for example, from contaminated optical connectors) that optical links can encounter in certain environments. Furthermore, copper cables are physically easy to handle and maintain, and they are highly compatible because no additional conversion equipment is required.
