NVIDIA’s Spectrum-X platform is designed to accelerate AI workloads with high-bandwidth, low-latency Ethernet networking. Built for the data center, Spectrum-X combines NVIDIA Spectrum-4 switches and BlueField-3 DPUs into a modular, end-to-end solution for AI workloads. The architecture addresses the growing efficiency demands of AI training and inference while easing the communication bottlenecks that constrain distributed computing.

What is the NVIDIA Spectrum-X Networking Platform?

Understanding NVIDIA Spectrum-X Capabilities

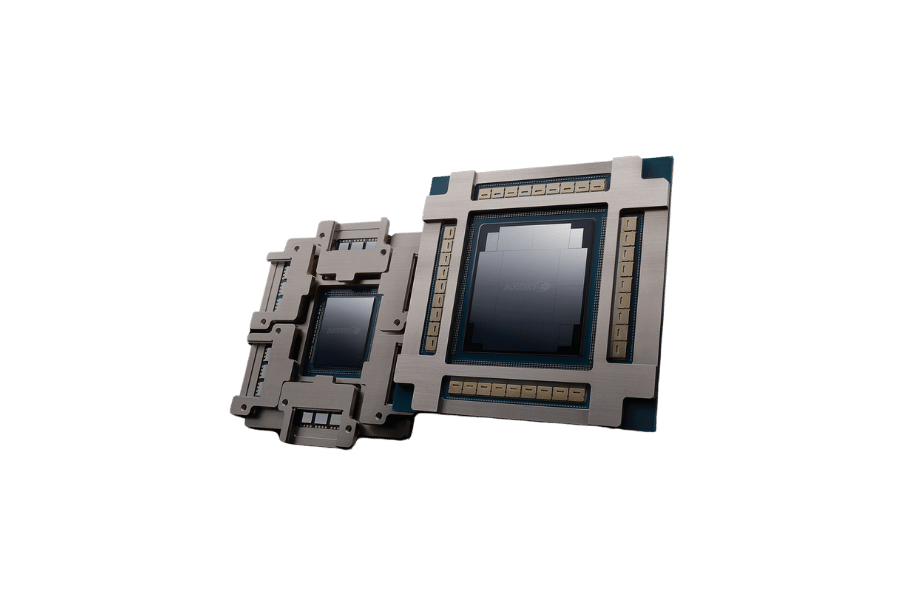

NVIDIA Spectrum-X optimizes networking to meet the throughput and latency requirements of AI workloads. It pairs NVIDIA Spectrum-4 Ethernet switches with BlueField-3 DPUs to move data smoothly across the data center. The platform scales to support demanding AI training and inference workflows, and by removing many networking bottlenecks, Spectrum-X speeds up computation and improves energy efficiency in distributed environments.

The Role of AI in Spectrum-X

AI techniques extend Spectrum-X with automated, component-level decision making and autonomously orchestrated data-processing workflows. Using machine learning, Spectrum-X reallocates network resources and adapts to changing workload levels. This adaptability can considerably lower operational expenses, improve bandwidth efficiency, and reduce latency through proactive traffic control.

AI-driven networking technologies such as those in Spectrum-X are estimated to improve throughput efficiency by as much as forty percent in large-scale AI training environments. The platform also applies machine learning to analyze network traffic patterns, which supports proactive fault diagnosis and predictive maintenance. This reportedly reduces downtime by approximately twenty-five percent while keeping data transmission uninterrupted.

With these intelligent systems, Spectrum-X changes how the industry manages rapidly growing AI workloads. These advances also position Spectrum-X as a flagship solution for companies that want to automate business processes and operations with AI in a global marketplace.

The Impact of Bandwidth on Network Performance

Bandwidth, the amount of data a network can transmit per unit of time, strongly influences network performance. Higher bandwidth lets more data move concurrently and shortens the time needed to transfer large payloads, which reduces queuing delays. Insufficient bandwidth can cause severe delays, especially for critical operations. Sufficient bandwidth is therefore essential for consistent connectivity in high-volume data processing and real-time communication environments.
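To see why bandwidth and latency matter differently, a simple first-order model helps: transfer time is roughly the one-way latency plus the serialization time (payload size divided by bandwidth). The sketch below implements only that model; the function name, the 5 µs latency, and the link speeds are illustrative assumptions, and it ignores congestion, protocol overhead, and queuing.

```python
def transfer_time_s(size_bytes: float, bandwidth_gbps: float, latency_s: float) -> float:
    """First-order model: one-way latency plus serialization time (size / bandwidth).

    Assumes an uncongested path and no protocol overhead (illustrative only).
    """
    bits = size_bytes * 8
    return latency_s + bits / (bandwidth_gbps * 1e9)


if __name__ == "__main__":
    latency = 5e-6  # hypothetical 5 microsecond one-way latency
    for size in (4096, 1e9):  # a 4 KB message and a 1 GB transfer
        for bw in (100, 400):  # link speeds in Gb/s
            t = transfer_time_s(size, bw, latency)
            print(f"{size:>12.0f} bytes @ {bw} Gb/s: {t * 1e6:,.2f} us")
```

For the 4 KB message, latency dominates and extra bandwidth barely helps; for the 1 GB transfer, the time scales almost inversely with bandwidth.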

Benefits Of Spectrum-X For AI Factories

Spectrum-X With AI Integration

Spectrum-X improves the performance and efficiency of AI factories with purpose-built, low-latency networking. Its integration of NVIDIA Spectrum switches with BlueField DPUs helps avoid bottlenecks and supports real-time processing within the data center. Higher throughput shortens training, so high-quality AI models can be deployed quickly. Reliable, scalable, and efficient connectivity keeps AI factories operating at peak performance.

Facilitating Generative AI Models

Generative AI, the ability to create realistic content, has enabled innovation and automation across many industries. Advanced infrastructure and computational frameworks let generative AI applications process large datasets and generate text, images, audio, simulations, and much more. Generative Adversarial Networks (GANs) and transformer-based models such as GPT and DALL-E are making remarkable strides in entertainment and design as well as in healthcare and finance.

⭐ Generative AI is projected to exceed a $110 billion market value by 2030 because it can optimize workflows and produce scalable outputs. As an enterprise automation tool, it increases AI-driven customer engagement and shortens time-to-market for new products. To support it, AI factories need technologies such as NVIDIA Spectrum switches and BlueField DPUs for efficient data routing and real-time training, capabilities that also matter for IoT applications.

⭐ Many industries already use this technology for hyper-personalized marketing, reportedly raising customer retention rates by over 40%. Researchers can also develop new drugs faster and more cost-effectively thanks to generative AI advances in drug discovery and medical imaging. The growing quantity and quality of generative AI integrations is ushering in a new era of advanced computational ecosystems with expanded connectivity.

Spectrum-X’s Role in Hyperscale AI

Spectrum-X’s contribution to hyperscale AI adoption stems from hardware and software that enable high-performance data processing and analytics. Its infrastructure executes extremely demanding computational tasks efficiently, which accelerates AI model training. By pairing high-performance networking with high-performance computing, Spectrum-X improves the scalability and efficiency of generative AI applications, helping organizations achieve their AI goals without being limited by resource constraints.

Examining Spectrum-X AI Ethernet Capabilities

Ethernet Networking Integration in AI Solutions

Ethernet networking provides the reliable, rapid data transfer that AI systems need to communicate. Spectrum-X uses advanced Ethernet technologies to optimize connectivity for AI workloads, minimizing latency and maximizing bandwidth. This enables the seamless data transfer required to train complex AI models and run generative AI systems. Thanks to its robust architecture and Ethernet’s scalability, Spectrum-X can efficiently meet the growing demands of AI-centric industries.

Important Characteristics of the Spectrum-4 Ethernet Switch

- High Performance: High throughput and low latency support AI and HPC workloads.

- Scalability: Designed for large-scale deployment, it supports growing network infrastructure.

- Energy Efficiency: It delivers performance without excessive power consumption, lowering operating costs.

- Enhanced Reliability: Robust failover and fault tolerance keep network operations running without interruption.

- Integrated AI Networking Support: Built specifically for AI applications, it optimizes data flows for AI-centric workloads.

- Ease of Management: Intuitive management tools streamline configuration and maintenance.

Latency Management and Congestion Control

Latency management and congestion control are central to modern networking because both strongly affect system and user performance. Latency is the delay between the moment a request is made and the moment a response arrives. Congestion control is the set of measures that prevent traffic from overloading a network.

Techniques such as Low-Latency Queuing (LLQ) are used to reduce delay. LLQ places delay-sensitive traffic, such as voice and video streams, in a strict-priority queue that is serviced before other traffic classes, so that this traffic is transmitted with minimal delay. Edge computing can further reduce latency by processing data as close as possible to where it is generated, shortening round trips to centralized servers.
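The strict-priority idea behind LLQ can be sketched in a few lines. This is a hypothetical toy scheduler (the class and method names are illustrative, not any vendor's API): delay-sensitive packets always leave before best-effort packets. Production LLQ additionally polices the priority queue so it cannot starve other traffic and shares the remaining capacity among classes with weighted fair queuing; both are omitted here.

```python
from __future__ import annotations

from collections import deque


class LowLatencyQueue:
    """Toy LLQ-style scheduler: one strict-priority queue for delay-sensitive
    traffic, always served before a single best-effort queue.

    Omitted (assumption: illustration only): the policer on the priority queue
    and class-based weighted fair queuing for the remaining traffic.
    """

    def __init__(self) -> None:
        self.priority: deque[str] = deque()
        self.best_effort: deque[str] = deque()

    def enqueue(self, packet: str, delay_sensitive: bool) -> None:
        # Classification is given by the caller; real devices use DSCP or ACLs.
        (self.priority if delay_sensitive else self.best_effort).append(packet)

    def dequeue(self) -> str | None:
        # Strict priority: drain the priority queue first, FIFO within each queue.
        if self.priority:
            return self.priority.popleft()
        if self.best_effort:
            return self.best_effort.popleft()
        return None


if __name__ == "__main__":
    q = LowLatencyQueue()
    for pkt, sensitive in [("bulk-1", False), ("voice-1", True), ("bulk-2", False), ("video-1", True)]:
        q.enqueue(pkt, sensitive)
    print([q.dequeue() for _ in range(4)])
```

Voice and video packets are transmitted ahead of the bulk packets even though a bulk packet arrived first.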

Networks that span diverse links, such as satellite paths, modems, and gateways, also need algorithms that keep traffic flowing smoothly. One such family is Transmission Control Protocol (TCP) congestion control, in which a sender dynamically throttles how much data it sends based on current network conditions. TCP BIC and its successor, TCP CUBIC, were both designed for high-bandwidth, long-distance networks (paths with a large bandwidth-delay product); CUBIC grows its congestion window as a cubic function of the time since the last loss, so window growth depends on elapsed time rather than on round-trip time. A 2005 study reported that CUBIC achieved 20 to 30% higher throughput than TCP Reno, the previously dominant algorithm, under high-latency conditions.
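The cubic growth curve can be written out directly. The sketch follows the window function in the CUBIC specification (RFC 8312, since updated by RFC 9438), W(t) = C(t - K)^3 + W_max with K = cbrt(W_max(1 - β)/C), using the specification's default constants C = 0.4 and β = 0.7. It deliberately omits the TCP-friendly region, fast convergence, and slow start, so treat it as an illustration of the curve's shape rather than a working congestion controller.

```python
def cubic_k(w_max: float, c: float = 0.4, beta: float = 0.7) -> float:
    """Time (seconds) for the window to climb back to w_max after a loss."""
    return (w_max * (1 - beta) / c) ** (1 / 3)


def cubic_window(t: float, w_max: float, c: float = 0.4, beta: float = 0.7) -> float:
    """CUBIC congestion window (segments) t seconds after the last loss event.

    W(t) = C * (t - K)^3 + W_max. Only the cubic growth curve is modeled;
    TCP-friendly mode, fast convergence, and slow start are omitted.
    """
    k = cubic_k(w_max, c, beta)
    return c * (t - k) ** 3 + w_max


if __name__ == "__main__":
    w_max = 100.0  # window (segments) at the last loss, illustrative value
    k = cubic_k(w_max)
    for t in (0.0, k / 2, k, 1.5 * k):
        print(f"t = {t:5.2f} s -> W = {cubic_window(t, w_max):6.1f} segments")
```

Just after a loss (t = 0) the window equals β·W_max; it rises quickly, flattens as it approaches the previous maximum at t = K, then probes beyond it.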

Active Queue Management (AQM) schemes such as Random Early Detection (RED) address congestion before queues overflow. RED tracks the average queue length and, once it passes a threshold, drops or marks packets with increasing probability, signaling senders to reduce their transmission rate before buffers fill and heavy packet loss occurs. Combined with scheduling policies such as Weighted Fair Queuing (WFQ), these mechanisms improve bandwidth management and fairness in networks that carry different types of traffic.
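RED's core calculation is small enough to show. The sketch below implements the drop (or ECN-marking) probability and the moving-average update from the classic RED design by Floyd and Jacobson. The function names, the thresholds in the usage example, and the averaging weight of 0.002 (a commonly used default) are assumptions for illustration, and the count-based correction that spaces out successive drops is left out.

```python
def update_average(avg_queue: float, instantaneous: float, weight: float = 0.002) -> float:
    """Exponentially weighted moving average of the queue length used by RED."""
    return (1 - weight) * avg_queue + weight * instantaneous


def red_drop_probability(avg_queue: float, min_th: float, max_th: float, max_p: float) -> float:
    """Classic RED drop/mark probability for an arriving packet.

    0 below min_th, rising linearly to max_p at max_th, and 1 at or above
    max_th. The count-based correction from the original paper is omitted.
    """
    if avg_queue < min_th:
        return 0.0
    if avg_queue >= max_th:
        return 1.0
    return max_p * (avg_queue - min_th) / (max_th - min_th)


if __name__ == "__main__":
    # Illustrative thresholds in packets: min_th=10, max_th=30, max_p=0.1.
    for avg in (5, 15, 20, 29, 30):
        print(avg, red_drop_probability(avg, 10, 30, 0.1))
```

Because the decision uses the averaged queue rather than the instantaneous one, short bursts pass through while sustained queue growth triggers early drops or marks.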

Real-world deployments illustrate the difference these methods make. For example, systems with sophisticated congestion-control algorithms reportedly see more than a 40 percent drop in packet loss, and latency-sensitive networks that use edge computing show up to a 50 percent improvement in response time. These figures demonstrate the gains from modern approaches to latency and congestion in complex networks.

What Is NVIDIA Spectrum-X’s Approach To Supporting Scalability In Networks?

Implementing Adaptive Routing for Efficient Scaling

NVIDIA Spectrum-X uses adaptive routing to support scalability: rather than pinning each flow to one path, the network balances load by dynamically selecting paths based on real-time network state. This increases bandwidth utilization, reduces congestion, and lowers latency as traffic patterns shift. Using advanced telemetry and analytics, Spectrum-X can make reliable use of additional network resources as workloads grow, and it performs well across a wide variety of environments without compromising performance or reliability.
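To illustrate why dynamic path selection helps, the sketch below contrasts static hash-based placement (as in conventional ECMP) with a simple adaptive policy that sends each new flow to the currently least-loaded port. It is a hypothetical per-flow model, not NVIDIA's algorithm: Spectrum-X performs adaptive routing in switch hardware using real-time telemetry, which this toy does not capture.

```python
from __future__ import annotations

import zlib


def static_hash_port(flow_id: str, num_ports: int) -> int:
    """ECMP-style static choice: the same flow always hashes to the same port."""
    return zlib.crc32(flow_id.encode()) % num_ports


def adaptive_port(port_load: list[float]) -> int:
    """Adaptive choice: the currently least-loaded port (lowest index on ties)."""
    return min(range(len(port_load)), key=port_load.__getitem__)


def place(flows: list[tuple[str, float]], num_ports: int, adaptive: bool) -> list[float]:
    """Assign (flow_id, rate_gbps) pairs to ports and return the per-port load."""
    load = [0.0] * num_ports
    for flow_id, rate in flows:
        port = adaptive_port(load) if adaptive else static_hash_port(flow_id, num_ports)
        load[port] += rate
    return load


if __name__ == "__main__":
    flows = [(f"flow-{i}", 100.0) for i in range(8)]  # eight 100 Gb/s flows
    print("static  :", place(flows, 4, adaptive=False))
    print("adaptive:", place(flows, 4, adaptive=True))
```

With eight 100 Gb/s flows over four ports, the adaptive policy always yields 200 Gb/s per port, while static hashing can stack several flows on one port depending on the hash values.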

The Importance of Effective Bandwidth on Scalability

As networks scale, effective bandwidth, meaning the throughput applications actually achieve rather than the nominal link rate, becomes crucial, because a shortfall directly hinders performance and can bring systems to a standstill. Keeping traffic within what the network can carry avoids overload, lowers latency, and prevents bottlenecks. Maximizing effective bandwidth allows more users and larger data volumes to be accommodated without degrading performance. With advanced traffic control and load balancing, NVIDIA Spectrum-X maintains high effective bandwidth even under heavy workloads. Proper optimization is therefore essential for networks to scale reliably and efficiently.
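One way to make effective bandwidth concrete is a synchronized transfer, such as a collective-communication step, that completes only when its slowest flow completes. In the sketch below (the function name and numbers are illustrative assumptions), every flow moves the same number of bytes and flows sharing a link split it equally, so a single collision on one link halves the effective bandwidth of the whole transfer even while other links sit idle.

```python
def effective_bandwidth(flows_per_link: list, link_rate_gbps: float, bytes_per_flow: float) -> float:
    """Aggregate effective bandwidth (Gb/s) of a synchronized transfer.

    Assumptions (illustrative model): every flow carries bytes_per_flow,
    flows on the same link share it equally, and the transfer finishes
    when the most heavily loaded link finishes.
    """
    busiest = max(flows_per_link)
    if busiest == 0:
        raise ValueError("no flows to transfer")
    total_bits = sum(flows_per_link) * bytes_per_flow * 8
    completion_s = busiest * bytes_per_flow * 8 / (link_rate_gbps * 1e9)
    return total_bits / completion_s / 1e9


if __name__ == "__main__":
    # Four 400 Gb/s links, four 1 GB flows.
    print("balanced :", effective_bandwidth([1, 1, 1, 1], 400, 1e9))
    print("collision:", effective_bandwidth([2, 1, 1, 0], 400, 1e9))
```

With four 400 Gb/s links, one link carrying two flows and another carrying none gives 800 Gb/s of effective bandwidth instead of the 1,600 Gb/s a balanced placement achieves.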

What sets NVIDIA’s Spectrum-X apart from traditional Ethernet?

Differentiation Between Ethernet and Spectrum-X

Traditional Ethernet is general purpose. It offers broad compatibility with many devices and network systems and ensures basic data transfer. It serves general networking well but often proves inefficient for high-performance tasks because of limited congestion control and inconsistent latency.

NVIDIA’s Spectrum-X, by contrast, is built for the challenges of the modern data center. Features such as adaptive routing, advanced congestion control, and workload optimization for data-intensive applications let it perform these tasks more efficiently. Spectrum-X also provides predictably low latency, minimal packet loss, and reliable scalability, making it well suited to high-performance environments that demand maximum speed and reliability.

Benefits Of Converged Ethernet in AI Implementations

Converged Ethernet offers several features that improve both the performance and the scalability of AI systems.

- Enhanced Data Throughput: AI workloads draw on very large datasets, and Converged Ethernet moves that data at high bandwidth, enabling large-scale data handling.

- Low Latency: It provides the very low latency that real-time data processing and accelerated computation require.

- Improved Efficiency: Carrying storage and networking traffic over a single fabric reduces data center configuration complexity and improves operational efficiency.

- Broader Expansion Potential: Converged Ethernet can readily scale as AI models and workload requirements grow.

- Reduced Expenditures: It reduces the number of specialized networks required, lowering infrastructure costs.

These advantages make Converged Ethernet a strong foundation for large-scale, high-performance AI infrastructure.

Frequently Asked Questions (FAQ)

Q: How does Spectrum-X improve GPU performance in AI workloads?

A: Spectrum-X improves GPU performance by providing the high network bandwidth that AI workloads need. Its Spectrum-4 switches deliver 51.2 terabits per second of switching capacity, which lets distributed systems scale AI models efficiently. By alleviating communication bottlenecks between GPUs, Spectrum-X speeds up the processing of complex AI workloads and makes fuller use of NVIDIA GPUs in the data center.

Q: What makes the Spectrum-X Ethernet Networking Platform distinctive for AI cloud deployments?

A: The Spectrum-X Ethernet Networking Platform stands out for AI cloud deployments because it combines the standardization of Ethernet with the high performance usually associated with specialized fabrics such as InfiniBand. According to NVIDIA, the platform pairs its state-of-the-art switches with BlueField-3 DPUs to deliver accelerated Ethernet that can handle massively parallel processing across millions of GPUs. The architecture addresses the data movement at the heart of large-scale AI training and inference, making it simpler to build scalable, cost-effective AI clouds without proprietary networking protocols.

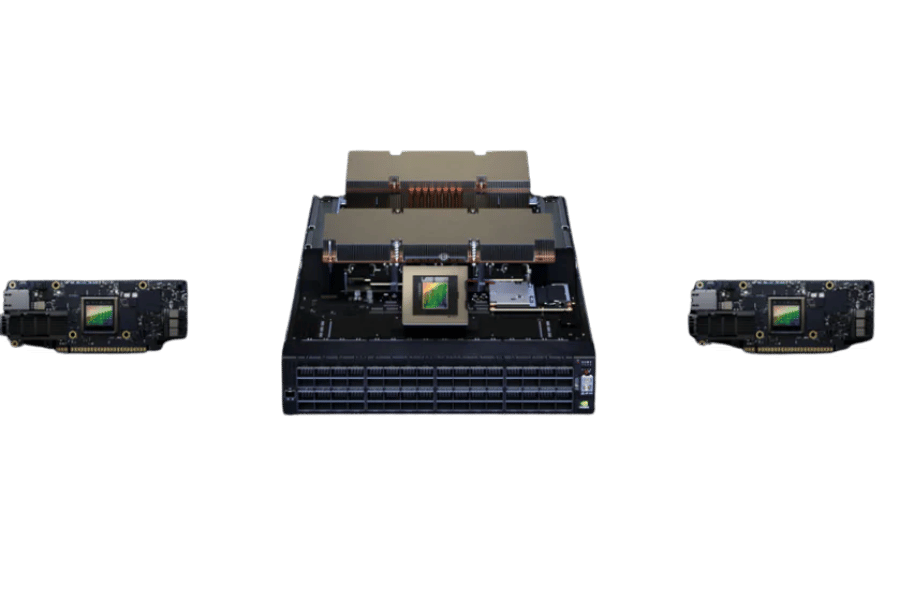

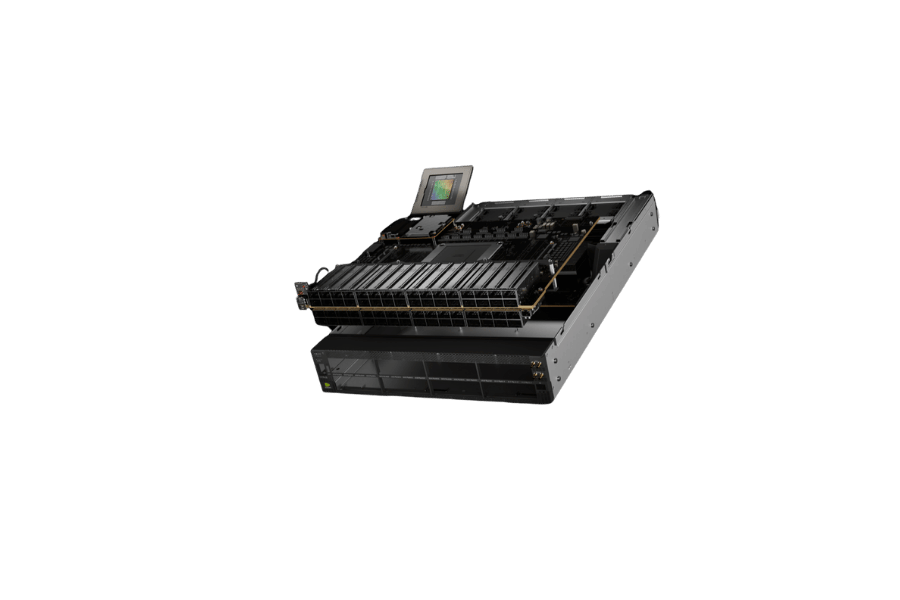

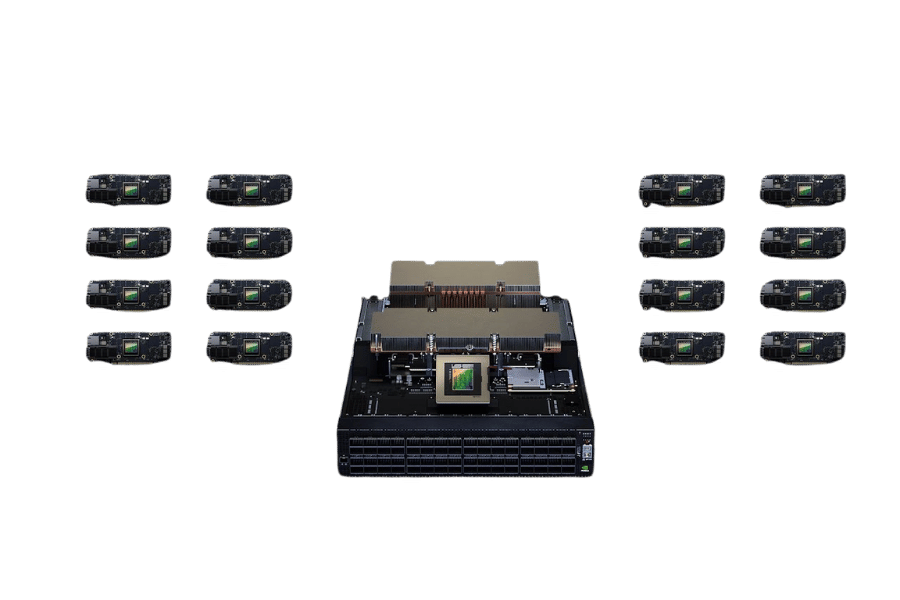

Q: What is the maximum number of GPUs that can be integrated with the Spectrum-X product?

A: According to NVIDIA, Spectrum-X can connect and manage millions of GPUs across very large data centers. Its networking architecture lets AI compute environments scale from small clusters to massive distributed systems spanning thousands of servers. This capability comes from combining the SN5600 switch, BlueField-3 DPUs, and silicon photonics networking components. At this scale, organizations can build AI supercomputers that train and deploy complex AI models in parallel.
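How many GPUs a fabric can reach depends mostly on switch radix and the number of switching tiers. The sketch below uses the textbook formulas for a non-blocking fat-tree (folded Clos) built from identical k-port switches: k²/2 endpoints with two tiers and k³/4 with three. It is generic topology arithmetic under those assumptions, not an NVIDIA reference design; real deployments also vary oversubscription, rail-optimized layouts, and port breakouts.

```python
def fat_tree_hosts(radix: int, tiers: int) -> int:
    """Endpoints in a non-blocking fat-tree built from identical radix-port switches.

    Two tiers (leaf-spine): k^2 / 2. Three tiers: k^3 / 4.
    Assumes every switch splits its ports evenly between down- and uplinks.
    """
    if radix <= 0 or radix % 2:
        raise ValueError("radix must be a positive even number")
    if tiers == 2:
        return radix ** 2 // 2
    if tiers == 3:
        return radix ** 3 // 4
    raise ValueError("only 2- and 3-tier topologies are modeled")


if __name__ == "__main__":
    for k in (64, 128):
        print(f"radix {k}: 2 tiers -> {fat_tree_hosts(k, 2):,}, 3 tiers -> {fat_tree_hosts(k, 3):,}")
```

For example, 64-port switches support 2,048 endpoints in two tiers and 65,536 in three, while 128-port switches reach 524,288 in three tiers, which is why higher-radix switches or additional tiers are needed to approach the largest cluster sizes.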

Q: Which networking switches does the Spectrum-X ecosystem include for scaling?

A: The Spectrum-X ecosystem includes several key switches for scaling AI infrastructure. At its core is the NVIDIA SN5600 switch, which provides 64 ports of 800 Gb/s connectivity in a single switch and greatly increases the throughput of AI clusters. In addition, Spectrum-X Photonics Ethernet switches use silicon photonics to provide optical connectivity over longer distances. Combined with BlueField-3 DPUs, which accelerate RoCE (RDMA over Converged Ethernet), these switches form an integrated, scalable solution that spans everything from small deployments to enormous AI data centers.
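As a rough consistency check on the port figures (a sketch of the arithmetic only), the 51.2 Tb/s of aggregate capacity cited earlier in this FAQ corresponds to 64 ports at 800 Gb/s, or equivalently 128 ports at 400 Gb/s:

```latex
\begin{aligned}
64 \times 800\ \text{Gb/s} &= 51{,}200\ \text{Gb/s} = 51.2\ \text{Tb/s} \\
128 \times 400\ \text{Gb/s} &= 51{,}200\ \text{Gb/s} = 51.2\ \text{Tb/s}
\end{aligned}
```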

Q: How do the Spectrum-X Ethernet Networking Platform and NVIDIA’s Quantum-X Photonics InfiniBand platforms compare?

A: Both are part of NVIDIA’s networking portfolio but serve different purposes. The Spectrum-X Ethernet Networking Platform delivers accelerated Ethernet performance designed to approach InfiniBand-class throughput while remaining fully compatible with standard Ethernet, which makes it convenient for organizations with existing Ethernet networks. Quantum-X Photonics InfiniBand platforms offer the highest performance and lowest latency but require dedicated InfiniBand infrastructure. NVIDIA positions Spectrum-X as bringing InfiniBand-like performance to Ethernet, giving customers flexibility in matching infrastructure to performance needs.

Q: What role do server GPUs play in the Spectrum-X ecosystem?

A: Server GPUs are the compute resources that the Spectrum-X architecture is built to serve. The platform is designed around enabling these GPUs to communicate efficiently with one another: in AI compute environments, data exchange between GPUs is continuous, and any restriction on it severely reduces system efficiency. Spectrum-X supports technologies such as GPUDirect RDMA, which lets the network adapter transfer data directly between the memory of GPUs in different servers without staging it through the CPU and host memory. As a result, valuable GPU compute resources spend more time processing data and less time waiting for it, making GPU-accelerated environments more efficient both economically and operationally.

Q: In what ways does NVIDIA Spectrum-X assist with the modernization of AI cloud infrastructure?

A: NVIDIA Spectrum-X modernizes AI cloud infrastructure by solving the fundamental networking problems that limit AI scale-out. As AI models grow in size and complexity and require more GPUs, moving ever-larger amounts of data between them, known as “data movement,” becomes a bottleneck. Spectrum-X builds AI fabrics from high-bandwidth switches, accelerated networking protocols, and purpose-built DPUs so that workloads can be distributed efficiently across massive GPU clusters. As discussed in NVIDIA GTC presentations, next-generation AI clouds will power applications such as large language models, visual recognition systems, and scientific simulations, and this platform is a key enabler of that full-scale AI infrastructure.

Q: What benefits does Spectrum-X provide for organizations building compute clusters for AI research?

A: Spectrum-X benefits organizations building AI research clusters in several important ways. First, it significantly improves training throughput by streamlining data movement between GPUs, so more complex models can be trained in less time. Second, it improves resource utilization, so expensive GPU hardware is not left idle waiting for data. Third, its scalable architecture can grow from small research clusters to production-level deployments. Finally, Spectrum-X is fully standards-based, allowing organizations to build on existing Ethernet infrastructure without the performance penalty that usually comes with generic networking instead of specialized fabrics. Together, these benefits make AI research more efficient and cost-effective, enabling ever more ambitious projects.
