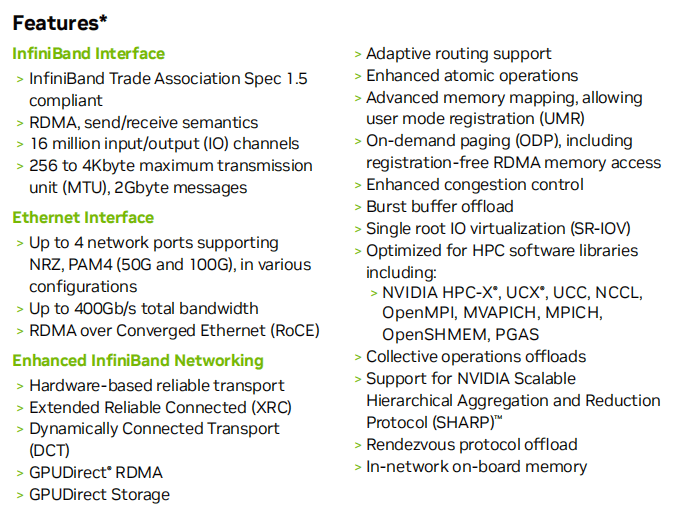

The network adapters from the NVIDIA ConnectX-7 family support both InfiniBand and Ethernet protocols, providing a versatile solution for a wide range of networking needs. These adapters are designed to deliver intelligent, scalable, and feature-rich networking capabilities, catering to the requirements of traditional enterprise applications as well as high-performance workloads in AI, scientific computing, and hyperscale cloud data centers.

The ConnectX-7 network adapters are available in two different form factors: stand-up PCIe cards and Open Compute Project (OCP) Spec 3.0 cards. This flexibility allows users to choose the adapter that best suits their specific deployment requirements.

400Gbps networking is a new capability that PCIe Gen5 x16 slots make practical. Let’s take a look at a configuration using NDR 400Gbps InfiniBand and 400GbE.
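As a rough sanity check on why a Gen5 x16 slot is needed, the sketch below computes raw link bandwidth from the per-lane signaling rate (16 GT/s for Gen4, 32 GT/s for Gen5) and 128b/130b encoding. The helper name `pcie_gbps` is made up for this illustration, and packet-level overhead is ignored, so real throughput is somewhat lower.

```shell
#!/usr/bin/env bash
# Raw PCIe link bandwidth in Gbps for a given generation and lane count.
# Per-lane rates: Gen3 = 8 GT/s, Gen4 = 16 GT/s, Gen5 = 32 GT/s,
# all with 128b/130b line encoding. Packet (TLP) overhead is ignored.
pcie_gbps() {
  local gen="$1" lanes="$2" rate
  case "$gen" in
    3) rate=8 ;;
    4) rate=16 ;;
    5) rate=32 ;;
    *) echo "unsupported generation: $gen" >&2; return 1 ;;
  esac
  awk -v r="$rate" -v l="$lanes" 'BEGIN { printf "%.1f\n", r * l * 128 / 130 }'
}

pcie_gbps 4 16   # Gen4 x16: prints 252.1, short of a 400Gbps port
pcie_gbps 5 16   # Gen5 x16: prints 504.1, enough for a 400Gbps port
```

A Gen4 x16 slot tops out at roughly 252Gbps, so a single 400Gbps port can only be fed at line rate from a Gen5 x16 slot.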

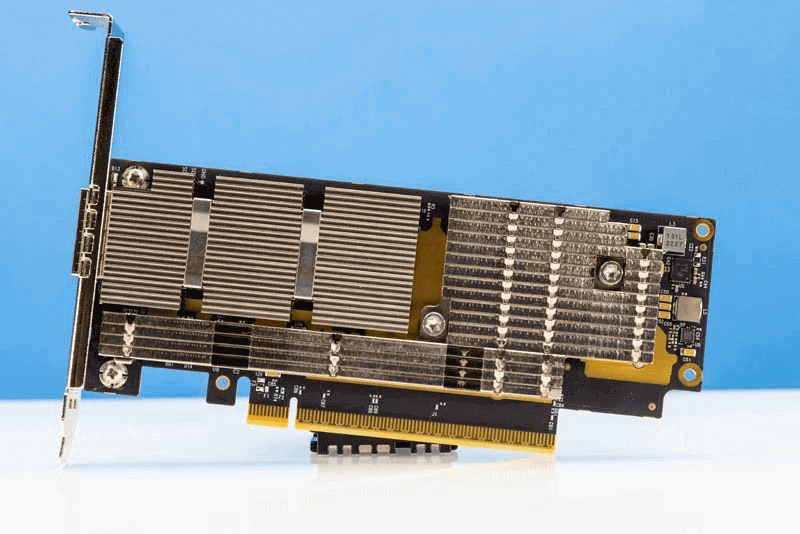

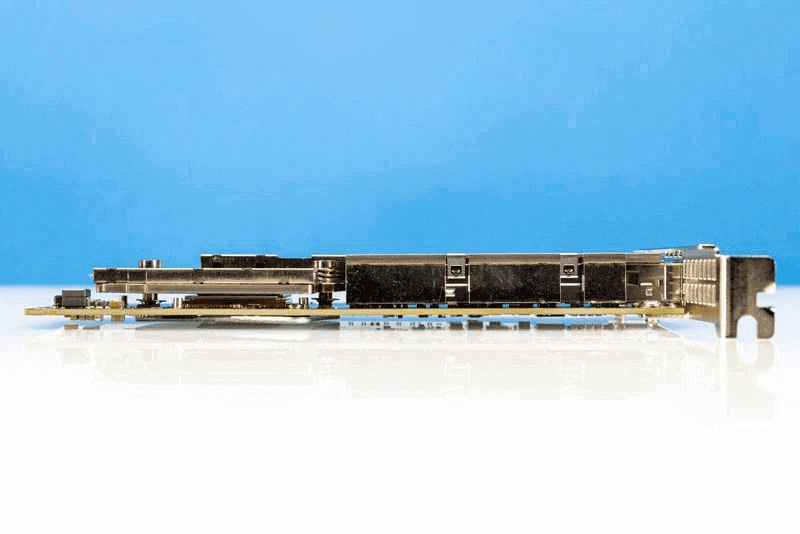

Angle 1 shot of NVIDIA ConnectX 7 400G OSFP

Hardware Overview of MCX75310AAS-NEAT Adapter

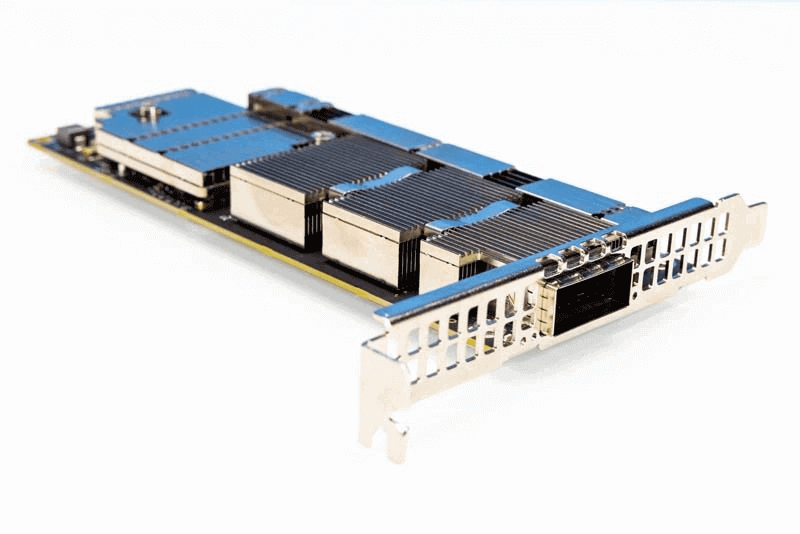

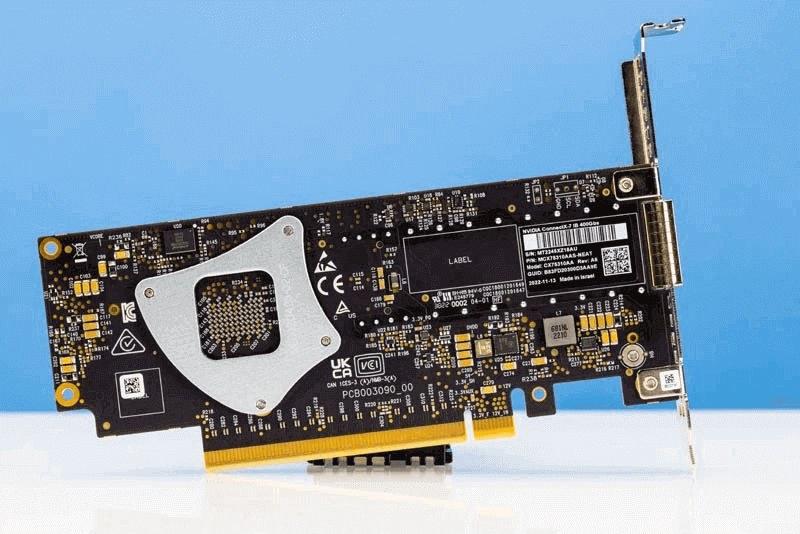

The ConnectX-7 (MCX75310AAS-NEAT) is a low-profile card designed for PCIe Gen5 x16 slots. The card is pictured with the full-height bracket installed, and a low-profile bracket is also included in the box.

Front of NVIDIA ConnectX 7 400G OSFP

It’s worth noting the dimensions of the cooling solution. However, NVIDIA does not disclose the power specifications of these network adapters.

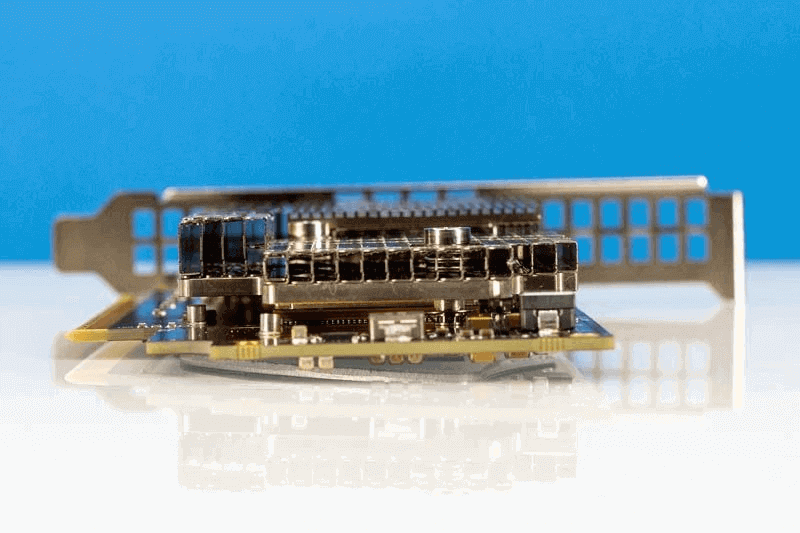

Angle 2 shot of NVIDIA ConnectX 7 400G OSFP

Here is the back of the card with a heatsink backplate.

The back of the NVIDIA ConnectX 7 400G OSFP card

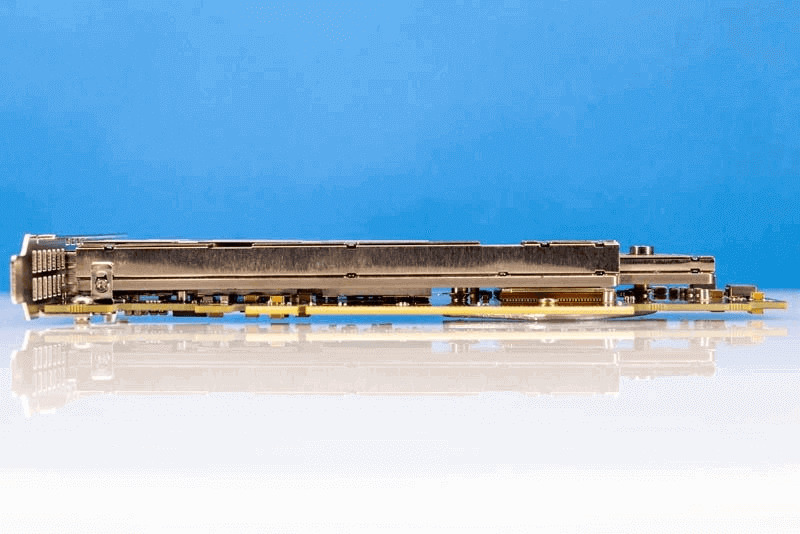

Here is a side view of the card from the PCIe Gen5 x16 connector.

NVIDIA ConnectX 7 400G OSFP connector angle

Here is another view, this time from the top of the card.

NVIDIA ConnectX 7 400G OSFP top angle

This is a view from the direction of airflow in most servers.

This is a flat single-port card that operates at a speed of 400Gbps. It provides a tremendous amount of bandwidth.

Installing the NVIDIA ConnectX-7 400G Adapter

One of the most important aspects of such a card is to install it in a system that can take advantage of its speed.

The installation procedure of ConnectX-7 adapter cards involves the following steps:

- Check the system’s hardware and software requirements.

- Consider the airflow within the host system.

- Follow the safety precautions.

- Unpack the package.

- Follow the pre-installation checklist.

- (Optional) Replace the full-height mounting bracket with the supplied short bracket.

- Install the ConnectX-7 PCIe x16 adapter card/ConnectX-7 2x PCIe x16 Socket Direct adapter card in the system.

- Connect cables or modules to the card.

- Identify ConnectX-7 in the system.
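For the last step, it can help to confirm that the slot actually negotiated a Gen5 x16 link. The following is a minimal sketch, assuming `lspci -vv` is available and reports a `LnkSta:` line for the device. The helper name `pcie_link_status` and the sample line are illustrative, not taken from this system.

```shell
#!/usr/bin/env bash
# Reads `lspci -vv` output on stdin and prints "<speed> <width>" taken from
# the first LnkSta line, for example "32GT/s x16".
pcie_link_status() {
  sed -n 's/.*LnkSta:[[:space:]]*Speed \([0-9.]*GT\/s\).*Width \(x[0-9]*\).*/\1 \2/p' | head -n 1
}

# Demo with an illustrative LnkSta line (not captured from the test server):
printf '\t\tLnkSta:\tSpeed 32GT/s (ok), Width x16 (ok)\n' | pcie_link_status

# On a real host, with the device address substituted in:
#   sudo lspci -vv -s <bus:dev.fn> | pcie_link_status
```

A result of `32GT/s x16` corresponds to a full Gen5 x16 link. A lower speed or narrower width would point to a slot or riser limitation.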

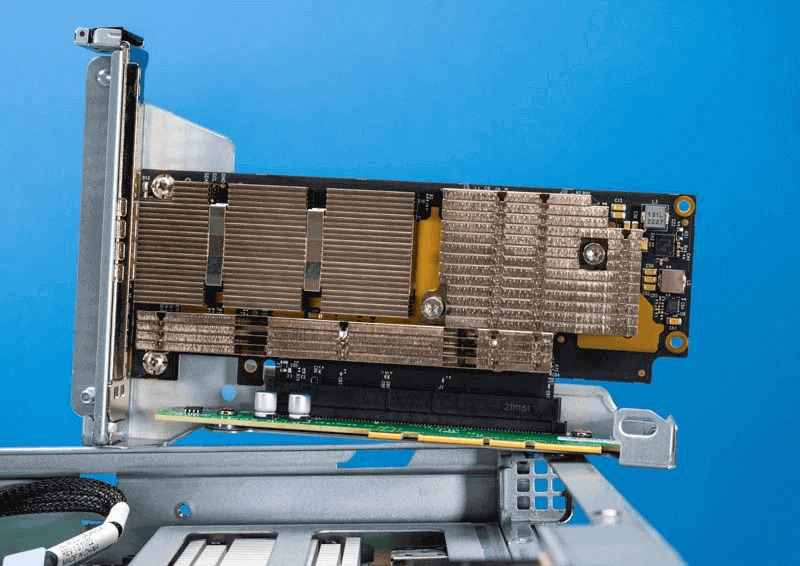

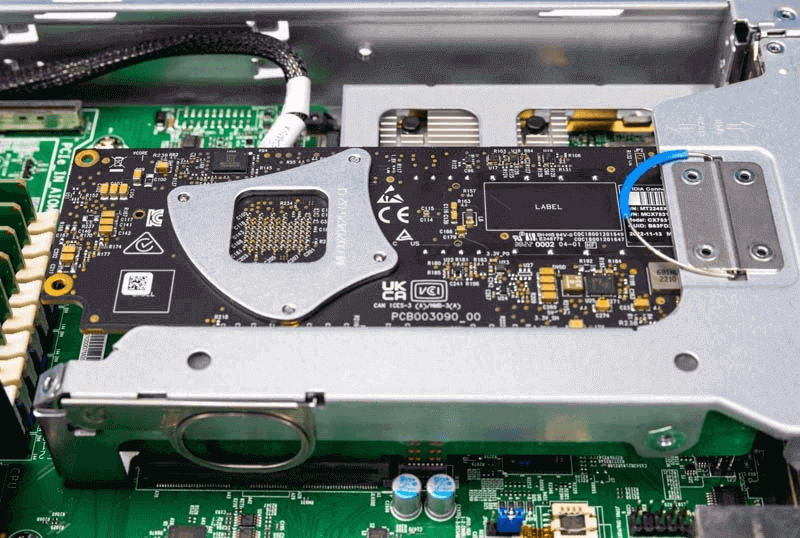

Supermicro SYS 111C NR with NVIDIA ConnectX 7 400Gbps Adapter 1

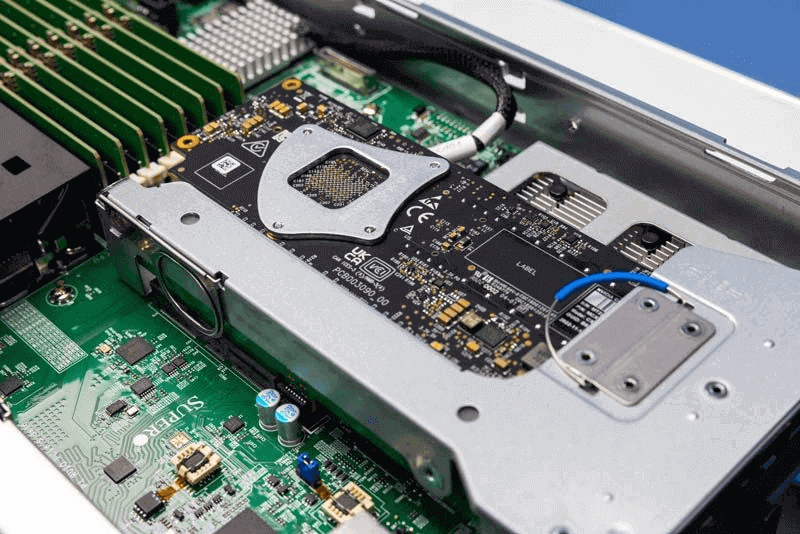

Fortunately, we have successfully installed these devices on the Supermicro SYS-111C-NR 1U and Supermicro SYS-221H-TNR 2U servers and they are working fine.

Supermicro SYS 111C NR with NVIDIA ConnectX 7 400Gbps Adapter 2

The SYS-111C-NR is a single-socket server, which gives us more flexibility because we don’t need to worry about socket-to-socket links when setting up the system. Even at 10/40Gbps and 25/50Gbps speeds, there were discussions about the performance cost of traffic crossing the links between CPU sockets. With the advent of 100GbE, placing one network adapter on each CPU to avoid cross-socket traffic became far more common. The impact is even more severe at 400GbE speeds. For dual-socket servers using a single 400GbE NIC, multi-host adapters that connect directly to each CPU may be an option worth considering.
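On a dual-socket Linux host, one way to see which CPU a NIC hangs off is the `numa_node` attribute that sysfs exposes for each PCI device. The sketch below assumes PCI domain `0000` when only a short address is given. The helper names are invented for this example.

```shell
#!/usr/bin/env bash
# Expand a short PCI address (bus:dev.fn) to the full form used by sysfs.
pci_full_addr() {
  case "$1" in
    *:*:*) echo "$1" ;;        # already includes a domain, e.g. 0000:16:00.0
    *)     echo "0000:$1" ;;   # assumption: the device lives in PCI domain 0000
  esac
}

# Print the NUMA node the device is attached to (-1 means none reported).
nic_numa_node() {
  cat "/sys/bus/pci/devices/$(pci_full_addr "$1")/numa_node"
}

pci_full_addr 16:00.0      # prints 0000:16:00.0
# nic_numa_node 16:00.0    # run on the host with the adapter installed
```

Pinning the application to cores on the reported node keeps traffic off the socket-to-socket links.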

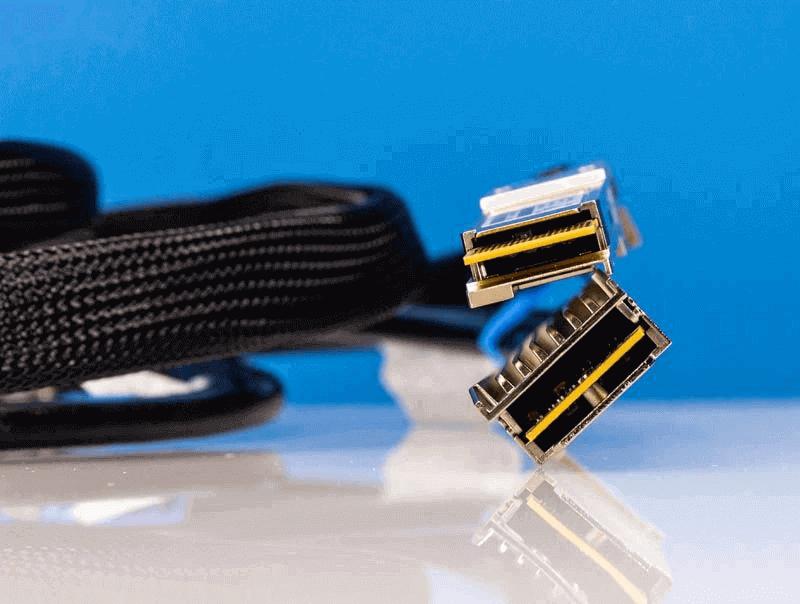

OSFP vs QSFP-DD

Once the cards were installed, we had our next challenge. These cards use OSFP cages, but our 400GbE switch uses QSFP-DD.

Supermicro SYS 111C NR with NVIDIA ConnectX 7 400Gbps Adapter 4

These two standards differ in power levels and physical design. It is possible to adapt QSFP-DD to OSFP, but not the other way around. If you have never seen OSFP optics or DACs, they have their own thermal management solutions. QSFP-DD typically relies on a heatsink on top of the cage, while OSFP typically builds the cooling solution into the module itself, as on the OSFP DACs and optics in our lab.

OSFP and QSFP-DD Connectors 1

This is a tricky one. Both the OSFP DAC and the OSFP to QSFP-DD DAC have a heatsink built into the connector. Because of that integrated cooling on the DAC, the OSFP plug will not fit into the OSFP port of the ConnectX-7 NIC.

NVIDIA is likely using OSFP because it allows higher power levels. OSFP allows 15W optics, while QSFP-DD only supports 12W. A higher power ceiling makes things easier during the early adoption phase, which is one reason why products like the 24W CFP8 module exist.

Whenever possible, check the size of the heatsink on the OSFP end that plugs into the ConnectX-7. If you are used to QSFP/QSFP-DD, where everything simply plugs in and works, running into something as minor as connector size can become a real obstacle. However, if you are a solution provider, this is also an opportunity to offer professional services support. Vendors such as NVIDIA and PNY also sell LinkX cables, which would have been the more convenient option. This was a valuable lesson.

Next, let’s get this all set up and get to work.

Software Setup NDR InfiniBand vs 400GbE

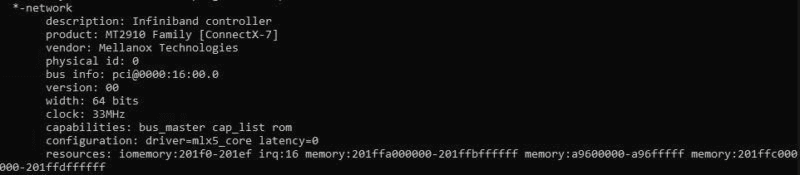

In addition to the physical installation, we also had to set up the software on the server. Fortunately, this was the easiest part. The adapter in our Supermicro server shows up as an MT2910 family ConnectX-7 device.

NVIDIA MT2910 Lshw

After a quick OFED (OpenFabrics Enterprise Distribution) install and a reboot, the system was ready.

NVIDIA MT2910 Lshw after OFED installation

Since we are using a Broadcom Tomahawk 4 Ethernet switch and the card comes up in InfiniBand mode by default, we also need to change the link type.

The process is simple and similar to changing the Mellanox ConnectX VPI port to Ethernet or InfiniBand in Linux.

The following is the basic process:

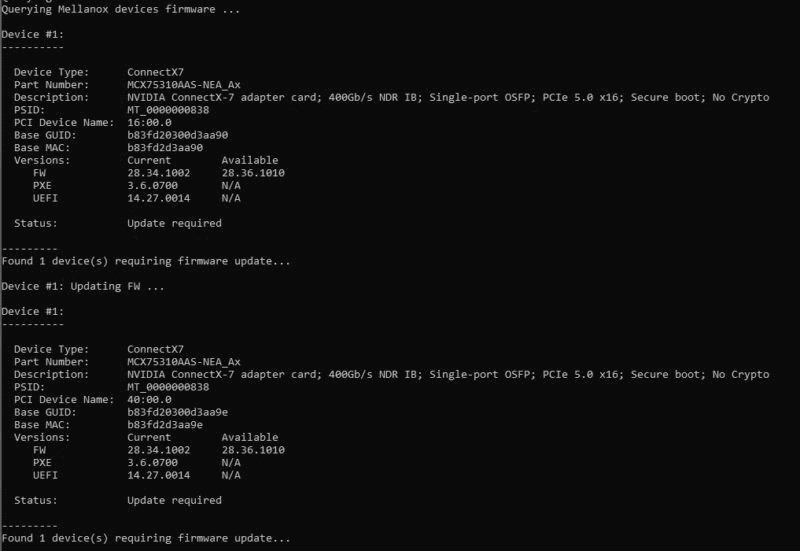

0. Install OFED and update firmware

This is a required step to ensure the card works properly.

NVIDIA ConnectX 7 Mellanox Technologies MT2910 Series during the MLNX_OFED_LINUX installation

The process is fairly simple. First, download the required version for your operating system and use the script provided in the download to install the driver. The standard installer will also update the card’s firmware.
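After the installer finishes, the installed release can be checked with `ofed_info -s`, which prints a single line naming the MLNX_OFED version. The sketch below only parses that line. The helper name `ofed_version` and the version string in the demo are illustrative and are not the release used in this article.

```shell
#!/usr/bin/env bash
# Extract the version from the one-line output of `ofed_info -s`,
# which has the form "MLNX_OFED_LINUX-<version>:".
ofed_version() {
  sed -n 's/^MLNX_OFED_LINUX-\(.*\):$/\1/p'
}

# Demo with an illustrative version string:
echo "MLNX_OFED_LINUX-5.8-1.0.1.1:" | ofed_version   # prints 5.8-1.0.1.1

# On a real host, after running the bundled installer script:
#   ofed_info -s | ofed_version
```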

NVIDIA ConnectX 7 MT2910 MT2910 MLNX_OFED_LINUX Installing the Firmware Update

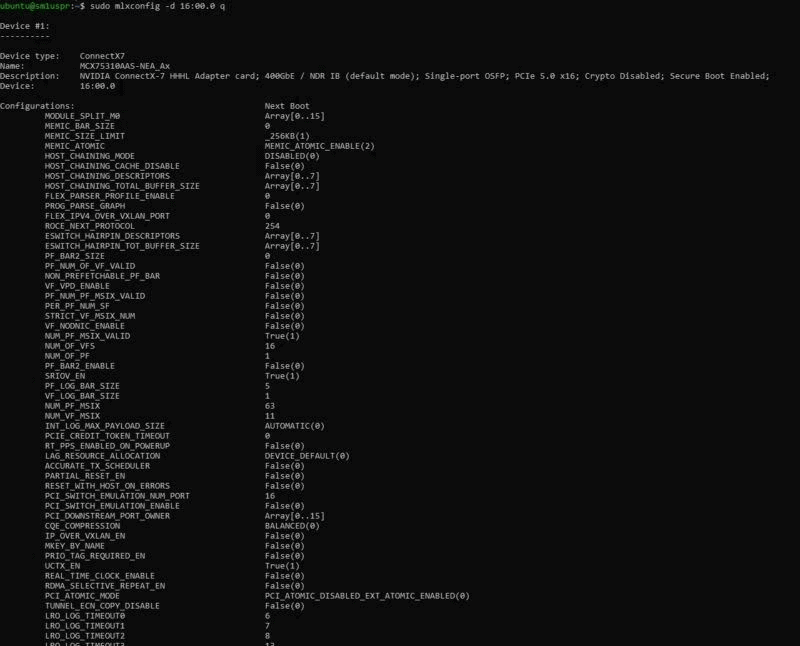

Once OFED is installed and the server has rebooted, we can see that the NVIDIA ConnectX-7 MCX75310AAS-NEAT is capable of both 400GbE and NDR IB (InfiniBand). NDR IB is the default mode.
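The current mode can also be read from the command line with `mlxconfig ... query`. The sketch below filters that output for one port's `LINK_TYPE` setting. The helper name `link_type_of` is made up, and the sample line only approximates the layout of the query output.

```shell
#!/usr/bin/env bash
# Reads `mlxconfig -d <device> query` output on stdin and prints the value of
# LINK_TYPE_<port>, for example "IB(1)" or "ETH(2)".
link_type_of() {
  awk -v key="LINK_TYPE_$1" '$1 == key { print $2 }'
}

# Demo with an illustrative line in the style of the query output:
printf '         LINK_TYPE_P1                        IB(1)\n' | link_type_of P1

# On a real host, with the device address substituted in:
#   sudo mlxconfig -d <bus:dev.fn> query | link_type_of P1
```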

NVIDIA ConnectX 7 MCX75310AAS NEAT Mlxconfig

If we want to turn it into Ethernet, there are only three simple steps:

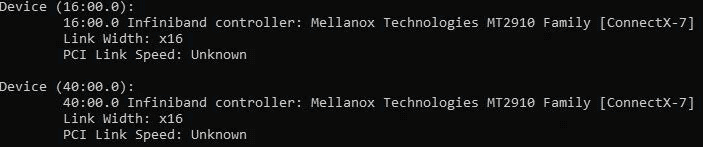

1. Find the ConnectX-7 device

Especially if you have other devices in your system, you will need to find the right device to change. If you only have one card, that’s easy to do.

lspci | grep Mellanox

16:00.0 Infiniband controller: Mellanox Technologies MT2910 Family [ConnectX-7]

Here, we now know that our device is at 16:00.0 (as you can see from the screenshot above).
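If this step needs to be scripted, for example across several servers, the PCI address can be pulled out of the `lspci` output rather than read by eye. A minimal sketch, with a made-up helper name `connectx7_addrs`:

```shell
#!/usr/bin/env bash
# Reads `lspci` output on stdin and prints the PCI address (first field)
# of every Mellanox ConnectX-7 device, one per line.
connectx7_addrs() {
  awk '/Mellanox/ && /ConnectX-7/ { print $1 }'
}

# Demo using the line shown above:
echo '16:00.0 Infiniband controller: Mellanox Technologies MT2910 Family [ConnectX-7]' | connectx7_addrs

# On a real host:
#   lspci | connectx7_addrs
```

With a single card installed this prints one address, `16:00.0` in our case.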

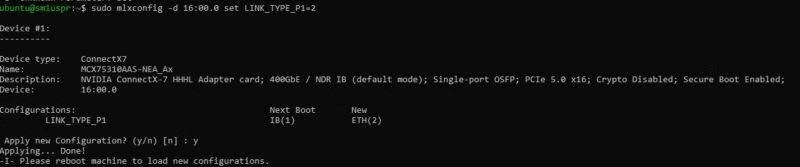

2. Use mlxconfig to change the ConnectX-7 device from NDR InfiniBand to Ethernet

Next, we will use the device’s PCI address to change the port’s link type from InfiniBand to Ethernet.

sudo mlxconfig -d 16:00.0 set LINK_TYPE_P1=2

NVIDIA ConnectX 7 MCX75310AAS NEAT Mlxconfig sets the link type to Ethernet

Here LINK_TYPE_P1=2 sets P1 (port 1) to 2 (Ethernet). The default, LINK_TYPE_P1=1, means that P1 (port 1) is set to 1 (NDR InfiniBand). If you need to change it back, simply run the same command with the value 1 and reboot again.
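Because the change is reversible, a small wrapper can make the two directions explicit. The sketch below only builds and prints the `mlxconfig` command for port 1 so that it can be reviewed before being run with `sudo`. The helper name `link_type_cmd` and the `ib`/`eth` keywords are inventions for this example.

```shell
#!/usr/bin/env bash
# Print the mlxconfig command that sets port 1 of a device to the given mode.
# Values follow the text above: 1 = InfiniBand, 2 = Ethernet.
link_type_cmd() {
  local dev="$1" mode="$2" value
  case "$mode" in
    ib)  value=1 ;;
    eth) value=2 ;;
    *) echo "mode must be ib or eth" >&2; return 1 ;;
  esac
  echo "mlxconfig -d $dev set LINK_TYPE_P1=$value"
}

link_type_cmd 16:00.0 eth   # prints: mlxconfig -d 16:00.0 set LINK_TYPE_P1=2
link_type_cmd 16:00.0 ib    # prints: mlxconfig -d 16:00.0 set LINK_TYPE_P1=1
```

Either way, the new link type only takes effect after the reboot in the next step.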

3. Reboot the system

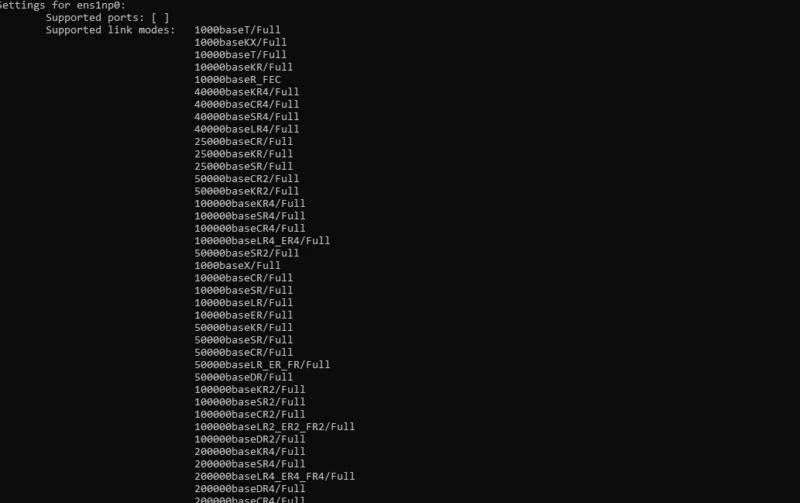

After a quick reboot, we now have a ConnectX-7 Ethernet adapter.

Numerous Ethernet speed options for the NVIDIA ConnectX 7 MT2910

This 400Gbps adapter still supports 1GbE speeds.
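To confirm what the link actually negotiated, `ethtool` reports the current speed in Mb/s on its `Speed:` line. The sketch below converts that to Gbps. The helper name `link_speed_gbps` is made up, the interface name is a placeholder, and the sample line is illustrative.

```shell
#!/usr/bin/env bash
# Reads `ethtool <iface>` output on stdin and prints the link speed in Gbps.
# A link that is down reports "Unknown!", which this sketch prints as 0.
link_speed_gbps() {
  awk '$1 == "Speed:" { sub(/Mb\/s$/, "", $2); print $2 / 1000 }'
}

# Demo with an illustrative Speed line:
printf '\tSpeed: 400000Mb/s\n' | link_speed_gbps   # prints 400

# On a real host, with the interface name substituted in:
#   ethtool <iface> | link_speed_gbps
```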

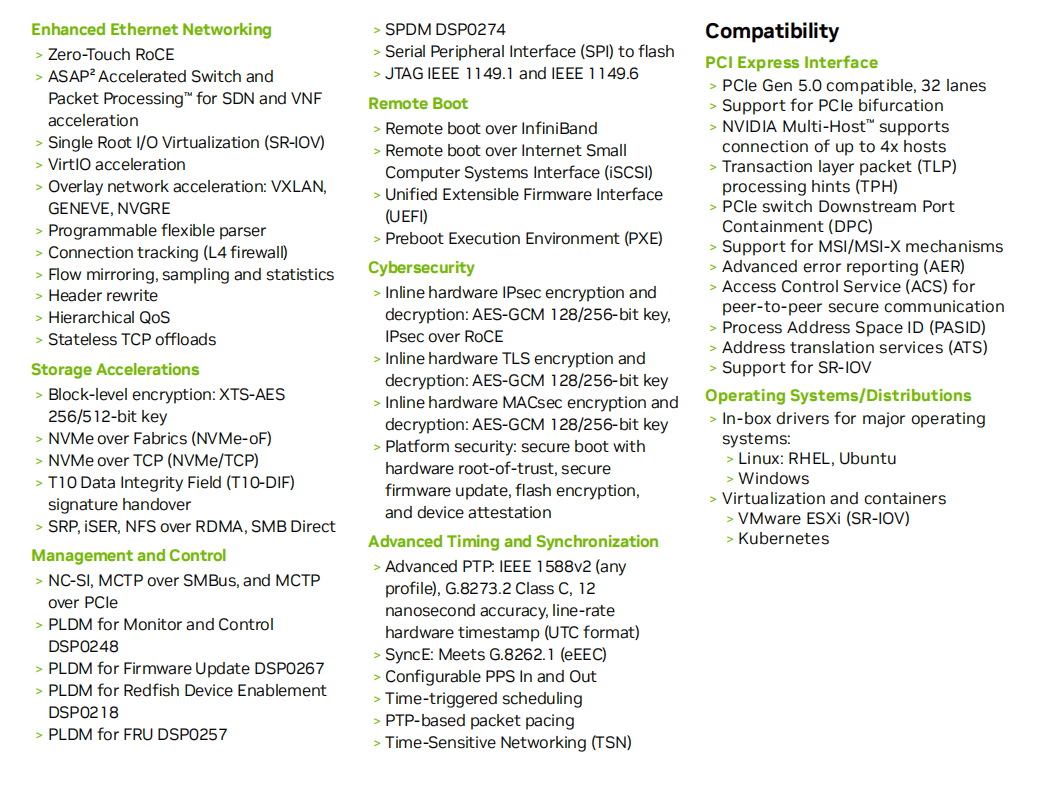

Features and Compatibility of NVIDIA ConnectX-7

Performance

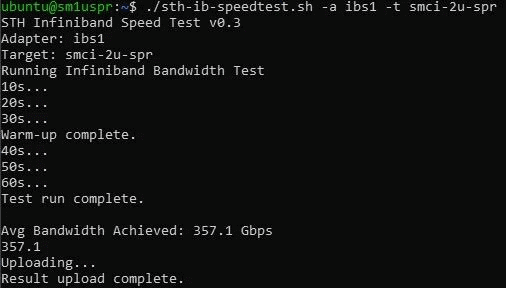

NVIDIA ConnectX 7 400Gbps NDR Infiniband

Of course, there are many other performance options available. We were able to reach between 300Gbps and 400Gbps on both InfiniBand and Ethernet. For Ethernet, it took some help to get to 400GbE speeds, since the link initially came up at only 200GbE, but we did not do much in the way of performance tuning.

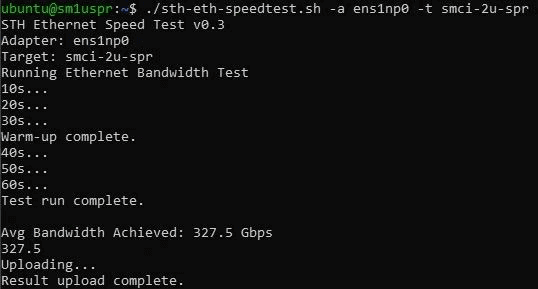

NVIDIA ConnectX 7 400GbE Performance

These speeds are in the 400Gbps range, well over three times what we are used to with 100Gbps adapters, and they arrived in a very short time. However, it is important to emphasize how much offloads matter at 400GbE speeds. At 25GbE and 100GbE speeds, we have seen devices like DPUs used to offload common networking tasks from CPUs. In the last three years, modern CPU cores have increased in speed by 20 to 40 percent, while network bandwidth has increased from 100GbE to 400GbE. As a result, technologies like RDMA offloads and OVS/checksum offloads have become critical to minimize CPU usage. This is why NVIDIA’s former Mellanox division is one of the few companies offering 400Gbps adapters today.
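The gap between those two growth rates can be put into numbers. The sketch below divides the bandwidth increase (100GbE to 400GbE) by the per-core speedup (1.2x to 1.4x) to estimate how much more network work each core would face without offloads. The helper name `per_core_gap` is invented, and this is back-of-the-envelope arithmetic, not a measurement.

```shell
#!/usr/bin/env bash
# Ratio of network bandwidth growth to CPU core speed growth.
# Arguments: old bandwidth, new bandwidth, CPU speedup factor.
per_core_gap() {
  awk -v old="$1" -v new="$2" -v cpu="$3" \
    'BEGIN { printf "%.1f\n", (new / old) / cpu }'
}

per_core_gap 100 400 1.2   # cores 20% faster: prints 3.3
per_core_gap 100 400 1.4   # cores 40% faster: prints 2.9
```

Under these assumptions, each core would have to handle roughly three times as much network traffic as before, which is the load that RDMA and checksum offloads take off the CPU.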
