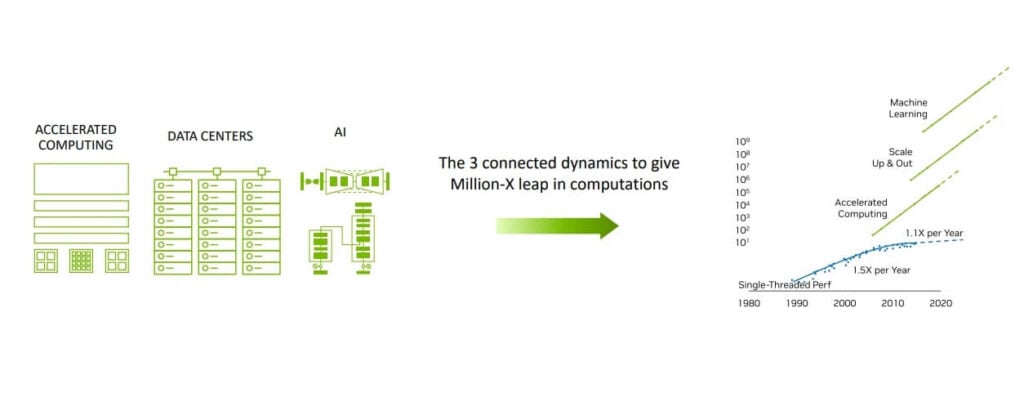

The Data Center Revolution of the AI Era

The deep integration of artificial intelligence, accelerated computing, and data centers is ushering in what may be termed the third scientific revolution. Modern AI models are growing in complexity at an exponential rate, and training models with hundreds of billions of parameters demands several orders of magnitude more computing power. These advances are critical for cutting-edge fields such as computational fluid dynamics, climate simulation, and genomic sequencing.

The Evolution of Data Centers

- Selene 2021: This system employed 4,480 A100 GPUs to achieve a computing performance of 3 exaFLOPS.

- EOS 2023: Upgraded to include 10,752 H100 GPUs, this configuration broke through the 10 exaFLOPS threshold.

- Next-Generation AI Factory: Plans include the deployment of 32,000 Blackwell GPUs, which will deliver a computing capability of 645 exaFLOPS and an enhanced bandwidth of 58,000 TB/s.

This dramatic progression has led to the emergence of a new breed of “AI factories”, which utilize high-density GPU clusters to perform real-time, large-scale AI computations, thereby driving transformative changes to the compute rental model.
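To put the three systems listed above on a common footing, the short sketch below divides each quoted aggregate figure by its GPU count. It uses only the numbers given in this article; the function and variable names are illustrative. The article does not state which numeric precision each exaFLOPS figure refers to, so the results are not necessarily like-for-like, and the EOS value is a lower bound because the text only says it exceeded 10 exaFLOPS.

```python
# Figures as quoted in this article: (GPU count, aggregate exaFLOPS).
SYSTEMS = {
    "Selene 2021": (4_480, 3.0),
    "EOS 2023": (10_752, 10.0),  # "broke through" 10 exaFLOPS, so a lower bound
    "Next-Generation AI Factory": (32_000, 645.0),
}


def per_gpu_petaflops(gpu_count: int, exaflops: float) -> float:
    """Spread the aggregate figure evenly over all GPUs, in petaFLOPS."""
    return exaflops * 1_000 / gpu_count


PER_GPU = {name: per_gpu_petaflops(*spec) for name, spec in SYSTEMS.items()}

for name, petaflops in PER_GPU.items():
    print(f"{name}: {petaflops:.2f} PFLOPS per GPU")
```

On these figures the per-GPU share rises from roughly 0.67 PFLOPS to about 20 PFLOPS, which is the jump that makes rack-level heat density the limiting factor discussed in the following sections.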

Limitations of Traditional Cooling Solutions

Presently, data centers predominantly rely on three air-cooling solutions:

Air-Cooled CRAC/CRAH Systems

- Applicable Scenario: Low-density racks (less than 5 kW).

- Architectural Characteristics: These systems are based on centralized cooling at the data center level, using underfloor air delivery.

- Energy Efficiency Constraints: Power Usage Effectiveness (PUE) figures typically exceed 1.5.

In-Row Cooling Units

- Applicable Scenario: Medium-density racks (between 5 and 15 kW).

- Technical Features: By creating separate hot and cold aisles, these systems employ row-level heat exchangers for more efficient heat dissipation.

- Upgrading Costs: They often require significant modifications to existing data center infrastructure.

Backplane (Rear-Door) Heat Exchangers

- Innovative Aspect: The cooling module is integrated directly into the back of the server rack and supports hot-swappable components.

- Limitation: This method can only dissipate up to 20 kW per rack.
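The density ranges quoted for the three air-cooling approaches can be read as a simple lookup. The sketch below encodes only the thresholds stated in this section (below 5 kW, 5 to 15 kW, up to 20 kW); the function name and the returned labels are illustrative, and treating everything above 20 kW as outside the air-cooled range is an assumption drawn from the stated 20 kW limit.

```python
def air_cooling_tier(rack_kw: float) -> str:
    """Map a rack power density (kW) to the air-cooling approach quoted for it."""
    if rack_kw < 0:
        raise ValueError("rack power cannot be negative")
    if rack_kw < 5:
        return "CRAC/CRAH"                 # low-density racks
    if rack_kw <= 15:
        return "in-row cooling"            # medium-density racks
    if rack_kw <= 20:
        return "backplane heat exchanger"  # stated ceiling of 20 kW per rack
    return "beyond air cooling"            # assumption: liquid cooling required


for load in (3, 12, 18, 120):
    print(f"{load} kW -> {air_cooling_tier(load)}")
```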

The Rise of Liquid Cooling Technology

With GPU clusters operating at 800 Gbps network bandwidth and per-device power consumption exceeding 800 W, traditional air-cooling methods have reached their physical limits. In response, NVIDIA has introduced three major liquid cooling solutions:

Liquid-to-Air (L2A) Side Cooling

- Transitional Approach: This solution is designed to be compatible with existing air-cooled data centers.

- Technical Highlights: Within a 2U space, it can provide a cooling capacity of 60 kW.

- Energy Efficiency: The power consumption of this cooling method represents only 4% of the overall cooling capacity.
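Read literally, the two figures above imply the following power draw for the cooling unit at full capacity. This is a worked reading of the quoted numbers, not a measured value; the symbols are introduced here for illustration.

```latex
P_{\text{cooling}} = 0.04 \times Q_{\text{capacity}} = 0.04 \times 60\ \text{kW} = 2.4\ \text{kW}
```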

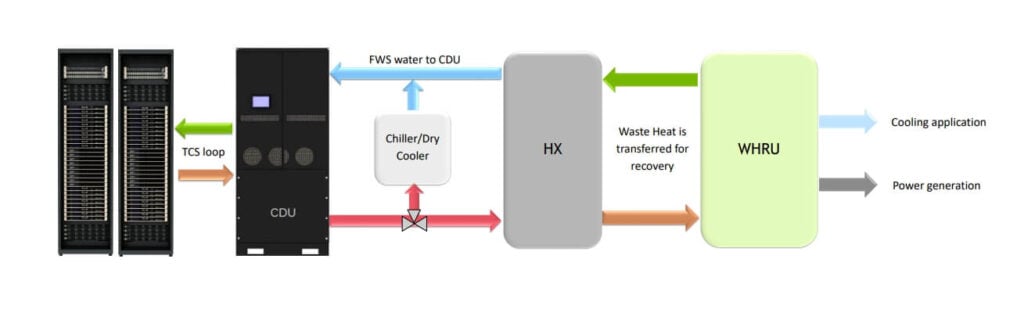

Liquid-to-Liquid CDU System (L2L)

- Revolutionary Breakthrough: Within a 4U space, this system achieves a cooling capacity of 2 MW.

- Efficiency: It is 6.5 times more energy efficient than traditional CRAC units.

- Operational Advantages: The single-phase flow design significantly lowers the risk of leakage.

Direct-Chip Liquid Cooling (DLC)

- Ultimate Solution: This method employs chip-level microchannel cooling.

- Performance: It supports ultra-high-density configurations, with the capability of dissipating in excess of 160 kW per rack.

- Sustainability: The system can achieve a PUE of less than 1.05.
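PUE is the ratio of total facility power to IT equipment power, so the non-IT overhead equals the IT load multiplied by (PUE - 1). The sketch below compares the PUE of 1.5 quoted earlier for CRAC/CRAH systems with the 1.05 quoted here. The 1 MW IT load is a hypothetical example value, and the function name is illustrative.

```python
def facility_overhead_kw(it_load_kw: float, pue: float) -> float:
    """Non-IT power (cooling, power delivery, etc.) implied by a given PUE."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_kw * (pue - 1.0)


IT_LOAD_KW = 1_000.0  # hypothetical 1 MW IT load, not a figure from the article

AIR_OVERHEAD_KW = facility_overhead_kw(IT_LOAD_KW, 1.5)   # CRAC/CRAH figure
DLC_OVERHEAD_KW = facility_overhead_kw(IT_LOAD_KW, 1.05)  # direct-chip figure

print(f"Air-cooled overhead: {AIR_OVERHEAD_KW:.0f} kW")
print(f"DLC overhead:        {DLC_OVERHEAD_KW:.0f} kW")
print(f"Reduction factor:    {AIR_OVERHEAD_KW / DLC_OVERHEAD_KW:.1f}x")
```

Under these assumptions the overhead falls from about 500 kW to about 50 kW for the same IT load.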

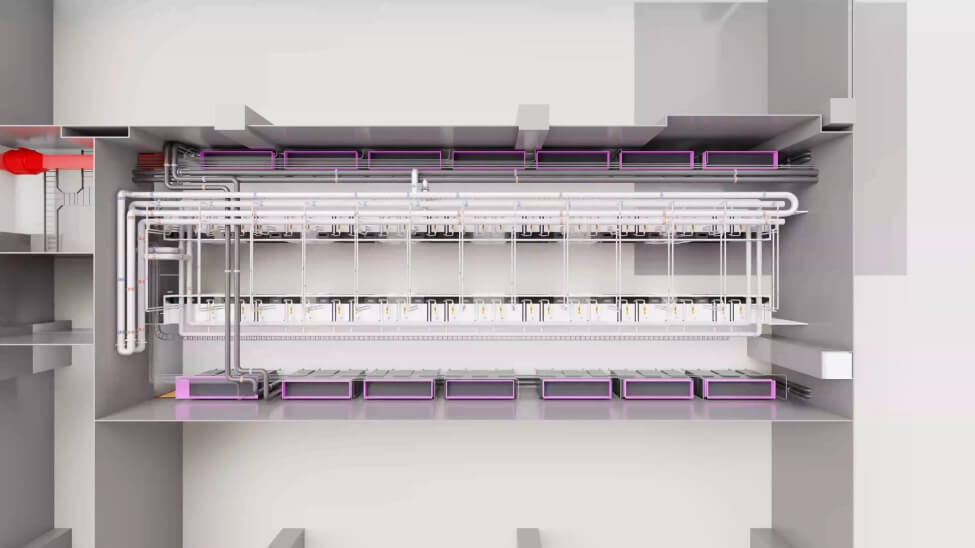

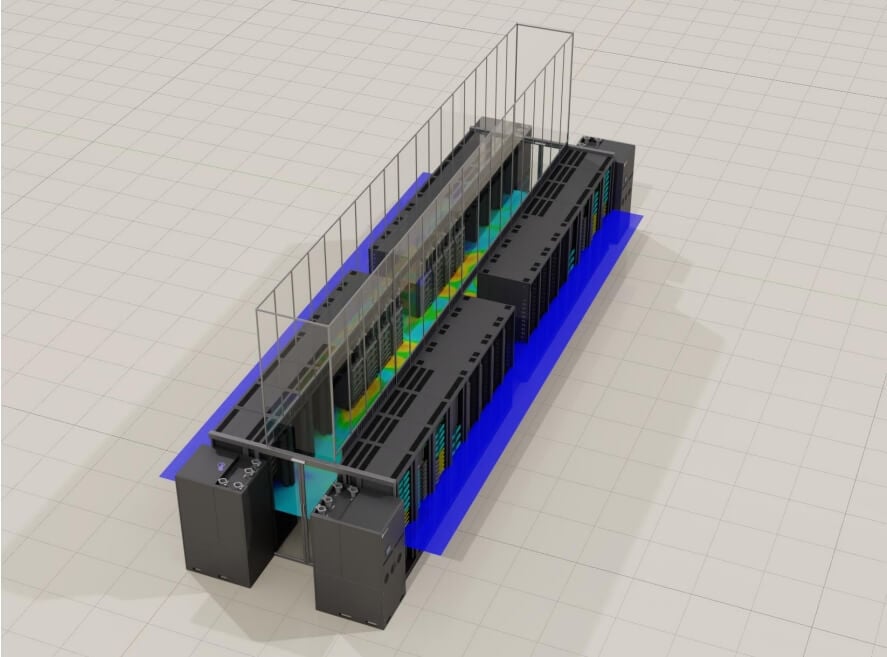

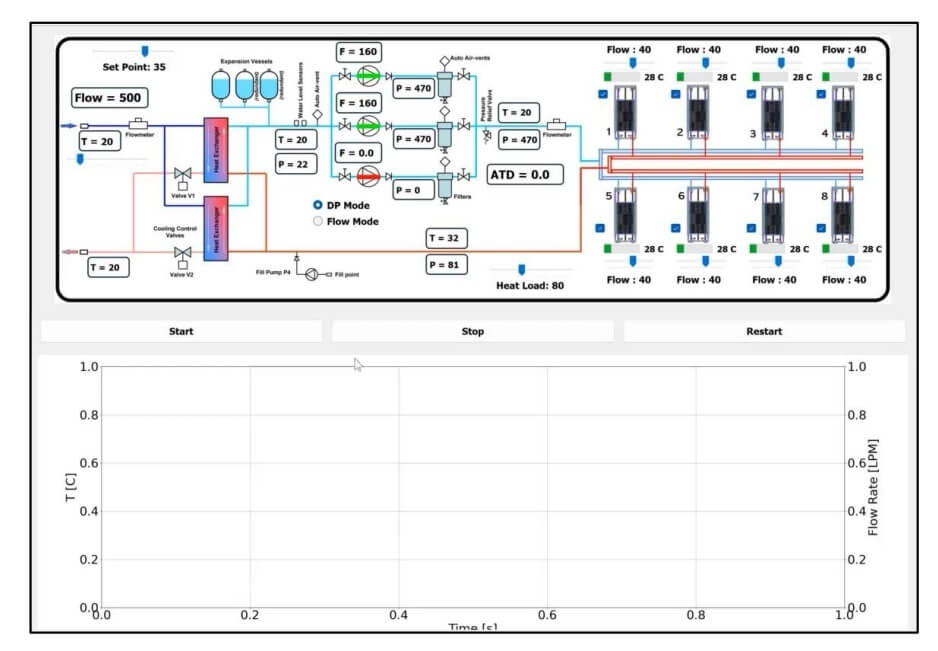

Digital Twin and Intelligent Operations

Leveraging the Omniverse platform, data center digital twins are constructed to enable:

- Real-Time Simulation: The integration of computational fluid dynamics (CFD) with Physics-Informed Neural Networks (PINN) allows for precise predictions of thermodynamic behavior.

- Failure Simulation: Extreme scenarios, such as power outages and leaks, can be modeled and evaluated.

- Intelligent Regulation: Dynamic flow distribution is managed through reinforcement learning algorithms.
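As a point of reference for the flow-distribution item above, the sketch below shows a plain proportional baseline, not a reinforcement learning controller. It sizes total coolant flow from the steady-state heat balance Q = m_dot * c_p * dT and then splits that flow across racks in proportion to heat load. The coolant (plain water), the 10 K temperature rise, the rack names, and the rack loads are all assumptions chosen for illustration.

```python
from typing import Dict

WATER_CP_J_PER_KG_K = 4186.0  # assumption: plain water as the coolant


def required_flow_kg_s(heat_w: float, delta_t_k: float,
                       cp: float = WATER_CP_J_PER_KG_K) -> float:
    """Mass flow needed to carry heat_w at a coolant temperature rise delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_w / (cp * delta_t_k)


def distribute_flow(total_flow_kg_s: float,
                    rack_heat_w: Dict[str, float]) -> Dict[str, float]:
    """Split the total flow across racks in proportion to their heat load."""
    total_heat = sum(rack_heat_w.values())
    if total_heat <= 0:
        return {rack: 0.0 for rack in rack_heat_w}
    return {rack: total_flow_kg_s * heat / total_heat
            for rack, heat in rack_heat_w.items()}


# Hypothetical rack heat loads in watts.
RACK_HEAT_W = {"rack-a": 160_000.0, "rack-b": 80_000.0, "rack-c": 40_000.0}
TOTAL_FLOW = required_flow_kg_s(sum(RACK_HEAT_W.values()), delta_t_k=10.0)
FLOWS = distribute_flow(TOTAL_FLOW, RACK_HEAT_W)

for rack, flow in FLOWS.items():
    print(f"{rack}: {flow:.2f} kg/s")
```

A learned controller would presumably adjust these shares in response to measured temperatures and pump cost rather than keeping them fixed; the proportional split is only the static starting point.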

Cutting-Edge Research Directions

Development of Novel Cooling Agents

- Nanofluids: Incorporating carbon nanotubes to enhance thermal conductivity.

- Eco-Friendly Refrigerants: Developing refrigerants with a Global Warming Potential (GWP) of less than 1 that do not contribute to ozone depletion.

- Biomimetic Design: Optimizing microchannel flow by replicating the structure of shark skin.

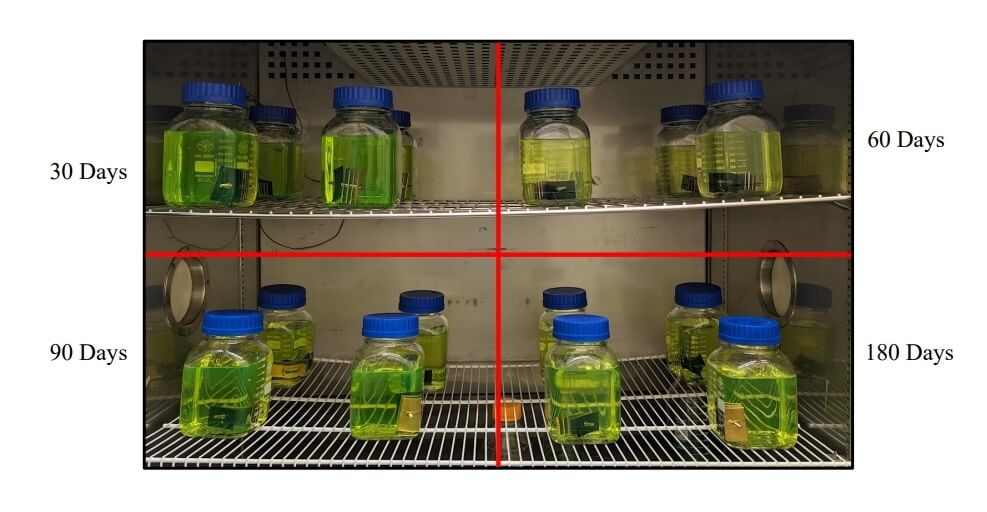

Reliability Verification Framework

- Corrosion Testing: Employing ASTM standards to evaluate the corrosion resistance of copper tubing.

- Biological Contamination Control: Establishing predictive models for the growth of anaerobic bacteria.

- Fluid Dynamic Experiments: Utilizing test platforms that simulate high-speed flushing at 6.5 m/s.
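To give a sense of the flow regime at the quoted 6.5 m/s flushing velocity, the sketch below computes the Reynolds number Re = rho * v * D / mu. Only the velocity comes from this article. The fluid (water near 20 °C) and the 10 mm tube inner diameter are assumptions for illustration, and the regime thresholds are the usual textbook values for pipe flow.

```python
def reynolds_number(density_kg_m3: float, velocity_m_s: float,
                    diameter_m: float, viscosity_pa_s: float) -> float:
    """Re = rho * v * D / mu for flow in a circular tube."""
    return density_kg_m3 * velocity_m_s * diameter_m / viscosity_pa_s


def flow_regime(re: float) -> str:
    """Classify pipe flow with the commonly used 2300 / 4000 thresholds."""
    if re < 2300:
        return "laminar"
    if re <= 4000:
        return "transitional"
    return "turbulent"


RE = reynolds_number(
    density_kg_m3=998.0,    # assumption: water near 20 degrees C
    velocity_m_s=6.5,       # flushing velocity quoted in the article
    diameter_m=0.010,       # assumption: 10 mm inner diameter
    viscosity_pa_s=1.0e-3,  # assumption: water near 20 degrees C
)

print(f"Re = {RE:.0f} ({flow_regime(RE)})")
```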

Sustainable Development Initiatives

Waste Heat Recovery Projects

- In collaboration with the Massachusetts Institute of Technology (MIT), adsorption-based cooling units are being developed to recycle approximately 15% of the waste heat generated by IT equipment.

- Goal: To build a zero-carbon ecosystem for data centers.

ARPA-E COOLERCHIPS Program

- NVIDIA's project received a $5 million grant from the U.S. Department of Energy's COOLERCHIPS program, which has a total funding pool of $40 million.

- Core Objectives: achieve a PUE of less than 1.05; attain a power density in excess of 160 kW per rack; and support containerized deployment within ISO-standard 40-foot container dimensions.

Future Prospects

With the mass production of Grace Hopper superchips, data centers are anticipated to evolve along three major trajectories:

- Widespread Adoption of Liquid Cooling: By 2025, liquid-cooled servers are expected to constitute over 30% of all deployments.

- Edge Intelligence: Mini liquid-cooling nodes are projected to empower 5G base stations.

- Energy Autonomy: Data centers utilizing liquid cooling are expected to eventually operate on 100% renewable energy.

This silent revolution in cooling technology is reshaping the foundational architecture of digital infrastructure. It signals a future in which computing is not only more efficient and intelligent but also greener and more sustainable.
