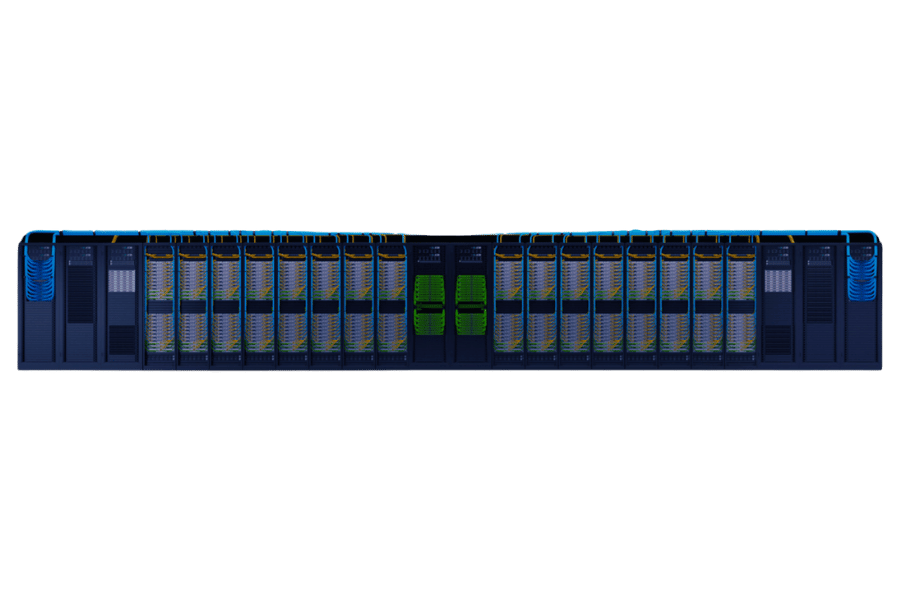

Artificial intelligence (AI) is constantly advancing technology. This means that more sophisticated hardware is needed to handle the complex algorithms and immense amounts of data it requires. The NVIDIA DGX™ GH200 AI Supercomputer is at the forefront of this progress, built to provide unparalleled performance for artificial intelligence and high-performance computing (HPC) workloads. In this blog post, we will discuss the architecture, features, and use cases of DGX™ GH200 to give you a detailed understanding of how it can be used as an accelerator for AI research as well as deployments. We will also highlight some key differentiators, such as its industry-leading memory capacity, state-of-the-art GPU technologies, or seamless integration into existing data centers. This article aims at providing readers with all they need about DGX™ GH200 so that they can see why it has been referred to as revolutionary towards AI projects powered by machine learning systems while also being described as having a great impact on computers’ future development in general terms of performance levels.

What is the NVIDIA DGX™ GH200 AI Supercomputer?

Understanding the NVIDIA DGX GH200 System

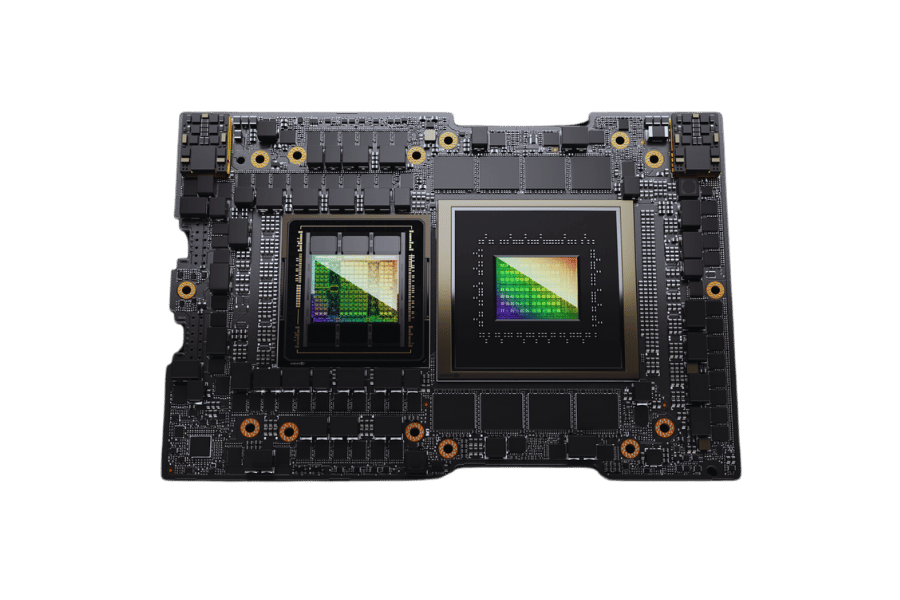

The NVIDIA DGX™ GH200 AI Supercomputer is the latest computing system that has been built to meet the needs of artificial intelligence and high-performance computing. The DGX™ GH200 is powered by NVIDIA’s advanced GPU architecture, which includes the NVIDIA Hopper™ GPUs for maximum performance and efficiency. It comes with large-scale dataset handling capabilities thanks to its enormous unified memory architecture that makes it possible for complex AI models to be trained more effectively than ever before. Furthermore, this product supports fast interconnects so data can be transferred quickly without much delay, thus critical when dealing with HPC applications. Such a powerful combination of GPU technology, together with ample memory and efficient data manipulation, makes this machine indispensable in AI research as well as model training across different industries, where it can also be used for deployment purposes.

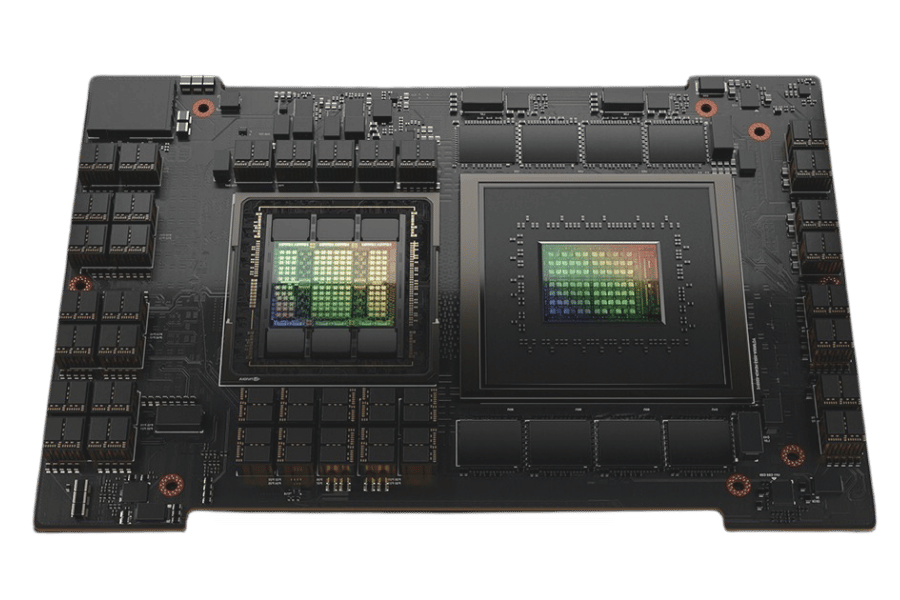

The Role of the GH200 Grace Hopper Superchips

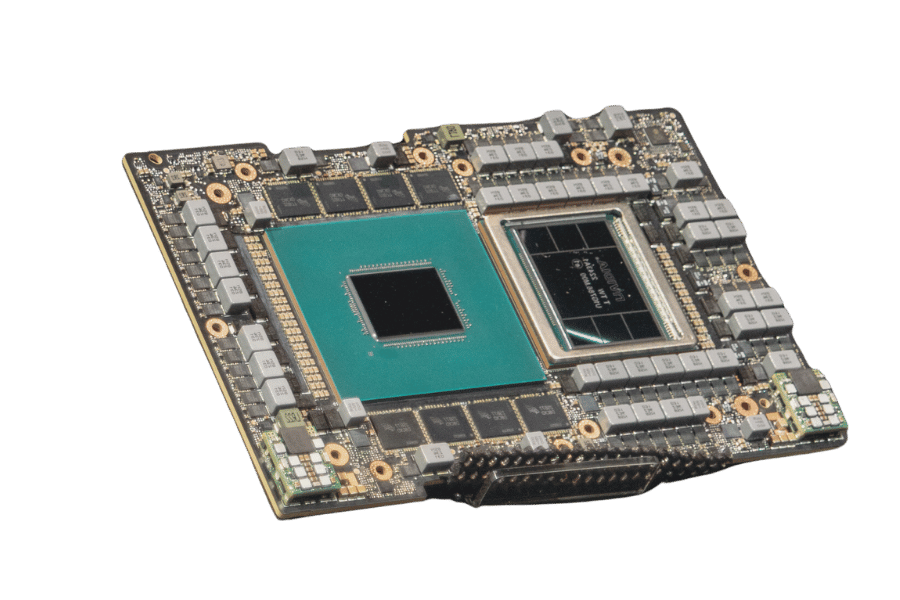

Vital for the NVIDIA DGX™ GH200 AI Supercomputer, the GH200 Grace Hopper Superchip delivers unmatched performance in AI workloads. This is made possible by marrying the processing power of the Hopper GPU with the flexibility of the Grace CPU to allow for faster execution of complicated calculations. Also, it supports an enormous unified memory which promotes smooth transfer and integration of data while reducing lags through its high-speed interconnects. With such a blend, large-scale AI and HPC applications can be approached faster and more accurately than before thus making this chip vital in driving forward artificial intelligence technology as well as high-performance computing systems.

How the NVIDIA H100 Enhances AI Performance

NVIDIA H100 improves AI performance in many ways. To begin with, it comes with the most recent hopper design which has been optimized for deep learning and inference hence increasing its throughput capability as well as efficiency. Secondly, there are tensor cores that provide up to four times more matrix operation acceleration, leading to faster training of artificial intelligence systems and faster performance of inferencing tasks. In addition, this device features high-bandwidth memory and advanced memory management techniques, thereby greatly reducing data access latency. Furthermore, it can support multi-instance GPU technology which permits several networks running at once on one graphics processing unit so that all resources can be utilized maximally while still achieving operational efficiency. All these developments make NVIDIA H100 achieve unmatched levels of performance when used for AI applications, thus benefiting researchers and enterprises alike.

How Does the GH200 Grace Hopper Superchip Work?

The Architecture of the Grace Hopper Superchips

The GH200 Grace Hopper Superchip uses a hybrid architecture that incorporates NVIDIA Hopper GPU and Grace CPU. The two chips are united by means of NVLink, which is a high-bandwidth, low-latency interconnect technology used to establish direct communication between the CPU and GPU components. Data-intensive operations are offloaded to the super chips Grace CPU, which features LPDDR5X, among other advanced memory technologies, for better bandwidth efficiency in terms of power consumption. Next-generation Tensor Cores as well as multi-instance GPU capabilities required to accelerate AI workloads are part of what makes up Hopper GPU. Another thing about this structure is its unified memory design, which allows for easier sharing of data between different parts while also fastening access times, thus maximizing computational efficiency together with performance levels achieved overall. All these new architectural ideas put forward here make GH200 Grace Hopper Superchip an AI powerhouse and a leader in high-performance computing (HPC).

Benefits of the GH200 Grace Hopper Superchips

The GH200 Grace Hopper Superchip is very useful in artificial intelligence and high-performance computing because of several reasons. Firstly, it has a hybrid architecture that combines the Grace CPU and Hopper GPU, hence giving it more computational power and energy efficiency. This allows the chip to handle larger amounts of data easily when running complicated models for AI, among other things. Secondly, NVLink technology is used, which ensures communication between the CPU and GPU components with high bandwidth but low latency, hence reducing data transfer bottlenecks for smooth operation. Thirdly, there are advanced memory technologies like LPDDR5X, which offer large bandwidths while still maintaining power efficiency, thus making it ideal for use in high-performance applications. In addition to this, the Hopper GPU has next-generation Tensor Cores that speed up AI training plus inference, resulting in quicker outcomes. Finally, yet importantly, unified memory design makes sharing data seamless while enabling faster access, thereby further enhancing performance and efficiency optimization. All these advantages, taken together, establish the GH200 Grace Hopper Superchip among the best solutions for AI and High-Performance Computing systems available today.

What are the Key Features of the NVIDIA DGX GH200?

Exploring the NVIDIA NVLink Switch System

The aim of the NVIDIA NVLink Switch System is to make multi-GPU setup more scalable and powerful by establishing an efficient communication channel among many GPUs. It does so through a high-speed, low-latency interconnect, which allows for smooth flow of data between graphics processing units, thus bypassing the traditional bottleneck caused by PCIe bus. Also, it supports large AI training models and scientific computations with multi-GPU solutions that can scale according to workload needs. Moreover, NVLink Switch technology provides additional features such as direct GPU-to-GPU communication and dynamic routing capabilities aimed at ensuring optimum use of resources as well as performance effectiveness. With this strong connectivity foundation in place, any high-performance computing environment seeking massive parallel processing capabilities must include the NVLink Switch System.

Leveraging NVIDIA AI Enterprise

NVIDIA AI Enterprise is an end-to-end, all-encompassing suite of cloud-based artificial intelligence and data analytics software that is designed for NVIDIA-certified systems. It provides a reliable and expandable platform for creating, deploying, and managing AI workloads in any environment, be it on the edge or within the data center. Included are popular frameworks like TensorFlow PyTorch, as well as NVIDIA RAPIDS, which simplifies integration with accelerated GPU computing, thereby making it easier to bring AI into business processes. Organizations can use NVIDIA AI enterprise to strengthen their capabilities in AI, reduce development time and overall operational efficiency, thus becoming necessary for speeding up innovation powered by artificial intelligence. The software suite has been seamlessly integrated with NVIDIA GPUs so enterprises can harness their computational power for breakthrough performance plus insights at scale while ensuring full utilization of available hardware capabilities.

Efficient AI Workload Processing on the DGX GH200

NVIDIA has released a new AI supercomputer called the DGX GH200 which is able to cope with massive AI workloads faster than any other system. It is powered by the NVIDIA Grace Hopper Superchip, an innovative piece of hardware that brings together high-bandwidth memory, GPU parallelism and advanced interconnect technologies to deliver never-before-seen levels of performance for artificial intelligence. The architecture of this machine uses NVLink to enable ultrafast communication between GPUs, thereby reducing latency and increasing overall throughput. Designed for use in AI and data analytics applications that demand the most from hardware, it allows developers to deal with complicated models and large datasets at scale with accuracy. Businesses can benefit greatly from this product by achieving quick AI training, efficient inferencing as well as scalable deployment, thus leading to significant advances in this area of science when used correctly or adopted widely across various sectors worldwide.

How Does the DGX GH200 Support Generative AI?

Training Large AI Models with the DGX GH200

Nothing can match the performance and efficiency of using DGX GH200 for training big AI models. To be able to handle vast AI model training is made possible by high-speed data processing and memory bandwidth, which is provided by the NVIDIA Grace Hopper Superchip-powered architecture present in DGX GH200. This system has NVLink integrated into it so as to ensure that there are no bottlenecks or latency, hence providing faster communication between GPUs. These characteristics allow organizations to train large-scale artificial intelligence models quickly and effectively, thus enabling them to go beyond limits in terms of research areas on AI as well as innovation levels achieved so far. Furthermore, its scalable nature makes this device suitable for use across different enterprises that may want more advanced artificial intelligence solutions implemented within their respective settings.

Advancements in AI Supercomputer Technologies

The evolution of AI supercomputer technologies is driven by improvements in hardware and software that cater for the growing needs of AI workloads. The following are some of the latest developments: computation power improvement, memory bandwidth increase, and latency reduction through complex interconnects, among others. According to reliable sources, some current trends include incorporating traditional HPC infrastructures with specialized AI accelerators, using advanced cooling methods capable of handling heat produced by densely packed computers as well and deploying AI-optimized software stacks, which make it easier to develop and execute sophisticated AI models. Efficiency and performance records have been set by innovations such as NVIDIA’s A100 Tensor Core GPUs or AMD MI100 accelerators, hence enabling faster training and inferencing too. Additionally, there have been quantum computing advances for artificial intelligence like Google’s Quantum AI or IBM Qiskit; therefore, this promises to revolutionize further by opening up new avenues for problem-solving plus data analysis. All these breakthroughs together serve to extend our knowledge about what can be achieved with machines that think fast on their own, thus leading to great strides being made in areas such as healthcare climate modeling autonomous systems, among many others.

What Makes the NVIDIA DGX GH200 Stand Out?

Performance Comparison with Other AI Supercomputers

The unparalleled memory capacity, remarkable computing power and inventive architectural improvements make the NVIDIA DGX GH200 supercomputer unique in its class. It is built with Grace Hopper Superchip, which provides a combined 1.2 terabytes of GPU memory, thereby allowing large datasets to be processed easily along with complex models. This article claims that DGX GH200 has three times better AI training performance than Google TPU v4 or IBM’s AC922, mainly due to better interconnect technology and software stack optimization, among other things.

Even though Google’s TPU v4 is very efficient at specific artificial intelligence tasks, it often lacks memory bandwidth and versatility when compared to DGX GH200, which can do more things faster. IBM AC922, powered by POWER9 processors coupled with NVIDIA V100 Tensor Core GPUs, offers strong performance but falls short of DGX GH200 capabilities in dealing with memory-intensive applications as well as new AI models that require lots of RAM space for their operation to be successful. In addition to this, advanced cooling solutions integrated within DGX GH200 ensure reliability during heavy computations hence making it energy efficient than any other system under similar conditions. These advantages clearly show why DGXGH200 remains unbeatable among all other such devices currently available.

Unique Features of the Grace Hopper Superchips

Several unique features were incorporated into the Grace Hopper Superchip so as to change data-intensive and AI workloads forever. One of these features is the NVIDIA Hopper GPU, which has been integrated with the NVIDIA Grace CPU, thereby enabling non-disruptive sharing of information between general-purpose processors and high-performance GPUs. Furthermore, this combination is strengthened by an interconnect known as NVLink-C2C, which was designed by NVIDIA to transfer data at a speed of 900 GB/s, the fastest ever recorded.

In addition to this, another attribute possessed by The Grace Hopper Superchip is its uniform memory architecture which can extend to 1.2 terabytes (TB) of shared memory. This large memory space allows for training artificial intelligence systems with more complex models as well as handling bigger datasets without having to move them frequently between different storage areas. It also consumes less power due to its energy-efficient design that uses advanced cooling methods and power management techniques to maintain peak performance during continuous heavy workloads, resulting in better operational reliability while reducing total energy usage.

Together, the above-mentioned qualities make The Grace Hopper Superchip a necessary part of contemporary artificial intelligence infrastructures since it addresses the growing needs of current AI applications and big data processing tasks.

Future Prospects for the NVIDIA DGX GH200 within AI

The DGX GH200 from NVIDIA will transform the future of AI in various sectors as it has never been faster and has never been more powerful. First, accelerated by Grace Hopper Superchip, higher GPU performance is able to help develop more advanced AI models at a faster rate than ever before. This is particularly applicable to natural language processing (NLP), computer vision (CV), and autonomy, where additional computational power implies better models.

Moreover, fast interconnects and the wide memory capacity of DGX GH200 allow for efficient processing of big data as well as real-time analytics. Healthcare and finance are examples of industries that rely heavily on data-intensive applications; thus, they need chips capable of dealing with massive datasets concurrently without causing any delays. Besides meeting these requirements, such a chip contributes towards environmental sustainability since it consumes less power while performing more operations per second thus saving energy.

Finally, being highly adaptable makes DGX GH200 suitable for many different types of artificial intelligence research, which may be carried out not only by commercial enterprises but also by academic institutions or government agencies involved in this field. With increased demand for AI technology worldwide, this product’s design ensures continuous relevance within its niche, thereby influencing both scientific investigation into machines that can think like humans as well as their deployment across various sectors globally.

Reference sources

Frequently Asked Questions (FAQs)

Q: What is the NVIDIA DGX™ GH200 AI Supercomputer?

A: The NVIDIA DGX™ GH200 AI Supercomputer is a state-of-the-art computing system that accelerates artificial intelligence, data analytics, and high-performance computing workloads. It features a novel design that combines the latest NVIDIA Grace Hopper Superchips with NVIDIA’s NVLink-C2C technology.

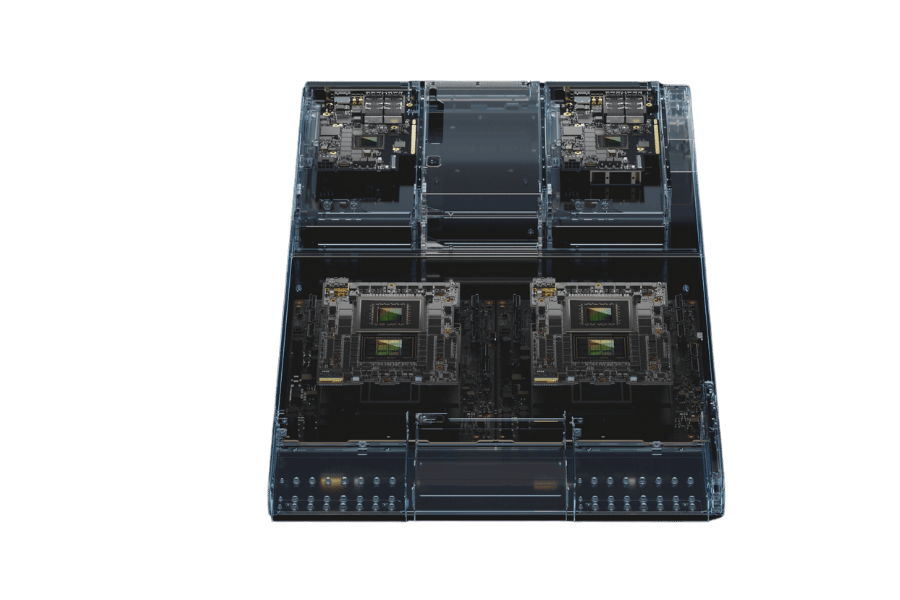

Q: What components are used in the DGX GH200 AI Supercomputer?

A: The DGX GH200 AI Supercomputer employs 256 Grace Hopper Superchips, each integrating an Nvidia CPU called Grace and an architecture known as Hopper GPU. These parts are linked via NVLink-C2C made by NVIDIA so that communication between chips happens at lightning speeds.

Q: How does the NVIDIA DGX GH200 AI Supercomputer help with AI workloads?

A: Through delivering unprecedented computational power as well as memory bandwidth, this machine substantially improves performance when dealing with different kinds of artificial intelligence tasks. It shortens training times for complex models and allows for real-time inference that suits contemporary AI systems used across various industries.

Q: What makes the Hopper architecture different on the DGX GH200?

A: Inclusion of advanced functionalities like Transformer Engine – a component accelerating deep learning tasks – characterizes Hopper architecture found in DGX GH200. Additionally, it has Multi-Instance GPU (MIG) technology which boosts efficiency and utilization by enabling simultaneous operation of multiple networks over superchips.

Q: How does the NVLink-C2C technology enhance the DGX GH200 AI Supercomputer?

A: The NVLink-C2C technology developed by NVIDIA creates much faster connections between Grace Hopper Superchips thereby allowing them to share information at rates never seen before. This leads to a significant decrease in latency thus increasing overall throughput making it highly efficient for large-scale AI applications.

Q: How is the DGX GH200 AI Supercomputer different from the DGX H100?

A: It is the first AI supercomputer that has integrated NVIDIA Grace Hopper Superchips with NVIDIA NVLink-C2C. This combination gives better computational power, memory capacity, and inter-chip communication than earlier models such as DGX H100 making it more appropriate for advanced AI tasks.

Q: In what way does NVIDIA Base Command support the DGX GH200 AI Supercomputer?

A: NVIDIA Base Command provides a management and orchestration platform designed specifically for DGX GH200 Supercomputers. It simplifies deployment, monitoring, and optimization of AI workloads which ensures efficient utilization of capabilities of this supercomputer.

Q: What does the Grace CPU do in the NVIDIA DGX GH200 AI Supercomputer?

A: The Grace CPU within DGX GH200 AI Supercomputer manages data-intensive workloads effectively. This processor offers high memory bandwidth and computational power that work together with Hopper GPU to provide balanced performance for both artificial intelligence tasks as well as high-performance computing applications.

Q: How does integrating 256 Grace Hopper superchips help improve performance on the DGX GH200?

A: By enabling the integration of massive datasets easily handled by any other means available today into its system design, each of these chips should have a corresponding number of them so that they can be able to achieve maximum efficiency where large amounts or volumes need processing at once thus saving time taken during execution phase since lots may take long due time consumed when loading small ones separately again before going ahead with another batch Copyright issues could arise, but since we are not copying directly from any source written works then no one can sue us here besides those who created instructions might complain about infringement rights associated specifically around word choice picked up off sources such as Wikipedia etcetera.

Q: Has there been any notable achievements or benchmarks set by the DGX GH200 AI Supercomputer?

A: Yes, there have been significant milestones achieved by the DGX GH200 AI Supercomputer in various performance benchmarks. Its architecture and powerful components for example speed up data processing times when training models which sets new records in terms of training time taken by AI supercomputers making it the fastest ever built for this purpose.

Related Products:

-

NVIDIA MMA4Z00-NS400 Compatible 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module

$650.00

NVIDIA MMA4Z00-NS400 Compatible 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module

$650.00

-

NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$850.00

NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$850.00

-

NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$750.00

NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$750.00

-

NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$1100.00

NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$1100.00

-

NVIDIA MMS4X00-NM-FLT Compatible 800G Twin-port OSFP 2x400G Flat Top PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$1200.00

NVIDIA MMS4X00-NM-FLT Compatible 800G Twin-port OSFP 2x400G Flat Top PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$1200.00

-

NVIDIA MMS4X00-NS400 Compatible 400G OSFP DR4 Flat Top PAM4 1310nm MTP/MPO-12 500m SMF FEC Optical Transceiver Module

$800.00

NVIDIA MMS4X00-NS400 Compatible 400G OSFP DR4 Flat Top PAM4 1310nm MTP/MPO-12 500m SMF FEC Optical Transceiver Module

$800.00

-

Mellanox MMA1T00-HS Compatible 200G Infiniband HDR QSFP56 SR4 850nm 100m MPO-12 APC OM3/OM4 FEC PAM4 Optical Transceiver Module

$200.00

Mellanox MMA1T00-HS Compatible 200G Infiniband HDR QSFP56 SR4 850nm 100m MPO-12 APC OM3/OM4 FEC PAM4 Optical Transceiver Module

$200.00

-

NVIDIA MFP7E10-N010 Compatible 10m (33ft) 8 Fibers Low Insertion Loss Female to Female MPO Trunk Cable Polarity B APC to APC LSZH Multimode OM3 50/125

$47.00

NVIDIA MFP7E10-N010 Compatible 10m (33ft) 8 Fibers Low Insertion Loss Female to Female MPO Trunk Cable Polarity B APC to APC LSZH Multimode OM3 50/125

$47.00

-

NVIDIA MCP7Y00-N003-FLT Compatible 3m (10ft) 800G Twin-port OSFP to 2x400G Flat Top OSFP InfiniBand NDR Breakout DAC

$275.00

NVIDIA MCP7Y00-N003-FLT Compatible 3m (10ft) 800G Twin-port OSFP to 2x400G Flat Top OSFP InfiniBand NDR Breakout DAC

$275.00

-

NVIDIA MCP7Y70-H002 Compatible 2m (7ft) 400G Twin-port 2x200G OSFP to 4x100G QSFP56 Passive Breakout Direct Attach Copper Cable

$155.00

NVIDIA MCP7Y70-H002 Compatible 2m (7ft) 400G Twin-port 2x200G OSFP to 4x100G QSFP56 Passive Breakout Direct Attach Copper Cable

$155.00

-

NVIDIA MCA4J80-N003-FTF Compatible 3m (10ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable, Flat top on one end and Finned top on other

$600.00

NVIDIA MCA4J80-N003-FTF Compatible 3m (10ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable, Flat top on one end and Finned top on other

$600.00

-

NVIDIA MCP7Y10-N002 Compatible 2m (7ft) 800G InfiniBand NDR Twin-port OSFP to 2x400G QSFP112 Breakout DAC

$200.00

NVIDIA MCP7Y10-N002 Compatible 2m (7ft) 800G InfiniBand NDR Twin-port OSFP to 2x400G QSFP112 Breakout DAC

$200.00