When talking about the Internet, people usually compare it to a highway. The network card is equivalent to the gate for entering and exiting the highway, the data packet is equivalent to the car that transports the data, and the traffic regulations are the “transmission protocols.”

Just as highways get jammed, the data highway of the network can also become congested, especially now that the rapid development of artificial intelligence is placing higher demands on data center networks.

Today we will talk about what kind of network can meet the needs of the AI era.

Why is the Current Internet not Working?

Networks have been evolving for decades, so why have they become such a frequent topic recently? Why has the traditional network become the bottleneck of modern data centers?

Undoubtedly, this is closely related to compute-intensive scenarios such as AI and machine learning, whose demand for computing power keeps rising. According to IDC statistics, the global demand for computing power doubles every 3.5 months, far outpacing the growth of available computing power. Meeting this demand takes more than adding computing power: its utilization efficiency and communication performance must improve as well. As one of the three core components of the data center, the network therefore faces new challenges.
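To make the doubling figure concrete, here is a minimal Python sketch that converts a doubling period into a yearly growth factor. The 3.5-month period is the figure quoted above; the 24-month period is a hypothetical value used only for contrast, not a number from this article.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Growth multiple over 12 months for a quantity that doubles every `doubling_months`."""
    return 2 ** (12 / doubling_months)

# 3.5 months is the doubling period quoted in the text.
demand = annual_growth_factor(3.5)
# 24 months is a hypothetical doubling period for supply, for contrast only.
supply = annual_growth_factor(24)

print(f"Demand grows about {demand:.1f}x per year")
print(f"A 24-month doubling period gives about {supply:.2f}x per year")
```

A 3.5-month doubling period compounds to roughly a tenfold increase every year, which is why efficiency and communication performance matter as much as raw computing power.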

This is because, in a traditional von Neumann architecture, the network only moves data, while computation is centered on the CPU or GPU. When large, complex models such as ChatGPT and BERT distribute their workloads across many GPUs for parallel computing, they generate large bursts of gradient traffic, which can easily congest the network.

This is an inherent drawback of the traditional von Neumann architecture. In the AI era, with its ever-growing computing power, neither increasing bandwidth nor reducing latency is enough to solve this network problem.
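The size of these gradient bursts can be estimated with a short sketch. The article does not say which collective algorithm is used; ring all-reduce is assumed here as one common choice, in which each GPU sends 2(N-1)/N times the gradient size per synchronization. The model size and GPU count below are hypothetical.

```python
def ring_allreduce_bytes_per_gpu(gradient_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends during one ring all-reduce of `gradient_bytes` of gradients."""
    if num_gpus < 2:
        return 0.0  # a single GPU has nobody to exchange gradients with
    return 2 * (num_gpus - 1) / num_gpus * gradient_bytes

# Hypothetical example: 1 billion parameters stored as 2-byte values, 8 GPUs.
gradient_bytes = 1_000_000_000 * 2
sent = ring_allreduce_bytes_per_gpu(gradient_bytes, 8)
print(f"Each GPU sends {sent / 1e9:.2f} GB per training step")
```

Because every GPU reaches the synchronization point at about the same time, this traffic hits the network as a burst rather than a steady stream.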

So how can we continue to improve the performance of data center networks?

Are there any New Ways to Improve Network Performance?

There are two traditional ways to improve network performance: increasing bandwidth and reducing latency. Both are easy to understand. As with transporting goods on a highway, you can either widen the road or raise the speed limit to relieve congestion.

In daily life, we use the same two methods when our Internet connection is slow: we either pay more for higher bandwidth or buy better-performing network equipment.

However, these two methods can only improve the network up to a point. Once the bandwidth and the equipment reach a certain level, it becomes difficult to improve the actual performance of the network any further. This is the main reason the network has become a bottleneck in the current AI era.
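The diminishing returns can be illustrated with a deliberately simple model in which transfer time is a fixed latency plus the time to push the bytes onto the link. This is a sketch under that assumption only: the 10-microsecond latency, the link speeds, and the message sizes are hypothetical, and real networks add queuing and congestion effects that the model ignores.

```python
def transfer_time_s(message_bytes: float, bandwidth_gbps: float, latency_us: float) -> float:
    """Simple model: fixed one-way latency plus serialization time on the link."""
    serialization_s = message_bytes * 8 / (bandwidth_gbps * 1e9)
    return latency_us * 1e-6 + serialization_s

# Hypothetical numbers, chosen only for illustration.
LATENCY_US = 10.0
for label, size in [("4 KiB message", 4096), ("1 GB transfer", 1e9)]:
    t100 = transfer_time_s(size, 100, LATENCY_US)
    t400 = transfer_time_s(size, 400, LATENCY_US)
    print(f"{label}: 100 Gb/s -> 400 Gb/s speeds it up {t100 / t400:.2f}x")
```

In this model the small message barely benefits from four times the bandwidth because the fixed latency dominates, while the bulk transfer speeds up almost fourfold.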

Is there a better solution to improve the network?

The answer is yes. To accelerate model training and process large data sets, NVIDIA, a world leader in AI computing power, identified the bottleneck of traditional networks long ago. Its answer is a new path: deploy computing around data. Simply put, computing happens wherever the data is: when the data is on the GPU, the computing is on the GPU; when the data is in transit on the network, the computing is in the network.

In short, the network should not only guarantee data transmission performance but also take on some of the data processing.
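A toy model shows why computing in the network can relieve congestion. This is only an illustrative sketch, not NVIDIA's implementation: the function names are hypothetical, and the "switch" is a plain Python function. It compares how many gradient vectors must cross the uplink when the switch merely forwards data and when the switch sums the vectors itself.

```python
from typing import List, Tuple

Vector = List[float]

def elementwise_sum(vectors: List[Vector]) -> Vector:
    """Add the vectors position by position."""
    return [sum(values) for values in zip(*vectors)]

def host_aggregation(worker_grads: List[Vector]) -> Tuple[Vector, int]:
    """Switch only forwards: every worker's vector crosses the uplink to one host."""
    uplink_vectors = len(worker_grads)
    return elementwise_sum(worker_grads), uplink_vectors

def in_network_aggregation(worker_grads: List[Vector]) -> Tuple[Vector, int]:
    """Switch sums the vectors itself and forwards a single result on the uplink."""
    reduced_at_switch = elementwise_sum(worker_grads)
    return reduced_at_switch, 1

grads = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]  # four hypothetical workers
print(host_aggregation(grads))
print(in_network_aggregation(grads))
```

Both paths produce the same sum, but in this model the uplink carries four vectors in the first case and one in the second, and the gap widens as workers are added.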

This new architecture lets the CPU and GPU focus on the computing tasks they are good at and distributes some infrastructure workloads to nodes in the network, relieving bottlenecks and packet loss in network transmission. Reportedly, this approach can reduce network latency by a factor of more than 10.

Infrastructure computing has therefore become one of the key technologies of today's data-centric computing architecture.

Why can DPU Bring about Network Improvements?

No discussion of infrastructure computing is complete without the DPU, or Data Processing Unit. It is the third main chip in the data center. Its main purpose is to take over the data center infrastructure workloads that would otherwise fall on the CPU, leaving the CPU free for general-purpose computing.

NVIDIA is a global pioneer in the field of DPU. In the first half of 2020, NVIDIA acquired Israeli network chip company Mellanox Technologies for US$6.9 billion, and launched the BlueField-2 DPU in the same year, defining it as the “third main chip” after CPU and GPU, officially kicking off the development of DPU.

Then some people will ask, what role does this DPU play in the network?

Let me give you an example to illustrate this.

Think of running a restaurant. When there were few customers, the boss did everything: purchasing, washing and cutting, preparing dishes, cooking, serving food, and working the cash register. A CPU is in the same position: it not only performs arithmetic and logical operations but also manages external devices, executes different tasks at different times, and switches between tasks to meet the needs of business applications.

As the number of customers grows, however, the work has to be divided among different people. Several shop assistants handle purchasing, washing, cutting, and preparation so that the chefs always have ingredients ready; several chefs cook in parallel to turn out dishes faster; several waiters serve and deliver dishes so that every table gets good service; and the boss only handles the cash register and management.

In this picture, the shop assistants and waiters are like DPUs, processing and moving data; the chefs are like GPUs, computing on data in parallel; and the boss is like the CPU, taking in business application requirements and delivering results.

The CPU, GPU, and DPU each handle the workloads they are best at and work together, greatly improving data center performance and energy efficiency and delivering a better return on investment.

What DPU Products has NVIDIA Launched?

After launching the BlueField-2 DPU in 2020, NVIDIA released the next-generation data processor, NVIDIA BlueField-3 DPU, in April 2021 to address the unique needs of AI workloads.

BlueField-3 is the first DPU designed for AI and accelerated computing. According to NVIDIA, the BlueField-3 DPU can offload, accelerate, and isolate data center infrastructure workloads, freeing up valuable CPU resources to run critical business applications.

The AI Era

Modern hyperscale cloud technology is driving data centers to a fundamentally new architecture, leveraging a new type of processor designed specifically for data center infrastructure software to offload and accelerate the massive computational loads generated by virtualization, networking, storage, security and other cloud-native AI services. The BlueField DPU was created for this purpose.

As the industry's first 400G Ethernet and NDR InfiniBand DPU, BlueField-3 delivers outstanding network performance. It provides software-defined, hardware-accelerated data center infrastructure for demanding workloads, from AI and hybrid cloud to high-performance computing and 5G wireless networks.

NVIDIA did not stop exploring after releasing the BlueField-3 DPU. With the emergence and popularity of large models, three questions have become common concerns for large-model developers and AI service providers: how to improve the distributed computing performance and efficiency of GPU clusters, how to improve their scale-out capability, and how to isolate the performance of different workloads on generative AI clouds.

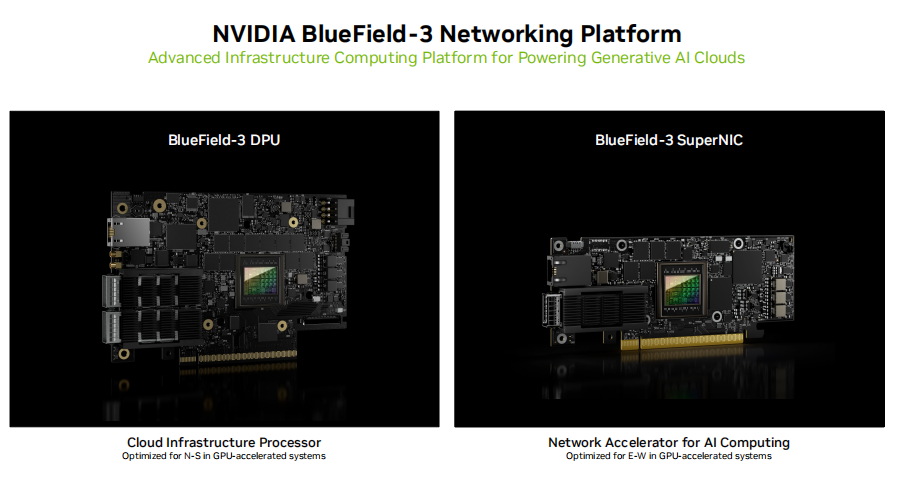

To this end, at the end of 2023, NVIDIA launched the BlueField-3 SuperNIC, which is optimized for east-west traffic. It is derived from the BlueField DPU and shares its architecture, but it serves a different purpose. The DPU focuses on offloading infrastructure operations and on accelerating and optimizing north-south traffic. The BlueField SuperNIC borrows technologies from InfiniBand networks, such as dynamic routing, congestion control, and performance isolation, while remaining compatible with the Ethernet standards that are convenient in the cloud. It thus meets the performance, scalability, and multi-tenancy requirements of the generative AI cloud.
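The east-west and north-south distinction can be sketched in a few lines of Python. East-west traffic stays between nodes inside the data center (for example, GPU to GPU), while north-south traffic enters or leaves it. The cluster address range and the sample addresses below are hypothetical, and real deployments classify traffic with far richer policies than a single subnet check.

```python
import ipaddress

# Hypothetical address range for nodes inside the data center.
CLUSTER_NET = ipaddress.ip_network("10.0.0.0/8")

def classify_flow(src: str, dst: str) -> str:
    """Label a flow by whether its endpoints sit inside the cluster network."""
    src_inside = ipaddress.ip_address(src) in CLUSTER_NET
    dst_inside = ipaddress.ip_address(dst) in CLUSTER_NET
    if src_inside and dst_inside:
        return "east-west"    # node to node inside the data center
    if src_inside or dst_inside:
        return "north-south"  # crosses the data center boundary
    return "external"         # neither endpoint is in the cluster

print(classify_flow("10.1.0.5", "10.2.0.9"))     # two cluster nodes
print(classify_flow("10.1.0.5", "203.0.113.7"))  # cluster node and outside client
```

In the division of roles described above, the first kind of flow is what the SuperNIC targets and the second is what the DPU targets.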

NVIDIA BlueField-3 Networking Platform

In summary, the current NVIDIA BlueField-3 networking platform includes two products: the BlueField-3 DPU, for line-rate processing of software-defined networking, storage, and security tasks, and the BlueField-3 SuperNIC, designed specifically to support hyperscale AI clouds.

What is the Use of DOCA for DPU?

When we talk about DPU, we often talk about DOCA. So what is DOCA? What is its value to DPU?

From the above, we know that NVIDIA has two products, the BlueField-3 DPU and the BlueField-3 SuperNIC, which help meet the current surge in demand for AI computing power.

But hardware alone can hardly cover today's diverse application scenarios, so software has to play its part as well.

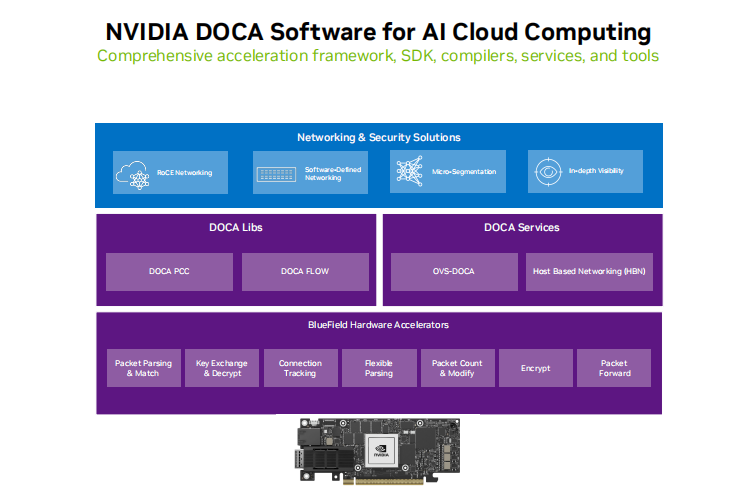

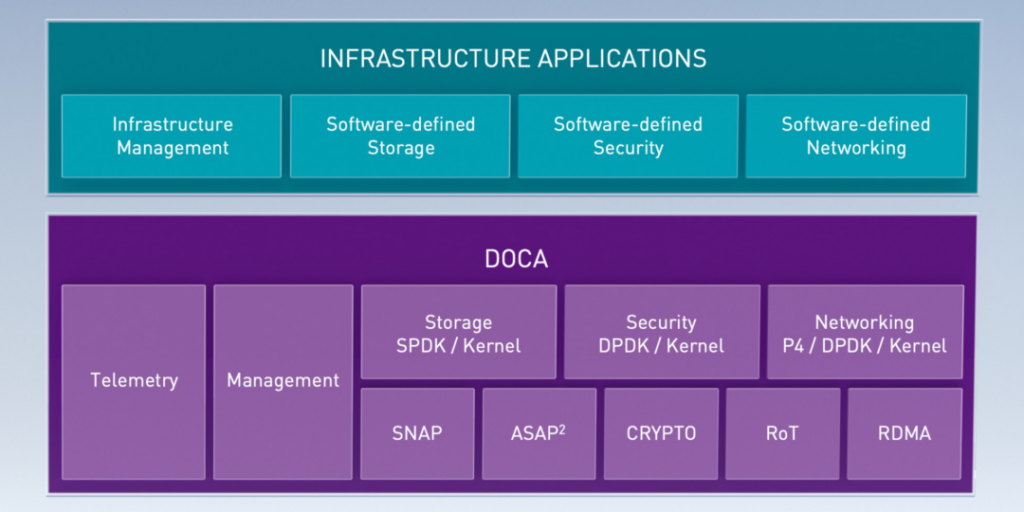

CUDA is the well-known software platform for GPUs in the computing power market. For its networking platform, NVIDIA took the same approach of integrated hardware and software acceleration: three years ago, it launched DOCA, a software development platform tailored for DPUs, which now also supports the BlueField-3 SuperNIC.

NVIDIA DOCA has rich libraries, drivers, and APIs that provide a “one-stop service” for DOCA developers and are also the key to accelerating cloud infrastructure services.

NVIDIA DOCA Software for AI cloud computing

As a full-stack component, DOCA is a key part of solving the AI puzzle, linking computing, networking, storage, and security together. With DOCA, developers can create software-defined, cloud-native, DPU- and SuperNIC-accelerated services that support zero-trust protection to meet the performance and security needs of modern data centers.

After three years of iterative upgrades, DOCA 2.7 not only expands the role of the BlueField DPU in offloading, accelerating, and isolating networking, storage, security, and management infrastructure in the data center, but also further enhances AI cloud data centers and accelerates the NVIDIA Spectrum-X networking platform, providing excellent performance for AI workloads.

Let’s look at the key roles that the NVIDIA BlueField-3 DPU and the BlueField-3 SuperNIC play in GPU systems:

| | BlueField-3 DPU | BlueField-3 SuperNIC |
| --- | --- | --- |
| Tasks | Cloud infrastructure processor; offloads, accelerates, and isolates data center infrastructure; optimized for N-S traffic in GPU-class systems | Excellent RoCE for AI computing networks; optimized for E-W traffic in GPU-class systems |
| Shared functions | VPC network acceleration; network encryption acceleration; programmable network pipeline; accurate timing; platform security | VPC network acceleration; network encryption acceleration; programmable network pipeline; accurate timing; platform security |
| Unique features | Powerful computing power; secure zero-trust management; data storage acceleration; elastic infrastructure configuration; 1-2 DPUs per system | Powerful networking; AI networking feature set; full-stack NVIDIA AI optimization; energy-efficient half-height design; up to 8 SuperNICs per system |

In summary, NVIDIA DOCA is to DPUs and SuperNICs what CUDA is to GPUs. DOCA brings together a wide range of powerful APIs, libraries, and drivers for programming and accelerating modern data center infrastructure.

Will DOCA Development Become the Next Blue Ocean Track?

There is no doubt that with the rise of AI, deep learning, the metaverse, and other technologies, companies need more and more DOCA developers to turn innovations and ideas into reality. Well-known cloud service providers have a growing demand for DPUs and need DOCA's hardware acceleration to optimize data center performance.

Tools provided by DOCA for developers

As enterprises demand more efficient and secure data processing, DOCA development has become a skill that gives cloud infrastructure engineers, cloud architects, network engineers, and people in similar roles a competitive advantage. DOCA developers can also create software-defined, cloud-native, DPU-accelerated services. Taking part in DOCA development not only improves personal skills but also raises a developer's profile in the technology community.

At present, the number of DOCA developers is far from meeting market demand. According to official data, there are more than 14,000 DOCA developers worldwide, nearly half of whom are from China. Although it seems that there are a lot of people, compared with CUDA, which has 5 million developers worldwide, there is still a lot of room for DOCA developers to grow.

But DOCA was released only three years ago, while CUDA has a history of nearly 20 years. This also shows that DOCA is still in its early stages of development and has great potential.

To attract more developers to DOCA, NVIDIA has been actively supporting them through various activities in recent years, including building and running the DOCA China Developer Community, holding online and offline training camps for DOCA developers, and hosting DOCA developer hackathons.

Not only that, in June 2024, the NVIDIA DPU Programming Introductory Course officially started at the Macau University of Science and Technology. The public course outline shows that the content includes a comprehensive introduction to how the NVIDIA BlueField network platform and NVIDIA DOCA framework accelerate AI computing, helping college students gain a competitive advantage in the AI era.

For developers who want to make a transition and college students who are about to graduate, DOCA development is a direction that many people are optimistic about.

In the NVIDIA DOCA application code sharing event that ended at the beginning of the year, many developers stood out and won awards, including many college students. Chen Qin, who won the first prize in this event, is a master's student in computer science and technology. He said: “Through the development of DOCA, I have not only improved my ability, but also gained potential job opportunities. I have also received a lot of recognition from seniors in the community, which makes me more confident in myself.”

Today, the NVIDIA DOCA China developer community is still growing, and more activities and content will continue to be rolled out. This is undoubtedly a good time for those who want to join DOCA development.