NDR InfiniBand switches are evolving quickly, which can make them hard to evaluate for IT specialists and network administrators alike. This guide gives an all-round description of NDR InfiniBand switches and their role in today's high-performance computing (HPC) and data center networks. It covers what NDR technology is based on, its main characteristics, and its benefits for throughput, latency, and network management. It also looks at the NVIDIA Quantum-2 MQM9700 switch, at OSFP ports and airflow options, and at what to check when buying. By the end, readers should know enough to make informed choices about integrating these switches into their own infrastructure.

What is an NDR Infiniband Switch?

Understanding Infiniband and Its Throughput Capabilities

Infiniband is a low-latency, high-performance networking technology that data centers use to interconnect servers and storage systems. It is best known for its throughput: across generations, the nominal rate of a link has grown from 10 Gb/s to 400 Gb/s in each direction. Infiniband can therefore move very large amounts of data quickly, which suits demanding high-performance computing (HPC) workloads such as financial modeling, scientific research, and large-scale simulations. The architecture is a switched fabric, which allows many concurrent data paths and so improves both network reliability and overall performance.

The Evolution from Earlier Infiniband Models to NDR

The move from earlier Infiniband generations to NDR (Next Data Rate) is a big step forward in network capacity and performance. Infiniband began with SDR (Single Data Rate), DDR (Double Data Rate), and QDR (Quad Data Rate), each doubling the throughput of its predecessor. FDR (Fourteen Data Rate) and EDR (Enhanced Data Rate) followed as demand grew for higher data rates and lower latency, bringing further gains in bandwidth and efficiency. HDR (High Data Rate) went further still, delivering up to 200 Gb/s per link.

NDR builds on this by delivering 400 Gb/s per link, a significant increase for modern HPC environments and data-centric applications where fast processing and transfer of information are critical. The improvements in NDR are not limited to throughput; they also include better network reliability, energy efficiency, and scalability, which makes NDR a strong fit for data centers and cloud infrastructure that must process vast amounts of data every second.
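The progression described above can be tabulated in a short Python sketch. The figures are the commonly quoted nominal rates for a four-lane (4x) link and are an assumption of this example rather than numbers taken from a specific datasheet; the FDR value in particular is a rounded signaling rate.

```python
# Nominal 4x link rates in Gb/s per direction.
# Illustrative, commonly quoted figures (assumption), not taken from a datasheet.
GENERATIONS = [
    ("SDR", 10),
    ("DDR", 20),
    ("QDR", 40),
    ("FDR", 56),
    ("EDR", 100),
    ("HDR", 200),
    ("NDR", 400),
]


def speedups(generations):
    """Return (name, rate, ratio over the previous generation) for each entry."""
    rows = []
    previous = None
    for name, rate in generations:
        ratio = None if previous is None else rate / previous
        rows.append((name, rate, ratio))
        previous = rate
    return rows


if __name__ == "__main__":
    for name, rate, ratio in speedups(GENERATIONS):
        step = "-" if ratio is None else f"x{ratio:.2f}"
        print(f"{name}: {rate:>3} Gb/s ({step})")
```

The table makes the pattern visible: the early generations and the HDR-to-NDR step each double the rate, while FDR and EDR are smaller or larger jumps.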

Key Features of an NDR Infiniband Switch

The NDR InfiniBand switch has several key features that improve its performance and suitability for high-demand computing environments:

- High Throughput: NDR InfiniBand switches provide up to 400 Gb/s per link, which data-intensive applications and large-scale computations need.

- Low Latency: The switches are designed for minimal latency, so data moves quickly enough for real-time analytics and other speed-critical applications.

- Scalability: NDR switches allow networks to grow without sacrificing performance, which suits expanding data centers and evolving HPC ecosystems.

- Improved Efficiency: Modern data centers are power hungry, so the switches are optimized to conserve energy while still delivering high performance.

- Reliability: Errors are detected and corrected early, so data arrives intact at every point in the network and the risk of loss or corruption is reduced.

- Advanced Management Capabilities: Management tools embedded in the switches streamline monitoring and simplify network administration.

The NDR InfiniBand switch is therefore a cornerstone of current computing environments, improving storage, processing, and communication between devices.

How Does the NVIDIA MQM9700-NS2F Quantum 2 NDR Infiniband Switch Operate?

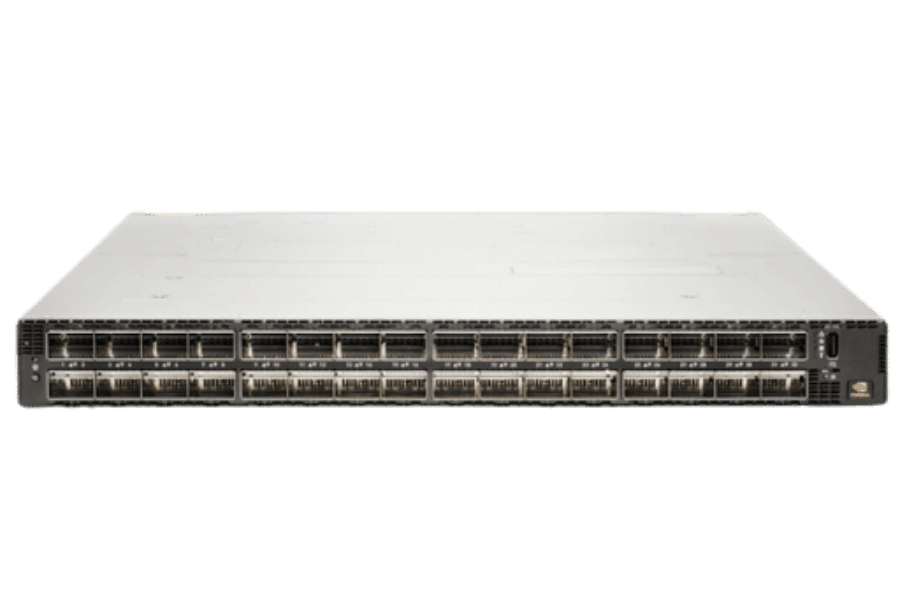

Overview of the NVIDIA MQM9700-NS2F Quantum 2 NDR

The NVIDIA MQM9700-NS2F Quantum 2 NDR Infiniband Switch is built to provide speed and dependability in high-performance computing environments. It has 64 NDR 400Gb/s ports, which add up to 25.6 Tb/s of throughput in each direction (51.2 Tb/s bidirectional) for HPC applications and large-scale data centers. Its low latency also makes it well suited to real-time analytics and scientific simulations.
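The arithmetic behind the headline throughput figure is simple enough to check in a few lines of Python. This is a minimal sketch; the function name and parameters are chosen for illustration only.

```python
def aggregate_throughput_tbps(ports: int, gbps_per_port: float,
                              bidirectional: bool = False) -> float:
    """Sum the line rate of all ports and convert from Gb/s to Tb/s."""
    total_gbps = ports * gbps_per_port
    if bidirectional:
        # Each port can send and receive at line rate at the same time.
        total_gbps *= 2
    return total_gbps / 1000


if __name__ == "__main__":
    one_way = aggregate_throughput_tbps(64, 400)
    both_ways = aggregate_throughput_tbps(64, 400, bidirectional=True)
    print(f"per direction: {one_way} Tb/s, bidirectional: {both_ways} Tb/s")
```

With 64 ports at 400 Gb/s, the per-direction total is 25.6 Tb/s, and counting both directions gives the 51.2 Tb/s figure often quoted for this class of switch.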

The switch uses congestion control and adaptive routing to optimize packet delivery and reduce packet loss. This matters in complex HPC jobs, which need consistent performance and data integrity from start to finish. The switch is also designed for power efficiency under heavy load, which supports the sustainability goals of modern data centers.

Management is simplified by integrated tools that give full visibility into, and control over, network operations. These tools support monitoring, maintenance, and troubleshooting, so the network stays stable even when demand spikes. The switch is therefore a key building block for current and future computing workloads.

NVIDIA Infiniband and Its Infiniband Per Port Capabilities

NVIDIA InfiniBand is known for high-bandwidth, low-latency interconnects, which are essential in today's high-performance computing (HPC) environments. Each InfiniBand port on a Quantum 2 switch can run at HDR (High Data Rate) or NDR (Next Data Rate) speed, delivering up to 200Gb/s or 400Gb/s per port, respectively. This capacity speeds up data transfer and system communication, which minimizes bottlenecks and improves overall throughput.

Other per-port features include adaptive routing, congestion control, and quality of service (QoS) mechanisms that help deliver packets accurately and reliably. These features keep performance consistent and data intact across different workloads. The ports also scale well: they can be integrated into existing infrastructure or added during later upgrades, so they serve a wide range of computing requirements.

In short, NVIDIA Infiniband stands out because each port can move very large amounts of data with very little delay, which is what modern HPC and data center applications require.

Technologies Such as Remote Direct Memory Access

Remote Direct Memory Access (RDMA) is a groundbreaking technology that improves the performance of HPC and data center environments by enabling memory access from one computer to another without involving the central processing unit (CPU). This eliminates much of the latency and CPU overhead associated with such tasks, thereby speeding up data transfer. RDMA can run on networks like Infiniband, RoCE (RDMA over Converged Ethernet), and iWARP (Internet Wide Area RDMA Protocol), with each providing different levels of speed, accessibility, and scalability.

One of RDMA's main advantages is high-throughput, low-latency networking, which allows rapid sharing of information between applications such as databases, clustered storage systems, and scientific computing jobs. Because RDMA bypasses the operating system's network stack, data in HPC settings can be moved directly from remote memory into user space without additional copies, which streamlines operations.

Overall, the two defining traits of the RDMA architecture, limited CPU involvement and direct data placement, improve both computational efficiency and networking performance, which makes RDMA an essential ingredient of modern computing infrastructure.

What are the benefits of using an OSFP-managed power-to-connector airflow switch?

Advantages of Power to Connector Airflow

Power-to-connector (P2C) airflow describes the direction in which cooling air moves through the switch chassis: from the power-supply side toward the connector (port) side. Using a switch whose airflow direction matches the data center's cooling layout has several benefits. It improves cooling efficiency by directing air exactly where it is needed, which removes hot spots and optimizes heat management. This reduces power consumption because the cooling systems work more efficiently, which can save a lot of money. It also improves the reliability and lifespan of electronic devices by maintaining consistent operating temperatures and preventing thermal overload. Lastly, combining managed power with airflow control enables better monitoring and control of the data center environment; the real-time information it provides can be used to optimize both power and cooling, leading to higher operational efficiency. All in all, P2C airflow switches help make data center infrastructure more robust and efficient.

Fitting the OSFP Managed Power to Connector in Your Data Center

Deploying an OSFP managed switch with power-to-connector airflow in your data center takes a few systematic steps. First, assess your present infrastructure for compatibility, mainly in terms of power requirements and airflow management. Second, position the switch so that its airflow direction and OSFP connectors line up with the cooling system design and maximize airflow efficiency. Third, configure the managed power settings to match your operational requirements, using the software or control interfaces supplied by the manufacturer. Finally, continuously monitor the real-time data the switch provides in order to further optimize power consumption and thermal control, which improves the reliability, efficiency, and overall performance of the data center.

Adaptive Routing and Network Efficiency

Adaptive routing dynamically changes the paths that data packets take in response to current network conditions, and it is very important for network efficiency. Unlike static routing, it does not rely on fixed or predetermined paths. Instead, adaptive algorithms track network measurements such as latency, available bandwidth, and node activity while traffic is flowing. With these figures, the routing system can select the best route for each packet, balancing load across the network and reducing congestion. It can also recover quickly from failures by redirecting traffic, which keeps data flowing with minimal disruption to service. Adaptive routing therefore leads to better overall network performance, lower delays, and more efficient use of resources, making data transmission both more reliable and more efficient.
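The difference between fixed and adaptive path selection can be illustrated with a toy Python model. This is a sketch under simplifying assumptions, not how any particular switch implements it: `PortState`, `pick_static`, and `pick_adaptive` are hypothetical names, queue depth stands in for the richer metrics real hardware uses, and real switches make this decision in silicon.

```python
from dataclasses import dataclass


@dataclass
class PortState:
    name: str
    queued_bytes: int  # current egress queue depth (stand-in for congestion)
    up: bool = True    # link state


def pick_static(ports, flow_id):
    """Static routing: a flow always maps to the same port, regardless of load."""
    return ports[flow_id % len(ports)]


def pick_adaptive(ports):
    """Adaptive routing: choose the least-loaded port that is currently up."""
    candidates = [p for p in ports if p.up]
    if not candidates:
        raise RuntimeError("no usable path to destination")
    return min(candidates, key=lambda p: p.queued_bytes)


if __name__ == "__main__":
    ports = [PortState("p1", 9000), PortState("p2", 1500), PortState("p3", 4000)]
    print("static  :", pick_static(ports, flow_id=3).name)
    print("adaptive:", pick_adaptive(ports).name)
    ports[1].up = False  # simulate a link failure on the best port
    print("after failure:", pick_adaptive(ports).name)
```

In this model the static choice ignores the congested queue on its assigned port, while the adaptive choice moves to the emptiest live port and falls back to the next best one when a link goes down.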

What Should I Know About the 64-Port Non-Blocking and OSFP Ports?

Benefits of 64-Port Non-Blocking Architecture

The 64-port non-blocking architecture is beneficial for modern data centers in several ways. First, it enables high-density port configurations that allow more scalability while maintaining the performance of each individual port. This feature is crucial in supporting the ever-increasing bandwidth needs of data-intensive environments. Second, non-blocking switching fabrics make sure that all ports can operate concurrently at their peak capacity without experiencing congestion, thereby increasing overall throughput and reducing latency. Lastly, this design supports advanced traffic management features like quality of service (QoS) or load balancing, which are necessary for optimizing network performance and ensuring resource efficiency. Data centers, therefore, should consider using a 64-port non-blocking architecture because it delivers better reliability, efficiency, and scalability, thus meeting high-performance networking needs.
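A common way to express "non-blocking" is that the switching fabric has at least enough capacity for every port to send and receive at line rate at the same time. The Python sketch below checks that condition using the port count and per-port rate from this article and the 51.2 Tb/s bidirectional figure quoted in the FAQ; the function names are illustrative assumptions.

```python
def required_capacity_tbps(ports: int, gbps_per_port: float) -> float:
    """Capacity needed for all ports to send and receive at line rate at once."""
    return ports * gbps_per_port * 2 / 1000


def oversubscription_ratio(ports: int, gbps_per_port: float,
                           fabric_capacity_tbps: float) -> float:
    """1.0 or less means non-blocking; above 1.0 means ports can contend."""
    return required_capacity_tbps(ports, gbps_per_port) / fabric_capacity_tbps


if __name__ == "__main__":
    ratio = oversubscription_ratio(64, 400, fabric_capacity_tbps=51.2)
    verdict = "non-blocking" if ratio <= 1.0 else "oversubscribed"
    print(f"ratio {ratio:.2f}: {verdict}")
```

A fabric with only half that capacity would give a ratio of 2.0, meaning that ports could block each other whenever all of them are busy.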

Understanding 32 OSFP Ports and Their Applications

OSFP (Octal Small Form Factor Pluggable) ports are designed for high-speed data transmission within large data centers and enterprise networks, and a switch with 32 of them offers high density in a small chassis. An OSFP module supports 400 Gbps, and twin-port modules carry two 400 Gbps links in a single cage, which is necessary for bandwidth-hungry applications. These ports connect switches to servers and storage devices efficiently at high speed. Their modules are hot-swappable, so they can be replaced without bringing down the network, which keeps critical infrastructure reliable during maintenance. The design also works well with advanced optical technology that allows long-distance transmission with little signal degradation. The 32 OSFP ports are therefore important components of a contemporary networking solution, since they enable smooth, high-performing communication across complex IT environments.

By implementing OSFP technology, data centers can achieve higher port density, better overall network performance, and greater scalability, in line with both present and future network demands.

Managing High Bandwidth with 64 NDR Ports

The 64 NDR (Next Data Rate) ports are designed for the ultra-high bandwidth that modern high-performance computing and data center environments demand. Each port provides 400 Gbps; two NDR ports share one twin-port OSFP cage, so each of the 32 cages carries 800 Gbps. This data rate is crucial for applications that must process and move large amounts of data quickly, such as artificial intelligence, machine learning, and big data analytics.

With 64 NDR ports, a data center can handle massive volumes of network traffic effectively. The links use advanced modulation such as PAM4 together with current-generation transceivers, which keeps latency low while maintaining data integrity over the distances covered. The dense design, with 64 NDR ports in one switch, also simplifies network design because it reduces cabling complexity and rack space requirements.

NDR ports are also designed to be backward compatible with previous interfaces, so they fit into existing systems without much extra effort. In addition, capabilities such as dynamic reconfiguration and adaptable bandwidth allocation make them important for future-proofing data center networks. Organizations that adopt 64-port NDR technology gain performance, scalability, and reliability, and bring their networking infrastructure in line with recent advances in data communication.

How do you shop with confidence for NDR Infiniband Switches?

Identifying the Part ID for Compatibility

When searching for NDR Infiniband switches, start by finding the right Part ID, since this determines whether the switch is compatible with your existing infrastructure. The manufacturer's official product documentation is the best place to look. It usually contains detailed specifications, including compatibility tables that show which components and configurations work together.

Trusted e-commerce platforms and specialized IT suppliers often add product descriptions and user reviews that reflect real-world compatibility and performance. Technology forums and communities such as Stack Exchange or Reddit may also offer user experiences and expert advice on compatibility problems. By comparing these sources against one another, you can be confident about which Part ID fits your network requirements.

Exploring Options Like the HPE Store

To find authentic NDR Infiniband switches, examine the HPE Store and other reliable vendors carefully. Hewlett Packard Enterprise (HPE) offers many networking products that meet different specifications and performance requirements. The HPE Store provides official product descriptions, technical documentation, compatibility information, and other details that help buyers make informed choices. For specific questions about product compatibility or configurations, its customer support service is also available.

Another vendor is Fibermall, which specializes in high-speed interconnection solutions. Its website has many resources with detailed technical information and specifications, all aimed at easing integration into an existing setup.

Supermicro is also worth considering for its wide variety of network hardware, including NDR Infiniband switches. Supermicro's site offers reviews, expert advice, and in-depth product information that can help you select the best component for your network.

With these options, you can make an informed investment in NDR Infiniband switches, controlling costs while matching performance to the needs of your environment.

Evaluating Nvidia Quantum-2 As an Industry-Leading Switch

Nvidia Quantum-2 is regarded as an industry standard when it comes to high-performance switches. The Quantum-2 series offers unprecedented data throughput of up to 400Gb/s per port, which makes it perfect for modern data centers and cloud environments. It also has advanced congestion control algorithms, adaptive routing, and best-in-class telemetry support that can greatly improve network efficiency and reliability.

From a technical standpoint, Nvidia Quantum-2 uses recent silicon technology to deliver the low latency and high availability that AI (artificial intelligence), ML (machine learning), and large-scale simulation workloads need. Quantum-2 is an InfiniBand platform, so confirm in the product documentation how it will connect to any Ethernet segments in your network.

Technology reviews commonly credit Nvidia Quantum-2 with helping to reduce operational costs by increasing network utilization and minimizing downtime. They also point to its security features, which help protect critical data in transit.

To sum it all up, NVIDIA’s product not only leads the field in terms of performance but also provides versatility and security needed for driving next-generation computational workloads.

Frequently Asked Questions (FAQs)

Q: What does an NDR switch do?

A: NDR switches are network switches for high-performance computing. They were designed for extreme-scale environments and have unique features such as scalable hierarchical aggregation and reduction aimed at improving network performance.

Q: What are some characteristics of the Nvidia Infiniband NDR 64-port OSFP switch?

A: The switch has 64 ports with a combined bidirectional bandwidth of 51.2 terabits per second (Tb/s), support for RDMA, and scalable hierarchical aggregation and reduction capabilities that can be used to optimize HPC and AI workloads.

Q: Why is the Nvidia Quantum-2 considered the best in its class?

A: It combines an integrated scalable hierarchical aggregation and reduction protocol with aggregate bidirectional throughput of up to 51.2 Tb/s, which gives it very high networking efficiency for its class.

Q: How does the Nvidia Scalable Hierarchical Aggregation and Reduction Protocol (SHARP) function?

A: SHARP optimizes the flow of data between multiple nodes by performing aggregation and reduction operations inside the network. This reduces communication overhead and frees up compute resources, so large-scale HPC platforms can achieve greater compute efficiency across their networks.
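The idea of hierarchical aggregation and reduction can be illustrated with a toy Python model. This is a conceptual sketch only and not NVIDIA's implementation: the function names are hypothetical, and plain lists stand in for the data that hosts would contribute to a collective operation.

```python
def reduce_vectors(vectors):
    """Element-wise sum of equally sized vectors."""
    return [sum(values) for values in zip(*vectors)]


def hierarchical_reduce(groups):
    """Reduce host vectors in two levels.

    groups: one list of host vectors per leaf switch.
    Each leaf first reduces its own hosts; the root then reduces one
    partial result per leaf instead of one vector per host.
    Returns the final vector and the number of partials the root received.
    """
    partials = [reduce_vectors(hosts) for hosts in groups]
    return reduce_vectors(partials), len(partials)


if __name__ == "__main__":
    groups = [
        [[1, 2], [3, 4]],  # hosts under leaf switch A
        [[5, 6], [7, 8]],  # hosts under leaf switch B
    ]
    total, root_inputs = hierarchical_reduce(groups)
    print(total, "computed from", root_inputs, "partial results at the root")
```

In this model the root handles two partial results rather than four host vectors, which is the kind of traffic reduction that in-network aggregation aims for as node counts grow.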

Q: Why should AI developers and scientific researchers use 64-port NDR Infiniband switches?

A: These switches provide the low latency, high throughput, and in-network computing optimization needed for the most complex computational problems that AI developers and scientific researchers face.

Q: What does a 1U form factor mean in NDR switches?

A: The 1U form factor means the switch is compact, occupying a single rack unit to save rack space, while still delivering top-level networking performance such as 51.2 Tb/s of bidirectional throughput.

Q: What is the benefit of RDMA support for networking performance?

A: RDMA (Remote Direct Memory Access) support improves networking performance by letting compute nodes access each other's memory directly without involving the CPU, which lowers latency and increases data transfer rates.

Q: What kind of airflow does the Nvidia Infiniband NDR 64-port OSFP switch use?

A: The Nvidia Infiniband NDR 64-port OSFP switch uses P2C (power-to-connector) airflow for efficient cooling management while maintaining optimum performance.

Q: What is Colfax Direct’s role in NDR switches?

A: Colfax Direct provides dedicated infrastructure and support services for high-performance computing environments which ensure proper integration and optimization of NDR switches.

Q: Could you explain why there are two power supplies in NDR switches?

A: Dual power supply units provide redundancy: if one unit fails, the other keeps the switch running, which reduces the risk of downtime and keeps availability high in critical computing environments.
