As data volumes grow exponentially and computing tasks become more demanding, networks must deliver high throughput at low latency. Mellanox Infiniband switches sit at the leading edge of high-performance networking, offering the bandwidth and efficiency required in data centers, high-performance computing (HPC) environments, and enterprise networks. This guide covers the fundamentals of their design and technology, including hardware architecture, protocol capabilities, and integration. As organizations pursue higher data rates with fewer bottlenecks, it is important to understand how these switches are deployed and what performance advantages they bring. IT specialists planning to upgrade their high-performance networking infrastructure will find this article most useful.

What is Mellanox Infiniband and How Does it Work?

Mellanox Infiniband is an implementation of the Infiniband communication standard, which is widely employed in data centers and high-performance computing settings. It uses point-to-point, bidirectional serial links to achieve the low latency and high throughput that applications handling large volumes of data require. Multiple lanes can be aggregated into a single link to raise the data rate, scaling up to 200 Gb/s per port. The Infiniband architecture is a switched fabric in which devices communicate through switches, so many devices can communicate at once without contending for a shared bus. It also supports RDMA (Remote Direct Memory Access), which transfers memory contents from one computer to another without operating system intervention on the data path. This greatly reduces CPU overhead and frees resources for application work.

Understanding the Infiniband Architecture

The Infiniband architecture uses a switched fabric topology that provides high-bandwidth, low-latency connections. End nodes attach to the fabric through host channel adapters (HCAs) and target channel adapters (TCAs), which can handle simultaneous data transmission. Each node is linked to an Infiniband switch, forming an efficient and scalable network fabric. A link can combine several lanes, and the aggregated lanes raise the bandwidth available on that link. At the link layer, low protocol overhead, lossless transmission through credit-based flow control, and error checking ensure dependable communication.
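The lane aggregation described above is simple arithmetic, and a minimal Python sketch makes it concrete. The per-lane figures are nominal, commonly quoted rates and are an assumption of this example; older generations deliver somewhat less usable data because of encoding overhead. The function name `link_rate_gbps` is illustrative only.

```python
# Nominal per-lane rates in Gb/s (rounded, commonly quoted figures;
# assumed here for illustration, not exact signalling rates).
LANE_RATE_GBPS = {"QDR": 10.0, "EDR": 25.0, "HDR": 50.0}


def link_rate_gbps(generation: str, lanes: int = 4) -> float:
    """Return the nominal link rate obtained by aggregating `lanes` lanes."""
    if lanes < 1:
        raise ValueError("a link needs at least one lane")
    return LANE_RATE_GBPS[generation] * lanes


# A 4-lane (4x) link in each generation.
for gen in ("QDR", "EDR", "HDR"):
    print(gen, link_rate_gbps(gen, lanes=4), "Gb/s")
```

With four lanes, the HDR entry yields the 200 Gb/s per-port figure quoted in this guide.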

Infiniband can also integrate with existing systems through gateway solutions that connect it to Ethernet and Fibre Channel networks. It is therefore used where high performance is key, such as scientific simulations, financial modeling, and other workloads that process large amounts of data.

How Mellanox Infiniband Switches Enhance Network Performance

Mellanox Infiniband switches improve network performance with features aimed at high-performance computing clusters. High port density and an efficient fabric architecture give them high bandwidth and ultra-low latency, which keeps data moving across large-scale deployments. The switches also support adaptive routing and congestion control, which help manage traffic effectively and raise throughput. Built-in automation tools and telemetry simplify network management, monitoring, and troubleshooting. They are also designed with energy consumption in mind, reducing total energy use in the data center without compromising operation at any supported speed.

Comparing Infiniband with Traditional Ethernet Networks

Ethernet is far more ubiquitous than Infiniband and has clear advantages when deploying heterogeneous networks. When latency and throughput are the key constraints, however, Infiniband is more promising: its switched fabric delivers low latency, and most Infiniband devices natively support essential features such as remote direct memory access, which simplifies interconnecting nodes. Ethernet has matured significantly over the years and is available in a wide range of speeds, from 1G and 10G upward. Its main downside is relatively higher latency on average, a consequence of its general-purpose design. Ethernet therefore suits general IT infrastructure, while Infiniband suits workloads where speed is critical. In either case, the throughput an application actually achieves depends heavily on one-way delay as well as raw bandwidth. Overall, the choice between Infiniband and Ethernet depends on the requirements of the network and its intended use cases.
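To see why one-way delay matters as much as bandwidth, consider a simple first-order model: transfer time is a fixed latency plus the time to serialize the message onto the link. The sketch below is an illustration under that assumption. The latency and bandwidth values passed in are hypothetical inputs, not measurements of any particular Infiniband or Ethernet product.

```python
def transfer_time_us(message_bytes: int, latency_us: float, bandwidth_gbps: float) -> float:
    """First-order model: fixed one-way latency plus serialization time.

    A link running at B Gb/s moves B * 1000 bits per microsecond.
    """
    if bandwidth_gbps <= 0:
        raise ValueError("bandwidth must be positive")
    serialization_us = (message_bytes * 8) / (bandwidth_gbps * 1000.0)
    return latency_us + serialization_us


# Hypothetical comparison: same bandwidth, different one-way latency.
for size in (64, 4096, 1024 * 1024):
    low = transfer_time_us(size, latency_us=1.0, bandwidth_gbps=100.0)
    high = transfer_time_us(size, latency_us=10.0, bandwidth_gbps=100.0)
    print(f"{size:>8} bytes: {low:9.3f} us vs {high:9.3f} us")
```

For small messages the fixed latency dominates the total, which is why latency-sensitive workloads such as tightly coupled HPC jobs benefit from a low-latency fabric even when link bandwidth is equal.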

How Does NVIDIA Leverage Mellanox Infiniband?

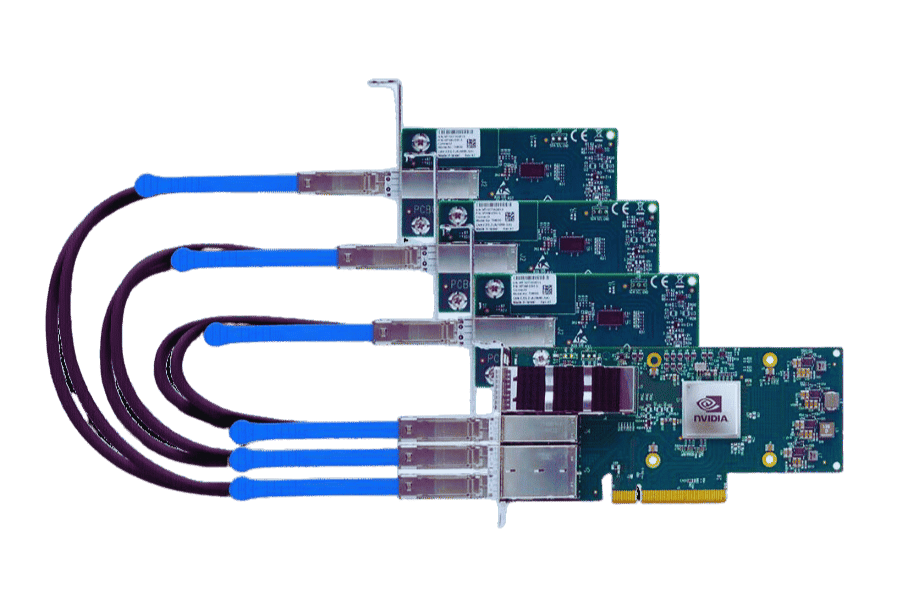

The Role of NVIDIA in Advancing Infiniband Technology

NVIDIA has advanced Infiniband technology by applying its expertise in high-performance computing and networking. Through its acquisition of Mellanox Technologies, NVIDIA added Infiniband’s communication capabilities to its portfolio and improved efficiency in data centers and supercomputing. By speeding up data transfer and reducing latency, NVIDIA supports the development of advanced Infiniband solutions for data-intensive applications such as artificial intelligence and deep learning. This integration provides a unified platform for accelerated computing and fosters innovation and performance improvements in many areas.

Integrating NVIDIA Infiniband in High-Performance Computing

Using NVIDIA Infiniband in high-performance computing (HPC), especially in computing clusters, increases both performance and scale. The technology provides the high speed and low latency needed to move data rapidly across many computing nodes. NVIDIA’s Infiniband networks perform adaptive routing and congestion control, sustaining data exchange regardless of how much data must be handled. This combination accelerates AI computing workloads and speeds up processing of the large datasets that research, simulations, and other problem-solving activities depend on. It helps businesses exploit HPC designs to innovate and work more effectively.

Exploring Scalable Hierarchical Aggregation with NVIDIA

NVIDIA’s technology features the Scalable Hierarchical Aggregation and Reduction Protocol (SHARP), which is key to scaling data operations across the nodes of a computing network. It collects and combines data in a hierarchy, which reduces the amount of communication required within a computing cluster. NVIDIA’s implementation performs this aggregation inside the network rather than on the end nodes, which raises computational throughput and lowers the latency of data aggregation. The approach is most useful when datasets are very large, since it eases scalability limits and benefits machine learning and big data analytics. Using SHARP, businesses can gain stronger data processing capabilities and make better use of available computing power while preserving the accuracy of results.
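The idea behind hierarchical aggregation can be illustrated with a short, purely conceptual Python sketch. It is not the SHARP API and does not model switch hardware; it only shows how combining values level by level bounds the number of inputs any single aggregation point handles. The function name and the `fan_in` parameter are hypothetical.

```python
from typing import List, Sequence, Tuple


def hierarchical_sum(values: Sequence[float], fan_in: int) -> Tuple[float, int]:
    """Sum `values` through a tree whose aggregation points each combine
    at most `fan_in` inputs. Returns (total, number_of_tree_levels)."""
    if fan_in < 2:
        raise ValueError("fan_in must be at least 2")
    if not values:
        raise ValueError("need at least one value")
    level: List[float] = list(values)
    levels = 0
    while len(level) > 1:
        # Each group of up to `fan_in` partial results is combined by one aggregator.
        level = [sum(level[i:i + fan_in]) for i in range(0, len(level), fan_in)]
        levels += 1
    return level[0], levels


total, depth = hierarchical_sum(list(range(1, 17)), fan_in=4)
print(total, depth)
```

With 16 contributions and a fan-in of 4, no aggregation point receives more than 4 inputs, whereas a flat reduction would send all 16 to a single node.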

What Makes Mellanox Infiniband Switches Ideal for Data Centers?

Benefits of Low-Latency and Non-Blocking Architecture

The low-latency, non-blocking architecture of Mellanox Infiniband switches brings substantial benefits to data center performance. First, a low-latency design keeps the time needed to forward packets short, which is critical for real-time applications and HPC tasks. Second, the non-blocking design allows every port to be used simultaneously at full throughput, which prevents congestion inside the switch. Together these properties make Mellanox Infiniband switches well suited to environments with high query loads and extensive simulations, such as AI applications.
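A quick way to reason about the non-blocking property is to compare a switch's internal switching capacity with what its ports could demand if all of them ran at line rate in both directions. The following sketch assumes that simple definition. The port count and capacity in the usage lines are illustrative figures that should be checked against the relevant datasheet.

```python
def required_capacity_tbps(ports: int, port_rate_gbps: float) -> float:
    """Capacity needed for all ports to send and receive at line rate (full duplex)."""
    return ports * port_rate_gbps * 2 / 1000.0


def is_non_blocking(ports: int, port_rate_gbps: float, switching_capacity_tbps: float) -> bool:
    """True if the switching capacity covers the worst-case aggregate port demand."""
    return switching_capacity_tbps >= required_capacity_tbps(ports, port_rate_gbps)


# Illustrative figures (assumed): 40 ports at 200 Gb/s, 16 Tb/s of capacity.
print(required_capacity_tbps(40, 200.0))
print(is_non_blocking(40, 200.0, 16.0))
```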

Maximizing Throughput with Advanced Infiniband Switch Features

Infiniband switch performance scales through advanced features such as adaptive routing and quality of service (QoS) mechanisms. Adaptive routing reduces congestion by detecting a local hot spot in the network and selecting a better path for the packets that would otherwise cross it. Relieving hot spots in this way improves the performance of the entire network. QoS, in turn, ensures that important traffic receives the attention it needs, so that high-priority tasks see consistent performance regardless of load. Combined with the low-latency, non-blocking design of Mellanox Infiniband switches, these features boost throughput in data centers and help them meet the stringent requirements of AI and HPC applications.
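The core of adaptive routing, choosing the least congested of several candidate paths, can be sketched in a few lines of Python. This is a simplified illustration in which queue depth stands in for congestion. Real switches make this decision in hardware using their own congestion metrics, and the port names below are hypothetical.

```python
from typing import Dict, List, Tuple


def pick_output_port(queue_depths: Dict[str, int]) -> str:
    """Choose the candidate port with the shallowest queue (ties: lowest name)."""
    if not queue_depths:
        raise ValueError("no candidate ports")
    return min(sorted(queue_depths), key=queue_depths.__getitem__)


def route_packets(n_packets: int, queue_depths: Dict[str, int]) -> Tuple[List[str], Dict[str, int]]:
    """Route packets one by one, updating queue depths after each decision."""
    depths = dict(queue_depths)  # work on a copy so the input is not modified
    choices = []
    for _ in range(n_packets):
        port = pick_output_port(depths)
        depths[port] += 1
        choices.append(port)
    return choices, depths


choices, final = route_packets(4, {"p1": 3, "p2": 0, "p3": 1})
print(choices, final)
```

The congested port `p1` is avoided entirely until the other queues catch up, which is the hot-spot relief described above.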

How to Configure Mellanox Infiniband Switches?

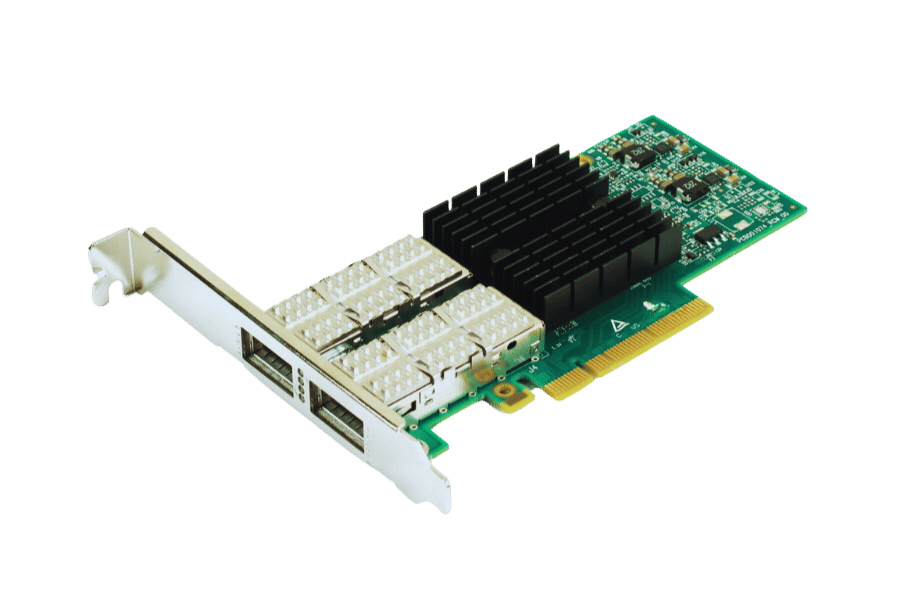

Understanding Port and Interface Options

Mellanox Infiniband switches are designed with a variety of port and interface options. They deliver high port density, which allows the network to expand and be further optimized. Ports typically use QSFP+ and QSFP28 interfaces, which support several data rates and transmission capabilities. QSFP+ supports speeds of up to 40 Gbps, while QSFP28 handles up to 100 Gbps, so the two cover different performance needs. The transceivers in these ports are also hot-swappable: they can be upgraded or replaced without powering down the system or interrupting its operation. With these port and interface options, Mellanox Infiniband switches can provide the topology flexibility and reconfiguration that advanced data centers demand.

Guidelines for Effective Network Switch Configuration

To get the most efficiency and stability from the network, follow a consistent set of steps when setting up switches. First, evaluate the organization’s requirements, such as bandwidth and traffic patterns. Set up VLANs (virtual local area networks) on Ethernet segments, or partitions on Infiniband fabrics, to segment network traffic, which improves security and traffic management. Configure QoS (Quality of Service) settings so that the highest-priority workloads receive consistent, stable service. Where Ethernet is involved, use link aggregation and spanning tree protocols to provide redundancy and keep the network robust. Keep firmware up to date to patch vulnerabilities on the switches, especially the switch operating system (MLNX-OS®). Following these guidelines helps Mellanox Infiniband switches, together with their optics and connectors, run efficiently under demanding AI and HPC workloads.

Adapting Airflow and Power Supplies for Better Efficiency

To improve airflow and power efficiency, start by installing suitable cooling units. Position cooling systems carefully and use hot-aisle and cold-aisle layouts so that no more energy is spent on cooling than necessary. Variable-speed fans can adjust cooling to the appropriate level by continuously reading temperature data. Use high-efficiency power supplies that are engineered to reduce energy dissipation. Power factor correction can also substantially improve the electrical efficiency of network systems, including those built on Infiniband technology. Finally, clean and inspect the electrical systems routinely, especially in parts of the data center that are prone to inconsistencies, to maintain continuous operation.
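The variable-speed fan behaviour mentioned above can be pictured as a simple fan curve that maps temperature to fan duty. The sketch below uses a linear curve between two thresholds. All threshold and duty values are hypothetical defaults chosen for illustration, not vendor settings.

```python
def fan_duty_percent(
    temp_c: float,
    low_c: float = 30.0,     # at or below this, run at minimum duty (assumed value)
    high_c: float = 70.0,    # at or above this, run at maximum duty (assumed value)
    min_duty: float = 30.0,
    max_duty: float = 100.0,
) -> float:
    """Linear fan curve: interpolate duty between the low and high thresholds."""
    if high_c <= low_c:
        raise ValueError("high_c must be greater than low_c")
    if temp_c <= low_c:
        return min_duty
    if temp_c >= high_c:
        return max_duty
    fraction = (temp_c - low_c) / (high_c - low_c)
    return min_duty + fraction * (max_duty - min_duty)


for reading in (25.0, 50.0, 80.0):
    print(reading, fan_duty_percent(reading))
```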

What Are the Key Features of the QM8790 Infiniband Switch?

Exploring High-Speed Connectivity and Bandwidth

The QM8790 Infiniband switch is designed for high speed and large bandwidth, both of which AI and HPC workloads require. It offers up to 200 Gbps per port, enabling the fast data transfer that data-rich applications need. Its switching architecture makes full use of the available bandwidth while minimizing switching delay. This design provides the low-latency, high-throughput interconnections on which effective and scalable data center architectures depend. Because it can process large amounts of data quickly, the QM8790 is well suited to environments that demand high transfer rates and careful bandwidth management.

Advantages of Adaptive Routing and Quality of Service

Adaptive routing on the QM8790 Infiniband switch distributes traffic across the fabric, improving bandwidth usage and overall system performance while keeping latency under control. The mechanism is topology-agnostic: it works whether the fabric is a mesh, a torus, or another layout. Bottlenecks can arise anywhere along a data flow as applications and external conditions change, so the switch keeps an up-to-date view of which links are available and routes packets accordingly. This flexibility shortens transfer times and makes better use of computing resources across multiple workloads, improving networking inside the data center.

As available bandwidth scales and data centers take on a broader range of tasks, mid- to high-density AI workloads place growing demands on timely task sequencing and low packet latency. QoS addresses this by allocating more time or resources to AI and HPC traffic, while lower-priority tasks are held back so that they do not intrude on existing high-priority workloads. Combined with topology-agnostic adaptive routing, QoS improves the efficiency of both routing and the network as a whole.
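The QoS principle of serving high-priority workloads ahead of lower-priority ones can be sketched with a strict-priority queue. This is a deliberate simplification: Infiniband QoS is based on service levels mapped to virtual lanes with arbitration between them, which this example does not model. The class name and traffic labels are hypothetical.

```python
import heapq
from itertools import count


class PriorityScheduler:
    """Strict-priority scheduler: lower number means higher priority.

    Packets with equal priority leave in arrival (FIFO) order.
    """

    def __init__(self) -> None:
        self._heap = []
        self._arrival = count()  # tie-breaker that preserves arrival order

    def enqueue(self, priority: int, packet: str) -> None:
        heapq.heappush(self._heap, (priority, next(self._arrival), packet))

    def dequeue(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


sched = PriorityScheduler()
sched.enqueue(2, "backup")
sched.enqueue(0, "mpi-1")
sched.enqueue(1, "storage")
sched.enqueue(0, "mpi-2")
order = [sched.dequeue() for _ in range(len(sched))]
print(order)
```

A strict scheme like this can starve low-priority traffic under sustained load, which is one reason production fabrics use weighted arbitration instead.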

How QM8790 Supports Scalable Cluster Infrastructure

The QM8790 enhances the performance of a computing system by supporting clustered architectures. It uses Infiniband technology to interconnect nodes efficiently, which simplifies data management and the handling of heavy traffic. Low latency during data transfer between nodes preserves node efficiency, which is crucial in massive deployments. Because its architecture allows easy connectivity to multiple clusters, the switch fits well into modern data centers designed to manage many clusters. It provides the robust and responsive communication required both within a single cluster and between clusters. It is therefore well suited to high-performance computing (HPC) as well as cloud-based systems.
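One common way to scale a cluster is a two-level non-blocking fat tree, in which each leaf switch splits its ports evenly between hosts and uplinks to spine switches. The sketch below computes the size of such a fabric from the switch port count (radix). The choice of topology and the 40-port radix in the usage line are assumptions made for illustration; the article does not prescribe a specific topology.

```python
from typing import Dict


def two_level_fat_tree(radix: int) -> Dict[str, int]:
    """Size a two-level non-blocking fat tree built from identical switches.

    Each leaf uses half its ports for hosts and half for uplinks, one uplink
    per spine. Each spine port connects to a different leaf.
    """
    if radix < 2 or radix % 2 != 0:
        raise ValueError("radix must be an even number >= 2")
    hosts_per_leaf = radix // 2
    spine_switches = radix // 2   # one per leaf uplink
    leaf_switches = radix         # one per spine port
    return {
        "leaf_switches": leaf_switches,
        "spine_switches": spine_switches,
        "hosts": leaf_switches * hosts_per_leaf,
    }


print(two_level_fat_tree(40))
```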

Frequently Asked Questions (FAQs)

Q: What role do Mellanox switches play within high-performance computers or systems?

A: High-performance computing depends on moving data across many computers or nodes, and a Mellanox Infiniband switch is crucial here because it delivers high-bandwidth, low-latency interconnects. These switches make efficient use of fabric bandwidth, which compute-intensive HPC applications require.

Q: How do Mellanox Infiniband switches achieve a 200G transfer rate?

A: Mellanox Infiniband switches reach data transfer rates of 200G and above by combining high-speed electrical signaling with optical or copper interconnects in form factors such as QSFP and OSFP. An HDR port, for example, aggregates four lanes of 50 Gb/s each. This improves performance and supports the expansion of complex network systems.

Q: Explain HDR and how it is related to Mellanox Infiniband switches.

A: HDR (High Data Rate) is the Infiniband generation that enables Mellanox Infiniband switches to deliver 200 gigabits per second (Gbps) on a single port. It is important for keeping latency low and increasing throughput in high-performance computing and data centers.

Q: What measures are found in the Mellanox Infiniband switches for dealing with traffic overload?

A: To prevent overload, Mellanox Infiniband switches use features such as QoS, congestion control, and in-network computing, which keep the fabric operating smoothly by regulating how data flows through it.

Q: What does non-blocking bandwidth mean for Mellanox Infiniband switches?

A: Non-blocking means the switch has enough internal capacity for every port to send and receive at full line rate simultaneously, so traffic between one pair of ports is never held up by traffic between another pair. This is essential for sustaining high performance in applications that rely heavily on large data sets.

Q: Can you elaborate on how the hierarchical aggregation and reduction protocol enhances Mellanox Infiniband networks?

A: Simply put, the Scalable Hierarchical Aggregation and Reduction Protocol (SHARP), which is part of NVIDIA’s technology, uses computing capabilities within the network itself to aggregate data. This lowers node-to-node traffic, improving efficiency and reducing latency.

Q: What advantages do top-of-rack configurations gain from Mellanox Infiniband switches?

A: Mellanox Infiniband switches suit top-of-rack configurations because they combine high port density with low latency and high throughput. They switch traffic between servers and storage systems without interruption, which improves data center efficiency and performance and keeps fabric bandwidth free from congestion.

Q: How can Mellanox Infiniband switches foster greater scalability of data centers?

A: Mellanox Infiniband switches create room for expansion by providing flexible, high-speed interconnects that let the data center grow without sacrificing performance. The switches also support features such as subnet management and scalable hierarchical aggregation, which promote an efficient network infrastructure.

Q: What is the function of a subnet manager in Mellanox Infiniband networks?

A: In Mellanox Infiniband networks, the subnet manager configures and manages the network topology, aiming to make the best use of the available fabric bandwidth while avoiding saturation. It optimizes route selection and maintains subnet stability and inter-device communication, both of which are necessary for a high-speed network to operate efficiently.
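As a rough illustration of what a subnet manager does, the sketch below assigns a local identifier (LID) to every node and computes, for one switch, the next hop toward each destination using a breadth-first search over the topology. It is a conceptual model only: production subnet managers such as OpenSM use more sophisticated routing engines, and the topology and node names here are hypothetical.

```python
from collections import deque
from typing import Dict, Iterable, List


def assign_lids(nodes: Iterable[str], first_lid: int = 1) -> Dict[str, int]:
    """Give every node a unique local identifier, in sorted name order."""
    return {node: first_lid + i for i, node in enumerate(sorted(nodes))}


def forwarding_table(links: Dict[str, List[str]], source: str) -> Dict[str, str]:
    """Map each reachable destination to the neighbor of `source` that lies
    on a shortest path to it (breadth-first search)."""
    next_hop: Dict[str, str] = {}
    visited = {source}
    queue = deque()
    for neighbor in sorted(links[source]):
        visited.add(neighbor)
        next_hop[neighbor] = neighbor
        queue.append(neighbor)
    while queue:
        node = queue.popleft()
        for neighbor in sorted(links[node]):
            if neighbor not in visited:
                visited.add(neighbor)
                next_hop[neighbor] = next_hop[node]  # inherit the first hop
                queue.append(neighbor)
    return next_hop


# Hypothetical two-switch fabric.
links = {
    "sw1": ["hostA", "hostB", "sw2"],
    "sw2": ["sw1", "hostC"],
    "hostA": ["sw1"],
    "hostB": ["sw1"],
    "hostC": ["sw2"],
}
print(assign_lids(links))
print(forwarding_table(links, "sw1"))
```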