The Mellanox® InfiniBand Adapter is a core component of high-performance networking because it delivers the ultra-low latency and high bandwidth that data center environments need. This article is intended for IT professionals and network architects and gives an in-depth overview of the adapter’s technical specifications, functional advantages, and deployment scenarios. By explaining how the adapter speeds up data transfer, improves computational efficiency, and supports expandable architectures, it aims to give readers enough understanding to make better decisions about their networks. Whether you want to improve current systems or implement state-of-the-art ones, this guide will help you navigate the intricacies of high-speed networking based on Mellanox® InfiniBand adapters.

Table of Contents

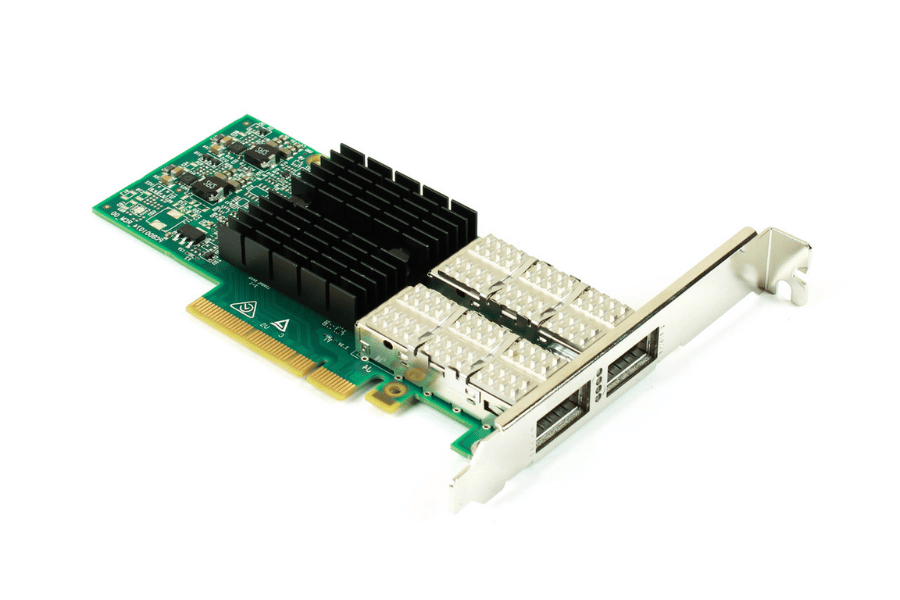

What is a Mellanox® InfiniBand Adapter?

Understanding the InfiniBand Adapter Technology

The InfiniBand Adapter is a high-speed communication interface that moves data between servers and storage systems in a data center. It connects to a switched fabric topology, which links many devices and provides higher bandwidth and lower latency than traditional Ethernet networks typically offer. InfiniBand supports both reliable connection-oriented and connectionless communication, which makes it applicable to a wide range of uses. The adapters are designed for data rates from 10 Gbps to 200 Gbps and beyond, to serve high-performance computing (HPC) applications and large-scale data processing. In addition, their hardware offloading features improve computational efficiency by taking heavy data-movement tasks off the CPU.

Major Features of Mellanox® Adapters

Mellanox® Adapters, available in both single-port and dual-port options, offer several features that make them highly capable:

- Bandwidth: They support rates from 10 Gbps to 200 Gbps, so they can serve applications that require high data-transfer rates.

- Latency: The switched fabric minimizes delays when transferring data, which shortens response times, especially in FDR or EDR setups.

- Scalability: They allow for building networks consisting of thousands of nodes, therefore being a good match for large-scale data centers.

- Hardware Offloading: Offload mechanisms integrated into Mellanox® Adapters ease data movement, reduce CPU overhead, and maximize system resource utilization.

- Connectivity Versatility: These adapters give deployment flexibility by supporting both InfiniBand and Ethernet protocols and integrating with existing network infrastructures.

- Improved Reliability: Robust error detection and correction help ensure that critical information reaches its destination intact. This matters for mission-critical applications, where data must not be lost in transit because of hardware faults.

- Advanced Management Tools: The network monitoring and management software bundled with these devices supports proactive maintenance and helps optimize operational efficiency.

Together, these characteristics make Mellanox® Adapters a strong foundation for high-performance networking solutions that meet current and future needs.
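The bandwidth figures above map onto named InfiniBand generations such as the FDR and EDR setups mentioned in the list. The following is a minimal sketch, not vendor code: the table holds the commonly quoted nominal rates for a 4x link, and the helper name `slowest_sufficient_generation` is hypothetical. Measured throughput will be lower than these nominal values.

```python
from typing import Optional

# Commonly quoted nominal rates for a 4x InfiniBand link, in Gb/s.
# These are headline figures, not measured application throughput.
IB_GENERATION_GBPS = {
    "SDR": 10,
    "DDR": 20,
    "QDR": 40,
    "FDR": 56,
    "EDR": 100,
    "HDR": 200,
    "NDR": 400,
}


def slowest_sufficient_generation(required_gbps: float) -> Optional[str]:
    """Return the slowest generation whose nominal rate covers the requirement.

    Returns None when no generation in the table is fast enough.
    """
    for name, rate in sorted(IB_GENERATION_GBPS.items(), key=lambda item: item[1]):
        if rate >= required_gbps:
            return name
    return None


if __name__ == "__main__":
    for need in (25, 80, 200):
        print(need, "Gb/s ->", slowest_sufficient_generation(need))
```

A lookup like this is only a first filter; port count, slot type, and cabling still have to be checked against the specific card.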

Differences between Mellanox® and Other Brands

Several features distinguish Mellanox® Adapters from other brands in high-performance computing applications. First, they offer data bandwidth of up to 200 Gbps, which is higher than many competitors and well suited to data-intensive environments. Second, the low-latency switched fabric architecture allows faster data retrieval and processing. Third, their scalability lets them fit into large data center implementations with thousands of nodes without sacrificing performance. Their hardware offloading capabilities also significantly reduce CPU workload and improve overall system efficiency. In addition, dual support for InfiniBand and Ethernet protocols provides greater deployment flexibility, whereas many alternatives concentrate on a single protocol. Finally, Mellanox® management tools, combined with the adapters’ reliability features, support better performance monitoring in high-performance network installations.

How to Install and Configure Mellanox® InfiniBand Adapters?

Step-by-Step Installation Guide

- Preparation: Power off and unplug your server before installation. Gather a screwdriver and an anti-static wrist strap.

- Inserting the Adapter: Open the server’s case and locate the PCIe slots. If necessary, remove an existing card to make room for the new adapter (for example, a single-port OSFP card). Align the Mellanox® InfiniBand Adapter with the PCIe slot, making sure the notch on the card’s edge connector lines up with the key in the slot. Press gently until the card is firmly seated.

- Securing the Adapter: Fasten the card’s bracket to the chassis with a screw so that it stays stable during operation.

- Reassembling the Chassis: Close the case and reconnect all cables, checking that everything is plugged in correctly. This prevents problems during boot-up or later operation.

- Powering Up the Server: Reconnect the power supply, switch the server on, and watch for any error messages during boot.

- Installing Drivers: Once the server has started, download the most recent Mellanox® driver suite from the official website and install it by following the instructions for your operating system, so that all features of the adapter card are enabled.

- Configuration: If necessary, open the network settings and disable any conflicting interfaces. Configure the Mellanox® adapter according to your IP addressing scheme, including subnetting where the network requires it.

- Testing: Verify that the adapter works as expected by checking connectivity, then measure network performance and latency with command-line utilities.

By following these steps, you will have installed and configured your Mellanox® InfiniBand Adapter correctly and prepared your environment for high-performance computing.
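The testing step can be partly scripted. The sketch below assumes the `ibstat` utility from the InfiniBand diagnostics package is installed with the driver stack; it parses the tool’s text output and reports which ports are up. The helper names `parse_ibstat` and `active_ports` are hypothetical, and `SAMPLE` is an illustrative excerpt rather than output captured from a real system.

```python
import re
import shutil
import subprocess
from typing import Dict, List

# Illustrative excerpt in the layout ibstat prints; values are made up.
SAMPLE = """\
CA 'mlx5_0'
    CA type: MT4123
    Number of ports: 1
    Port 1:
        State: Active
        Physical state: LinkUp
        Rate: 200
        Link layer: InfiniBand
CA 'mlx5_1'
    CA type: MT4123
    Number of ports: 1
    Port 1:
        State: Down
        Physical state: Disabled
        Rate: 10
        Link layer: InfiniBand
"""


def parse_ibstat(text: str) -> List[Dict[str, object]]:
    """Collect one dict per port, with lower-cased field names as keys."""
    ports: List[Dict[str, object]] = []
    current_ca = None
    current_port = None
    for raw in text.splitlines():
        line = raw.strip()
        ca_match = re.match(r"CA '(.+)'", line)
        if ca_match:
            current_ca = ca_match.group(1)
            current_port = None  # fields before the next "Port N:" belong to the CA
            continue
        port_match = re.match(r"Port (\d+):", line)
        if port_match:
            current_port = {"ca": current_ca, "port": int(port_match.group(1))}
            ports.append(current_port)
            continue
        if current_port is not None and ":" in line:
            key, _, value = line.partition(":")
            current_port[key.strip().lower()] = value.strip()
    return ports


def active_ports(ports: List[Dict[str, object]]) -> List[Dict[str, object]]:
    """Keep ports that are logically Active and physically LinkUp."""
    return [
        p for p in ports
        if p.get("state") == "Active" and p.get("physical state") == "LinkUp"
    ]


if __name__ == "__main__":
    if shutil.which("ibstat"):
        text = subprocess.run(["ibstat"], capture_output=True, text=True).stdout
    else:
        text = SAMPLE  # fall back to the illustrative excerpt
    for p in active_ports(parse_ibstat(text)):
        print(p["ca"], "port", p["port"], "rate", p.get("rate"))
```

A port that shows as present but not Active usually points to cabling or subnet manager configuration rather than to the card itself.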

Configuration Tips for Optimal Performance

Please follow these configuration recommendations to maximize your Mellanox® InfiniBand Adapter’s performance:

- Activate Jumbo Frames: Raise the Maximum Transmission Unit (MTU) size to enable jumbo frames. Larger packets mean fewer packets per transfer, which can increase throughput and reduce CPU overhead.

- Optimize Queue Pairs: Set up enough queue pairs for the load. This improves data management efficiency and allows more parallel processing.

- Use the Latest Firmware: Keep the firmware on your InfiniBand adapter up to date. Updates include bug fixes, feature support, and performance improvements.

- Tune Network Parameters: Adjust system-wide network parameters such as buffer settings and TCP window size to suit your application; this can reduce latency and raise overall throughput.

- Utilize RDMA: Enable Remote Direct Memory Access (RDMA) where applicable so that data moves between hosts without involving the CPU, which lowers latency and raises effective bandwidth.

These tactics will enable you to optimize Mellanox® InfiniBand Adapter performance and improve effectiveness within your high-performance computing environment.
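Two of the tips above rest on simple arithmetic. The sketch below is an illustration under stated assumptions: `packets_needed` assumes a fixed 40-byte header per packet (IPv4 plus TCP without options, as would apply to TCP traffic over the adapter), and `bdp_bytes` computes the bandwidth-delay product, a common starting point for sizing TCP windows and buffers. Both function names are hypothetical.

```python
def packets_needed(payload_bytes: int, mtu: int, header_bytes: int = 40) -> int:
    """Packets required when each one carries (mtu - header_bytes) of payload.

    header_bytes=40 is an assumption: 20 bytes IPv4 + 20 bytes TCP, no options.
    """
    per_packet = mtu - header_bytes
    if per_packet <= 0:
        raise ValueError("MTU must be larger than the header size")
    return -(-payload_bytes // per_packet)  # integer ceiling division


def bdp_bytes(bandwidth_gbps: float, rtt_us: float) -> int:
    """Bandwidth-delay product in bytes.

    Gb/s * microseconds gives kilobits; dividing by 8 gives bytes:
    gbps * 1e9 * rtt_us * 1e-6 / 8 == gbps * rtt_us * 125.
    """
    return round(bandwidth_gbps * rtt_us * 125)


if __name__ == "__main__":
    one_gib = 2**30
    for mtu in (1500, 9000):
        print("MTU", mtu, "->", packets_needed(one_gib, mtu), "packets per GiB")
    print("BDP at 100 Gb/s, 100 us RTT:", bdp_bytes(100, 100), "bytes")
```

The packet count shows why jumbo frames cut per-packet CPU work, and the bandwidth-delay product gives a lower bound for the window size needed to keep a link of a given speed and round-trip time full.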

Troubleshooting Common Issues

- Adapter Not Detected: If the system does not recognize the Mellanox® InfiniBand adapter, make sure the card is seated correctly in the PCIe slot and all power connections are secure. Also check that the relevant drivers and their updates are installed. For more information on drivers, visit the Mellanox support page.

- Latency Problems: In dual-port setups, high latency often comes from incorrect network settings or too few queue pairs. Verify MTU values and enable jumbo frames where necessary. Network buffers can also become bottlenecks, so monitor traffic loads and tune the buffers if needed.

- Degraded Performance: To troubleshoot reduced throughput, make sure your firmware is up to date and optimize the host system’s network parameters. Performance monitoring tools help distinguish hardware problems from software configuration issues, so that adjustments can be made in time.

Working through these configuration checks and updates step by step helps improve the reliability of Mellanox® InfiniBand Adapters under intense workloads.
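For the "adapter not detected" case, a quick first check on Linux is whether the card appears on the PCI bus at all. The sketch below assumes a Linux host with `lspci` available and filters its `-nn` output for the Mellanox PCI vendor ID `15b3`. The function name `find_mellanox_devices` is hypothetical, and `SAMPLE` is an illustrative listing, not output from a real machine.

```python
import re
import shutil
import subprocess
from typing import Dict, List

MELLANOX_VENDOR_ID = "15b3"  # PCI vendor ID assigned to Mellanox Technologies

# Illustrative `lspci -nn` lines; the slots and devices are made up.
SAMPLE = """\
00:1f.6 Ethernet controller [0200]: Intel Corporation Ethernet Connection [8086:15bb] (rev 10)
3b:00.0 Infiniband controller [0207]: Mellanox Technologies MT28908 Family [ConnectX-6] [15b3:101b]
"""


def find_mellanox_devices(lspci_nn_output: str) -> List[Dict[str, str]]:
    """Return slot and device ID for each line carrying the Mellanox vendor ID."""
    devices = []
    for line in lspci_nn_output.splitlines():
        # The vendor:device pair is printed as [vvvv:dddd]; class codes such as
        # [0207] contain no colon and therefore do not match.
        match = re.search(r"\[([0-9a-f]{4}):([0-9a-f]{4})\]", line)
        if match and match.group(1) == MELLANOX_VENDOR_ID:
            devices.append({
                "slot": line.split(maxsplit=1)[0],
                "device_id": match.group(2),
                "line": line.strip(),
            })
    return devices


if __name__ == "__main__":
    if shutil.which("lspci"):
        listing = subprocess.run(
            ["lspci", "-nn"], capture_output=True, text=True
        ).stdout
    else:
        listing = SAMPLE  # fall back to the illustrative listing
    found = find_mellanox_devices(listing)
    if not found:
        print("No Mellanox device on the PCI bus: reseat the card, try another slot")
    for dev in found:
        print(dev["slot"], dev["device_id"])
```

If the card shows up here but no network device appears, the problem is more likely a missing or outdated driver than the physical installation.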

What Are the Key Benefits of Using Mellanox® InfiniBand Adapters?

High Bandwidth and Low Latency Performance

Mellanox® InfiniBand Adapters are well known for delivering high bandwidth and low latency, both of which are essential in high-performance computing (HPC) environments, including dual-port configurations. The adapters are designed to support data rates of up to 200 Gbps, well above what traditional Ethernet solutions typically achieve. They handle large volumes of data quickly through features such as offloading and remote direct memory access (RDMA), which cut the delays that arise when the CPU handles every transfer and so boost overall system performance. InfiniBand’s architecture lets systems scale without sacrificing speed or reliability and integrates with existing infrastructure. Organizations can use these capabilities to work more efficiently on data-intensive operations and complete calculations faster.

Scalability for Growing Network Needs

Mellanox® InfiniBand Adapters scale well to match the changing requirements of larger networks. The design allows straightforward bandwidth allocation and the addition of extra cards, so organizations can expand their networks without lengthy downtime or reduced performance. The adapters also allow the creation of large, highly interconnected systems, which makes heavier workloads and traffic easier to manage and helps maintain performance as demand grows. Furthermore, they support multi-hop topologies that improve routing efficiency across large installations, which addresses both current and anticipated network demands. Businesses that use Mellanox® InfiniBand technology can adopt new technologies without major adjustments, which helps protect their infrastructure against obsolescence.

Enhanced Compatibility with Various Systems

Mellanox® InfiniBand Adapters are built with a wide range of computing environments in mind and work with various operating systems and application frameworks. This versatility comes from drivers and software libraries that integrate well with other systems, whether enterprise-grade servers or high-performance computing clusters. The adapters also support Open MPI, among other popular software ecosystems, which enables different devices to communicate in heterogeneous settings. In addition, the industry-standard protocols they support allow organizations to connect hardware with dissimilar configurations without extensive changes. This compatibility speeds up deployment and reduces the risks associated with system integration, making it easier for businesses to move to high-bandwidth networking. With Mellanox® InfiniBand, companies can improve operational efficiency while staying adaptable as technology changes.

What Products are Often Also Viewed with Mellanox® InfiniBand Adapters?

Transceivers and Cables

For best results, Mellanox® InfiniBand adapters are usually combined with high-quality transceivers and cables. A transceiver, such as a Mellanox® QSFP module, acts as the interface between the adapter and the switch and ensures that data is transmitted reliably over the distances found in data centers. Proper cable selection is essential for preserving signal integrity and reducing latency, particularly in high-speed applications, so the choice between passive copper cables, active optical cables, and other types should be made carefully. These components are manufactured to strict industry standards, which makes them compatible with other devices in an InfiniBand network and improves efficiency. Together, transceivers and cables give organizations a strong foundation for increasingly complex workloads.

Complementary Network Adapters

When organizations look for network adapters to complement Mellanox® InfiniBand solutions, they usually consider several high-speed options. For example, the Mellanox ConnectX® series supports RDMA (Remote Direct Memory Access) and offloading, along with other advanced features that optimize data transfers in high-throughput environments. Intel’s Ethernet portfolio includes various adapters that can be integrated into many network architectures. Another option is HP networking solutions that work with Mellanox® systems to create a consolidated infrastructure capable of meeting different operational requirements. These adapters support scalability and efficiency in environments where many data-intensive applications must be handled simultaneously.

Other High-Performance Computing Components

High-performance computing (HPC) environments need well-integrated components to reach their best performance. Among the most important building blocks are high-speed storage systems such as NVMe (Non-Volatile Memory Express) SSDs, which provide faster data access than traditional storage media. Advanced cooling techniques, such as liquid or immersion cooling, handle heat output effectively and allow sustained performance during heavy computing workloads. Furthermore, state-of-the-art processor architectures that include GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units), among others, speed up parallel processing, which makes them very useful for deep learning tasks and complex simulations. Together, these components form an integrated environment that increases the throughput and responsiveness of HPC systems built for highly intensive computations.

Are Mellanox® InfiniBand Adapters Compatible with NVIDIA Technologies?

Integration with NVIDIA GPUs for Enhanced Compute Performance

NVIDIA GPUs work well with Mellanox InfiniBand adapters in high-performance computing environments. The combination speeds up computation by accelerating data transfer between GPUs over InfiniBand’s high-throughput, low-latency fabric, which is useful for processing-heavy tasks such as machine learning or simulation. Using NVLink alongside Mellanox InfiniBand increases bandwidth and reduces bottlenecks, saving time in parallel computing across systems. Because AI and data science keep advancing, large-scale applications must perform at their best, and these combined capabilities help deliver the performance and scalability that big data workloads require.

Optimizing Performance with NVIDIA Software

To get the best performance from NVIDIA technology, you need to use the software suite that NVIDIA offers. The company created software frameworks such as NVIDIA CUDA, cuDNN, and TensorRT to optimize parallel computing tasks on GPUs. With CUDA (Compute Unified Device Architecture), developers can use NVIDIA GPUs for general-purpose processing, which significantly speeds up data-heavy workloads. cuDNN is a GPU-accelerated library for deep neural networks; it optimizes training and inference, making it an essential part of many machine learning applications. TensorRT builds on this by improving runtime speed for deep learning models through techniques such as layer fusion and precision calibration. By using these tools correctly alongside sound infrastructure design, organizations can get the most from their NVIDIA investments while maintaining high computational efficiency across their applications.

Frequently Asked Questions (FAQs)

Q: What is Mellanox® InfiniBand Adapter?

A: The Mellanox® InfiniBand Adapter is a network adapter that provides very high network speed and flexibility. It allows data centers, HPC systems, and cloud infrastructures to increase their network throughput and application performance.

Q: Where can I find the manual for my Mellanox® InfiniBand Adapter?

A: Manuals for these adapters are available on the manufacturer’s official website or through its support section. Alternatively, you can contact us for more specific model-related documentation.

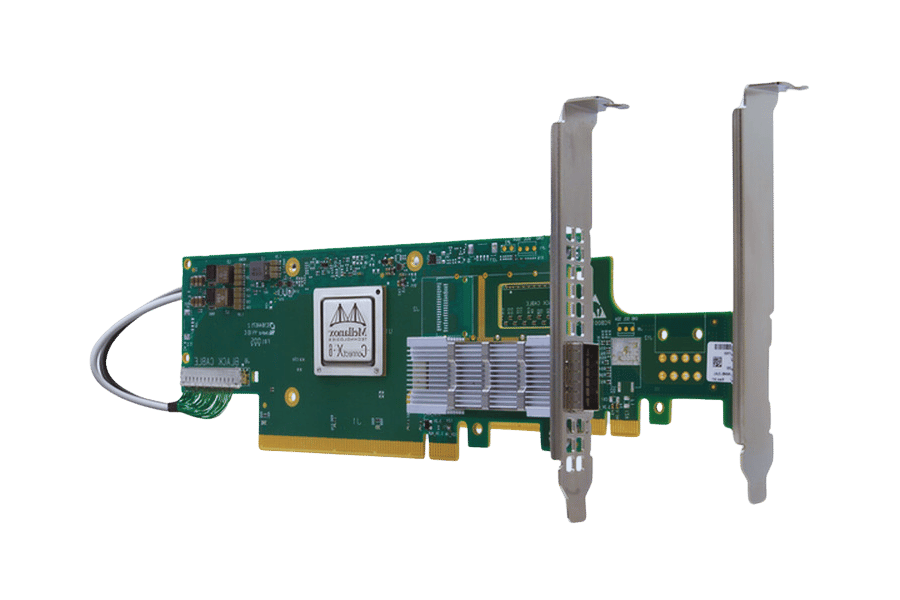

Q: What are the advantages of using ConnectX-6 VPI Adapter Card?

A: The ConnectX-6 VPI adapter card offers dual-port QSFP56 connectivity, supports both Ethernet and InfiniBand, and is compatible with PCI Express 4.0 x16 slots. These features make it ideal for HPC and data center applications.

Q: Can Mellanox® InfiniBand Adapter connect to both Ethernet and InfiniBand networks?

A: Yes, if it is a VPI model. Virtual Protocol Interconnect (VPI) adapters support more than one network type, such as InfiniBand and Ethernet (including Fibre Channel over Ethernet, FCoE), while still delivering the high performance required by demanding applications, including those running on InfiniBand fabrics.

Q: What are some key features offered by ConnectX-7 VPI Adapters?

A: Notable features of this adapter include support for HDR100, NDR, and 200GbE networking, dual-port connectivity options, and high throughput, which make it suitable for high-performance computing environments and demanding data centers.

Q: What benefits do the Mellanox® Adapter Cards have if connected to a PCIe4.0 x16 slot?

A: A PCIe 4.0 x16 slot provides twice the per-lane transfer rate of PCIe 3.0, so the host interface is less likely to bottleneck the adapter. This improves the performance of Mellanox® Adapter Cards in heavy data processing and high-speed networking.
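The effect of the slot generation can be estimated with a short calculation. The sketch below uses the per-lane transfer rates of PCIe 3.0, 4.0, and 5.0 (8, 16, and 32 GT/s) and their 128b/130b line encoding. It deliberately ignores packet and protocol overhead, so real usable bandwidth is somewhat lower. The function names are hypothetical.

```python
# Per-lane raw transfer rate in GT/s for each PCIe generation.
PCIE_GT_PER_LANE = {3: 8.0, 4: 16.0, 5: 32.0}

# PCIe 3.0 and later encode 128 payload bits in every 130 bits on the wire.
ENCODING_EFFICIENCY = 128 / 130


def pcie_usable_gbps(gen: int, lanes: int = 16) -> float:
    """Approximate one-direction slot bandwidth in Gb/s after line encoding.

    Packet (TLP) and flow-control overhead are ignored, so this is an
    upper bound rather than achievable throughput.
    """
    return PCIE_GT_PER_LANE[gen] * lanes * ENCODING_EFFICIENCY


def slot_can_feed(gen: int, lanes: int, port_gbps: float, ports: int = 1) -> bool:
    """True if the slot's upper-bound bandwidth covers all ports at line rate."""
    return pcie_usable_gbps(gen, lanes) >= port_gbps * ports


if __name__ == "__main__":
    for gen in (3, 4, 5):
        print(f"PCIe {gen}.0 x16: about {pcie_usable_gbps(gen):.0f} Gb/s per direction")
    print("PCIe 3.0 x16 feeds a 200 Gb/s port:", slot_can_feed(3, 16, 200))
    print("PCIe 4.0 x16 feeds a 200 Gb/s port:", slot_can_feed(4, 16, 200))
```

By this estimate, a single 200 Gb/s port exceeds what a PCIe 3.0 x16 slot can carry, which is why the faster cards are specified for PCIe 4.0 or 5.0.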

Q: What connectivity options do Mellanox® Adapter Cards offer?

A: Mellanox® Adapter Cards offer connection interfaces such as single-port QSFP56 and dual-port QSFP56, with optical or copper connections, and they can work with Ethernet and InfiniBand protocols.

Q: Can I use the Linux operating system with Mellanox® Adapter Cards?

A: Yes, Mellanox® adapter cards can be used in machines running Linux distributions. The cards have mature driver and software support, which lets them run at full performance.

Q: What do “single-port” and “dual-port” mean concerning Mellanox® Adapter Cards’ names?

A: The terms “single-port” or “dual-port” describe how many network interfaces are available on an adapter card. Single-port cards have only one port, while dual-port ones come with two ports, thus enabling more redundancy and higher bandwidth capacity.

Q: How do I know which specific model of Mellanox® Adapter Card suits my requirements?

A: To identify the most appropriate Mellanox® adapter card for your needs, consider factors such as desired throughput, network type (Ethernet or InfiniBand), compatible slots (e.g., PCI Express x16), and whether you need one or two ports. You can also check the product descriptions and manuals, which provide detailed specifications and guidelines.
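The selection factors in this answer can be turned into a simple filter. The sketch below is illustrative only: the catalog entries are transcribed from the Related Products section of this page, with `speed_gbps` taken as the top speed named in each product title, and the `shortlist` helper is hypothetical. Prices and availability change, so treat the data as an example rather than a quote.

```python
from typing import List, NamedTuple, Optional


class Adapter(NamedTuple):
    model: str
    family: str
    speed_gbps: int   # top speed named in the product title
    ports: int
    price_usd: float  # list price shown on this page


# Transcribed from the Related Products section; illustrative data only.
CATALOG = [
    Adapter("MCX653105A-ECAT-SP", "ConnectX-6", 100, 1, 965.00),
    Adapter("MCX653106A-ECAT-SP", "ConnectX-6", 100, 2, 828.00),
    Adapter("MCX653105A-HDAT-SP", "ConnectX-6", 200, 1, 1400.00),
    Adapter("MCX653106A-HDAT-SP", "ConnectX-6", 200, 2, 1600.00),
    Adapter("MCX75510AAS-NEAT", "ConnectX-7", 400, 1, 1650.00),
    Adapter("MCX75310AAS-NEAT", "ConnectX-7", 400, 1, 2200.00),
]


def shortlist(
    min_gbps: int,
    ports: Optional[int] = None,
    max_price_usd: Optional[float] = None,
) -> List[Adapter]:
    """Return matching adapters, cheapest first."""
    matches = [
        a for a in CATALOG
        if a.speed_gbps >= min_gbps
        and (ports is None or a.ports == ports)
        and (max_price_usd is None or a.price_usd <= max_price_usd)
    ]
    return sorted(matches, key=lambda a: a.price_usd)


if __name__ == "__main__":
    for adapter in shortlist(min_gbps=200, ports=2):
        print(adapter.model, adapter.price_usd)
```

A filter like this only narrows the list; slot generation, bracket height, and cable type still need to be checked against the product manual.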

Related Products:

-

NVIDIA NVIDIA(Mellanox) MCX653105A-ECAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR100/EDR/100G, Single-Port QSFP56, PCIe3.0/4.0 x16, Tall bracket

$965.00

NVIDIA NVIDIA(Mellanox) MCX653105A-ECAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR100/EDR/100G, Single-Port QSFP56, PCIe3.0/4.0 x16, Tall bracket

$965.00

-

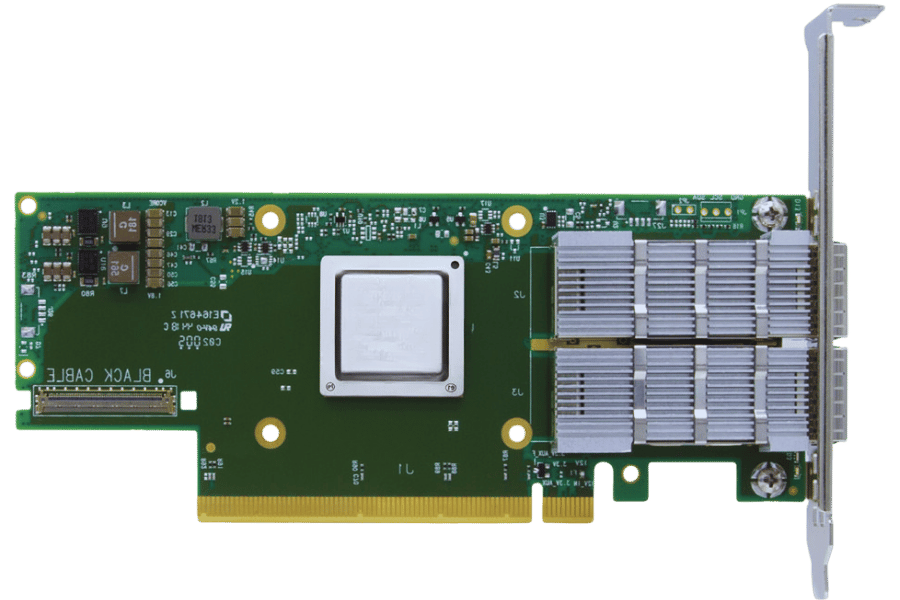

NVIDIA NVIDIA(Mellanox) MCX653106A-ECAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR100/EDR/100G, Dual-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket

$828.00

NVIDIA NVIDIA(Mellanox) MCX653106A-ECAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR100/EDR/100G, Dual-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket

$828.00

-

NVIDIA NVIDIA(Mellanox) MCX653105A-HDAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR/200GbE, Single-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket

$1400.00

NVIDIA NVIDIA(Mellanox) MCX653105A-HDAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR/200GbE, Single-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket

$1400.00

-

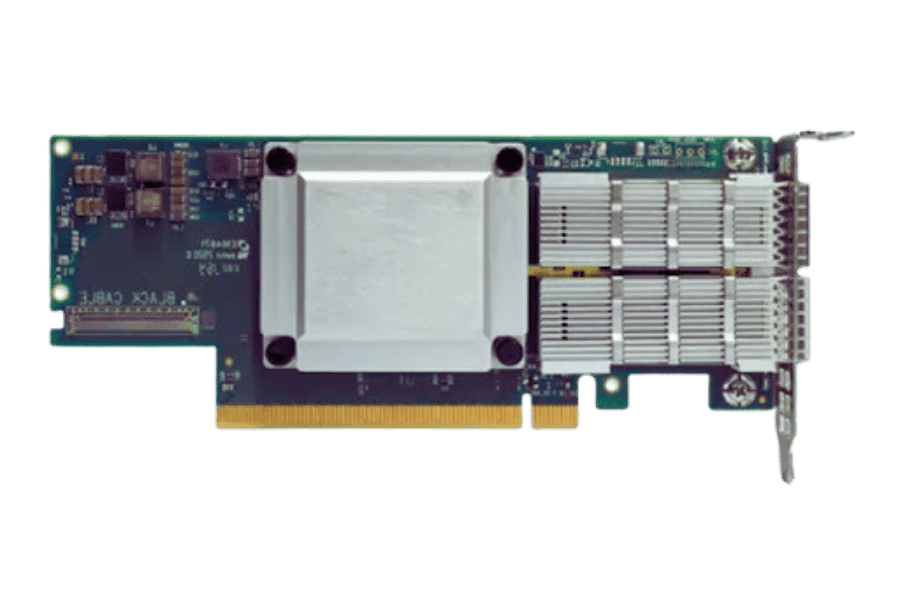

NVIDIA NVIDIA(Mellanox) MCX653106A-HDAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR/200GbE, Dual-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket

$1600.00

NVIDIA NVIDIA(Mellanox) MCX653106A-HDAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR/200GbE, Dual-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket

$1600.00

-

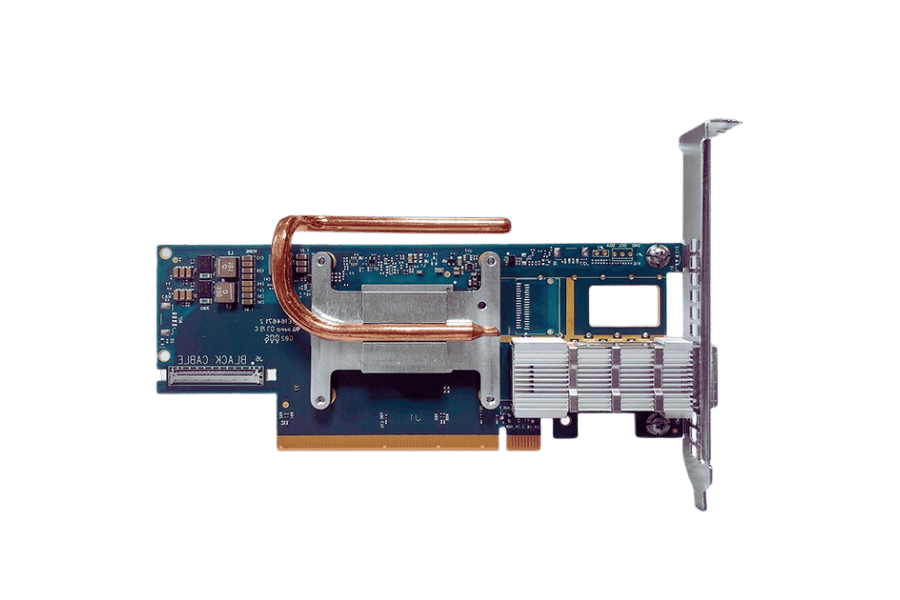

NVIDIA NVIDIA(Mellanox) MCX75510AAS-NEAT ConnectX-7 InfiniBand/VPI Adapter Card, NDR/400G, Single-port OSFP, PCIe 5.0x 16, Tall Bracket

$1650.00

NVIDIA NVIDIA(Mellanox) MCX75510AAS-NEAT ConnectX-7 InfiniBand/VPI Adapter Card, NDR/400G, Single-port OSFP, PCIe 5.0x 16, Tall Bracket

$1650.00

-

NVIDIA NVIDIA(Mellanox) MCX75310AAS-NEAT ConnectX-7 InfiniBand/VPI Adapter Card, NDR/400G, Single-port OSFP, PCIe 5.0x 16, Tall Bracket

$2200.00

NVIDIA NVIDIA(Mellanox) MCX75310AAS-NEAT ConnectX-7 InfiniBand/VPI Adapter Card, NDR/400G, Single-port OSFP, PCIe 5.0x 16, Tall Bracket

$2200.00