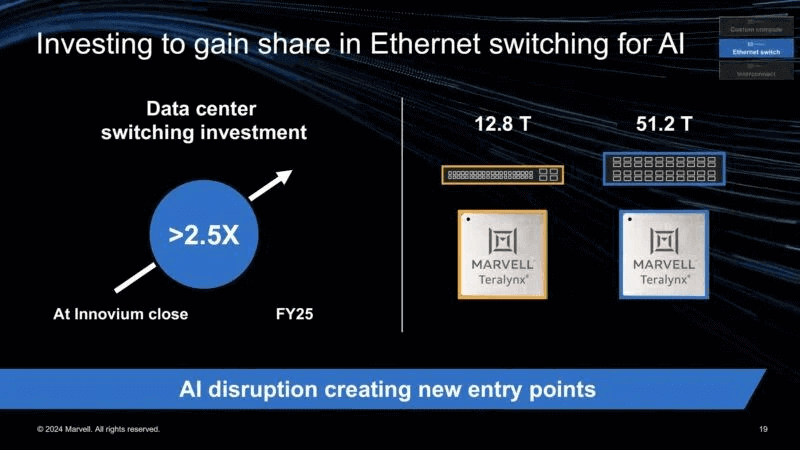

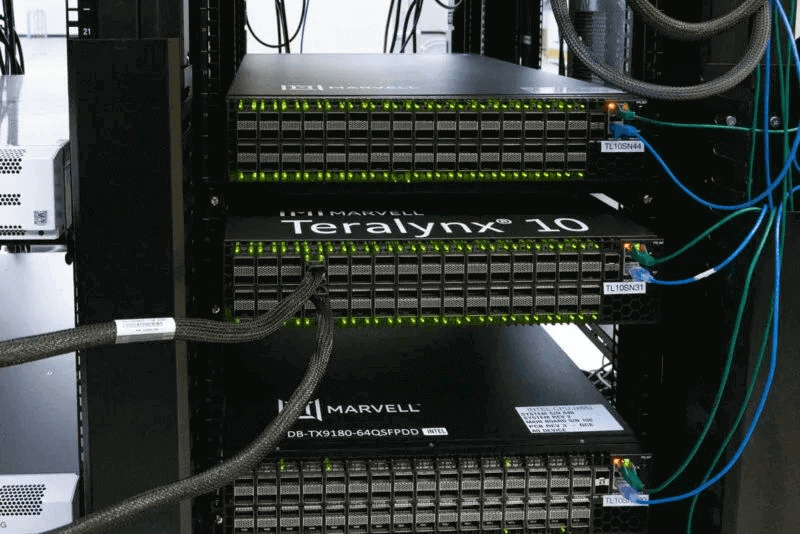

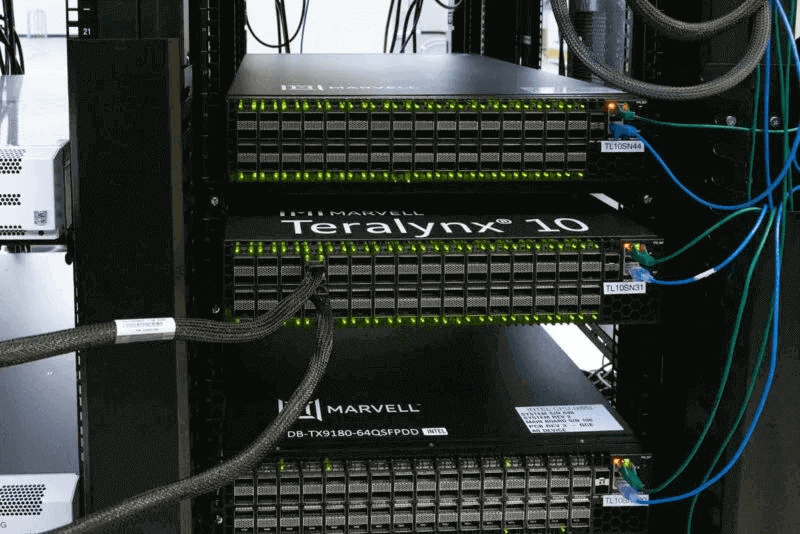

Today we are looking inside a large switch with 64 ports of 800GbE. The Marvell Teralynx 10 is a 51.2Tbps switch chip that is expected to be a key component in AI clusters in 2025. It makes for a fascinating piece of network hardware.

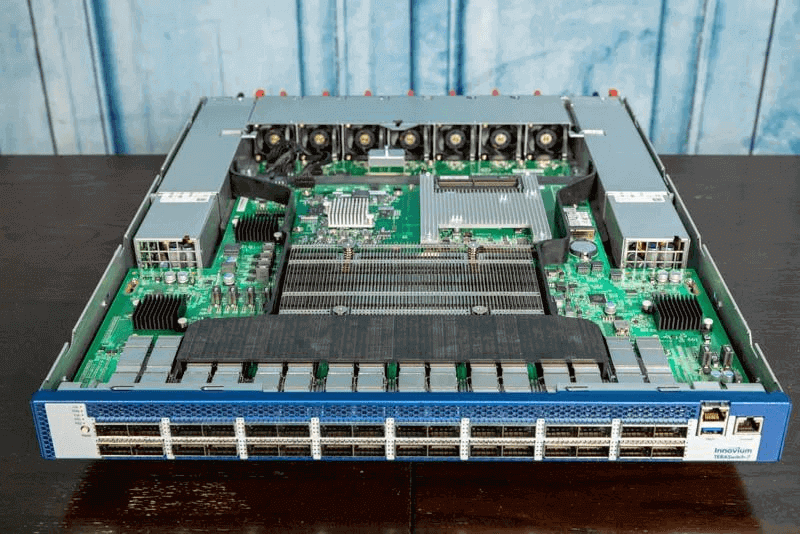

Marvell acquired Innovium in 2021, shortly after we tore down the startup’s Teralynx 7-based switch, a 12.8Tbps (32-port 400GbE) design.

Innovium was the most successful switch-silicon startup of its generation, making significant inroads into hyperscale data centers. By contrast, Intel announced in 2019 that it would acquire Barefoot Networks to obtain Ethernet switch chips, and by Q4 2022 it declared its intention to divest that Ethernet switch business. Broadcom holds a significant position in the merchant switch chip market, while Innovium, now Marvell, has penetrated hyperscale data centers where other companies invested heavily and failed.

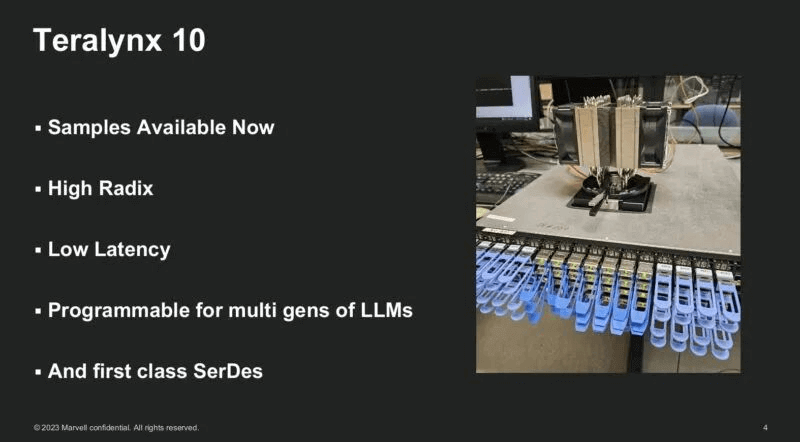

Given the scale of AI cluster construction, the 51.2Tbps switch chip generation is a significant one. We asked Marvell whether we could follow up the 2021 Teralynx 7 teardown with a look inside the new Marvell Teralynx 10.

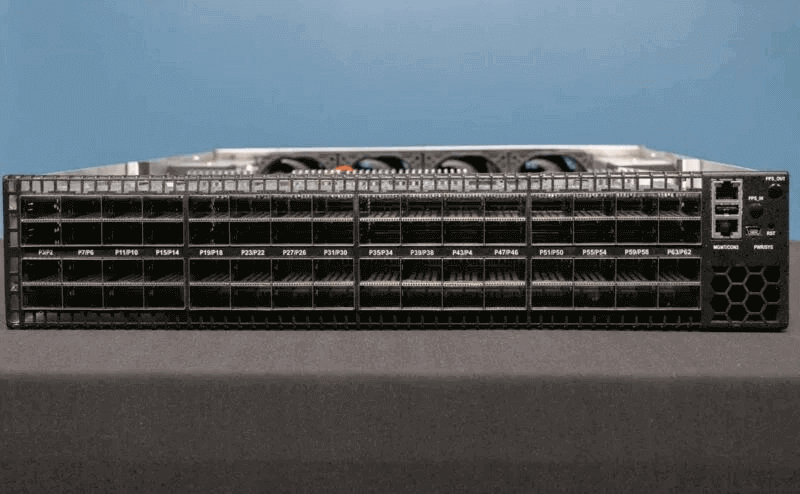

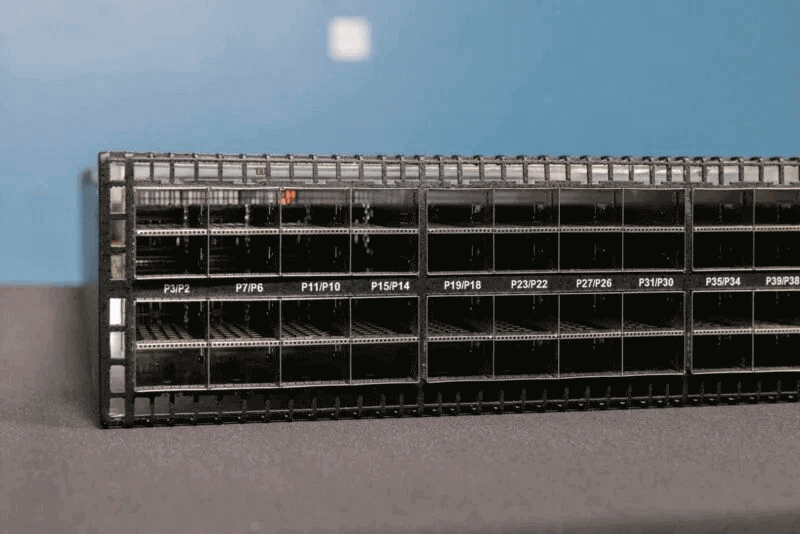

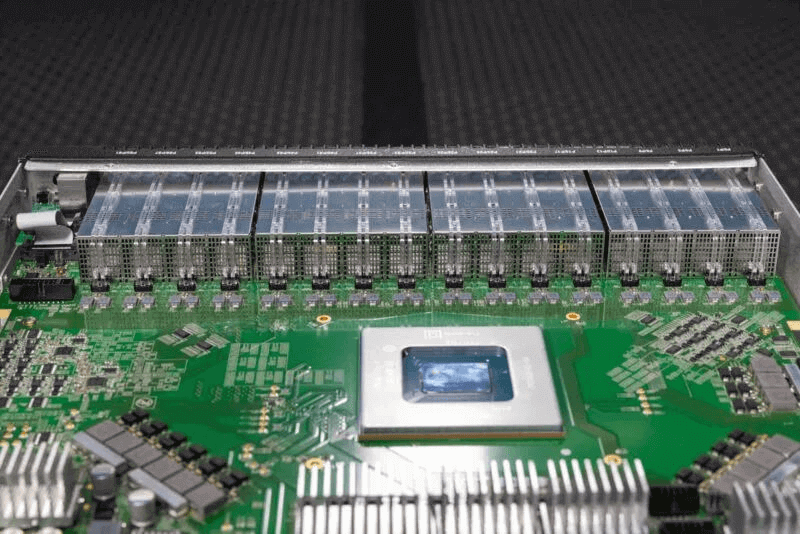

The switch features a 2U chassis, primarily composed of OSFP cages and airflow channels. There are 64 OSFP ports in total, each operating at 800Gbps.

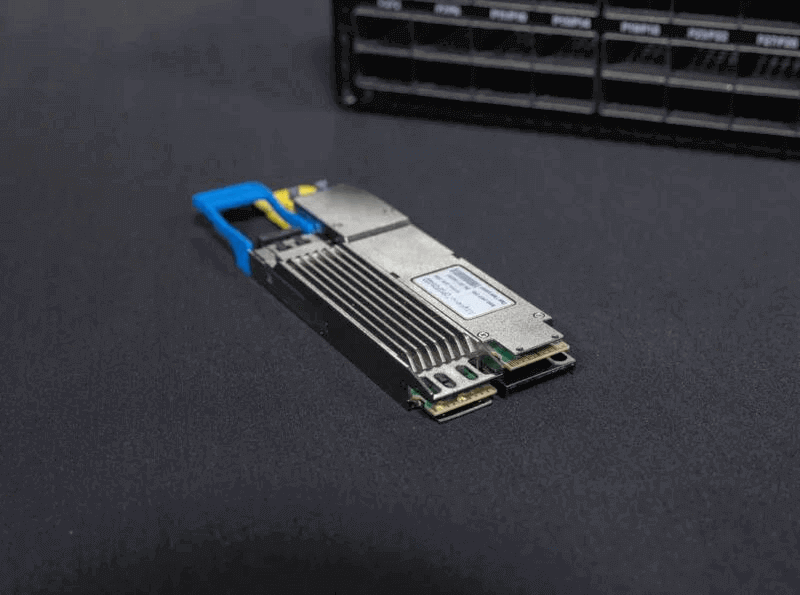

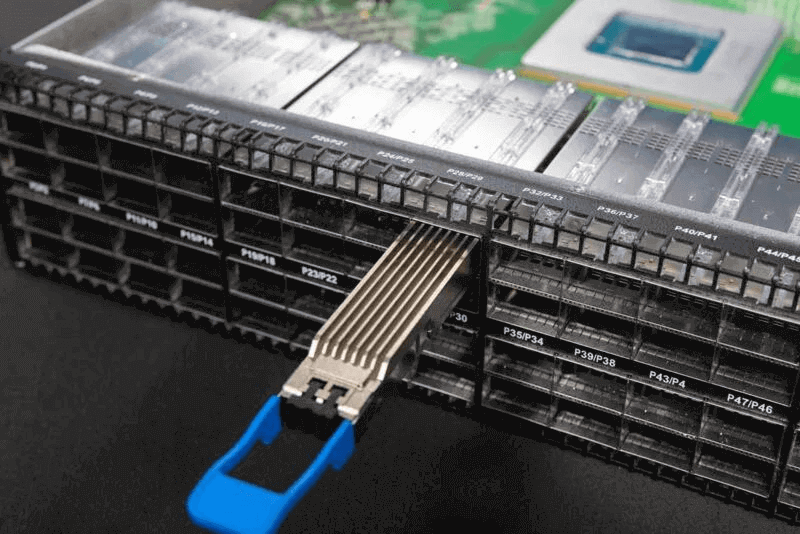

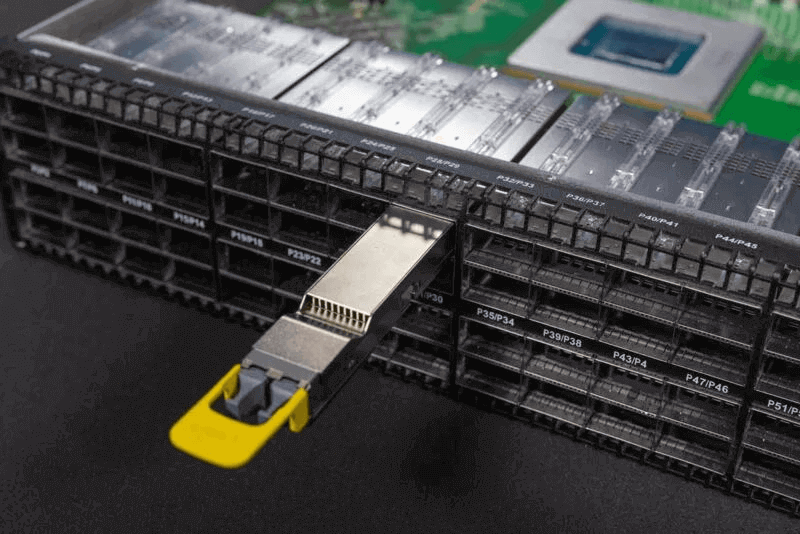

Each port is equipped with OSFP pluggable optics, which are generally larger than the QSFP+/QSFP28 generation devices you might be accustomed to.

Marvell has introduced several optical modules, leveraging components from its acquisition of Inphi. We have discussed this in various contexts, such as the Marvell COLORZ 800G silicon photonics modules and the Orion DSP for next-generation networks. This switch can utilize these optical modules, and the ports can operate at speeds other than 800Gbps.

One of the intriguing aspects is the long-distance optical modules, capable of achieving 800Gbps over hundreds of kilometers or more. These modules fit into OSFP cages and do not require the large long-distance optical boxes that have been industry standards for years.

OSFP modules can have integrated heat sinks, eliminating the need for heat sinks in the cages. In some 100GbE and 400GbE switches, optical cages require heat sinks due to the high power consumption of the modules.

On the right side of the switch, there are management and console ports.

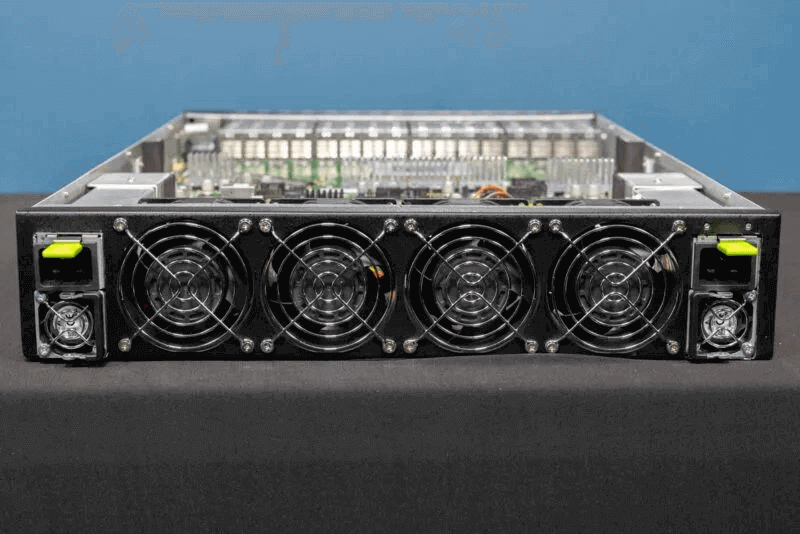

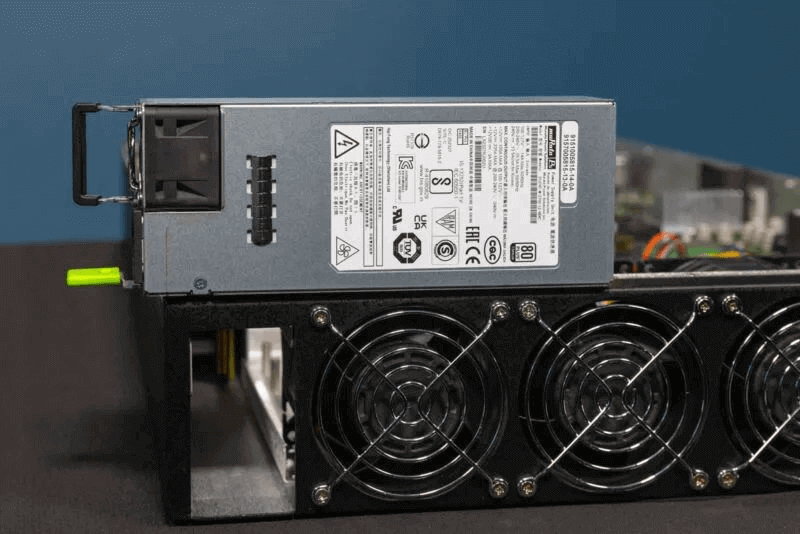

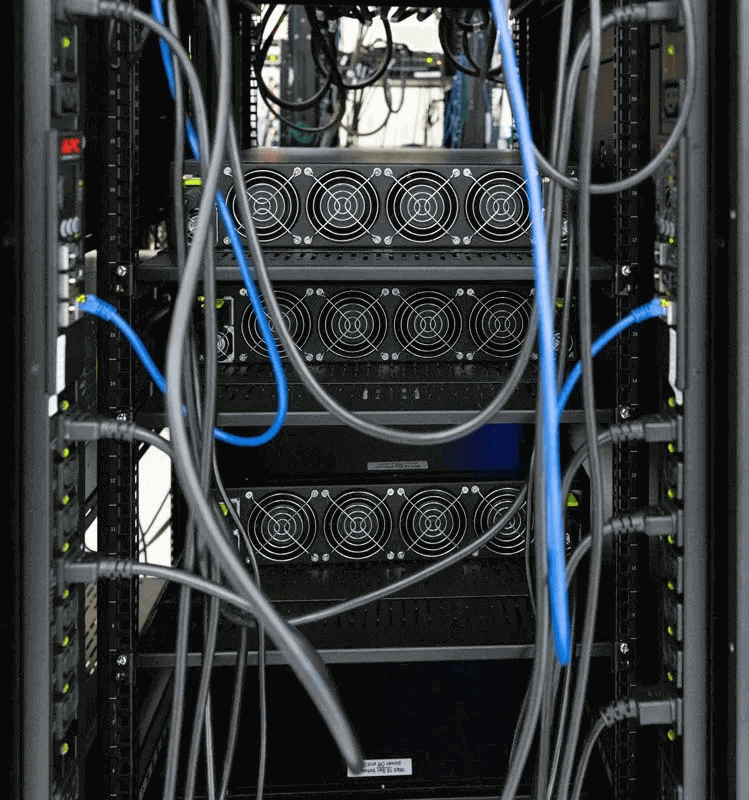

The back of the switch houses the fan modules and the power supplies, and each power supply has its own fan.

Since this switch can carry around 1.8kW of optical modules in total and has a 500W switch chip, we expected to see power supplies rated over 2kW.
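As a rough sanity check on that power expectation, here is a minimal sketch of the arithmetic. It uses only the two figures quoted above (about 1.8kW of optics and a 500W chip); the function names are our own, and fans, the management board, and power-conversion losses are deliberately ignored, so the real draw will be higher.

```python
# Back-of-the-envelope power budget from the figures quoted in the article.
# Names are illustrative; these are not Marvell specifications.

OPTICS_TOTAL_W = 1800  # "around 1.8kW" for a fully populated set of optics
ASIC_W = 500           # Teralynx 10 switch chip
PORTS = 64             # OSFP cages on the front panel


def per_module_watts(optics_total_w: float, ports: int) -> float:
    """Average power per OSFP module if the optics budget is spread evenly."""
    return optics_total_w / ports


def base_load_watts(optics_total_w: float, asic_w: float) -> float:
    """Optics plus switch chip only (no fans, CPU, or conversion losses)."""
    return optics_total_w + asic_w


print(f"average per module: {per_module_watts(OPTICS_TOTAL_W, PORTS):.1f} W")
print(f"optics + switch chip: {base_load_watts(OPTICS_TOTAL_W, ASIC_W)} W")
```

Even before counting fans and losses, optics plus the chip already land above 2kW, which is consistent with the expectation of power supplies rated over 2kW.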

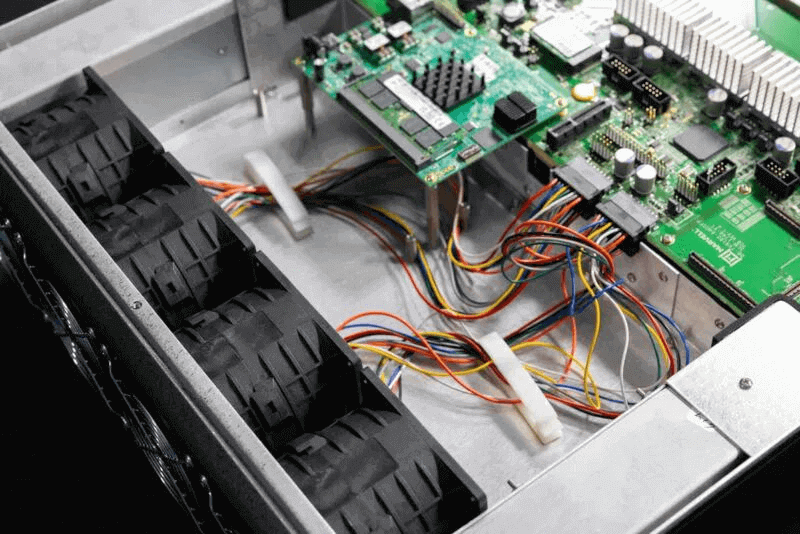

Next, let’s delve into the internals of the switch to see what powers these OSFP cages.

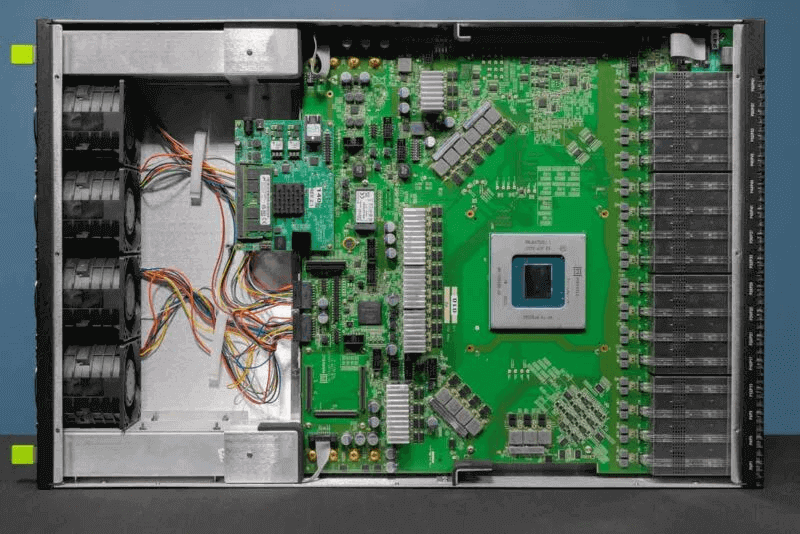

We will start from the OSFP cages on the right and move towards the power supplies and fans on the left.

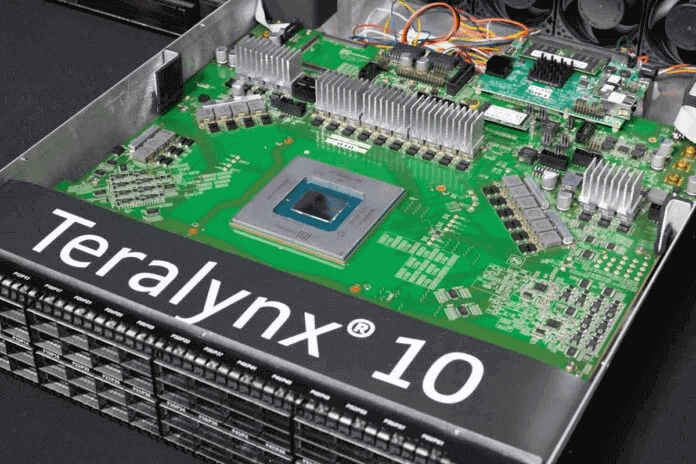

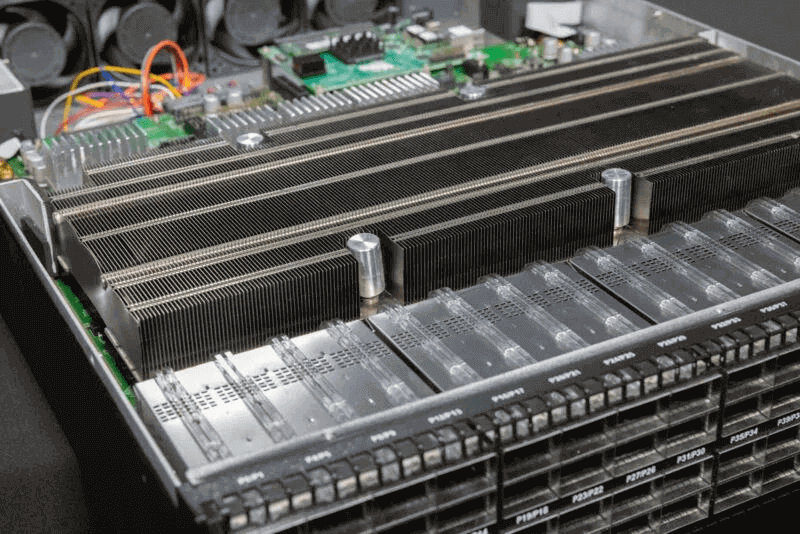

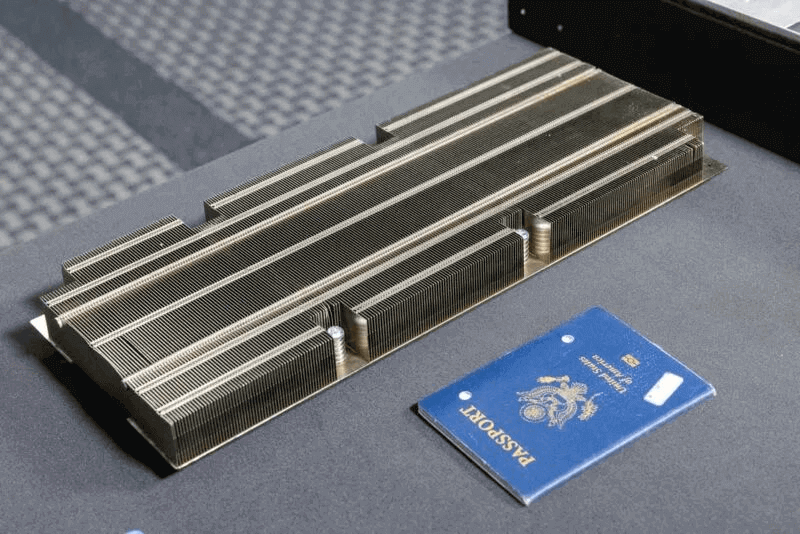

Upon opening the switch, the first thing that catches the eye is the large heat sink.

This heat sink, shown with an expired passport for scale, is quite substantial.

Here is a bottom view of the heat sink.

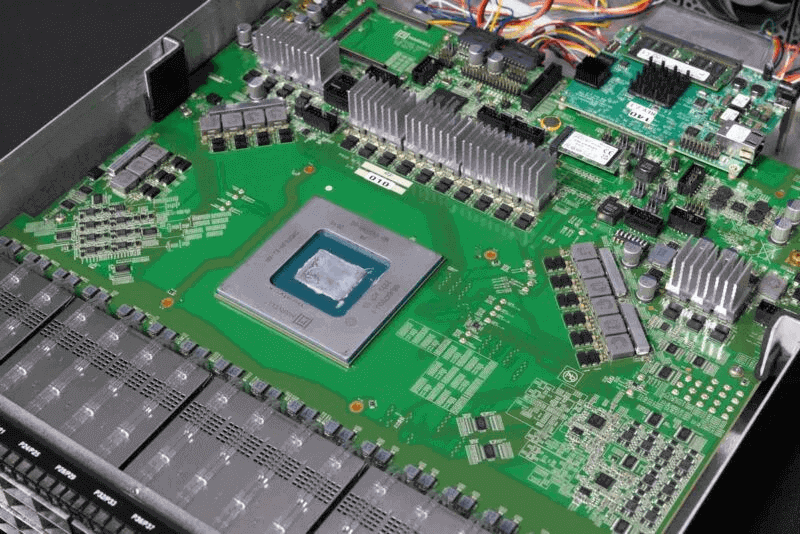

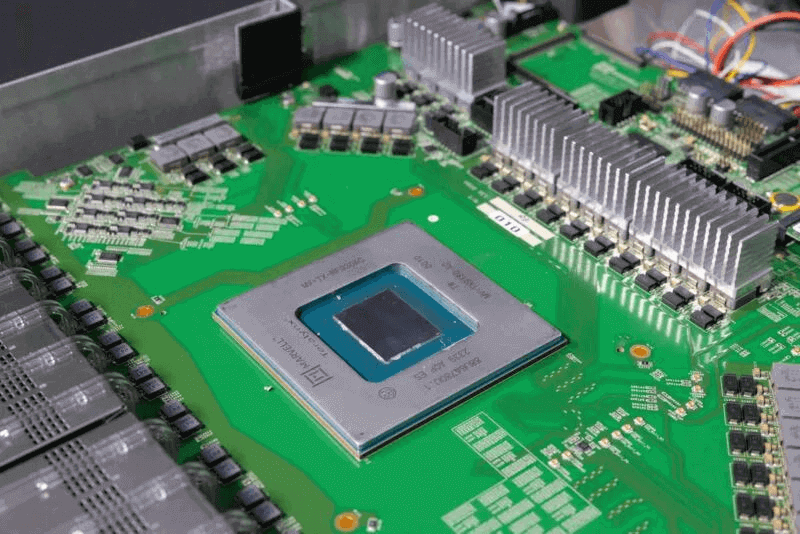

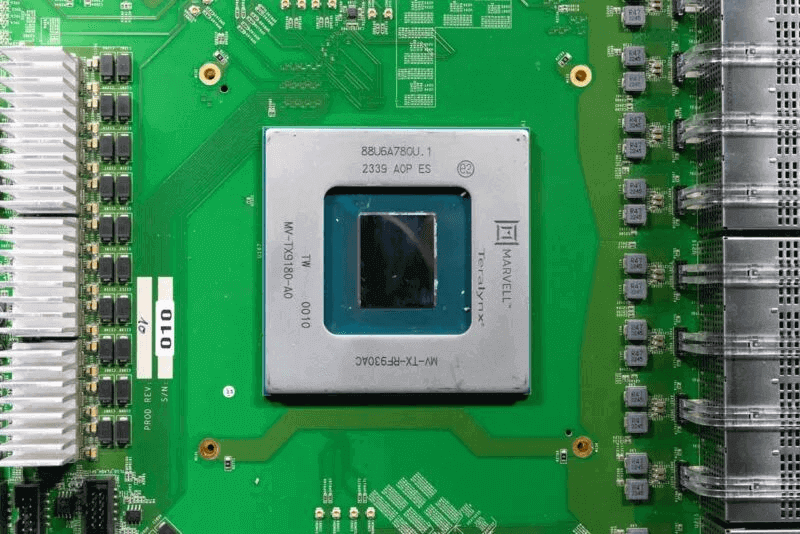

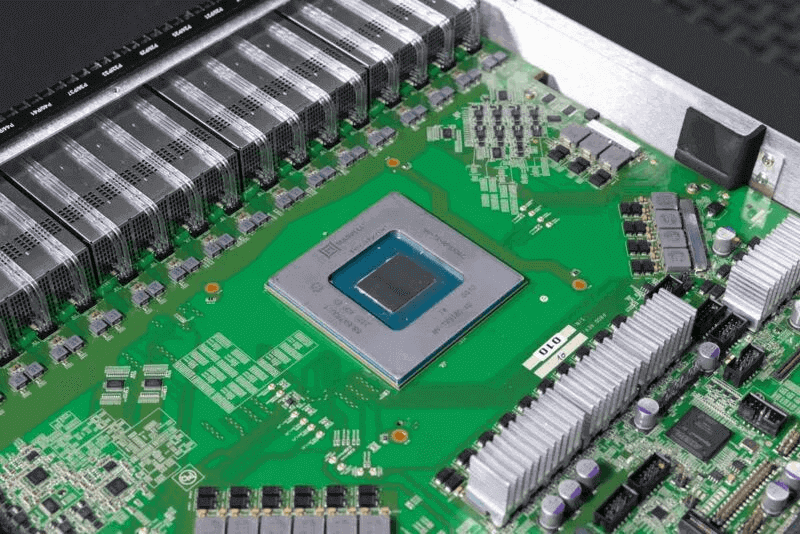

The chip itself is a 500W, 5nm component.

Marvell allowed us to clean the chip to take some photos without the heat sink.

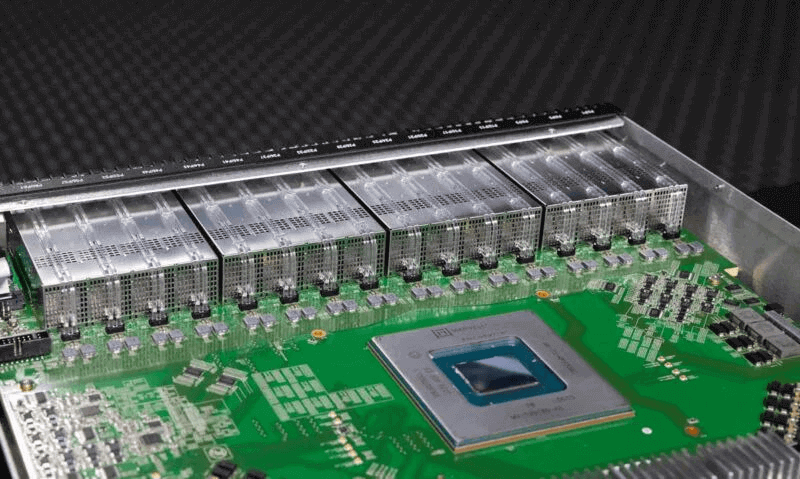

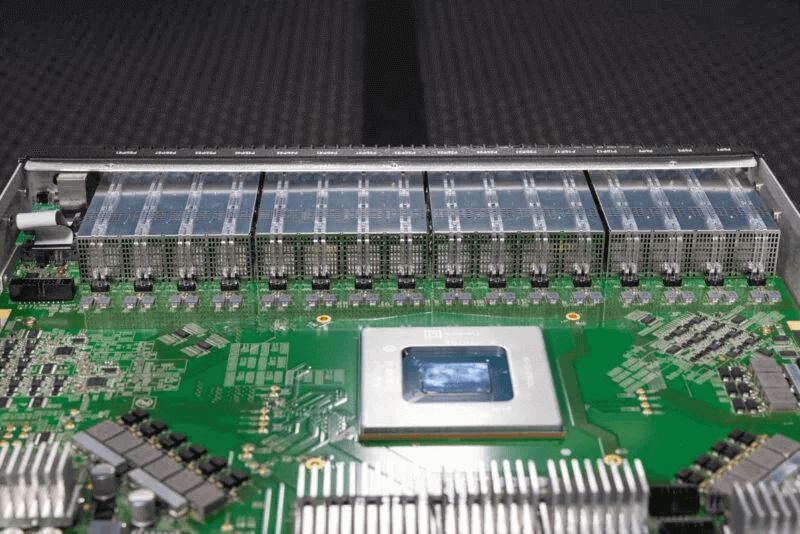

This gives us a clear view of the OSFP cages without the heat sink.

From this angle only 32 OSFP cages are visible, because the switch PCB sits between the two blocks of cages.

Behind the OSFP cages, we have the Teralynx 10 chip.

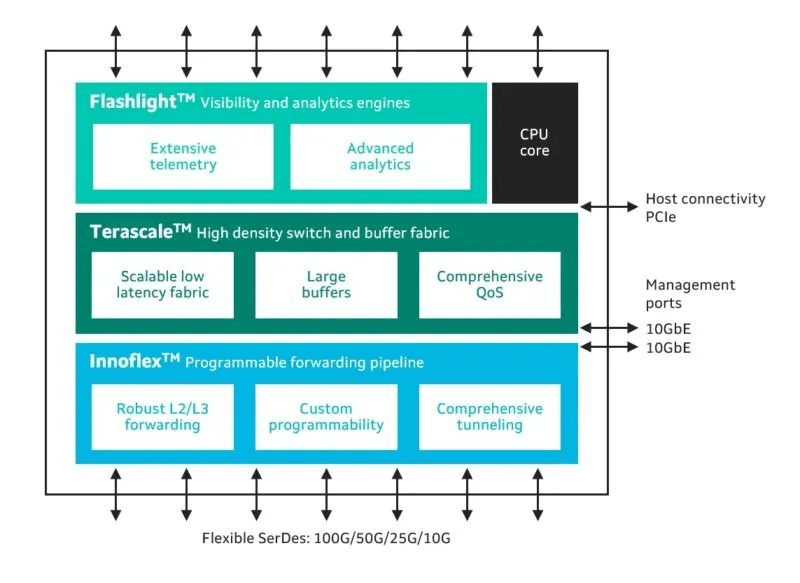

For those interested, more detailed information about the Teralynx 10 can be found in our earlier feature diagram.

One notable difference is that many components on the switch are angled, rather than being horizontal or parallel to the edges of the switch chip.

Here is a top-down photo of the switch, showcasing the 64-port 800GbE switch chip. For readers who think in server terms, single-port 800GbE NICs belong to the PCIe Gen6 era, while today’s fastest NICs are 400GbE PCIe Gen5 x16 parts. This chip has the capacity to handle 128 of those 400GbE NICs.
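The 128-NIC figure falls straight out of dividing the chip’s aggregate bandwidth by the port speed. The sketch below is only that division, with names of our own choosing. Which speeds a given system actually exposes depends on the SerDes configuration, optics, and software, so treat the list of speeds as an assumption rather than a supported-mode table.

```python
# Port count at a given speed for a fixed aggregate switching bandwidth.
# Illustrative arithmetic only; not a list of supported port modes.

TOTAL_GBPS = 51_200  # 51.2Tbps expressed in Gbps


def port_count(total_gbps: int, port_speed_gbps: int) -> int:
    """Number of ports of one speed that add up to the aggregate bandwidth."""
    if port_speed_gbps <= 0 or total_gbps % port_speed_gbps:
        raise ValueError("port speed must evenly divide the total bandwidth")
    return total_gbps // port_speed_gbps


for speed in (800, 400, 100):
    print(f"{port_count(TOTAL_GBPS, speed)} x {speed}GbE")
```

The three results match the configurations mentioned in this article: 64 ports of 800GbE, 128 ports of 400GbE, and 512 ports of 100GbE.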

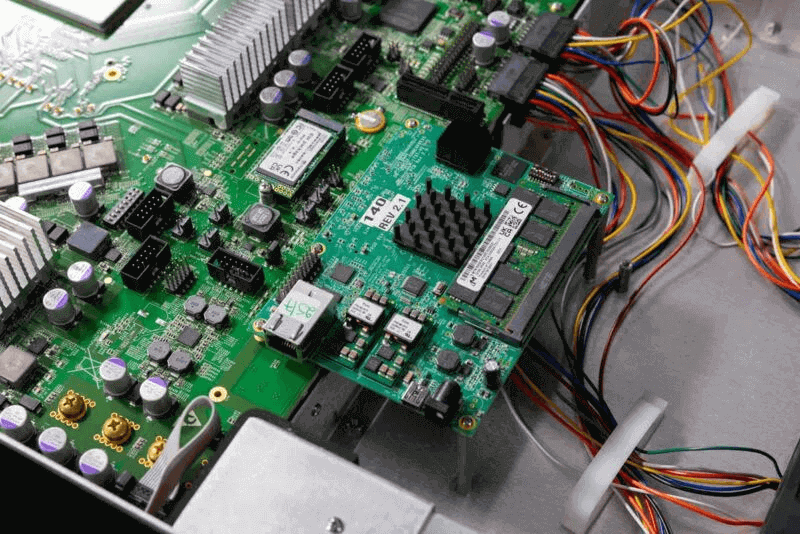

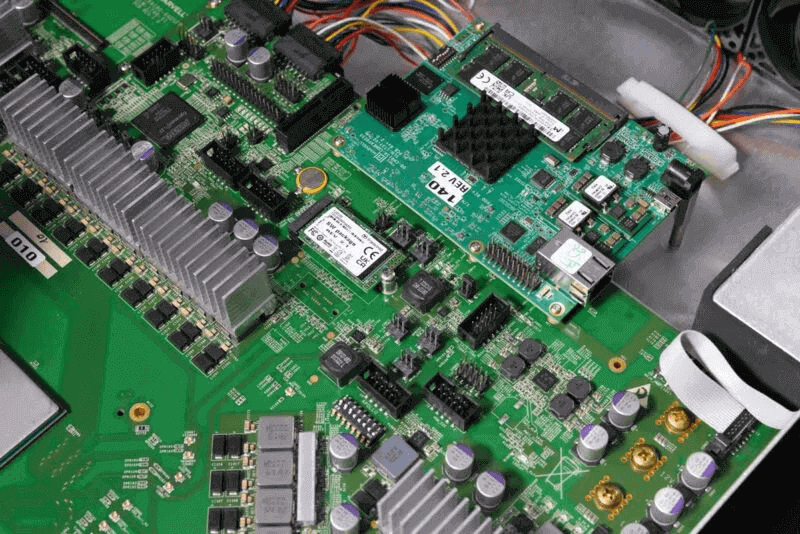

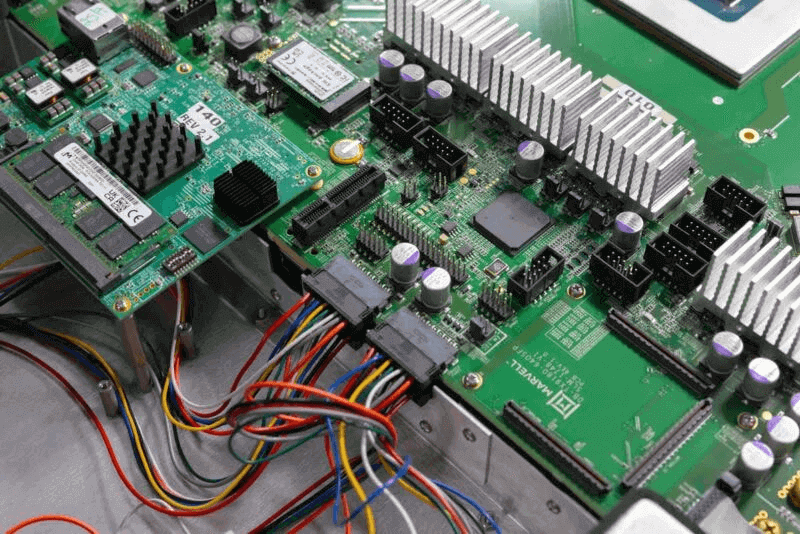

Like many switches, the Teralynx 10 switch has a dedicated management controller, here on a Marvell Octeon-based management board. We were told that other switches may use x86 instead.

An M.2 SSD is located on the main power distribution board.

An interesting feature is the built-in PCIe slot for diagnostics.

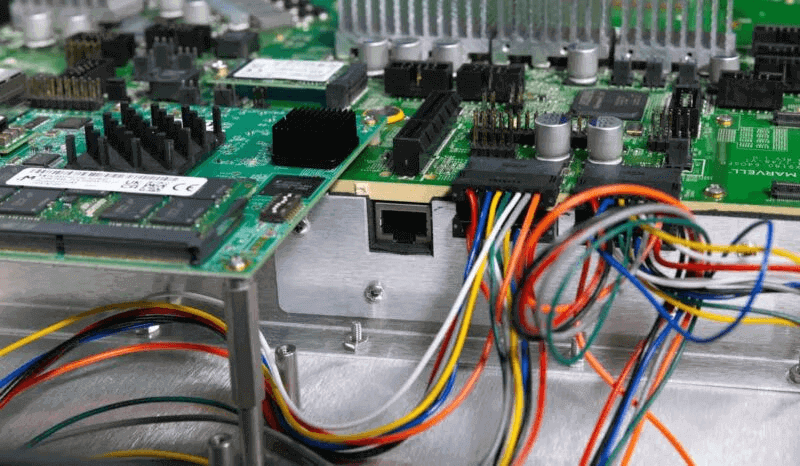

Just below this, there is a 10GBASE-T port exposed internally as a management interface.

Another aspect to consider is the thickness of the switch PCB. If server motherboards were this thick, many 1U server designs would face significant cooling challenges. In terms of cooling, the switch has a relatively simple fan setup, with four fan modules at the rear of the chassis.

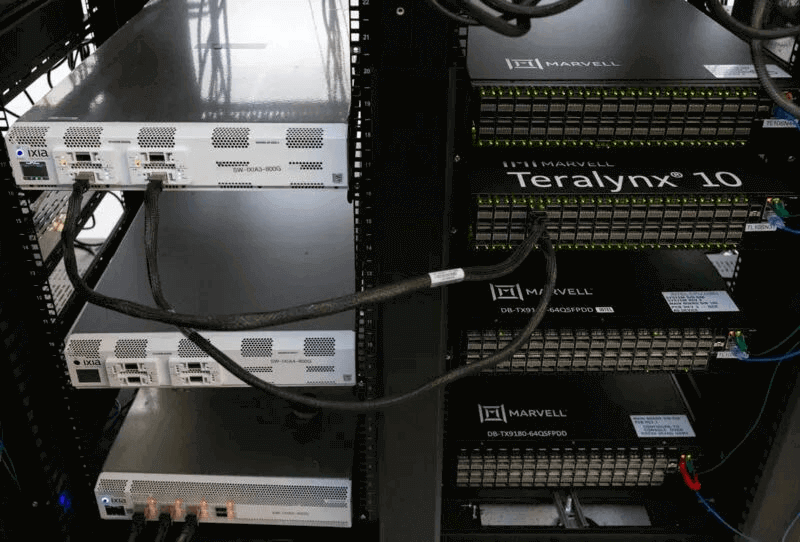

Marvell has a lab in another building where these switches are tested. The company temporarily cleared the lab to allow us to photograph the switch in operation.

Here is the back view.

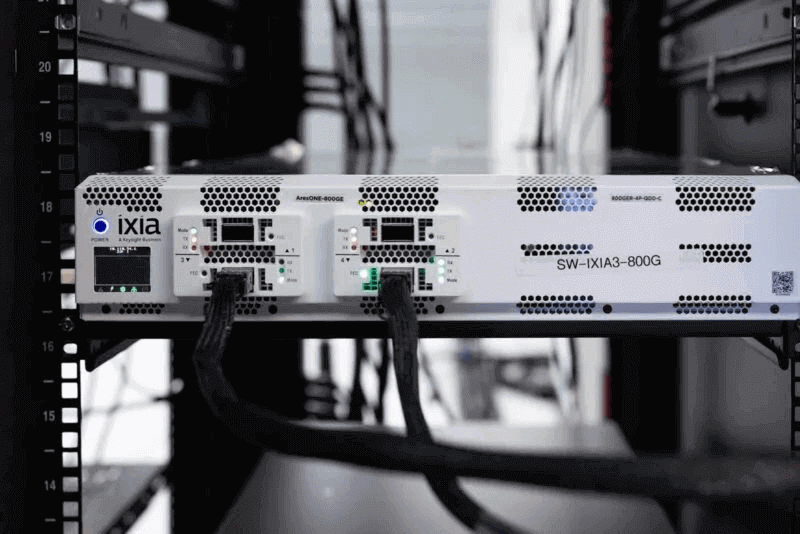

Next to the Teralynx 10 switch is the Keysight Ixia AresONE 800GbE test box.

Generating 800GbE traffic on a single port is no easy feat, as it is faster than PCIe Gen5 x16 on servers. It was fascinating to see this device in operation in the lab. We had previously purchased a neat second-hand Spirent box for 10GbE testing, but Spirent refused to provide a media/analyst license. Devices like this 800GbE box are incredibly expensive.
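To put the PCIe comparison in numbers, here is a minimal sketch. It assumes the published PCIe signaling rates (32 GT/s per lane with 128b/130b encoding for Gen5, 64 GT/s per lane for Gen6) and ignores packet and protocol overhead, so usable throughput is lower than these figures. The function name and defaults are ours.

```python
# Per-direction link bandwidth of a PCIe slot versus an 800GbE port.
# Protocol overhead (packet headers, flow control, FEC) is ignored.


def pcie_gbps(gt_per_s: float, lanes: int = 16,
              encoding: float = 128 / 130) -> float:
    """Per-direction bandwidth in Gbps after line encoding."""
    return gt_per_s * lanes * encoding


gen5_x16 = pcie_gbps(32.0)                    # Gen5: 128b/130b encoding
gen6_x16_raw = pcie_gbps(64.0, encoding=1.0)  # Gen6: raw rate, FLIT overhead ignored

print(f"PCIe Gen5 x16: {gen5_x16:.1f} Gbps")
print(f"PCIe Gen6 x16 (raw): {gen6_x16_raw:.1f} Gbps")
print(f"800GbE fits in Gen5 x16: {gen5_x16 >= 800}")
```

A Gen5 x16 slot tops out at a little over 500 Gbps per direction, which is enough for a 400GbE NIC but not for a single 800GbE port. That is why a dedicated traffic generator is needed here.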

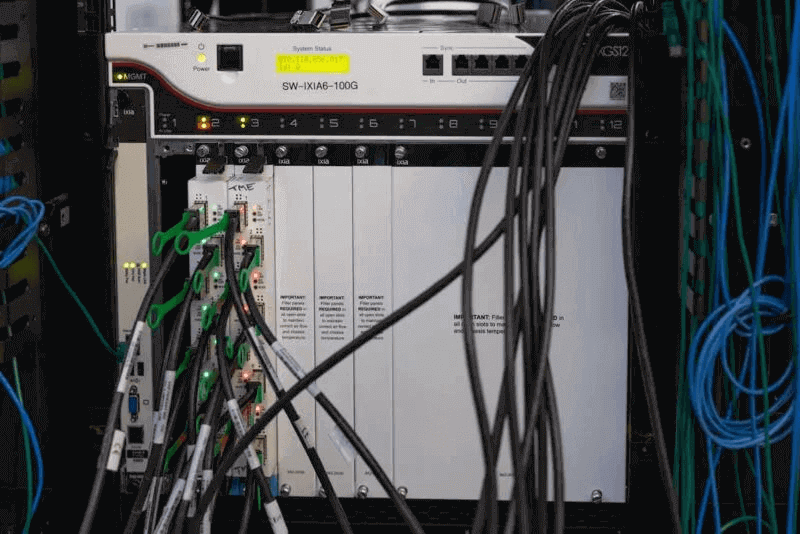

The company also has a larger chassis in the lab for 100GbE testing. As a switch vendor, Marvell needs such equipment to validate performance under various conditions.

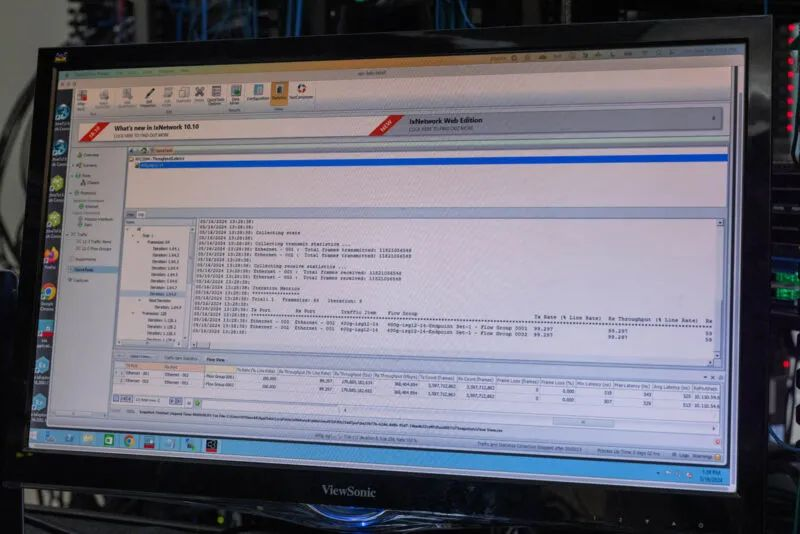

Here is an example of dual 400GbE running through the Teralynx switch at approximately 99.3% line rate.

Why Choose a 51.2Tbps Switch?

There are two main forces driving the adoption of 51.2T switches in the market. The first is the ever-popular topic of AI, and the second is the impact of power consumption and radix.

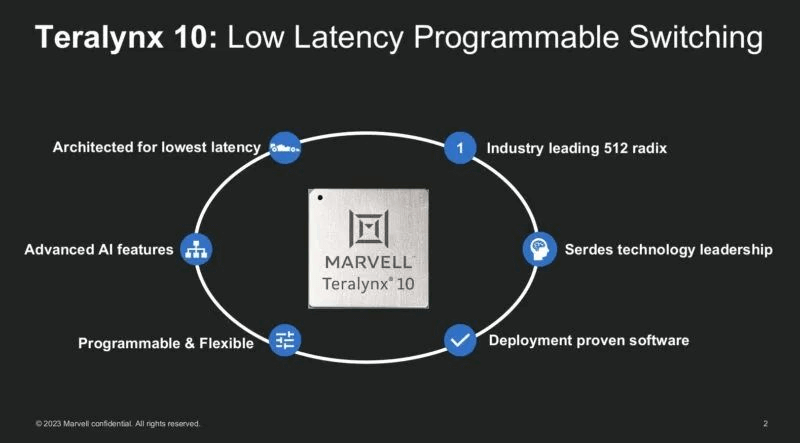

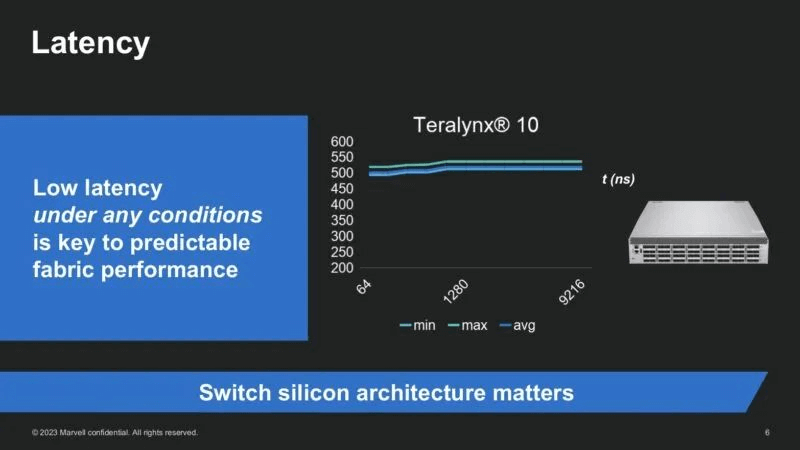

Marvell’s Teralynx 10 offers a latency of approximately 500 nanoseconds while providing immense bandwidth. This predictable latency, combined with the switch chip’s congestion control, programmability, and telemetry features, helps ensure that large clusters maintain optimal performance. Allowing AI accelerators to idle while waiting for the network is a very costly proposition.

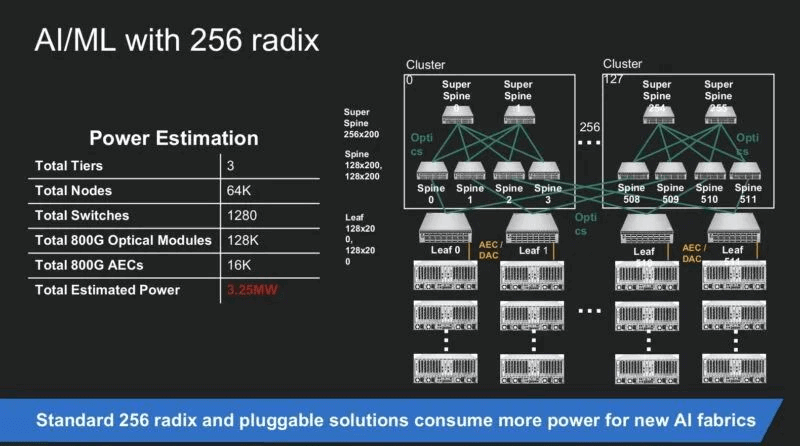

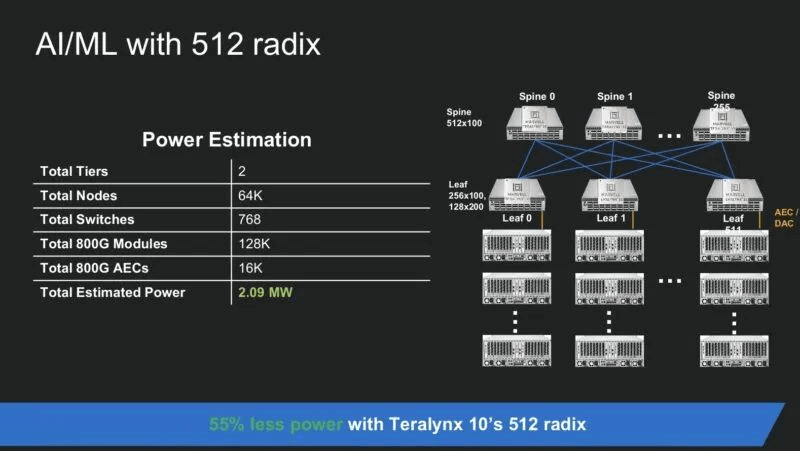

Another example is radix. Larger switches can reduce the number of switching layers, which in turn reduces the number of switches, fibers, cables, and other components needed to connect the cluster.

Since the Teralynx 10 can handle a radix of 512, connecting up to 512 100GbE links, some networks can go from three tiers of switching to just two. In large AI training clusters, this not only saves on capital equipment but also significantly reduces power consumption. Marvell provided an example where a larger radix could reduce power consumption by over 1MW.
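To see why radix changes the tier count, here is a minimal sketch using the textbook capacity formula for a non-blocking folded-Clos (fat-tree) network built from identical switches: with radix k and n tiers, the fabric supports 2 × (k/2)^n endpoints. This is a generic model and not Marvell’s own calculation. The 100,000-endpoint cluster size is a hypothetical, and the radix-128 comparison assumes a 12.8Tbps chip split into 100GbE ports.

```python
# Endpoint capacity of a non-blocking folded-Clos network, and the
# minimum number of tiers needed for a given cluster size.
# Generic textbook model; real designs may oversubscribe or mix speeds.


def max_endpoints(radix: int, tiers: int) -> int:
    """Endpoints supported by `tiers` layers of identical `radix`-port switches."""
    if radix < 2 or radix % 2 or tiers < 1:
        raise ValueError("radix must be even and >= 2, tiers must be >= 1")
    return 2 * (radix // 2) ** tiers


def min_tiers(endpoints: int, radix: int) -> int:
    """Smallest tier count whose capacity covers `endpoints`."""
    tiers = 1
    while max_endpoints(radix, tiers) < endpoints:
        tiers += 1
    return tiers


CLUSTER = 100_000  # hypothetical number of 100GbE endpoints

for radix in (128, 512):
    print(f"radix {radix}: {min_tiers(CLUSTER, radix)} tiers "
          f"(2-tier limit {max_endpoints(radix, 2)} endpoints)")
```

Under these assumptions, a radix-128 switch tops out at 8,192 endpoints in two tiers and needs a third tier for this cluster, while a radix-512 switch covers up to 131,072 endpoints in two tiers. Removing a tier removes a whole layer of switches and the optics that connect it, which is where the capital and power savings come from.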

Marvell also shared a slide showing a switch with an interesting cooler extending from the chassis. This appears to be a desktop prototype, which we found quite intriguing.

Finally, while we often see the front and even the back of switches in online and data center photos, we rarely get to see how these switches operate internally. Thanks to Marvell, we were able to see the switch in operation and even disassemble it down to the silicon.

Innovium, now part of Marvell, is one of the few teams in the industry that has competed successfully with Broadcom and won hyperscale deployments. We have seen other major silicon suppliers fail in the attempt. Given the market demand for high-radix, high-bandwidth, low-latency switching in AI clusters, the Teralynx 10 is likely to become the company’s largest product line since the Teralynx 7. The competition in this field is intense.

Of course, there are many layers to all networks. We could even conduct a comprehensive study of optical modules, not to mention software, performance, and more. However, showcasing what happens inside these switches is still quite fascinating.
