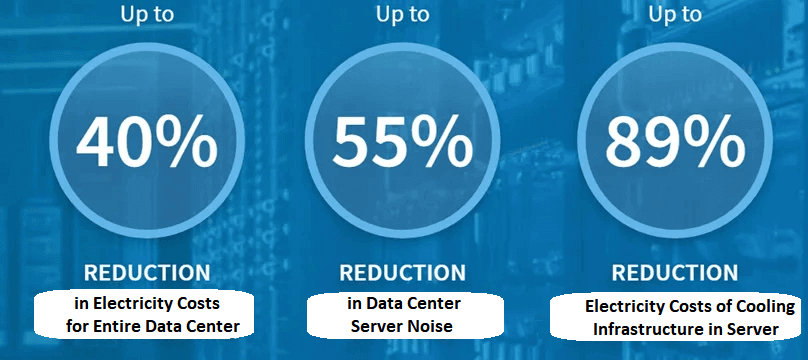

As AI technology continues to advance, more data centers are turning to liquid cooling. Compared with traditional air cooling, liquid cooling, especially Direct Liquid Cooling (DLC), offers significantly higher heat-dissipation efficiency. Liquids are commonly cited as transferring heat 50 to 3,000 times more effectively than air, which enables better thermal management in high-density server environments that generate substantial heat. Liquid cooling can also reduce overall energy consumption: research indicates that moving from air-based to liquid-based systems can cut facility power usage by 27% and total site energy consumption by 15.5%. Liquid cooling systems are also quieter and have a smaller physical footprint.
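As a rough illustration of why liquid carries heat so much better, the sketch below applies the sensible-heat relation Q = ρ · V̇ · c_p · ΔT to compare the volumetric flow of air and water needed to remove the same heat load. Note that this compares heat-carrying capacity per unit volume, which is a different property from thermal conductivity. The property values are approximate textbook figures near room temperature, and the 100 kW load and 10 K temperature rise are hypothetical.

```python
"""Compare the coolant flow needed to remove a heat load with air vs. water.

Uses the sensible-heat relation Q = rho * V_dot * cp * dT, solved for V_dot.
Property values are approximate near room temperature (assumed, not from the article).
"""

# Approximate fluid properties: density (kg/m^3) and specific heat (J/(kg*K))
AIR = {"density": 1.2, "cp": 1005.0}
WATER = {"density": 1000.0, "cp": 4186.0}


def required_flow_m3_per_s(heat_w: float, delta_t_k: float, fluid: dict) -> float:
    """Volumetric flow (m^3/s) that absorbs heat_w watts with a delta_t_k rise."""
    return heat_w / (fluid["density"] * fluid["cp"] * delta_t_k)


def volumetric_heat_capacity_ratio(liquid: dict, gas: dict) -> float:
    """How many times more heat a unit volume of liquid carries per kelvin."""
    return (liquid["density"] * liquid["cp"]) / (gas["density"] * gas["cp"])


if __name__ == "__main__":
    heat_load_w = 100_000.0  # hypothetical 100 kW rack
    delta_t = 10.0           # hypothetical 10 K coolant temperature rise

    air_flow = required_flow_m3_per_s(heat_load_w, delta_t, AIR)
    water_flow = required_flow_m3_per_s(heat_load_w, delta_t, WATER)

    print(f"Air:   {air_flow:.2f} m^3/s")
    print(f"Water: {water_flow * 1000 * 60:.0f} L/min")  # m^3/s -> L/min
    print(f"Ratio: ~{volumetric_heat_capacity_ratio(WATER, AIR):.0f}x")
```

With these assumed values, air needs roughly 8.3 m³/s while water needs only about 143 L/min, a volumetric heat capacity ratio of roughly 3,500.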

In summary, liquid cooling offers high energy efficiency, quiet operation, and space savings.

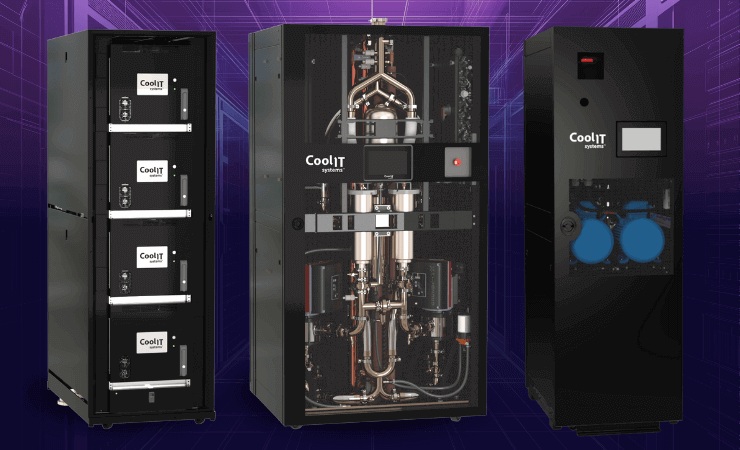

CoolIT Systems Liquid Cooling Solutions

CoolIT Systems (CoolIT), established in 2001, initially designed and distributed direct liquid cooling products for the desktop gaming industry. In 2014, the company began developing products for data centers and server OEMs, and it is now regarded as a leading provider of direct liquid cooling solutions. Headquartered in Calgary, Canada, CoolIT has manufacturing facilities in both Canada and China. Notably, CoolIT provides liquid cooling support for El Capitan, claimed to be the world’s fastest supercomputer.

Recently, amid reports of Blackwell overheating, CoolIT Systems announced the CHx1000, which it describes as the world’s highest-density liquid-to-liquid Coolant Distribution Unit (CDU). Designed for mission-critical applications, the CHx1000 is intended to cool the NVIDIA Blackwell platform and other demanding AI workloads that require liquid cooling.

According to CoolIT COO Patrick McGinn, the CHx1000 draws on more than 20 years of DLC innovation and collaboration with leading processor manufacturers and hyperscale companies. It delivers 1,000 kW of cooling capacity at flow rates of up to 1.5 liters per minute per kilowatt (LPM/kW), with an approach temperature of 3°C.
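The quoted figures can be turned into a quick sizing check: at 1.5 LPM/kW, a full 1,000 kW load implies about 1,500 L/min of secondary coolant. The sketch below also estimates the resulting coolant temperature rise, assuming water-like coolant properties, and treats the 3°C approach temperature as the gap between the facility-water supply and the secondary coolant supply, a common CDU definition. The 30°C facility-water temperature is hypothetical.

```python
"""Back-of-envelope check of the CHx1000 figures quoted above.

Assumptions (not from CoolIT): water-like coolant properties, and "approach
temperature" taken as secondary supply temp minus facility-water supply temp.
"""

WATER_DENSITY_KG_PER_L = 1.0  # approx. water density
WATER_CP_J_PER_KG_K = 4186.0  # approx. water specific heat


def total_flow_lpm(capacity_kw: float, lpm_per_kw: float) -> float:
    """Total secondary flow (L/min) at a given per-kW flow rate."""
    return capacity_kw * lpm_per_kw


def coolant_rise_k(heat_kw: float, flow_lpm: float) -> float:
    """Coolant temperature rise (K) from Q = m_dot * cp * dT."""
    mass_flow_kg_s = flow_lpm * WATER_DENSITY_KG_PER_L / 60.0
    return heat_kw * 1000.0 / (mass_flow_kg_s * WATER_CP_J_PER_KG_K)


def secondary_supply_c(facility_supply_c: float, approach_k: float) -> float:
    """Coolant temperature delivered to servers, given the CDU approach."""
    return facility_supply_c + approach_k


if __name__ == "__main__":
    flow = total_flow_lpm(1000.0, 1.5)
    print(f"Flow at full load: {flow:.0f} L/min")
    print(f"Coolant rise: {coolant_rise_k(1000.0, flow):.1f} K")
    # Hypothetical 30 C facility water with the quoted 3 C approach
    print(f"Secondary supply: {secondary_supply_c(30.0, 3.0):.1f} C")
```

Because the flow is specified per kilowatt, the estimated rise (about 9.6 K with water) stays the same at any load. Coolants with a lower specific heat, such as glycol mixtures, would show a somewhat larger rise.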

The CHx1000 liquid-to-liquid CDU will initially cool ten NVIDIA GB200 NVL72 racks, leaving ample capacity for future AI chips and servers with even higher thermal densities. According to NVIDIA, the GB200 NVL72 offers up to 30 times faster inference and 25 times better energy efficiency than its predecessor, making it well suited to large-scale LLM applications.
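Dividing the quoted 1,000 kW capacity evenly across the ten racks gives a rough per-rack cooling budget. This sketch is a simple even split with no design margin; whether a given GB200 NVL72 deployment fits within that budget depends on its actual rack power, and the 125 kW rack load below is purely hypothetical.

```python
"""Even split of CDU capacity across racks (illustrative; no design margin)."""


def per_rack_budget_kw(cdu_capacity_kw: float, racks: int) -> float:
    """Cooling capacity available to each rack if shared evenly."""
    if racks <= 0:
        raise ValueError("racks must be positive")
    return cdu_capacity_kw / racks


def racks_supported(cdu_capacity_kw: float, rack_load_kw: float) -> int:
    """Whole racks of a given load that fit within the CDU capacity."""
    if rack_load_kw <= 0:
        raise ValueError("rack_load_kw must be positive")
    return int(cdu_capacity_kw // rack_load_kw)


if __name__ == "__main__":
    budget = per_rack_budget_kw(1000.0, 10)
    print(f"Per-rack budget: {budget:.0f} kW")                        # 100 kW
    print(f"Per-rack flow at 1.5 LPM/kW: {budget * 1.5:.0f} L/min")  # 150 L/min
    # Hypothetical heavier future rack load
    print(f"Racks at 125 kW each: {racks_supported(1000.0, 125.0)}")  # 8
```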

Designed for in-row installation and maintenance, the CHx1000 offers front and rear access, and its pumps, filters, and sensors can be replaced in the field without interrupting operation. Its high-reliability design includes stainless steel piping, built-in 25-micron filters, and the highest-grade wetted materials. Intelligent controls dynamically adjust coolant delivery to hold precise temperature, flow, and pressure at the chip. The unit can be operated locally through a 10-inch touchscreen or remotely via protocols such as Redfish, SNMP, TCP/IP, and Modbus.
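The article does not describe CoolIT’s control algorithm. As a generic illustration of how closed-loop CDU control can work, the sketch below uses a textbook proportional-integral (PI) loop that adjusts a pump-speed command to hold a hypothetical differential-pressure setpoint. The plant model (pressure tracking pump speed through a first-order lag), the setpoint, and all gains are invented assumptions for demonstration only.

```python
"""Generic PI control loop holding a pressure setpoint by varying pump speed.

Hypothetical illustration only: the plant model and gains are invented, and
this is not CoolIT's (or any vendor's) actual control algorithm.
"""

from dataclasses import dataclass


@dataclass
class PIController:
    kp: float               # proportional gain
    ki: float               # integral gain (per second)
    out_min: float = 0.0    # minimum pump speed command (%)
    out_max: float = 100.0  # maximum pump speed command (%)
    integral: float = 0.0

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured
        candidate = self.integral + error * dt
        output = self.kp * error + self.ki * candidate
        # Anti-windup: only accept the new integral if the output is not saturated
        if self.out_min < output < self.out_max:
            self.integral = candidate
        return min(max(output, self.out_min), self.out_max)


def simulate(steps: int = 600, dt: float = 0.1) -> float:
    """Run the loop against a toy first-order plant; return the final pressure."""
    ctrl = PIController(kp=0.5, ki=2.0)
    pressure_kpa = 0.0
    setpoint_kpa = 150.0     # hypothetical differential-pressure target
    gain_kpa_per_pct = 2.5   # toy plant: steady-state kPa per % pump speed
    tau_s = 2.0              # toy plant time constant (s)
    for _ in range(steps):
        speed = ctrl.update(setpoint_kpa, pressure_kpa, dt)
        target = gain_kpa_per_pct * speed
        pressure_kpa += (target - pressure_kpa) * dt / tau_s
    return pressure_kpa


if __name__ == "__main__":
    print(f"Pressure after 60 s: {simulate():.1f} kPa")
```

The output clamp and conditional integration keep the integral term from winding up while the pump is pinned at full speed during start-up, a common safeguard in actuator-limited loops.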

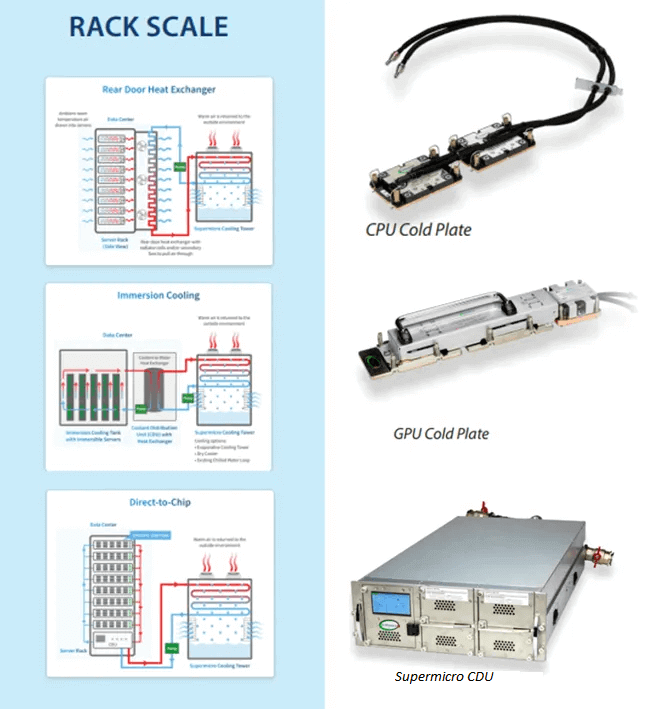

Supermicro Direct Liquid Cooling

Supermicro has launched the SuperCluster solution, integrating NVIDIA Blackwell GPUs into a liquid-cooled rack configuration. This setup enhances GPU computing density and includes advanced features such as vertical coolant distribution manifolds and improved cold plates for optimal thermal management. The design increases efficiency and reduces operational costs, making it suitable for large-scale AI deployments.

At Computex this year, Supermicro announced systems optimized for NVIDIA’s Blackwell GPUs, including 10U air-cooled and 4U liquid-cooled HGX B200-based systems. The company is also developing an air-cooled HGX B100 system and a GB200 NVL72 rack containing 72 GPUs interconnected through NVIDIA NVLink switches. Supermicro has also committed to launching systems based on Intel’s Xeon 6 processors.

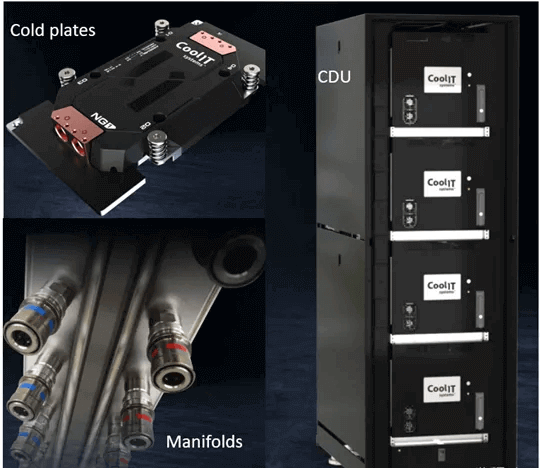

Supermicro’s liquid-cooled rack solution consists of several in-house designed components, including:

- Coolant Distribution Unit (CDU): This unit includes a pumping system that circulates coolant to the cold plates that cool the CPUs and GPUs. Supermicro’s CDU integrates two hot-swappable, redundant pumping modules and power modules to deliver nearly 100% uptime. Its cooling capacity of up to 100 kW enables very high rack density. The CDU also offers an easy-to-use touchscreen and a WebUI for monitoring and controlling rack operations, and it is integrated with Supermicro’s SuperCloud Composer data center management software. The control system optimizes power consumption while cooling all CPUs and GPUs efficiently, and it applies anti-condensation strategies to protect hardware from performance degradation.

- Coolant Distribution Manifold (CDM): The CDM supplies coolant to each server and returns the heated coolant to the CDU. There are two types of CDMs:

  - Vertical: Placed at the back of the rack, these manifolds connect directly to the CDU via hoses. They deliver coolant to the systems’ cold plates, with inlet and outlet hoses at the back of the rack.

  - Horizontal: Located at the front of the rack within a 1U rack space, these manifolds connect the vertical manifolds at the back to the cold plates of systems at the front of the rack (e.g., SuperBlades and 8U GPU servers).

- Cold Plates: Cold plates are placed on top of the CPUs and GPUs, with coolant flowing through their microchannels to cool the chips efficiently. Supermicro’s cold plates are designed to minimize hotspots on the chips and achieve ultra-low thermal resistance.
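The article does not say how Supermicro’s anti-condensation strategy works. One common principle is to keep the coolant supply temperature above the room’s dew point, so no surface the coolant touches drops below it. The sketch below estimates the dew point with the Magnus approximation (coefficients a = 17.62, b = 243.12 °C) and derives a minimum supply setpoint; the 2 K safety margin and the room conditions are hypothetical.

```python
"""Estimate a condensation-safe minimum coolant supply temperature.

Uses the Magnus approximation for dew point. The safety margin is a
hypothetical value, not a Supermicro setting.
"""

import math

MAGNUS_A = 17.62
MAGNUS_B_C = 243.12  # degrees Celsius


def dew_point_c(air_temp_c: float, rel_humidity_pct: float) -> float:
    """Approximate dew point (C) from air temperature (C) and relative humidity (%)."""
    if not 0.0 < rel_humidity_pct <= 100.0:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(rel_humidity_pct / 100.0) + MAGNUS_A * air_temp_c / (MAGNUS_B_C + air_temp_c)
    return MAGNUS_B_C * gamma / (MAGNUS_A - gamma)


def min_supply_temp_c(air_temp_c: float, rel_humidity_pct: float, margin_k: float = 2.0) -> float:
    """Lowest coolant supply temperature that stays margin_k above the dew point."""
    return dew_point_c(air_temp_c, rel_humidity_pct) + margin_k


if __name__ == "__main__":
    # Hypothetical room: 25 C at 50% relative humidity
    print(f"Dew point: {dew_point_c(25.0, 50.0):.1f} C")
    print(f"Minimum supply setpoint: {min_supply_temp_c(25.0, 50.0):.1f} C")
```

A real controller would read live temperature and humidity sensors and raise the supply setpoint as the dew point climbs.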

Lenovo Neptune Liquid Cooling System

The Lenovo ThinkSystem N1380 Neptune is a sixth-generation vertical liquid cooling system designed to efficiently cool high-density server racks, supporting configurations above 100 kW without specialized air conditioning. This open-loop, direct warm-water cooling system reduces power consumption by 40% compared with traditional cooling methods. Lenovo’s liquid cooling expertise stems from its acquisition of IBM’s server business, which helped establish it as a leader in the field.

This direct water-cooling solution recirculates warm water to cool data center systems, keeping all server components cool and reducing reliance on power-hungry system fans. Its patented cold plates, optimized for CPUs and accelerators, maximize accelerator cooling capacity: they currently handle accelerators drawing around 700 W, and future designs are expected to exceed 1,000 W. The new Neptune™ Warm Water Cooling design will enable operation without any specialized data center air conditioning.
