As we look more closely at AI computing networks, two mainstream architectures stand out in the market: InfiniBand and RoCEv2.

The two architectures compete on performance, cost, versatility, and other key dimensions. This article analyzes the technical characteristics of each, their application scenarios in AI computing networks, and their respective advantages and limitations. The aim is to assess the potential value and future direction of InfiniBand and RoCEv2 in AI computing networks and to offer practical guidance for the industry.

InfiniBand

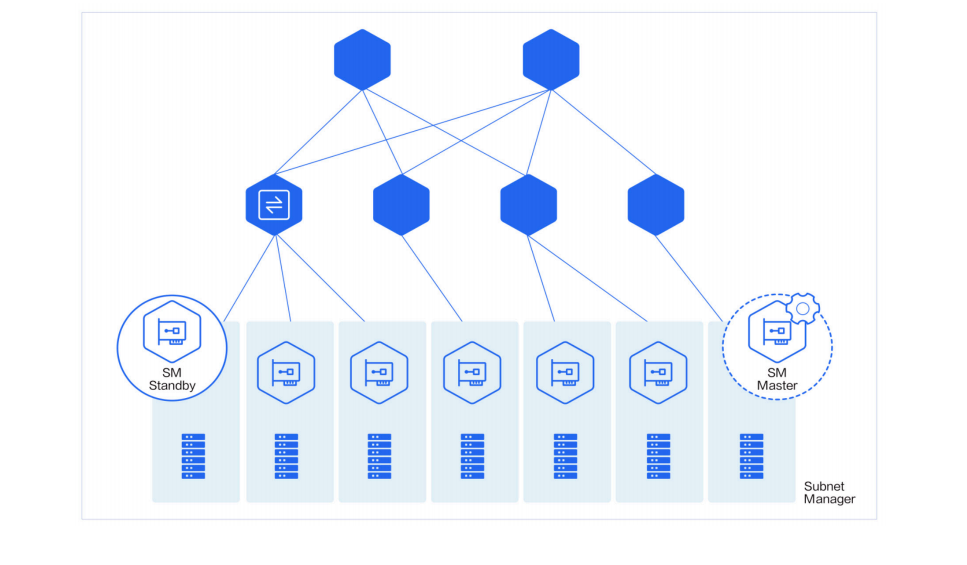

- InfiniBand Network Architecture

InfiniBand networks are centrally managed by a subnet manager (SM). The SM typically runs on a server attached to the subnet (it can also run on a managed switch) and acts as the central controller of the network. Several devices in a subnet may be configured as SMs, but only one is elected master SM; it manages all switches and network cards by sending and receiving management datagrams (MADs). The SM assigns each network card port and each switch a unique Local ID (LID), which identifies the device unambiguously within the subnet. The SM's core responsibilities are maintaining the network's routing information and computing and updating the forwarding tables of the switch chips. The SM Agent (SMA) built into each network card lets the card process SM messages on its own, without involving the host server, which makes network management more automated and efficient.

InfiniBand network architecture diagram
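To make the SM's routing role concrete, here is a minimal Python sketch of what a subnet manager computes: it assigns a unique LID to every node and then builds each switch's forwarding table (destination LID to output port) from shortest paths. The topology, the node names, and the helpers `assign_lids` and `compute_lft` are hypothetical. Real SMs such as OpenSM discover the fabric with MADs, assign LIDs per network card port, and offer several routing engines; none of that is reproduced here.

```python
from collections import deque

# Hypothetical fabric: node name -> {local port: neighbor name}.
# "sw*" nodes are switches; "hca*" nodes are host network cards (HCAs).
FABRIC = {
    "sw1": {1: "hca1", 2: "hca2", 3: "sw3"},
    "sw2": {1: "hca3", 2: "hca4", 3: "sw3"},
    "sw3": {1: "sw1", 2: "sw2"},
    "hca1": {1: "sw1"},
    "hca2": {1: "sw1"},
    "hca3": {1: "sw2"},
    "hca4": {1: "sw2"},
}


def assign_lids(fabric):
    """Give every node a unique LID starting at 1 (simplified: one LID per node)."""
    return {name: lid for lid, name in enumerate(sorted(fabric), start=1)}


def compute_lft(fabric, lids, switch):
    """Return a linear forwarding table {destination LID: output port} for one
    switch, built from BFS shortest paths (a min-hop style routing engine)."""
    first_port = {switch: 0}  # port 0 = the switch's own management port
    queue = deque([switch])
    while queue:
        node = queue.popleft()
        if node != switch and node.startswith("hca"):
            continue  # HCAs terminate traffic; they never forward it
        for port, neighbor in fabric[node].items():
            if neighbor not in first_port:
                # Direct neighbors use the local port; farther nodes inherit
                # the first-hop port of the node they were reached through.
                first_port[neighbor] = port if node == switch else first_port[node]
                queue.append(neighbor)
    return {lids[node]: port for node, port in first_port.items()}


lids = assign_lids(FABRIC)
print(compute_lft(FABRIC, lids, "sw1"))
```

For sw1, traffic to hca1 and hca2 leaves through ports 1 and 2, while everything reachable through sw3 shares uplink port 3, and the switch's own LID maps to port 0.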

- InfiniBand Network Flow Control Mechanism

InfiniBand uses credit-based flow control, and each link has a pre-allocated receive buffer. The sender transmits only after confirming that the receiver has buffer space available, and it never sends more data than the receiver's currently available buffer can hold. As the receiver processes incoming packets and frees buffer space, it returns credits to the sender to report how much buffer is available again. Because no packet is sent without a buffer waiting for it, the link avoids overflow-induced packet loss and data keeps flowing smoothly.
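The credit exchange can be illustrated with a toy model in Python. The `CreditLink` class is hypothetical and treats one credit as one whole packet buffer; on real InfiniBand links, credits are tracked per virtual lane in 64-byte units. What it shows is the key property: when credits run out, the sender waits instead of dropping data.

```python
class CreditLink:
    """Toy model of credit-based link-level flow control (hypothetical class).

    Simplification: one credit = one packet-sized buffer at the receiver.
    """

    def __init__(self, receiver_buffers):
        self.credits = receiver_buffers  # free receiver buffers the sender may use
        self.rx_queue = []               # packets currently held by the receiver

    def try_send(self, packet):
        """Transmit only if the receiver has advertised a free buffer."""
        if self.credits == 0:
            return False  # sender must wait; nothing is dropped
        self.credits -= 1
        self.rx_queue.append(packet)
        return True

    def receiver_drain(self, n=1):
        """Receiver processes up to n packets, frees buffers, returns credits."""
        drained = self.rx_queue[:n]
        del self.rx_queue[:n]
        self.credits += len(drained)
        return drained


link = CreditLink(receiver_buffers=2)
print(link.try_send("p1"), link.try_send("p2"), link.try_send("p3"))  # True True False
link.receiver_drain(1)      # receiver frees one buffer and returns one credit
print(link.try_send("p3"))  # True
```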

- InfiniBand Network Features: link-level flow control and adaptive routing

InfiniBand relies on link-level flow control to keep senders from transmitting more than the receiver can buffer, which prevents buffer overflow and packet loss. In addition, InfiniBand adaptive routing lets switches choose an output port dynamically for each packet based on current conditions such as port congestion, using network resources more efficiently and balancing load better in very large networks.
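A simplified way to picture per-packet adaptive routing is a switch picking, for each packet, the least-loaded port among several ports that all reach the destination. The functions below are a hypothetical Python sketch: real InfiniBand switches make this decision in hardware using their own congestion metrics, and the static-routing case here simply pins every packet to one port for comparison.

```python
def select_port(candidate_ports, queue_depth, static_port=None):
    """Choose the output port for one packet (hypothetical helper).

    candidate_ports: ports that all lead toward the destination (e.g. uplinks).
    queue_depth: current number of queued packets per port.
    static_port: if set, emulate static routing and ignore load entirely.
    """
    if static_port is not None:
        return static_port
    # Adaptive choice: least-loaded port, lowest port number on ties.
    return min(candidate_ports, key=lambda p: (queue_depth[p], p))


def route_burst(num_packets, ports, adaptive=True):
    """Route a burst of packets and return the resulting queue depth per port."""
    depth = {p: 0 for p in ports}
    for _ in range(num_packets):
        port = select_port(ports, depth, None if adaptive else ports[0])
        depth[port] += 1
    return depth


print(route_burst(6, [1, 2, 3]))                  # {1: 2, 2: 2, 3: 2}
print(route_burst(6, [1, 2, 3], adaptive=False))  # {1: 6, 2: 0, 3: 0}
```

The tie-break on port number only makes the choice deterministic; the load-balancing effect comes from always preferring the shortest queue.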

RoCEv2

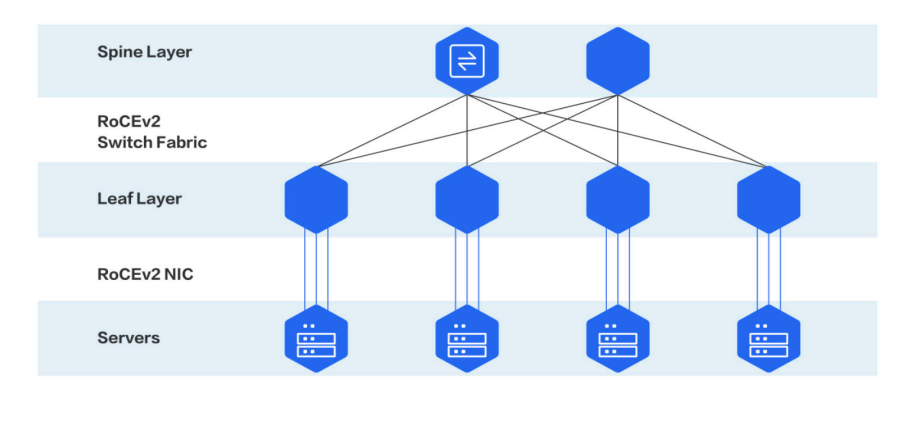

- RoCEv2 Network Architecture

RoCE (RDMA over Converged Ethernet) is a cluster communication protocol that carries RDMA (Remote Direct Memory Access) over Ethernet. It has two main versions: RoCEv1 and RoCEv2. RoCEv1 is a link-layer protocol, so both communicating parties must be in the same Layer 2 network. RoCEv2 operates at the network layer: it replaces the InfiniBand network layer with IP (network layer) and UDP (transport layer) headers, so its packets can be routed across Layer 3 networks, which gives it better scalability. Unlike the centrally managed InfiniBand network, a RoCEv2 network uses a fully distributed architecture, typically built as a two-tier (leaf-spine) fabric, which gives it significant advantages in scalability and deployment flexibility.

Architecture Diagram of RoCEv2 network
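Because RoCEv2 rides on IP and UDP, every packet carries an ordinary Ethernet/IPv4/UDP header stack in front of the InfiniBand transport header. The Python sketch below packs the UDP header of a RoCEv2 packet (destination port 4791) and estimates how much of each frame is payload. It assumes untagged IPv4 and a packet carrying only the Base Transport Header (BTH); the function names are illustrative, and real NICs build these headers in hardware. The UDP source port is commonly varied per connection to give ECMP hashing in the fabric some entropy; the value used here is arbitrary.

```python
import struct

ROCEV2_UDP_DST_PORT = 4791  # IANA-registered UDP destination port for RoCEv2
BTH_LEN, ICRC_LEN, UDP_HDR_LEN = 12, 4, 8

# Per-packet header/trailer bytes for an untagged IPv4 RoCEv2 packet that carries
# only the Base Transport Header (e.g. an RDMA SEND). VLAN tags and extended
# transport headers (RETH, AETH, ...) are ignored in this sketch.
ENCAPSULATION = [
    ("Ethernet header", 14),
    ("IPv4 header", 20),  # the IP header is what makes RoCEv2 routable at Layer 3
    ("UDP header", UDP_HDR_LEN),
    ("IB BTH", BTH_LEN),
    ("ICRC", ICRC_LEN),
    ("Ethernet FCS", 4),
]


def rocev2_udp_header(src_port, rdma_payload_len):
    """Pack the UDP header of a RoCEv2 packet.

    The UDP payload is BTH + RDMA payload + ICRC. The checksum is left at 0,
    which UDP over IPv4 permits; RoCEv2 relies on the ICRC for integrity.
    """
    udp_len = UDP_HDR_LEN + BTH_LEN + rdma_payload_len + ICRC_LEN
    return struct.pack("!HHHH", src_port, ROCEV2_UDP_DST_PORT, udp_len, 0)


def wire_efficiency(rdma_payload_len):
    """Fraction of frame bytes that are RDMA payload (preamble and IFG excluded)."""
    overhead = sum(size for _, size in ENCAPSULATION)
    return rdma_payload_len / (rdma_payload_len + overhead)


header = rocev2_udp_header(src_port=49152, rdma_payload_len=4096)
print(struct.unpack("!HHHH", header))  # (49152, 4791, 4120, 0)
print(f"{wire_efficiency(4096):.3f}")  # 0.985
```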

- Flow Control Mechanism of RoCEv2 Network

Priority Flow Control (PFC) is a hop-by-hop flow control mechanism that achieves lossless transmission on Ethernet by making good use of switch buffers through properly configured thresholds (watermarks). It operates separately for each traffic priority. When the buffer of a downstream switch port fills past its threshold for a given priority, the switch sends a pause frame asking the upstream device to stop sending that priority. Data already in flight is absorbed by the downstream switch's buffer headroom. When buffer occupancy falls back below a lower threshold, the port tells the upstream device to resume sending, so the network keeps running without drops.

Explicit Congestion Notification (ECN) is an end-to-end congestion notification mechanism defined at the IP and transport layers. When a switch queue starts to build up, the switch marks packets with a congestion flag in the IP header instead of dropping them. The receiver sees the mark and sends a congestion notification back to the sender (in RoCEv2, a Congestion Notification Packet, or CNP), and the sender slows down.

Data Center Quantized Congestion Notification (DCQCN) combines ECN and PFC to support end-to-end lossless Ethernet. The core idea is to use ECN to tell senders to reduce their rate as soon as congestion appears, so that PFC is triggered only as a last resort and severe congestion does not overflow buffers. Through this fine-grained rate control, DCQCN avoids congestion-induced packet loss while keeping the network efficient.
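The three mechanisms can be sketched in a few lines of Python each. This is a toy model under stated assumptions: the class names and threshold values (`xoff`, `xon`, `kmin`, `kmax`, `pmax`) are hypothetical, not vendor defaults. The DCQCN rules follow the commonly published form of the algorithm (on each CNP, cut the rate by alpha/2; when CNPs stop, recover halfway back toward the target rate), with separate timers and the additive and hyper-increase stages omitted. In DCQCN the switch marks packets, the receiver NIC sends CNPs, and the sender NIC adjusts its rate.

```python
class PfcIngressQueue:
    """One switch ingress queue for a single PFC priority with XOFF/XON hysteresis.
    Thresholds are hypothetical buffer-cell counts."""

    def __init__(self, xoff=80, xon=40):
        self.depth = 0
        self.xoff, self.xon = xoff, xon
        self.paused = False  # True while upstream is told to stop this priority

    def enqueue(self, cells):
        self.depth += cells
        if not self.paused and self.depth >= self.xoff:
            self.paused = True  # send a PFC pause frame (XOFF) upstream

    def dequeue(self, cells):
        self.depth = max(0, self.depth - cells)
        if self.paused and self.depth <= self.xon:
            self.paused = False  # send a resume (pause time 0, i.e. XON) upstream


def ecn_mark_probability(depth, kmin=20, kmax=60, pmax=0.2):
    """RED-style ECN marking at the switch (congestion point).
    kmax sits below the PFC XOFF threshold so ECN reacts before PFC does."""
    if depth <= kmin:
        return 0.0
    if depth >= kmax:
        return 1.0
    return pmax * (depth - kmin) / (kmax - kmin)


class DcqcnSender:
    """Simplified DCQCN reaction point (sender NIC); rates in Gb/s."""

    def __init__(self, line_rate=100.0, g=1 / 256):
        self.rc = line_rate  # current sending rate
        self.rt = line_rate  # target rate to recover toward
        self.alpha = 1.0     # running estimate of congestion severity
        self.g = g           # gain of the alpha moving average

    def on_cnp(self):
        """A CNP arrived: remember the old rate as target, cut rate by alpha/2."""
        self.rt = self.rc
        self.rc *= 1 - self.alpha / 2
        self.alpha = (1 - self.g) * self.alpha + self.g

    def on_quiet_period(self):
        """No CNP during a timer period: decay alpha, fast-recover toward rt."""
        self.alpha = (1 - self.g) * self.alpha
        self.rc = (self.rt + self.rc) / 2


sender = DcqcnSender(line_rate=100.0)
sender.on_cnp()
print(sender.rc)  # 50.0
sender.on_quiet_period()
print(sender.rc)  # 75.0
```

Choosing `kmax` below `xoff` mirrors the design goal described above: ECN slows senders down first, and PFC pauses only kick in if the queue keeps growing.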

- RoCEv2 Network Features: strong compatibility and cost optimization

RoCE networks use RDMA to move data efficiently without consuming CPU cycles on the remote server, which lets applications make full use of available bandwidth and helps the network scale. This significantly lowers latency and raises throughput, improving overall network performance. Another significant advantage of RoCE is that it runs over Ethernet and integrates with existing Ethernet infrastructure: enterprises can reuse Ethernet switching, cabling, and operational experience instead of building a separate fabric, provided the servers have RDMA-capable network cards and the switches support lossless features such as PFC and ECN. This cost-effective upgrade path helps reduce capital expenditure, making RoCE a popular choice for improving network performance in intelligent computing centers.

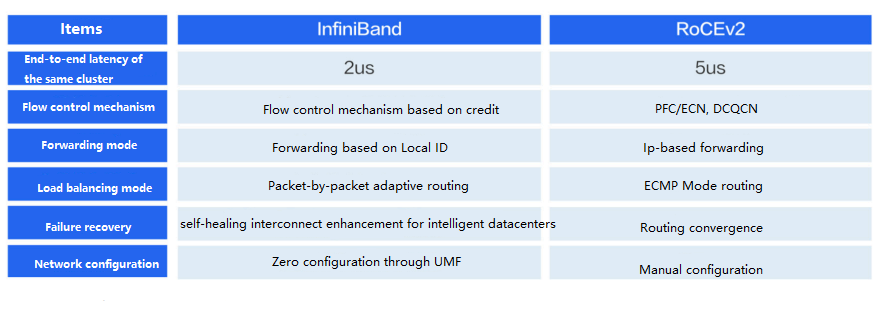

Technical Differences between InfiniBand and RoCEv2

Diverse market demands have driven InfiniBand and RoCEv2 to develop side by side. InfiniBand has shown significant advantages in application-level performance thanks to efficient forwarding, fast fault recovery, strong scalability, and efficient operation and maintenance. In particular, it delivers excellent network throughput in large-scale deployments.

InfiniBand Network and RoCEv2 Technology Comparison Chart

RoCEv2 is favored for its versatility and low cost. It can be used to build high-performance RDMA networks while remaining compatible with existing Ethernet infrastructure. This gives RoCEv2 clear advantages in breadth of applicability, allowing it to serve networks of different scales and requirements. Together, the distinct strengths of the two architectures give AI computing center designers a range of options for meeting the specific needs of different users.
