The competition between InfiniBand and Ethernet is a long-standing one in high-performance computing. Enterprises and organizations must weigh the strengths and weaknesses of each technology to choose the network that best suits their needs. Having multiple options when optimizing systems is a good thing, because different software behaves differently and different institutions have different budgets. As a result, HPC systems use a variety of interconnects and protocols, and we expect this diversity to hold steady or even increase, especially as we approach the end of Moore’s law.

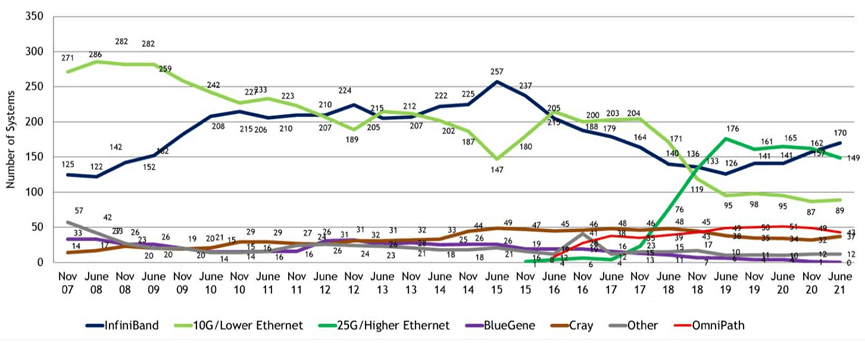

It is always interesting to take a close look at the interconnect trends in the Top500 supercomputer rankings, which are released twice a year. We have already analyzed the new systems on the list and the compute metrics reflected in the ranking; now it is time to look at the interconnects. Gilad Shainer, senior vice president and product manager for Quantum InfiniBand switches at Nvidia (formerly part of Mellanox Technologies), regularly analyzes the Top500 interconnects and shares the results with us, and we can now share his analysis with you. Let’s start with the development trend of interconnect technologies in the Top500 list from November 2007 to June 2021.

The Top500 list includes high-performance computing systems from academia, government, and industry, as well as systems built by service providers, cloud builders, and hyperscale computing platforms. It is therefore not a pure “supercomputer” list, since that term usually refers to machines that run traditional simulation and modeling workloads.

InfiniBand and Ethernet running at 10Gb/sec or lower speeds have experienced ups and downs in the past thirteen and a half years. InfiniBand is rising, while its Omni-Path variant (formerly controlled by Intel, now owned by Cornelis Networks) has slipped slightly in the June 2021 ranking.

Ethernet running at 25Gb/sec or higher, however, is on the rise, with especially rapid growth between 2017 and 2019. The reason is that 100Gb/sec switches (usually Mellanox Spectrum-2 switches) became cheaper than earlier 100Gb/sec technologies, which relied on more expensive transmission modes and were therefore not considered by most high-performance computing centers. Like many hyperscalers and cloud builders, these centers skipped the 200Gb/sec Ethernet generation, except for backbone and data center interconnects, and waited for the cost of 400Gb/sec switches to drop before adopting 400Gb/sec devices.

In the June 2021 ranking, adding up the Nvidia InfiniBand and Intel Omni-Path figures gives 207 machines with InfiniBand interconnects, or 41.4 percent of the list. We strongly suspect that some of the interconnects labeled “proprietary” on the list, mostly from China, are also variants of InfiniBand. As for Ethernet, regardless of speed, the number of Ethernet-connected machines on the Top500 list has ranged over the past four years from a low of 248 in June 2021 to a high of 271 in June 2019. In recent years, InfiniBand has been eroding Ethernet’s position. This does not surprise us, because high-performance computing (and now artificial intelligence) workloads are very sensitive to latency, and the cost of InfiniBand has fallen over time as its sales have grown. (The adoption of InfiniBand by hyperscalers and cloud builders helps lower prices.)

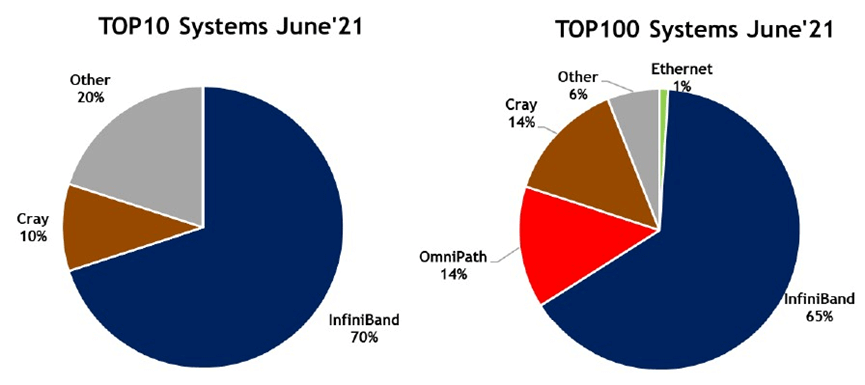

Most of the Top100 systems and the Top10 systems can be called true supercomputers, meaning that they mainly engage in traditional high-performance computing work. However, more and more machines are also running some artificial intelligence workloads. Here is the distribution of interconnects among these top machines.

As can be seen from the figure above, Ethernet does not dominate here, but it will grow as HPE starts shipping 200Gb/sec Slingshot (a variant of Ethernet optimized for high-performance computing and developed by Cray), which is already used by the “Perlmutter” system at Lawrence Berkeley National Laboratory with two 100Gb/sec ports per node. We also strongly suspect that the Sunway TaihuLight machine (at the National Supercomputing Center in Wuxi, China) uses a variant of InfiniBand, although neither Mellanox nor the lab ever confirmed it. The former number one “Fugaku” (at the RIKEN Institute in Japan) uses the third-generation Tofu D interconnect developed by Fujitsu, which implements a proprietary 6D torus topology and protocol. The “Tianhe-2A” (at the National Supercomputing Center in Guangzhou, China) uses the proprietary TH Express-2 interconnect, which is unique to that machine.

In the Top100 computer ranking, Cray interconnects include not only the first Slingshot machine but also a batch of machines using the previous generation of “Aries” interconnects. In the June 2021 ranking, there were five Slingshot machines and nine Aries machines in the Top100. If Slingshot is considered as Ethernet, then Ethernet’s share is 6%, and the proprietary Cray share drops to 9%. If Mellanox/Nvidia InfiniBand is combined with Intel Omni-Path, InfiniBand has 79 machines in the Top100.
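The reclassification described above is simple arithmetic, and a short Python sketch can make it explicit. The Slingshot, Aries, InfiniBand-family, and 6% Ethernet figures come from the text; the plain-Ethernet count and the "Other" bucket are derived here by subtraction and are assumptions, not numbers stated in the analysis. The function name `top100_breakdown` is hypothetical.

```python
# Top100 counts for June 2021, taken from the text above.
TOP100 = 100
SLINGSHOT = 5
ARIES = 9
INFINIBAND_FAMILY = 79        # Nvidia InfiniBand plus Omni-Path
ETHERNET_WITH_SLINGSHOT = 6   # Ethernet count when Slingshot is counted as Ethernet

# Derived by subtraction (assumptions: not stated directly in the text).
PLAIN_ETHERNET = ETHERNET_WITH_SLINGSHOT - SLINGSHOT
OTHER = TOP100 - INFINIBAND_FAMILY - ETHERNET_WITH_SLINGSHOT - ARIES


def top100_breakdown(slingshot_is_ethernet):
    """Return machine counts per interconnect family in the Top100."""
    ethernet = PLAIN_ETHERNET + (SLINGSHOT if slingshot_is_ethernet else 0)
    cray = ARIES + (0 if slingshot_is_ethernet else SLINGSHOT)
    return {
        "InfiniBand family": INFINIBAND_FAMILY,
        "Ethernet": ethernet,
        "Cray proprietary": cray,
        "Other": OTHER,
    }


# Show both ways of classifying Slingshot; each total should be 100.
for flag in (True, False):
    counts = top100_breakdown(flag)
    print(flag, counts, "total:", sum(counts.values()))
```

Because the group holds exactly 100 machines, each count is also a percentage, so moving the five Slingshot systems shifts Ethernet and Cray by five points each while the InfiniBand figure stays fixed.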

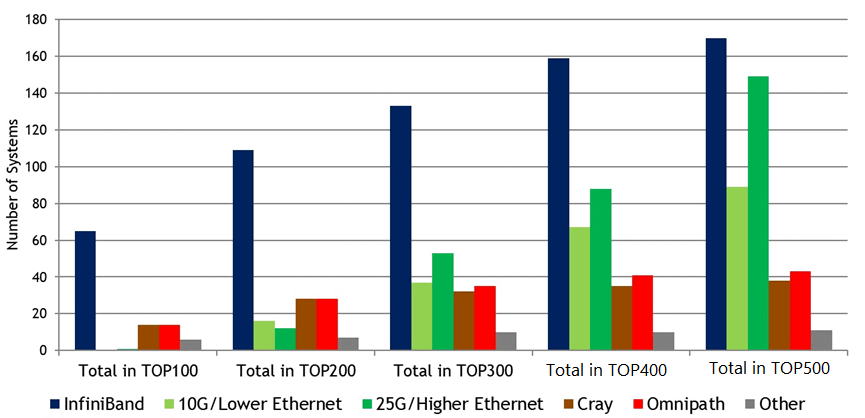

When the view expands from the Top100 to the Top500, adding 100 machines at a time, the distribution of interconnects is as follows:

As expected, Ethernet’s penetration increases as the list expands, because many academic and industrial high-performance computing systems cannot afford InfiniBand or are unwilling to switch away from Ethernet. In addition, service providers, cloud builders, and hyperscale operators run Linpack on a small fraction of their clusters for political or business reasons. The relatively slower Ethernet is popular in the bottom half of the Top500 list, while InfiniBand’s penetration drops from 70% in the Top10 to 34% in the full Top500.

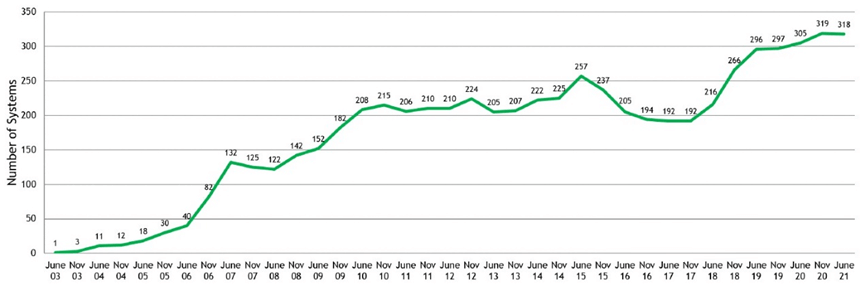

Another chart aggregates most of the InfiniBand and Ethernet systems on the Top500 list, and it partly explains why Nvidia paid $6.9 billion to acquire Mellanox.

Nvidia’s InfiniBand has a 34% share of the Top500 interconnects, with 170 systems. Less visible is the rise of Mellanox Spectrum and Spectrum-2 Ethernet switches in the Top500, which add another 148 systems. Together, this gives Nvidia a 63.6% share of all interconnects in the Top500 ranking, the kind of share that Cisco Systems enjoyed for 20 years in the enterprise data center.
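As a quick check on how the combined share is reached, the following Python sketch recomputes the percentages from the two system counts given above. The helper name `pct` is hypothetical, and the only inputs are the 170 and 148 figures from the text and the list size of 500.

```python
TOP500 = 500
NVIDIA_INFINIBAND = 170   # systems, from the text
NVIDIA_ETHERNET = 148     # Mellanox Spectrum / Spectrum-2 systems, from the text


def pct(count, total=TOP500):
    """Share of the list as a percentage, rounded to one decimal place."""
    return round(100 * count / total, 1)


combined = NVIDIA_INFINIBAND + NVIDIA_ETHERNET

print("InfiniBand share:", pct(NVIDIA_INFINIBAND))
print("Ethernet share:  ", pct(NVIDIA_ETHERNET))
print("Combined systems:", combined)
print("Combined share:  ", pct(combined))
```

The Ethernet switches alone account for close to 30% of the list, which is why the combined figure is nearly double the InfiniBand share.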
