In the fast-changing technology world, GPU servers have become vital to advanced computing and deep learning. These servers are equipped with high-performance Graphics Processing Units (GPUs) that offer unmatched computational power; thus, they have revolutionized data-intensive operations. Unlike CPUs, which were used traditionally, GPUs are designed for parallel processing. They can handle many tasks simultaneously, making them suitable for modern applications such as artificial intelligence and machine learning, which require high-throughput processing. This paper discusses the inherent benefits of using GPU servers in accelerating computations and describes their contribution to speeding up different scientific and industrial processes while simplifying complex simulations. We also hope to make people understand better why these machines are so important by giving some examples of where they can be applied both theoretically and practically based on technical background information, besides showing various fields of science or industries affected directly.

Table of Contents

ToggleWhat is a GPU Server and How Does It Work?

Understanding the Basics of GPU Servers

At its core, a GPU server is a computer system that uses one or more Graphics Processing Units (GPUs) to perform calculations. Unlike Central Processing Units (CPUs), which are designed for sequential processing tasks, GPUs can perform massively parallel computations more efficiently. Such servers can thus process huge volumes of data concurrently by utilizing numerous GPU cores. This makes them ideal for applications like graphical rendering, training deep learning models, or running complex numerical simulations, where the raw computational power required is enormous. In most cases, GPU servers consist of CPUs and GPUs that work together – the CPU handles general-purpose processing while the GPU accelerates specialized parallel computing; this leads to much higher performance than any single processor could achieve alone within such systems.

The Role of Nvidia GPUs in Modern Servers

Modern servers rely heavily on Nvidia GPUs because they are unmatched in terms of computational capacity and efficiency. These GPUs are known for their sophisticated architecture as well as the CUDA (Compute Unified Device Architecture) programming model, which makes them very powerful in terms of parallel processing that is necessary for dealing with complicated computational problems such as AI, ML, or big data analytics. Whether it is artificial intelligence, machine learning, or large-scale data analysis – these cards can accelerate computations dramatically, reducing the processing time required for completion. In addition to this, when integrated with server environments, they ensure the best use of resources, thus improving overall system performance while enabling the execution of complex algorithms and simulations at never-before-seen speeds.

How GPU Servers Accelerate Compute Workloads

Servers with GPUs accelerate computing by many times through parallel processing, vast computational power, and modified architectures for intricate operations. These chips have thousands of processors that can perform multiple tasks simultaneously; hence, they are faster in handling data-intensive applications like AI, ML, and rendering, among others. Such servers achieve faster speeds in completing tasks by directing them to be run on graphic cards, which can be done at the same time, unlike traditional systems, which only use CPUs for this purpose. Moreover, Nvidia CUDA software makes it possible for programmers to optimize their codes so as to take full advantage of these kinds of hardware, thus further improving performance while cutting down on delays during computation workloads. In this case, both CPUs and GPUs are used together so that each component works at its maximum power level, thereby giving better overall results across various types of programs.

Why Choose Nvidia GPU Servers for AI and Machine Learning?

The Benefits of Nvidia GPUs for AI Training

AI training gains many things from Nvidia GPUs. First, their parallel processing structure consists of thousands of cores, which allows for simultaneous execution of many calculations that greatly speed up the training process of complicated machine learning models. Second, developers are given powerful AI-optimized GPU performance through the Nvidia CUDA platform; thus, training times can be reduced, and model accuracy can be improved. Thirdly, high memory bandwidth in Nvidia GPUs ensures efficient management of large data sets necessary for training deep learning models. Last but not least is its ecosystem comprising software libraries such as cuDNN or TensorRT, among others, which provide full support together with regular updates so that researchers in this area always have access to current developments in graphic card technologies – all these reasons make it clear why any person dealing with AI would want to use them during their work on different tasks related to Artificial Intelligence.

Deep Learning Advantages with Nvidia GPU Servers

For deep learning applications, Nvidia GPU servers have many benefits. They can do thousands of parallel computations at the same time by utilizing multiple cores, and this greatly speeds up model training as well as inference tasks. The CUDA platform optimizes deep learning workloads so that hardware resources are used efficiently. High memory bandwidth is provided by Nvidia GPUs, which is necessary to process large datasets often used in deep learning. In addition, Nvidia has a wide range of software, such as cuDNN and TensorRT libraries, that ensure high performance and scalability for deep learning models. All these features make it clear why one should choose Nvidia GPUs when deploying or scaling operations for deep learning models.

The Role of Nvidia’s CUDA in GPU Computing

Nvidia’s Compute Unified Device Architecture (CUDA) is extremely important to GPU computing as it provides a parallel computing platform and programming model that is made for Nvidia GPUs. By using CUDA, developers can tap into the power of Nvidia GPUs for general-purpose processing or GPGPU, where the functions usually handled by the CPU are offloaded to the GPU so as to enhance efficiency. Thousands of GPU cores are used by this platform to carry out concurrent operations, which greatly speed up various computational tasks such as scientific simulations and data analysis, among others.

The architecture of CUDA consists of a wide range of development tools, libraries, and APIs that enable the creation and optimization of high-performance applications. The development tools in cuBLAS (for dense linear algebra), cuFFT (for fast Fourier transforms), and cuDNN (for deep neural networks) provide optimized implementations for common algorithms, thus speeding up application performance. It also supports several programming languages, including C, C++, and Python, which allows flexibility during development and integration with existing workflows.

Essentially, what this means is that with CUDA, you can make use of all the computational capabilities offered by Nvidia GPUs, hence making it possible for them to be used in areas requiring high processing power like artificial intelligence (AI), machine learning(ML), etcetera. Thus, its groundbreaking effect highlights the significance that CUDA has on modern GPU computing since it gives necessary tools plus a framework for coming up with next-generation apps.

What are the Key Components of a High-Performance GPU Server?

Essential CPU and GPU Choices

When picking parts for a high-performance GPU server, the CPU and GPU should be considered together to ensure the best performance.

CPU Options:

- AMD EPYC Series: The AMD EPYC processors, such as the EPYC 7003 series, have high core counts and strong performance. They are excellent at multi-threading and offer great memory bandwidths which make them perfect for data-intensive tasks.

- Intel Xeon Scalable Processors: Intel’s Xeon series (especially the Platinum and Gold models) focus on reliability and high throughput. Some features they provide include large memory support as well as robust security, which is essential in enterprise applications.

- AMD Ryzen Threadripper Pro: This line boasts powerful performance levels designed specifically with professional workstations or compute-heavy workloads in mind. Ryzen Threadripper Pro CPUs have many cores/threads, making them suitable for apps that need lots of processing power.

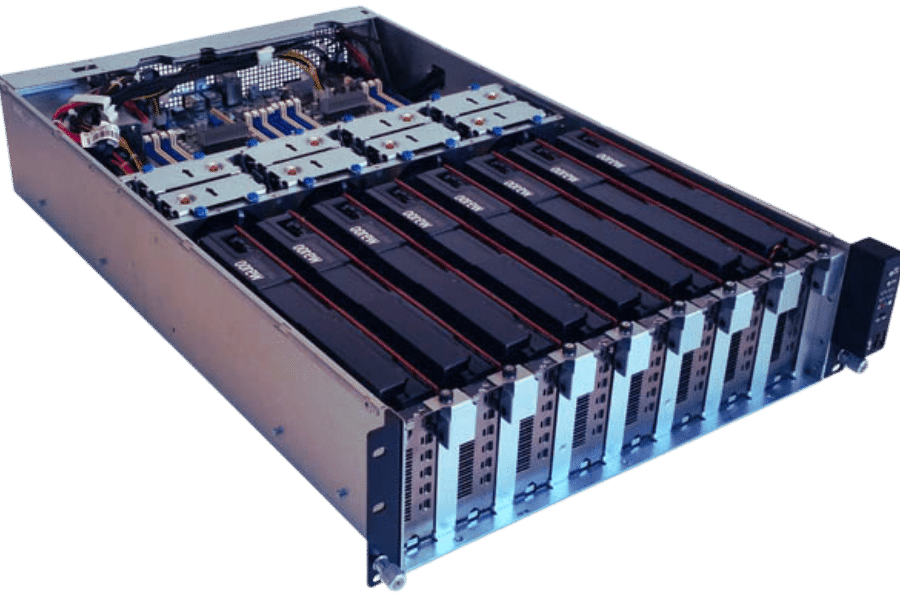

GPU Choices:

- Nvidia A100 Tensor Core GPU: The A100 was created for AI, data analytics, and high-performance computing (HPC). It has MIG support plus massive parallelism, which enables it to perform better in tasks that require significant computational efficiency.

- Nvidia RTX 3090: Although mainly used as a consumer-grade GPU, the RTX 3090 is found in some high-performance workstations because it has huge VRAM along with CUDA cores that make it good for deep learning, rendering or scientific simulations.

- AMD Radeon Instinct MI100: This advanced architecture GPU from AMD is designed for HPC and AI workloads where there needs to be a good balance between competitive performance and extensive support for large-scale parallel processing.

By selecting CPUs & GPUs strategically, enterprises can build GPU servers suitable enough even to handle their most demanding computational jobs while ensuring that they deliver balanced performance per watt efficiency.

Understanding PCIe and NVMe in GPU Servers

Two significant technologies in GPU servers’ architecture, which directly affect their productivity and power efficiency, are Peripheral Component Interconnect Express (PCIe) and Non-Volatile Memory Express (NVMe).

PCIe: A high-speed standard of an input/output interface designed to connect different hardware devices such as graphic cards, storage drives or network adapters directly to the motherboard. It comes with multiple lanes, each described by its data transfer rate (x1, x4, x8, x16, etc.), thus offering substantial bandwidth. PCIe lanes in GPU servers provide fast communication between the CPU and GPUs, thereby minimizing latency and maximizing computational throughput.

NVMe: Non-Volatile Memory Express is a storage protocol that capitalizes on the speed advantages offered by PCI Express for solid-state drives (SSDs). It differs from traditional protocols like SATA by operating over a PCIe bus directly, hence significantly reducing latency while increasing IOPS (Input/Output Operations Per Second). In GPU servers, NVMe SSDs are used to cope with large datasets typical of AI, machine learning, and data analytics due to their high throughput and low latency storage solutions.

Interaction between PCI express and non-volatile memory express within GPU servers allows processing units together with storage resources to function at peak rates, thereby enhancing the smooth flow of information and fastening computational performance. This combination is necessary for heavy data transfer workloads with high computation intensity since it ensures effectiveness plus dependability during operation.

Rackmount vs Tower GPU Servers

When selecting a GPU server, you should consider whether to choose rackmount or tower. You need to take into account factors such as space, scalability, cooling efficiency, and deployment scenarios.

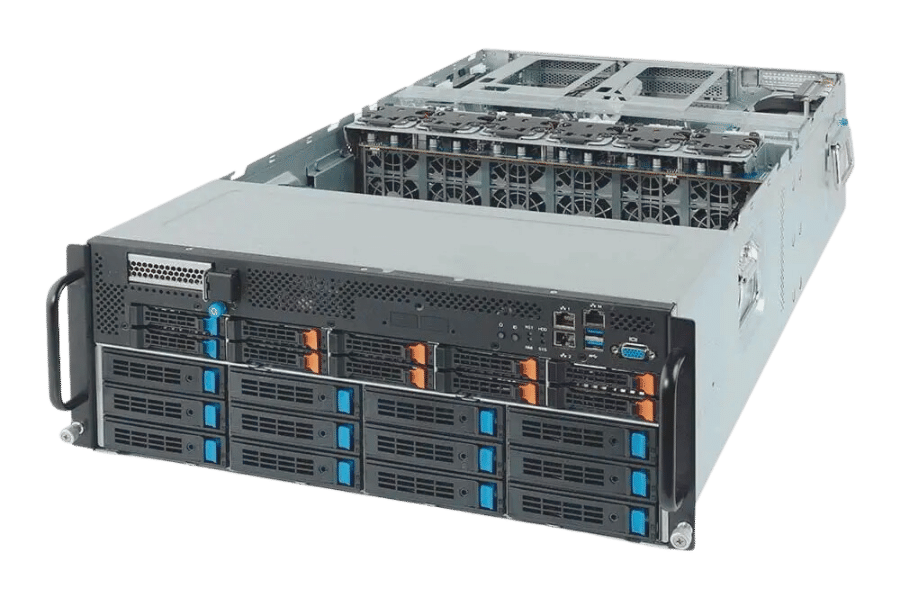

Rackmount GPU Servers: These servers are designed to fit in a server rack; therefore, they have a compact design, which saves space in data centers. In other words, racks allow for higher densities of GPUs within a limited area, making them perfect for large-scale deployments. Their scalability is simple due to modularity. Additionally, they benefit from better cooling because racks are often equipped with advanced air or liquid systems that keep optimal working temperatures.

Tower GPU Servers: Tower GPU servers look like standard desktop PCs and are usually utilized at smaller offices where there is no rack infrastructure or it is not needed. This kind of server allows more freedom in terms of component location and airflow, which can be useful when using different configurations for cooling. Towers as standalone units are generally easier to deploy while offering enough power for less intensive applications. However, their size is bigger than that of rackmounts; thus, they occupy more space physically besides having a lower density of GPUs per unit, making them unsuitable for extensive computational needs.

To put it briefly, the most suitable environment for rack-mounted GPU servers would be high-density, large-scale data centers with necessary cooling systems and efficient space use. On the other hand, towered ones would fit well into small-scale, less demanding deployments where ease of deployment and flexibility matter most.

How to Choose the Right GPU Server for Your AI Workloads?

Analyzing Your AI and Deep Learning Needs

When it comes to choosing a GPU server for AI and deep learning workloads, you need to know what exactly you want. Here are some things that should be in your mind:

- Performance: Determine how powerful your AI models should be. If you have large neural nets that need training or any other high-performance tasks then go for servers with multiple high-end GPUs.

- Scalability: You must consider whether there is room for expansion. As such, if you expect rapid growth, opt for rackmount servers since they can hold more GPUs within smaller areas.

- Budget: Take into account financial capability. Note that a rack mount solution tends to be costly due to advanced cooling systems as well as dense setup whereas tower servers may work well where budgets are low and operations not very massive.

- Energy consumption & Heat management: Different servers have different power requirements and cooling needs. Rackmounts benefit from data center cooling while towers require strong self-contained coolers.

- Deployment Environment: Look at where everything will be set up against what already exists around it, i.e., infrastructure. In case of having space in a data center, use this, but otherwise, go with towers, especially if space is limited or things are far apart, like offices.

By considering these factors, one can easily identify the best type of GPU server for their artificial intelligence and deep learning workload, hence enabling maximum utilization and scalability.

Nvidia A100 vs Nvidia H100: Which to Choose?

To choose between Nvidia A100 and Nvidia H100, you should know what these GPUs are best used for and what improvements they have made. The Ampere architecture-based Nvidia A100 is versatile in AI, data analytics, and high-performance computing (HPC) workloads. This equates to 19.5 teraflops FP32 performance as well as support for multi-instance GPU (MIG) technology that permits splitting a single A100 GPU into smaller independent instances.

The newer Hopper architecture-founded Nvidia H100 on the other hand provides significant enhancements in terms of performance and energy efficiency; it performs well in AI training plus inference with more than 60 teraflops FP32 performance. It introduces Transformer Engine which quickens transformer-based models thus making it ideal for large scale AI applications.

In conclusion, the wide-ranging nature of usability together with MIG supportiveness is what makes Nvidia A100 good while considering flexibility so far as different types of tasks are concerned, whereas on the flip side, cutthroat performance levels coupled with specialized capabilities required by heavy-duty AI workloads are provided by H100s. Therefore, select whichever aligns with specific performance needs and future scalability projections regarding your undertakings.

How to Optimize GPU Servers for Maximum Performance?

Configuring Your GPU Server for HPC Applications

There are several essential configurations you can do to optimize your GPU server for HPC applications. First, choose the right hardware that suits your computational requirements. For example, select GPUs with high memory bandwidth and computational power such as Nvidia A100 or H100. Secondly, ensure that the CPU of your server complements the capabilities of the GPU because balanced performance between these two components helps in reducing bottlenecks.

In addition to this, it is important to have good cooling systems and enough power supply that will keep the GPUs running at their best, even under high loads. On the software side, install up-to-date drivers as well as the CUDA toolkit so that you can exploit all features built into the hardware. If your HPC application runs on a distributed system, then use MPI (Message Passing Interface) for efficient communication between GPU nodes. Furthermore, fine-tuning memory management together with using performance monitoring tools like NVIDIA Nsight may reveal performance limitations and, therefore, enhance the operation of a GPU server during its peak performance period.

Best Practices for Maintaining GPU Performance

To sustain the highest possible GPU performance throughout your server’s lifespan, you have to stick to some of the best practices as recommended by industry leaders.

- Regular Driver and Software Updates: Make sure that you consistently update your GPU drivers alongside other related software, such as the CUDA toolkit, to the latest versions available; this will not only enhance performance but also fix bugs that could be lowering its efficiency.

- Adequate Cooling and Ventilation: You need to manage thermal properly. Clean out dust or any other particles from your GPUs components and ensure that there is enough airflow within the server room so that it does not overheat; good cooling can significantly extend its life span as well as keep up its performance.

- Power Supply Management: Always use reliable power supplies that are capable of providing the sufficient amount required without causing degradation in performance or even damaging hardware due to fluctuation in power; this may affect the operations of a graphic card more than anything else.

- Routine Monitoring and Maintenance: Employ tools for monitoring like NVIDIA Nsight Systems or GPU-Z which enable users to check temperature among others frequently; this can help one detect bottlenecks earlier enough besides troubleshooting them.

- Optimize Workloads: One should know how workloads are assigned by taking advantage of what GPUs can do, then balance computations carried out depending on their strengths; use job scheduling applications for efficient task allocation so that all resources get utilized fully without overloading any single card.

With these moves executed strictly, one achieves sustainability in graphical processing units’ speed while boosting computational effectiveness, thus safeguarding investments made in hardware.

Enhancing Server Performance with Effective Cooling

To maintain server performance at its peak, cooling efficiency has to be ensured. Here are some ways of achieving this:

- Server Room Layout: Correctly positioning the servers with hot and cold aisles can greatly increase airflow and improve cooling efficacy. This means that server racks should face each other in alternate rows such that the front of one row faces the back of another thus pushing warm air away from cool intake air.

- Environmental Monitoring: Placing sensors around different parts of the server room to monitor temperature and humidity levels closely can help identify areas experiencing more heat than others thereby allowing for prompt corrective measures. Continuous monitoring also enables real time adjustment for maintenance of optimum conditions for operation.

- Cooling Infrastructure: Among the most efficient methods of cooling high-density server environments include; in-row cooling systems, overhead cooling systems or even liquid cooled cabinets which provide directed cooling. These precision systems are better than traditional air conditioners because they offer more accurate temperature control management.

Adopting these techniques will enable system administrators to manage heat loads effectively, prevent overheating, and prolong the useful life span of critical hardware components.

Frequently Asked Questions (FAQs)

Q: For advanced computing and deep learning tasks, what are the advantages of using servers with high-performance GPUs?

A: High-performance GPU servers are very useful for advanced computing and deep learning. The devices have faster speeds of processing data, better parallel computing power as well as improved efficiency in handling big data sets; features which are essential for AI and ML applications that have high demands.

Q: How do 4-GPU Servers improve Performance for Demanding AI Workloads?

A: 4-GPU servers, like those having Nvidia A100 GPUs, increase computational power by working together different GPUs simultaneously thereby enhancing performance for demanding AI workloads. This allows models to be trained faster with inference done more quickly leading to higher throughputs overall while also improving efficiency in deep learning tasks.

Q: What form factor configurations can you get GPU-accelerated servers in?

A: Different sized GPU-accelerated servers exist including 1U, 2U and 4U rackmount designs. For instance Supermicro’s 4U servers allow for dense installations with effective cooling whereas smaller 1U setups provide space saving options within data centers.

Q: Why are AMD EPYC™ 9004 Processors suitable for AI & HPC?

A: AMD EPYC™ processors such as the 9004 series offer superior I/O capabilities due to large memory bandwidths and high core counts being their main design focus. These CPUs are a perfect fit when it comes to artificial intelligence or any other calculation-heavy application that demands significant amounts of computational resources combined with efficient handling of data.

Q : What is the role of scalable processors like Gen Intel® Xeon® Scalable Processor in GPU Servers?

A: Scalable processors (e.g., Gen Intel® Xeon® Scalable Processor) provide an adaptable base on which powerful GPU servers can be built. They allow easy transition between small-scale deployments all the way up to larger ones while still maintaining efficiency levels throughout these varying scales. Additionally, this type of processor boasts advanced features such as high-speed interconnects and enhanced security protocols, which greatly enhance performance within GPU-accelerated environments.

Q: How does the server performance get better with the use of PCIe 5.0 x16 slots?

A: In comparison to previous generations, this type of slots offer higher bandwidths and faster rates for data transfers. These changes substantially increase the ability of GPU cards (and other peripherals working at high speed) that are installed in servers to handle intensive computational tasks.

Q: What are the special features of Nvidia A100 GPUs that make them great for machine learning and deep learning applications?

A: The latest tensor core technology is incorporated into their design by Nvidia A100 GPUs hence they provide unparalleled performance when it comes to machine learning or deep learning applications. These devices have exceptional computational power, scalability and efficiency thus making them perfect for AI-driven workloads as well as environments.

Q: What advantages do 4U rackmount servers bring to data centers?

A: Better airflow and cooling, increased compute resource density, improved spatial efficiency among others are some benefits 4U rackmount servers provide data centers with. Spatial capacity is big enough in these machines to house multiple GPU cards alongside other components which makes them suitable for large scale deployments as well as meeting high-performance computing needs.

Q: In a data center environment, what are the common use cases of a GPU-accelerated AI server?

A: High-performance computing (HPC), complex simulation tasks, machine learning infrastructure, etc., are some examples of GPU-accelerated AI servers that can be used within a data center. Therefore, they become necessary for any workload involving artificial intelligence because such work requires train models with lots of computation power while simultaneously running inferences on massive datasets.