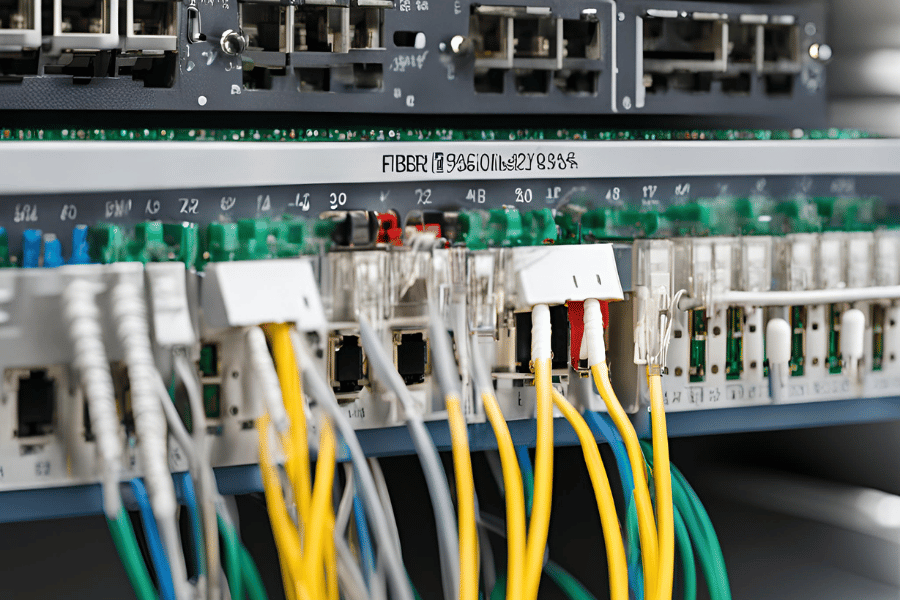

Modern network infrastructure depends on fiber aggregation switches to combine several fiber optic links into one streamlined network connection. They are built to carry large volumes of data over long distances without interruption, which matters to data centers, enterprises, and service providers that need robust, flexible networks. Fiber aggregation switches also improve network performance and efficiency by simplifying network management and optimizing bandwidth usage. In addition, many offer security features that help protect data moving within a single system or between systems, for example across an encrypted tunnel built with VPN technology such as IPsec VPN or SSL VPN.

What is a Fiber Aggregation Switch?

Understanding Fiber Aggregation

Fiber aggregation is the practice of combining many fiber optic links into one high-capacity network connection. It relies on fiber aggregation switches, which direct traffic from different locations so that it flows optimally through the network. Bringing multiple links together reduces network clutter and makes more efficient use of bandwidth while accommodating higher data volumes. Large businesses and data centers depend on this kind of consolidation for high-speed data transmission, because it ensures there is enough capacity between any two points that need to exchange data quickly over long distances.

Differences Between a Core Switch and an Aggregation Switch

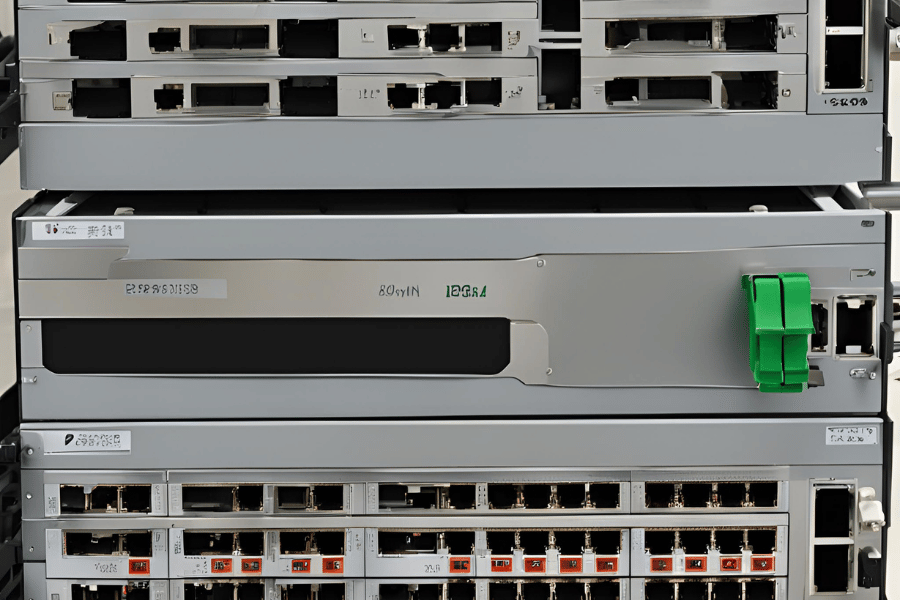

A core switch and an aggregation switch each have their own place in a network. They differ considerably; the key characteristics and technical details of each are outlined below:

Placement and Purpose:

- Core Switch: Usually situated at the heart of the network, these devices direct huge amounts of data among different segments. They are built to provide higher-speed switching capability within service provider networks or enterprise organizations.

- Aggregation Switch: These switches sit between the access layer switches (to which clients connect) and the core layer switches (which handle routing). Their primary role is to combine many connections from various sources into one or a few links and pass the traffic on to the core, where it is routed according to its destination.

Performance and Capacity:

- Core Switch: Core switches exhibit very high throughput—often up to several hundred Gbps—as well as low latency. They support more advanced routing protocols like OSPF, BGP, and MPLS to handle complex routing scenarios better.

For example,

- Throughput: 100 Gbps or greater

- Latency: Less than one microsecond

- Ports: 40G, 100G or higher

- Aggregation Switch: Aggregation switches have higher throughput than access switches but lower throughput than core switches, since most of the traffic they aggregate comes directly from end-user LANs before heading towards the backbone network over WAN links. Unlike core switches, which must examine packets' source and destination addresses when making forwarding (routing) decisions, aggregation switches are mainly concerned with the VLAN tags in use, which reduces the complexity of forwarding at this level.

In addition, core switches are designed for environments that require high scalability. They support large-scale deployments with extensive redundancy features, such as Virtual Chassis (VC), Multi-Chassis Link Aggregation (MC-LAG), and high port density configurations, which are needed to ensure network reliability and uptime.

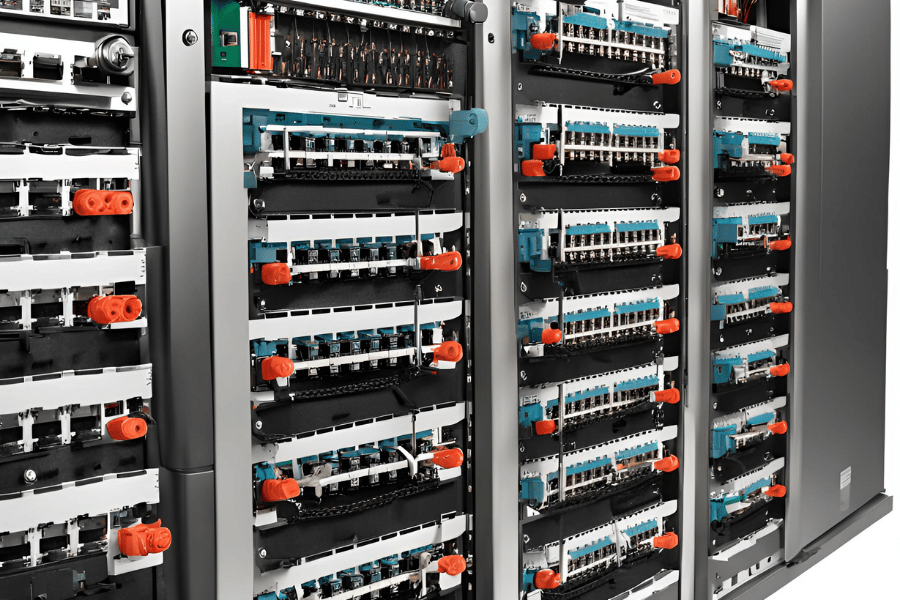

Roles of an Aggregation Switch in Network Architecture

Increasing network efficiency, improving data control, and creating redundancy between access switches are the main jobs of an aggregation switch. It achieves this with VLAN tagging, link aggregation, and other advanced traffic-handling techniques alongside Layer 3 routing, which together maintain continuity of communication between the core and access layers. By managing numerous connections from access switches, aggregation switches play a significant role in reducing congestion and increasing overall throughput.

Also known as distribution points, they serve as mid-level devices that aggregate traffic from many different hosts onto one link before forwarding it over another connection towards its destination. In large-scale network environments, where hundreds or thousands of interconnected devices communicate simultaneously over long distances using various protocols, these switches aggregate and distribute data efficiently for optimum performance.

How to Choose the Right Aggregation Switch

Key Features to Look for in an Aggregation Switch

When choosing an aggregation switch, consider the following features, which affect both the performance and the future-proofing of your network infrastructure:

- Port Density and Speed: The number of ports required should be assessed together with whether or not the switch provides enough high-speed interfaces (10GbE, 40GbE or even 100GbE) to handle the current and expected traffic volume. High port density allows for more scalability and flexibility.

- Redundancy and Resiliency: Multi-Chassis Link Aggregation (MC-LAG), Virtual Chassis (VC), etc., are necessary components for building resilient networks that can tolerate individual component failures without affecting overall service.

- Advanced VLAN and QoS Capabilities: Support for extensive VLAN configurations and Quality of Service (QoS) functionalities must be available so as to segment network traffic while giving priority to critical applications, thus ensuring smoothness and efficiency in operations.

- High Throughput / Low Latency: Switches with high throughput / low latency capability should always be selected because they enable efficient handling of large amounts of data traffic, eliminating bottlenecks and enhancing overall network performance.

- Layer 3 Routing Functions: Layer 3 routing support lets the switch route traffic between VLANs and subnets itself, improving efficiency while allowing for advanced network management.

- Ease of Management: The switch should include management features such as a web-based interface, a Command Line Interface (CLI), and support for SNMP and other network management protocols. These features make monitoring easier and simplify configuration.

- Security Features: The switch should include a comprehensive set of security features, including Access Control Lists (ACLs), 802.1X authentication, and port security, to safeguard against unauthorized access and attacks on your network.

By following these guidelines when selecting an aggregation switch, you will choose a device that meets your current networking requirements while leaving room for growth in flexibility and scalability.

The Importance of Port Availability and Bandwidth

Port availability and bandwidth are among the most important properties of an aggregation switch. Insufficient port availability causes network congestion and bottlenecks, which impede data flow and degrade the user experience.

Bandwidth, in turn, determines how much data can pass through a given point on the network at one time. Ample bandwidth is especially valuable when handling large data volumes or heavy network activity, because it reduces delay (latency) across the whole system and improves overall performance. Attending to both ports and bandwidth produces a strong network that meets current needs and is prepared for future growth.
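One common way to reason about ports and bandwidth together is the oversubscription ratio: total downlink capacity divided by total uplink capacity. The sketch below is illustrative only; the function name and the 48 x 10G / 4 x 100G port layout are hypothetical and not taken from any particular switch.

```python
def oversubscription_ratio(downlink_gbps, uplink_gbps):
    """Return total downlink capacity divided by total uplink capacity."""
    total_down = sum(downlink_gbps)
    total_up = sum(uplink_gbps)
    if total_up <= 0:
        raise ValueError("at least one uplink with positive capacity is required")
    return total_down / total_up


# Hypothetical switch: 48 x 10G access-facing ports, 4 x 100G uplinks.
ratio = oversubscription_ratio([10] * 48, [100] * 4)
print(f"Oversubscription ratio: {ratio:.2f}:1")
```

A ratio close to 1:1 means the uplinks can carry nearly everything the downlinks can deliver at once; what ratio is acceptable depends on the traffic profile.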

Scalability and Network Management Considerations

When weighing scalability and network management, make sure the aggregation switch you select can cope with future growth and changing network demand. Scalability is simply how easily the network can be expanded by adding switches or ports without much disruption or redesign. A scalable switch supports smooth upgrades and integration, so larger volumes of data traffic can be handled with ease.

Network management is the other important consideration: monitoring, configuring, and troubleshooting devices on the network efficiently. Modern aggregation switches should support Simple Network Management Protocol (SNMP), Remote Network Monitoring (RMON), and centralized management platforms to make these tasks easier. Effective management improves network performance, reduces downtime for the users who depend on the network, and shortens recovery time.
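As an illustration of the kind of monitoring SNMP makes possible, link utilization can be estimated from two readings of an interface octet counter (for example the 64-bit `ifHCInOctets` counter in IF-MIB). This is a minimal sketch: polling the switch is not shown, and the function name and sample values are hypothetical.

```python
def link_utilization(octets_start, octets_end, interval_s, speed_bps, counter_bits=64):
    """Estimate utilization (0.0 to 1.0) from two octet-counter readings."""
    if interval_s <= 0 or speed_bps <= 0:
        raise ValueError("interval and link speed must be positive")
    # The modulo handles a single counter wrap between the two readings.
    delta_octets = (octets_end - octets_start) % (2 ** counter_bits)
    return delta_octets * 8 / (interval_s * speed_bps)


# Hypothetical readings taken 60 seconds apart on a 10 Gbps uplink.
util = link_utilization(1_000_000, 1_000_000 + 37_500_000_000, 60, 10_000_000_000)
print(f"Uplink utilization: {util:.0%}")
```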

Without these scalability and management features, an aggregation switch will not stay relevant in the long term as the other components of the LAN infrastructure place growing demands on it.

What are the Benefits of Layer 3 Aggregation Switches?

Layer 3 vs Layer 2: Which is Better?

Layer 2 switches operate at the data link layer of the OSI model, where they forward frames based on MAC addresses. They are designed for small to medium-sized networks where speed and simplicity are key factors. Layer 2 switches provide efficient local communication within a VLAN and need relatively little configuration.
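The MAC-based forwarding described above can be sketched as a small learning table. This is a simplified model under stated assumptions (no entry aging, hypothetical class and method names), not how any specific switch implements it.

```python
class MacTable:
    """Toy Layer 2 forwarding table keyed by (VLAN, MAC address)."""

    def __init__(self):
        self._table = {}  # (vlan, mac) -> port

    def learn(self, vlan, src_mac, in_port):
        # Remember which port a source MAC address was last seen on.
        self._table[(vlan, src_mac.lower())] = in_port

    def forward(self, vlan, src_mac, dst_mac, in_port, ports_in_vlan):
        """Return the list of ports the frame should be sent out of."""
        self.learn(vlan, src_mac, in_port)
        out_port = self._table.get((vlan, dst_mac.lower()))
        if out_port is None:
            # Unknown destination: flood to every other port in the VLAN.
            return sorted(p for p in ports_in_vlan if p != in_port)
        if out_port == in_port:
            return []  # Destination is on the ingress port: drop the frame.
        return [out_port]


table = MacTable()
ports = {1, 2, 3}
first = table.forward(10, "aa:aa:aa:00:00:01", "bb:bb:bb:00:00:02", 1, ports)
reply = table.forward(10, "bb:bb:bb:00:00:02", "aa:aa:aa:00:00:01", 2, ports)
print(first, reply)
```

The first frame is flooded because the destination is unknown; the reply goes out of a single port because the table has already learned where the original sender lives.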

Layer 3 switches, on the other hand, operate at the network layer and perform routing based on IP addresses. They can route packets between different subnets or even networks, which makes them suitable for large, complex systems that require advanced routing protocols. These switches offer better support for inter-VLAN routing and dynamic path selection, and they handle heavy traffic loads more effectively than switches that work only at Layer 2. They also support features such as Quality of Service (QoS), which gives greater control over network management, as well as enhanced security measures.

To sum up, if you want a simple and cost-effective network setup, choose a Layer 2 switch. If you need powerful routing capabilities, extensive traffic control mechanisms, and room to scale, a Layer 3 switch offers superior performance and functionality. The choice should always be guided by the specific requirements of your infrastructure, including the growth you expect in the future.

Routing and Traffic Management Capabilities

Layer 3 switches add advanced routing and traffic management functions that can greatly increase network performance and reliability. They use routing protocols such as OSPF (Open Shortest Path First) and EIGRP (Enhanced Interior Gateway Routing Protocol) to make dynamic routing decisions during operation. They can also route between different VLANs, enabling efficient communication between the various segments of a network.
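Whatever protocol populates the routing table, the forwarding decision itself typically comes down to a longest-prefix match on the destination address. A minimal sketch using Python's standard `ipaddress` module follows; the prefixes and next-hop names are made up for illustration.

```python
import ipaddress


def lookup(routes, destination):
    """Return the next hop of the most specific route that matches."""
    dest = ipaddress.ip_address(destination)
    best = None
    for prefix, next_hop in routes:
        network = ipaddress.ip_network(prefix)
        if dest in network and (best is None or network.prefixlen > best[0].prefixlen):
            best = (network, next_hop)
    return best[1] if best else None


# Hypothetical routing table on a Layer 3 aggregation switch.
routes = [
    ("0.0.0.0/0", "core-uplink"),
    ("10.10.0.0/16", "vlan10"),
    ("10.10.20.0/24", "vlan20"),
]
print(lookup(routes, "10.10.20.5"))
```

Real switches perform this lookup in hardware; the linear scan here only shows the selection rule.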

In terms of traffic management, Layer 3 switches offer strong features such as Quality of Service (QoS), which gives critical network traffic priority treatment so that important applications run optimally. They can also enforce Access Control Lists (ACLs), which enhance security by regulating data flows according to defined policies. These capabilities make precise control over traffic patterns feasible, leading to better bandwidth utilization and less congestion across the network.

Layer 3 switches are designed with complex environments in mind. They provide scalability and resilience when integrated into a system, which makes them essential for organizations that aim to streamline their network infrastructure.

Enhanced Network Security Policies with Layer 3 Switches

Layer 3 switches play a vital role in strengthening security through several integrated functions. Access Control Lists (ACLs) are one of the most important mechanisms; they filter traffic according to defined security policies. Administrators can permit or deny packets based on their IP addresses, protocols, or port numbers, ensuring that only authorized traffic can enter sensitive network areas.
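ACL evaluation is usually first-match: rules are checked in order, and traffic that matches no rule is dropped by an implicit deny. The sketch below models that behavior under those assumptions; the rule fields and addresses are hypothetical and do not follow any vendor's ACL syntax.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AclRule:
    action: str                     # "permit" or "deny"
    src: str                        # source prefix, e.g. "10.10.0.0/16"
    protocol: Optional[str] = None  # None matches any protocol
    dst_port: Optional[int] = None  # None matches any destination port


def evaluate(rules, src_ip, protocol, dst_port):
    """Return the action of the first matching rule, or an implicit deny."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        if addr not in ipaddress.ip_network(rule.src):
            continue
        if rule.protocol is not None and rule.protocol != protocol:
            continue
        if rule.dst_port is not None and rule.dst_port != dst_port:
            continue
        return rule.action
    return "deny"  # Assumed default when no rule matches.


# Hypothetical policy: block one host, allow HTTPS from the rest of its subnet.
acl = [
    AclRule("deny", "10.10.20.99/32"),
    AclRule("permit", "10.10.20.0/24", protocol="tcp", dst_port=443),
]
print(evaluate(acl, "10.10.20.5", "tcp", 443))
```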

VLAN segmentation is another feature of Layer 3 switches that helps reduce internal threats by containing them within limited broadcast domains. Dividing an organization's LAN into smaller parts makes it easier to manage and to contain potential security breaches.

Other key capabilities include support for advanced security protocols such as IPsec (Internet Protocol Security) and for secure routing protocols. IPsec encrypts data as it crosses the network, protecting its confidentiality and integrity in transit.

In addition, many Layer 3 switches come with built-in threat detection and prevention features, such as Dynamic ARP Inspection (DAI) and DHCP Snooping, which defend against common network attacks like ARP spoofing and rogue DHCP servers.
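Dynamic ARP Inspection generally works by checking each ARP packet on an untrusted port against the bindings that DHCP Snooping has recorded. The following is a simplified model of that check; the table layout, function name, and addresses are assumptions made for illustration.

```python
def arp_is_valid(bindings, port, vlan, sender_ip, sender_mac, trusted_ports=frozenset()):
    """Accept an ARP packet only if it matches a known DHCP snooping binding."""
    if port in trusted_ports:
        return True  # Trusted ports (for example uplinks) bypass inspection.
    entry = bindings.get((vlan, sender_ip))
    return entry is not None and entry == (sender_mac.lower(), port)


# Hypothetical binding learned by DHCP snooping: (VLAN, IP) -> (MAC, port).
bindings = {(10, "10.10.10.5"): ("aa:bb:cc:00:00:01", 3)}

genuine = arp_is_valid(bindings, 3, 10, "10.10.10.5", "AA:BB:CC:00:00:01")
spoofed = arp_is_valid(bindings, 7, 10, "10.10.10.5", "de:ad:be:ef:00:01")
print(genuine, spoofed)
```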

Together, these security options guard against a wide range of network vulnerabilities, making Layer 3 switches indispensable elements of any secure infrastructure.

How Can Fiber Aggregation Improve Network Scalability?

Handling Increased Bandwidth Requirements

To satisfy the need for more bandwidth, fiber aggregation combines many smaller links into one larger link with greater capacity. Because light can carry information at very high speeds, this consolidation can be done without creating bottlenecks, even under heavy data loads, large numbers of users, and bandwidth-intensive applications running simultaneously. Higher speed is only one benefit; reliability and scalability matter just as much given the growing demands placed on networks. Building on fiber optic cabling also helps future-proof the network, so that it can support rapidly increasing traffic volumes while keeping performance at an optimal level.

Optimizing Multiple Network Connections

Optimizing multiple network connections through fiber aggregation means combining a number of data links into one high-capacity connection. Bandwidth is used more fully and fragmentation is reduced. Because data loads are distributed evenly over the network, latency falls and overall throughput rises. With the Link Aggregation Control Protocol (LACP), many physical links can be bundled dynamically into one logical channel, which provides redundancy and failover capabilities. The result is better performance and a better ability to meet demand, and network administrators can build stronger connectivity solutions by integrating fiber aggregation strategies.
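LACP negotiates which physical links belong to a bundle; how traffic is then spread across the members is usually decided by a hash over packet header fields, so that every packet of a flow takes the same link. The sketch below illustrates that idea with CRC32 as a stand-in hash. Real switches use their own hash functions and field selections, and the link names here are hypothetical.

```python
import zlib


def pick_member(links, src_ip, dst_ip, src_port, dst_port):
    """Choose a bundle member for a flow using a deterministic hash."""
    if not links:
        raise ValueError("the bundle has no active member links")
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return links[zlib.crc32(key) % len(links)]


bundle = ["uplink-1", "uplink-2", "uplink-3", "uplink-4"]
flow = ("10.10.20.5", "10.30.0.9", 51512, 443)

chosen = pick_member(bundle, *flow)
# If the chosen link fails, the flow is rehashed onto the remaining members.
survivors = [link for link in bundle if link != chosen]
rehomed = pick_member(survivors, *flow)
print(chosen, rehomed)
```

Keeping a flow on one link avoids packet reordering, which is why a single large flow cannot exceed the speed of one member link even though the bundle as a whole has more capacity.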

Ensuring Redundancy and Reliability

To make network systems redundant and reliable, put multiple failover mechanisms in place that take over when a fault or outage occurs. One approach is hardware redundancy: deploying duplicate devices, such as routers and switches, so that the failure of any single one does not bring down the whole system. Another is path redundancy, where data can take different routes to its destination, reducing the chance of interruption during transmission. Strong monitoring tools with automation features enable quick detection of and response to failures, which shortens troubleshooting. Replicating data and performing regular backups also protects against data loss and supports continuity of operations. Together, these steps greatly increase the resilience and reliability of the network infrastructure.
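The benefit of duplicate hardware can be estimated with the standard formula for components in parallel, assuming that failures are independent (which is an idealization): the combined availability is 1 minus the probability that every copy is down at once. The 99% figure below is an example value, not a measured one.

```python
def parallel_availability(single, copies):
    """Availability of `copies` redundant units, assuming independent failures."""
    if not 0.0 <= single <= 1.0 or copies < 1:
        raise ValueError("availability must be in [0, 1] and copies >= 1")
    return 1 - (1 - single) ** copies


def downtime_minutes_per_year(availability):
    """Expected downtime per 365-day year, in minutes."""
    return (1 - availability) * 365 * 24 * 60


one_switch = parallel_availability(0.99, 1)
two_switches = parallel_availability(0.99, 2)
print(f"{downtime_minutes_per_year(one_switch):.0f} min/year with one switch")
print(f"{downtime_minutes_per_year(two_switches):.1f} min/year with a redundant pair")
```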

Common Challenges and Solutions in Aggregation Layer Networks

Addressing Bottlenecks and Reducing Latency

Network operators can address a slow backbone in several ways. One is to balance traffic loads by distributing them across different paths, which prevents congestion on any single path and improves the performance of the whole system. Another is to set up Quality of Service policies so that important data packets are given higher priority than others, reducing delays for real-time applications such as voice or video chat.

Administrators may also consider upgrading to faster hardware and employing advanced protocols such as MPLS (Multiprotocol Label Switching), which makes forwarding decisions based on labels instead of IP addresses and so saves time during packet forwarding. Deploying edge computing closer to where it is needed also alleviates bottlenecks, because data has less distance to travel and latency falls.

Finally, regular network monitoring and analysis can detect potential problems before they affect performance. Tools that show administrators what is happening across the network at any given moment help them decide how best to optimize utilization while maintaining reliability.

Integrating QoS for Prioritized Traffic

Quality of Service (QoS) is essential for making the most of bandwidth resources while ensuring that important network traffic takes precedence, so it needs to be incorporated effectively into aggregation layer networks. The starting point is to classify network traffic according to its type and its importance to users. For instance, VoIP calls may require high priority because they are sensitive to latency and jitter.

Several QoS mechanisms can then be applied to enforce that prioritization, including traffic policing and traffic shaping. Traffic policing controls the bandwidth allocated to each type of traffic, so that high-priority applications receive enough resources while lower-priority ones are limited where necessary. Traffic shaping, by contrast, smooths out bursts of data packets, which prevents congestion within the network and in turn reduces packet loss.
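Traffic policing is commonly modeled as a token bucket: tokens accumulate at the permitted rate up to a burst limit, and a packet is admitted only if enough tokens are available. The sketch below is a single-rate illustration with hypothetical parameter values; hardware policers often add a second rate and packet re-marking.

```python
class TokenBucketPolicer:
    """Single-rate token bucket: admit packets while tokens remain."""

    def __init__(self, rate_bps, burst_bytes):
        self.rate_bytes_per_s = rate_bps / 8
        self.burst = burst_bytes
        self.tokens = float(burst_bytes)  # Start with a full bucket.
        self.last = 0.0

    def allow(self, packet_bytes, now):
        """Return True if the packet conforms at time `now` (in seconds)."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate_bytes_per_s)
        self.last = now
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False  # Out of profile: a policer drops rather than queues.


# Hypothetical low-priority class limited to 8 kbps with a 1500-byte burst.
policer = TokenBucketPolicer(rate_bps=8000, burst_bytes=1500)
results = [
    policer.allow(1500, now=0.0),  # Uses the whole initial burst.
    policer.allow(100, now=0.0),   # No tokens left yet.
    policer.allow(500, now=0.5),   # 0.5 s at 1000 bytes/s refills 500 bytes.
]
print(results)
```

A shaper would use the same bucket but queue the out-of-profile packet until enough tokens accumulate instead of dropping it.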

Moreover, Differentiated Services Code Point (DSCP) values can be used to tag packets with specific marks that routers and switches recognize and prioritize accordingly. Administrators must therefore set these QoS policies so that performance guarantees are provided for mission-critical applications and data flows more smoothly across the network, which enhances the user experience.
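The DSCP value occupies the upper six bits of the Differentiated Services field in the IP header, so classification can be sketched as a bit shift followed by a table lookup. The code points used below (46 for Expedited Forwarding, 34 for AF41) are standard values, while the queue names and the mapping itself are hypothetical.

```python
def dscp_from_ds_field(ds_byte):
    """Extract the 6-bit DSCP value from the 8-bit DS field."""
    return (ds_byte >> 2) & 0x3F


# Hypothetical mapping from DSCP value to an egress queue name.
QUEUE_BY_DSCP = {
    46: "voice",        # EF (Expedited Forwarding)
    34: "video",        # AF41
    0: "best-effort",   # Default
}


def classify(ds_byte):
    """Return the queue for a packet, defaulting to best effort."""
    return QUEUE_BY_DSCP.get(dscp_from_ds_field(ds_byte), "best-effort")


print(classify(0xB8), classify(0x88), classify(0x00))
```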

Continuous monitoring and regular updates are part of any successful QoS deployment, since they contribute greatly to its effectiveness. Real-time statistics on actual traffic patterns and performance metrics, provided by utilization management tools, help administrators refine their QoS policies. A proactive approach is needed because there is no end point, only continuous improvement to keep pace with growing demand.

Implementing Effective Network Management

Many organizations do not fully understand what it takes to manage networks efficiently, and so may fall short of their operational goals. The first step is to establish strong monitoring systems, which provide real-time visibility into network traffic and help identify problems as soon as they occur. The second is to create clear protocols and policies for security measures such as access controls and updates, which improves functionality while keeping the network safe. Automation should also be used wherever possible for routine work such as error detection and software updates, since it frees time for more important tasks. Taken together, these practices help an organization maintain a reliable and effective network infrastructure that supports its objectives.

Frequently Asked Questions (FAQs)

Q: What is a Fiber Aggregation Switch, and why is it important?

A: A fiber aggregation switch is a network device that consolidates links from several access switches into a few higher-capacity uplinks. It improves traffic management, lowers latency, and raises overall network performance by handling higher data rates and supporting link aggregation.

Q: How does a Fiber Aggregation Switch differ from a regular switch?

A: Ordinary switches connect end devices over lower-bandwidth links, whereas fiber aggregation switches collect traffic from various access switches, providing wider channels and more efficient data management. They operate at higher speeds, such as 10G or 25G, which makes them suitable for high-performance networks.

Q: What factors should I consider when choosing an aggregation switch?

A: Consider port density (for example, 24-port vs 48-port), uplink capacity (such as 10G SFP+ or 25G SFP28), and whether the device is modular or stackable. Also check compatibility with your current infrastructure, for example Cisco or Ubiquiti equipment.

Q: How does a Fiber Aggregation Switch handle traffic from multiple access switches?

A: The aggregation switch takes in the traffic coming from the different access switches connected to it and consolidates it onto fewer, faster, high-capacity uplinks. This may involve link aggregation, where multiple network connections are combined to act as a single connection, which improves redundancy and increases bandwidth.

Q: What are the benefits of using a gigabit Fiber Aggregation Switch?

A: It provides high-speed data transfer and supports various interfaces such as Ethernet and SFP. It also offers scalability: these devices can handle large volumes of data, which reduces network congestion and keeps bandwidth-intensive applications running smoothly.

Q: Which interfaces are most commonly used in a Fiber Aggregation Switch?

A: 10G SFP+, 25G SFP28, and Gigabit Ethernet are among the most common interfaces on these switches. They enable faster connection speeds while staying compatible with different kinds of network equipment.

Q: How do I make sure my network remains scalable when using a Fiber Aggregation Switch?

A: Select a switch that has modular or stackable port scaling options. This allows you to add more ports or switches as needed while still managing them easily during growth phases, ensuring scalability is maintained.

Q: What are the benefits of aggregating with Ubiquiti?

A: Ubiquiti offers a range of high-performing products, and some of its switches support features such as link aggregation and high-speed uplinks. Their reputation for being reliable, user-friendly, and cost-effective makes them suitable for both small-scale and large-scale networks.

Q: Can a Fiber Aggregation Switch perform local routing?

A: Yes, certain fiber aggregation switches can perform local routing in addition to their primary duty of aggregating data. This is especially handy in network designs where the switch also operates at the core layer or the access layer and therefore needs to handle local traffic efficiently.

Q: What should I look for in a Layer 2 switch to be used for aggregation purposes?

A: For a Layer 2 aggregation switch, look for high port density (such as 24-port or 48-port models), uplinks that deliver 10G or 25G of bandwidth, and advanced VLAN support, together with stackability, to enable effective data aggregation and higher network performance.
