As cloud computing and virtualization technologies have developed, network cards have evolved through four stages, distinguished by functionality and hardware structure.

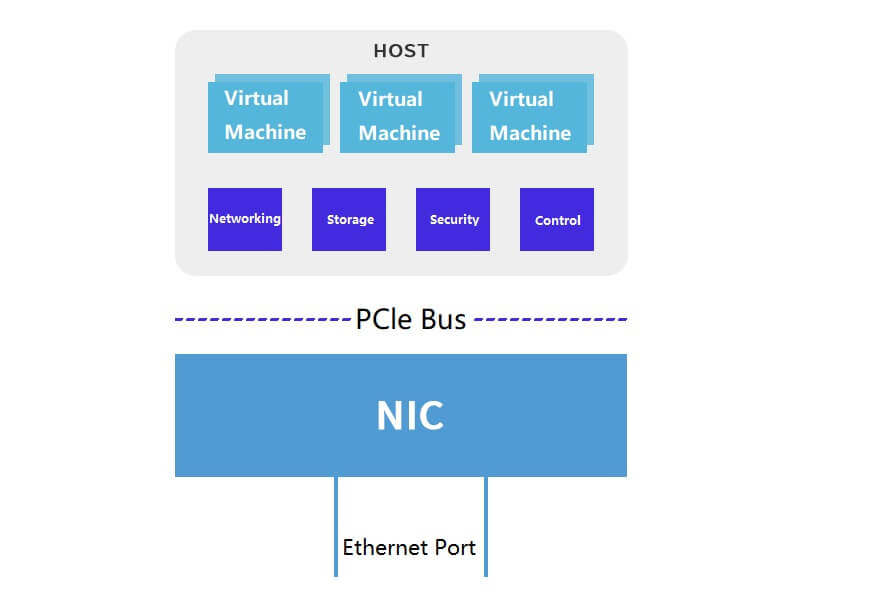

Traditional basic network card (NIC)

Responsible for transmitting and receiving data packets, with few hardware offloading capabilities. The hardware implements physical link layer and MAC layer packet processing in ASIC hardware logic; later standard NICs also supported functions such as CRC checking. It is not programmable.

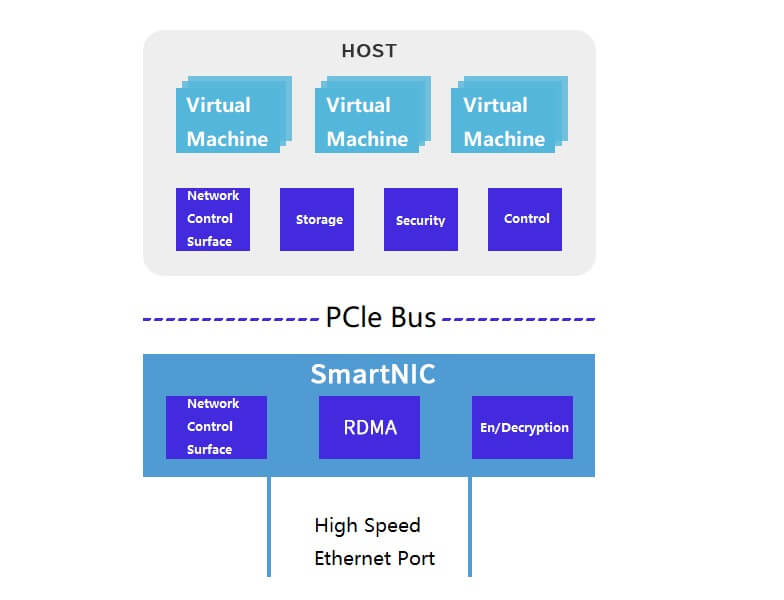

Smart network card (SmartNIC)

It offers some data plane hardware offloading, such as OVS/vRouter hardware offloading. The hardware uses either an FPGA or an integrated chip that combines an FPGA with a processor core (the processor here is weak) to offload the data plane.

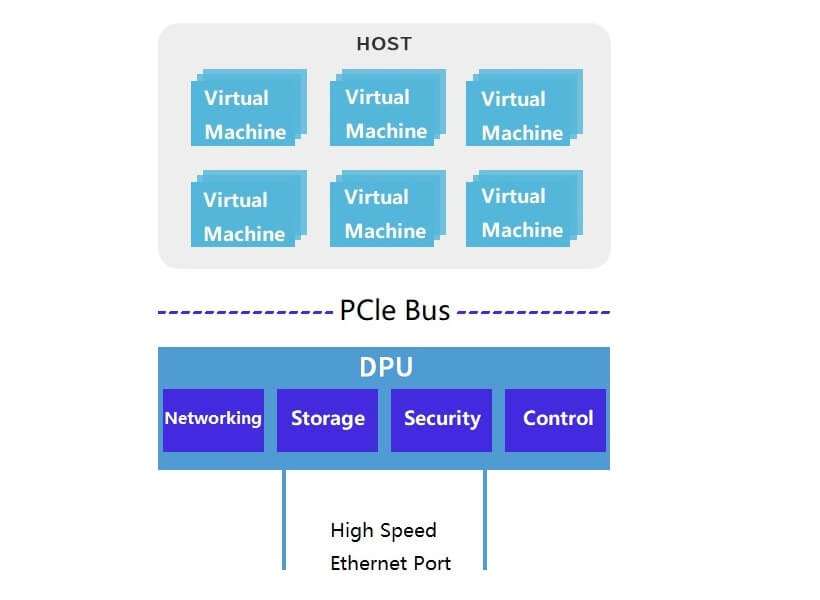

FPGA-Based DPU

This is a smart network card that supports both data plane and control plane offloading, with a degree of programmability for both planes. In hardware terms, it adds a general-purpose CPU, such as an Intel CPU, alongside the FPGA.

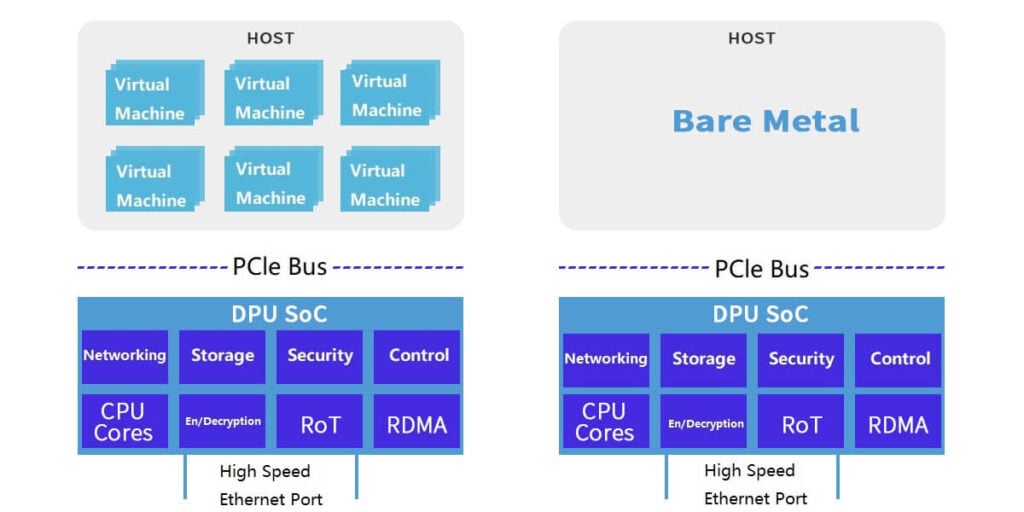

Single-Chip DPU

This is a general-purpose programmable DPU on a single chip. It offers rich hardware offloading, acceleration, and programmability, and supports different cloud computing scenarios and unified resource management. On the hardware side, it adopts a single-chip SoC form that balances performance and power consumption.

The main hardware design challenges of the FPGA-Based DPU come from chip area and power consumption. In terms of area, the physical size of the PCIe interface limits the chip area available on the board; in terms of power consumption, the heat dissipation design of the board is closely tied to the power consumption of the chip and the whole board. These two factors restrict the continued development of FPGA solutions. The DPU SoC solution draws on the software and hardware experience accumulated from NIC to FPGA-Based DPU, and is an important evolution path for DPU-centered data center architecture.

The DPU, a typical representative of software-defined chips, is built on the concept of “software-defined, hardware-accelerated”: it is a general-purpose processor with data processing as its core on-chip function. Its general-purpose processing unit handles control plane workloads, while its dedicated processing unit guarantees data plane performance, striking a balance between performance and generality. The dedicated processing unit addresses the performance bottlenecks of general infrastructure virtualization; the general-purpose processing unit keeps the DPU general, so that it applies widely across cloud infrastructure scenarios and lets virtualization software frameworks migrate smoothly to the DPU.

The Development and Application of NIC

The traditional basic network card (NIC), also known as a network adapter, is the most basic and important connection device in a computer network system. Its main function is to convert the data to be transmitted into a format that network devices can recognize. Driven by advances in network technology, the traditional basic network card has gained more functions and some simple hardware offloading capabilities (such as CRC check, TSO/UFO, LSO/LRO, and VLAN), along with support for SR-IOV and traffic management QoS. Its network interface bandwidth has also grown from the original 100M and 1000M to 10G, 25G, and even 100G.
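To make one of these offloads concrete, the following is a minimal software sketch of the work that TSO moves from the host CPU to the NIC: cutting one large send buffer into segments no larger than the maximum segment size (MSS). The function name `tso_segments` and its parameters are illustrative assumptions, not part of any driver API, and real hardware also rewrites the TCP/IP headers and checksums of every segment.

```python
def tso_segments(payload: bytes, mss: int, start_seq: int = 0) -> list[tuple[int, bytes]]:
    """Split one large buffer into (sequence_number, chunk) pairs of at most mss bytes.

    Hypothetical helper for illustration: with TSO enabled, the NIC performs
    this split in hardware instead of the host CPU.
    """
    if mss <= 0:
        raise ValueError("mss must be positive")
    return [
        (start_seq + offset, payload[offset:offset + mss])
        for offset in range(0, len(payload), mss)
    ]


# A 4000-byte buffer with a 1460-byte MSS becomes three segments.
segments = tso_segments(b"x" * 4000, mss=1460)
print([(seq, len(chunk)) for seq, chunk in segments])
# prints [(0, 1460), (1460, 1460), (2920, 1080)]
```

Without the offload, the host performs this loop (plus header construction) for every large send, which is why even this simple capability saves noticeable CPU time at high bandwidth.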

In the cloud computing virtualization network, the traditional basic network card provides network access to the virtual machine in three main ways.

(1) The network card receives the traffic and forwards it to the virtual machine through the operating system kernel protocol stack.

(2) The DPDK user-mode driver takes over the network card, so data packets bypass the operating system kernel protocol stack and are copied directly into virtual machine memory.

(3) Using SR-IOV technology, the physical function (PF) of the network card is virtualized into multiple virtual functions (VFs) that each act as a network card, and a VF is then passed directly through to the virtual machine.
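As a rough illustration of the third approach, the sketch below assumes a Linux host, where the kernel exposes the VF count of an SR-IOV capable PF through the sysfs files `sriov_totalvfs` and `sriov_numvfs`. The helper name `set_num_vfs` and the `sysfs_root` parameter are assumptions added here so the logic can be exercised against a fake directory; this is not a complete provisioning tool.

```python
from pathlib import Path


def set_num_vfs(ifname: str, num_vfs: int, sysfs_root: str = "/sys") -> None:
    """Ask the PF driver behind `ifname` to create `num_vfs` virtual functions.

    Hypothetical helper: assumes Linux sysfs and a driver that supports SR-IOV.
    """
    device = Path(sysfs_root) / "class" / "net" / ifname / "device"
    total = int((device / "sriov_totalvfs").read_text())
    if not 0 <= num_vfs <= total:
        raise ValueError(f"{ifname} supports at most {total} VFs")
    numvfs = device / "sriov_numvfs"
    # The kernel rejects a change from one non-zero count to another,
    # so reset to 0 first, then request the new count.
    numvfs.write_text("0")
    numvfs.write_text(str(num_vfs))
```

On a real host this would be called as, for example, `set_num_vfs("eth0", 4)` with root privileges, where `eth0` is a placeholder interface name. Passing a resulting VF through to a virtual machine is a separate hypervisor step not shown here.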

With tunnel protocols such as VxLAN and virtual switching technologies such as OpenFlow and OVS, network processing has grown steadily more complex and consumes more CPU resources. The SmartNIC was created to address this.
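To give a sense of the per-packet work involved, here is a minimal sketch of building and parsing the 8-byte VXLAN header defined in RFC 7348 (a flags byte with the VNI-valid bit `0x08`, followed by a 24-bit VNI). The function names are illustrative assumptions, and the outer Ethernet, IP, and UDP headers that a real encapsulation also needs are omitted.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag from RFC 7348


def vxlan_header(vni: int) -> bytes:
    """Return the 8-byte VXLAN header for a 24-bit VXLAN Network Identifier."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + reserved (24 bits).
    # Word 2: VNI (24 bits) + reserved (8 bits).
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def vxlan_encap(inner_frame: bytes, vni: int) -> bytes:
    """Prepend the VXLAN header to an inner Ethernet frame (outer headers omitted)."""
    return vxlan_header(vni) + inner_frame


def vxlan_vni(packet: bytes) -> int:
    """Extract the VNI from a VXLAN-encapsulated payload."""
    _, word2 = struct.unpack("!II", packet[:8])
    return word2 >> 8


packet = vxlan_encap(b"inner-ethernet-frame", vni=5000)
print(packet[:8].hex(), vxlan_vni(packet))
# prints 0800000000138800 5000
```

When this encapsulation and the matching decapsulation run in software for every packet, the cost scales with packet rate, which is the CPU pressure the paragraph above describes.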

The Development and Application of SmartNIC

SmartNIC, in addition to having the network transmission function of the traditional basic network card, also provides rich hardware offloading acceleration capabilities, which can improve the forwarding rate of the cloud computing network and release the host CPU computing resources.

A SmartNIC has no general-purpose CPU on board and relies on the host CPU to manage the control plane. Its main offloading and acceleration target is the data plane: for example, Fastpath offloading for virtual switches such as OVS/vRouter, RDMA network offloading, NVMe-oF storage offloading, and IPsec/TLS data plane security offloading.
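The division of labor between fast path and slow path can be sketched conceptually as an exact-match flow cache: the first packet of a flow misses the cache and is handed to a slow path (standing in for the host CPU control plane), which decides an action that is then installed so later packets of the same flow hit the cache (standing in for the offloaded data plane). All class, field, and action names below are illustrative assumptions; this is not how OVS or any specific SmartNIC is implemented.

```python
from typing import Callable, NamedTuple


class FlowKey(NamedTuple):
    """Classic 5-tuple used here as the exact-match key."""
    src_ip: str
    dst_ip: str
    proto: int
    src_port: int
    dst_port: int


class FlowCache:
    """Exact-match fast path backed by a slower decision function."""

    def __init__(self, slow_path: Callable[[FlowKey], str]) -> None:
        self._table: dict[FlowKey, str] = {}
        self._slow_path = slow_path
        self.hits = 0
        self.misses = 0

    def lookup(self, key: FlowKey) -> str:
        action = self._table.get(key)
        if action is not None:
            self.hits += 1  # fast path: no host CPU involvement in this model
            return action
        self.misses += 1  # slow path: the control plane decides, then installs the flow
        action = self._slow_path(key)
        self._table[key] = action
        return action


def example_policy(key: FlowKey) -> str:
    """Hypothetical control plane policy: forward web traffic, drop the rest."""
    return "output:vf1" if key.dst_port == 80 else "drop"


cache = FlowCache(example_policy)
flow = FlowKey("10.0.0.1", "10.0.0.2", 6, 40000, 80)
actions = [cache.lookup(flow) for _ in range(3)]
print(actions, cache.hits, cache.misses)
# prints ['output:vf1', 'output:vf1', 'output:vf1'] 2 1
```

In this model only the first packet of each flow costs slow path time, which is why offloading the flow table improves forwarding rate while the host CPU still owns policy decisions.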

However, as the network speed in cloud computing applications continues to increase, the host still consumes a lot of valuable CPU resources to classify, track, and control the traffic. How to achieve “zero consumption” of the host CPU has become the next research direction for cloud vendors.

The Development and Application of FPGA-Based DPU

Compared with SmartNIC, FPGA-Based DPU adds a general-purpose CPU processing unit to the hardware architecture, forming an FPGA+CPU architecture, which facilitates the acceleration and offloading of general infrastructure such as network, storage, security, and management. At this stage, the product form of DPU is mainly FPGA+CPU. The DPU based on FPGA+CPU hardware architecture has good software and hardware programmability.

In the early stage of DPU development, most DPU manufacturers chose this scheme. This scheme has a relatively short development time and fast iteration, and can quickly complete customized function development, which is convenient for DPU manufacturers to quickly launch products and seize the market. However, with the network bandwidth migration from 25G to 100G, the DPU based on FPGA+CPU hardware architecture is limited by the chip process and FPGA structure, which makes it difficult to achieve good control of the chip area and power consumption when pursuing higher throughput, thus restricting the continuous development of this DPU architecture.

The Development and Application of DPU SoC NIC

DPU SoC is a hardware architecture based on ASIC, which combines the advantages of ASIC and CPU and balances the excellent performance of dedicated accelerators and the programmable flexibility of general-purpose processors. It is a single-chip DPU technology solution that drives the development of cloud computing technology.

As mentioned above, although the DPU plays an important role in cloud computing, traditional DPU solutions are mostly FPGA-based. As servers migrate from 25G to next-generation 100G, FPGA-based solutions face serious challenges in cost, power consumption, functionality, and other aspects. The single-chip DPU SoC not only has large advantages in cost and power consumption but also offers high throughput and flexible programmability. It supports application management and deployment for virtual machines and containers as well as bare metal applications.

With the continuous development of DPU technology, the general-purpose programmable DPU SoC is becoming a key component in the data center construction of cloud vendors. DPU SoC can achieve economical and efficient management of computing resources and network resources in the data center. The DPU SoC with rich functions and programmable capabilities can support different cloud computing scenarios and unified resource management, and optimize the utilization of data center computing resources.

In the design, development, and use of DPU, chip giants and leading cloud service providers at home and abroad have invested a lot of R&D resources, and have achieved good cost-effectiveness through continuous exploration and practice.

DPU in AWS (Amazon Cloud)

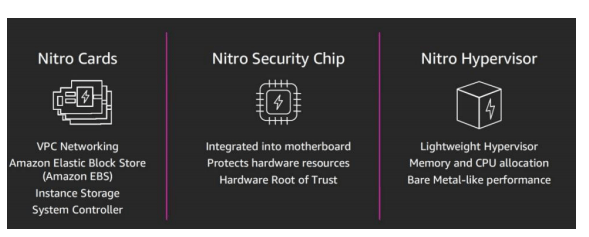

AWS is the world’s leading cloud computing service and solution provider, and the AWS Nitro DPU system has become the technical cornerstone of its cloud service. AWS uses the Nitro DPU system to move network, storage, security, and monitoring functions onto dedicated hardware and software, making almost all of a server’s resources available to service instances and greatly reducing costs. Applying the Nitro DPU in Amazon Cloud can make a server earn thousands of dollars more per year. The Nitro DPU system mainly consists of the following parts.

(1) Nitro card. A series of dedicated hardware for network, storage, and control, to improve the overall system performance.

(2) Nitro security chip. Moves virtualization and security functions to dedicated hardware and software, reducing the attack surface and providing a secure cloud platform.

(3) Nitro hypervisor. A lightweight Hypervisor management program that can manage memory and CPU allocation, and provide performance that is indistinguishable from bare metal.

The Nitro DPU system provides key, network, security, server, and monitoring functions, frees the underlying service resources for customers’ virtual machines, and enables AWS to offer more bare metal instance types and even raise the network performance of specific instances to 100Gbps.

NVIDIA DPU

NVIDIA is a semiconductor company that mainly designs and sells graphics processing units (GPUs), widely used in AI and high-performance computing (HPC) fields. In April 2020, NVIDIA acquired Mellanox, a network chip and device company, for $6.9 billion, and then launched the BlueField series of DPUs.

The NVIDIA BlueField-3 DPU (as shown in Figure 7) inherits the advanced features of the BlueField-2 DPU and is the first DPU designed for AI and accelerated computing. It provides network connectivity of up to 400Gbps and can offload, accelerate, and isolate software-defined network, storage, security, and management functions.

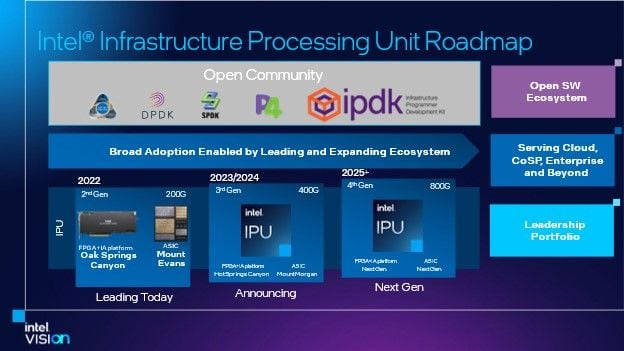

Intel IPU

Intel IPU is an advanced network device with hardened accelerators and Ethernet connections, which can use tightly coupled dedicated programmable cores to accelerate and manage infrastructure functions. IPU provides complete infrastructure offload, and acts as a host control point for running infrastructure applications, providing an additional layer of security. Using Intel IPU, all infrastructure services can be offloaded from the server to the IPU, freeing up server CPU resources, and also providing cloud service providers with an independent and secure control point.

In 2021, Intel announced Oak Springs Canyon and Mount Evans IPU products at Intel Architecture Day. Among them, Oak Springs Canyon is an FPGA-based IPU product, and Mount Evans IPU is an ASIC-based IPU product.

The Intel Oak Springs Canyon IPU is equipped with an Intel Agilex FPGA and a Xeon-D CPU. The Intel Mount Evans IPU is an SoC (System-on-a-Chip) jointly designed by Intel and Google. Mount Evans is divided into two main parts: the I/O subsystem and the computing subsystem. The network part uses an ASIC for packet processing, which delivers much higher performance and lower power consumption than an FPGA. The computing subsystem uses 16 ARM Neoverse N1 cores, which provide extremely strong computing capabilities.

DPU in Alibaba Cloud

Alibaba Cloud is also continually exploring DPU technology. At the Alibaba Cloud Summit in 2022, Alibaba Cloud officially released the cloud infrastructure processor CIPU, which is based on the Shenlong architecture. The predecessor of CIPU is the MoC card (Micro Server on a Card), which meets the definition of a DPU in terms of function and positioning. The MoC card has independent I/O, storage, and processing units, and handles network, storage, and device virtualization. The first- and second-generation MoC cards solved the zero-overhead problem for compute virtualization in the narrow sense, while the network and storage parts of virtualization were still implemented in software. The third-generation MoC card hardened some network forwarding functions, greatly improving network performance. The fourth-generation MoC card achieves full hardware offload of network and storage and also supports RDMA.

As a data center processor system designed for the Feitian system, Alibaba Cloud CIPU is significant for Alibaba Cloud’s effort to build a new generation of complete software and hardware cloud computing architecture.

DPU in Volcano Engine

Volcano Engine is also continually pursuing a self-developed DPU. Its DPU adopts integrated software and hardware virtualization technology, aiming to provide users with elastic, scalable, high-performance computing services. Among Volcano Engine’s elastic computing products, the second-generation elastic bare metal server and the third-generation cloud server are equipped with self-developed DPUs, which have been extensively verified in product capabilities and application scenarios.

The second-generation EBM instance, officially commercialized in 2022, was the first to carry Volcano Engine’s self-developed DPU. It retains the stability and security advantages of traditional physical machines, including secure physical isolation, while also offering the elasticity and flexibility of virtual machines, making it a new generation of high-performance cloud server. The third-generation ECS instance, released in the first half of 2023, combines Volcano Engine’s latest self-developed DPU architecture with its self-developed virtual switch and virtualization technology, greatly improving network and storage IO performance.
