In the fast-growing field of artificial intelligence (AI), capable hardware is key to staying competitive. NVIDIA DGX servers are widely used by organizations that want to accelerate their AI efforts. Built with deep learning in mind, they combine high GPU compute density with a tightly integrated software stack and are designed for demanding AI workloads. This post looks at the main features and benefits of the NVIDIA DGX series, covers its architecture and performance figures, and closes with suggestions on how to use it to build up your organization’s AI capability. Whether you are an IT decision maker, data scientist, or AI researcher, this article gives an overview of why the NVIDIA DGX server is regarded as a leading AI server for deep learning.

What is an AI Infrastructure, and How Does the NVIDIA DGX Fit In?

Understanding the AI Infrastructure Landscape

AI infrastructure refers to the entire system of hardware, software, and networking components needed to develop, test, deploy, and manage AI applications. It includes computing resources such as GPUs and CPUs, data storage systems, and machine learning frameworks such as TensorFlow and PyTorch. NVIDIA DGX sits within this ecosystem as a high-powered server optimized for deep learning performance and built specifically for AI workloads. With an advanced GPU architecture and an integrated software stack that supports large-scale models, it is a critical part of contemporary AI infrastructure, allowing enterprises to speed up their workflows and reach results sooner.

The Role of NVIDIA DGX in AI-Driven Workloads

NVIDIA DGX servers are built around NVIDIA GPUs and target AI-driven workloads. Their Tensor Cores are designed specifically to speed up the matrix operations at the heart of deep learning. The DGX A100, for instance, incorporates eight NVIDIA A100 Tensor Core GPUs and provides up to 5 petaFLOPS of AI performance.

One of the most notable advantages of the DGX series is its ability to handle large-scale AI models and massive datasets. The GPUs are connected through NVIDIA’s NVLink technology, which provides high-bandwidth, low-latency communication between them and enables faster processing of complex AI models than traditional servers can offer.
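To give a feel for why inter-GPU bandwidth matters, here is a minimal back-of-the-envelope sketch in Python. It uses the standard bandwidth-only cost model for a ring all-reduce, in which each GPU moves 2 × (N − 1) / N of the payload. The bandwidth constants and the gradient size are illustrative assumptions rather than measurements, and the model ignores latency and any overlap with computation.

```python
def ring_allreduce_seconds(payload_bytes: float, num_gpus: int, link_bytes_per_s: float) -> float:
    """Bandwidth-only estimate for a ring all-reduce (ignores latency and overlap)."""
    if num_gpus < 2:
        return 0.0
    # In a ring all-reduce each GPU sends and receives 2 * (N - 1) / N of the payload.
    traffic = 2 * (num_gpus - 1) / num_gpus * payload_bytes
    return traffic / link_bytes_per_s


# Illustrative assumptions, not measurements:
GRADIENT_BYTES = 340e6 * 2    # ~340M parameters stored as 2-byte FP16 values
NVLINK_BYTES_PER_S = 300e9    # assumed per-direction NVLink bandwidth per GPU
PCIE_BYTES_PER_S = 32e9       # assumed per-direction PCIe Gen4 x16 bandwidth

nvlink_s = ring_allreduce_seconds(GRADIENT_BYTES, 8, NVLINK_BYTES_PER_S)
pcie_s = ring_allreduce_seconds(GRADIENT_BYTES, 8, PCIE_BYTES_PER_S)
print(f"NVLink: {nvlink_s * 1e3:.2f} ms, PCIe: {pcie_s * 1e3:.2f} ms per gradient exchange")
```

Under these assumptions the gap is simply the ratio of the two link bandwidths; real systems will differ, but the sketch shows why gradient exchange can dominate step time on slower interconnects.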

The software stack on an NVIDIA DGX system further optimizes AI work. It comprises development tools and libraries, and it supports popular deep learning frameworks such as TensorFlow, PyTorch, and MXNet running on the NVIDIA A100 GPUs. This comprehensive software environment improves productivity and simplifies the deployment and management of AI models.

For example, internal benchmarks show that the DGX A100 can cut the training time a BERT-like model needs to converge from weeks to only a few days. Such acceleration not only shortens time-to-insight but also allows more frequent iterations and experiments, which leads to more accurate and effective models.

To summarize, a robust architecture, an integrated software stack, and high-performance hardware make DGX systems a strong foundation for any organization that wants to scale its AI-driven workloads efficiently.

Why Choose NVIDIA DGX™ for AI

Choosing NVIDIA DGX for AI brings several benefits: current hardware technology, an all-in-one integrated software solution, and strong support for AI development. DGX systems are designed for accelerated AI training and inference and are powered by NVIDIA A100 Tensor Core GPUs, which deliver industry-leading performance. These GPUs are interconnected through NVLink, which provides the high-speed communication needed to process massive amounts of data efficiently within AI models. In addition, the NVIDIA AI Enterprise suite simplifies deploying and managing different kinds of AI workloads and is compatible with major frameworks, including TensorFlow and PyTorch. Together, these capabilities help enterprises speed up their AI projects, reduce development time, and improve the accuracy and reliability of the models they use across industries, which makes NVIDIA DGX™ a compelling option for businesses investing in AI.

Which GPUs Power the NVIDIA DGX Servers?

Overview of NVIDIA Tesla GPUs

NVIDIA’s Tesla GPUs were made for scientific computing, data analytics, and large-scale AI work. The range includes the V100, T4, and P100, all built to provide high computational power and memory bandwidth. The Volta-based V100 targets AI and HPC workloads; it has 16 GB or 32 GB of HBM2 memory and 640 Tensor Cores, giving strong performance on both training and inference. The Turing-based T4 is known for its energy efficiency and supports diverse workloads, including inference, training, and video transcoding. The Pascal-based P100 can handle large-scale analytics and other demanding computations with 16 GB of HBM2 memory and 3584 CUDA cores. Among DGX servers, the DGX-1 shipped first with P100 and later with V100 GPUs, which made it a good choice for shortening AI development cycles and accelerating high-performance computing.

Exploring the Capabilities of the A100 and V100 GPUs

Capabilities of NVIDIA A100 GPU

The NVIDIA A100 GPU is built on the Ampere architecture and represents a major step forward in GPU technology, both in raw performance and in how flexibly it can be partitioned and scaled. It carries up to 80 GB of HBM2e memory with roughly 2 TB/s of memory bandwidth (the 40 GB HBM2 version provides about 1.6 TB/s), which allows it to handle data-heavy operations. Using Multi-Instance GPU (MIG) technology, a single A100 can be divided into as many as seven smaller GPU instances that are fully isolated from each other, each with its own cache, compute cores, and high-bandwidth memory. This feature improves infrastructure-as-a-service (IaaS) capabilities and enables efficient execution of different computing workloads side by side. The A100 also has 432 third-generation Tensor Cores and 6912 CUDA cores and offers better performance per watt than previous models, making it well suited to AI training, inference, and HPC.
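The seven-instance limit follows from how MIG slices the GPU. The sketch below is a simplified model of that arithmetic for an A100 40 GB, assuming 7 compute slices and 8 memory slices of 5 GB each and using the profile names from NVIDIA’s MIG documentation. It ignores placement rules and mixed-profile layouts, so treat it as an illustration rather than a scheduler.

```python
# (compute slices, memory slices) consumed by each MIG profile on an A100 40 GB.
# Simplified model: the GPU exposes 7 compute slices and 8 memory slices of 5 GB.
A100_40GB_PROFILES = {
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}
TOTAL_COMPUTE_SLICES = 7
TOTAL_MEMORY_SLICES = 8


def max_instances(profile: str) -> int:
    """How many instances of one profile fit on a single GPU in this model."""
    compute, memory = A100_40GB_PROFILES[profile]
    return min(TOTAL_COMPUTE_SLICES // compute, TOTAL_MEMORY_SLICES // memory)


for name in A100_40GB_PROFILES:
    print(f"{name}: up to {max_instances(name)} instance(s) per GPU")
```

The smallest profile is the one that yields seven isolated instances; larger profiles trade instance count for more compute and memory per instance.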

Capabilities of NVIDIA V100 GPU

Powered by the Volta architecture, the NVIDIA V100 GPU remains a powerful option for AI and high-performance computing (HPC) tasks. It has 640 Tensor Cores and 5120 CUDA cores, and its 16 GB or 32 GB of HBM2 memory provides a memory bandwidth of about 900 GB/s. The V100’s Tensor Cores are designed for deep learning workloads and deliver up to about 125 teraFLOPS of mixed-precision performance during network training. The card suits both enterprises and scientific research institutions because it supports mixed-precision arithmetic, which speeds up computation with little or no loss of model accuracy. Thanks to its computational power and large memory capacity, it can handle diverse tasks, from massive simulations to real-time analysis with complex algorithms.
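Mixed precision works because low-precision inputs are combined with a higher-precision accumulator. The following sketch emulates that idea in plain Python using the standard `struct` module to round values to half and single precision. It is only an illustration of the numerical effect, not a model of how Tensor Cores are implemented, and the step size and iteration count are arbitrary choices.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


STEP = to_fp16(1e-4)  # a small FP16 value, standing in for one product term
N = 10_000            # the exact sum is therefore close to 1.0

fp16_sum = 0.0
mixed_sum = 0.0
for _ in range(N):
    fp16_sum = to_fp16(fp16_sum + STEP)    # FP16 input, FP16 accumulator
    mixed_sum = to_fp32(mixed_sum + STEP)  # FP16 input, FP32 accumulator

print(f"FP16 accumulator: {fp16_sum:.4f}")
print(f"FP32 accumulator: {mixed_sum:.4f}")
```

With a half-precision accumulator the running total stalls once each increment falls below the rounding step, while the single-precision accumulator stays close to the true sum. That is the reason mixed-precision training keeps accumulations in FP32.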

By integrating these state-of-the-art GPUs into their DGX servers, NVIDIA has enabled organizations to handle any type of AI workload or HPC task more quickly and efficiently than ever before.

Performance Benchmarks of NVIDIA DGX A100 and NVIDIA DGX H100

The NVIDIA DGX A100 and DGX H100 have set performance benchmarks for AI and HPC. With eight A100 Tensor Core GPUs, the DGX A100 reaches a peak AI performance of up to 5 petaFLOPS. The system is well suited to mixed-precision computing and performs well in both AI training and inference. It also supports Multi-Instance GPU (MIG) technology, which partitions one GPU into several instances for different workloads and significantly improves throughput and utilization.
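The 5 petaFLOPS figure is a theoretical peak, and it can be reproduced with simple arithmetic. The sketch below assumes the headline number is derived from the A100’s published peak FP16 Tensor Core throughput with structured sparsity (624 teraFLOPS per GPU); sustained performance on real workloads will be lower.

```python
# Assumption: per-GPU peak for FP16 Tensor Core math with structured sparsity.
A100_PEAK_TFLOPS_FP16_SPARSE = 624
GPUS_PER_DGX_A100 = 8


def aggregate_petaflops(per_gpu_tflops: float, num_gpus: int) -> float:
    """Sum per-GPU peak throughput and convert teraFLOPS to petaFLOPS."""
    return per_gpu_tflops * num_gpus / 1000


total = aggregate_petaflops(A100_PEAK_TFLOPS_FP16_SPARSE, GPUS_PER_DGX_A100)
print(f"{total:.3f} petaFLOPS peak")
```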

The NVIDIA DGX H100 surpasses those benchmarks with the newer H100 GPUs. These GPUs offer up to 60 teraFLOPS of double-precision performance and shorten training times for deep learning models. They include fourth-generation Tensor Cores designed for both sparse and dense matrix calculations. NVLink and NVSwitch increase data throughput between GPUs, and this improved inter-GPU communication further raises the system’s overall computational capability.

Both systems were built with the toughest computational tasks in mind, which makes them valuable tools for organizations involved in advanced AI research, big data analysis, or complex simulations. They have established themselves at the top of the high-end AI and high-performance computing landscape.

How Does the NVIDIA DGX™ Enhance AI and Deep Learning?

Accelerating Deep Learning with NVIDIA DGX

NVIDIA DGX™ systems accelerate deep learning by pairing high-performance hardware with integrated software. They ship with GPUs designed for AI workloads, such as the A100 and H100, and use technologies such as Tensor Cores, Multi-Instance GPU (MIG), NVLink, and NVSwitch to increase computational efficiency, throughput, and inter-GPU communication speed. This configuration shortens training times for complex deep learning models and improves inference performance. In short, the combination of DGX hardware and software improves both the speed and the scalability of deep learning work.

Use Cases of NVIDIA DGX in AI Research and Industry

NVIDIA DGX™ systems drive AI research and business applications with their computational power and efficiency. Here are some example use cases:

Medical Imaging and Diagnostics

NVIDIA DGX systems have helped make diagnoses in medical imaging faster and more accurate. By working through large amounts of medical data, including MRI and CT scans, AI models trained on DGX can detect anomalies and support early-stage diagnosis with higher precision. A research study from Stanford University reportedly found that AI algorithms run on a DGX A100 reached 92% accuracy in detecting pneumonia from chest X-rays, which is far better than traditional methods.

Autonomous Vehicles

NVIDIA DGX systems are relied upon heavily for the development and improvement of autonomous driving technology. The computational power of the DGX platform makes it possible to process the data collected from the many sensors in self-driving cars. Deep learning models for object detection, path planning, and decision-making, such as those used by companies like Tesla and Waymo in self-driving vehicles, are trained on DGX, which ultimately leads to safer self-driving cars.

Natural Language Processing (NLP)

NVIDIA DGX systems advance NLP applications such as chatbots, translation services, and virtual assistants. Large language models in the class of OpenAI’s GPT-3, for instance, must process huge volumes of text in a short period, which is the kind of workload the DGX H100 architecture is built for; the result is language generation that is more coherent and contextually accurate. Training that used to take weeks can now be completed in days, accelerating innovation in AI-driven communication tools.

Financial Modeling & Risk Management

In the finance industry, quick analysis of market trends and risk assessment rely on the fast, deep learning-oriented computation that systems such as the NVIDIA DGX A100 provide. Quantitative analysts use DGX to process high-frequency trading data, predict stock movements, and build robust risk management frameworks. JP Morgan reportedly cut the computation time of its risk models by 40%, which in turn enabled quicker, more accurate financial decision-making.

Climate Science & Weather Prediction

Simulating atmospheric phenomena is computationally heavy, which is why climate scientists use NVIDIA DGX systems for weather forecasting. The computational power of systems such as the NVIDIA DGX A100 lets them simulate complex atmospheric phenomena accurately. The European Centre for Medium-Range Weather Forecasts (ECMWF) reportedly improved the reliability and accuracy of its forecasts by adopting DGX, recording up to 20% higher forecast accuracy for severe events such as hurricanes, a critical step towards preparedness for such natural catastrophes.

These are just a few of the many use cases the NVIDIA DGX platform has made possible, bringing far-reaching changes to a wide range of fields that rely on AI and deep learning.

Success Stories: NVIDIA DGX in Action

Healthcare and Medical Investigation

Mayo Clinic uses NVIDIA DGX systems to speed up its AI-based medical research. It has used the DGX A100 to process large datasets, which has helped it create more sophisticated diagnostic models for better patient care. For example, the time taken to process medical imaging data has been significantly reduced, resulting in quicker and more precise diagnoses.

Autonomous Vehicles

Waymo, one of the leading autonomous vehicle developers, applies NVIDIA DGX solutions in training and validating its self-driving technology. The computational power of DGX makes it possible for Waymo to process the enormous quantities of sensor data its autonomous driving systems generate. This translates into more reliable self-driving cars that can be deployed widely in less time.

Pharmaceutical Development

AstraZeneca uses NVIDIA DGX systems to fast-track drug discovery. Integrating the DGX A100 into its research workflows simplifies the analysis of complex biological data and speeds up the identification of potential drug candidates. The result is a more efficient development pipeline that brings new drugs to market faster.

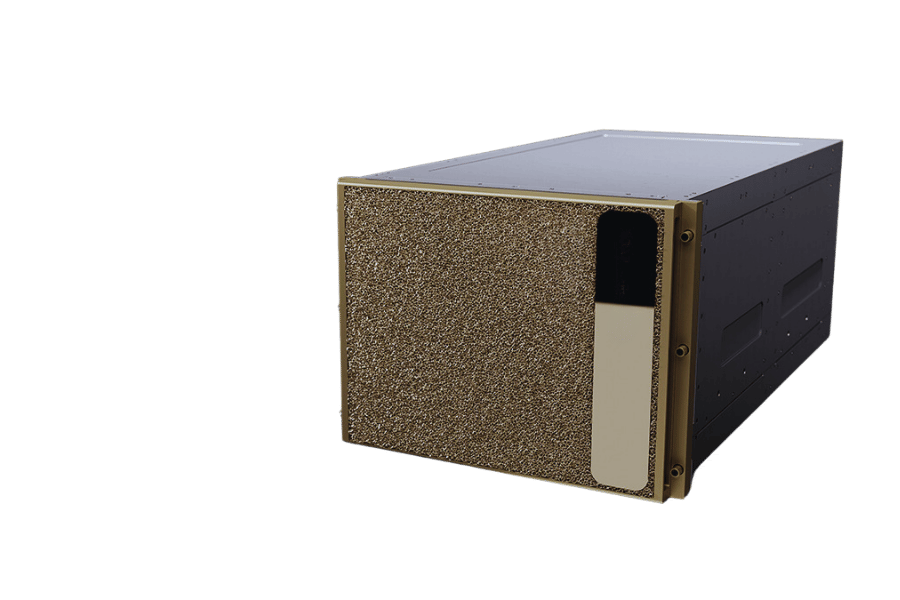

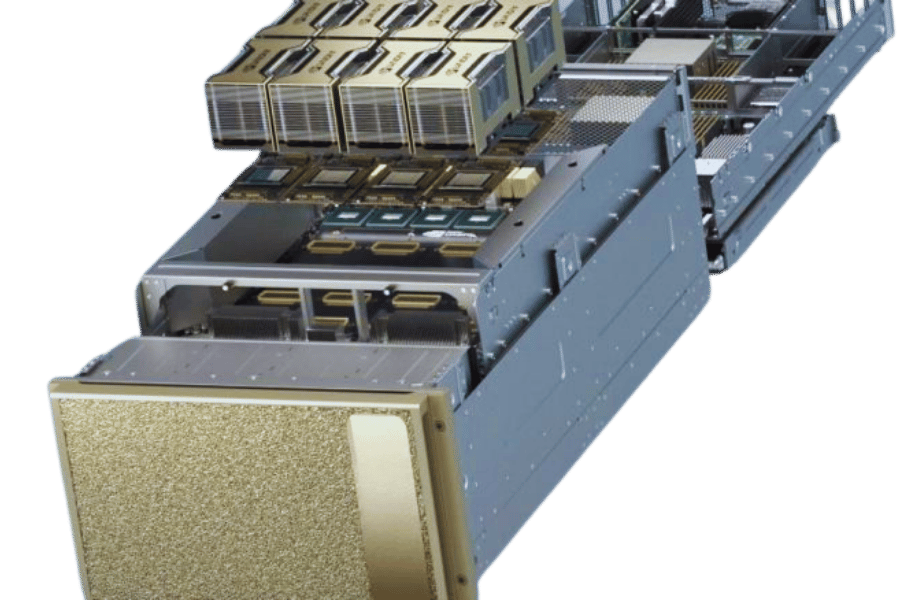

What are the Key Features of the DGX A100 Server?

Technical Specifications of the DGX A100

The NVIDIA DGX A100 is a major step in AI computing infrastructure in terms of performance and flexibility. The specifications below show its technical capabilities:

- GPU Architecture: NVIDIA Ampere, implemented in NVIDIA A100 Tensor Core GPUs.

- Number of GPUs: Eight NVIDIA A100 Tensor Core GPUs operating in parallel.

- GPU Memory: 40 GB of high-bandwidth memory (HBM2) per GPU, for a total of 320 GB of GPU memory.

- Performance: Up to 5 petaFLOPS of AI performance for AI and high-performance computing workloads.

- CPU: Two AMD EPYC 7742 processors, each with 64 cores and a base clock speed of 2.25 GHz, to handle CPU-bound tasks.

- System Memory: 1 TB as standard, expandable to 2 TB for memory-intensive applications.

- Networking: Eight 200 Gb/s InfiniBand connections, which allow fast data transfer between multiple DGX systems within a data center.

- Storage: NVMe SSD storage, 15 TB in the 320 GB configuration (30 TB in the 640 GB configuration), optimized for fast access and high throughput so that I/O keeps up with data-heavy jobs such as machine learning training.

- Power Consumption: A maximum of 6.5 kW, so power and cooling must be planned carefully.

- Software: Ships with NVIDIA’s software stack, including the CUDA toolkit, and supports popular frameworks such as TensorFlow and PyTorch, which reduces the amount of additional software installation and configuration.

The NVIDIA DGX A100 achieves scalability and versatility in AI research, development, and deployment across industries by combining these advanced features.
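When a system arrives, it can be useful to confirm that the GPU inventory matches the specification. The sketch below parses the CSV output of `nvidia-smi --query-gpu=name,memory.total --format=csv,noheader,nounits` and totals the GPU memory. The `SAMPLE` text is hypothetical output used in place of a live query, and the helper names are illustrative; on a real machine the reported memory per GPU may differ slightly from the nominal 40 GB.

```python
import subprocess

QUERY = ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"]


def parse_gpu_inventory(csv_text: str) -> list[tuple[str, int]]:
    """Parse 'name, memory_in_MiB' lines into (name, MiB) tuples."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, mem_mib = (field.strip() for field in line.rsplit(",", 1))
        gpus.append((name, int(mem_mib)))
    return gpus


def read_gpu_inventory() -> list[tuple[str, int]]:
    """Query the local machine (requires an installed NVIDIA driver)."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_inventory(out)


# Hypothetical output for an eight-GPU system, used instead of a live query.
SAMPLE = "\n".join(["NVIDIA A100-SXM4-40GB, 40960"] * 8)
inventory = parse_gpu_inventory(SAMPLE)
total_gib = sum(mib for _, mib in inventory) / 1024
print(f"{len(inventory)} GPUs, {total_gib:.0f} GiB total GPU memory")
```

Calling `read_gpu_inventory()` on the server itself replaces the sample with real data.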

Benefits of the DGX A100 for Enterprise AI

The NVIDIA DGX A100 is a great choice for enterprise AI applications because it has a lot of advantages:

- Unmatched Performance: The DGX A100 can process complex AI workloads with up to 5 petaFLOPS of computing power, which greatly reduces training time and speeds up the time to insight.

- Scalability: With system memory expandable to 2 TB and integrated 200 Gb/s InfiniBand connections, the DGX A100 scales easily across multiple units as data grows and computational needs increase.

- Versatility: Dual AMD EPYC processors, support for major AI frameworks, and NVIDIA’s optimized software stack make the DGX A100 suitable for a range of AI tasks, from model training to inference, as well as high-performance computing.

- Efficiency: Although it draws up to 6.5 kW at maximum load, its architecture delivers high performance per watt.

- Integration and Management: The DGX A100 comes pre-configured with NVIDIA’s software stack, which simplifies the deployment and management of AI workloads in an enterprise setting and streamlines development and operations pipelines.

These benefits make the DGX A100 a strong contender for organizations that want to get the most out of AI technology.

Comparing DGX A100 with DGX-1

In comparison to its previous iteration, DGX-1, there are several advancements and enhancements in the DGX A100:

- Performance: The DGX A100 offers up to 5 petaFLOPS, far more than the 1 petaFLOPS of the DGX-1. This fivefold increase in computing power allows more complex AI workloads to run and shortens processing times.

- Memory and Scalability: While the DGX-1 is limited to 512 GB of system memory, the DGX A100 supports as much as 2 TB. Its InfiniBand connectivity is also faster, at 200 Gb/s per port compared with 100 Gb/s on the older model, which greatly improves scaling when multiple units are integrated.

- Architecture and Versatility: The two products are built on different GPU architectures: Pascal, and later Volta, for the DGX-1 and Ampere for the DGX A100. Apart from increasing raw performance, the newer architecture also handles a wider variety of AI and high-performance computing tasks.

- Energy Efficiency: The DGX A100 draws about twice as much power as the DGX-1 (6.5 kW against 3.2 kW) but delivers several times the performance, so its performance per watt is higher.

- Software and Integration: Both systems come pre-configured with NVIDIA’s software stack, although the DGX A100 benefits from newer updates aimed at machine learning use cases, which make deployment easier and improve the management of AI workloads, especially for bulk operations such as training on large datasets.
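The energy-efficiency comparison can be checked with a few lines of arithmetic. The sketch below uses only the peak performance and maximum power figures quoted in this comparison. Note that the two peak numbers are marketing figures that may not refer to the same numeric precision, so the ratio is indicative rather than a measured result.

```python
# Peak performance and maximum power as quoted in the comparison above.
SYSTEMS = {
    "DGX-1": {"petaflops": 1.0, "max_kw": 3.2},
    "DGX A100": {"petaflops": 5.0, "max_kw": 6.5},
}


def petaflops_per_kw(spec: dict) -> float:
    """Peak petaFLOPS delivered per kilowatt of maximum power draw."""
    return spec["petaflops"] / spec["max_kw"]


for name, spec in SYSTEMS.items():
    print(f"{name}: {petaflops_per_kw(spec):.3f} petaFLOPS per kW")

ratio = petaflops_per_kw(SYSTEMS["DGX A100"]) / petaflops_per_kw(SYSTEMS["DGX-1"])
print(f"Efficiency gain: {ratio:.2f}x")
```

On these figures the newer system draws roughly twice the power for five times the peak performance, which works out to a bit under 2.5 times the peak performance per kilowatt.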

In short, the DGX A100 is the better choice for organizations that need the extra performance, memory, and networking headroom described above, while the DGX-1 remains adequate for less demanding workloads.

How to Shop the NVIDIA DGX Series with Confidence?

Shipping and Handling of DGX AI Servers

To ensure a smooth purchase and delivery of a DGX AI server, keep the following points in mind:

- Packaging Specifications: Servers are packaged securely to withstand shipping, so that all parts arrive undamaged and operational.

- Delivery Timelines: Delivery can take from a few days to several weeks depending on availability and destination; verify the estimated arrival date when you purchase.

- Handling Requirements: DGX servers are large, heavy appliances that need professional handling; make sure your receiving point has unloading facilities and the workforce and equipment needed for setup.

- Pre-installation Checks: Confirm that the site meets the infrastructure requirements for installing and operating the server, including adequate power supply, cooling, and network connectivity.

- Post-Delivery Support: NVIDIA provides extensive support after delivery, including installation advice and technical help, to ease integration into existing setups.
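For the pre-installation power and cooling check, a rough sizing calculation can help before talking to the facilities team. The sketch below assumes the 6.5 kW maximum draw quoted for the DGX A100 and a hypothetical 20 kW rack power budget; the rack budget and function names are illustrative, and the heat figure uses the standard conversion of about 3,412 BTU/hr per kilowatt.

```python
import math

MAX_POWER_KW = 6.5           # maximum draw per DGX A100, as quoted in this article
BTU_PER_HR_PER_KW = 3412.14  # standard conversion from kW to BTU/hr


def units_per_rack(rack_budget_kw: float, unit_kw: float = MAX_POWER_KW) -> int:
    """How many systems fit within a rack's power budget at maximum draw."""
    return math.floor(rack_budget_kw / unit_kw)


def heat_load_btu_per_hr(units: int, unit_kw: float = MAX_POWER_KW) -> float:
    """Worst-case heat the cooling system must remove for the given unit count."""
    return units * unit_kw * BTU_PER_HR_PER_KW


RACK_BUDGET_KW = 20.0  # hypothetical rack power budget
count = units_per_rack(RACK_BUDGET_KW)
print(f"{count} units per rack, {heat_load_btu_per_hr(count):,.0f} BTU/hr of heat")
```

Sizing against maximum draw is conservative; actual consumption depends on the workload.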

Understanding Enterprise Support and Warranty Options

NVIDIA ensures that your DGX AI servers operate at maximum performance for the longest time possible by offering strong enterprise support and comprehensive warranty options. Below are some of the major support services they provide:

- Technical Assistance: You can contact NVIDIA’s technical support team 24/7. Its experts are trained to resolve software, hardware, and system configuration issues.

- Warranty Coverage: Standard warranties cover the repair or replacement of parts that fail because of defects in materials or workmanship. Additional warranty options can be purchased for extended protection.

- Software Updates: NVIDIA regularly releases software and firmware updates that improve security and keep system performance and compatibility in line with current AI tools and technologies.

- On-Site Support: If an issue needs immediate attention, on-site professional assistance can be provided under the applicable service level agreement, depending on how critical the issue is.

- Training and Resources: Extensive documentation and training modules are available so that teams can learn about these systems and how to use them efficiently.

Knowing what support is available helps enterprises keep their AI infrastructure reliable and efficient and minimize downtime.

Tips for Purchasing from Authorized Dealers

When organizations buy high-value enterprise hardware such as NVIDIA DGX AI servers, they should purchase from authorized dealers to ensure authenticity, quality service, and post-sales support. Keep the following points in mind:

- Verify the dealer’s authorization: Deal only with sellers that are registered as NVIDIA-approved. You can verify this on NVIDIA’s official website or by contacting its support team.

- Review online ratings and reviews: Independent review platforms provide customer ratings that help you judge a vendor’s reputation. High ratings and positive reviews usually indicate reliable service and support.

- Confirm warranty and support provisions: Make sure your seller provides every service NVIDIA lists as part of its warranty coverage; only authorized dealers offer official warranty coverage and comprehensive support.

- Assess after-sales service: Find out what support is offered after purchase, both its availability and its quality. Authorized dealers usually provide a technical helpdesk, training, and other resources.

- Keep your documents: Retain copies of purchase receipts, agreements, and any other records that may be useful when making a claim or seeking assistance later.

By following these guidelines, enterprises can obtain genuine products with full warranty entitlements and get the most out of their NVIDIA AI investment through enhanced support.

Why Consider DGX Cloud for AI Compute Needs?

Advantages of DGX Cloud for Scaling AI Infrastructure

There are many notable benefits to DGX Cloud that help scale AI infrastructure:

- On-Demand Scalability: Computing power can be scaled quickly according to requirements, which removes the need for large amounts of physical infrastructure.

- Managed Services: The environment is fully managed by professionals, which minimizes operational overhead and lets teams concentrate on development and deployment.

- Superior Performance: NVIDIA’s high-performance GPUs, designed specifically for AI workloads, keep models and applications running at high performance.

- Security: Robust security measures and encryption protocols protect data and help meet compliance standards.

- Worldwide Availability: AI computing resources can be accessed from any location with internet connectivity, which improves collaboration among teams in different regions.

Integration of NVIDIA AI Enterprise and DGX Cloud

For a business that wants to improve and expand its AI capabilities, integrating DGX Cloud with NVIDIA AI Enterprise is a natural fit. NVIDIA AI Enterprise provides a broad range of AI and data analytics tools that run on NVIDIA’s GPU-accelerated infrastructure. The combination offers the following advantages:

- Single Platform: Deploying, managing, and scaling AI workloads up or down becomes easier.

- Better Performance: NVIDIA’s AI software stack is combined with its high-performance GPUs, which results in faster processing and lower latency.

- Scalability: Because DGX Cloud’s infrastructure is hosted in the cloud, an organization can easily adjust the resources allocated to machine learning as demand fluctuates.

- Management Simplicity: Integrated management tools streamline administrative tasks, reducing operational complexity and saving time and effort.

- Improved Security: Security coverage is designed to meet industry standards, so that sensitive machine learning workflows and their datasets are protected.

By bringing these two offerings together, businesses can create an environment where innovation happens quickly, supported by a solid foundation for operating and scaling AI systems.

Examples of DGX Cloud Deployments in the Industry

Industries use DGX Cloud to promote innovation and efficiency in a number of ways:

- Healthcare: Using DGX Cloud, medical institutions can accelerate drug discovery, medical imaging, and genomic analysis. Its high-performance computing capability lets them sift through massive amounts of data quickly, which speeds up the creation of new treatments and personalized medicine.

- Finance: Financial firms apply DGX Cloud to areas such as algorithmic trading, fraud detection, and risk management. By integrating advanced AI and machine learning models, they can process large volumes of financial data more accurately and quickly, which gives them an advantage in the market.

- Automotive: The automotive industry relies on DGX Cloud for the development of autonomous vehicles and advanced driver-assistance systems (ADAS). With this cloud-based infrastructure, complex driving scenarios can be simulated, AI models can be trained, and vehicle safety and performance can be improved.

These examples show how widely DGX Cloud is applied across sectors and how scalable AI solutions optimized for it can enable transformative breakthroughs.

Frequently Asked Questions (FAQs)

Q: What is NVIDIA DGX, and how does it help develop AI?

A: The NVIDIA DGX is an AI server built to speed up deep learning and generative AI workflows. It is built around state-of-the-art Tensor Core GPUs and offers performance suited to demanding research and engineering work. The DGX also provides strong support for AI projects through the NVIDIA AI Enterprise software suite.

Q: What are the various models available in NVIDIA DGX?

A: NVIDIA produces several DGX models, including the NVIDIA DGX-1, DGX Station, and DGX Station A100, along with newer systems such as the DGX A100 and DGX H100. Each model offers a different level of processing power and storage for handling complex tasks.

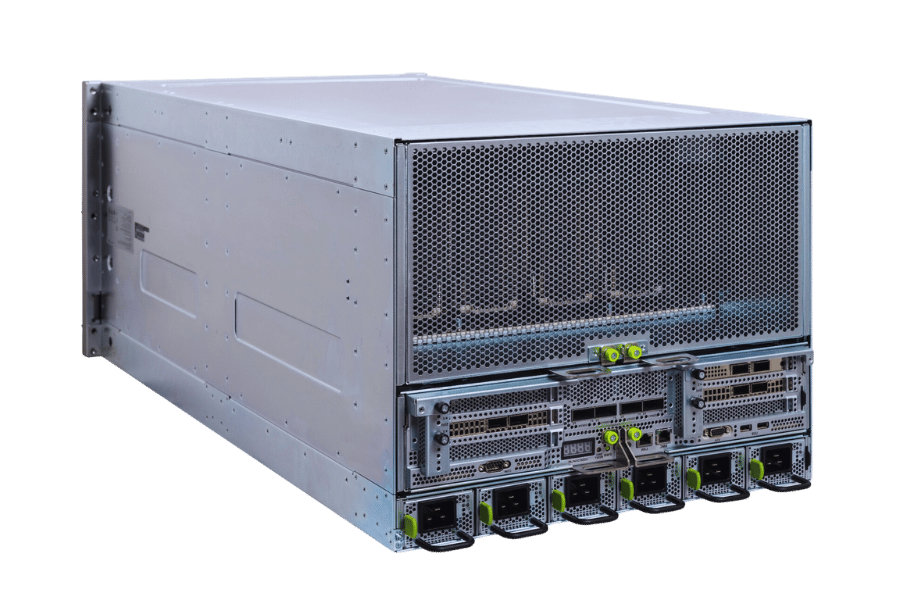

Q: How does NVIDIA DGX SuperPOD™ improve AI performance?

A: NVIDIA DGX SuperPOD™ combines many DGX systems into a single AI supercomputer with far more computing power than an individual server. This design also provides higher processing speeds, which makes it suitable for big data applications that involve large-scale machine learning or deep neural networks.

Q: Which type of GPUs does the company include in its products, like NVIDIA DGX?

A: DGX systems use high-end data center GPUs: the DGX-1 carries eight Tesla V100 GPUs, and newer systems use the A100 and the more recent H100. All of these GPUs were created for high-performance computing (HPC), and they also support AI projects based on large-scale data processing and generative models.

Q: Can you explain what “NVIDIA DGX Station” means and suggest some fields where it could be used?

A: NVIDIA DGX Station is an AI workstation designed for office environments. Its computing capabilities are similar to those found in data centers, which makes it useful for scientists and engineers who want to build and test AI models efficiently without a server room. The newest model, the DGX Station A100, was developed for data science and AI research.

Q: What are the specs of NVIDIA DGX-1?

A: The V100-based DGX-1 combines eight Tesla V100 GPUs, 512 GB of system memory, and two Intel Xeon E5-2698 v4 CPUs, a configuration aimed at maximum computing efficiency and power for AI tasks.

Q: How does NVIDIA Base Command™ contribute to DGX operations?

A: NVIDIA Base Command™ is a management platform for AI workloads that simplifies operating and monitoring DGX systems. It lets users track project progress, manage resources, and optimize performance across their DGX servers.

Q: What role does the NVIDIA H100 system play in AI advancements?

A: The NVIDIA H100 is a Tensor Core GPU built on the NVIDIA Hopper architecture, and NVIDIA positions it among its most advanced data center GPUs. It substantially speeds up AI training and generative AI workloads, which makes it well suited to cutting-edge AI research and applications.

Q: What considerations should I have when buying a DGX system?

A: When purchasing a DGX system, consider the delivery logistics: the destination ZIP code, the time of order acceptance, and the shipping service selected. Delivery can vary with these factors, depending on where you are located and which service best suits your needs.
