The NVIDIA DGX platform is a cornerstone of artificial intelligence (AI) and high-performance computing (HPC), built for data-intensive workloads. The NVIDIA DGX H200, powered by eight H200 Tensor Core GPUs connected through NVLink 4.0 and NVSwitch, sits at the top of the Hopper-generation DGX portfolio and is used by organizations like OpenAI to push the boundaries of AI innovation. This guide explores the DGX H200’s architectural advancements, performance metrics, and applications, providing insights for data center architects and AI researchers. Whether you’re scaling AI model training or optimizing enterprise workloads, understanding the DGX H200’s capabilities helps you plan infrastructure that keeps pace with AI development. Read on for Fibermall’s analysis of why DGX systems are a common choice for cutting-edge computing.

Demand for computational power has grown with the continued development of artificial intelligence across sectors. For AI research and development, the NVIDIA DGX H200 is among the strongest options as far as performance and scalability are concerned. This article looks at the features and functionality of the DGX H200, how it compares with other systems, and its delivery to OpenAI. We examine its architectural enhancements, its performance metrics, and its effect on AI workloads, and show why that delivery matters for the wider advancement of AI.

What Is the NVIDIA DGX H200?

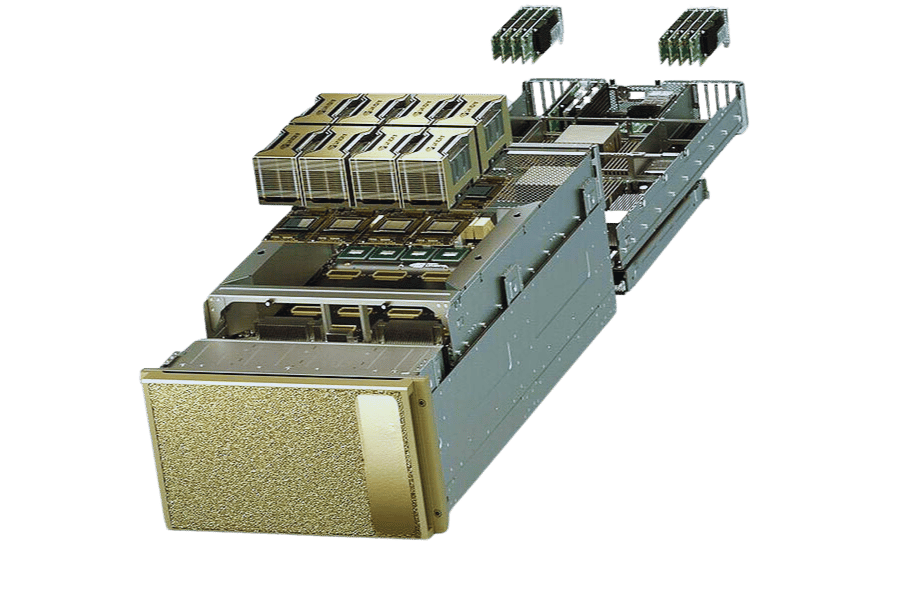

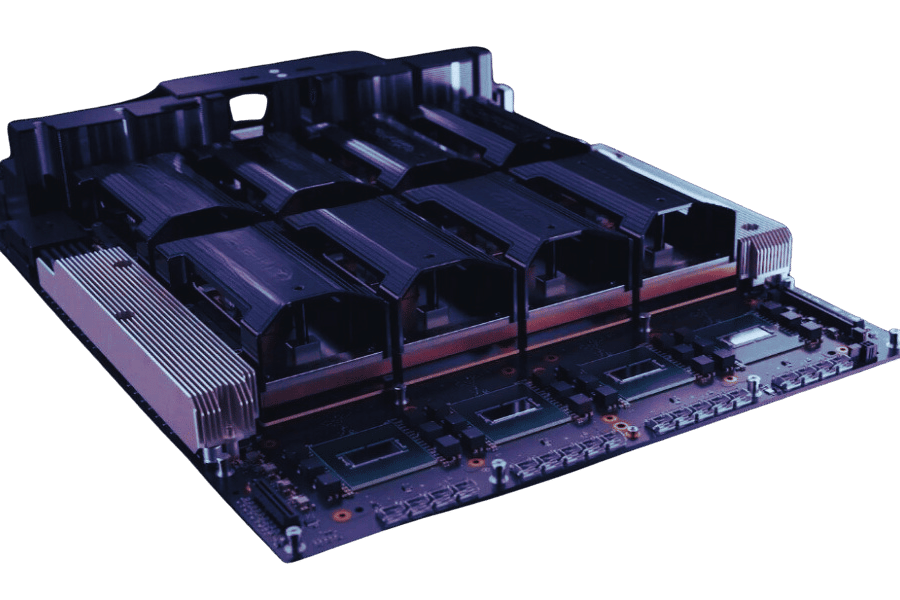

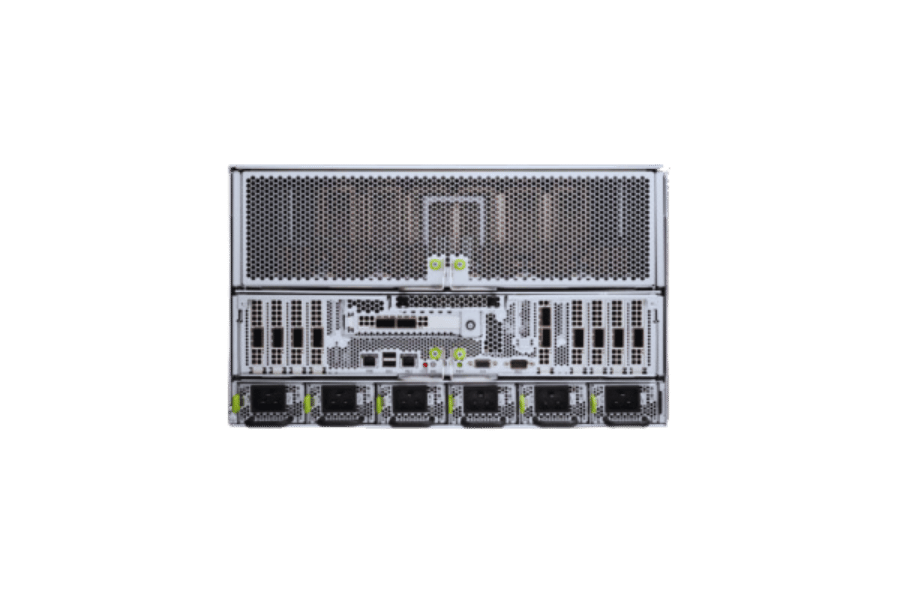

The NVIDIA DGX H200 is an AI supercomputer designed for the most demanding deep learning and machine learning workloads. Built on NVIDIA’s Hopper architecture, the DGX H200 integrates eight H200 Tensor Core GPUs, delivering up to 32 petaFLOPS of FP8 performance across the system and 1,128 GB of total GPU memory (141 GB of HBM3e per GPU). Unlike a standalone GPU such as the H100 or H200, the DGX H200 is a fully integrated system with high-speed NVLink 4.0 interconnects (900 GB/s per GPU) and third-generation NVSwitch for scalable GPU communication. The system is air-cooled, so power and airflow should be planned accordingly. Its high memory bandwidth (4.8 TB/s per GPU) and NVIDIA’s AI software stack optimize performance for large-scale AI models and HPC tasks, as highlighted by its delivery to OpenAI for cutting-edge AI research.

Exploring the NVIDIA DGX H200 Specifications

The DGX H200 is an AI supercomputer built by NVIDIA to handle deep learning and other intensive machine learning workloads. Its eight NVIDIA H200 Tensor Core GPUs let it train large neural networks far faster than general-purpose servers. High-speed NVLink interconnects move data between GPUs quickly, which speeds up multi-GPU computation. The architecture also provides the memory bandwidth and storage capacity needed to process complex datasets. The system is air-cooled, so sustained performance depends on adequate data center airflow and power. On specifications alone, the DGX H200 is a strong candidate for organizations that want to extend their AI capabilities.

How Does the DGX H200 Compare to the H100?

The NVIDIA DGX H200 shares the Hopper architectural foundation of the H100 Tensor Core GPU, with refinements aimed at AI-focused workloads. The H100 is a single GPU optimized for a range of AI tasks, whereas the DGX H200 is a complete system that combines eight H200 GPUs (Hopper GPUs with larger and faster memory than the H100) with a sophisticated system architecture around them. This enables parallel processing, which greatly increases throughput on large-scale computation. The DGX H200 also offers NVLink connectivity and higher memory bandwidth, improving inter-GPU communication and data handling when the GPUs work together. A single H100, by contrast, cannot scale on its own and may be insufficient for the heaviest workloads. Overall, because it was designed for resource-demanding projects, the DGX H200 is more powerful and efficient than a standalone GPU and is among the most capable AI platforms available.

What Makes the DGX H200 Unique in AI Research?

The DGX H200 stands out in AI research because it handles large datasets and complicated models quickly. Its modular design lets the architecture scale as research needs grow, which suits institutions using NVIDIA AI Enterprise solutions. High-performance Tensor Core GPUs optimized for deep learning dramatically reduce model training times and speed up inference. The software side matters as well: NVIDIA’s AI software stack improves usability and optimizes performance across the stages of AI research, such as data preparation and feature engineering. The result is a tool that is both powerful and easy to use for machine learning researchers who want to push the boundaries of their field through data analysis and experimentation, reaching results sooner and avoiding the cost of compensating for less efficient systems with additional equipment.

Benefits of NVIDIA DGX H200

The DGX H200 offers transformative advantages for AI, HPC, and enterprise applications:

- Unmatched Compute Power: Up to 32 petaFLOPS (FP8) across eight H200 GPUs for rapid AI training and inference.

- Scalability: NVSwitch supports up to 256 GPUs, ideal for large-scale DGX clusters like SuperPODs.

- High Bandwidth: NVLink 4.0 delivers 900 GB/s for low-latency GPU communication.

- Energy Efficiency: Larger, faster GPU memory helps memory-bound jobs finish sooner, which can lower energy per job; the DGX H200 itself is air-cooled.

- Optimized Software: NVIDIA AI Enterprise and GPU-optimized builds of frameworks such as TensorFlow and PyTorch streamline workflows.

- Versatility: Supports diverse workloads, from LLMs to scientific simulations, with Multi-Instance GPU (MIG) partitioning.

These benefits make the DGX H200 a preferred platform for organizations like OpenAI, accelerating AI innovation and research.

Applications of NVIDIA DGX H200

The DGX H200 powers high-performance applications across industries:

- Artificial Intelligence and Machine Learning: Accelerates training and inference for LLMs, as demonstrated by OpenAI’s deployment.

- High-Performance Computing (HPC): Supports simulations in physics, genomics, and climate modeling with DGX H200’s massive compute power.

- Data Analytics: Enables real-time processing of large datasets in GPU-accelerated databases using DGX H200.

- Scientific Research: Serves as a building block for DGX SuperPOD supercomputers, following the approach of NVIDIA Selene (which is built on DGX A100 systems), for computational breakthroughs.

- Enterprise AI Workloads: Scales AI deployments in data centers, optimizing inference and training with DGX H200.

These applications highlight the DGX H200’s role in driving innovation, making it a critical asset for AI and HPC ecosystems.

DGX H200 vs. DGX H100 and Other Systems

Comparing the DGX H200 to the DGX H100 and other systems clarifies its advancements:

| Feature | DGX H200 | DGX H100 | DGX A100 |
|---|---|---|---|
| GPU Architecture | Hopper (8x H200 GPUs) | Hopper (8x H100 GPUs) | Ampere (8x A100 GPUs) |
| Performance | 32 petaFLOPS (FP8, 8 GPUs) | 32 petaFLOPS (FP8, 8 GPUs) | 5 petaFLOPS (FP16 with sparsity, 8 GPUs) |
| Memory Bandwidth | 4.8 TB/s per GPU | 3.35 TB/s per GPU | 2 TB/s per GPU |
| NVLink Version | NVLink 4.0 (900 GB/s per GPU) | NVLink 4.0 (900 GB/s per GPU) | NVLink 3.0 (600 GB/s per GPU) |
| NVSwitch | 3rd-Gen (7.2 TB/s in-system aggregate) | 3rd-Gen (7.2 TB/s in-system aggregate) | 2nd-Gen (4.8 TB/s in-system aggregate) |
| Cooling | Air cooling | Air cooling | Air cooling |
| Use Case | LLMs, generative AI, HPC | AI, HPC, analytics | AI, HPC, data analytics |

The DGX H200’s larger GPU memory and higher memory bandwidth (4.8 TB/s vs. 3.35 TB/s per GPU) make it better suited than the DGX H100 to large-scale AI workloads, while both surpass the DGX A100 in performance and scalability.
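The ratios behind a comparison like this can be checked with a few lines of arithmetic. The sketch below is illustrative only: the per-GPU figures in `SYSTEMS` are assumptions taken from NVIDIA’s public spec sheets (they may differ from figures quoted elsewhere in this article, and the DGX A100 entry assumes the 80 GB variant), and the helper names `totals` and `ratio` are hypothetical.

```python
# Assumed per-GPU figures; verify against current NVIDIA datasheets before use.
SYSTEMS = {
    "DGX A100": {"gpus": 8, "mem_gb": 80, "mem_bw_tbs": 2.0, "nvlink_gbs": 600},
    "DGX H100": {"gpus": 8, "mem_gb": 80, "mem_bw_tbs": 3.35, "nvlink_gbs": 900},
    "DGX H200": {"gpus": 8, "mem_gb": 141, "mem_bw_tbs": 4.8, "nvlink_gbs": 900},
}


def totals(name):
    """Aggregate per-GPU memory figures across all GPUs in one system."""
    spec = SYSTEMS[name]
    return {
        "total_mem_gb": spec["gpus"] * spec["mem_gb"],
        "total_mem_bw_tbs": round(spec["gpus"] * spec["mem_bw_tbs"], 2),
    }


def ratio(newer, older, key):
    """How many times larger a per-GPU figure is on the newer system."""
    return round(SYSTEMS[newer][key] / SYSTEMS[older][key], 2)


if __name__ == "__main__":
    for name in SYSTEMS:
        print(name, totals(name))
    print("H200 vs H100 memory capacity:", ratio("DGX H200", "DGX H100", "mem_gb"))
    print("H200 vs H100 memory bandwidth:", ratio("DGX H200", "DGX H100", "mem_bw_tbs"))
```

Under these assumed figures, the generational gain from DGX H100 to DGX H200 shows up in memory capacity and bandwidth rather than in NVLink speed, which is the same for both.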

How Does the DGX H200 Improve AI Development?

Accelerating AI Workloads with the DGX H200

The NVIDIA DGX H200 accelerates AI workloads through its modern GPU design and optimized data processing. High memory bandwidth and NVLink inter-GPU communication reduce latency and allow fast transfer of data among GPUs, which speeds up model training. Complex computations required by AI tasks therefore complete quickly. Integration with NVIDIA’s software stack also simplifies workflow automation, so researchers and developers can concentrate on algorithmic improvements and further innovation. As a result, the DGX H200 both shortens the time needed to deploy AI solutions and improves overall efficiency in AI development environments.
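One common way to reason about when memory bandwidth, rather than raw compute, limits a workload is the roofline model. The sketch below is a simplified illustration of that model, not a description of how the DGX H200 schedules work; the peak-compute value is a placeholder and the bandwidth value is an assumed per-GPU figure, and both function names are hypothetical.

```python
def attainable_tflops(peak_tflops, mem_bw_tbs, flops_per_byte):
    """Roofline bound: the lower of the compute peak and bandwidth * intensity.

    mem_bw_tbs is in TB/s and flops_per_byte is the kernel's arithmetic
    intensity, so their product is in TFLOP/s.
    """
    return min(peak_tflops, mem_bw_tbs * flops_per_byte)


def ridge_point(peak_tflops, mem_bw_tbs):
    """Arithmetic intensity (FLOP/byte) above which a kernel is compute-bound."""
    return peak_tflops / mem_bw_tbs


if __name__ == "__main__":
    PEAK_TFLOPS = 1000.0  # placeholder peak, not a measured figure
    MEM_BW_TBS = 4.8      # assumed per-GPU memory bandwidth

    ridge = ridge_point(PEAK_TFLOPS, MEM_BW_TBS)
    for intensity in (10, 100, 1000):
        bound = attainable_tflops(PEAK_TFLOPS, MEM_BW_TBS, intensity)
        limiter = "memory" if intensity < ridge else "compute"
        print(f"{intensity:>5} FLOP/byte -> {bound:7.1f} TFLOP/s ({limiter}-bound)")
```

Kernels with low arithmetic intensity sit on the bandwidth-limited side of the ridge point, which is why higher memory bandwidth translates directly into speedups for them.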

The Role of the H200 Tensor Core GPU

The H200 Tensor Core GPU at the heart of the DGX H200 is built to optimize deep learning. Its Tensor Cores are designed for tensor processing, which speeds up the matrix operations needed to train neural networks. The GPU performs mixed-precision computation, using lower-precision formats where they suffice and higher precision where accuracy requires it, to improve efficiency and throughput while preserving accuracy; this lets researchers work with larger datasets and more complex models. Operating on many data streams in parallel also shortens the time to model convergence, cutting training periods and speeding up the overall AI development cycle. These capabilities reinforce the DGX H200’s status as an advanced tool for AI research.
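The idea behind mixed precision can be illustrated on a CPU with nothing but the standard library. The sketch below is a numerical illustration only, not GPU code: it rounds inputs to IEEE 754 half precision using `struct`, then compares an FP16 accumulator with a wider one. Python’s 64-bit float stands in for the FP32 accumulator typically used on GPUs, and the function names are hypothetical.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def dot_fp16_only(a, b):
    """Dot product with FP16 inputs and an FP16 accumulator."""
    acc = 0.0
    for x, y in zip(a, b):
        product = to_fp16(to_fp16(x) * to_fp16(y))
        acc = to_fp16(acc + product)  # round the running sum after every add
    return acc


def dot_mixed(a, b):
    """Dot product with FP16 inputs but a wider accumulator.

    Python's float (FP64) stands in for the FP32 accumulator used on GPUs.
    """
    acc = 0.0
    for x, y in zip(a, b):
        acc += to_fp16(x) * to_fp16(y)  # no FP16 rounding of the running sum
    return acc


if __name__ == "__main__":
    values = [0.01] * 4096
    ones = [1.0] * 4096
    print("FP16 accumulator :", dot_fp16_only(values, ones))
    print("wider accumulator:", dot_mixed(values, ones))
```

With these inputs the FP16-only sum stops growing once the running total is large enough that adding 0.01 rounds away, while the wider accumulator stays close to the true total of about 40.96. Keeping inputs in a compact format while accumulating in a wider one is the pattern that preserves accuracy.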

Enhancing Generative AI Projects with the DGX H200

Generative AI projects benefit greatly from the NVIDIA DGX H200, which combines high-performance hardware with a software ecosystem built for intensive computational tasks. Its Tensor Core GPUs efficiently process large amounts of high-dimensional data, enabling fast training of generative models such as GANs (Generative Adversarial Networks). The system’s multi-GPU configuration improves parallel processing, resulting in shorter training cycles and better model optimization. NVIDIA software tools such as RAPIDS and CUDA give developers smooth workflows for data preparation and model deployment. The DGX H200 therefore speeds up the development of generative AI solutions and leaves room for more complex experiments and fine-tuning, which can lead to breakthroughs in this area.

How to Deploy NVIDIA DGX H200 Systems

Deploying DGX H200 systems requires careful planning to maximize performance:

- Assess Workload Needs: Evaluate AI, HPC, or analytics requirements to determine DGX H200 configuration.

- Select Hardware: Choose DGX H200 systems with eight H200 GPUs and NVSwitch for scalability.

- Configure NVLink/NVSwitch: Optimize NVLink 4.0 (900 GB/s) and NVSwitch for multi-GPU communication.

- Install Software: Use NVIDIA AI Enterprise and GPU-optimized builds of frameworks such as TensorFlow and PyTorch for optimized workflows.

- Plan Cooling and Power: Provision adequate airflow and robust power infrastructure for the DGX H200’s high demands.

- Test Performance: Benchmark with NVIDIA NCCL to ensure DGX H200 meets performance expectations.
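For the final benchmarking step, NCCL’s `nccl-tests` suite reports a “bus bandwidth” derived from the measured time of a collective operation. The sketch below reproduces that calculation for all-reduce, following the formula given in the nccl-tests performance notes (algorithm bandwidth scaled by 2(n-1)/n). The function name and the sample message size and timing are hypothetical, not measured DGX H200 results.

```python
def allreduce_bus_bandwidth_gbs(message_bytes, seconds, num_ranks):
    """Convert a measured all-reduce time into bus bandwidth in GB/s.

    algbw = bytes / time; busbw = algbw * 2 * (n - 1) / n for all-reduce.
    """
    if num_ranks < 2:
        raise ValueError("all-reduce needs at least two ranks")
    if seconds <= 0:
        raise ValueError("elapsed time must be positive")
    algbw_gbs = message_bytes / seconds / 1e9
    return algbw_gbs * 2 * (num_ranks - 1) / num_ranks


if __name__ == "__main__":
    # Hypothetical measurement: 8 GB reduced across 8 GPUs in 50 ms.
    busbw = allreduce_bus_bandwidth_gbs(8e9, 0.05, 8)
    print(f"bus bandwidth: {busbw:.1f} GB/s")
```

Bus bandwidth is useful because it can be compared against the interconnect’s rated speed regardless of how many GPUs took part, which makes it a convenient acceptance check after deployment.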

Why Did OpenAI Choose the NVIDIA DGX H200?

OpenAI’s Requirements for Advanced AI Research

Advanced AI research at OpenAI needs high computational power, flexible model training and deployment options, and efficient data handling. OpenAI needs machines that can handle large datasets and allow rapid experimentation with state-of-the-art algorithms, hence the requirement for systems like the DGX H200 delivered by NVIDIA. The system must also work across multiple GPUs so that processing can run in parallel, saving time when extracting insights from datasets. Most of all, OpenAI values tight integration with no gaps between the software frameworks involved, so that one environment covers everything from data preparation to model training, saving both time and effort. These exacting computational demands, combined with streamlined workflows, are an essential driver of AI excellence at OpenAI.

The Impact of the DGX H200 on OpenAI’s AI Models

The NVIDIA DGX H200 gives OpenAI’s AI models a substantial boost in computational power, enabling the training of larger and more complex models than before. With the DGX H200’s multi-GPU architecture, OpenAI can process vast datasets more efficiently, because the system supports extensive parallel training, which in turn shortens the model iteration cycle. Diverse neural architectures and optimizations can be tested faster, which ultimately improves model performance and robustness. The DGX H200 is also compatible with NVIDIA’s software ecosystem, providing a streamlined workflow that eases data management and supports state-of-the-art machine learning frameworks. Integrating the DGX H200 promotes innovation and supports breakthroughs across AI applications, further solidifying OpenAI’s position at the forefront of AI research and development.

What Are the Core Features of the NVIDIA DGX H200?

Understanding the Hopper Architecture

The Hopper architecture is a major step in GPU design, optimized for high-performance computing and artificial intelligence. It brings higher memory bandwidth, and therefore faster data access and manipulation. Hopper supports Multi-Instance GPU (MIG), which partitions a single GPU into several isolated instances so that resources can be shared among many users or jobs. Its updated Tensor Cores improve the mixed-precision calculations that speed up deep learning. Hopper also strengthens security, with features intended to protect data and guarantee its integrity while it is being processed. Together, these improvements give researchers and developers more room to explore what AI can do and to reach new performance levels on complex workloads.

Bandwidth and GPU Memory Capabilities

The NVIDIA DGX H200 powers artificial intelligence and high-performance computing applications, using high bandwidth and large GPU memory to achieve its performance. Its HBM3e memory significantly increases memory bandwidth, allowing faster data transfers and better processing speeds. This high-bandwidth memory architecture is built for deep learning and data-centric calculations with intense workloads, and it reduces the memory bottlenecks common in conventional systems.

Inter-GPU communication on the DGX H200 is accelerated by NVIDIA’s NVLink technology, which provides high throughput between GPUs. This lets AI models scale effectively across multiple GPUs in tasks such as training large neural networks. High memory bandwidth combined with efficient interconnects yields a platform that can handle the larger data sizes and greater complexity of modern AI applications, leading to quicker insights and innovations.
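A quick way to see why GPU memory capacity matters is to estimate how much memory a model’s weights alone occupy at a given precision. The sketch below is a rough sizing aid under stated assumptions: it counts weights only (no activations, optimizer state, or inference caches), the per-GPU capacities passed in are assumed figures, and the function names are hypothetical.

```python
import math

# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}


def weight_memory_gb(num_params, dtype):
    """Memory needed for the model weights alone, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus_for_weights(num_params, dtype, gpu_mem_gb):
    """Smallest number of GPUs whose combined memory can hold the weights."""
    return math.ceil(weight_memory_gb(num_params, dtype) / gpu_mem_gb)


if __name__ == "__main__":
    params = 70e9  # a hypothetical 70-billion-parameter model
    print("FP16 weights (GB):", weight_memory_gb(params, "fp16"))
    # Assumed per-GPU capacities of 80 GB and 141 GB.
    print("GPUs needed at 80 GB each :", min_gpus_for_weights(params, "fp16", 80))
    print("GPUs needed at 141 GB each:", min_gpus_for_weights(params, "fp16", 141))
```

Real deployments need headroom beyond the weights, so this is a lower bound. It still shows how larger per-GPU memory reduces the number of GPUs a model must be split across, and with it the amount of inter-GPU traffic.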

The Benefits of NVIDIA Base Command

NVIDIA Base Command is a platform that simplifies managing and directing AI workloads in distributed computing environments. Its advantages include automated orchestration of training jobs, which allocates resources effectively by handling multiple tasks concurrently, increasing productivity while minimizing operational costs. It also centralizes visibility into system performance metrics, so teams can monitor workflows in real time and optimize resource utilization, particularly on DGX H200 GPUs. This oversight shortens the time to insight, because researchers can quickly detect bottlenecks and make the necessary configuration changes.

Base Command also connects with widely used data frameworks and tools, fostering collaboration among the data scientists and developers who use them. Through Base Command on NVIDIA’s cloud services, large amounts of computing power become readily accessible while remaining user-friendly, even for complex models or big datasets that would otherwise require more effort. Together, these capabilities make NVIDIA Base Command a vital tool for organizations seeking to enhance their AI capabilities efficiently.

When Was the World’s First DGX H200 Delivered to OpenAI?

Timeline of Delivery and Integration

The world’s first DGX H200 system was delivered to OpenAI in 2024, and integration began shortly afterward. Considerable setup and calibration followed so that the system would perform optimally on OpenAI’s infrastructure. OpenAI worked with NVIDIA engineers to integrate the DGX H200 into its existing AI frameworks, enabling smooth data processing and training. Once fully operational, the system greatly increased available computational power and efficiency, supporting more research at the organization. The delivery is a key step in the collaboration between the two companies and shows their dedication to advancing artificial intelligence technologies.

Statements from NVIDIA’s CEO Jensen Huang

Jensen Huang, the CEO of NVIDIA, praised the transformative significance of the DGX H200 for AI research and development in a recent statement. He said that “DGX H200 is a game-changer for any enterprise that wants to use supercomputing power for artificial intelligence.” He drew attention to the system’s ability to speed up machine learning processes and improve performance metrics, which allows scientists to explore new horizons in AI more efficiently. He also highlighted that working with organizations like OpenAI, one of many leading AI companies, demonstrates a joint commitment to innovation, lays the groundwork for further industry breakthroughs, and underlines NVIDIA’s commitment to its partners. According to Huang, this collaboration reflects both technological leadership and a commitment to shaping the future landscape of artificial intelligence.

Greg Brockman’s Vision for OpenAI’s Future with the DGX H200

Greg Brockman, the President of OpenAI, sees NVIDIA’s DGX H200 as central to artificial intelligence research and application. He says that some kinds of models were previously too expensive and difficult to create, but that this system makes them far more practical, and he believes more powerful computers like it will enable scientists to develop much more advanced systems than before. The upgrade is also expected to accelerate progress in many areas of AI, including robotics, natural language processing (NLP), and computer vision. According to him, OpenAI must not only accelerate innovation but also ensure that safety is part of development, acting as a custodian of strong technological foundations for humanity.


Frequently Asked Questions (FAQs)

Q: What is the NVIDIA DGX H200?

A: The NVIDIA DGX H200 is an advanced AI computer system equipped with eight NVIDIA H200 Tensor Core GPUs, built for high performance in deep learning and artificial intelligence applications.

Q: When was the NVIDIA DGX H200 delivered to OpenAI?

A: The NVIDIA DGX H200 was delivered to OpenAI in 2024, marking a major advance in AI compute power.

Q: How does DGX H200 compare to its predecessor, DGX H100?

A: The DGX H200 uses the newer NVIDIA H200 Tensor Core GPU, which keeps the NVIDIA Hopper architecture but adds larger and faster memory, so it improves on the AI and deep learning capabilities of its predecessor, the DGX H100.

Q: What makes the NVIDIA DGX H200 one of the most powerful AI systems in the world?

A: The NVIDIA DGX H200 is a complete system rather than a single graphics card: it combines eight H200 GPUs built on the Hopper architecture, which gives it memory capacity and AI performance beyond earlier DGX models.

Q: Who announced the delivery of the NVIDIA DGX H200 to OpenAI?

A: CEO Jensen Huang announced that NVIDIA had delivered its new product, the NVIDIA DGX H200, to the OpenAI research lab. The delivery reflects how closely the two organizations have worked together recently and their commitment to advancing technology.

Q: What will be the effects of DGX H200 on AI research by OpenAI?

A: It can be expected that OpenAI’s artificial intelligence research will grow significantly with the use of DGX H200. This will lead to breakthroughs in general-purpose AI and improvements in models such as ChatGPT and other systems.

Q: Why is DGX H200 considered a game changer for AI businesses?

A: DGX H200 is considered a game changer for AI businesses because it has unmatched capabilities, which allow companies to train more sophisticated AI models faster than ever before, leading to efficient innovation in the field of artificial intelligence.

Q: What are some notable features of NVIDIA DGX H200?

A: Notable features of the NVIDIA DGX H200 include its NVIDIA H200 Tensor Core GPUs, the NVIDIA Hopper architecture, NVLink and NVSwitch interconnects, and the ability to handle large-scale AI and deep learning workloads.

Q: Other than OpenAI, which organizations are likely going to benefit from using this product?

A: The organizations that are likely to benefit greatly from DGX H200 are those engaged in cutting-edge research and developments, such as Meta AI, among other enterprises involved with AI technology.

Q: In what ways does this device support the future development of artificial intelligence?

A: The computational power of the DGX H200 enables developers to build next-generation models and applications through deep learning, and can thus be seen as supporting progress toward AGI.
