The most important things in the landscape of artificial intelligence that evolve quickly are computational power and efficiency. At the forefront of this revolution is the NVIDIA DGX GH200 AI Supercomputer, which has been created to produce never-before-seen levels of performance for AI workloads. This piece will discuss what makes the DGX GH200 so groundbreaking, its architectural innovations, and what it means for AI research and development going forward. It will provide an understanding of how different sectors can be transformed by this supercomputer because it allows for next-generation capabilities in natural language processing through advanced robotics, among others. Follow us as we explore the technical wizardry behind NVIDIA’s newest AI marvel and potential applications thereof!

What is the NVIDIA DGX GH200?

An Introduction to the DGX GH200 Supercomputer

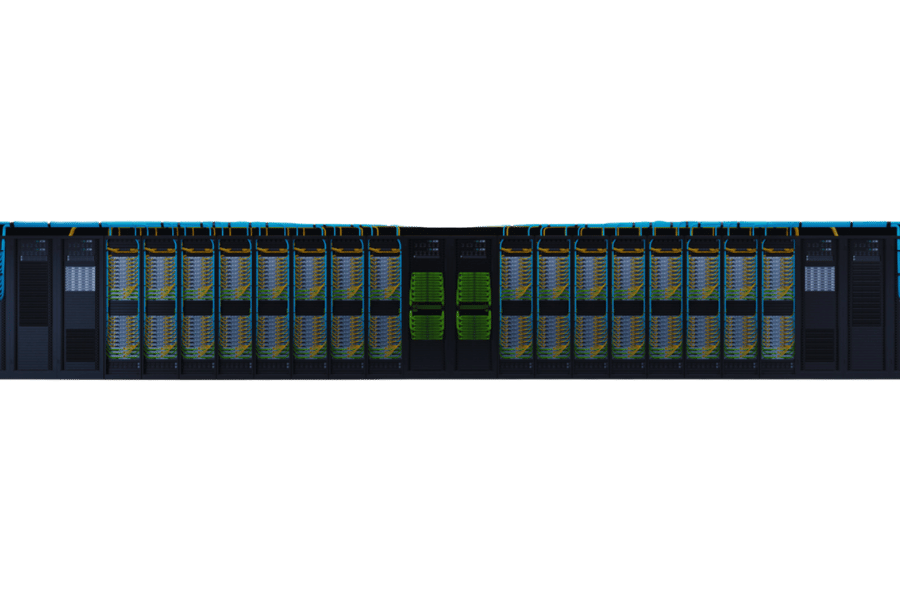

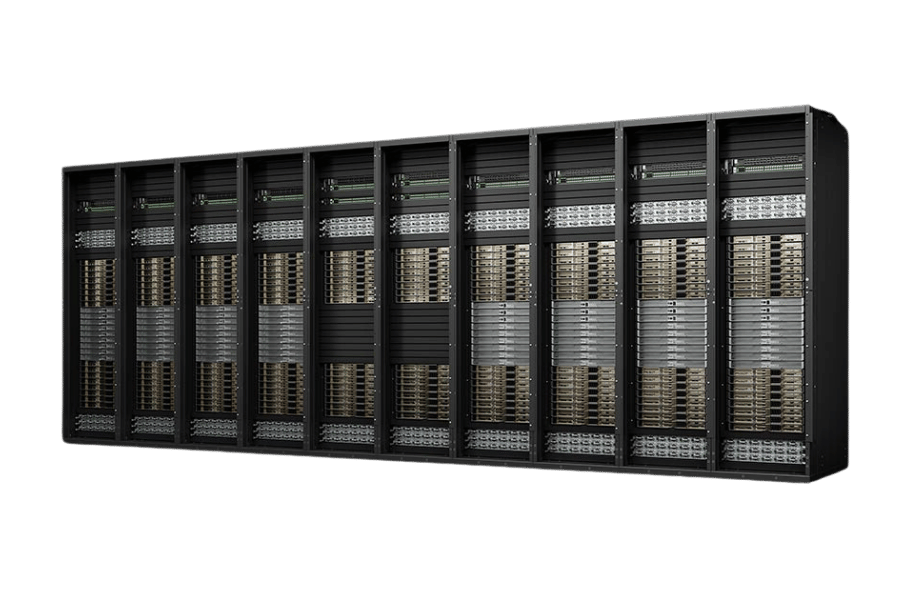

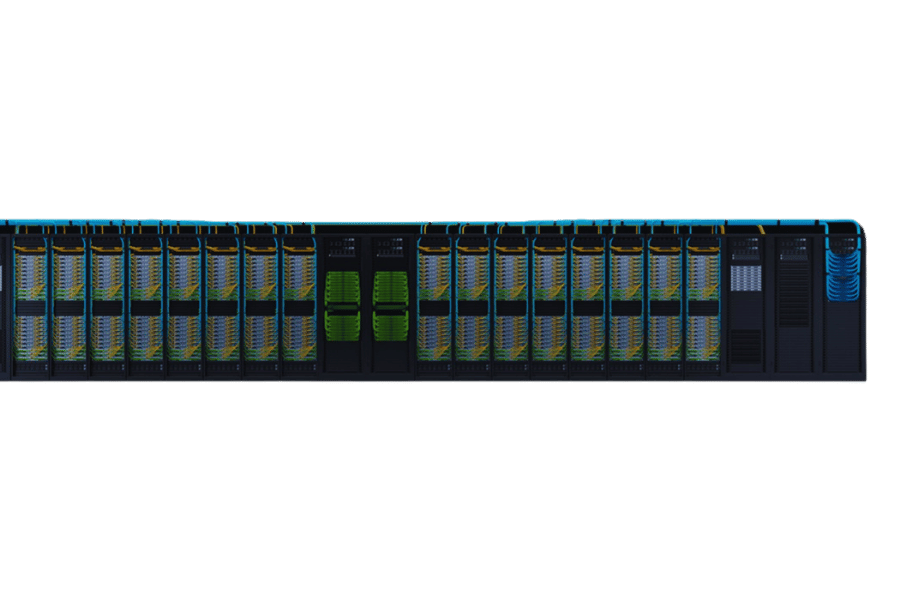

The AI-oriented system NVIDIA DGX GH200 Supercomputer is a more powerful computer with advanced features compared to conventional ones. The DGX GH200 has a new design that allows it to combine the capabilities of several NVIDIA GPUs using fast NVLink connections, so it can scale up easily and deliver high performance. According to its computing power, this model is great for artificial intelligence researchers and developers who work on complex models or try to make breakthroughs in such areas as NLP, computer vision, or robotics. Cooling systems used in this supercomputer are the best in their class; besides, they were designed for energy efficiency, which makes them perfect for use at HPC facilities where heat dissipation may become an issue.

Key Components: Grace Hopper Superchips and H100 GPUs

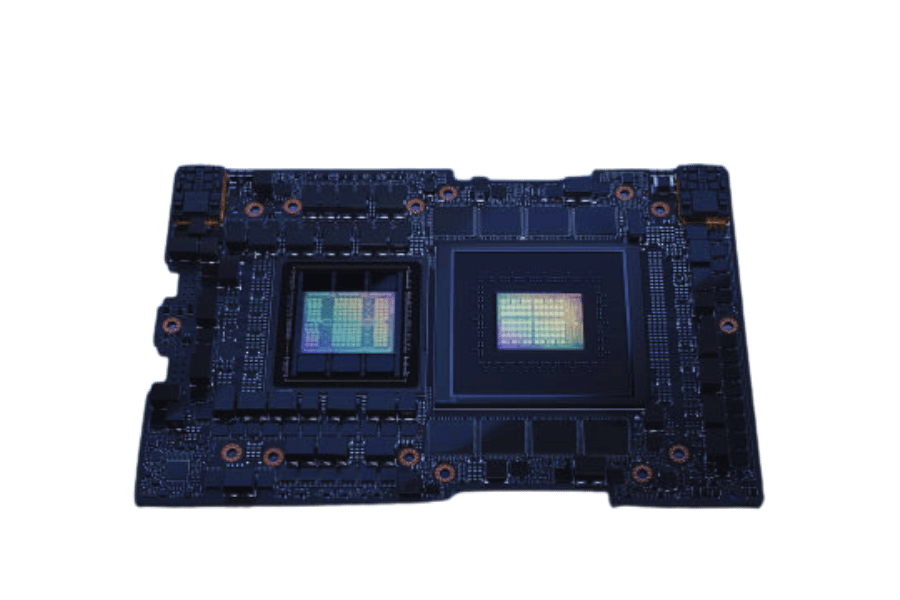

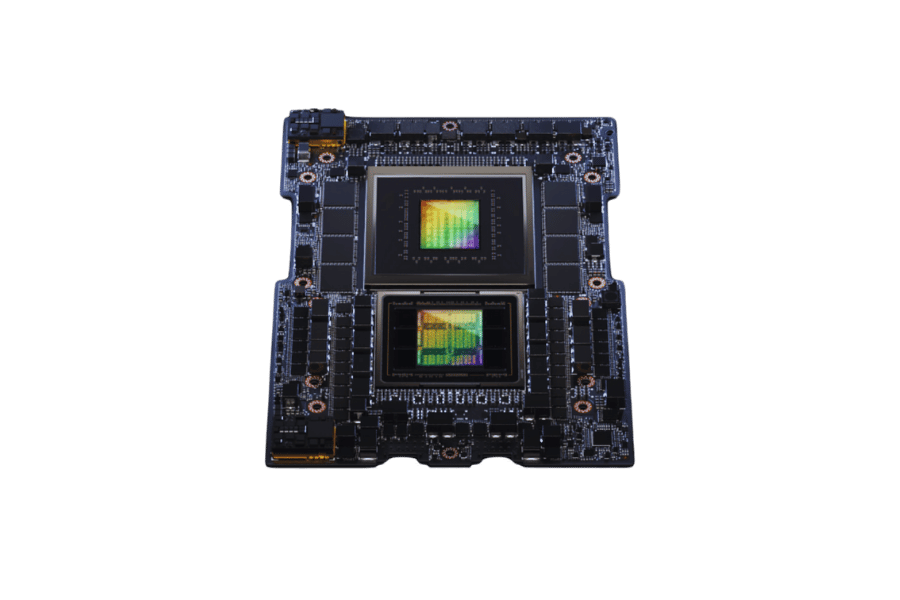

The DGX GH200 supercomputer mainly runs on the Grace Hopper Superchips and the H100 GPUs. The Grace Hopper Superchip incorporates the new Grace CPU with the Hopper GPU architecture for unmatched computational efficiency and performance. It is an inclusive integration where the processing power of the CPU combines with parallel computing capabilities of GPU for deeper learning and more complex simulations.

In DGX GH200’s design, the H100 GPUs are a game-changer. These H100 GPUs are built on the latest architecture called Hopper which gives them much higher performance than their predecessors; this improvement has several technical aspects:

- Transistor Count: There are over 80 billion transistors on each one of these chips that deliver great processing power.

- Memory Bandwidth: They come with up to 3.2 TB/s memory bandwidth so data can be accessed and processed quickly.

- Compute Performance: It performs double precision calculations at 20 TFLOPS, single precision calculations at 40 TFLOPS and tensor operations at 320 TFLOPS.

- Energy Efficiency: They were made to use as little energy as possible through cooling systems that keep them running hard during heavy workloads without overheating while remaining efficient in terms of power usage.

By themselves, these parts give never-before-seen levels of computing in DGX GH200, thereby becoming a must-have for any AI researcher or developer. With its advanced technology, this system supports different AI applications at once, thus pushing forward AI research further than ever imagined before.

Why the DGX GH200 is a Game-Changer in AI

DGX GH200 is a game changer for AI with unmatched power and efficiency for training and deploying large-scale AI models. This system utilizes the new Grace Hopper architecture, which comes with unprecedented memory bandwidth as well as processing capabilities to enable easy handling of huge datasets by researchers and developers. It also integrates high-performance H100 GPUs that accelerate both general-purpose and specialized tensor computations, thereby making it perfect for demanding artificial intelligence tasks like natural language processing, computer vision, or predictive analytics. Moreover, its energy-saving design coupled with strong cooling solutions ensures continuous performance even under heavy workloads thus reducing operational costs greatly. This means that not only does DGX GH200 fasten the creation of future-generation AI programs, but it also improves resource management in this area, considering its efficiency in energy usage during operation.

How Does the NVIDIA DGX GH200 Work?

DGX GH200 System Architecture Explained

The DGX GH200 system architecture uses the original Grace Hopper design that incorporates both NVIDIA Hopper GPUs and Grace CPUs. This design centers around having a CPU complex directly connected to an H100 GPU which has a high-bandwidth memory that enables fast data swapping while reducing latency. In addition, there are high-speed NVLink interconnects that allow various GPUs and CPUs in the system to communicate seamlessly with each other. What’s more, it also features advanced cooling solutions to ensure that it operates within the right temperatures even when under intense computational workloads. With its modularity, this system can scale up or down, thus making it suitable for different types of AI workloads and research applications.

Role of the NVIDIA NVLink-C2C in the System

The DGX GH200 system’s performance and efficiency are improved by the NVIDIA NVLink-C2C (Chip-to-Chip) interconnect. The NVLink-C2C sets up optimal data transfer rates, which speeds up computational tasks by creating a low-latency, high-bandwidth communication channel between the Grace Hopper CPU and Hopper GPU complexes. This is what you need to know about the technical parameters of NVLink-C2C:

- Bandwidth: 900 GB/s maximum.

- Latency: Sub-microsecond range.

- Scalability: It supports upto 256 NVLink lanes.

- Power Efficiency: Advanced signaling techniques are used to minimize power consumption.

These parameters allow for multiple processing units to be integrated seamlessly, thus maximizing the system’s overall computational throughput. The dynamic allocation of bandwidth is also supported by NVLink-C2C, which enables efficient resource utilization across different AI applications through workload awareness.

Power Efficiency of the DGX GH200

DGX GH200 is designed to be power efficient and can support high-performance computing with minimal energy use. It does this through several advanced features:

- Optimized Power Delivery: The system has power delivery systems that are fine-tuned so as to supply each component with the right amount of voltage and current, thereby decreasing energy loss while increasing performance per watt.

- Advanced Cooling Solutions: DGX GH200 uses the latest cooling technology which helps in maintaining optimum working temperatures for its parts hence overall saving power. Effective cooling reduces reliance on energy-intensive methods of cooling thus saving more energy.

- Energy-Efficient Interconnects: NVLink-C2C among other interconnects within the system employ power saving signaling methods that do not compromise on data transfer speeds and latency levels. This leads to substantial savings in electricity usage particularly during massive data operations.

- Dynamic Resource Allocation: Computational resources are allocated dynamically by workload requirements so that no power is wasted on idle or underutilized components through smart management of these resources which ensures efficient utilization of electricity.

All these qualities combine to make DGX GH200 the most power-saving supercomputer in terms of performance thereby being a perfect option for AI researches and other computational intensive applications.

What Makes the DGX GH200 Suitable for AI and HPC Workloads?

Handling Large-Scale AI with DGX GH200

The DGX GH200 is ideal for big AI and high-performance computing (HPC) workloads because it has six main characteristics which are listed below:

- Scalability: The DGX GH200 allows scaling of many parallel processing tasks. This ability is necessary when training huge AI models or running extensive simulations as part of HPC workloads.

- High Computational Power: With advanced GPUs designed specifically for artificial intelligence and high-performance computing, the DGX GH200 delivers an unprecedented amount of computational power that speeds up model training and data processing with intricate structures.

- Storage Capacity: It comes with sufficient memory capacity to handle large datasets thereby enabling complex models processing and big data analytics without suffering from memory limitations.

- Quick Interconnects: For distributed computing systems, fast NVLink-C2C interconnects are used within this device to ensure data is transferred between multiple GPUs within a short duration, hence maintaining high throughput.

- Optimized Software Stack: A complete software stack tuned for AI and HPC applications accompanies the DGX GH200, this ensures that there is seamless integration between hardware and software thus achieving maximum performance levels.

These qualities, taken together, establish the DGX GH200 as an incomparable platform for running sophisticated AI and HPC workflows; such capability leads to efficiency gains while achieving faster results during cutting-edge research endeavors or industrial applications where time plays a critical role.

DGX GH200 for Complex AI Workloads

In considering how the DGX GH200 handles complicated AI workloads, it is essential to emphasize its unique technical parameters that substantiate this claim.

- Efficiency of Parallel Processing: The DGX GH200 can support a maximum of 256 parallel processes at once. This means that it can efficiently manage heavy computations and train models without experiencing any significant lags in time.

- GPU Specs: NVIDIA H100 Tensor Core GPUs are installed in each DGX GH200 which offers up to 80 billion transistors per GPU and has 640 Tensor Cores. These features allow for high performance during deep learning tasks or computation-heavy jobs.

- Memory Bandwidth: With a memory bandwidth of 1.6 TB/s, this system has enormous throughput required for fast input/output operations involving large datasets consumed by AI algorithms during training phases.

- Storage Capacity: To minimize data retrieval latency during ongoing calculations and ensure quick access to information, the DGX GH200 comprises 30TB NVMe SSD storage devices.

- Network Interconnects: The DGX GH200 utilizes NVLink-C2C interconnects with a bandwidth of 900 GB/s between GPU nodes which enable fast communication among them necessary for distributed AI workload coherency maintenance coupled with speediness in execution time.

- Power efficiency – Cooling systems were designed properly so as not only keep optimal performance but also save on power consumption targeted towards high-density data centers hosting such machines like these ones; thus creating an environment where heat dissipation becomes easy due to effective cooling mechanisms integrated within its architecture designs resulting into better power management strategies being put in place thus leading unto less energy wastage as compared with other competing products currently available in market.

All these together cater for the demands imposed by complex artificial intelligence workloads thereby allowing execution of intricate activities including mass neural network training; live streaming analytics on huge amounts real-time data; extensive computational simulations etcetera. Scalability through hardware scaling options coupled with software optimization measures guarantees that DGX GH200 meets and surpasses the demands set by modern AI/HPC applications.

Integration with NVIDIA AI Enterprise Software

The software suite called NVIDIA AI Enterprise has been designed for many places, both on-premises and in the cloud, so as to optimize and simplify the use of artificial intelligence. It is a comprehensive suite because it seamlessly integrates with DGX GH200, which offers several frameworks of AI, pre-trained models, and tools that increase productivity. This software includes other necessary components for running AI, such as NVIDIA Triton Inference Server, TensorRT inference optimizer, and NVIDIA CUDA toolkit, among others. By using all these tools, it becomes possible to make full use of hardware capabilities in DGX GH200 thus improving efficiency as well as scalability when dealing with AI workloads. Moreover, this enterprise guarantees that machine learning frameworks like TensorFlow, PyTorch, or Apache Spark are compatible with it, hence allowing developers an easy time building, testing, and deploying their models through these APIs. Such integration creates strong foundations for diverse tasks within an organization while ensuring the efficient completion of challenging projects involving Artificial Intelligence.

How Does the NVIDIA DGX GH200 Enhance AI Training?

AI Model Training Capabilities

Exceptional computing power and scalability are offered by the NVIDIA DGX GH200, which greatly enhances the capabilities of the AI model training. With the most advanced NVIDIA H100 Tensor Core GPUs, it delivers matchless performance for big-sized AI models as well as intricate neural networks. Rapid data transfer is made possible through high-bandwidth memory and state-of-the-art interconnect technologies employed in this system, thus reducing latency while increasing throughput. In addition to that, mixed-precision training is supported by DGX GH200, which helps speed up calculations yet keeps the accuracy of the model intact. All these features combined together allow efficient processing of massive datasets along with complicated architectural designs of models, thereby significantly cutting down training times and enhancing overall productivity in AI development.

Leverage NVIDIA Base Command for AI Workflows

To streamline the workflow of AI and optimize multi-DGX resource management, NVIDIA Base Command was created as part of the NVIDIA AI ecosystem. It enables enterprises to orchestrate, monitor, and manage AI training jobs effectively, thus ensuring that computational resources are fully used while idling is minimized.

Main features and technical parameters:

- Job scheduling and queuing: NVIDIA Base Command uses advanced scheduling algorithms which prioritize queuing AI training jobs for best resource allocation. This reduces waiting time hence increases efficiency through increased throughput.

- Resource allocation: The platform allows dynamic partitioning of resources so that GPUs can be allocated flexibly depending on different AI projects’ requirements. Such adaptiveness enhances the utilization of available computing power.

- GPU Resource Management: It supports 256 GPUs over many DGX systems.

- Dynamic Allocation: Allocates GPUs based on job size and priority.

- Monitoring & Reporting: Real-time monitoring tools show how much GPU has been utilized and memory consumed as well as network bandwidth used, thus enabling administrators to make informed choices on resource management & optimization.

- Metrics: Tracks GPU usage, memory consumption and network bandwidth in real-time.

- Dashboards: Performance visualization dashboards can be customized according to one’s needs.

- Collaboration & Experiment Tracking: Features for experiment tracking, version control and collaboration have been integrated into NVIDIA Base Command so as to facilitate smooth cooperation among data scientists and researchers.

- Experiment Tracking: Keeps record of hyperparameters, training metrics, model versions, etcetera.

- Collaboration: Shareable project workspaces that foster team-based AI development should also be noted down here.

When DGX GH200 infrastructure is used with this software (NVIDIA base command), it creates an environment where artificial intelligence can grow efficiently because both hardware and software resources are utilized optimally.

Supporting Generative AI Initiatives

Generative AI efforts are given a big boost by NVIDIA’s Base Command platform integrated with DGX GH200 infrastructure. With powerful computing capabilities and simplified management functions, the system can handle the immense requirements of training and deploying generative models. In addition, the feature of dynamic resource allocation serves to make sure that GPUs are utilized in the best way possible, thereby speeding up AI workflows and cutting down development time.

In order to manage extensive computational tasks, monitoring tools that report on events in real-time were added to this platform. These tools give visibility into GPU utilization as well as memory usage among other things such as network bandwidth which is very important when it comes to tuning performance for efficient running of generative processes based on artificial intelligence.

Collaboration between data scientists has been made easier through experiment tracking and version control functionalities embedded within NVIDIA Base Command. This creates an environment where team members can share their findings easily, leading to reproducibility of experiments, thus driving model iterations required for coming up with innovative solutions in generative AI.

What are the Future Implications of the DGX GH200 in AI?

Advancing AI Research and Innovation

The DGX GH200 is a super AI computing platform that can greatly boost the research and development of artificial intelligence by virtue of its amazing computation and storage capacities. Such capabilities include but not limited to providing a maximum of one exaflop AI performance and 144TB shared memory, which allows it to deal with the toughest workloads in terms of size, as well as sophistication or complexity thus far known.

NVIDIA NVLink Switch System is one of the vital technical components on which DGX GH200 relies; this system enables seamless communication between GPUs, resulting in lower latency while transferring data, therefore enhancing data transfer efficiency too, particularly among tasks requiring high levels of parallelism like deep learning or simulations involving numerous variables such as those used in physics modeling software packages.

Furthermore, due to integration with NVIDIA Base Command, there are advanced resource management tools available for use within the system itself, thereby making it more useful than ever before when coupled with other similar systems around. These tools ensure that computational resources are optimally allocated so as to minimize idle times which may arise during implementation, thus leading into increased productivity levels within an organization where this technology finds wide application; also being able to monitor various real-time performance metrics, including GPU utilization rate together with power consumed per unit time helps users fine-tune their hardware configurations accordingly hence meeting specific requirements imposed by different types or classes of experiments performed on given machine learning model.

Another advantage brought about by DGX GH200 is that it provides researchers from different disciplines an opportunity to work together towards achieving common goals faster than if they were working in isolation. This feature allows multi-disciplinary teams to access the same datasets simultaneously without anyone having to copy over files from one computer onto another hence saving both time and effort required during such collaborations; moreover, features like experiment tracking coupled with version control ensures reproducibility across multiple research efforts so that any new finding can easily be verified against previous results leading rapid advances within field thus enabling development next-gen machine intelligence systems.

The Evolving Role of AI Supercomputers

AI supercomputers are changing computational research and industrial applications deftly. The main use of these systems is to cut down the time taken to train complicated machine learning and deep learning models. This is because they use high-performance hardware like GPUs which have been optimized for parallel processing, have large-scale memory, and high-speed interconnects.

Technical Parameters

NVIDIA NVLink Switch System:

- Basis: It guarantees GPU-to-GPU communication at low latency and high speed required for parallel processing jobs.

- Mostly used in deep learning tasks and simulations.

NVIDIA Base Command:

- Basis: It offers advanced resource management and orchestration tools.

- This allows for the efficient allocation of computing resources, thereby reducing idle time and enhancing productivity.

Real-time Performance Metrics:

- GPU utilization.

- Power consumption.

- Reason: These help in fine-tuning system performance to meet specific research demands.

Experiment Tracking and Version Control:

- Justification: This ensures that research efforts can be reproduced easily whenever necessary.

- Enables effective sharing as well as collaboration among teams of different disciplines.

Through such technological improvements, AI supercomputers are applicable across the board, from healthcare to finance, robotics to autonomous systems. Organizations can break new ground in AI innovation by utilizing these complex machines thus creating solutions that solve intricate real-life problems.

Potential Developments with Grace Hopper Superchips

The Grace Hopper Superchips are a huge step forward for AI and high-performance computing. They can process colossal amounts of data at astonishing speeds while making use of the most up-to-date chip architecture advancements.

Better performance under artificial intelligence workloads:

- The Grace CPU unifies the Hopper GPU with it, forming a single platform that is ideal for AI model training and inference.

- This design makes for quicker processing times when dealing with complex algorithms or large sets of information, which is vital to cutting-edge AI research and development.

Energy efficient:

- Without sacrificing power, these chips save more energy by incorporating cooling mechanisms as well as power management features alongside their CPU and GPU functionalities.

- Such optimization allows sustainability in operation costs – something every modern data center should consider seriously if they want to remain relevant over time.

Scalable flexibility:

- AI deployments on a large scale require systems like those offered by Grace Hopper Superchips which provide scalable solutions capable of growing alongside increasing computational requirements.

- Resource allocation within these setups can be adjusted according to need thereby enabling them fit different sectors including but not limited to healthcare , finance industry among others; also autonomous vehicles would greatly benefit from such capabilities too!

In conclusion, It’s clear then that what we have here are devices called grace hopper superchips. They are about to change everything we know about artificial intelligence and high-performance computing forever because, for one thing, they offer never-before-seen levels of efficiency when it comes to speed, power, or capacity. Additionally, this newfound ability will enable companies to solve harder problems than ever before. That means more innovation in the world of Ai.

Reference sources

Frequently Asked Questions (FAQs)

Q: What is the NVIDIA DGX GH200 AI Supercomputer?

A: The NVIDIA DGX GH200 AI Supercomputer is an advanced supercomputer for artificial intelligence research and applications. It is equipped with cutting-edge components such as the NVIDIA Grace Hopper superchip which offer superior performance in demanding AI tasks.

Q: What sets apart the DGX GH200 AI Supercomputer?

A: For AI tasks, the DGX GH200 combines 256 NVIDIA Grace Hopper Superchips in this new design with NVIDIA NVLink Switch System that provides unheard-of computation power and efficiency.

Q: How does the NVIDIA Grace Hopper Superchip come into play here?

A: With its ability to handle complex data processing needs while working on heavy-duty AI workloads, created by combining Grace CPU’s strength with Hopper GPU’s mightiness, Our so-called efficient-powerful ‘NVIDIA Grace Hopper superchip’ does not disappoint as part of this system.

Q: In what ways does the DGX GH200 Software improve upon AI capabilities?

A: The DGX GH200 software suite optimizes performance of large-scale AI model training through seamless scalability on the hardware, management tools robustness for maximum infrastructure efficiency in artificial intelligence computing.

Q: What is the benefit of AI performance in the NVIDIA NVLink Switch System?

A: The NVIDIA NVLINK SWITCH SYSTEM makes it possible for many DGX GH200 supercomputers to be hooked up at once, which creates fast connections with a lot of bandwidth that allow data to be transmitted quickly between them; this increases throughput and speeds up complex artificial intelligence calculations as well as model training.

Q: What benefits does the GH200 architecture offer?

A: GH200 offers the ability for faster processing times on AI applications because there are 256 Grace Hopper superchips integrated into each one which also results in lower power consumption due to their high computational density and energy efficiency.

Q: How is the DGX H100 different from other models like the DGX A100?

A: It has better performance abilities than most previous versions including its usage of new NVIDIA H100 GPUs so that it can handle more complex artificial intelligence tasks while still being able to process them quicker overall by improving computational efficiency.

Q: Why is this machine good for AI researchers?

A: AI researchers need lots of computing power when working with large models, so having something like the NVIDIA DGX GH200 system designed specifically for training and deploying such models at scale would greatly assist them in that regard since it provides abundant computational resources along with the necessary scalability and flexibility.

Q: What does adding NVIDIA Bluefield-3 do for the DGX GH200 Supercomputer?

A: NVIDIA Bluefield-3 greatly enhances networking capabilities within these machines themselves, which allows for much faster management of huge datasets required during AI model training phases where both input/output latencies are critical points needing optimization, thus making this feature useful because it enables faster training of better models.

Q: What are some implications of future development around Nvidia’s DGX GH200 AI Supercomputer?

A: With unmatched computing capabilities, The future prospects presented by Nvidia’s DGX GH200 Ai Supercomputer are limitless; hence, it is expected to revolutionize artificial intelligence across multiple sectors ranging from healthcare and transport to computing.

Related Products:

-

OSFP-XD-1.6T-4FR2 1.6T OSFP-XD 4xFR2 PAM4 1291/1311nm 2km SN SMF Optical Transceiver Module

$17000.00

OSFP-XD-1.6T-4FR2 1.6T OSFP-XD 4xFR2 PAM4 1291/1311nm 2km SN SMF Optical Transceiver Module

$17000.00

-

OSFP-XD-1.6T-2FR4 1.6T OSFP-XD 2xFR4 PAM4 2x CWDM4 2km Dual Duplex LC SMF Optical Transceiver Module

$22400.00

OSFP-XD-1.6T-2FR4 1.6T OSFP-XD 2xFR4 PAM4 2x CWDM4 2km Dual Duplex LC SMF Optical Transceiver Module

$22400.00

-

OSFP-XD-1.6T-DR8 1.6T OSFP-XD DR8 PAM4 1311nm 2km MPO-16 SMF Optical Transceiver Module

$12600.00

OSFP-XD-1.6T-DR8 1.6T OSFP-XD DR8 PAM4 1311nm 2km MPO-16 SMF Optical Transceiver Module

$12600.00

-

NVIDIA MMS4X50-NM Compatible OSFP 2x400G FR4 PAM4 1310nm 2km DOM Dual Duplex LC SMF Optical Transceiver Module

$1350.00

NVIDIA MMS4X50-NM Compatible OSFP 2x400G FR4 PAM4 1310nm 2km DOM Dual Duplex LC SMF Optical Transceiver Module

$1350.00

-

NVIDIA MMS4X00-NM-FLT Compatible 800G Twin-port OSFP 2x400G Flat Top PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$1200.00

NVIDIA MMS4X00-NM-FLT Compatible 800G Twin-port OSFP 2x400G Flat Top PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$1200.00

-

NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$850.00

NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$850.00

-

NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$1100.00

NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$1100.00

-

NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$750.00

NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$750.00

-

NVIDIA MMS1Z00-NS400 Compatible 400G NDR QSFP112 DR4 PAM4 1310nm 500m MPO-12 with FEC Optical Transceiver Module

$800.00

NVIDIA MMS1Z00-NS400 Compatible 400G NDR QSFP112 DR4 PAM4 1310nm 500m MPO-12 with FEC Optical Transceiver Module

$800.00

-

NVIDIA MMS4X00-NS400 Compatible 400G OSFP DR4 Flat Top PAM4 1310nm MTP/MPO-12 500m SMF FEC Optical Transceiver Module

$800.00

NVIDIA MMS4X00-NS400 Compatible 400G OSFP DR4 Flat Top PAM4 1310nm MTP/MPO-12 500m SMF FEC Optical Transceiver Module

$800.00

-

NVIDIA MMA1Z00-NS400 Compatible 400G QSFP112 SR4 PAM4 850nm 100m MTP/MPO-12 OM3 FEC Optical Transceiver Module

$650.00

NVIDIA MMA1Z00-NS400 Compatible 400G QSFP112 SR4 PAM4 850nm 100m MTP/MPO-12 OM3 FEC Optical Transceiver Module

$650.00

-

NVIDIA MMA4Z00-NS400 Compatible 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module

$650.00

NVIDIA MMA4Z00-NS400 Compatible 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module

$650.00