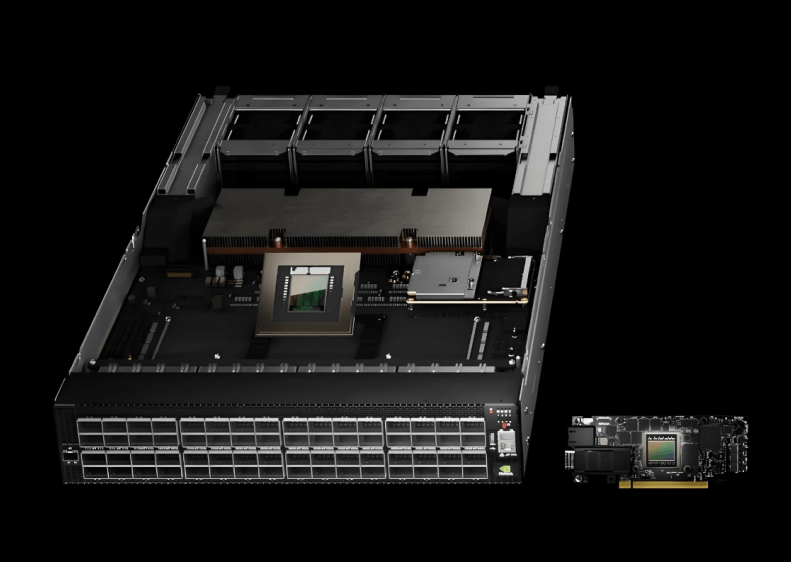

Product Overview

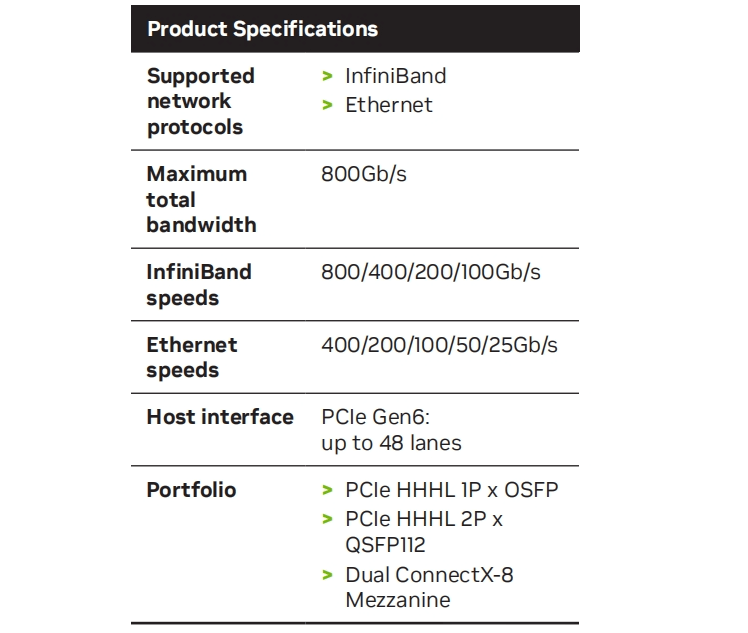

The ConnectX-8 SuperNIC is NVIDIA's seventh-generation smart network interface card, designed for next-generation AI computing clusters, large-scale data centers, and high-performance computing (HPC). It combines network acceleration with compute offloading and supports 400GbE and 800GbE. Hardware-level protocol offloading and GPU-NIC co-optimization reduce network latency and raise throughput, giving AI training, inference, and distributed storage workloads low-latency, lossless transport.

Software Protocols and Acceleration Functions

The ConnectX-8 SuperNIC improves network performance across the stack by pairing its software protocol stack with hardware acceleration engines:

Protocol Support

- RDMA/RoCEv2: Remote Direct Memory Access over Converged Ethernet, providing zero-copy data transfer with latency that can fall below one microsecond.

- GPUDirect Technology: Supports GPUDirect RDMA and GPUDirect Storage, so data moves directly between GPU memory and the NIC or storage without passing through the CPU.

- NVIDIA SHARPv3: Hardware acceleration for collective communication, supporting AllReduce, Broadcast, and other operations to improve AI training efficiency.

- TLS/IPsec Hardware Offload: Encrypts and decrypts traffic in hardware, keeping the cryptographic work off the host CPU.
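To illustrate why moving collectives into the network can help, the sketch below compares the bytes each rank sends in a software ring AllReduce with an idealized in-network reduction in which each rank sends its buffer once and the switch returns the reduced result. The function names are illustrative, and the in-network model is a simplifying assumption, not a description of how SHARPv3 is implemented.

```python
def ring_allreduce_bytes_sent(num_ranks: int, message_bytes: int) -> float:
    """Bytes each rank sends in a classic ring AllReduce.

    The ring runs a reduce-scatter followed by an all-gather; each phase
    sends (num_ranks - 1) chunks of size message_bytes / num_ranks.
    """
    if num_ranks < 1:
        raise ValueError("num_ranks must be at least 1")
    return 2 * (num_ranks - 1) * message_bytes / num_ranks


def in_network_allreduce_bytes_sent(message_bytes: int) -> float:
    """Assumed idealized model: each rank sends its buffer to the switch once."""
    return float(message_bytes)


if __name__ == "__main__":
    size = 1 << 30  # 1 GiB buffer, an example value
    for ranks in (2, 8, 64, 512):
        ring = ring_allreduce_bytes_sent(ranks, size)
        offload = in_network_allreduce_bytes_sent(size)
        print(f"{ranks:4d} ranks: ring / in-network traffic = {ring / offload:.2f}")
```

Under this model the ring sends close to twice as much data per rank as the cluster grows, which is the gap that in-network aggregation aims to close.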

Software Ecosystem

- DOCA 2.0 (Data Center Infrastructure-on-a-Chip Architecture): Provides an API-driven development framework supporting user-defined data plane acceleration functions (e.g., DPU collaborative orchestration).

- Deep Integration with the CUDA Ecosystem: Optimizes multi-GPU cross-node communication efficiency through the NCCL library.
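As a rough illustration of the NCCL integration, the sketch below builds the environment variables commonly used to steer NCCL onto an RDMA-capable NIC over RoCEv2. The variable names are documented NCCL settings; the device name `mlx5_0`, the GID index `3`, and the interface name `eth0` are placeholder assumptions that differ from host to host.

```python
import os


def nccl_roce_env(hca: str = "mlx5_0", gid_index: int = 3, iface: str = "eth0") -> dict:
    """Return NCCL settings for a RoCEv2 NIC (all default values are assumptions)."""
    return {
        "NCCL_IB_HCA": hca,                  # RDMA device NCCL should use
        "NCCL_IB_GID_INDEX": str(gid_index), # GID table entry for RoCEv2; host specific
        "NCCL_SOCKET_IFNAME": iface,         # interface for NCCL bootstrap traffic
        "NCCL_NET_GDR_LEVEL": "PHB",         # allow GPUDirect RDMA up to the host bridge
    }


if __name__ == "__main__":
    # Apply before the training framework initializes NCCL.
    os.environ.update(nccl_roce_env())
    for key in sorted(nccl_roce_env()):
        print(f"{key}={os.environ[key]}")
```

The right GID index and device name can be read from the host's RDMA tooling; the values here only show the shape of the configuration.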

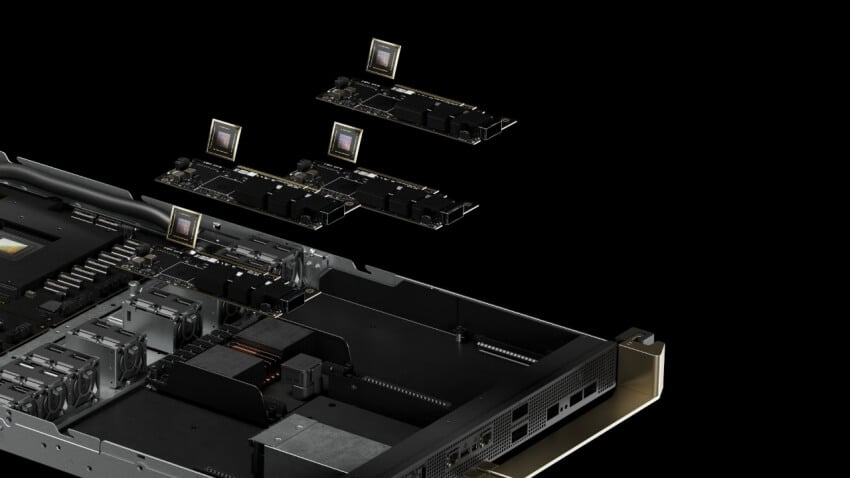

Hardware Architecture and Connectivity Design

Host Interface

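PCIe 5.0 x16 provides about 64GB/s in each direction (128GB/s bidirectional), which is enough for 400G line rate. Sustaining 800G line rate (100GB/s in each direction) requires the PCIe 6.0 x16 link that ConnectX-8 also supports.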
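A quick arithmetic check helps when matching the host interface to the port speed. The sketch below estimates per-direction PCIe throughput from the per-lane signalling rate and compares it with the network line rate. It ignores protocol overhead above the line encoding, and for PCIe 6.0 it uses the raw rate without FLIT overhead; both are simplifying assumptions.

```python
# Per-lane signalling rate in GT/s for each PCIe generation.
PCIE_GT_PER_LANE = {4: 16.0, 5: 32.0, 6: 64.0}

# Line-encoding efficiency: 128b/130b for Gen4 and Gen5.
# Gen6 is treated as 1.0 (raw rate, FLIT overhead ignored), an assumption.
ENCODING_EFFICIENCY = {4: 128 / 130, 5: 128 / 130, 6: 1.0}


def pcie_gbps_per_direction(gen: int, lanes: int = 16) -> float:
    """Approximate usable PCIe bandwidth in Gb/s for one direction."""
    return PCIE_GT_PER_LANE[gen] * lanes * ENCODING_EFFICIENCY[gen]


def can_sustain(gen: int, port_gbps: float, lanes: int = 16) -> bool:
    """True if the PCIe link can carry the port's line rate in one direction."""
    return pcie_gbps_per_direction(gen, lanes) >= port_gbps


if __name__ == "__main__":
    for gen in (5, 6):
        gbps = pcie_gbps_per_direction(gen)
        print(f"PCIe {gen}.0 x16: {gbps:.0f} Gb/s ({gbps / 8:.0f} GB/s) per direction, "
              f"400G ok: {can_sustain(gen, 400)}, 800G ok: {can_sustain(gen, 800)}")
```

The commonly quoted 128GB/s for PCIe 5.0 x16 counts both directions together; per direction the link carries roughly 63GB/s.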

Network Interface

Supports single-port 800GbE OSFP112 or dual-port 400GbE QSFP112 flexible configurations.

Backward compatible with 200GbE/100GbE speeds, adapting to existing infrastructure.

On-Chip Acceleration Engine

Integrates dedicated hardware engines that fully offload flow table management, congestion control (DCQCN), packet verification, and related functions.
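For readers unfamiliar with DCQCN, the sketch below models the sender-side rate update from the published DCQCN algorithm: a congestion notification packet (CNP) cuts the current rate in proportion to a congestion estimate `alpha`, and recovery steps move the rate back toward the saved target. It is a simplified model with illustrative class and method names, not the NIC's hardware implementation, and it omits the timers and byte counters that trigger each step.

```python
from dataclasses import dataclass


@dataclass
class DcqcnSender:
    current_rate: float      # sending rate, e.g. in Gb/s
    target_rate: float       # rate to recover toward
    alpha: float = 1.0       # congestion estimate in [0, 1]
    g: float = 1 / 256       # averaging gain for alpha (assumed value)

    def on_cnp(self) -> None:
        """Congestion notification received: remember the rate, then cut it."""
        self.target_rate = self.current_rate
        self.current_rate *= 1 - self.alpha / 2
        self.alpha = (1 - self.g) * self.alpha + self.g

    def on_alpha_timer(self) -> None:
        """No CNP during the update interval: decay the congestion estimate."""
        self.alpha = (1 - self.g) * self.alpha

    def on_fast_recovery(self) -> None:
        """Move halfway back toward the target rate."""
        self.current_rate = (self.target_rate + self.current_rate) / 2


if __name__ == "__main__":
    sender = DcqcnSender(current_rate=400.0, target_rate=400.0)
    sender.on_cnp()
    print("after CNP:", sender.current_rate)
    for _ in range(3):
        sender.on_fast_recovery()
        print("recovering:", sender.current_rate)
```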

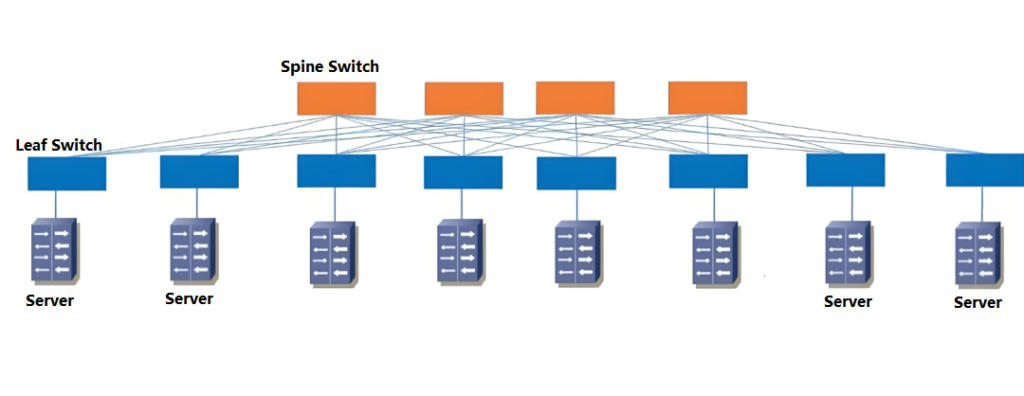

Networking Architecture and Connectivity

The ConnectX-8 SuperNIC supports multi-layer Clos network topologies for building high-bandwidth, non-blocking AI computing clusters.

Single Node Connection

Each server deploys 1-2 ConnectX-8 NICs, interconnected with the host through PCIe 5.0.

Each port connects directly to a leaf switch over an OSFP or QSFP112 optical link, matching the port form factors of the card, so that two ports provide dual-uplink redundancy.

Cluster Networking

- Leaf Switch: NVIDIA Quantum-3 series (800G InfiniBand) or Spectrum-4 series (400G Ethernet with RoCEv2), with adaptive routing.

- Spine Switch: Fully interconnected with leaf switches through 800G high-speed ports, providing non-blocking bandwidth.

- Spine-Leaf Architecture

- GPU Direct Networking: GPUs on different nodes access each other's memory directly via RDMA, forming a distributed training cluster.
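The sizing of a non-blocking leaf-spine fabric follows from the switch port count. The sketch below assumes a two-tier design in which every switch has the same number of ports at the same speed, each leaf splits its ports evenly between hosts and spines, and each leaf has one link to every spine. The function names are illustrative.

```python
def two_tier_nonblocking(radix: int) -> dict:
    """Maximum size of a non-blocking two-tier leaf-spine fabric.

    radix: ports per switch (assumed identical for leaf and spine switches).
    """
    if radix < 2 or radix % 2:
        raise ValueError("radix must be an even number of at least 2")
    downlinks = radix // 2   # host-facing ports per leaf
    spines = radix // 2      # one uplink from each leaf to each spine
    leaves = radix           # each spine port connects to a different leaf
    return {
        "leaves": leaves,
        "spines": spines,
        "hosts_per_leaf": downlinks,
        "max_host_ports": leaves * downlinks,
    }


def oversubscription(down_ports: int, up_ports: int,
                     down_gbps: float, up_gbps: float) -> float:
    """Leaf oversubscription ratio; 1.0 or less means non-blocking."""
    return (down_ports * down_gbps) / (up_ports * up_gbps)


if __name__ == "__main__":
    print(two_tier_nonblocking(64))
    print(oversubscription(down_ports=32, up_ports=32, down_gbps=400, up_gbps=400))
```

Larger clusters than this bound allows need a third switching tier or a higher-radix switch.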

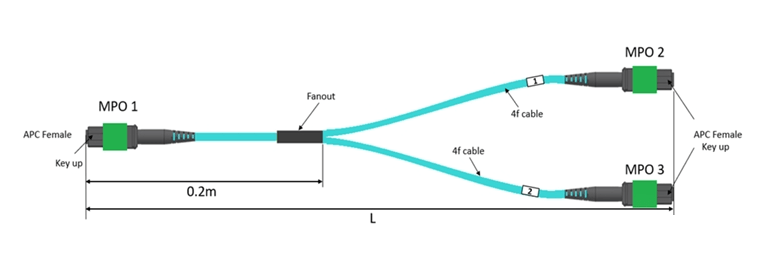

Optical Modules and Fiber Selection

Optical Modules

800G Scenarios: OSFP112 800G-SR8 (multi-mode, 100m), 800G-VR8 (multi-mode, shorter reach, typically 50m), or 800G-DR8 (single-mode, 500m).

400G Scenarios: QSFP112 400G-VR4/SR4/DR4.

Fiber Types:

Multi-Mode (MMF): OM5/OM4 (850nm, supporting 400G-SR8 up to 100m).

Single-Mode (SMF): OS2 (1310nm/1550nm, supporting long-distance transmission over 10km).
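The reach figures above can be turned into a simple lookup when planning links. The sketch below picks the shortest-reach 800G module that covers a given distance, using only the SR8 (100m, multi-mode) and DR8 (500m, single-mode) figures listed in this section. The table and function names are illustrative, and real selections should also account for fiber grade, connector type, and link budget.

```python
from typing import Optional, Tuple

# (maximum reach in meters, module type, fiber type), shortest reach first.
MODULES_800G = [
    (100, "800G-SR8", "MMF"),
    (500, "800G-DR8", "SMF"),
]


def pick_800g_module(distance_m: float) -> Optional[Tuple[str, str]]:
    """Return (module, fiber) for the shortest-reach option covering distance_m."""
    if distance_m <= 0:
        raise ValueError("distance_m must be positive")
    for max_reach, module, fiber in MODULES_800G:
        if distance_m <= max_reach:
            return module, fiber
    return None  # beyond the reaches listed here


if __name__ == "__main__":
    for distance in (30, 100, 300, 2000):
        print(distance, "m ->", pick_800g_module(distance))
```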

Compatible Switches and Ecosystem Collaboration

NVIDIA Switches:

Quantum-3: 800G InfiniBand switch supporting SHARPv3 aggregated communication acceleration.

Spectrum-4: 400G Ethernet switch supporting RoCEv2 and intelligent traffic scheduling.

Third-Party Switches:

Arista 7800R3 (800G), Cisco Nexus 92300YC (400G): when deploying with these switches, confirm that RoCEv2 and ECMP load balancing are supported and enabled.

Related Products:

- QSFP112-400G-DR4 400G QSFP112 DR4 PAM4 1310nm 500m MTP/MPO-12 with KP4 FEC Optical Transceiver Module: $1350.00

- QSFP112-400G-FR1 4x100G QSFP112 FR1 PAM4 1310nm 2km MTP/MPO-12 SMF FEC Optical Transceiver Module: $1300.00

- QSFP112-400G-FR4 400G QSFP112 FR4 PAM4 CWDM 2km Duplex LC SMF FEC Optical Transceiver Module: $1760.00

- NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module: $750.00

- NVIDIA MMS4X00-NM-FLT Compatible 800G Twin-port OSFP 2x400G Flat Top PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module: $1200.00

- NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module: $850.00

- NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module: $1100.00

- QSFP112-400G-SR4 400G QSFP112 SR4 PAM4 850nm 100m MTP/MPO-12 OM3 FEC Optical Transceiver Module: $650.00