Traditional OEM GPU Servers: Intel/AMD x86 CPU + NVIDIA GPU

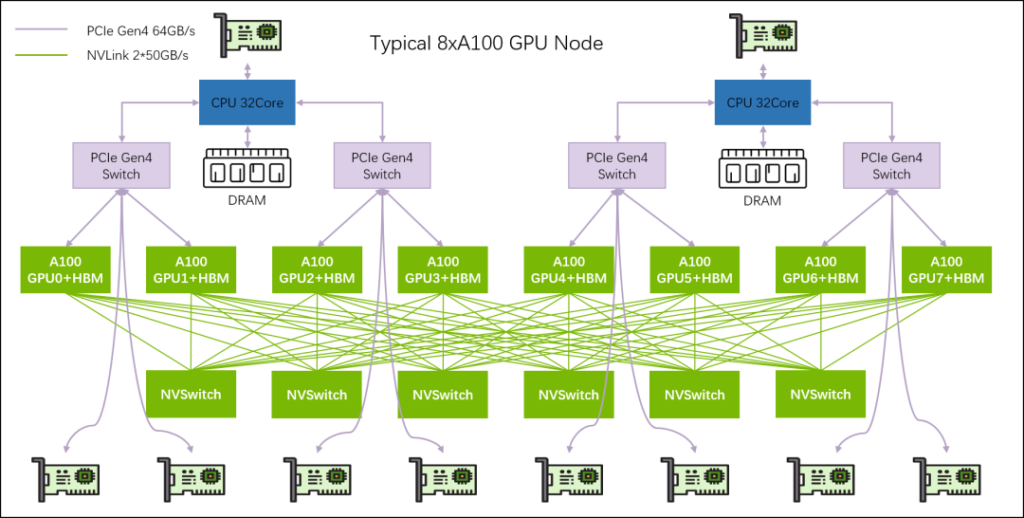

Before 2024, both NVIDIA’s own servers and third-party servers equipped with NVIDIA GPUs were built around x86 CPUs. The GPUs connected to the motherboard either as PCIe cards or as 8-GPU modules.

At this stage, the CPU and GPU were sourced independently. Server manufacturers assembled their servers by purchasing GPU modules (e.g., 8× A100) and choosing Intel or AMD CPUs based on performance and cost-effectiveness.

Next-Generation OEM GPU Servers: NVIDIA CPU + NVIDIA GPU

With the arrival of the NVIDIA GH200 superchip in 2024, NVIDIA began pairing its GPUs with its own integrated CPUs.

- Desktop Computing Era: The CPU was primary, with the GPU (graphics card) as a secondary component. The CPU chip could integrate a GPU chip, known as an integrated graphics card.

- AI Data Center Era: The GPU has taken the primary role, with the CPU becoming secondary. The GPU chip/card now integrates the CPU.

As a result, NVIDIA’s level of integration has increased, and the company has started offering complete machines and even full racks.

CPU Chip: Grace (Arm), based on the Armv9 architecture.

GPU Chip: Hopper/Blackwell/…

For example, the Hopper series debuted with the H100-80GB, followed by several variants:

- H800: A cut-down version of the H100.

- H200: An upgraded version of the H100 with larger, faster HBM3e memory.

- H20: A cut-down version of the H200, significantly inferior to the H800.

Chip Product (Naming) Examples

Grace CPU + Hopper 200 (H200) GPU

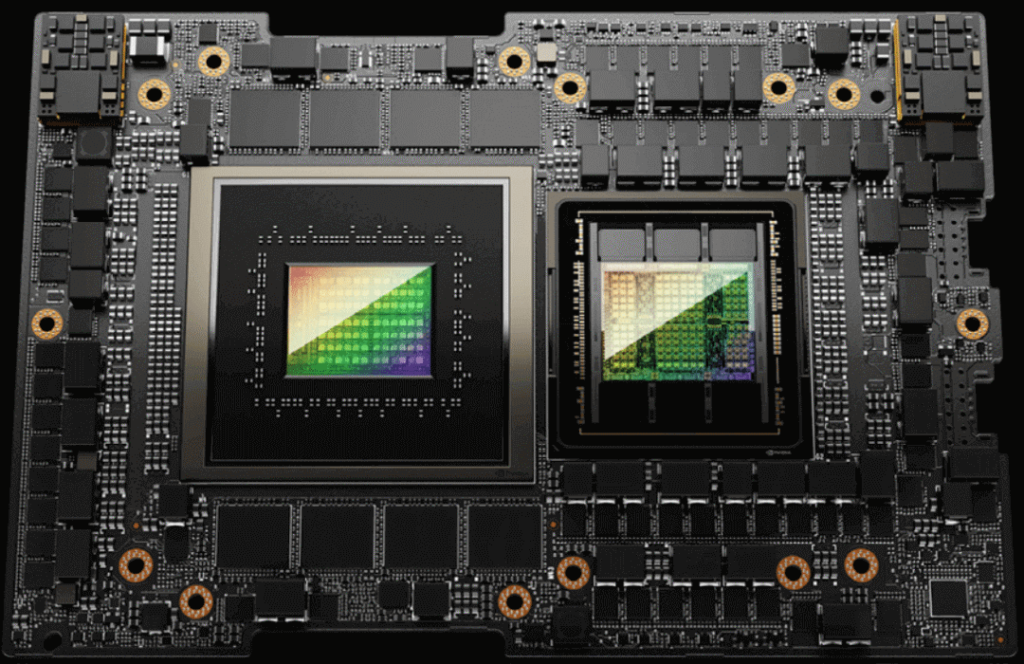

GH200 on a single board:

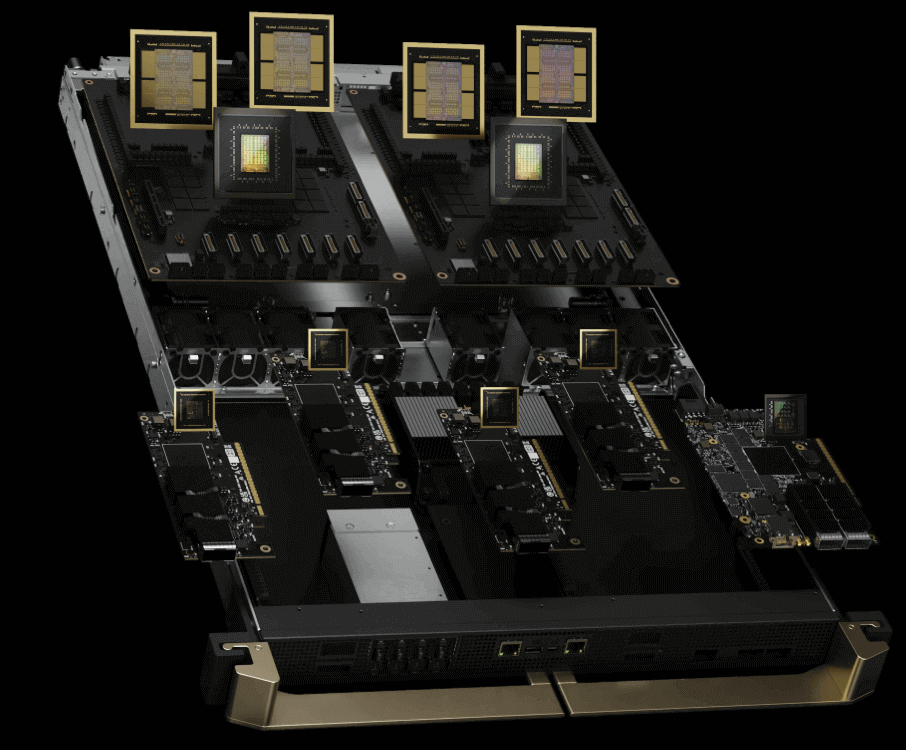

Grace CPU + Blackwell 200 (B200) GPU

GB200 on a single board (module), with high power consumption and integrated liquid cooling.

72 B200 GPUs together form the OEM NVL72 cabinet.

Internal Design of GH200 Servers

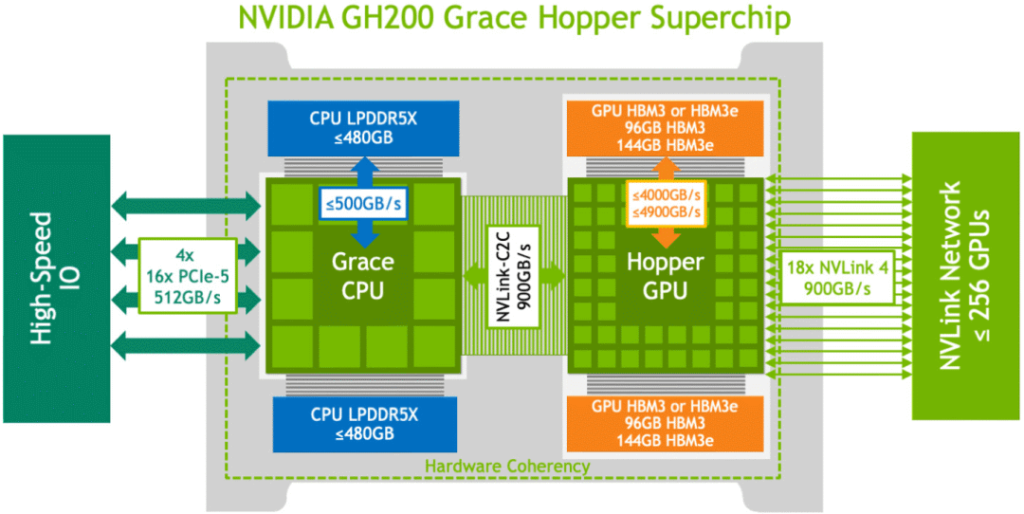

GH200 Chip Logical Diagram

Integration of CPU, GPU, RAM, and VRAM into a Single Chip

Core Hardware

As illustrated in the diagram, a single GH200 superchip integrates the following core components:

- One NVIDIA Grace CPU

- One NVIDIA H200 GPU

- Up to 480GB of CPU memory

- 96GB or 144GB of GPU VRAM

Chip Hardware Interconnects

The CPU connects to the motherboard via four PCIe Gen5 x16 links:

- Each PCIe Gen5 x16 link offers 128GB/s of bidirectional bandwidth (64GB/s in each direction)

- The four links therefore provide 512GB/s in total
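The 128GB/s and 512GB/s figures follow directly from the PCIe Gen5 signaling rate. The sketch below reproduces the arithmetic, assuming 32 GT/s per lane and 128b/130b line encoding (standard PCIe Gen5 parameters); the helper name `per_direction_gb_s` is purely illustrative.

```python
# Back-of-the-envelope PCIe Gen5 bandwidth for the GH200 host links.
# Assumptions: 32 GT/s per lane, 1 bit per transfer per lane, 128b/130b encoding.

GEN5_GT_PER_S = 32      # giga-transfers per second, per lane
LANES_PER_LINK = 16     # an x16 link
LINKS = 4               # four Gen5 x16 links, per the text


def per_direction_gb_s(gt_per_s: float, lanes: int, encoding: float = 1.0) -> float:
    """Bits per second across all lanes, converted to GB/s (one direction).

    `encoding` is the payload fraction (128/130 for Gen5); 1.0 gives the
    nominal figure that spec sheets usually quote.
    """
    return gt_per_s * lanes * encoding / 8


nominal = per_direction_gb_s(GEN5_GT_PER_S, LANES_PER_LINK)             # 64 GB/s
encoded = per_direction_gb_s(GEN5_GT_PER_S, LANES_PER_LINK, 128 / 130)  # ~63 GB/s

bidirectional_per_link = 2 * nominal       # 128 GB/s per x16 link
total = LINKS * bidirectional_per_link     # 512 GB/s across four links

print(f"per direction (nominal): {nominal:.1f} GB/s")
print(f"per direction (after 128b/130b): {encoded:.1f} GB/s")
print(f"bidirectional per x16 link: {bidirectional_per_link:.0f} GB/s")
print(f"four links: {total:.0f} GB/s")
```

The quoted 128GB/s is the nominal value; usable payload bandwidth is slightly lower once encoding and protocol overhead are accounted for.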

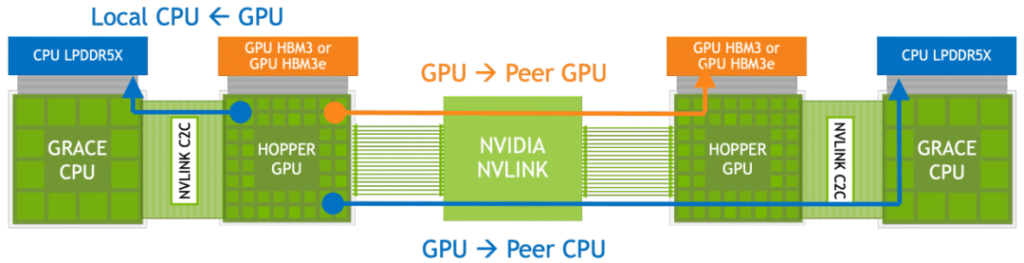

The CPU and GPU are interconnected using NVLink® Chip-2-Chip (NVLink-C2C) technology:

- 900GB/s of bidirectional bandwidth, about seven times that of a PCIe Gen5 x16 link

GPU-to-GPU interconnects (within the same host and across hosts) use 18 fourth-generation NVLink (NVLink4) links:

- 900GB/s of total bandwidth
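Both 900GB/s figures and the "seven times" comparison can be checked with a few lines of arithmetic. This sketch assumes each NVLink4 link carries 50GB/s bidirectional (25GB/s per direction), the commonly cited Hopper-generation figure, and reuses the 128GB/s PCIe Gen5 x16 number from above.

```python
# Compare the interconnect figures quoted for GH200.
# Assumption: one NVLink4 link = 50 GB/s bidirectional (25 GB/s each way).

NVLINK4_LINK_BIDIR_GB_S = 50
NVLINK4_LINKS = 18
NVLINK_C2C_BIDIR_GB_S = 900
PCIE_GEN5_X16_BIDIR_GB_S = 128

# 18 links x 50 GB/s = 900 GB/s of GPU-to-GPU bandwidth
nvlink4_total = NVLINK4_LINKS * NVLINK4_LINK_BIDIR_GB_S

# CPU-GPU link vs. a single PCIe Gen5 x16 link
c2c_vs_pcie = NVLINK_C2C_BIDIR_GB_S / PCIE_GEN5_X16_BIDIR_GB_S

print(f"18 x NVLink4: {nvlink4_total} GB/s")
print(f"NVLink-C2C vs PCIe Gen5 x16: {c2c_vs_pcie:.1f}x")
```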

NVLink-C2C provides what NVIDIA calls “memory coherency”: the CPU and GPU see a consistent view of CPU memory and GPU VRAM. The benefits include:

- A unified pool of memory and VRAM of up to 624GB (480GB LPDDR5X + 144GB HBM3e) that developers can use without distinguishing between the two, improving developer efficiency

- Concurrent and transparent access to CPU and GPU memory by both the CPU and GPU

- GPU VRAM can be oversubscribed, using CPU memory when needed, thanks to the large interconnect bandwidth and low latency
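To make oversubscription concrete, here is a deliberately simple toy model, not a description of how the CUDA driver actually places pages. It assumes the GPU streams its whole working set once per step, that HBM3e delivers about 4.9TB/s, that spilled data is read over NVLink-C2C at about 450GB/s per direction (half of the 900GB/s bidirectional figure), and that the two reads do not overlap. The function `plan` and its parameters are hypothetical.

```python
# Toy model of GPU memory oversubscription on one GH200 (144GB HBM3e option).
# Assumptions: single sequential pass over the working set, no overlap,
# no caching or page migration effects. Real behavior is workload-dependent.

HBM_GB = 144
LPDDR_GB = 480
HBM_TB_S = 4.9            # HBM3e bandwidth
C2C_ONE_WAY_TB_S = 0.45   # assumed NVLink-C2C bandwidth per direction


def plan(working_set_gb: float, link_tb_s: float = C2C_ONE_WAY_TB_S) -> dict:
    """Split a working set between HBM and CPU memory; estimate one read pass."""
    if working_set_gb > HBM_GB + LPDDR_GB:
        raise ValueError("working set exceeds the 624GB unified pool")
    in_hbm = min(working_set_gb, HBM_GB)
    spilled = working_set_gb - in_hbm
    # GB divided by TB/s yields milliseconds (x GB / (y * 1000 GB/s) = x / y ms)
    ms = in_hbm / HBM_TB_S + spilled / link_tb_s
    return {"hbm_gb": in_hbm, "cpu_gb": spilled, "est_ms_per_pass": round(ms, 1)}


print(plan(100))                    # fits entirely in HBM
print(plan(200))                    # 56GB spills to LPDDR5X over NVLink-C2C
print(plan(200, link_tb_s=0.064))   # same spill over a PCIe Gen5 x16-class link
```

Comparing the last two calls illustrates why the text credits the interconnect bandwidth: under these assumptions, the same spill is several times more expensive over a PCIe-class link than over NVLink-C2C.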

Next, let’s delve into the hardware components such as the CPU, memory, and GPU.

CPU and Memory

72-core ARMv9 CPU

The 72-core Grace CPU is based on the Neoverse V2 Armv9 core architecture.

480GB LPDDR5X (Low-Power DDR) Memory

- Supports up to 480GB of LPDDR5X memory

- Up to 500GB/s of memory bandwidth per CPU

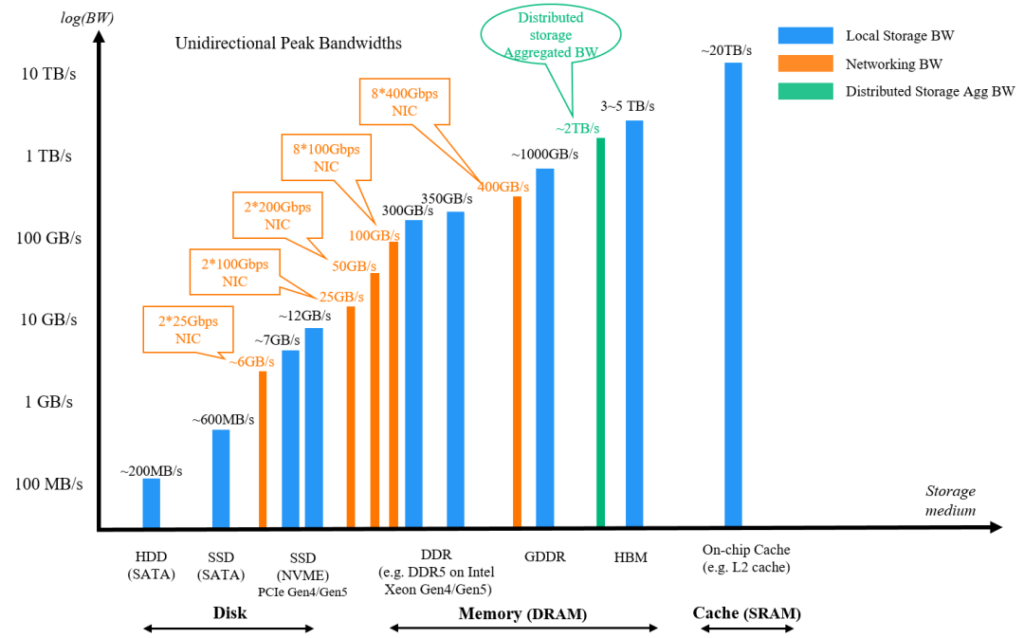

To put this bandwidth in context, consider the memory types involved:

Comparison of Three Types of Memory: DDR vs. LPDDR vs. HBM

Most servers use DDR memory, connected to the CPU through DIMM slots on the motherboard. The first through fourth generations of LPDDR are low-power versions of DDR1 through DDR4 and are commonly used in mobile devices.

- LPDDR5 was designed independently of DDR5 and even reached production earlier than DDR5

- It is soldered directly onto the board next to the CPU, so it is non-removable and non-expandable; this increases cost but allows higher speeds

- A similar type is GDDR, used in GPUs like the RTX 4090
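A rough way to compare these memory types is to count how many conventional DDR channels it would take to match Grace’s LPDDR5X bandwidth. The sketch assumes DDR5-4800 as an example speed grade (4800 MT/s on a 64-bit, 8-byte channel); the 500GB/s LPDDR5X and 4.9TB/s HBM3e figures come from this article. Real systems vary by speed grade and channel count.

```python
# Rough peak-bandwidth comparison: DDR5 vs. Grace LPDDR5X vs. H200 HBM3e.
# Assumption: DDR5-4800 as an example grade, 8 bytes per transfer per channel.


def ddr_channel_gb_s(mt_per_s: int, bus_bytes: int = 8) -> float:
    """Peak bandwidth of one memory channel: transfers/s x bytes per transfer."""
    return mt_per_s * bus_bytes / 1000


ddr5_channel = ddr_channel_gb_s(4800)   # 38.4 GB/s per channel
grace_lpddr5x = 500.0                   # GB/s, per the article
h200_hbm3e = 4900.0                     # GB/s, per the article

print(f"DDR5-4800, one channel: {ddr5_channel:.1f} GB/s")
print(f"DDR5-4800 channels to match Grace LPDDR5X: {grace_lpddr5x / ddr5_channel:.1f}")
print(f"HBM3e vs LPDDR5X: {h200_hbm3e / grace_lpddr5x:.1f}x")
```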

GPU and VRAM

H200 GPU Computing Power

Computing-power figures for the H200 GPU are listed in the GH200 product specifications later in this article.

VRAM Options

Two types of VRAM are supported, with a choice between:

- 96GB HBM3

- 144GB HBM3e, offering 4.9TB/s of bandwidth, roughly 50% more than the H100 SXM’s 3.35TB/s.

Variant: GH200 NVL2 with Full NVLINK Connection

This variant places two GH200 chips on a single board, doubling the CPU, GPU, RAM, and VRAM, with the two chips fully interconnected via NVLink. For example, in a server that can accommodate 8 boards:

- Using GH200 chips: 8 × {1 Grace CPU (72 cores), 1 H200 GPU}

- Using the GH200 NVL2 variant: 8 × {2 Grace CPUs (144 cores), 2 H200 GPUs}
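The per-board arithmetic can be tallied programmatically. This sketch uses the article’s per-chip figures (one 72-core Grace CPU, one H200 GPU, 480GB LPDDR5X) and assumes the 144GB HBM3e option; the `Board` class and the 8-board server are hypothetical illustrations.

```python
# Tally a hypothetical 8-board server built from GH200 vs. GH200 NVL2 boards.
# Per-chip figures: 72 Grace cores, 1 H200 GPU, 480GB LPDDR5X,
# and (assumed) the 144GB HBM3e option.

from dataclasses import dataclass


@dataclass(frozen=True)
class Board:
    chips: int  # GH200 superchips per board: 1 for GH200, 2 for GH200 NVL2

    def totals(self, boards: int) -> dict:
        """Aggregate resources for a server holding `boards` of these boards."""
        n = self.chips * boards
        return {
            "grace_cpus": n,
            "cpu_cores": 72 * n,
            "h200_gpus": n,
            "lpddr5x_gb": 480 * n,
            "hbm3e_gb": 144 * n,
        }


print("GH200     :", Board(chips=1).totals(boards=8))
print("GH200 NVL2:", Board(chips=2).totals(boards=8))
```

Note that the 144 in the NVL2 case is a core count (2 × 72), not a CPU count: each board carries two Grace CPUs.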

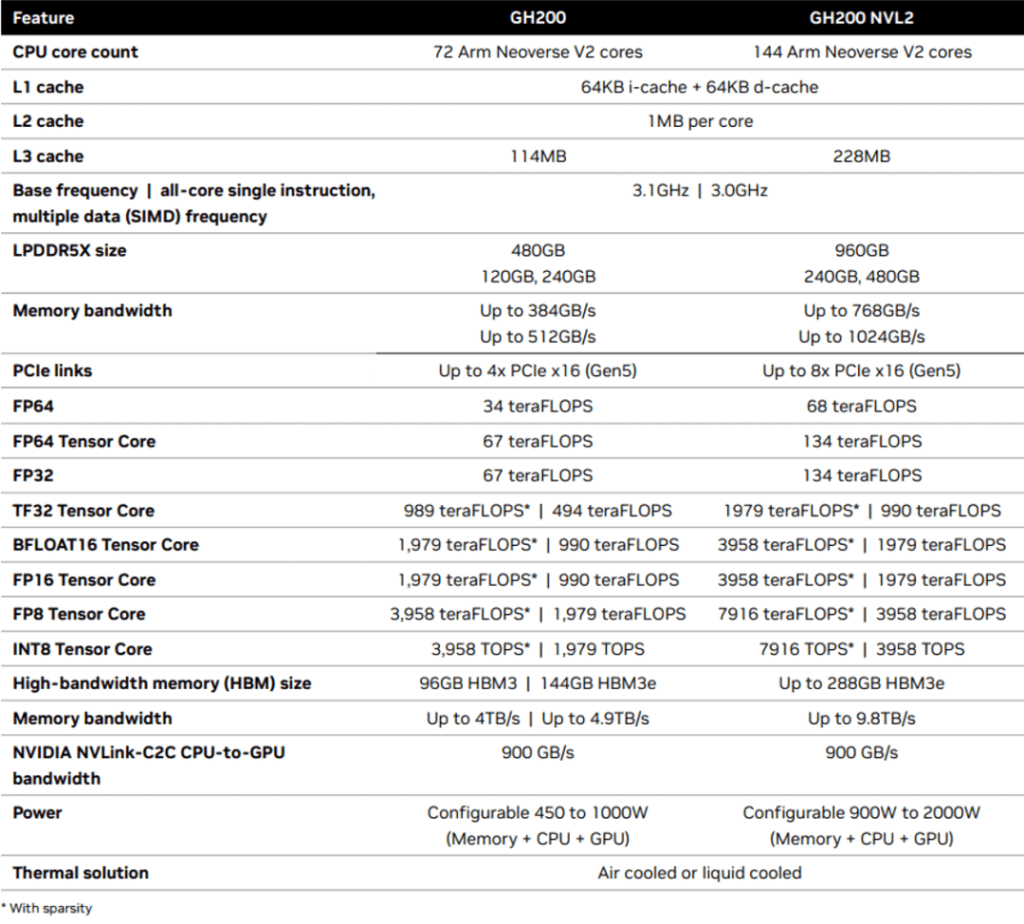

GH200 & GH200 NVL2 Product Specifications (Computing Power)

The product specifications for the NVIDIA GH200 are shown in the specification table. The upper section covers CPU, memory, and other parameters, while the GPU parameters start from the “FP64” row.

GH200 Servers and Networking

There are two server specifications, corresponding to PCIe cards and NVLink cards.

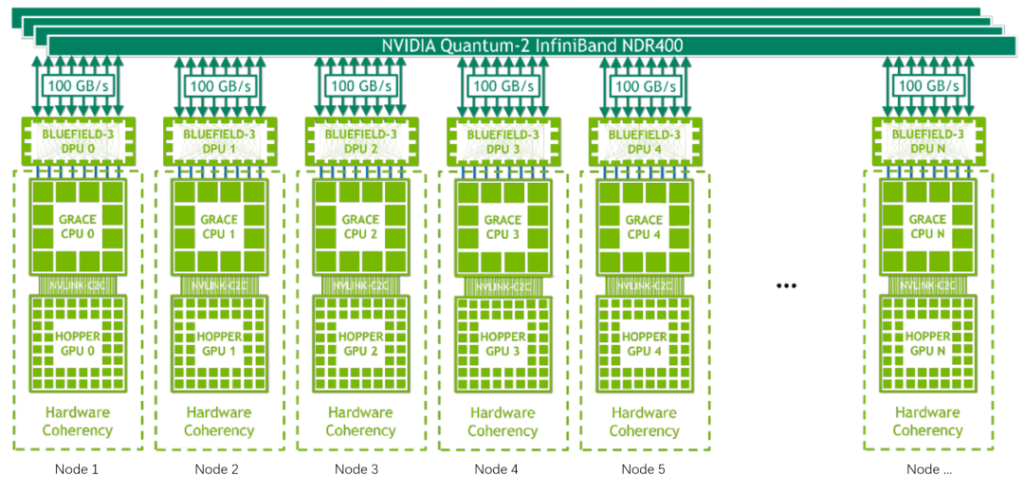

NVIDIA MGX with GH200: OEM Host and Networking

The diagram below illustrates a networking method for a single-card node:

- Each node contains only one GH200 chip, functioning as a PCIe card without NVLink.

- Each node’s network card or BlueField-3 (BF3) DPU connects to a switch.

- There is no direct connection between GPUs across nodes; communication is achieved through the host network (GPU -> CPU -> NIC).

- Suitable for HPC workloads and small to medium-scale AI workloads.
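Because GPU traffic between these nodes passes through several hops (GPU -> CPU -> NIC), its throughput is capped by the slowest hop. The sketch below models this with illustrative per-direction figures that are assumptions, not values from the article: about 450GB/s over NVLink-C2C, about 64GB/s over a PCIe Gen5 x16 link to the NIC, and a 400Gb/s (50GB/s) NIC/DPU line rate. It also ignores latency, protocol overhead, and GPUDirect-style optimizations.

```python
# Sketch: a multi-hop path's throughput is bounded by its slowest hop.
# All per-direction figures below are illustrative assumptions.


def path_bandwidth(hops: dict[str, float]) -> tuple[str, float]:
    """Return the bottleneck hop and its bandwidth in GB/s."""
    name = min(hops, key=hops.get)
    return name, hops[name]


host_network_path = {
    "GPU->CPU (NVLink-C2C)": 450.0,
    "CPU->NIC (PCIe Gen5 x16)": 64.0,
    "NIC line rate (400 Gb/s)": 400 / 8,
}

hop, gb_s = path_bandwidth(host_network_path)
print(f"bottleneck: {hop} at {gb_s:.0f} GB/s per direction")

# For contrast, an assumed NVLink4 fabric (18 links x 25 GB/s each way)
nvlink_one_way = 18 * 25.0
print(f"NVLink fabric vs host network: {nvlink_one_way / gb_s:.0f}x")
```

Under these assumptions the NIC line rate, not the CPU-GPU link, limits cross-node GPU traffic, which is consistent with positioning this layout for HPC and small-to-medium AI workloads.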

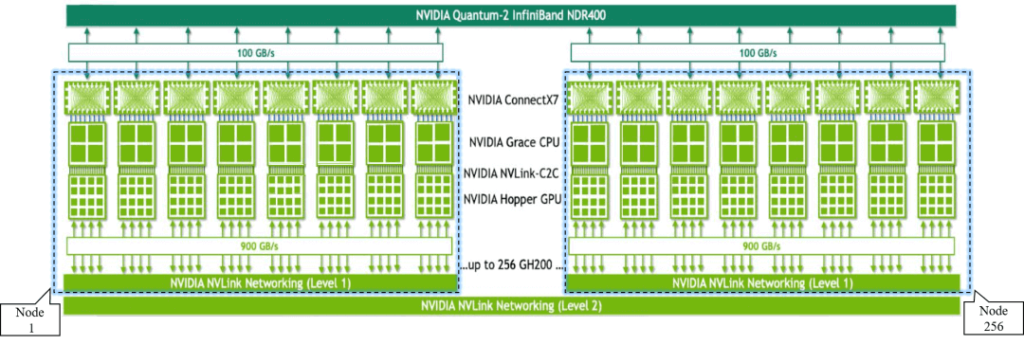

NVIDIA GH200 NVL32: OEM 32-Card Cabinet

The 32-card cabinet uses NVLink to connect 32 GH200 chips into a single logical GPU module, hence the name NVL32.

The NVL32 module is essentially a cabinet:

- A single cabinet provides 19.5TB of memory and VRAM.

- NVLink TLB allows any GPU to access any memory/VRAM within the cabinet.
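The 19.5TB figure is consistent with multiplying the per-chip unified memory (480GB LPDDR5X + 144GB HBM3e) by 32 chips and dividing by 1024. The sketch shows both binary and decimal conversions, since the unit convention is not stated in the source.

```python
# Pooled memory in a GH200 NVL32 cabinet, using the article's per-chip figures.

CHIPS = 32
PER_CHIP_GB = 480 + 144            # 624GB unified memory per GH200

total_gb = CHIPS * PER_CHIP_GB      # 19,968 GB
total_tb_binary = total_gb / 1024   # 19.5 when dividing by 1024
total_tb_decimal = total_gb / 1000  # ~20.0 when dividing by 1000

print(f"{total_gb} GB = {total_tb_binary:.1f} TB (/1024) = {total_tb_decimal:.1f} TB (/1000)")
```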

There are three types of memory/VRAM access methods in the NVIDIA GH200 NVL32, including Extended GPU Memory (EGM).

Multiple cabinets can be interconnected through a network to form a cluster, suitable for large-scale AI workloads.
