All companies now need to keep pace with evolving digital infrastructure technology, with a focus on efficiency and innovation. A significant development in networking is the arrival of the 400GbE switch, which greatly increases bandwidth and reduces latency to meet the ever-growing demands of today's applications. Organizations have adopted new ways of working that require heavy data transmission and support for AI-driven applications, and the 400GbE switch appears to be setting the stage for the future. This article describes the physical aspects of 400GbE technology, the network architecture it enables, and how it is used within the broader digital ecosystems that organizations manage.

What is a 400GbE Switch and How Does it Work?

400GbE switches make it easier to interconnect networks, including those without extensive fiber backbones. As networks rely more heavily on fiber interconnects, the switching demands placed on hardware have grown. Advances in AI have further increased demand for high-capacity 400GbE switches that can route large volumes of data with low latency. With the continued rollout of 5G and its successors over the coming decade, such switches are expected to become a requirement for large AI systems. Automation and network-management tools make it feasible to support the infrastructure needed to interconnect multiple networks. As AI adoption grows, the need for low-latency, high-volume switches will spread across networks ranging from enterprise to cloud.

Understanding Switch Architecture

The 400GbE switch architecture integrates many subsystems to maximize efficiency and data flow, including interfaces for airflow control. At its core, the switching fabric routes data packets efficiently among ports. This high-speed matrix uses parallel data paths to minimize congestion, maximizing throughput and minimizing delay. Pluggable high-speed optical transceivers connect to the switch ports and convert electrical signals into optical signals for fast transmission over fiber-optic cables. Packet processing engines are also embedded in the architecture. They handle high-capacity network functions and support features such as auto-configuration and adaptation to changing network conditions. Together, these architectural features let the switch meet 400GbE performance targets and support the development of more advanced networking technologies.
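A 400G transceiver does not carry a single 400 Gb/s stream; it runs several parallel lanes. The sketch below assumes the IEEE 802.3 400GBASE-R encoding (256b/257b transcoding plus RS(544,514) "KP4" FEC) and PAM4 modulation at 2 bits per symbol, and computes the per-lane line rate and symbol rate for 8-lane optics (such as SR8) and 4-lane optics (such as DR4 and FR4). It is an arithmetic illustration, not a description of any specific module.

```python
from fractions import Fraction

MAC_RATE_GBPS = 400
# 256b/257b transcoding and RS(544,514) FEC overhead used by 400GBASE-R (assumed here)
OVERHEAD = Fraction(257, 256) * Fraction(544, 514)
BITS_PER_PAM4_SYMBOL = 2


def lane_rates(lanes: int) -> tuple[float, float]:
    """Return (per-lane line rate in Gb/s, PAM4 symbol rate in GBd)."""
    line_rate = MAC_RATE_GBPS * OVERHEAD / lanes
    baud = line_rate / BITS_PER_PAM4_SYMBOL
    return float(line_rate), float(baud)


for name, lanes in [("8-lane (e.g. SR8)", 8), ("4-lane (e.g. DR4/FR4)", 4)]:
    rate, baud = lane_rates(lanes)
    print(f"{name}: {rate:.4f} Gb/s per lane, {baud:.4f} GBd PAM4")
```

The two overhead factors multiply to exactly 1.0625, so the aggregate line rate is 425 Gb/s: 53.125 Gb/s (26.5625 GBd) per lane for 8 lanes, or 106.25 Gb/s (53.125 GBd) per lane for 4 lanes.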

The Role of 400GbE in Modern Networks

The purpose of 400GbE is to meet the growing demand for bandwidth driven by data-intensive applications and services. It improves interconnection between data centers, allowing devices and systems to communicate faster and supporting services such as AI, machine learning, and big data. Networks built on 400GbE are designed for substantial bandwidth requirements while also offering low latency and high service reliability. In addition, the increased automation and more sophisticated network-management protocols available in 400GbE deployments help networks operate smoothly and grow, which matters in an era of digital transformation where business and industrial users depend on strong network performance.

Key Features of 400GbE Switches

Switches built on the 400GbE standard have several features that make network management more effective and improve overall performance. Cut-through forwarding keeps latency low enough for high-frequency trading and other mission-critical applications. These switches also provide Quality of Service (QoS), which manages network traffic by allocating bandwidth according to each application's needs. Many also support encryption protocols and other security features that protect data against interception. Their configuration can be modified and extended as technologies and business expectations evolve. Built-in provisioning and configuration tools handle routine network operation, reducing workload and the chance of error and thus increasing operational efficiency.
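One way to see what cut-through forwarding saves is serialization delay: a store-and-forward switch must receive an entire frame before forwarding it, while a cut-through switch can start once it has read enough of the header to make a forwarding decision. This is a simplified sketch; the 1500-byte frame and the 64-byte lookup point are assumptions, and real switch latency also includes ASIC pipeline delay, which the model ignores.

```python
def serialization_ns(num_bytes: int, rate_gbps: float) -> float:
    """Time to clock num_bytes onto a link; 1 Gb/s equals 1 bit per nanosecond."""
    return num_bytes * 8 / rate_gbps


LINK_GBPS = 400
FRAME_BYTES = 1500   # assumed full-size frame
LOOKUP_BYTES = 64    # assumed bytes read before a cut-through forwarding decision

# Store-and-forward waits for the whole frame at every hop.
store_and_forward_ns = serialization_ns(FRAME_BYTES, LINK_GBPS)
# Cut-through begins transmitting once the header portion has arrived.
cut_through_ns = serialization_ns(LOOKUP_BYTES, LINK_GBPS)

print(f"store-and-forward: {store_and_forward_ns:.2f} ns per hop")
print(f"cut-through:       {cut_through_ns:.2f} ns per hop")
```

At 400 Gb/s the per-hop gap is small in absolute terms (30 ns versus about 1.3 ns in this model), but it adds up across hops and grows at lower link speeds: the same frame takes 1200 ns to serialize at 10 Gb/s.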

How do you choose the right HPE or Juniper Switch?

Comparing HPE and Juniper Options

When comparing HPE and Juniper switches, the main parameters are performance, price, technical support, and airflow management. HPE switches stand out for their versatility and for delivering networking solutions through user-friendly interfaces and management tools, which makes them suitable for medium and large enterprises. HPE also emphasizes energy-saving technologies while remaining competitive in the market. Juniper, on the other hand, targets high-performance data center environments, prioritizing security and flexible network architecture design in data-rich settings and large data centers. In most cases, Juniper's automation capabilities handle most routine tasks, which minimizes the manual effort involved in network management. The choice between HPE and Juniper is primarily driven by factors within the organization, such as budget, network complexity, and compatibility with the existing IT environment.

Considerations for High Bandwidth Applications

Switches deployed in high-bandwidth environments must satisfy several criteria. First, switch capacity and throughput are essential: the switch must carry peak traffic without creating bottlenecks, so both port density and backplane capacity must be high. Second, Quality of Service (QoS) features are needed to prioritize some traffic classes over others, especially VoIP, streaming, and essential data services. Latency and throughput are closely related, and both must be managed. These considerations follow common industry recommendations and aim to build a robust network capable of handling heavy data requirements.
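QoS prioritization is often implemented with a weighted scheduler on each egress port. The sketch below uses deficit round robin (DRR), one common technique for weighted bandwidth sharing; it is not a specific vendor's scheduler (switches offer strict priority, WRR, DWRR, and other variants), and the class names and quanta are hypothetical.

```python
from collections import deque


def drr_schedule(queues: dict[str, list[int]], quanta: dict[str, int],
                 rounds: int) -> list[tuple[str, int]]:
    """Deficit round robin over per-class queues of packet sizes in bytes.

    Each round, a backlogged class earns its quantum of byte credit and sends
    head-of-line packets while its credit covers them, so classes with larger
    quanta receive a proportionally larger share of link bandwidth.
    """
    pending = {name: deque(sizes) for name, sizes in queues.items()}
    deficit = {name: 0 for name in queues}
    sent: list[tuple[str, int]] = []
    for _ in range(rounds):
        for name, queue in pending.items():
            if not queue:
                deficit[name] = 0  # idle classes do not bank credit
                continue
            deficit[name] += quanta[name]
            while queue and queue[0] <= deficit[name]:
                size = queue.popleft()
                deficit[name] -= size
                sent.append((name, size))
            if not queue:
                deficit[name] = 0
    return sent


# Hypothetical classes: "voice" gets three times the quantum of "bulk".
schedule = drr_schedule(
    queues={"voice": [500] * 10, "bulk": [500] * 10},
    quanta={"voice": 1500, "bulk": 500},
    rounds=2,
)
voice_bytes = sum(size for name, size in schedule if name == "voice")
bulk_bytes = sum(size for name, size in schedule if name == "bulk")
print(voice_bytes, bulk_bytes)
```

With both classes backlogged, voice sends 3000 bytes and bulk 1000 bytes, matching the 3:1 quanta. Strict priority, another common choice for voice, would instead always drain the voice queue first; many switches combine the two.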

What are the Benefits of a 64-Port 400GbE Switch?

Enhanced Connectivity and Port Density

The modern 64-port 400GbE switch offers exceptional connectivity and port density, providing a solid foundation for data-driven applications. Built for high-density deployments, it is designed to scale out the network and connect more devices on the enterprise network, which in turn carries more data traffic across the various ecosystems. This density suits data-intensive operations and can reduce the capital needed for infrastructure. The design also supports high data rates, low transmission delay, and greater data throughput. Consequently, these switches are most beneficial where data density is high, such as cloud services and large data centers running 400GbE on every port. Adding a 64-port 400GbE switch integrates with other parts of the network, improves its performance and stability, keeps it in line with current technology trends, and prepares it for future growth.
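Port density becomes concrete when planning a leaf switch. The sketch below assumes a hypothetical split of a 64-port 400GbE leaf into 48 server-facing ports and 16 uplinks, with every port at the same speed and each server-facing port broken out into 4 x 100G (breakout support depends on the switch and optics). It computes the leaf's oversubscription ratio and the resulting number of server ports.

```python
from fractions import Fraction


def leaf_oversubscription(total_ports: int, uplink_ports: int) -> Fraction:
    """Downlink-to-uplink bandwidth ratio, assuming all ports run at the same speed."""
    downlink_ports = total_ports - uplink_ports
    return Fraction(downlink_ports, uplink_ports)


def server_ports_after_breakout(downlink_ports: int, breakout: int) -> int:
    """Server-facing ports if each downlink is split `breakout` ways."""
    return downlink_ports * breakout


# Hypothetical split of a 64-port 400GbE leaf: 48 down, 16 up.
ratio = leaf_oversubscription(64, 16)
servers = server_ports_after_breakout(64 - 16, 4)  # e.g. 4 x 100G per 400G port
print(f"oversubscription {ratio.numerator}:{ratio.denominator}, {servers} x 100G server ports")
```

This split yields 3:1 oversubscription and 192 x 100G server ports; a 32/32 split would be non-blocking (1:1) at the cost of half the server ports.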

Boosting Throughput and Reducing Latency

A 64-port 400GbE switch improves network performance by processing data at lower latencies. These switches use advanced packet-forwarding technologies and dynamic algorithms to transmit data efficiently, reducing transfer time between network nodes. High-speed Ethernet of this kind greatly reduces throughput bottlenecks, even at peak usage. Built-in Quality of Service (QoS) features keep latency low and performance high by prioritizing critical traffic. Further, RDMA over Converged Ethernet (RoCE) improves the interaction between storage and compute resources in virtualized environments. Together, these improvements align with standard practices in infrastructure design and increase throughput while reducing latency, addressing present and future connectivity needs.
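Higher link speed raises throughput only if enough data is in flight. The bandwidth-delay product (BDP) gives a rough sense of how much data senders and switch buffers must handle to keep a link busy. The round-trip times below are hypothetical data center values, not measurements.

```python
def bandwidth_delay_product_bytes(rate_gbps: int, rtt_us: int) -> int:
    """Bytes in flight needed to keep a link of rate_gbps busy for one round trip."""
    # 1 Gb/s sustained for 1 microsecond carries 1,000 bits.
    bits_in_flight = rate_gbps * rtt_us * 1_000
    return bits_in_flight // 8


for rate in (100, 400):
    for rtt in (5, 10, 50):  # hypothetical round-trip times in microseconds
        kib = bandwidth_delay_product_bytes(rate, rtt) / 1024
        print(f"{rate}G, RTT {rtt} us: {kib:,.0f} KiB in flight")
```

At the same RTT, moving from 100G to 400G quadruples the BDP, which is one reason buffer sizing and congestion control (including the lossless configuration RoCE deployments typically rely on) receive more attention at 400G.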

Exploring Open Ethernet Switch Solutions from FS

Why Open Ethernet Is Gaining Popularity

The expansion of the open Ethernet market can be attributed to its flexibility, cost efficiency, and scalability. Open Ethernet solutions provide interoperability across multiple vendors, which eliminates vendor lock-in and gives organizations more freedom in how they develop and build out their network infrastructure. They also leave more room for network customization and for deploying innovative applications across the business. Costs are often lower as well, because open Ethernet allows the use of less expensive white-box hardware instead of proprietary systems. This approach can reduce both initial and maintenance expenses for infrastructure and lets the network expand at a reasonable pace. Taken together, these points give organizations a strong case for shifting to an open Ethernet architecture.

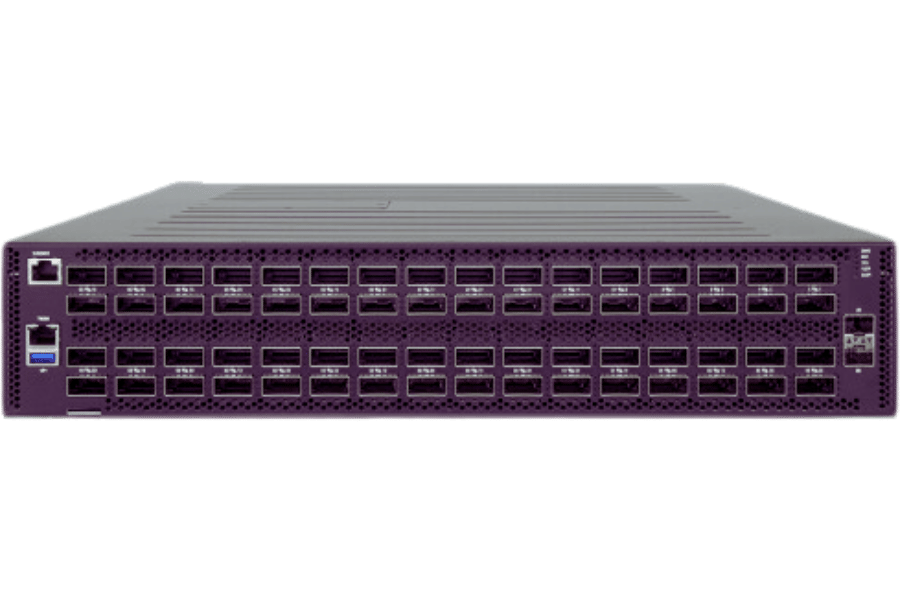

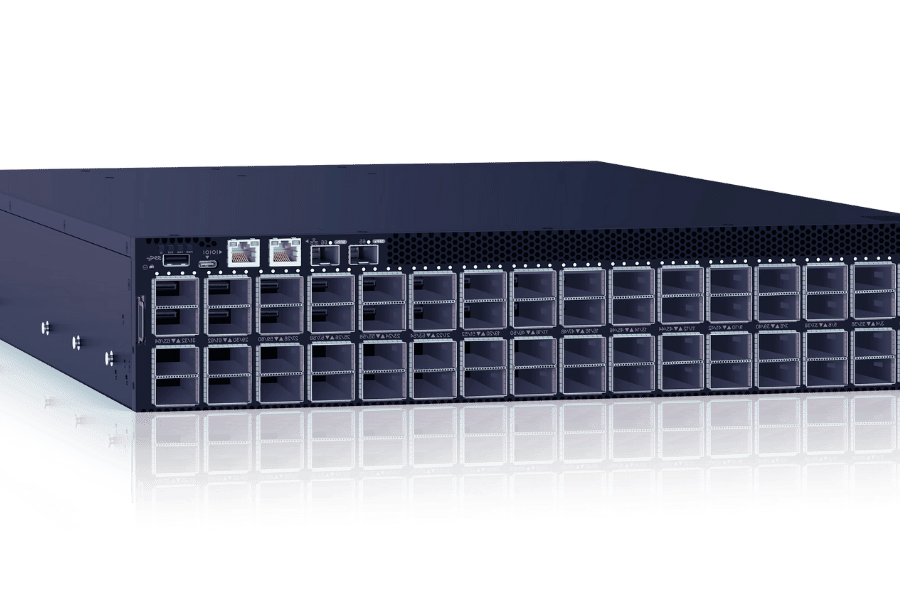

Take a Look at the FS N9510-64D

The FS N9510-64D is one of the newest open-networking Ethernet switches on the market, designed for current trends in network fabrics. With 64 400G Ethernet ports, this switch delivers very high throughput for data center, enterprise, and service provider environments. It supports a wide range of Layer 2 and Layer 3 protocols, which helps it handle many concurrent workloads. Moreover, the N9510-64D supports Quality of Service (QoS) policies and congestion-management mechanisms that help protect traffic flows in virtualized environments.

Additionally, this switch supports RDMA over Converged Ethernet (RoCE) v2, which enables fast data transfer in bandwidth-intensive applications, improving processing speed and stability. Its compatibility with many network operating systems provides the flexibility of open networking, letting organizations adapt the network to changing operational needs in a controlled way. The FS N9510-64D is a strong candidate for enterprises that need robust, low-cost, and scalable networking.
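RoCE v2 carries RDMA traffic inside UDP/IP, which is what allows a routed Ethernet network to identify and prioritize it. The sketch below checks whether a raw IPv4 packet is RoCE v2 by testing for UDP destination port 4791, the port assigned to RoCE v2. It is a simplified illustration, not how the N9510-64D classifies traffic; in practice, switches are commonly configured to classify RoCE traffic by its DSCP marking. The helper `make_udp_packet` is hypothetical and exists only to build test input.

```python
import struct

ROCEV2_UDP_PORT = 4791  # UDP destination port assigned to RoCE v2
IPPROTO_UDP = 17


def is_rocev2(ipv4_packet: bytes) -> bool:
    """Return True if the IPv4 packet carries UDP addressed to the RoCE v2 port."""
    if len(ipv4_packet) < 20:
        return False
    version = ipv4_packet[0] >> 4
    header_len = (ipv4_packet[0] & 0x0F) * 4  # IHL is counted in 32-bit words
    if version != 4 or header_len < 20:
        return False
    if ipv4_packet[9] != IPPROTO_UDP:  # IPv4 protocol field
        return False
    if len(ipv4_packet) < header_len + 8:  # need the full UDP header
        return False
    _src_port, dst_port = struct.unpack_from("!HH", ipv4_packet, header_len)
    return dst_port == ROCEV2_UDP_PORT


def make_udp_packet(dst_port: int) -> bytes:
    """Build a minimal IPv4 + UDP header for testing (other fields zeroed)."""
    ip_header = bytes([0x45]) + bytes(8) + bytes([IPPROTO_UDP]) + bytes(10)
    udp_header = struct.pack("!HHHH", 49152, dst_port, 8, 0)
    return ip_header + udp_header


print(is_rocev2(make_udp_packet(4791)))  # True
print(is_rocev2(make_udp_packet(443)))   # False
```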

Integration with Linux and Cumulus

The N9510-64D integrates well with Cumulus Linux, which gives it a solid and flexible base for network operations. Because Cumulus Linux is built on the Linux kernel, it is highly flexible: many standard Linux tools and scripts can be used to modify and automate tasks, including network switching. This freedom lets IT managers fine-tune a switch for a specific network service and can reduce networking TCO. Moreover, the FS N9510-64D's interoperability with Cumulus Linux simplifies deployment and enables DevOps-style networking, supporting fast expansion, good performance, and low-cost maintenance.
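Because Cumulus Linux exposes switch ports as ordinary Linux network interfaces (front-panel ports are named swp1, swp2, and so on), standard Linux mechanisms such as sysfs counters can drive automation. The sketch below reads byte counters from /sys/class/net and converts two samples into link utilization. The interface name and the 400 Gb/s link speed are assumptions, and this is a generic Linux example rather than a vendor tool.

```python
import time
from pathlib import Path


def read_counter(iface: str, counter: str,
                 sysfs_root: Path = Path("/sys/class/net")) -> int:
    """Read a Linux interface statistic such as rx_bytes or tx_bytes."""
    return int((sysfs_root / iface / "statistics" / counter).read_text().strip())


def utilization_pct(bytes_before: int, bytes_after: int,
                    interval_s: float, speed_gbps: float = 400.0) -> float:
    """Percent of link capacity used between two counter samples."""
    bits = (bytes_after - bytes_before) * 8
    return 100.0 * bits / (speed_gbps * 1e9 * interval_s)


def sample_utilization(iface: str, counter: str = "rx_bytes",
                       interval_s: float = 1.0, speed_gbps: float = 400.0,
                       sysfs_root: Path = Path("/sys/class/net")) -> float:
    """Take two counter samples interval_s apart and return utilization in percent."""
    before = read_counter(iface, counter, sysfs_root)
    time.sleep(interval_s)
    after = read_counter(iface, counter, sysfs_root)
    return utilization_pct(before, after, interval_s, speed_gbps)
```

On a switch running Cumulus Linux, `sample_utilization("swp1")` would report receive utilization on the first front-panel port, assuming it is a 400G port. The same counters can feed monitoring or alerting scripts.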

What Challenges Do You Face with Routing in a 400GbE Network?

Managing Scalability in Large Networks

When managing large-scale networks such as 400 Gigabit Ethernet (400GbE) networks, expansion must not outpace the available resources. Key strategies include advanced traffic-management techniques and a flexible, robust network infrastructure. Putting zero-touch provisioning (ZTP) in place and optimizing packet-routing approaches is essential. Using modular, extensible hardware that fits the existing network also supports expansion. Further, automating management and implementing Software-Defined Networking (SDN) simplify resource allocation and scaling. Organizations can focus on these areas to address scalability challenges and improve throughput, while enhancing operational efficiency with more advanced power supplies.
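A common way to scale a 400GbE network is a two-tier leaf-spine (Clos) fabric. The sketch below applies the standard sizing arithmetic under stated assumptions: identical switches, a non-blocking (1:1) design in which each leaf uses half its ports for servers and half for uplinks, one link between each leaf and each spine, and no port breakout.

```python
def two_tier_clos(ports_per_switch: int) -> dict[str, int]:
    """Size a non-blocking two-tier leaf-spine fabric built from identical switches.

    Each leaf splits its ports evenly: half down to servers and half up, with one
    uplink to every spine. Each spine port connects to a different leaf.
    """
    if ports_per_switch % 2:
        raise ValueError("ports_per_switch must be even")
    spines = ports_per_switch // 2
    leaves = ports_per_switch
    server_ports = leaves * (ports_per_switch // 2)
    return {"spines": spines, "leaves": leaves, "server_ports": server_ports}


for radix in (32, 64):
    print(radix, two_tier_clos(radix))
```

Server-facing capacity grows with the square of the switch radix (k * k / 2 ports for k-port switches): 512 x 400G ports with 32-port switches versus 2048 with 64-port switches. Beyond that size, a third tier or oversubscription is needed.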

Optimizing for Virtualization and AI Workloads

Virtualization and AI workloads call for high-speed, low-latency interconnects and adaptable, easily scalable architectures. Advanced load balancing and traffic prioritization help meet the heavy data demands of these environments and models. Integrating next-generation hardware accelerators such as GPUs and FPGAs is a major advantage, since they are well suited to the parallel processing that AI work requires. Moreover, cloud networking allows more dynamic resource utilization and workload distribution, making resource planning in large data centers more efficient. Through these measures, organizations can give their teams practical virtualization and AI environments that support more creative output.

Addressing Power and PSU Requirements

Addressing power and PSU (Power Supply Unit) requirements is essential for stability and efficiency when deploying 400GbE networks, especially in high-density spaces. Data centers now require high-efficiency PSUs to cut power loss and operating costs. Selecting the right PSU means understanding the power requirements of each network component (servers, switches, network interface controllers, and so on) so that equipment is neither over- nor under-provisioned, either of which can be costly. Modular PSUs allow tidier cabling and easier scaling, but redundancy is equally essential. Power-monitoring solutions help optimize consumption with a view to both energy use and infrastructure reliability. Together, efficient PSUs and group-level energy management can significantly improve economic and environmental efficiency.
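The sizing and efficiency points above reduce to simple arithmetic. The sketch below counts PSUs for an N+1 redundant design and compares facility power draw at two efficiency levels. The 1500 W load, 1300 W PSU rating, and 90% and 94% efficiencies are hypothetical, and the model treats efficiency as constant, whereas real PSU efficiency varies with load.

```python
import math


def psus_required(load_w: float, psu_rating_w: float, redundant: int = 1) -> int:
    """PSUs needed to carry load_w, plus `redundant` spares (N+1 by default)."""
    return math.ceil(load_w / psu_rating_w) + redundant


def wall_power_w(load_w: float, efficiency: float) -> float:
    """Input power drawn from the facility for a given load and PSU efficiency."""
    return load_w / efficiency


def annual_energy_kwh(wall_w: float) -> float:
    """Energy used over a year of continuous operation."""
    return wall_w * 24 * 365 / 1000


LOAD_W = 1500  # hypothetical switch load
print("PSUs for N+1:", psus_required(LOAD_W, psu_rating_w=1300))
for eff in (0.90, 0.94):
    wall = wall_power_w(LOAD_W, eff)
    print(f"{eff:.0%} efficient: {wall:.0f} W at the wall, {annual_energy_kwh(wall):,.0f} kWh/year")
```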


Frequently Asked Questions (FAQs)

Q: What are the advantages of operating a 400GbE switch in the context of data centers?

A: A 400GbE switch is a modern 400 Gigabit Ethernet switch that meets the needs of high-bandwidth data centers. It is necessary for today's large applications, such as machine learning, hyper-converged infrastructure, and HPC, because it relieves bandwidth constraints and helps reduce latency. These switches are critical for growing network capacity and improving data center performance.

Q: How many ports does a standard 400GbE switch have?

A: A typical 1U 400GbE switch has 32 ports of 400GbE, combining a small rack footprint with high bandwidth for very high port density. Using breakout cables, the same ports can instead run as, for example, 64 x 200GbE or 128 x 100GbE for deployments that need that flexibility.
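Breakout redistributes a port's bandwidth rather than adding any. The sketch below lists some hypothetical breakout modes for a 400G port; which modes a given switch and optic actually support varies by platform.

```python
# Hypothetical breakout modes for one 400G port: (number of sub-ports, speed in Gb/s).
BREAKOUT_MODES = {
    "1x400G": (1, 400),
    "2x200G": (2, 200),
    "4x100G": (4, 100),
    "8x50G": (8, 50),
}


def logical_ports(physical_ports: int, mode: str) -> tuple[int, int]:
    """Return (logical port count, per-port speed in Gb/s) after breakout."""
    count, speed = BREAKOUT_MODES[mode]
    return physical_ports * count, speed


for mode in BREAKOUT_MODES:
    ports, speed = logical_ports(32, mode)
    # Aggregate bandwidth is unchanged; breakout only redistributes it.
    print(f"{mode}: {ports} ports x {speed}G = {ports * speed / 1000:.1f} Tb/s")
```

Every mode on a 32-port switch totals 12.8 Tb/s; only the number and speed of the logical ports change.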

Q: Are there any other port speeds that a 400GbE switch can work with?

A: 400GbE switch ports typically support speeds from 25G to 400G, accommodating gradual rollouts and mixed environments. Depending on their requirements and existing architecture, data centers can configure ports on a 400GbE switch for 25GbE, 100GbE, 200GbE, or 400GbE.

Q: If a switch has 32 ports and supports 400GbE, what is the cumulative switching capacity of that switch?

A: A 32-port 400GbE switch typically has a switching capacity of 12.8 Tbps (terabits per second), i.e., 32 x 400 Gb/s. Some datasheets count both directions of each full-duplex port and quote 25.6 Tbps for the same hardware. This bandwidth enables fast data transfers and keeps large-scale data center traffic flowing smoothly for various applications and services.
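The capacity figure is straightforward arithmetic. The sketch below computes it for 32-port and 64-port 400GbE switches, with an option to count both directions of each full-duplex port, since datasheets differ in which convention they use.

```python
def switching_capacity_tbps(ports: int, port_gbps: int,
                            count_both_directions: bool = False) -> float:
    """Aggregate capacity in Tb/s: ports x speed, optionally doubled for full duplex."""
    gbps = ports * port_gbps * (2 if count_both_directions else 1)
    return gbps / 1000


for ports in (32, 64):
    one_way = switching_capacity_tbps(ports, 400)
    both = switching_capacity_tbps(ports, 400, count_both_directions=True)
    print(f"{ports} x 400GbE: {one_way} Tb/s one way, {both} Tb/s counting both directions")
```

When comparing datasheets, check which convention each uses before comparing numbers.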

Q: In your opinion, how do 400GbE switches offer a better economic value for the investment at the data center?

A: 400GbE switches can do this in several ways. Greater port density lowers the number of switches required and, with it, power usage. Higher bandwidth per port also means fewer links are needed for a given capacity, which limits the growth of an expensive network and the complexity of managing it. In addition, these switches support automation features that apply system-level configuration without manual intervention, reducing configuration effort.

Q: Can 400GbE switches be added to an existing data center's infrastructure?

A: Yes. 400GbE switches are highly adaptable and fit into existing data center infrastructure. They are designed for various deployment scenarios, such as top-of-rack, end-of-row, and spine-leaf, which simplifies installation and cooling. Additionally, most 400GbE switches support lower-speed connections such as 100GbE, allowing incremental upgrades of existing networks and servers without disruption.

Q: Which networks benefit from incorporating 400GbE switches with advanced features and functionalities?

A: Networks benefit from high-availability features, such as bundling multiple links together for redundancy, and from advanced load-balancing techniques like ECMP (Equal-Cost Multi-Path), which spreads traffic across multiple equal-cost paths. Applications include storage clusters, enterprise servers, virtualization, video security, and real-time communications. Other defining features include support for SDN and other network automation, robust traffic management, and QoS controls.
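ECMP load balancing is usually per flow: the switch hashes packet header fields so that all packets of a flow take the same path (avoiding reordering) while different flows spread across the available paths. The sketch below uses CRC32 over the 5-tuple as a stand-in for a switch's hardware hash function, which varies by vendor and is usually configurable; the addresses and next-hop names are hypothetical.

```python
import zlib


def ecmp_next_hop(src_ip: str, dst_ip: str, proto: int, src_port: int,
                  dst_port: int, next_hops: list[str]) -> str:
    """Pick a next hop by hashing the flow's 5-tuple.

    Every packet of a flow hashes to the same path, while different flows
    are distributed across the equal-cost paths.
    """
    key = f"{src_ip}|{dst_ip}|{proto}|{src_port}|{dst_port}".encode()
    return next_hops[zlib.crc32(key) % len(next_hops)]


spines = ["spine1", "spine2", "spine3", "spine4"]  # hypothetical equal-cost next hops
for port in range(49152, 49156):
    print(port, ecmp_next_hop("10.0.0.1", "10.0.1.1", 6, port, 443, spines))
```

Per-flow hashing keeps packets in order, but a few very large flows can still land on the same path, which is why some switches also offer adaptive or flowlet-based load balancing.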

Q: What configuration options do 400GbE switches provide?

A: Scalability is one of the key considerations in data center design. 400GbE switches offer high port densities and provide backbone connectivity that aggregates many lower-speed links. This scalability lets data centers expand network capacity quickly, adding servers, storage, and other networked devices without overhauling the existing system.

Related Products:

- QSFP-DD-400G-SR8 400G QSFP-DD SR8 PAM4 850nm 100m MTP/MPO OM3 FEC Optical Transceiver Module: $180.00
- QSFP-DD-400G-DR4 400G QSFP-DD DR4 PAM4 1310nm 500m MTP/MPO SMF FEC Optical Transceiver Module: $450.00
- QSFP-DD-400G-SR4 QSFP-DD 400G SR4 PAM4 850nm 100m MTP/MPO-12 OM4 FEC Optical Transceiver Module: $600.00
- QSFP-DD-400G-FR4 400G QSFP-DD FR4 PAM4 CWDM4 2km LC SMF FEC Optical Transceiver Module: $600.00
- QSFP112-400G-SR4 400G QSFP112 SR4 PAM4 850nm 100m MTP/MPO-12 OM3 FEC Optical Transceiver Module: $650.00
- QSFP112-400G-DR4 400G QSFP112 DR4 PAM4 1310nm 500m MTP/MPO-12 with KP4 FEC Optical Transceiver Module: $1350.00
- QSFP112-400G-FR1 4x100G QSFP112 FR1 PAM4 1310nm 2km MTP/MPO-12 SMF FEC Optical Transceiver Module: $1300.00
- QSFP112-400G-FR4 400G QSFP112 FR4 PAM4 CWDM 2km Duplex LC SMF FEC Optical Transceiver Module: $1760.00
- OSFP-400G-SR8 400G SR8 OSFP PAM4 850nm MTP/MPO-16 100m OM3 MMF FEC Optical Transceiver Module: $480.00
- OSFP-400G-DR4 400G OSFP DR4 PAM4 1310nm MTP/MPO-12 500m SMF FEC Optical Transceiver Module: $900.00
- OSFP-400G-FR4 400G FR4 OSFP PAM4 CWDM4 2km LC SMF FEC Optical Transceiver Module: $900.00
- OSFP-400G-PSM8 400G PSM8 OSFP PAM4 1550nm MTP/MPO-16 300m SMF FEC Optical Transceiver Module: $1200.00