The integration of AI into society has rapidly increased our dependence on network infrastructure, demanding more scale and efficiency than ever before. This shift is epitomized by the emergence of Ultra Ethernet, an AI-centric evolution of Ethernet designed specifically for AI networks. The newest video in our ongoing presentation series, which explains the workings and benefits of Ultra Ethernet, is now live on YouTube. To put this new technology in context, this blog post ties portions of the Ultra Ethernet video and the ‘AI is Hard’ presentation to the connectivity demands of the AI-driven world we live in. Together with the video, it provides a detailed walkthrough of the problems most AI networks face today. For network engineers, AI researchers, and tech enthusiasts, this post explores the impact of Ultra Ethernet and the future of connectivity for AI networks.

What is Ultra Ethernet and How Does it Work?

Understanding the Core Principles of Ultra Ethernet

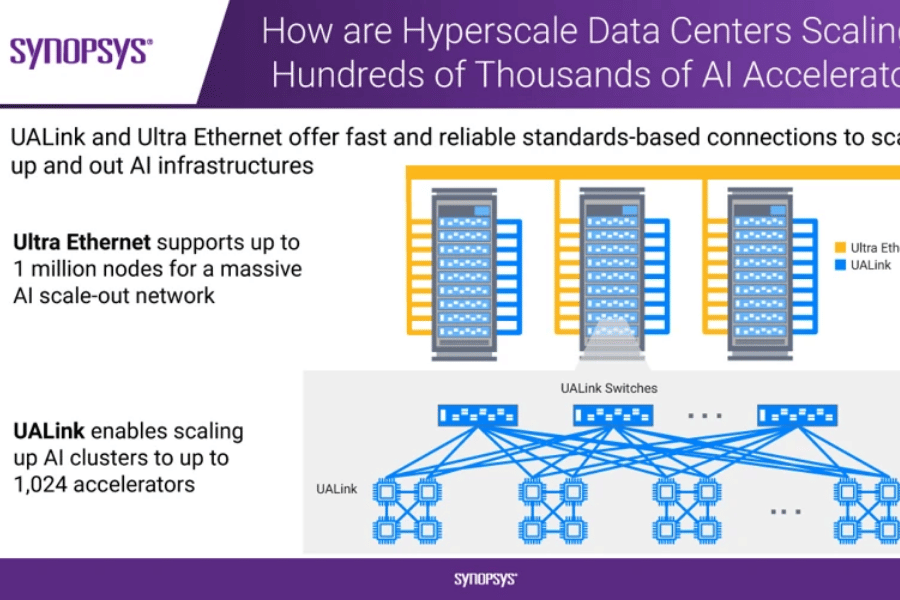

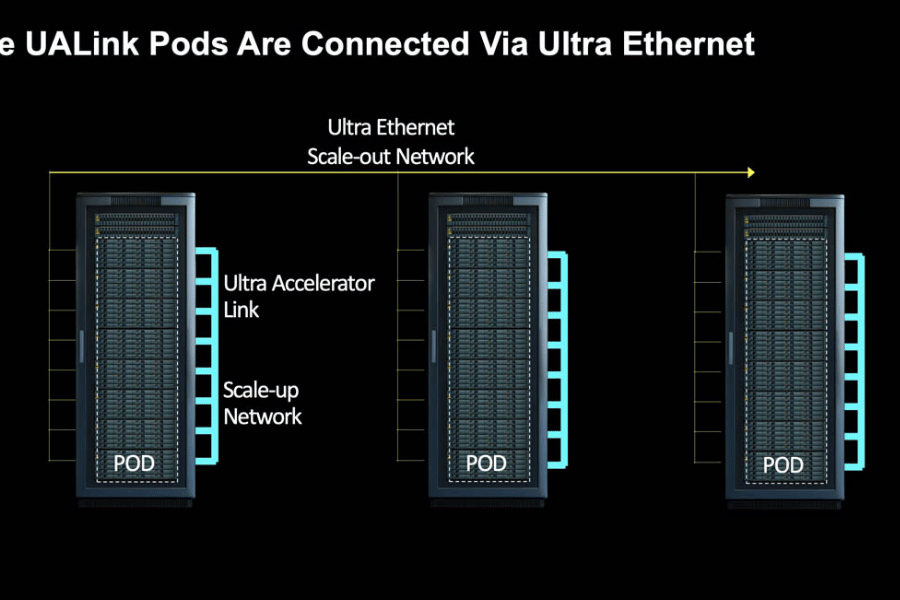

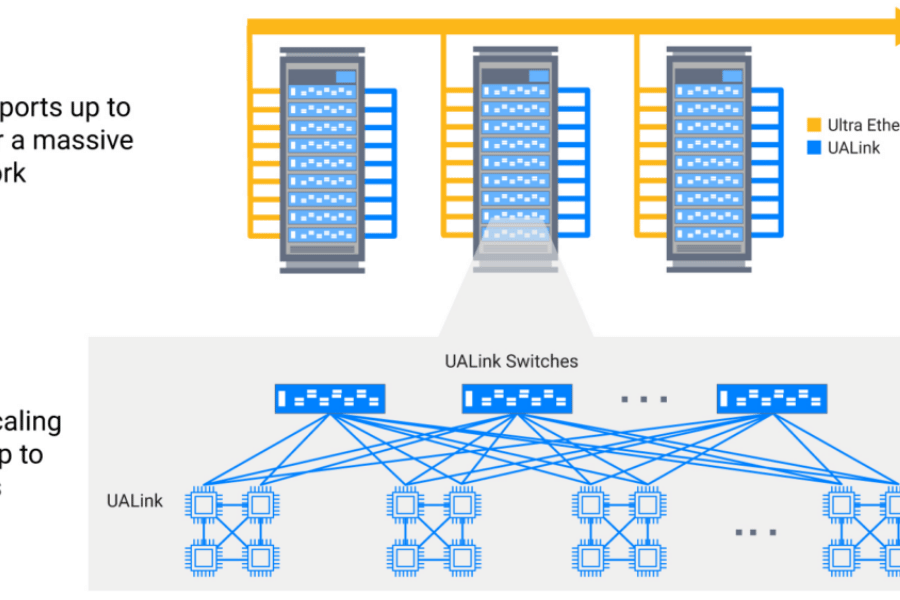

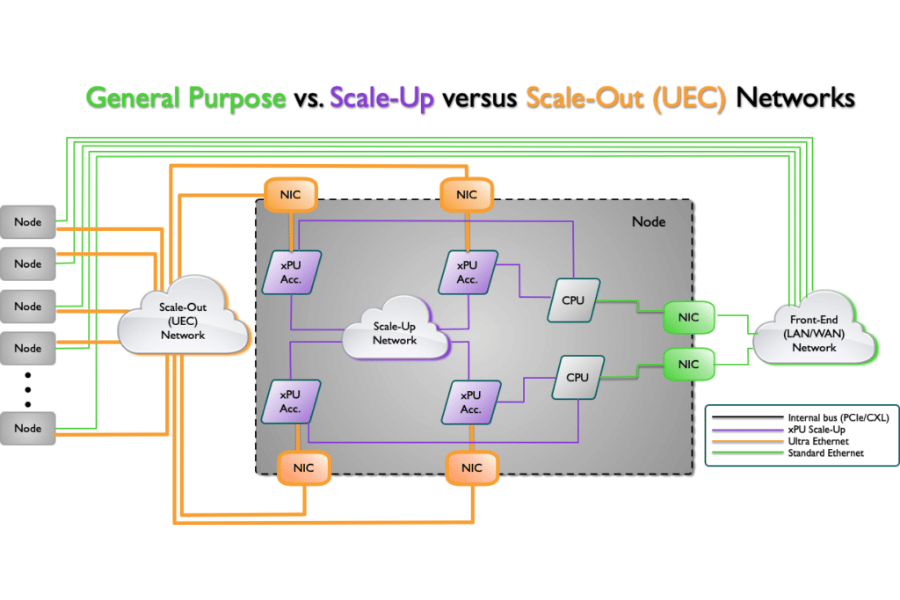

Ultra Ethernet is a new generation of networking technology created to meet the specific needs of modern AI systems. It aims to deliver low-latency, high-throughput interconnects that optimize the transfer of information between compute resources. Ultra Ethernet employs sophisticated protocols and high-precision timing interfaces to coordinate AI tasks while alleviating bottlenecks and reducing packet loss. Its design supports extreme scalability as well as network reliability, which translates into high performance in complex networks. Consequently, Ultra Ethernet becomes an enabler of AI-driven networks where timing and accuracy are indispensable.

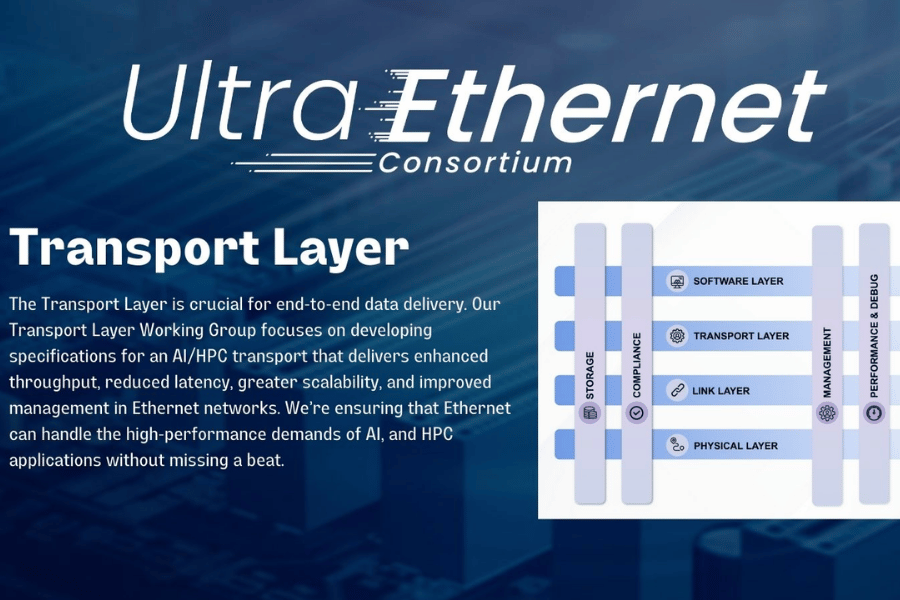

The Role of the Ultra Ethernet Consortium in Networking

The Ultra Ethernet Consortium is instrumental in driving change in networking and interconnects, especially with the advent of AI and machine learning, developments that have transformed how industry players approach business and collaboration. The consortium brings existing players together to pool their ideas and create open standards and architectures that give next-generation networking systems the efficiency and interoperability they need. Recent reports indicate that the consortium is keen to improve Ethernet technology to achieve ultra-low latency, high bandwidth, low jitter, and the better dependability that AI needs for real-time data processing in heavily loaded environments.

Recent reviews highlight the growing need for advanced networking solutions, as global data traffic is projected to exceed 200 zettabytes by 2025. The consortium tackles this challenge with innovations that let networks expand in step with data growth. In addition, its work on techniques such as the Precision Time Protocol (PTP) and application-layer congestion control has been recognized for helping to improve data transfer rates and reduce network congestion. These improvements are intended to ensure that Ultra Ethernet can sustain the stringent requirements of AI workloads while still allowing expansion and integration in new environments across different domains.
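To make the PTP reference above more concrete, here is a minimal sketch of the standard two-way timestamp exchange that PTP (IEEE 1588) uses to estimate clock offset and path delay. It is not taken from any UEC specification. The class and function names are hypothetical, and the model assumes a symmetric path, as the basic protocol does.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PtpExchange:
    """Four timestamps from one Sync / Delay_Req exchange (hypothetical container)."""
    t1: float  # master sends Sync, read on the master clock
    t2: float  # slave receives Sync, read on the slave clock
    t3: float  # slave sends Delay_Req, read on the slave clock
    t4: float  # master receives Delay_Req, read on the master clock


def mean_path_delay(x: PtpExchange) -> float:
    """One-way delay estimate, assuming both directions take equally long."""
    return ((x.t2 - x.t1) + (x.t4 - x.t3)) / 2


def offset_from_master(x: PtpExchange) -> float:
    """How far the slave clock is ahead of the master clock."""
    return ((x.t2 - x.t1) - (x.t4 - x.t3)) / 2


# Illustrative numbers in nanoseconds: slave runs 500 ns ahead, path delay is 1000 ns.
example = PtpExchange(t1=0, t2=1500, t3=2000, t4=2500)
print(offset_from_master(example), mean_path_delay(example))
```

Any asymmetry between the two directions shows up directly as an error in the offset estimate, which is one reason hardware timestamping close to the wire matters for precise synchronization.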

Through collaborative research, development, and technological standardization, the Ultra Ethernet Consortium is building a reliable and scalable base for the global network infrastructure that a hyperconnected world depends on. The emphasis on adaptability keeps the evolution of Ethernet technology responsive and relevant to the emerging data-centric future.

The Importance of AI and HPC in Ultra Ethernet

The integration of Artificial Intelligence (AI) and High-Performance Computing (HPC) is crucial to the development of Ultra Ethernet. AI applications such as complex reasoning, data analytics, and data interpretation process massive and intricate datasets at remarkable speed and precision, and they require advanced computing technology to do so. Consequently, the network serving these applications needs high data throughput and low latency. HPC systems also depend on efficient data transfer to perform large-scale calculations, simulations, and other automated tasks. To address these performance-defining parameters, Ultra Ethernet targets substantially higher bandwidth, throughput, reliability, and scalability. These gains enable better performance for AI tasks as well as HPC applications, which translates into reduced time to market and advances across industries that depend on complex, large-scale data processing.

In What Aspects Does Ultra Ethernet Improve AI Networks?

Combining AI and HPC With Ultra Ethernet

Ultra Ethernet furthers the integration of AI and HPC by improving the movement of data over high-speed, low-latency links. This helps AI models work through complex datasets efficiently while HPC systems perform resource-intensive simulations with minimal delay. By increasing bandwidth, Ultra Ethernet facilitates rapid and reliable communication among distributed systems, permitting the quicker and more accurate computations that are vital for both AI and HPC workloads.

Advantages for AI Networks Designed for High Performance

- Enhanced Scalability: Lets AI workloads grow on sturdy infrastructure capable of sustaining rising data volumes and higher computation requirements.

- Reduced Latency: Reduces delays during data transfer for speedier decision-making and real-time processing in AI applications.

- Improved Data Throughput: Ensures that data transfer between systems is done efficiently, resulting in better performance of sophisticated AI models.

- Reliability: Sturdy, stable connections with high uptime reduce disruptions during intensive computations.

- Cost Efficiency: Improved system performance leads to better resource utilization, reducing operating costs for the AI infrastructure.

How Developed is Ultra Ethernet Technology Today?

Ultra Ethernet Consortium Activities

The focus of the Ultra Ethernet Consortium is to enhance Ethernet’s capabilities for high-performance computing and AI-driven workloads. Efforts are currently underway to optimize the Ethernet protocol stack for low latency, increase bandwidth for data-intensive applications, and improve the energy efficiency of data transmission. The consortium also aims to promote standardization for system interoperability to encourage the adoption of ultra-low-latency Ethernet technologies. These activities are expected to accelerate adoption of Ultra Ethernet and maintain Ethernet’s role as one of the central technologies in computing today.

Recent Ultra Ethernet Innovations

One of the highlights in the evolution of Ethernet technology is 400 GbE (400 Gigabit Ethernet) and its successors. 400 GbE provides the bandwidth that modern deployments such as hyperscale data centers and AI-based analytics need. It relies on newer technologies, including PAM4 (four-level Pulse Amplitude Modulation) signaling, which carries two bits per symbol and so enables more efficient data encoding and transmission over high-speed links. Some research reports that network latency can be reduced by as much as 75% with 400 GbE, leading to significantly improved application responsiveness.
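As a rough illustration of why PAM4 helps, the sketch below maps bit pairs onto four amplitude levels using a Gray code, so that adjacent levels differ by one bit. It then computes the raw lane rate for a 26.5625 GBd lane, the symbol rate used by 8-lane 400 GbE optics. The function names are hypothetical and the levels are abstract integers 0 to 3, not voltages. This is a sketch of the idea rather than an implementation of any PHY.

```python
# Gray-coded PAM4: neighbouring levels differ in exactly one bit,
# so a one-level decision error corrupts only a single bit.
GRAY_PAM4 = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}
LEVEL_TO_BITS = {level: bits for bits, level in GRAY_PAM4.items()}


def pam4_encode(bits: list[int]) -> list[int]:
    """Map an even-length bit sequence to PAM4 levels, two bits per symbol."""
    if len(bits) % 2 != 0:
        raise ValueError("PAM4 needs an even number of bits")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def pam4_decode(levels: list[int]) -> list[int]:
    """Inverse mapping: each level yields two bits."""
    out: list[int] = []
    for level in levels:
        out.extend(LEVEL_TO_BITS[level])
    return out


def lane_bit_rate_gbps(baud_gbd: float, bits_per_symbol: int = 2) -> float:
    """Raw lane rate; includes FEC and line-coding overhead."""
    return baud_gbd * bits_per_symbol


symbols = pam4_encode([0, 0, 0, 1, 1, 1, 1, 0])
print(symbols)
print(8 * lane_bit_rate_gbps(26.5625))  # raw rate across 8 lanes, in Gb/s
```

The raw figure across eight lanes comes out above 400 Gb/s because it includes forward error correction and encoding overhead. With two-level (NRZ) signaling, the same symbol rate would carry half as many bits.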

Another advancement is the deployment of SmartNICs (Smart Network Interface Cards) in Ethernet networks. SmartNICs contain dedicated processors that take work such as encryption, compression, and packet processing off the host central processing unit (CPU). This improves performance and lowers overhead. According to published benchmarks, network throughput increased by 30% and energy consumption fell with the deployment of SmartNICs.

Moreover, Ethernet designers are finding new ways to meet the ever-increasing need for energy-efficient networking. Energy Efficient Ethernet (EEE), standardized by the IEEE as 802.3az, aims to reduce the power a link draws during periods of low data activity. Some reports claim that this technology can cut energy use by more than 50% in some circumstances, which makes it important for environmentally friendly IT infrastructure.
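A back-of-the-envelope duty-cycle model shows where savings of this order can come from. It assumes a link that is either fully active or in a low-power idle state, and it ignores the time and energy spent on sleep and wake transitions. The power figures are made-up placeholders, not measurements, and the function names are hypothetical.

```python
def average_power_w(active_w: float, idle_w: float, utilization: float) -> float:
    """Average link power when the link is busy for `utilization` of the time."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return utilization * active_w + (1.0 - utilization) * idle_w


def savings_fraction(active_w: float, idle_w: float, utilization: float) -> float:
    """Savings relative to a link that draws active power all the time."""
    return 1.0 - average_power_w(active_w, idle_w, utilization) / active_w


# Placeholder numbers: idle state draws 10% of active power.
for load in (0.1, 0.2, 0.5, 0.9):
    print(f"utilization {load:.0%}: savings {savings_fraction(1.0, 0.1, load):.0%}")
```

In this simplified model the savings shrink as utilization rises. That matches the claim above that the benefit depends on circumstances and is largest on links that are mostly idle.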

These innovations show how Ethernet is still evolving to keep pace with these rapid changes. The need to improve performance and scalability while reducing energy use is what drives the adoption of these technologies.

Resources for Learning about Ultra Ethernet Technology Online

Educational Material on Ultra Ethernet

For educational material on Ultra Ethernet, first look at specialist technology websites, research journals, and publications from the primary networking bodies. Sites such as Cisco, IEEE, and Techopedia provide Ethernet updates and coverage, and many universities and online learning centers offer classes and webinars that teach the basics and the latest changes for a nominal fee. Use these resources together to understand Ultra Ethernet, its features, and how it fits into modern networking technology.

The Use of Video and Chapter Instruction Presentation

Videos and chapter presentations help students analyze complex topics such as Ultra Ethernet with relative ease. Tutorials, demonstrations, and animated explanations provide practical context and help hold attention. Intermediate-depth videos and introductory lectures are openly available on YouTube, LinkedIn Learning, and Coursera for professors to use in their classes. Many industry professionals also share condensed presentations that break down the crucial aspects of Ultra Ethernet and help learners with the questions they were not able to grasp. These resources are useful for learners seeking a structured and practical summary of Ultra Ethernet technology.

Learning Through the World on YouTube

YouTube serves as a library of information where content related to Ultra Ethernet technology can easily be accessed. From basic introductions to more advanced technical videos, the platform hosts material from industry experts, institutions, and brands that deal with networking technologies. For example, users can access step-by-step tutorials explaining how Ultra Ethernet works, along with its components and its uses today.

Further, searching for Ultra Ethernet surfaces emerging industry trends, including Ultra Ethernet’s contributions to faster data transfer, lower latency, and advanced cloud-based services. Video content often comes with statistical information that illustrates the use of Ultra Ethernet and its performance in comparison to traditional Ethernet technologies. Creators often use visualized performance metrics, such as throughput and latency comparisons, which help the audience grasp the differences and advantages.

By combining curated video content with verified technical information, learners gain more objective knowledge. YouTube is a good platform for pairing theory-heavy concepts with detailed practical observations, helping learners appreciate this modern technology even better.

What Challenges Does Ultra Ethernet Face?

Technical Challenges in Ultra Ethernet Implementation

The implementation of Ultra Ethernet faces several stringent technical constraints. First, interoperability with current network systems is difficult because of the diversity in hardware and protocols. Second, it is challenging to maintain low latency and high throughput at the same time in dense, high-traffic environments. Another major hurdle is keeping Ultra Ethernet solutions scalable while ensuring that costs remain reasonable. Finally, as Ultra Ethernet is integrated into critical applications, it becomes increasingly important to protect data integrity against various vulnerabilities. Overcoming these challenges is necessary to reap the benefits of this technology.

Overcoming Boundaries in AI Networks

Improving the efficiency of computing and data processing is crucial for overcoming boundaries within AI networks. Specialized hardware such as AI-optimized processors can dramatically reduce latency and increase throughput, leading to improved performance. Advanced networking protocols support dependable communication among AI systems, especially in distributed settings. Growing datasets and workload demands are accommodated by optimizing for scalability and by using algorithms that scale with them. Protecting sensitive information within AI networks requires addressing security issues through encryption and stringent access control measures. Together, these strategies allow AI systems to function reliably across a wide range of applications.

Ultra Ethernet vs. InfiniBand: Which is Better for AI?

Historically, InfiniBand dominated AI and HPC networking due to its low latency and high throughput. However, Ultra Ethernet is emerging as a compelling alternative, offering:

- Cost Efficiency: Ethernet’s multi-vendor support reduces costs compared to InfiniBand’s single-vendor ecosystem.

- Scalability: Ultra Ethernet’s open standards enable seamless network expansion.

- Performance: With UEC 1.0 specifications, Ultra Ethernet rivals InfiniBand in latency and speed, especially in AI clusters.

Frequently Asked Questions (FAQ)

Q: What distinguishes Ultra Ethernet from other networking technologies used with AI systems?

A: Ultra Ethernet is a significant evolution beyond technologies such as InfiniBand and standard Ethernet that support AI workloads. As can be seen in the UEC presentation video, Ultra Ethernet is Ethernet-based, which means it inherits Ethernet’s compatibility and cost benefits. It has been purpose-built to solve the bandwidth, latency, and scaling problems of large AI clusters, while still allowing a more open ecosystem with broader hardware vendor support than proprietary solutions offer.

Q: Which companies belong to the Ultra Ethernet Consortium?

A: Almost all the big names in technology, including those operating in computing and networking, now belong to the UEC. Active participants include Nvidia, AMD, Intel, Broadcom, Cisco, Meta, Microsoft, and Google. As one comment on the video puts it, Ultra Ethernet will most likely become a standard given the wide industry participation. Having representatives from different technology families gives the consortium a broad leadership base from which to pursue universal acceptance and use of Ultra Ethernet.

Q: In what way does Ultra Ethernet fulfill the bandwidth requirements of contemporary AI systems?

A: The presentation showed that Ultra Ethernet is designed to sustain the extraordinarily high bandwidths that next-generation AI systems need. Even its entry tier is quite high, starting at 400Gbps, and its roadmap goes beyond 1.6Tbps. These numbers are massive, but they provide the bandwidth that large language models need to move large amounts of data between compute nodes. The specification also includes optimizations tailored to the dominant traffic patterns of AI workloads, which differ from traditional enterprise networking requirements; J. Metz and other contributors explain these in the video with reference to the technical documentation.
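To put these link speeds in perspective, the following sketch estimates how long a single link would take to move a given payload. The payload size and the `efficiency` factor (the fraction of line rate available to application data) are illustrative assumptions, not figures from the presentation.

```python
def transfer_time_s(payload_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Idealized time to push `payload_bytes` through one link.

    `efficiency` is a hypothetical knob for protocol overhead (1.0 = none).
    """
    if link_gbps <= 0 or not 0.0 < efficiency <= 1.0:
        raise ValueError("link speed must be positive and efficiency in (0, 1]")
    return (payload_bytes * 8) / (link_gbps * 1e9 * efficiency)


# Hypothetical payload: 140 GB of model state.
payload = 140e9
for speed in (400, 800, 1600):
    print(f"{speed} Gbps: {transfer_time_s(payload, speed):.2f} s")
```

Real transfers also depend on congestion, topology, and how traffic is spread across links, so this should be read only as a lower bound per link.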

Q: How can I upload content related to Ultra Ethernet, and is the full UEC presentation publicly available?

A: Yes, the entire UEC presentation video can be accessed on YouTube. Users may share their opinions, but re-uploading or reproducing original content from the video without approval may violate copyright law. The consortium does, however, encourage the community to participate in and discuss relevant topics in the proper community forums. If you are working on such technologies or have questions about related implementations, the UEC webpage has information on how to participate in the technical working groups.

Q: What does Ultra Ethernet bring to the table in terms of AI training latency improvements?

A: Compared with traditional networking protocols, Ultra Ethernet is designed to increase speed and reduce latency significantly. The presentation shows how Ultra Ethernet combines specialized congestion management, optimized transport protocols, and hardware acceleration to achieve low latency. These reductions are essential for distributed AI training, where synchronization between nodes can become a bottleneck. The technical details given by J. Metz in the video explain how these improvements ease that bottleneck when scaling model training over large clusters.
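One common way to reason about this synchronization cost is the textbook latency-plus-bandwidth model for a ring all-reduce, sketched below. The presentation does not prescribe this algorithm; it is used here only as an assumed example of a collective operation. The function name and the sample figures are hypothetical.

```python
def ring_allreduce_time_s(
    message_bytes: float, nodes: int, link_gbps: float, hop_latency_s: float
) -> float:
    """Estimated time for a ring all-reduce under a simple cost model.

    The ring runs 2 * (nodes - 1) steps; each step sends one chunk of
    message_bytes / nodes and pays one per-hop latency.
    """
    if nodes < 2:
        return 0.0  # nothing to synchronize
    steps = 2 * (nodes - 1)
    chunk_bytes = message_bytes / nodes
    bytes_per_second = link_gbps * 1e9 / 8
    return steps * (hop_latency_s + chunk_bytes / bytes_per_second)


# Hypothetical case: 1 GB of gradients, 400 Gbps links, two per-hop latencies.
for n in (8, 64, 512):
    slow = ring_allreduce_time_s(1e9, n, 400, 10e-6)
    fast = ring_allreduce_time_s(1e9, n, 400, 2e-6)
    print(f"{n} nodes: {slow * 1e3:.2f} ms vs {fast * 1e3:.2f} ms")
```

In this model the bandwidth term stays nearly constant as the cluster grows, while the latency term grows with the number of nodes. That is why per-hop latency matters more and more at scale.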

Q: In which ways will Ultra Ethernet change the cost structure of building AI infrastructure?

A: Ultra Ethernet’s goal is to offer specialized high-performance networking that is less expensive than current offerings. Because it is built on top of the Ethernet ecosystem, Ultra Ethernet should have more competitive economics for deploying AI infrastructure than proprietary interconnects. This way, organizations outside the largest technology companies will also be able to build capable AI clusters. Standardization means that different vendors’ Ultra Ethernet devices will work together, which minimizes vendor lock-in and, in turn, long-term costs.

Q: What timeline was given for the adoption of Ultra Ethernet in commercial products?

A: As noted in the presentation, the initial Ultra Ethernet products are expected to start rolling out in late 2024, with fuller adoption through 2025. The initial specification targets features that can be implemented in the shortest timeframe, with a longer-term provision for more complicated features. Several consortium members, including large players in the communications equipment market, have already publicly stated their intention to build Ultra Ethernet-capable products in the coming years. The presentation stressed that the existing Ethernet infrastructure will help ease adoption, since fewer disruptive changes will be needed.

