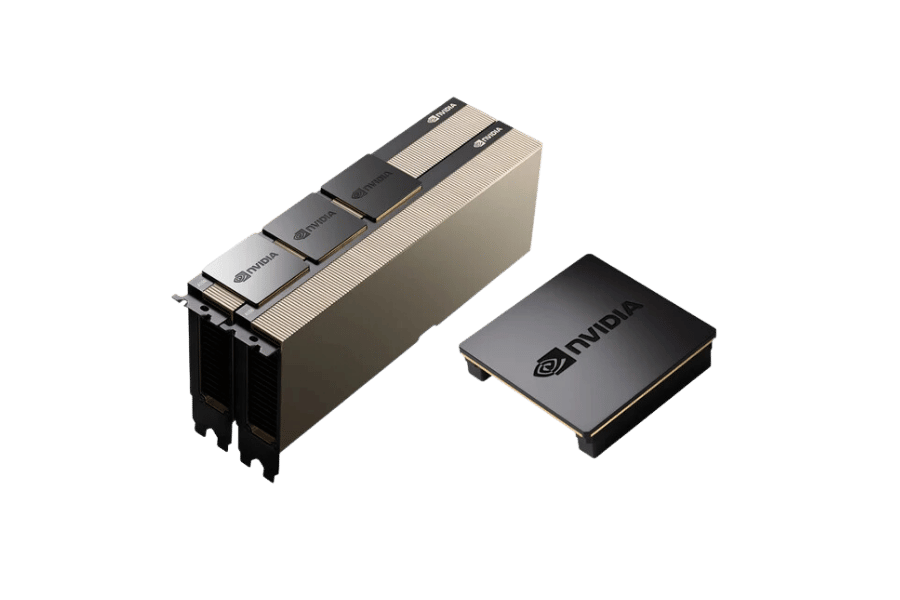

In high-performance computing, data throughput and efficiency largely determine how much useful work a system can deliver. The NVIDIA NVLink Connector for the Dell PowerEdge C4130 improves inter-GPU communication so that demanding workloads run faster and more efficiently. This blog looks at where the NVLink Connector fits in the architecture of the Dell PowerEdge C4130, its key specifications, and its contribution to computational workloads. Throughout, we focus on the connector's features and how they raise efficiency across a range of GPU-accelerated tasks.

What Is NVLink and How Does It Work?

Understanding NVLink Technology

NVLink is NVIDIA's high-speed interconnect, built to remove a common bottleneck in CUDA application performance: moving data between GPUs. It establishes a direct communication path between GPUs instead of routing traffic through the host. Compared with a general-purpose interconnect such as PCIe, NVLink offers higher bandwidth, lower latency, and the option of coherent memory across linked devices. This matters most in parallel workloads, where GPUs exchange data constantly and communication cost limits compute-intensive jobs. With NVLink in Dell PowerEdge C4130 servers, users can expect better scaling and shorter execution times in multi-GPU configurations, which translates into faster AI training, scientific computation, and large-scale simulation.

How NVIDIA NVLink Compares with Conventional PCIe

The key differences between NVIDIA NVLink and a traditional PCIe interface are bandwidth and memory coherence. A single PCIe x16 connection provides around 32 GB/s (the PCIe 4.0 figure; PCIe 3.0 offers roughly half that). NVLink runs far higher, with as much as 600 GB/s of aggregate GPU-to-GPU bandwidth on recent data-center GPUs, which makes the interconnect far less of a bottleneck in multi-GPU setups than PCIe or the older SLI bridge. NVLink-enabled GPUs can also share cache-coherent memory, allowing more efficient use of memory resources. With PCIe, by contrast, data movement between discrete GPU memory spaces generally has to be managed explicitly, which adds complexity to the computing workflow. The result of NVLink's tighter coupling is more architectural flexibility and faster performance in compute-intensive workloads.
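To put those two figures in perspective, here is a minimal Python sketch that estimates how long a payload would take to cross each link at the peak rates quoted above. It assumes ideal, sustained bandwidth and ignores latency and protocol overhead; the function name and the 16 GB payload are illustrative choices, not measurements.

```python
def transfer_seconds(payload_gb: float, bandwidth_gb_per_s: float) -> float:
    """Ideal transfer time: payload size divided by peak bandwidth."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_gb / bandwidth_gb_per_s


PCIE_GB_S = 32.0     # approximate PCIe figure quoted in the text
NVLINK_GB_S = 600.0  # peak NVLink figure quoted in the text

payload_gb = 16.0  # hypothetical payload, e.g. a large tensor or model shard

pcie_time = transfer_seconds(payload_gb, PCIE_GB_S)
nvlink_time = transfer_seconds(payload_gb, NVLINK_GB_S)

print(f"PCIe:   {pcie_time * 1000:.1f} ms")
print(f"NVLink: {nvlink_time * 1000:.1f} ms")
print(f"Ratio:  {pcie_time / nvlink_time:.2f}x")
```

Real transfers rarely reach peak rates, so treat the ratio as an upper bound on the speedup rather than a prediction.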

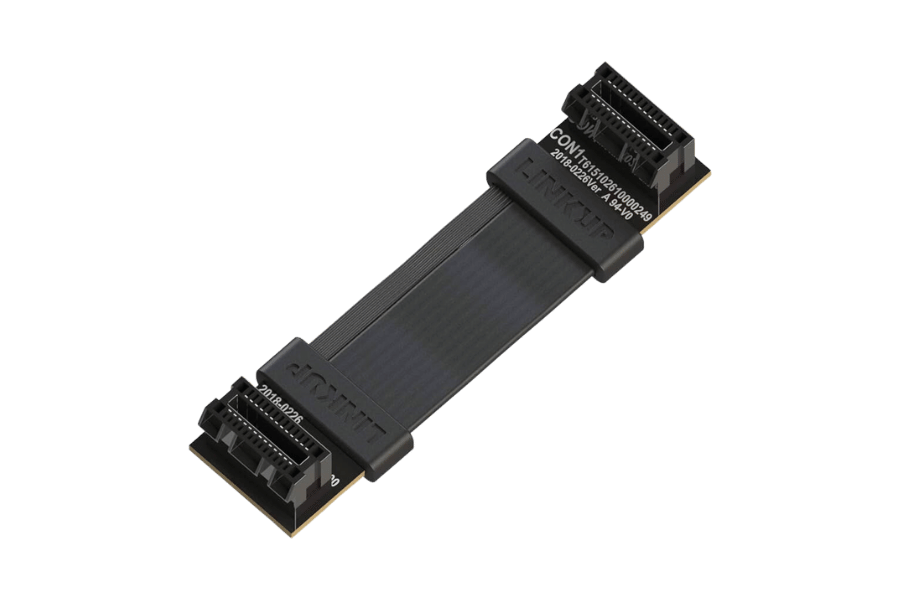

Key Components: NVLink Connector and NVLink Bridge

The NVLink Connector is the fast interface on each compatible GPU, and the NVLink Bridge links those connectors to form a multi-GPU setup. The bridge is the physical implementation of the technology: it carries the data transferred between GPUs. It also helps keep GPU memory spaces coherent and data transfers between them stable. Together, these components improve GPU interconnectivity and raise parallel-processing performance in systems that use NVIDIA NVLink over copper interconnects.

How Does NVLink Boost GPU Performance?

Advantages of High-speed Interconnect Among GPUs

Exchanging data between GPUs over high-bandwidth, low-latency links improves performance and removes the interconnect bottlenecks typical of multi-GPU systems, where traffic otherwise funnels through a central point in a star-like topology. Shared memory access becomes more efficient, which supports faster parallel processing and more concurrent compute streams across complex projects. By letting two GPUs communicate directly, NVLink speeds up data synchronization and, with it, large-scale simulation and rendering tasks. The benefit shows up both per GPU and system-wide, and it can reduce the need for additional high-cost components in HPC systems.

The Role of NVLink in Multi-GPU Setups

NVLink supports inter-GPU communication in multi-GPU systems through a dedicated high-speed link that bypasses the standard PCIe path, which reduces data transfer bottlenecks and maximizes available bandwidth. Because the GPUs are directly interconnected, it becomes easier to pool GPU memory and share data, so the GPUs operate in a more coordinated way. This arrangement streamlines large computations and increases effective memory bandwidth, making it possible to handle larger and more complex data models. Consequently, NVLink is key to extending performance in demanding tasks such as neural network training, scientific simulation, and realistic rendering.
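One way to see whether GPUs in a system can address each other's memory directly is to query peer access from a framework. The sketch below is a rough illustration that assumes PyTorch with CUDA support is installed; the helper name `peer_access_matrix` is hypothetical. Note that peer access can also be granted over PCIe, so a "yes" here does not by itself prove that NVLink is in use.

```python
from itertools import permutations


def peer_access_matrix():
    """Return {(src, dst): bool} for every ordered GPU pair, or None if unavailable."""
    try:
        import torch  # assumption: PyTorch built with CUDA support is installed
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    gpu_count = torch.cuda.device_count()
    return {
        (src, dst): torch.cuda.can_device_access_peer(src, dst)
        for src, dst in permutations(range(gpu_count), 2)
    }


result = peer_access_matrix()
if result is None:
    print("PyTorch with CUDA is not available on this machine.")
else:
    for (src, dst), ok in sorted(result.items()):
        print(f"GPU {src} -> GPU {dst}: peer access {'yes' if ok else 'no'}")
```

On a single-GPU machine the dictionary is empty, so the loop prints nothing.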

Bandwidth and High-Speed Data Transfer

Bandwidth is the maximum amount of data that a transmission medium can carry in a given amount of time. It is the key metric when comparing options for high-speed data transfer. In multi-GPU environments, high bandwidth is needed to move large volumes of data between GPUs quickly, which shortens transfer time and raises efficiency. Technologies such as NVLink aim to exceed the bandwidth of other interfaces such as PCIe, speeding up data transfer and the synchronization of complex processes. The result is better performance in high-end computation, where fast data movement is essential.
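Peak bandwidth is only part of the picture: every transfer also pays a fixed latency, so small messages achieve far less than the headline rate. The following sketch models transfer time as latency plus size divided by peak bandwidth. The 5-microsecond latency is a hypothetical value chosen for illustration, and the peak rate reuses the 600 GB/s NVLink figure quoted earlier in this article.

```python
def effective_bandwidth(message_bytes: float, latency_s: float,
                        peak_bytes_per_s: float) -> float:
    """Achieved bytes/second when each transfer costs latency + size / peak."""
    total_time = latency_s + message_bytes / peak_bytes_per_s
    return message_bytes / total_time


PEAK = 600e9    # peak link rate in bytes/s (NVLink figure from the text)
LATENCY = 5e-6  # hypothetical per-transfer latency, not a measured value

for size in (4 * 1024, 1024**2, 1024**3):
    achieved = effective_bandwidth(size, LATENCY, PEAK)
    print(f"{size:>12} bytes: {achieved / 1e9:7.1f} GB/s "
          f"({achieved / PEAK:.0%} of peak)")
```

The practical takeaway is that batching data into larger transfers helps a fast link deliver on its rated bandwidth.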

Do My GPUs Support NVLink?

Overview of NVLink-Capable Models and Compatibility

When assessing NVLink compatibility, first confirm which specific models support the technology. NVLink is supported by several widely used NVIDIA GPUs in both consumer and professional lines, including the GeForce RTX 3090 and the Quadro RTX models. For the most accurate and reliable compatibility information, check the official NVIDIA site or the product specifications before deployment, which avoids surprises when integrating the GPUs into the target system.

Verifying NVLink Compatibility for NVIDIA GeForce RTX 3090 and Others

To verify NVLink support for the NVIDIA GeForce RTX 3090, confirm that the specifications NVIDIA publishes for your particular card list NVLink. The RTX 3090 is among NVIDIA's NVLink-enabled products, so two cards can share data with each other effectively. For other models, cross-reference the NVIDIA specifications to confirm compatibility. This step ensures that NVLink's high-speed data transfer can be fully used in your setup, improving performance across the connected cards. If you need further assistance, consult the product's user guide or contact NVIDIA support.

Examining NVLink Support in the Quadro Series

The Quadro series includes several models equipped with NVLink, especially those aimed at high-end computing and professional visualization. The Quadro RTX 8000 and Quadro RTX 6000 are often cited as NVLink-ready. To confirm this for your card, check the official NVIDIA website or the datasheet for your specific Quadro GPU model. With NVLink, these cards gain faster inter-GPU communication, which is crucial for workloads that run multiple computational tasks and need high bandwidth and low latency.

Setting Up NVLink on Your System

Step-by-Step Guide to Installing NVLink Bridges

- Confirm Compatibility – Ensure your GPUs support NVLink technology by reviewing the product specifications on the official NVIDIA website.

- Obtain NVLink Bridges – Purchase the NVLink bridge designed for your GPU model, making sure it matches both the number of GPUs you plan to connect and the slot spacing between them.

- Power Down and Unplug – Safely shut down your system and disconnect all power sources to prevent any electrical damage during installation.

- Locate GPU Slots – Open your computer case and identify the PCIe slots where the compatible GPUs are installed.

- Install the Bridge – Connect the NVLink bridge securely between the designated NVLink slots on the GPUs. Ensure firm and appropriate seating.

- Secure Connections – Double-check all connections, ensuring the NVLink bridge and GPUs are properly seated and secured in their slots.

- Power Up and Driver Update – Reconnect power, boot your system and ensure all relevant drivers are up-to-date using NVIDIA’s official resources.

- Verification – Use NVIDIA’s diagnostic tools or software to verify that NVLink is correctly configured and operational for optimal performance.
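The verification step above can be scripted. The sketch below is one possible approach: it shells out to `nvidia-smi nvlink --status`, the driver utility's link-status query, and degrades gracefully when the tool is missing. The wrapper function `nvlink_status` and its timeout are illustrative choices, and the exact output format varies by driver version, so the script prints it unparsed.

```python
import shutil
import subprocess


def nvlink_status(timeout_s: float = 30.0):
    """Return the text of `nvidia-smi nvlink --status`, or None if it cannot be run."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return None  # driver utilities not installed or not on PATH
    try:
        proc = subprocess.run(
            [exe, "nvlink", "--status"],
            capture_output=True, text=True, timeout=timeout_s, check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    return proc.stdout if proc.returncode == 0 else None


status = nvlink_status()
if status is None:
    print("Could not query NVLink status (is the NVIDIA driver installed?).")
elif not status.strip():
    print("nvidia-smi ran but reported no NVLink links.")
else:
    print(status)
```

If the command reports no links on GPUs that should be bridged, recheck the bridge seating before suspecting the driver.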

Troubleshooting NVLink Connection Problems

What should you check first when you run into NVLink connection problems? Start with the connectors: check that each GPU is seated firmly in its PCIe slot and that the NVLink bridge is fastened properly. Make sure the GPU models and the NVLink bridge (for example, a 2-slot bridge) are compatible with each other and fall within the limits set out in NVIDIA's documentation. Then check that the system BIOS has been updated to a version that supports NVLink configurations, and that every required driver has been installed and updated from NVIDIA's website. If problems persist, NVIDIA's diagnostic tools can run tests that help isolate the fault. If you suspect a hardware problem, inspect the connectors carefully for any sign of damage and contact NVIDIA support for guidance.

Configuring Multiple GPUs for Optimal Performance

For a smooth multi-GPU installation, make sure the motherboard provides enough PCIe lanes that each GPU runs at full width and does not share bandwidth with the others. Pay close attention to cooling, since good airflow is essential to keep the GPUs from throttling under heat. For compatibility and balanced performance, use identical GPU models. Use NVLink (or SLI on older cards) where available, because a direct GPU-to-GPU link improves communication and reduces latency. Install all relevant device drivers and use NVIDIA's performance tuning tools as appropriate for the workload. Done correctly, these steps can improve the computational throughput of multiple GPUs working in a single machine.

Advanced Applications of NVLink

The Role of NVLink in Efficient Computing and AI

NVLink significantly improves the performance of high-performance computing (HPC) and artificial intelligence (AI) workloads by speeding up data exchange between GPUs. It minimizes the data-movement overhead traditionally found between GPUs in PCIe configurations, improving performance in intensive calculations. In AI applications, NVLink makes it easier to scale neural networks across GPUs, which shortens training times and makes larger, more accurate models practical. In HPC, NVLink raises computational efficiency by providing a unified memory resource and fast GPU-to-GPU communication, speeding up complex simulations and data analytics. These improvements are essential to progress in fields that depend on large amounts of parallel computing.

The Future of NVLink: What to Expect

NVLink looks set for further advances in GPU connectivity and performance. As demand for fast data processing grows, NVLink will need to deliver more bandwidth and better energy efficiency. One possibility is tighter coupling of NVLink with future GPU architectures, which are expected to enable faster communication. Other fast links could emerge as CPUs are further developed for data-oriented workloads. We could also see progress in how NVLink interacts with other computational accelerators and peripherals. Further advances could include using NVLink in hybrid computing, where CPUs and GPUs work together. These developments should continue to push past the established limits of high-performance computing and artificial intelligence.

NVLink Compared with InfiniBand and Other Technologies

In multi-GPU systems, NVLink's direct GPU-to-GPU connections give it higher bandwidth than InfiniBand. InfiniBand, however, plays a different role: it provides low-latency networking between nodes in a data center. PCIe and other general-purpose interfaces offer broad interconnectivity but less bandwidth than NVLink, which makes NVLink better suited to high-speed GPU communication. The choice among these technologies depends on the requirements of the specific application, such as communication speed, system design, and expandability.

Frequently Asked Questions (FAQs)

Q: What is NVLink, and how does it benefit Dell PowerEdge C4130 Servers?

A: NVLink is an NVIDIA-developed technology that provides high-bandwidth connections between GPUs. In Dell PowerEdge C4130 servers, NVLink accelerates data movement between the installed NVLink-capable NVIDIA GPUs, substantially improving performance for multi-GPU tasks such as rendering. It offers greater bandwidth and lower latency than PCIe, which translates into higher efficiency in artificial intelligence, machine learning, and high-performance computing applications.

Q: Which NVLink-compatible NVIDIA GPUs can be used in the Dell PowerEdge C4130?

A: NVLink-capable NVIDIA GPUs include GeForce cards such as the RTX 2080 and RTX 3090 (within the GeForce 30 series, NVLink is limited to the RTX 3090 class), as well as professional GPUs such as the Quadro RTX models and the RTX A6000. NVLink capability alone does not mean a card is validated for the C4130, which is a dense server built around data-center accelerators, so always confirm that the particular GPU model is supported for the server configuration you are using.

Q: What are some benefits of NVLink over SLI?

A: Both SLI and NVLink enable multi-GPU setups, but NVLink is the more capable technology. SLI was designed mainly to boost gaming performance, whereas NVLink provides much greater bandwidth and more flexible inter-GPU connections. That makes it well suited to data-heavy tasks in professional and scientific environments. NVLink also supports features such as GPU memory pooling, which SLI does not.

Q: What is the difference between NVLink 2.0 and NVLink 3.0?

A: NVLink 3.0 delivers higher total bandwidth than NVLink 2.0. GPUs with NVLink 2.0 offer up to 50 GB/s of bidirectional bandwidth per link. NVLink 3.0 keeps a similar per-link figure but uses faster signaling with fewer lanes per link, which lets a GPU expose about twice as many links and so roughly doubles the total GPU-to-GPU bandwidth. Note that the generation available in a Dell PowerEdge C4130 depends on the GPUs the server supports, so check Dell's documentation before assuming NVLink 3.0 speeds.
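The generational difference is easiest to see as arithmetic: total bandwidth is the per-link rate multiplied by the number of links. The sketch below assumes about 50 GB/s of bidirectional bandwidth per link for both generations and uses the link counts of NVIDIA's flagship data-center GPUs (6 on the Tesla V100, 12 on the A100) as reference points; other GPUs expose fewer links, so their totals are lower.

```python
PER_LINK_GB_S = 50  # assumed bidirectional bandwidth per link, both generations

# Assumption: link counts for the flagship data-center GPU of each generation.
LINKS_PER_GPU = {
    "NVLink 2.0 (Tesla V100)": 6,
    "NVLink 3.0 (A100)": 12,
}


def aggregate_gb_s(links: int, per_link_gb_s: float = PER_LINK_GB_S) -> float:
    """Total GPU-to-GPU bandwidth: number of links times per-link bandwidth."""
    return links * per_link_gb_s


totals = {name: aggregate_gb_s(links) for name, links in LINKS_PER_GPU.items()}
for name, total in totals.items():
    print(f"{name}: {total} GB/s total")
```

The 600 GB/s result for the A100 matches the peak NVLink figure cited earlier in this article.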

Q: What are the steps to set up NVLink on the Dell PowerEdge C4130 server?

A: To set up NVLink on your Dell PowerEdge C4130, first make sure that compatible NVIDIA GPUs are installed in the server. If your GPUs use bridges, connect them with the appropriate NVLink bridge (for example, a 2-slot, 3-slot, or 4-slot bridge). Next, install the most recent NVIDIA drivers. Then configure the GPUs, for example in the NVIDIA Control Panel on Windows. Finally, verify the NVLink connection using NVIDIA diagnostic tools or CUDA diagnostic utilities.
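For the final diagnostic step, one option is to read the GPU topology matrix from `nvidia-smi topo -m`, where cells of the form `NV1`, `NV2`, and so on mark GPU pairs joined by that many NVLink connections. The sketch below is a rough illustration rather than an official procedure; the helper names are hypothetical, and the regular expression assumes the `NV<number>` cell notation used by current drivers.

```python
import re
import shutil
import subprocess
from typing import List, Optional

NVLINK_CELL = re.compile(r"\bNV(\d+)\b")  # matches NV1, NV2, ... but not the "NV#" legend


def nvlink_cells(topology_text: str) -> List[int]:
    """Extract the link count from every NV<number> cell in the topology text."""
    return [int(count) for count in NVLINK_CELL.findall(topology_text)]


def read_topology() -> Optional[str]:
    """Return the output of `nvidia-smi topo -m`, or None if it cannot be run."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return None
    try:
        proc = subprocess.run([exe, "topo", "-m"], capture_output=True,
                              text=True, timeout=30, check=False)
    except (OSError, subprocess.TimeoutExpired):
        return None
    return proc.stdout if proc.returncode == 0 else None


topology = read_topology()
if topology is None:
    print("nvidia-smi is not available; cannot read the GPU topology.")
else:
    cells = nvlink_cells(topology)
    # The matrix is symmetric, so each NVLink-connected pair appears twice.
    print(f"NVLink-connected GPU pairs: {len(cells) // 2}")
```

A count of zero on a system that should have NVLink usually points to a seating or driver problem.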

Q: Can NVLink connect different GPU models installed in the Dell PowerEdge C4130?

A: In general, identical GPU models are recommended when setting up NVLink in the Dell PowerEdge C4130. Some combinations of different GPU models may work; however, it is advisable to use a single model for maximum performance and compatibility. Check NVIDIA's documentation and Dell's server specifications for the limits on supported configurations.

Q: What benefits does NVLink offer that PCIe does not?

A: In the Dell PowerEdge C4130, NVLink offers the following advantages over PCIe:

1. Higher bandwidth: NVLink offers more bandwidth than PCI Express (PCIe).

2. Improved communication between GPUs: NVLink gives GPUs a direct interconnect for data transfers, increasing performance in multi-GPU setups.

3. Better multi-GPU scaling: the low interconnect latency of NVIDIA's NVLink architecture makes larger multi-GPU systems easier to support.

4. GPU memory sharing: the NVLink architecture lets resources such as GPU memory be used by other connected GPUs.

5. More headroom for deep learning and simulation: deep learning and scientific simulation workloads benefit from the additional bandwidth.

Q: In what way does NVLink help CUDA performance on the Dell PowerEdge C4130?

A: In the Dell PowerEdge C4130, NVLink speeds up inter-GPU communication, which directly improves CUDA performance. Multiple GPUs can cooperate on a workload more effectively because they share data faster, which raises the efficiency of CUDA applications. Applications written to take advantage of CUDA and NVLink can exchange data between concurrently running GPUs efficiently, leading to higher throughput and better overall performance for complex computations.
