At the recent CES conference, Jensen Huang, dressed in a new jacket, announced the official release of the RTX 5090.

Here are the prices for the 50 series GPUs.

RTX 5090: $1999 / RTX 5090 D: 16,499 RMB

RTX 5080: $999 / 8,299 RMB

RTX 5070 Ti: $749

RTX 5070: $549

The RTX 5090 and RTX 5080 will be available from January 30, while the RTX 5070 Ti and RTX 5070 will launch in February. The RTX 50 series laptops will be released in March.

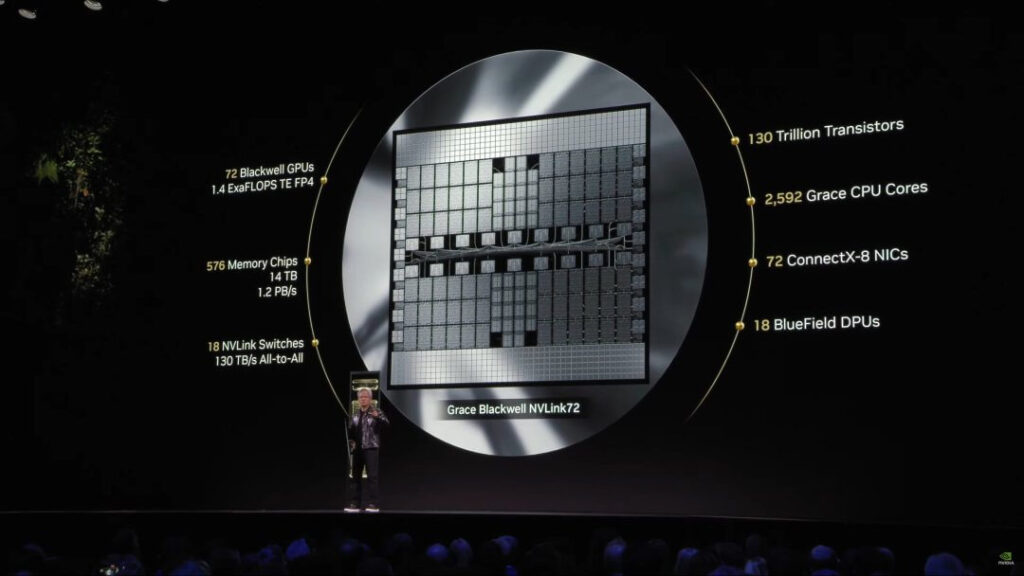

Huang also showcased the new data center system, Grace Blackwell NVLink72, which features 72 Blackwell GPUs, 1.4 exaFLOPS of computing power, and 130 trillion transistors, aiming to surpass the world’s fastest supercomputers.

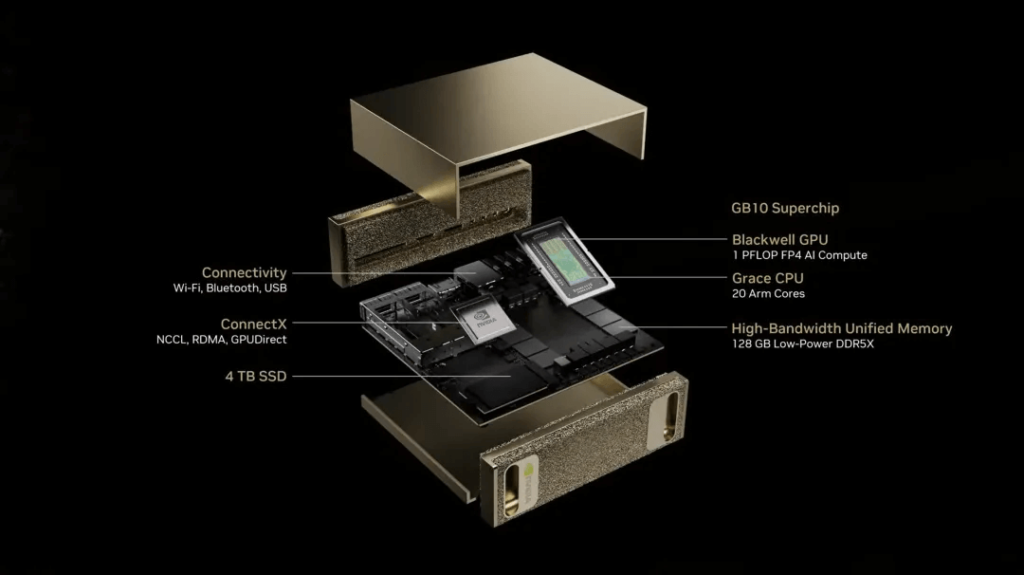

Furthermore, Project Digits, billed as the world’s first true desktop supercomputer, was unveiled at a price of just $3,000. It can run large models with up to 200 billion parameters right on your desk, taking up about as much space as a coffee mug while providing data center-level computing power.

Equipped with the new GB10 Grace Blackwell superchip, Project Digits can deliver up to 1 PFLOPS of performance at FP4 precision.

Huang predicts that in the future, every data scientist, researcher, and student will have a Project Digits personal AI supercomputer on their desk. The AI era will belong to everyone.

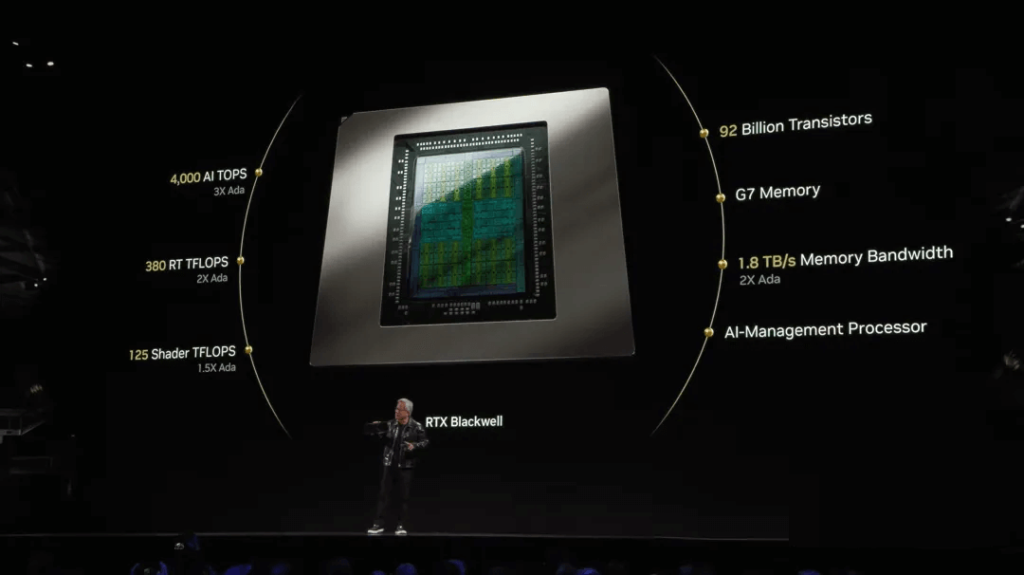

DLSS 4 was introduced alongside the RTX 5090. After months of leaks and rumors, the new generation of RTX Blackwell GPUs has officially been unveiled with the following headline specifications:

- 92 billion transistors

- 4000 TOPS of AI computing power

- 380 TFLOPS of ray tracing performance

- 125 TFLOPS of shader performance

- 32GB of GDDR7 memory

- 1792GB/s memory bandwidth

- Up to 21,760 CUDA cores

It’s worth noting that the RTX 5090 D’s AI computing power is only 2375 TOPS, but still double that of the 4090 D.

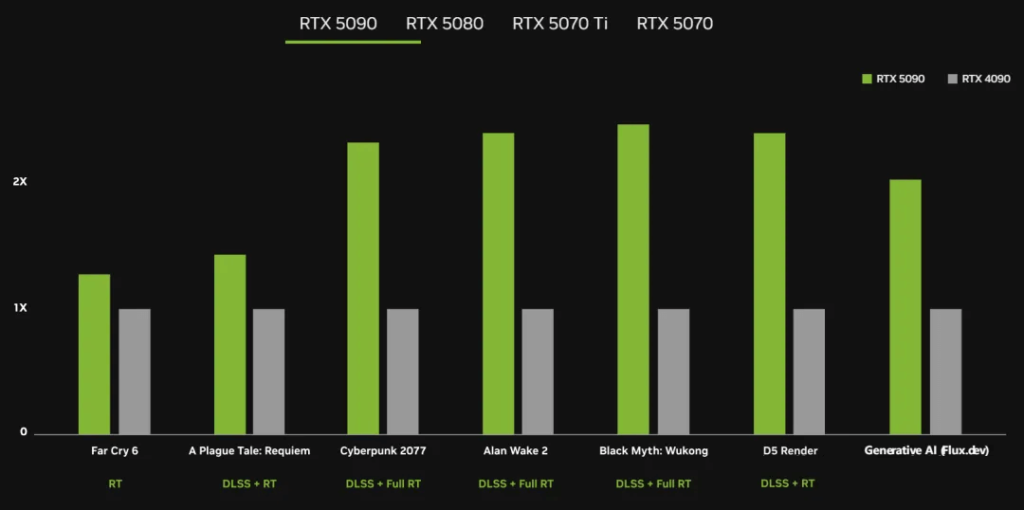

With these high-end specifications, plus DLSS 4 and the Blackwell architecture, the RTX 5090 is claimed to deliver up to twice the performance of the RTX 4090. The trade-off is high power consumption: total graphics power is 575 watts, and the recommended power supply is 1000 watts.

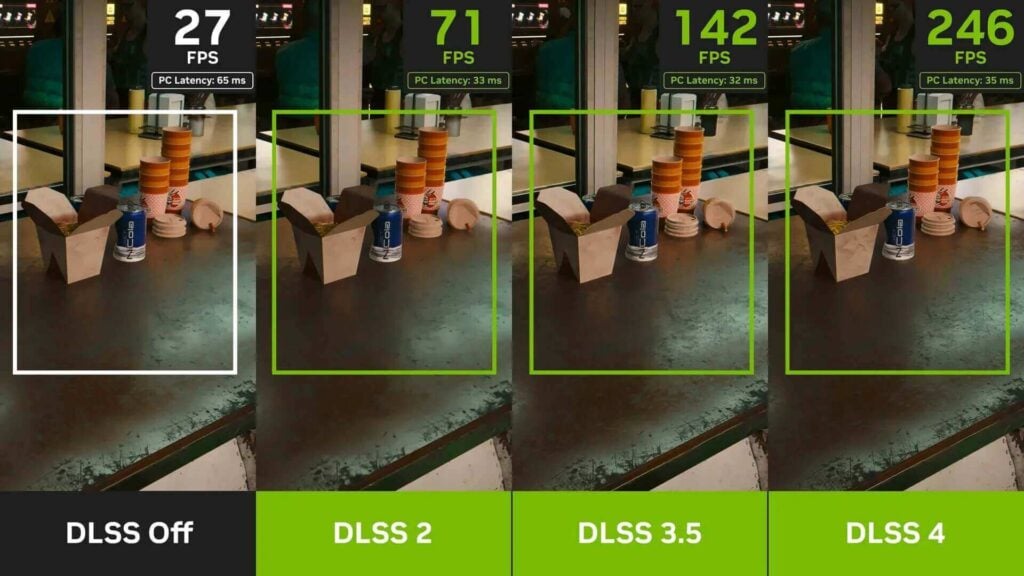

A demo showed that running “Cyberpunk 2077” on the RTX 5090 with DLSS 4 enabled reached 238 frames per second, compared to only 106 frames per second on the RTX 4090 with DLSS 3.5 enabled.

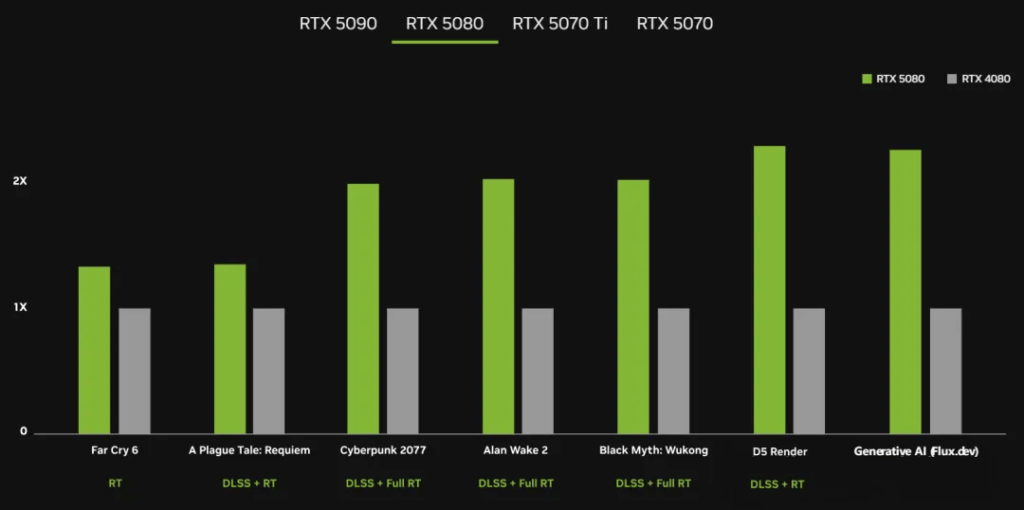

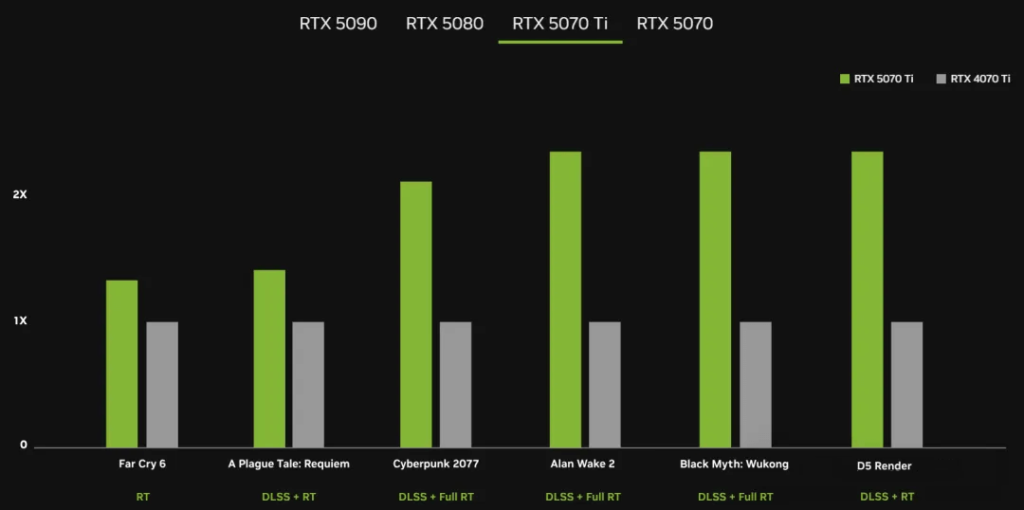

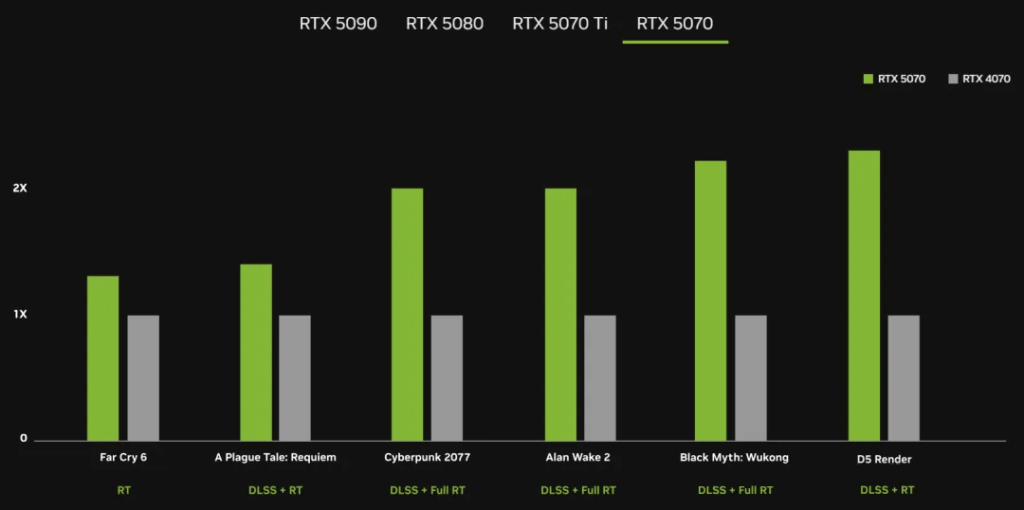

The RTX 5080 is said to be twice as fast as the RTX 4080 and is equipped with 16GB of GDDR7 memory, 960GB/s of memory bandwidth, and 10,752 CUDA cores. The RTX 5070 Ti comes with 16GB of GDDR7 memory, 896GB/s of bandwidth, and 8,960 CUDA cores. The RTX 5070 has 12GB of GDDR7 memory, 672GB/s of bandwidth, and 6,144 CUDA cores. Jensen Huang even claimed that the RTX 5070, priced at $549, will provide RTX 4090-level performance, thanks to DLSS 4.

Additionally, Huang showcased the RTX Blackwell GPU with a real-time rendering demo. He stated, “The new generation of DLSS not only generates frames, but it also predicts the future. We pushed AI with GeForce, and now AI is revolutionizing GeForce.” NVIDIA’s new RTX neural shaders can be used to compress game textures, and RTX neural faces leverage generative AI to enhance facial quality. The next-gen DLSS includes multi-frame generation technology, producing up to three additional frames per traditional frame, increasing frame rates up to 8 times. DLSS 4 also employs Transformers in real-time applications to enhance image quality, reduce ghosting, and add more detail to dynamic scenes.
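The frame-generation arithmetic above can be sketched in a few lines of Python. The function name and the 60 fps input are illustrative assumptions, not NVIDIA figures. The residual factor is only what remains after dividing the claimed 8x by the 4x that three generated frames per rendered frame would give; the article does not say where that remainder comes from.

```python
def displayed_fps(rendered_fps: float, generated_per_rendered: int) -> float:
    """Frames shown per second when every traditionally rendered frame
    is followed by `generated_per_rendered` AI-generated frames."""
    if generated_per_rendered < 0:
        raise ValueError("generated_per_rendered must be non-negative")
    return rendered_fps * (1 + generated_per_rendered)


# Up to three generated frames per rendered frame (figure from the article).
frame_gen_factor = displayed_fps(1.0, 3)        # 4x from frame generation alone

# "Up to 8 times" is the headline claim; the remainder must come from
# other parts of the pipeline (not broken down in the article).
claimed_total_factor = 8.0
residual_factor = claimed_total_factor / frame_gen_factor

# Hypothetical example: a game rendering 60 traditional frames per second.
print(displayed_fps(60.0, 3))   # 240.0
print(residual_factor)          # 2.0
```

Note that generated frames raise the displayed frame rate, not the rate at which the game simulates and samples input, so the multiplier is best read as a smoothness figure.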

It’s noteworthy that NVIDIA has introduced a new design for the RTX 50 series Founders Edition, featuring dual axial flow fans, a 3D vapor chamber, and GDDR7 memory. All RTX 50 series GPUs support PCIe Gen 5 and are equipped with DisplayPort 2.1b interfaces, capable of driving 8K resolution at 165Hz. Surprisingly, the RTX 5090 Founders Edition is a dual-slot graphics card, making it suitable for small form factor cases—a significant change compared to the RTX 4090.

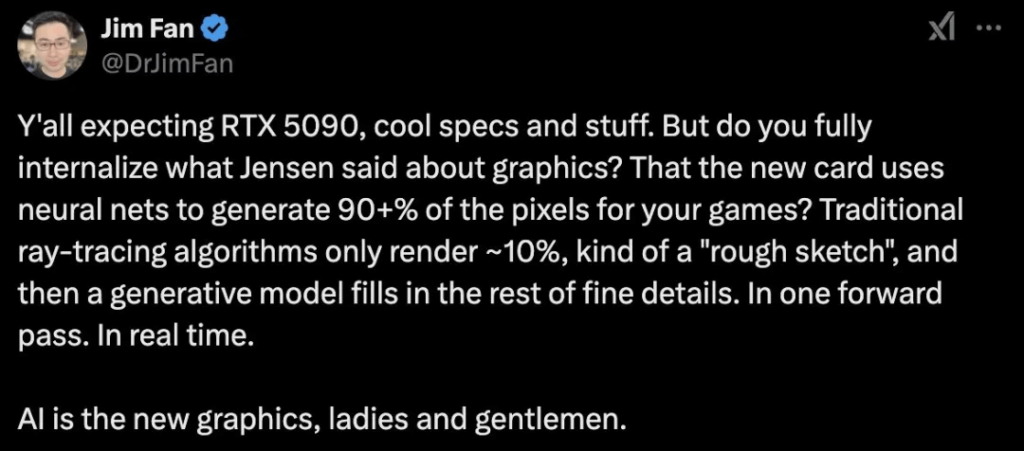

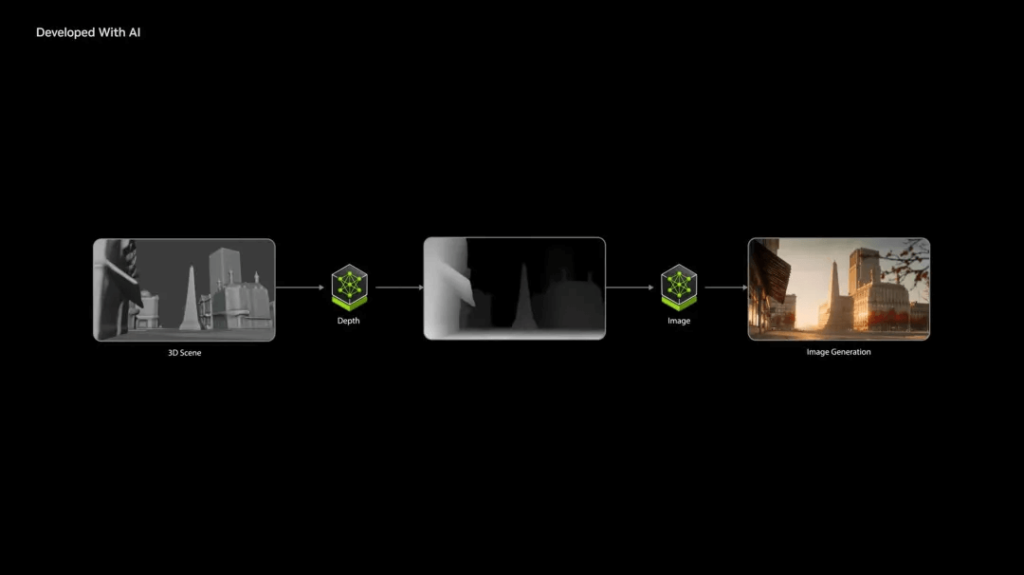

Jim Fan, NVIDIA’s senior scientist, highlighted the “essence” of Jensen Huang’s presentation on graphics technology. Huang explained that the new GPUs use neural networks to generate over 90% of the pixels in games. Traditional ray tracing algorithms render only about 10% of the content, akin to a “rough sketch,” with generative models filling in the remaining details in real-time. Ladies and gentlemen, AI is the new generation of graphics technology.
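A rough back-of-the-envelope check shows how a figure above 90% can arise. The sketch below assumes, purely for illustration, that each rendered frame is upscaled from one quarter of the output pixel count and that three of every four displayed frames are generated; both parameters are assumptions made here, not numbers stated in the presentation.

```python
from fractions import Fraction


def rendered_pixel_fraction(upscale_pixel_ratio: int,
                            generated_per_rendered: int) -> Fraction:
    """Fraction of displayed pixels that come from traditional rendering.

    upscale_pixel_ratio: output pixels per rendered pixel (assumed value).
    generated_per_rendered: AI-generated frames per rendered frame.
    """
    per_frame = Fraction(1, upscale_pixel_ratio)           # rendered share within one frame
    frame_share = Fraction(1, 1 + generated_per_rendered)  # share of frames that are rendered
    return per_frame * frame_share


rendered = rendered_pixel_fraction(upscale_pixel_ratio=4, generated_per_rendered=3)
generated = 1 - rendered

print(rendered)           # 1/16
print(float(generated))   # 0.9375
```

Under these assumptions only 1 pixel in 16 is rendered traditionally, which is consistent with the “over 90%” statement; other settings would give a different split.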

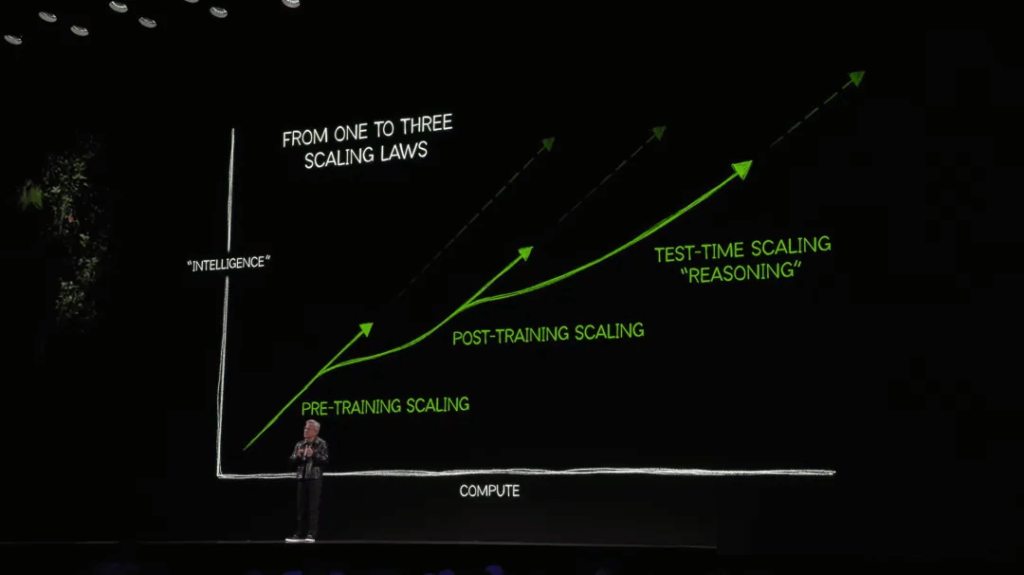

After the 50 series GPUs debut, Huang mentioned that the “scaling law continues”:

- The first scaling law is pre-training.

- The second scaling law is post-training.

- The third scaling law is inference-time computation.

These evolving scaling laws drive the immense computational demand for AI. Astonishingly, approximately 15 supercomputing centers, including those of Microsoft, Meta, and xAI, are already equipped with Blackwell GPUs.

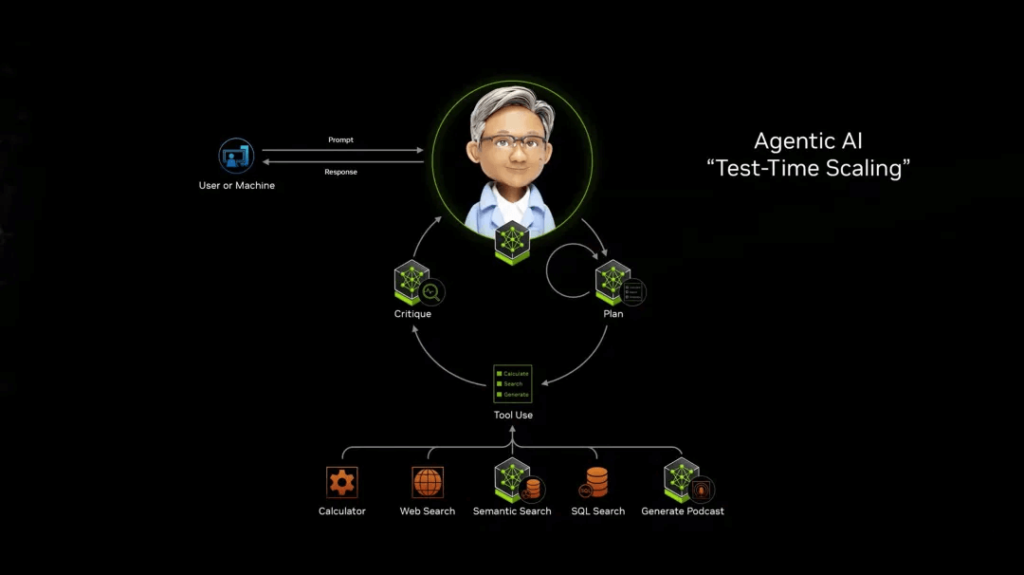

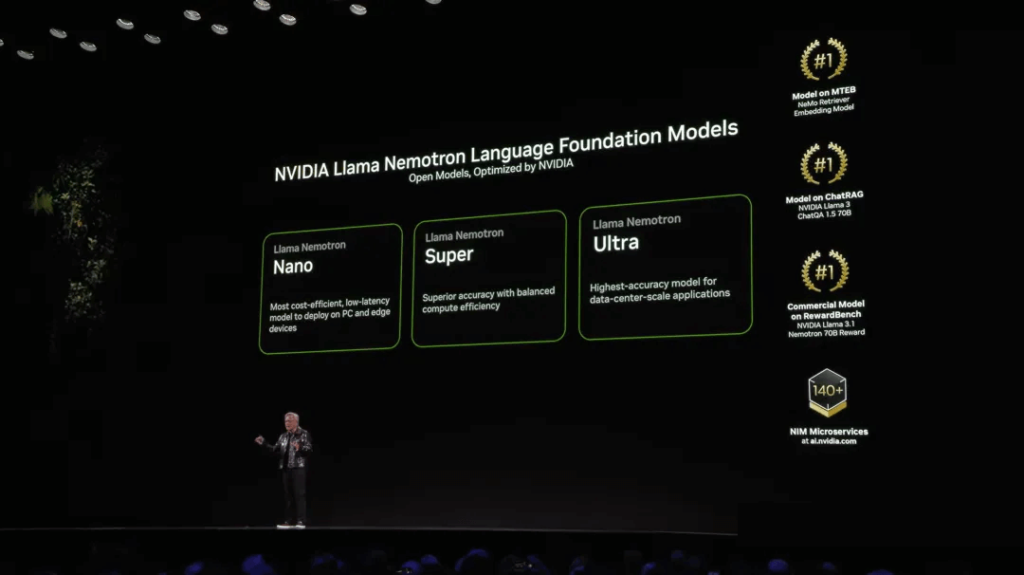

Next, he described AI agents as a perfect example of test-time scaling. He also announced Llama Nemotron, a family of openly licensed foundation models that provide high accuracy for a range of AI agent tasks. Jensen Huang stated, “AI agents could be the next robotics industry, potentially representing a multi-trillion dollar opportunity.”

Furthermore, NVIDIA’s NIM Blueprint will soon be available on PC. With these blueprints, developers can create podcasts based on PDF documents and generate stunning images guided by 3D scenes.

Desktop-Level AI Supercomputer Capable of Running LLMs with Up to 405 Billion Parameters

Before concluding the CES keynote, Jensen Huang unveiled Project Digits, which he presented as a true “desktop supercomputer.” It is designed for AI developers, data scientists, students, and other professionals engaged in AI work.

This compact computer is the world’s smallest AI supercomputer capable of running a 200-billion parameter model, priced at $3,000 (approximately ¥21,986). As demonstrated by Huang, this compact desktop system provides immense computing power while occupying minimal desk space—about the width of an average coffee cup and roughly half its height. Imagine having a miniature device on your desk that offers data center-level computing power. This is the revolutionary breakthrough brought by Project Digits!

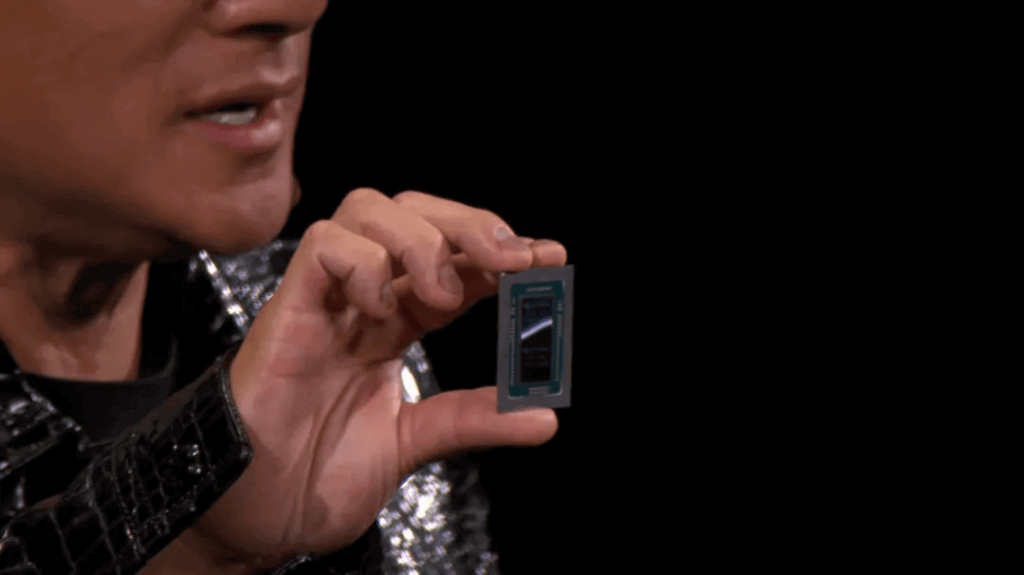

Project Digits features the new GB10 Grace Blackwell superchip, capable of delivering up to 1 PFLOPS (petaflops) of AI performance at FP4 precision.

This chip also includes a 20-core Arm-based Grace CPU. The CPU and GPU are connected through NVIDIA NVLink-C2C for high-speed communication. Each Project Digits unit is equipped with 128GB of low-power, coherent unified memory and up to 4TB of NVMe storage. With this setup, developers can run models with up to 200 billion parameters directly on their desktops. Moreover, using the ConnectX networking chip, two Project Digits units can be linked to run models with up to 405 billion parameters.
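These parameter limits line up with the memory figures if the weights are stored at FP4 (4 bits each). The sketch below is a weights-only estimate, taking 405 billion parameters as the two-unit figure from NVIDIA's announcement; the function name is illustrative, and real deployments also need room for activations, the KV cache, and the operating system, which are not modeled here.

```python
UNIFIED_MEMORY_GB = 128  # per Project Digits unit, from the article


def weight_footprint_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate size of the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_param / 8


scenarios = [
    ("single unit", 200, 1),        # 200B parameters on one unit
    ("two linked units", 405, 2),   # 405B parameters across two units
]

for label, params_billion, units in scenarios:
    need = weight_footprint_gb(params_billion, bits_per_param=4)
    have = UNIFIED_MEMORY_GB * units
    print(f"{label}: {params_billion}B params at FP4 needs about "
          f"{need:.1f} GB of {have} GB")
```

At 4 bits per parameter, 200 billion parameters occupy about 100 GB of the 128 GB available, and 405 billion occupy about 202.5 GB of the combined 256 GB, so both figures fit with some headroom.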

Additionally, Project Digits comes pre-installed with the NVIDIA DGX foundational operating system (based on Ubuntu Linux) and the NVIDIA AI software stack, providing developers with a plug-and-play AI development environment. Developers can quickly get started with their AI projects right out of the box. For millions of developers, it will be a game-changing innovative product, especially for those needing cloud computing/data center resources to run large AI models. This desktop AI supercomputer has a wide range of applications, including AI model experimentation and prototyping, model fine-tuning and inference (for model testing or evaluation), and local AI inference services (such as chatbots or code intelligence assistants). Additionally, data scientists can utilize the system to run NVIDIA RAPIDS, efficiently handling large-scale data science workflows directly on their desktops.

With the comprehensive support of NVIDIA’s AI technology stack (frameworks, tools, APIs), Project Digits becomes an ideal development platform for edge computing applications, particularly in robotics and VLM (vision-language models) fields. The advent of Project Digits marks a new era in personal AI computing. It enables developers worldwide to run large-scale AI models on their desktops, supplementing existing cloud computing resources and significantly enhancing AI development efficiency.

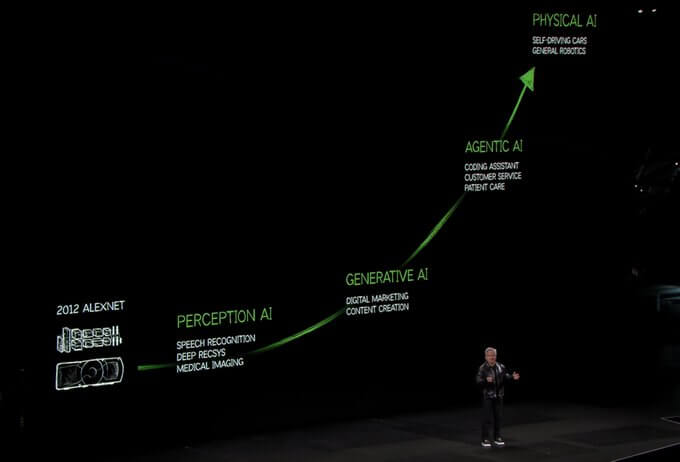

The New Era of Physical AI: Open Source World Model

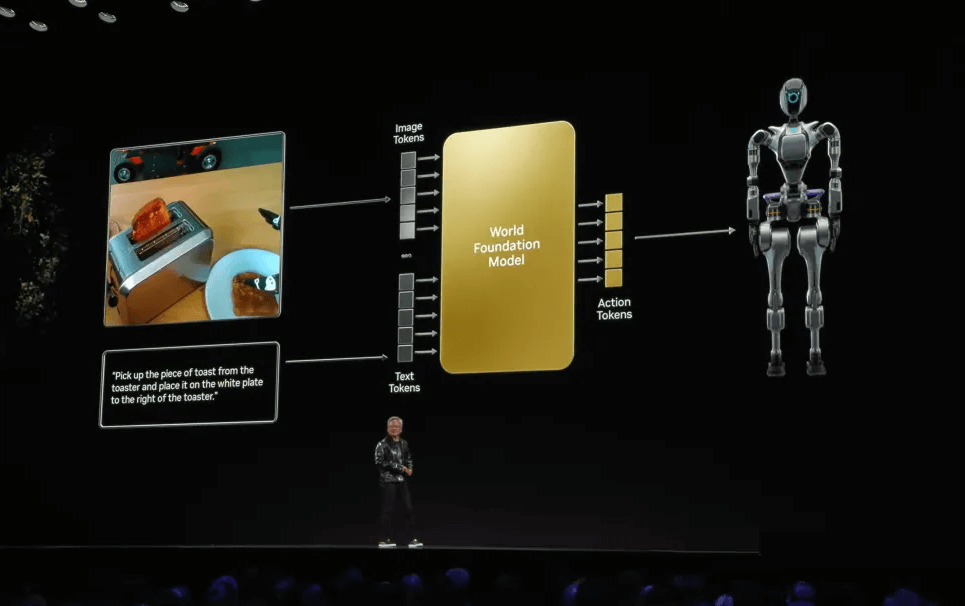

After covering agentic AI, Jensen Huang turned the conversation toward “Physical AI.” In his view, “the next frontier for AI is Physical AI.” Large language models generate output one token at a time based on a context and a prompt. If the context becomes the real-world environment and the prompt becomes a request, the model needs to shift from generating “content tokens” to generating “action tokens.” What is needed now is an effective “world model” rather than a GPT-style language model.

This “world model” must understand the language of the world, comprehend physical dynamics such as gravity and friction, grasp geometric and spatial relationships, understand causality, and recognize object permanence.

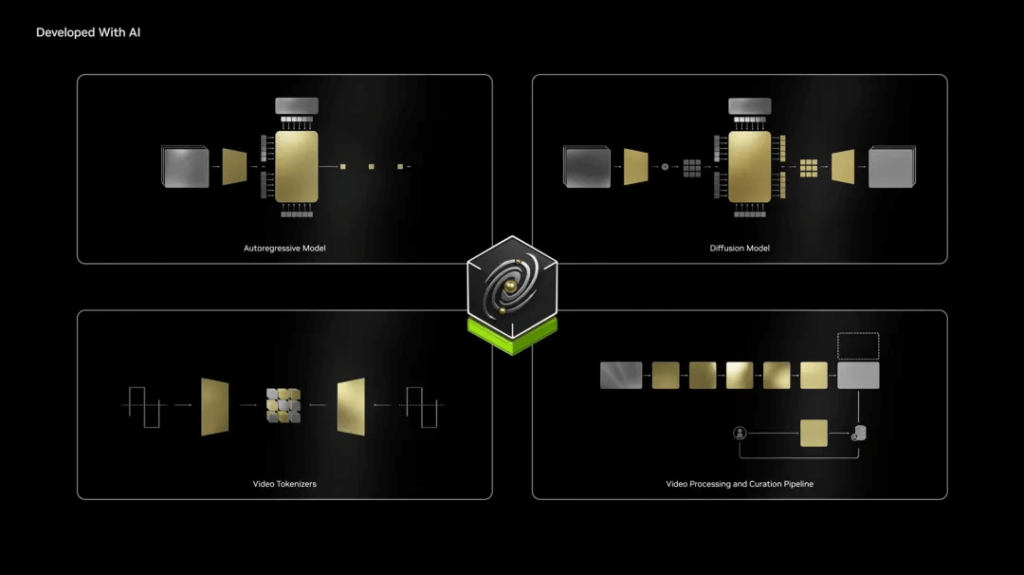

At CES, Jensen Huang announced Cosmos, a world foundation model development platform aimed at understanding the physical world. Trained on 20 million hours of video, Cosmos can take text, images, and videos as input and generate virtual world states and videos. The platform includes multiple components, such as diffusion models, autoregressive models, and video tokenizers, so developers can choose what fits their needs. Notably, Jensen Huang announced that the entire Cosmos family, including the Nano, Super, and Ultra models, will be openly available for download.

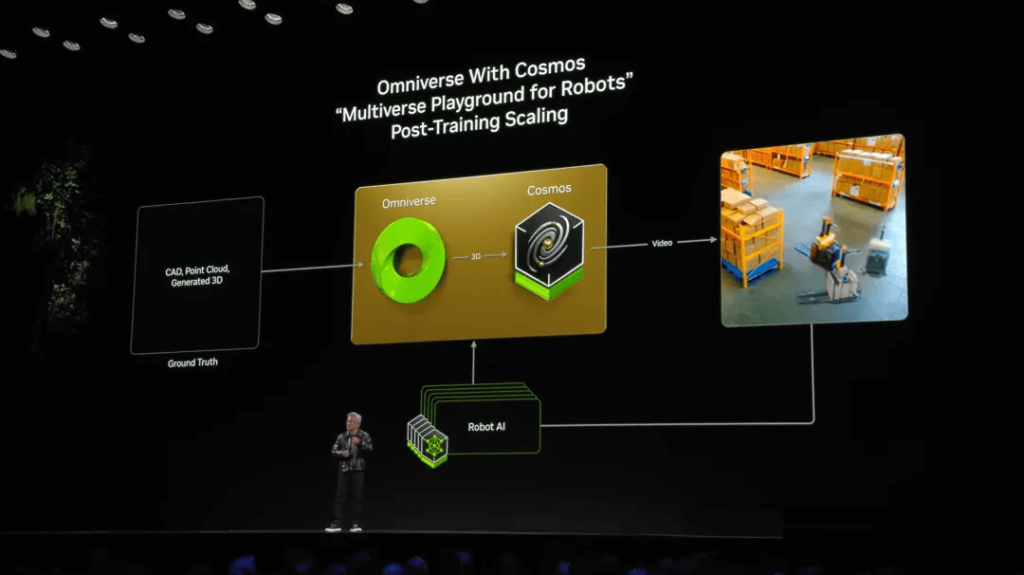

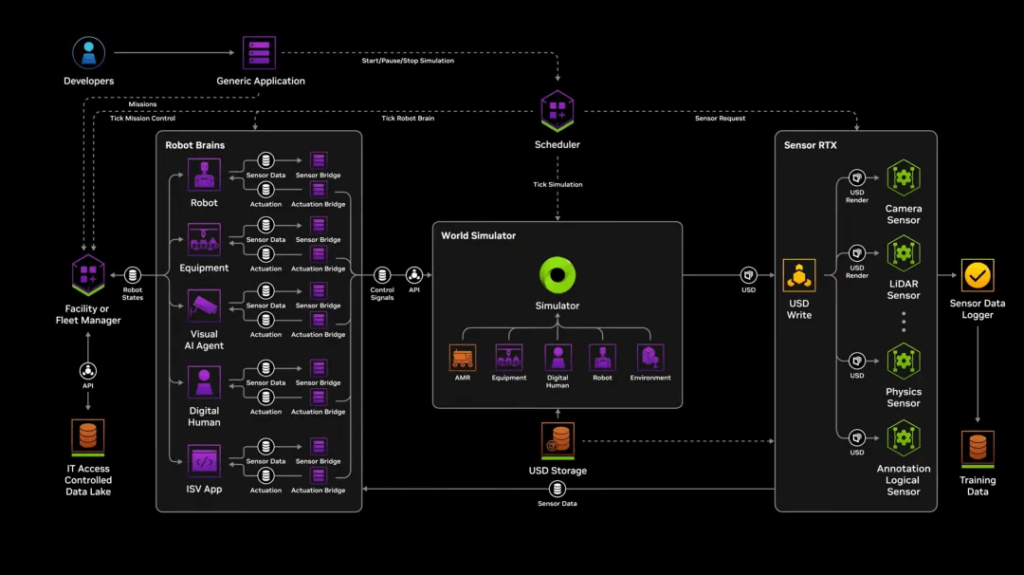

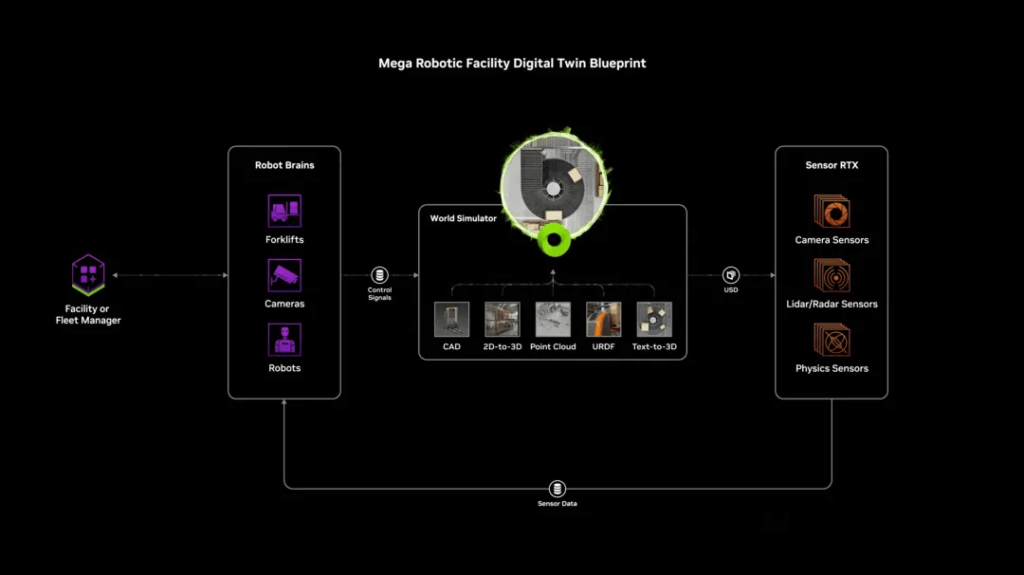

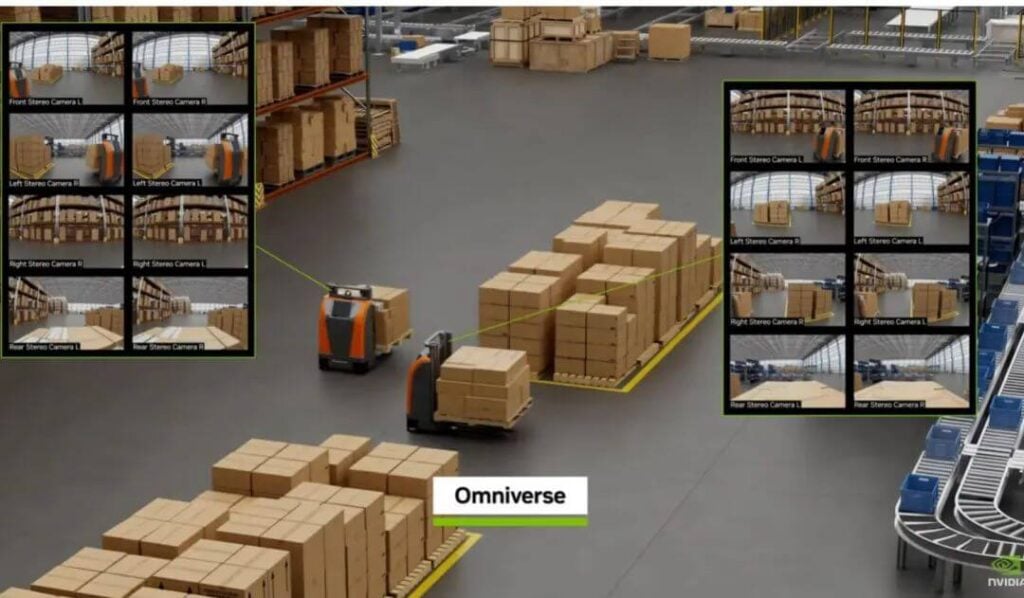

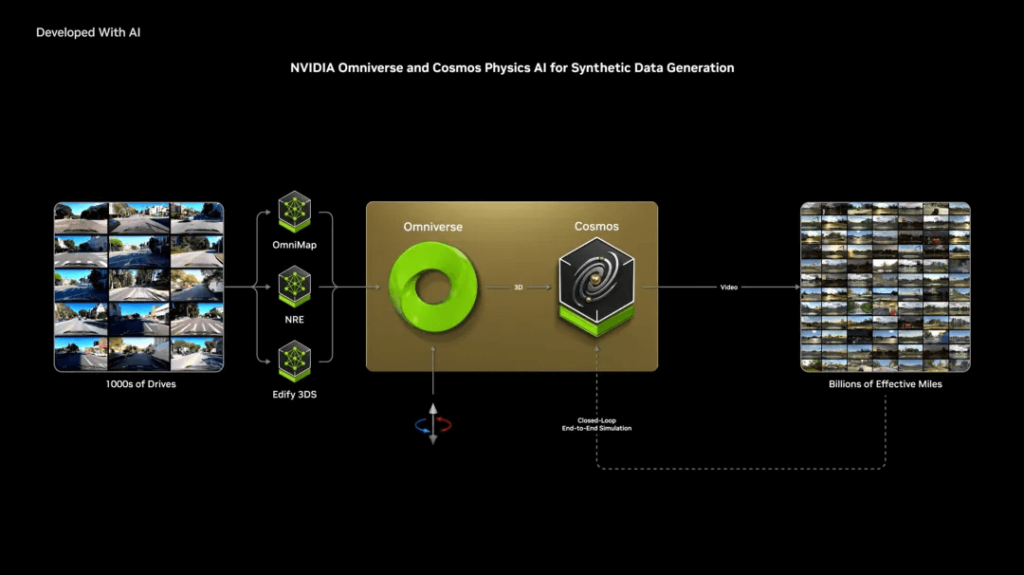

Additionally, Cosmos can be integrated with Omniverse to provide a physically grounded multiverse generator, meaning that many variations of a physically simulated world can be generated at once through Cosmos.

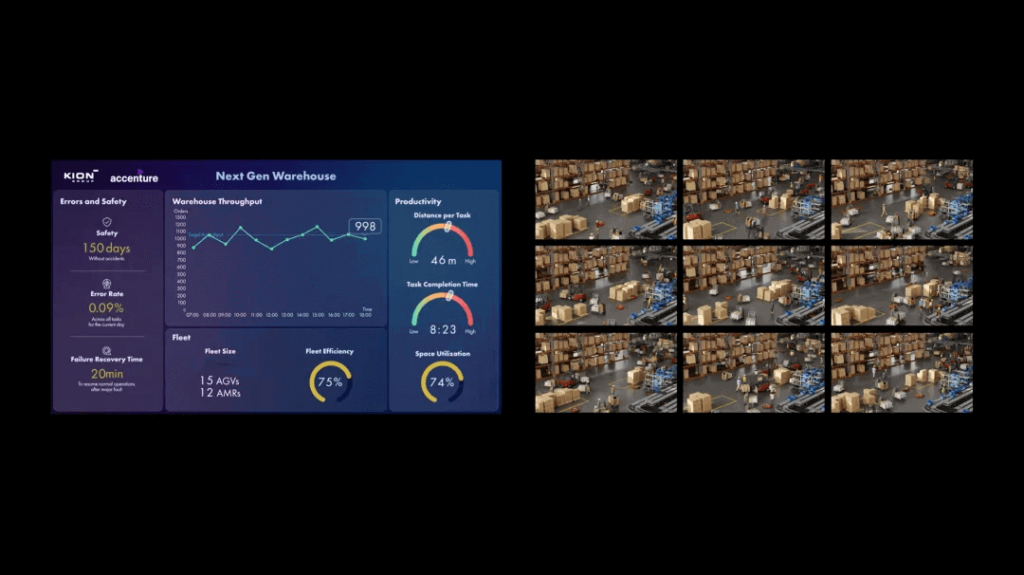

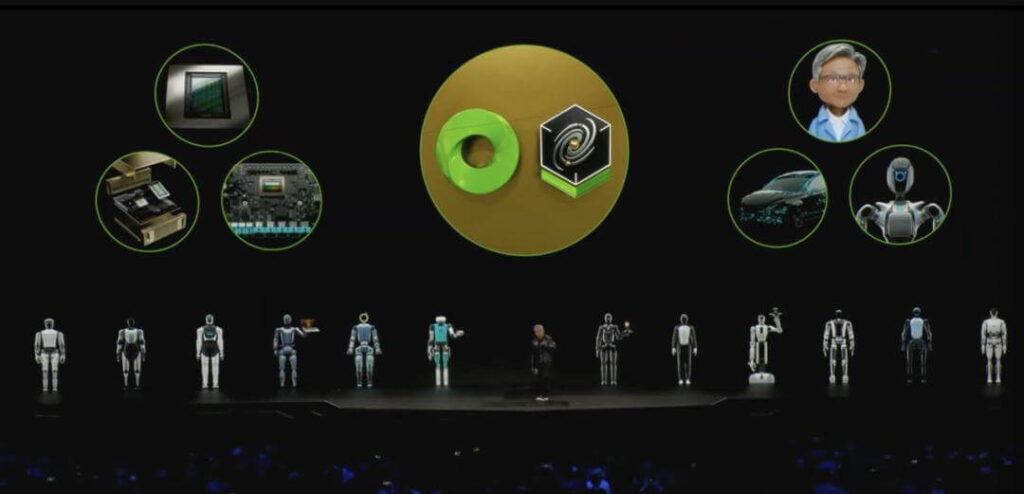

Jensen Huang also mentioned three types of computers: a DGX for training AI, an AGX for deploying AI, and a combination of Omniverse and Cosmos. When connecting the first two, we need a digital twin. Huang believes, “In the future, every factory will have a digital twin, and you can combine Omniverse and Cosmos to generate numerous future scenarios.”

Autonomous Vehicles and Robots

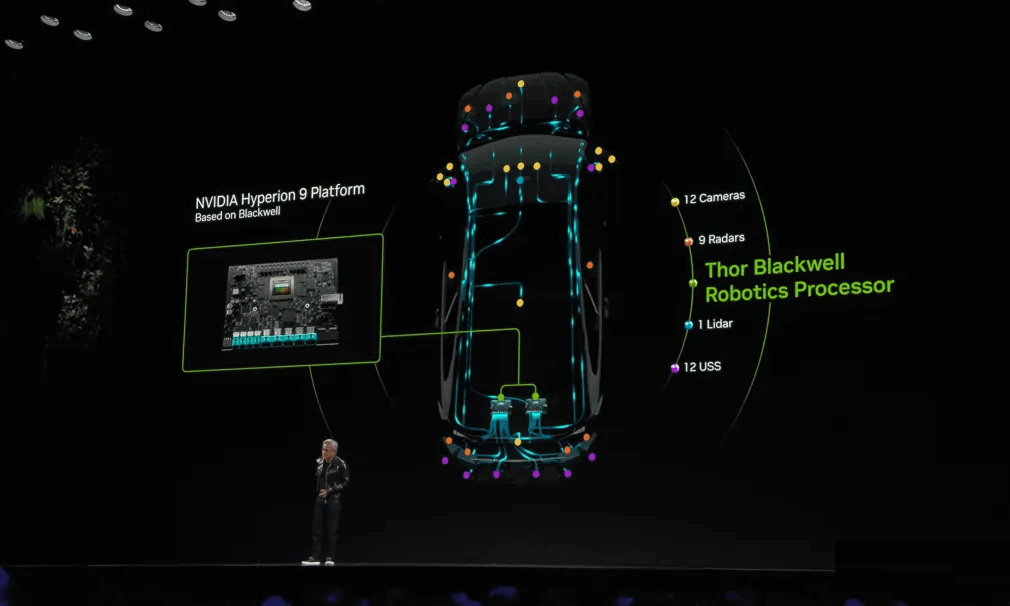

Creating autonomous vehicles, like creating robots, requires these three computers. With 100 million cars produced annually and billions of cars on the road worldwide, vehicles will gradually become highly automated and, eventually, fully autonomous. Jensen Huang predicts that this will become the first trillion-dollar robotics industry. He also introduced the next-generation automotive processor, Thor, which offers 20 times the processing performance of its predecessor, Orin, and can also serve as a general-purpose robot processor.

So, what can Omniverse and Cosmos do in the context of autonomous driving? They can generate a practically unlimited range of driving scenarios, accelerating development for long-tail situations where real-world data is difficult to collect.

Following this, Jensen Huang brought a lineup of robots onto the stage and announced the arrival of the “ChatGPT moment for general-purpose robots.” He stated, “There are currently three types of robots: agentic AI, autonomous vehicles, and machines. If we have the technology to solve these three problems, the era of robots is at hand.” In closing the keynote, Huang summarized the main announcements: new Blackwell products now in production, including the Grace Blackwell NVLink72 supercomputer; a foundation model for Physical AI; and the three types of robots.
