Advancements in industries have been driven by High-Performance Computing (HPC) and Artificial Intelligence (AI). The innovation will depend on its ability to process a large amount of data and perform complex computations efficiently. This is where the NVIDIA HGX H100 comes in, an innovative solution that will meet the growing needs of HPC and AI workloads. This article provides a detailed review of NVIDIA HGX H100, which highlights its technical specifications and main features, as well as how this technology will impact scientific research, data analytics, machine learning, etc. With this introduction, readers will be able to understand how NVIDIA HGX H100 is going to bring about a revolution in high-performance computing and artificial intelligence applications.

Table of Contents

ToggleHow Does the NVIDIA HGX H100 Accelerate AI and HPC?

What is the NVIDIA HGX H100?

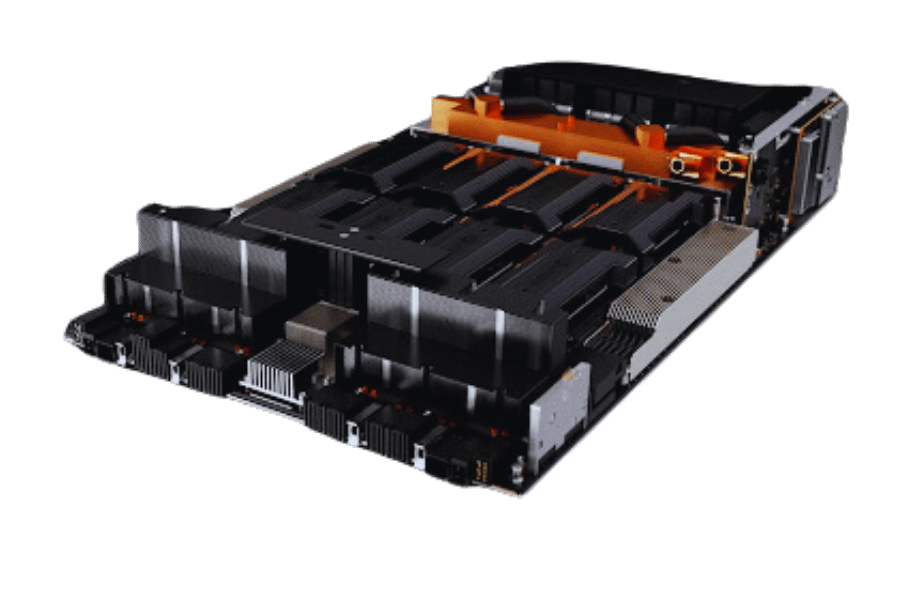

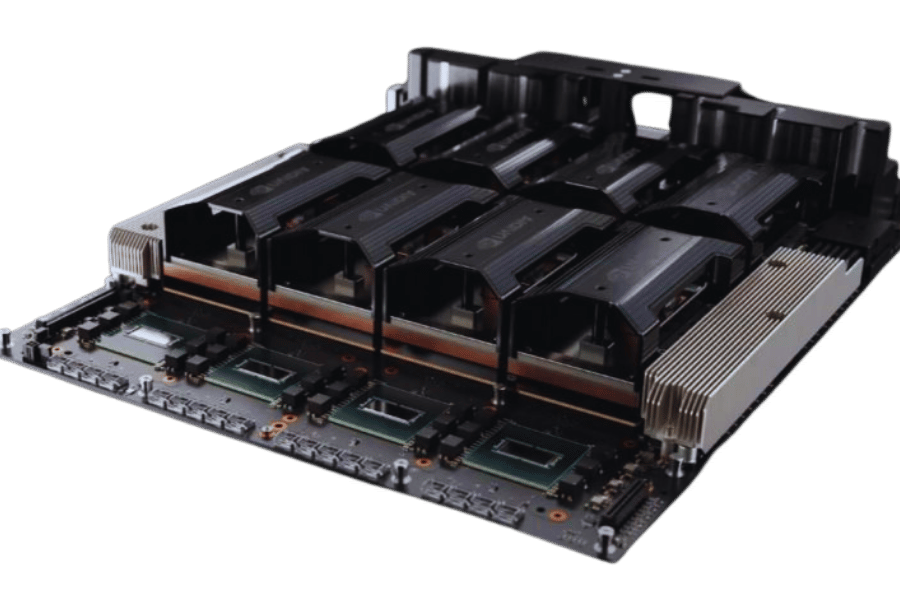

Created to boost the productivity of HPC and AI applications, NVIDIA HGX H100 is an efficient, high-performance computing platform. It comes with the groundbreaking NVIDIA Hopper architecture that features cutting-edge tensor cores and GPU technologies as a mechanism for increasing efficiency in numerical operations. The platform is used for running complex data analytics and massive simulations as well as training deep learning models, making it suitable for industries seeking powerful computers. HGX H100 achieves this through advanced memory bandwidth, interconnects, and scalability features, thereby setting a new benchmark for accelerated computation systems.

Key Features of NVIDIA HGX H100 for AI

- NVIDIA Hopper Architecture: The HGX H100 incorporates the newest NVIDIA Hopper architecture, designed specifically to bolster AI and HPC workloads. One of the highlights of this architecture is its new tensor cores that achieve up to sixfold greater training performance than in previous versions.

- Advanced Tensor Cores: The platform includes fourth-generation tensor cores, which double performance for AI training and inference applications, as Nvidia introduces us to HGX. The cores support different precisions, including FP64, TF32, FP16, and INT8, thus making it very versatile for all kinds of computations.

- High Memory Bandwidth: HGX H100 comes with high-bandwidth memory (HBM2e), which provides over 1.5 TB/s memory bandwidth. With this feature, large-scale simulations can be run as well as big data sets processed without any difficulties.

- Enhanced Interconnects: The HGX H100 features ultra-fast multi-GPU communication enabled by NVIDIA NVLink technology. This interconnect bandwidth is crucial for smoothly scaling intensive workloads across multiple GPUs since NVLink 4.0 offers up to 600 GB/s.

- Scalability: The platform is highly scalable and supports multi-node configurations. Therefore, it is a good pick for modular deployment in data centers where computational requirements may increase.

- Energy Efficiency: HGX H100, developed with an optimized energy consumption model, ensures high performance per watt, making it an environmentally and economically wise choice for heavy computations.

By integrating these technical features, the NVIDIA HGX H100 will greatly improve performance and efficiency in AI and HPC applications, thereby setting new records in accelerated computing.

Benefits for High-Performance Computing (HPC) Workloads

Notably, its advanced hardware design and software optimization make the NVIDIA HGX H100 deliver impressive rewards for HPC workloads. They significantly accelerate AI training and inference tasks to reduce compute time and improve productivity by integrating fourth-generation tensor cores. Critically, this supports the rapid execution of large datasets with high bandwidth memory (HBM2e) that are required in complex simulations and data-intensive applications. Furthermore, these interconnects allow for NVLink 4.0, designed by NVIDIA, to enhance multi-GPU communication, thereby facilitating efficient scaling across multiple nodes. Consequently, there is a general increase in computing performance, energy efficiency, and ability to handle bigger computation loads, leading to a higher endpoint for HPC infrastructures when these features are combined together.

What Are the Specifications of the NVIDIA HGX H100 Platform?

Hardware Overview of NVIDIA HGX H100

The NVIDIA HGX H100 platform, a robust hardware suite engineered for high-performance computing (HPC) and artificial intelligence (AI) applications, is equipped with several features. It employs the newest NVIDIA Hopper architecture having fourth-generation tensor cores that boost AI computation performance. The platform comes with high bandwidth memory (HBM2e), which facilitates fast data processing and handling large amounts of data. Additionally, the HGX H100 incorporates NVIDIA NVLink 4.0 to facilitate efficient scaling across clusters through improved multi-GPU communication. As such, through this amalgamation of advanced hardware components, the HGX H100 ensures exceptional performance, energy efficiency, and scalability for dealing with complicated computational problems.

Details on 8-GPU and 4-GPU Configurations

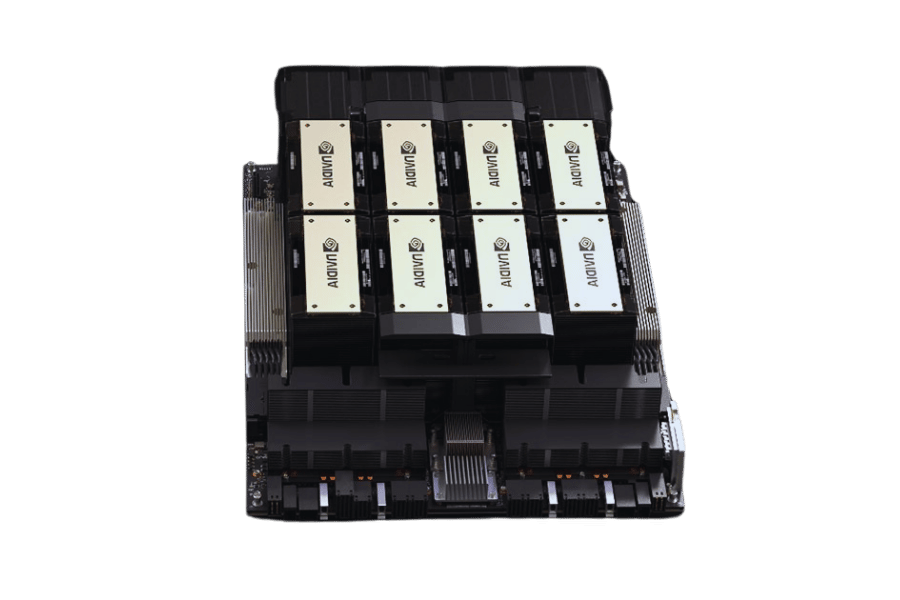

8-GPU Configuration

The NVIDIA HGX H100 platform’s 8-GPU configuration is meant to make the most out of computational power and scalability for high-performance computing and AI tasks. This includes eight NVIDIA H100 GPUs tied together by NVLink 4.0, which allows high-speed communication and minimizes latency between GPUs. Some key technical parameters of the 8-GPU configuration are:

- Total GPU Memory: 640 GB (80 GB per GPU with HBM2e).

- Tensor Core Performance: Up to 1280 TFLOPS (FP16).

- NVLink Bandwidth: 900 GB/s per GPU (bi-directional) using faster NVLink speed.

- Power Consumption: Approximately 4 kW.

These tasks include large-scale simulations and deep learning training, among others, that require a lot of parallel processing ability.

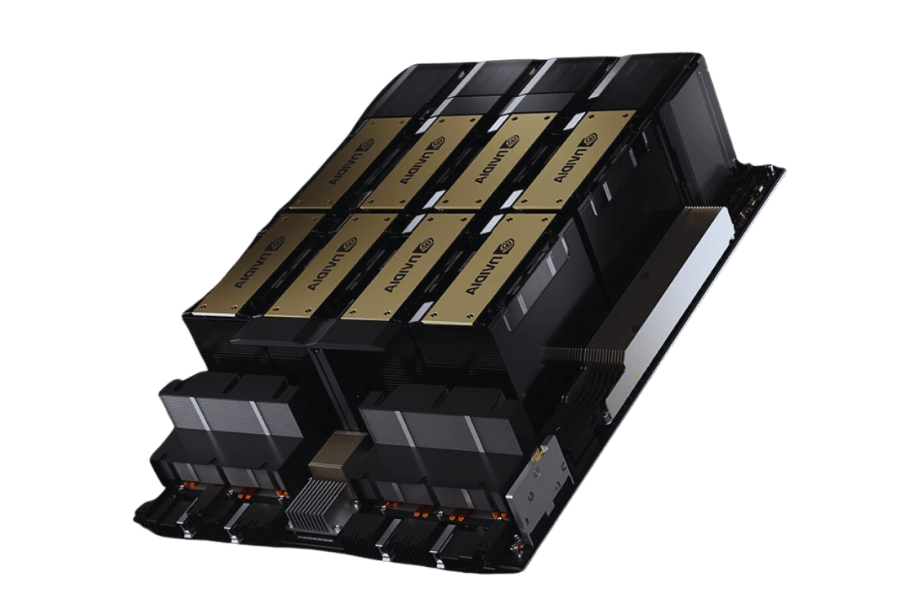

4-GPU Configuration

When compared to the other configurations, this one is balanced in terms of computation as it still offers a considerable amount of computational power but at lower dimensions than what had been provided in the 8-GPU setup. The setup consists of four NVIDIA H100 GPUs also connected through NVLink 4.0 for efficient communication. Some key technical parameters for the configuration are:

- Total GPU Memory: 320 GB (80 GB per GPU with HBM2e).

- Tensor Core Performance: Up to 640 TFLOPS (FP16).

- NVLink Bandwidth: 900 GB/s per GPU (bi-directional).

- Power Consumption: Approximately 2 kW.

It can be used for mid-sized computation tasks, AI model inference or small scale simulation, thus offering an economical yet powerful tool-set suitable for various applications in the field of hpc.

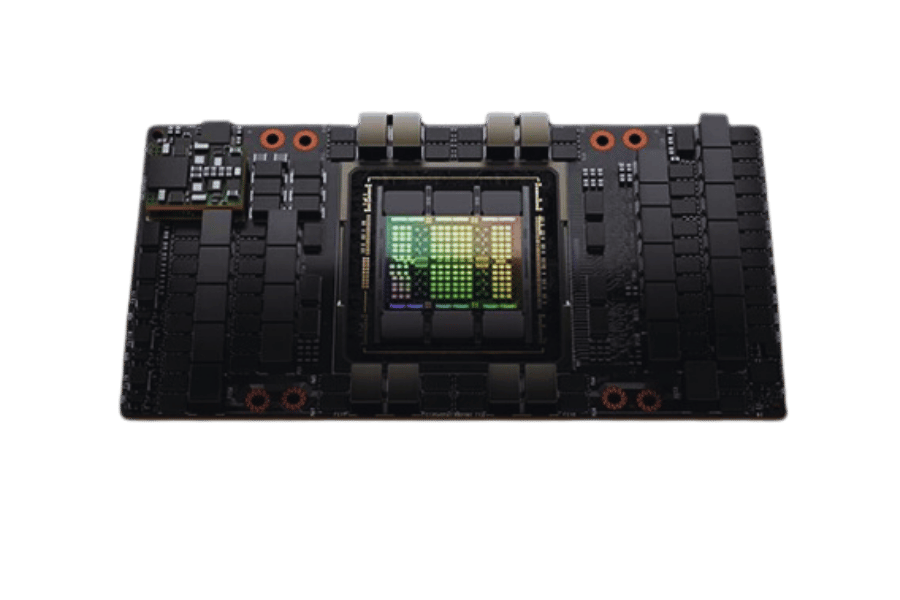

Integration with NVIDIA H100 Tensor Core GPU

Incorporating the NVIDIA H100 Tensor Core GPU in high-performance computing (HPC) and AI infrastructures requires taking into account several factors to maximize performance and efficiency. With a bandwidth that is very large but with low latency, the NVLink 4.0 interconnect ensures smooth interaction between multiple GPUs. This makes it possible for good data transfer rates to be realized in this instance and this is important for computational workloads that depend on large datasets and need real-time operations. Using advanced software frameworks such as NVIDIA CUDA, cuDNN, and TensorRT also improves its capability through optimization of neural networks and deep learning operations. Also, the mixed-precision computing mode supported by H100 enables balancing of accuracy and performance, hence making it ideal for various HPC works, scientific investigation, or business-oriented AI modules. Given that massive power consumption and heat generation are associated with these H100 GPUs, mechanical temperature control, as well as power provision, has to be considered carefully so as to ensure stable operation of multi-GPU setups.

How Does the NVIDIA HGX H100 Compare to Previous Generations?

Comparison with HGX A100

The NVIDIA HGX H100 platform represents a significant evolution from the HGX A100 in various ways. One way is through incorporating the NVIDIA H100 Tensor Core GPUs, which bring about better performance via the coming generation of tensor cores and improved support for mixed-precision computing. In addition, unlike the NVLink 3.0 employed in HGX A100, which had lower bandwidth and higher latency, it has better multi-GPU communication as well as data transfer rates because of the NVLink 4.0 upgrade in HGX H100. Moreover, it comes with enhancements in thermal design and power efficiency that better cater to increased computational load and power consumption by the H100 GPUs than its precursor does. Taken together, these advances position the HGX H100 as an even more powerful and capable solution for high-performance computing (HPC) or artificial intelligence (AI) workloads that are more demanding than ever before.

Performance Improvements in AI and HPC

The HGX H100 by NVIDIA has notable performance improvements in AI and HPC workloads as opposed to the HGX A100. Key enhancements and their respective technical parameters are shown below:

Enhanced Tensor Cores:

Next generation tensor cores inside H100 Tensor Core GPUs allow for 6X speed boost in AI training and more than three times better AI inference capacities compared to A100.

Increased GPU Memory:

High-bandwidth memory (HBM3) with a capacity of 80 GB is installed on each GPU of H100, which makes it possible to process larger datasets and models directly in memory unlike the A100’s 40 GB of HBM2 memory.

NVLink 4.0 Interconnect:

This means that the NVLink interconnect introduced in HGX H100 yields a bandwidth that is improved by 50% i.e., it supports a GPU-to-GPU rate of up to 900GB/s compared to HGX A100’s NVLink 3.0 which supports only about 600GB/s.

Improved Power Efficiency:

It should be noted that power efficiency has also been enhanced in the case of these new GPUs since they provide up to two times better TFLOPS per watt for AI operations, at least twenty TFLOPS per watt compared with the previous generation.

Increased FLOPS:

On one hand, this implies that its peak performance reaches sixty teraflops (TFLOPS) when double-precision (FP64) is considered; on another hand, it can achieve one thousand TFLOPS during mixed-precision (FP16) tasks as compared to A100’s numbers–19.5 TFLOPS (FP64) and 312 TFLOPS (FP16).

Expanded Multi-GPU Scalability :

Additionally, there have been major improvements in communication capabilities as well as thermal management systems on the HGX H100 platform, making scaling more efficient across multiple GPUs, thereby supporting larger and more complex HPC and AI workloads with increased robustness.

These developments demonstrate how NVIDIA’s NVIDIA HGX H100 platform has remained a pacesetter in the field of AI and HPC, thus providing investigators and scientists with tools to solve the most challenging computational problems at hand.

Power Consumption and Efficiency

NVIDIA H100 GPUs make considerable inroads in power consumption and efficiency. Employing a new architecture and improved design, the H100 GPUs manage to deliver up to 20 TFLOPS per watt, which means they are twice as efficient as the previous generation’s A100 GPU. This achievement is mostly because of the use of a manufacturing process that uses 4nm and enhanced thermal management techniques that facilitate better heat extraction and reduction of power waste, hence making them more effective than their predecessors. Hence, it can be said that the H100 achieves superior computational throughput as well as being more sustainable and cheaper for large-scale data centers, thus being perfect for an H100 cluster.

What Role Does the NVIDIA HGX H100 Play in Data Centers?

Network Integration and Scalability

NVIDIA HGX H100 plays an important role in data centers by enabling smooth network integration up to scale. It is equipped with advanced networking technologies such as NVIDIA NVLink and NVSwitch that make use of the high bandwidth, low latency connections between GPUs available from GPU clusters. This allows for fast data movement and effective GPU-to-GPU intercommunication which are key to scaling AI and HPC workloads. Besides, its compatibility as one of the data center network platforms with major current standards ensures its ability to be integrated into existing setups hence making it a flexible and scalable computational environment capable of handling highly demanding tasks.

Optimizing Workloads with the Accelerated Server Platform for AI

NVIDIA HGX H100 platform significantly enhances the performance of AI workloads through its advanced accelerated server architecture. This is achieved through several key components and technologies:

Tensor Cores: H100 GPUs have fourth generation Tensor Cores that boost AI processing efficiency and performance, supporting different precisions such as FP64, FP32, TF32, BFLOAT16, INT8 and INT4. These cores increase computational throughput and flexibility in the training and inference of AI models.

NVLink and NVSwitch: The utilization of NVIDIA NVLink with NVSwitch enables better inter-GPU communication. This allows up to 900 GB/s of bidirectional bandwith per GPU which ensures a fast data exchange with minimum latency thus optimizing multi-GPU workloads.

Multi-Instance GPU (MIG) Technology: According to the NVIDIA Technical Blog, the H100 has MIG technology that makes it possible for one GPU to be divided into many instances. Every instance can work independently on different tasks, hence maximizing resource usage while providing dedicated performance for parallel AI workloads.

Transformer Engine: A specialized engine present in H100 GPUs helps optimize transformer-based AI models used in natural language processing (NLP) among other AI applications; this delivers four times faster training and inference speed for transformer models.

Technical Parameters:

- Power Efficiency: Up to 20 TFLOPS per watt.

- Manufacturing Process: 4nm.

- Bandwidth: Up to 900 GB/s bidirectional via NVLink.

- Precision Support: FP64, FP32, TF32, BFLOAT16, INT8, INT4.

These improvements together improve the ability of H100 platform to handle and scale large-scale AI workloads more efficiently making it an indispensable part of modern data centers seeking high performance and operational efficiency.

Enhancing Deep Learning Capabilities

The NVIDIA H100 platform greatly enhances the potential of deep learning by uniting adaptive techniques with the most effectively configured hardware. Initially, the high computational throughput is achieved with powerful AI cores like Hopper Tensor Cores for increased performance in synthetic floating-point operations (NT8) and other integer computations required for multi-precision integer neural network calculations (INT4). It is important to enable effective scaling of multi-GPU workloads through lower latency and higher inter-GPU communication by integrating NVLink and NVSwitch which provide a bidirectional bandwidth of up to 900 GB/s per GPU. Secondly, Multi-Instance GPU (MIG) technology that allows multiple instances to be created using one H100 GPU makes it possible to maximize resource utilization as well as offer dedicated performance to concurrent AI tasks.

A specialized Transformer Engine also comes with the H100; this engine optimizes transformer-based models’ – key components in natural language processing (NLP) and various AI applications – performance. In addition, training and inference speed increments are up to four times greater than those of previous models. Additionally, the 4nm manufacturing process makes them even more efficient at power saving, giving up to 20 TFLOPS/watt power efficiency. For instance, FP64, FP32, and TF32 supporting full precision arithmetic are ideal for general-purpose computing across a broad range of applications, including AI/ML/DL, whereas INT8 and INT4 support reduced precision modes suitable for deep learning training where memory requirement plays a crucial role in determining the execution time.

How Can the NVIDIA HGX H100 Benefit Your Business?

Real-World Use Cases of NVIDIA HGX H100

Due to its advanced computational capabilities and efficiency, the NVIDIA HGX H100 platform can immensely benefit a wide range of industries. This allows for quick diagnosis and personalized treatment plans as it facilitates rapid analysis and processing of large-scale medical imaging data in the healthcare sector. For financial institutions, on the other hand, the technology improves real-time fraud detection systems while at the same time accelerating algorithmic trading due to low latency in handling massive data sets. In automotive manufacturing, this aids in creating complex AI models for self-driving cars thereby enhancing safety and operational efficiencies. Similarly, H100 is good for e-commerce because it elevates customer experiences through improved recommendation engines and greater sales through the use of consumer analytics. Thus, any AI-driven organization seeking innovation and competitive edge will consider H100 a worthwhile tool owing to its superior performance and flexibility in diverse applications.

Impact on AI and HPC Industries

HGX H100 of NVIDIA is a game changer in both industries of AI and high-performance computing (HPC) due to unmatched computational power and efficiency. This makes it possible for such sectors to undertake complex, large-scale calculations that promote advancements in various critical areas.

Technical Parameters:

- Manufacturing Process 4nm: Enhances transistor density and energy efficiency.

- Support for Precision: This entails FP64, FP32, TF32, BFLOAT16, INT8, and INT4, which enable a wide variety of computations.

- TFLOPS per Watt: It offers up to 20 TFLOPS per watt which enhances energy consumption optimization and performance.

Enhanced Deep Learning:

- Intensive computational capabilities and precision support enable the training and inference of intricate neural networks that are spurring advancement in AI research and application.

Scalability in HPC:

- The integration of the H100 platform means achieving scalability in HPC deployments that help with simulations, data analysis, and scientific research requiring substantial computing resources.

Operational Efficiency:

- Due to its high-performance metrics, HGX H100 provides lower latency as well as higher throughput, making it excellent for environments where real-time data processing and analysis are important.

Broad Industry Application:

- Healthcare: Quickens medical data processing through accelerated medical diagnostic techniques.

- Finance: Improves real-time analytics including fraud detection.

- Automotive: Supports sophisticated AI development for autonomous driving.

- E-commerce: Boosts customer analytics such as recommendation systems improvement.

NVIDIA HGX H100 not only boosts the performance of AI and HPC systems but also allows new developments that lead to a competitive edge across multiple sectors.

Future Trends and Potential Applications

The AI and HPC future trends are going to transform several industries through the integration of new technologies. Areas which are very significant include:

Edge Computing:

- A lot of applications will move from centralized cloud models to edge computing, reducing latency and enabling instant processing in fields such as autonomous cars and IoT devices.

Quantum Computing Integration:

- Quantum computing is continually developing and it will be combined together with the traditional HPC systems to solve complex problems efficiently especially in cryptography, material science and large scale simulations.

AI-Driven Personalization:

- Various sectors such as e-commerce or healthcare would readily provide hyper-personalized experiences by their ability to analyze enormous amounts of data, hence improving customer satisfaction and treatment outcomes.

Sustainable AI Development:

- The focus will increasingly be on making energy-efficient AI and HPC solutions, thereby aligning technological advancement with global sustainability objectives.

Enhanced Cybersecurity:

- With data security at its peak, progress in artificial intelligence (AI) shall improve protection against cyber threats using predictive analytics coupled with automated threat detection systems.

These emerging patterns emphasize how the NVIDIA HGX H100 platform has the potential to maintain its leadership position when it comes to innovation, thus driving substantial strides forward across different domains.

Reference sources

Frequently Asked Questions (FAQs)

Q: What is the NVIDIA HGX H100?

A: This is an introduction to the HGX H100, an AI and HPC powerhouse that integrates eight Tensor Core GPUs of the H100 model, which are designed to speed up complex computational tasks and AI workloads.

Q: How does the HGX H100 4-GPU platform benefit high-performance computing?

A: The HGX H100 4-GPU platform improves upon high-performance computing by offering enhanced parallelism and quick data processing through advanced architecture and new hardware acceleration for collective operations.

Q: What connectivity features are included in the NVIDIA HGX H100?

A: The HGX H100’s eight tensor core GPUs are fully connected using NVLink switches, which provide high-speed interconnects and reduced latency, enhancing performance overall.

Q: How does the HGX H100 handle AI tasks compared to previous models like the NVIDIA HGX A100?

A: For example, it has outdone itself by surpassing in terms of better processing power as compared to its predecessors such as NVIDIA HGX A100, improved NVLink ports, and additional support for in-network operations with multicast and NVIDIA SHARP in-network reductions, making it better suited for use in AI applications or High-Performance Computing (HPC) platforms.

Q: What is the role of NVIDIA SHARP in the HGX H100?

A: It is also crucial to include some features that can help in offloading collective communication tasks from GPUs into networks, such as NVIDIA SHARP In-Network Reductions, which can reduce the load on GPUs, hence increasing efficiency within the whole system by reducing the computation burden on individual devices.

Q: Can you explain the H100 SXM5 configuration in the HGX H100?

A: The latest generation GPUs optimized for low latency and throughput have a design that ensures the highest possible power and thermal efficiency when facing heavy computational loads found under demanding computational tasks, as is the case with the H100 SXM5 configuration of the HGX H100 platform.

Q: How does the NVIDIA HGX H100 compare to a traditional GPU server?

A: The NVIDIA HGX H100 offers significantly higher performance, scalability, and flexibility compared to a traditional GPU server due to its advanced NVLink switch connectivity, H100 GPUs, and in-network reductions with NVIDIA SHARP implementation.

Q: What are the key advantages of using the NVIDIA HGX H100 for AI applications?

A: In terms of AI applications, the key benefits offered by the NVIDIA HGX H100 include speed-ups during model training and inference, efficient handling of large datasets better parallelism through eight Tensor Core GPUs, and improved NVLink connectivity.

Q: How does the HGX H100 support NVIDIA’s mission to accelerate AI and high-performance computing?

A: This state-of-the-art infrastructure supports accelerated AI research as well as high-performance computing through the provision of powerful GPUs and advanced network features, among other things, such as software optimizations that enable researchers and developers to do complicated calculations on their computers faster than ever before.

Q: What role does the generation NVLink technology play in the HGX H100?

A: Generation NVLink technology plays an important function in this regard since it consists of high-bandwidth, low-latency interconnects that connect these GPUS, making faster communication between them possible and thereby improving their overall efficiency in processing AI/ML workloads.

Related Products:

-

NVIDIA MMA4Z00-NS400 Compatible 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module

$550.00

NVIDIA MMA4Z00-NS400 Compatible 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module

$550.00

-

NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

-

NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

-

NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$900.00

NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$900.00

-

NVIDIA MMS4X00-NM-FLT Compatible 800G Twin-port OSFP 2x400G Flat Top PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$1199.00

NVIDIA MMS4X00-NM-FLT Compatible 800G Twin-port OSFP 2x400G Flat Top PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$1199.00

-

NVIDIA MMS4X00-NS400 Compatible 400G OSFP DR4 Flat Top PAM4 1310nm MTP/MPO-12 500m SMF FEC Optical Transceiver Module

$700.00

NVIDIA MMS4X00-NS400 Compatible 400G OSFP DR4 Flat Top PAM4 1310nm MTP/MPO-12 500m SMF FEC Optical Transceiver Module

$700.00

-

NVIDIA(Mellanox) MMA1T00-HS Compatible 200G Infiniband HDR QSFP56 SR4 850nm 100m MPO-12 APC OM3/OM4 FEC PAM4 Optical Transceiver Module

$139.00

NVIDIA(Mellanox) MMA1T00-HS Compatible 200G Infiniband HDR QSFP56 SR4 850nm 100m MPO-12 APC OM3/OM4 FEC PAM4 Optical Transceiver Module

$139.00

-

NVIDIA MFP7E10-N010 Compatible 10m (33ft) 8 Fibers Low Insertion Loss Female to Female MPO Trunk Cable Polarity B APC to APC LSZH Multimode OM3 50/125

$47.00

NVIDIA MFP7E10-N010 Compatible 10m (33ft) 8 Fibers Low Insertion Loss Female to Female MPO Trunk Cable Polarity B APC to APC LSZH Multimode OM3 50/125

$47.00

-

NVIDIA MCP7Y00-N003-FLT Compatible 3m (10ft) 800G Twin-port OSFP to 2x400G Flat Top OSFP InfiniBand NDR Breakout DAC

$260.00

NVIDIA MCP7Y00-N003-FLT Compatible 3m (10ft) 800G Twin-port OSFP to 2x400G Flat Top OSFP InfiniBand NDR Breakout DAC

$260.00

-

NVIDIA MCP7Y70-H002 Compatible 2m (7ft) 400G Twin-port 2x200G OSFP to 4x100G QSFP56 Passive Breakout Direct Attach Copper Cable

$155.00

NVIDIA MCP7Y70-H002 Compatible 2m (7ft) 400G Twin-port 2x200G OSFP to 4x100G QSFP56 Passive Breakout Direct Attach Copper Cable

$155.00

-

NVIDIA MCA4J80-N003-FTF Compatible 3m (10ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable, Flat top on one end and Finned top on other

$600.00

NVIDIA MCA4J80-N003-FTF Compatible 3m (10ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable, Flat top on one end and Finned top on other

$600.00

-

NVIDIA MCP7Y10-N002 Compatible 2m (7ft) 800G InfiniBand NDR Twin-port OSFP to 2x400G QSFP112 Breakout DAC

$190.00

NVIDIA MCP7Y10-N002 Compatible 2m (7ft) 800G InfiniBand NDR Twin-port OSFP to 2x400G QSFP112 Breakout DAC

$190.00