Introduction

The NVIDIA GB200 is a highly integrated supercomputing module based on NVIDIA’s Blackwell architecture. This module combines two NVIDIA B200 Tensor Core GPUs and one NVIDIA Grace CPU, aiming to deliver unprecedented AI performance.

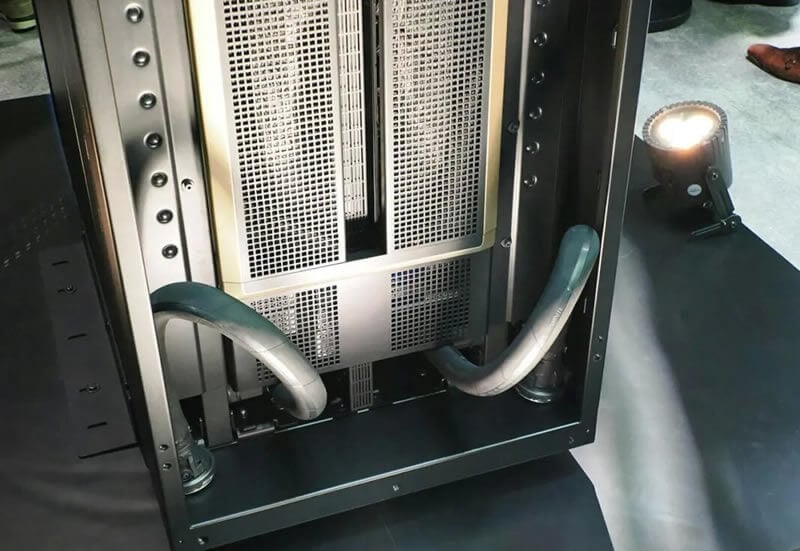

Liquid cooling is moving toward wide adoption, with participants across the industry working together to deploy it. We believe that as AI-generated content (AIGC) drives up the power consumption of AI computing chips, servers urgently need more efficient cooling. Global AI chip leader NVIDIA (whose new GB200 uses liquid cooling) and AI server manufacturer Supermicro (which plans to expand its liquid-cooled rack capacity in Q2 FY24) are both backing liquid cooling. Industry collaboration is also advancing in China: in June 2023, the country's three major telecom operators released a white paper on liquid cooling technology that envisions liquid cooling being applied in more than 50% of projects from 2025 onward. In short, liquid cooling is being promoted by upstream chip manufacturers, server manufacturers, downstream IDC providers, and telecom operators, which is expected to boost demand for liquid cooling equipment and for new liquid-cooled data centers. According to Dell'Oro Group's forecast, the global liquid cooling market will approach $2 billion by 2027.

Basic Introduction to GH200 and GB200

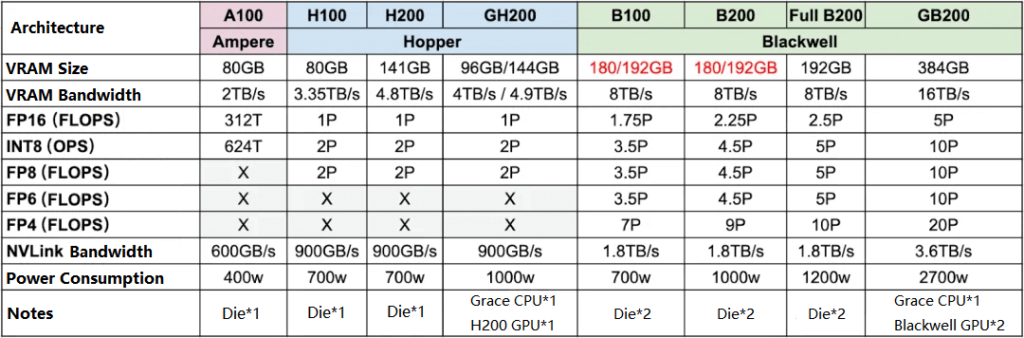

Comparing the parameters of GH200 and GB200 may provide a clearer and more intuitive understanding of GB200.

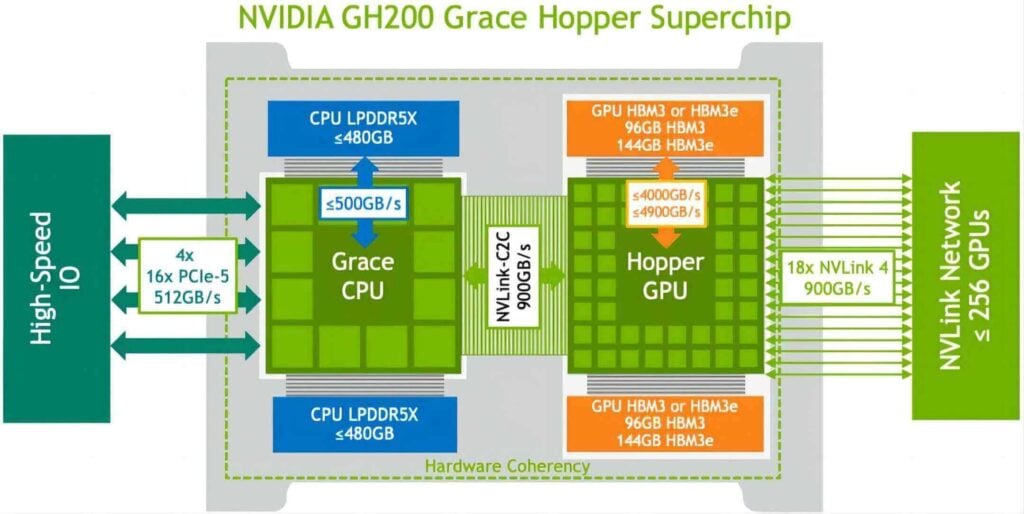

The GH200, released by NVIDIA in 2023, pairs one Grace CPU with one Hopper GPU. The GPU carries up to 96GB or 144GB of memory. The Grace CPU and Hopper GPU are interconnected via NVLink-C2C at 900GB/s, and the module's power consumption is up to 1000W.

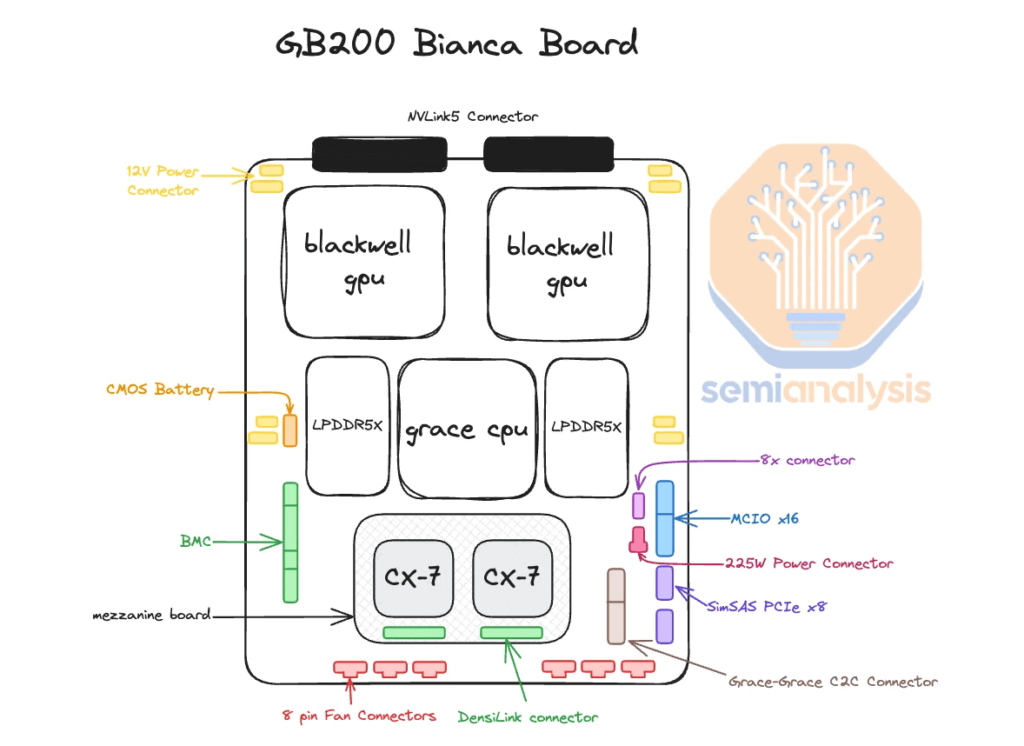

On March 18, 2024, NVIDIA introduced its most powerful AI chip to date, the GB200, at its annual GTC conference. NVIDIA claims the GB200 delivers six times the computing power of the H100 and, for specific workloads, up to 30 times the H100's performance, while reducing energy consumption by up to 25 times. Unlike the GH200, the GB200 consists of one Grace CPU and two Blackwell GPUs, doubling the GPU computing power and memory. The CPU and GPUs are still interconnected via NVLink-C2C at 900GB/s, and the module's power consumption is 2700W.

Given its high power consumption of 2700W, the GB200 requires efficient cooling. The GB200 NVL72 is a multi-node, liquid-cooled, rack-scale system designed for highly compute-intensive workloads.

Liquid-Cooled Servers and Cabinets from Various Manufacturers

The GB200 primarily comes in two cabinet configurations:

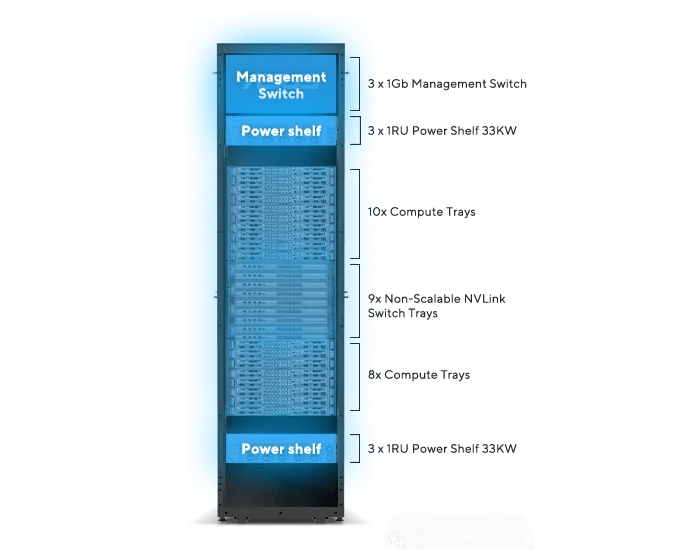

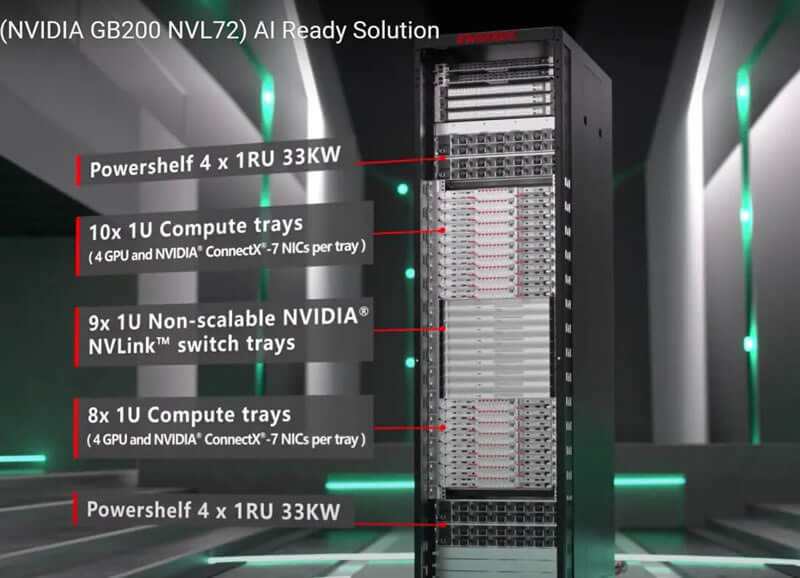

GB200 NVL72 (10+9+8 layout)

GB200 NVL36x2 (5+9+4 layout)
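The layout strings can be read as compute trays above the switches, then NVSwitch trays, then compute trays below; this reading matches the NVL72 rack described in the NVIDIA section (ten compute nodes, nine NVSwitch trays, eight compute nodes). Assuming that reading, and two Bianca boards per compute tray with one Grace CPU and two Blackwell GPUs each, the following minimal Python sketch derives the CPU and GPU counts per rack. The helper `rack_counts` and the constant names are hypothetical illustrations, not an NVIDIA tool.

```python
from dataclasses import dataclass

# Assumed per-tray composition: 2 Bianca boards, each with 1 Grace CPU + 2 Blackwell GPUs.
BIANCA_PER_TRAY = 2
CPUS_PER_BIANCA = 1
GPUS_PER_BIANCA = 2


@dataclass
class RackCounts:
    compute_trays: int
    switch_trays: int
    cpus: int
    gpus: int


def rack_counts(layout: str) -> RackCounts:
    """Parse an 'upper+switch+lower' layout string such as '10+9+8' (hypothetical helper)."""
    upper, switches, lower = (int(part) for part in layout.split("+"))
    compute = upper + lower
    boards = compute * BIANCA_PER_TRAY
    return RackCounts(
        compute_trays=compute,
        switch_trays=switches,
        cpus=boards * CPUS_PER_BIANCA,
        gpus=boards * GPUS_PER_BIANCA,
    )


nvl72 = rack_counts("10+9+8")   # a single NVL72 rack
nvl36 = rack_counts("5+9+4")    # one of the two racks in an NVL36x2 pair
print(nvl72)
print(nvl36, "x2 racks ->", 2 * nvl36.gpus, "GPUs")
```

Both configurations end up with 72 GPUs and 36 CPUs; NVL36x2 simply spreads them over two racks, each with its own set of nine NVSwitch trays.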

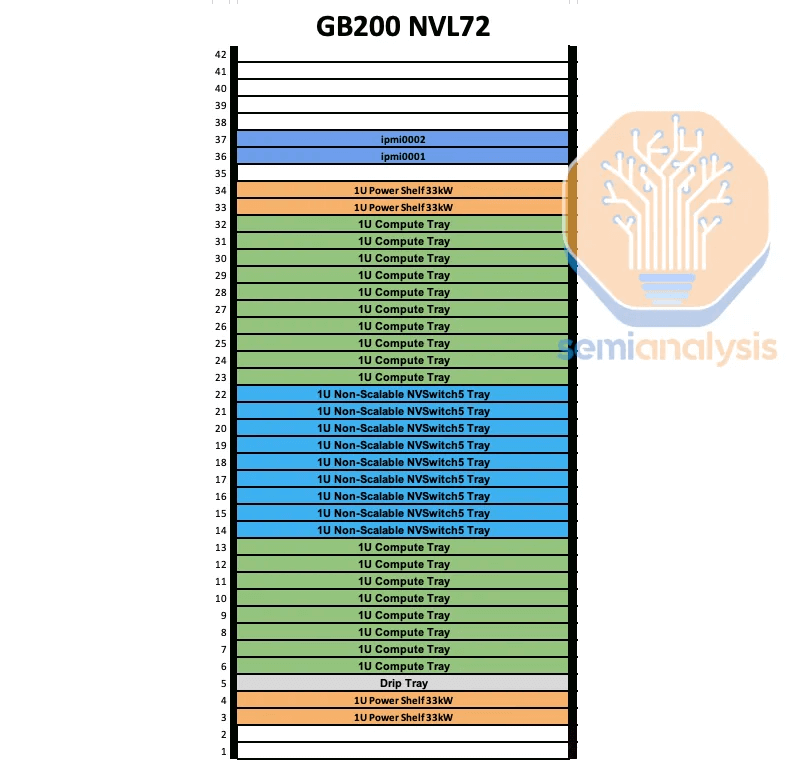

GB200 NVL72 Cabinet

The GB200 NVL72 cabinet draws approximately 120kW in total. By comparison, standard CPU cabinets support up to 12kW per rack, and higher-density air-cooled H100 cabinets typically support around 40kW per rack. Liquid cooling is generally recommended for any single cabinet above 30kW, which is why the GB200 NVL72 cabinet uses a liquid cooling solution.
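The thresholds in this paragraph can be expressed as a rule of thumb. The sketch below assumes the 30kW figure is a simple cut-off (the text calls it a recommendation, not a hard limit) and compares each rack type's density with the NVL72; the function name `recommend_cooling` is hypothetical.

```python
# Rule-of-thumb figures quoted in the text, in kW per rack.
STANDARD_CPU_RACK_KW = 12
AIR_COOLED_H100_RACK_KW = 40
NVL72_RACK_KW = 120
LIQUID_COOLING_THRESHOLD_KW = 30


def recommend_cooling(rack_kw: float) -> str:
    """Return the approach suggested by the 'liquid above 30 kW' rule of thumb."""
    return "liquid" if rack_kw > LIQUID_COOLING_THRESHOLD_KW else "air"


for name, kw in [("standard CPU rack", STANDARD_CPU_RACK_KW),
                 ("air-cooled H100 rack", AIR_COOLED_H100_RACK_KW),
                 ("GB200 NVL72", NVL72_RACK_KW)]:
    print(f"{name}: {kw} kW, NVL72 is {NVL72_RACK_KW / kw:.1f}x denser, "
          f"rule of thumb suggests {recommend_cooling(kw)} cooling")
```

The rule also flags the 40kW air-cooled H100 rack, which fits the text's wording: above 30kW liquid cooling is recommended, even though air cooling at around 40kW is still achievable.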

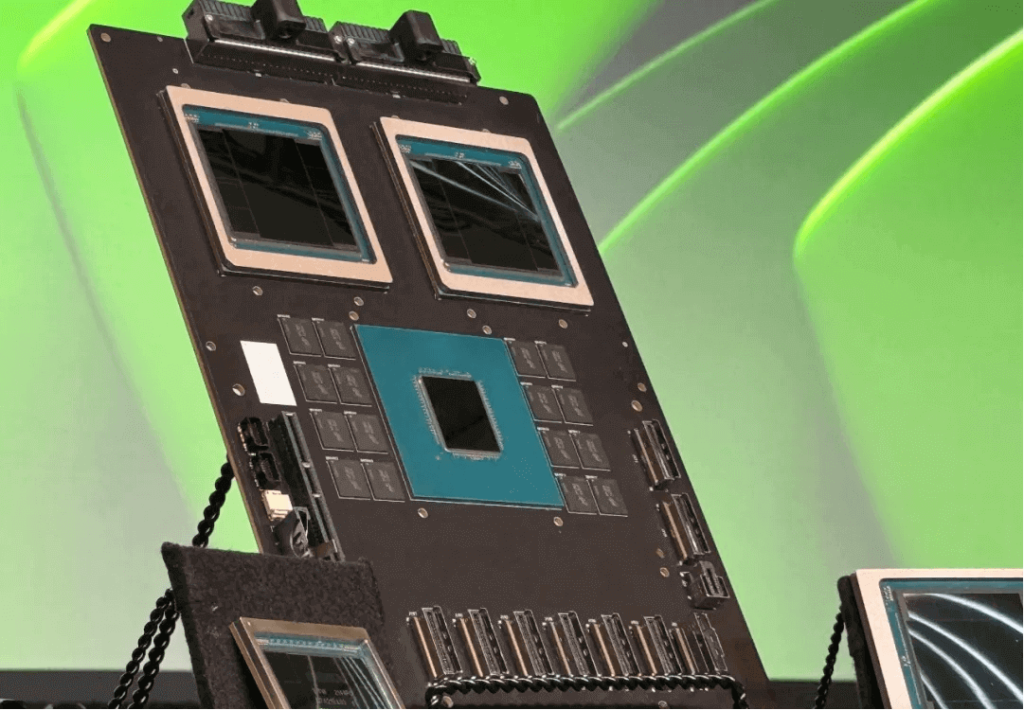

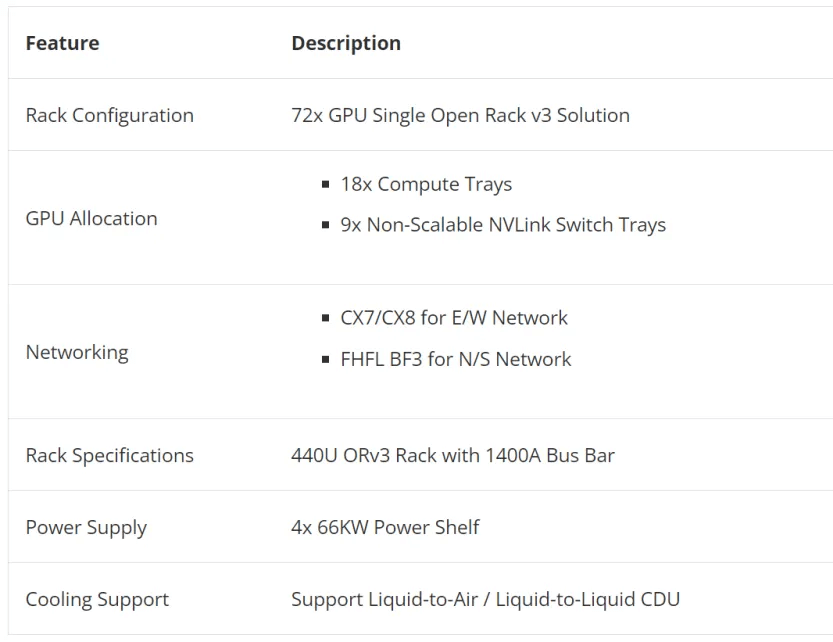

The GB200 NVL72 cabinet consists of 18 1U compute nodes and 9 NVSwitch trays. Each compute node contains 2 Bianca boards, and each Bianca board carries 1 Grace CPU and 2 Blackwell GPUs. Each NVSwitch tray has two 28.8Tb/s NVSwitch5 ASICs.

This cabinet configuration is currently rarely deployed because most data center infrastructure, even with direct liquid cooling (DLC), cannot support such high rack density.

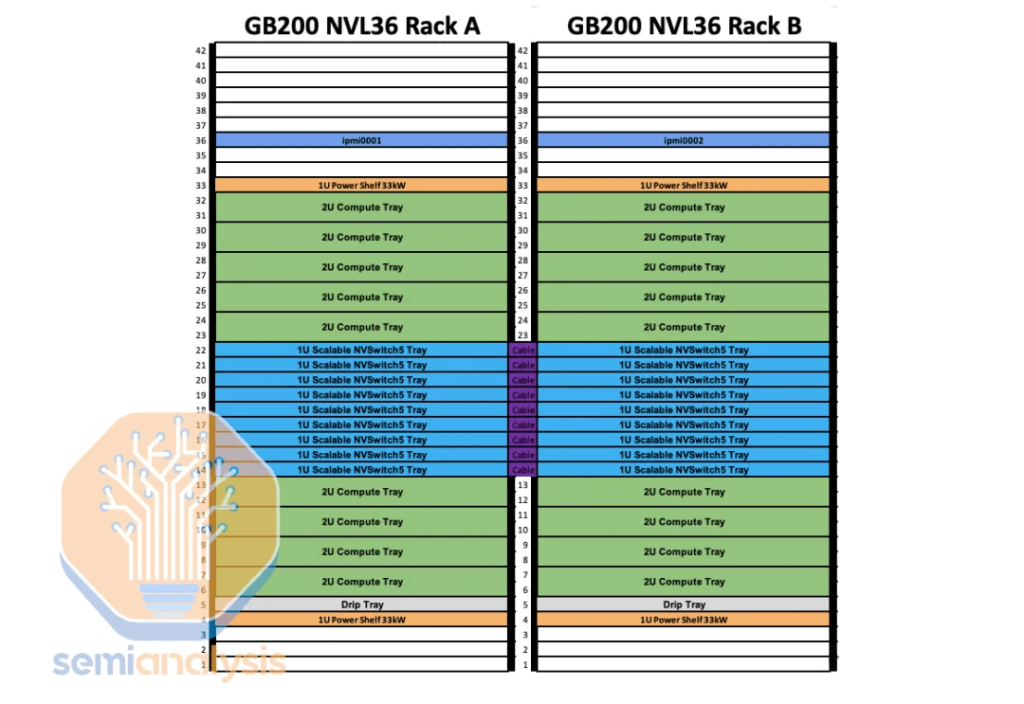

The GB200 NVL36x2 cabinet consists of two interconnected cabinets and is expected to be the most common GB200 rack configuration. Each rack contains 18 Grace CPUs and 36 Blackwell GPUs, and the two cabinets are joined by a non-blocking, fully connected interconnect so that all 72 GPUs can communicate as one NVL72 domain. Each compute node is 2U high and contains 2 Bianca boards. Each NVSwitch tray has two 28.8Tb/s NVSwitch5 ASICs, each providing 14.4Tb/s to the backplane and 14.4Tb/s to the front panel. Each NVSwitch tray also has 18 1.6T dual-port OSFP cages, which connect it horizontally to the paired NVL36 rack.
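The NVSwitch figures are internally consistent, which a short calculation confirms: the front-panel half of the two ASICs (28.8 Tb/s per ASIC, split 14.4/14.4) matches the capacity of the 18 OSFP cages on each tray. The sketch works in Gb/s to keep the arithmetic in integers; the constant names are illustrative, and the nine-tray total is simply the aggregate cage capacity of one rack.

```python
# Figures from the text, expressed in Gb/s so everything stays an integer.
ASICS_PER_SWITCH_TRAY = 2
ASIC_TOTAL_GBPS = 28_800        # 28.8 Tb/s per NVSwitch5 ASIC
ASIC_BACKPLANE_GBPS = 14_400    # toward the rack's own compute trays
ASIC_FRONT_GBPS = 14_400        # toward the front-panel OSFP cages
OSFP_CAGES_PER_TRAY = 18
OSFP_CAGE_GBPS = 1_600          # 1.6T dual-port OSFP cage
SWITCH_TRAYS_PER_RACK = 9

# Each ASIC splits its bandwidth evenly between backplane and front panel.
assert ASIC_BACKPLANE_GBPS + ASIC_FRONT_GBPS == ASIC_TOTAL_GBPS

front_per_tray = ASICS_PER_SWITCH_TRAY * ASIC_FRONT_GBPS
cages_per_tray = OSFP_CAGES_PER_TRAY * OSFP_CAGE_GBPS
cages_per_rack = SWITCH_TRAYS_PER_RACK * cages_per_tray

print(f"front-panel bandwidth per tray: {front_per_tray / 1000} Tb/s")
print(f"OSFP cage capacity per tray:    {cages_per_tray / 1000} Tb/s")
print(f"cage capacity of nine trays:    {cages_per_rack / 1000} Tb/s")
```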

At Computex 2024 (the Taipei International Computer Show), the GB200 NVL72 was shown publicly. Most manufacturers, including Wiwynn, ASRock, GIGABYTE, Supermicro, and Inventec, displayed single-cabinet configurations built from 1U compute nodes. GIGABYTE, Inventec, and Pegatron also showed 2U compute node servers, referring to this configuration as GB200 NVL36.

Next, we will introduce the liquid-cooled servers and cabinets from various manufacturers.

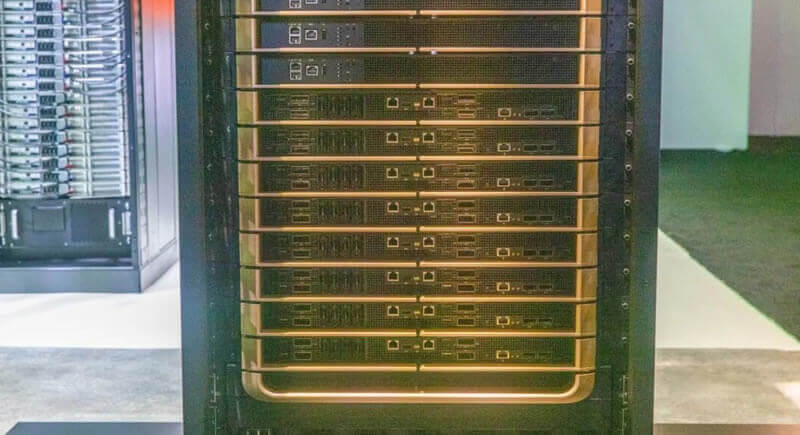

NVIDIA

At GTC 2024, NVIDIA showcased a DGX GB200 NVL72 rack, fully interconnected via NVLink. The entire cabinet weighs approximately 1.36 metric tons (3,000 pounds). It is an upgrade of the Grace Hopper Superchip rack system NVIDIA displayed in November 2023, with more than twice as many GPUs.

Flagship System

The flagship system is a single rack drawing 120kW, whereas most data centers can support at most 60kW per rack. For sites that cannot deploy a single 120kW rack, or an 8-rack SuperPOD approaching 1MW, the NVL36x2 cabinet configuration is the alternative.
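The figures in this paragraph can be checked with simple arithmetic: eight 120kW racks come to 960kW, the "approaching 1MW" SuperPOD, and splitting one NVL72's load evenly across two NVL36 racks puts each right at the 60kW limit. The even split is an idealization: going by the 5+9+4 layout, each NVL36 rack carries its own nine NVSwitch trays, so real per-rack power will be somewhat higher.

```python
NVL72_RACK_KW = 120
TYPICAL_DC_LIMIT_KW = 60
SUPERPOD_RACKS = 8

superpod_kw = SUPERPOD_RACKS * NVL72_RACK_KW

# Idealized even split of one NVL72's load across the two NVL36 racks;
# this ignores the extra NVSwitch trays that an NVL36x2 pair adds.
per_nvl36_rack_kw = NVL72_RACK_KW / 2

print(f"8-rack SuperPOD: {superpod_kw} kW (~{superpod_kw / 1000:.2f} MW)")
print(f"NVL36x2, idealized per rack: {per_nvl36_rack_kw} kW "
      f"(typical limit {TYPICAL_DC_LIMIT_KW} kW)")
```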

At the top of the cabinet are two 52-port Spectrum switches (48 Gigabit RJ45 ports plus 4 QSFP28 100Gbps aggregation ports). These switches carry management traffic and other data from the compute nodes, NVLink switches, and power shelves that make up the system.

Below these switches are three of the cabinet's six power shelves; the other three sit at the bottom. Together they supply the 120kW cabinet. It is estimated that six 415V, 60A PSUs are enough to meet this requirement, with some redundancy built into the design, and the operating current of these supplies may exceed 60A. Each device draws power from a bus bar at the back of the cabinet.
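The estimate of six 415V, 60A supplies can be sanity-checked with the balanced three-phase power formula P = √3 × V × I × PF. The sketch assumes each supply is a balanced three-phase feed at 415V line-to-line, unity power factor, and no continuous-load derating; all three are assumptions, not figures from the text.

```python
import math

LINE_VOLTAGE_V = 415   # line-to-line voltage, assumed three-phase
CURRENT_A = 60
FEEDS = 6
RACK_LOAD_KW = 120


def three_phase_kw(volts: float, amps: float, power_factor: float = 1.0) -> float:
    """Real power of a balanced three-phase circuit, sqrt(3) * V * I * PF, in kW."""
    return math.sqrt(3) * volts * amps * power_factor / 1000


per_feed = three_phase_kw(LINE_VOLTAGE_V, CURRENT_A)
total = FEEDS * per_feed
print(f"per feed: {per_feed:.1f} kW, six feeds: {total:.1f} kW, "
      f"capacity vs. {RACK_LOAD_KW} kW load: {total / RACK_LOAD_KW:.2f}x")
```

Under those assumptions, six feeds provide a little over twice the 120kW load, which is consistent with the redundancy the text mentions.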

Below the upper three power shelves are ten 1U compute nodes. The front of each node carries four InfiniBand NICs (the four QSFP-DD cages on the left and center of the front panel), which form the compute network. The nodes are also equipped with BlueField-3 DPUs, which reportedly handle storage network traffic. Besides several management ports, there are four E1.S drive bays.

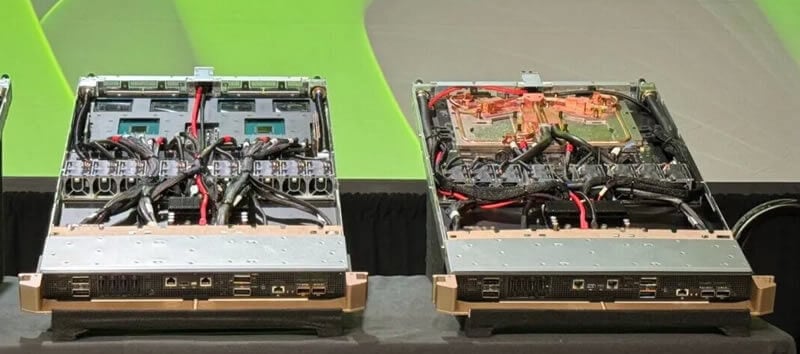

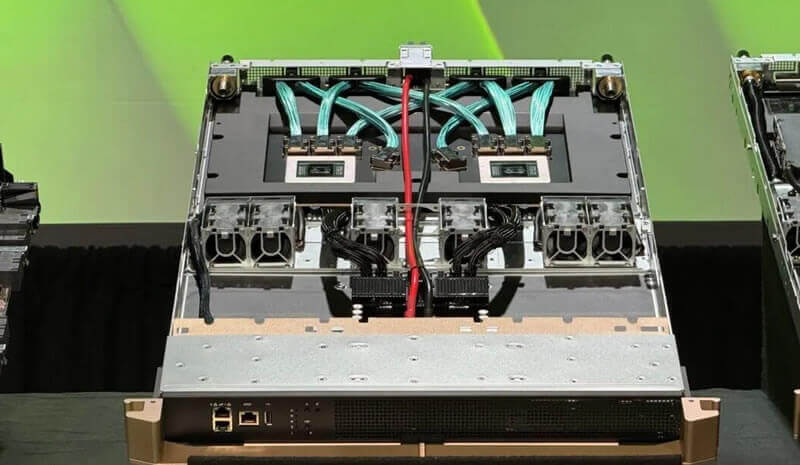

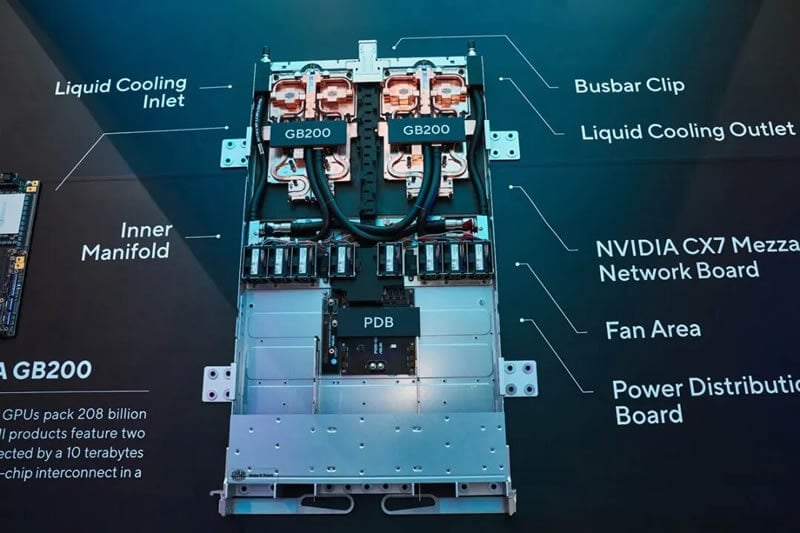

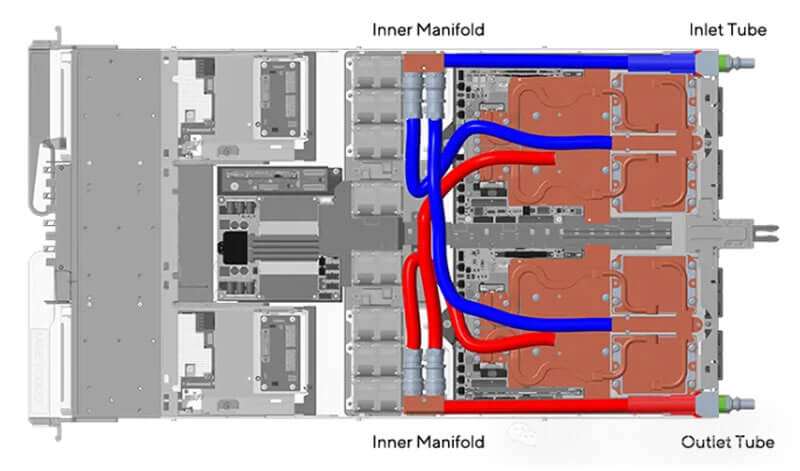

Each compute node contains two Grace Arm CPUs, with each Grace CPU connected to two Blackwell GPUs. The power consumption of each node ranges between 5.4kW and 5.7kW, with most of the heat dissipated through direct-to-chip (DTC) liquid cooling.
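Two 2,700W GB200 superchips per node already account for 5.4kW, the low end of the quoted range. Multiplying the range by the 18 compute nodes in an NVL72 rack gives a rough picture of how the 120kW budget divides. This is back-of-the-envelope arithmetic that assumes every node runs at the same power and treats 120kW as an exact budget.

```python
COMPUTE_TRAYS = 18                 # 10 above + 8 below the NVSwitch trays
NODE_POWER_W = (5_400, 5_700)      # per-node range quoted in the text
RACK_BUDGET_W = 120_000            # approximate NVL72 rack total

# (node watts, all compute trays in watts, watts left for everything else)
budget = [
    (node_w, COMPUTE_TRAYS * node_w, RACK_BUDGET_W - COMPUTE_TRAYS * node_w)
    for node_w in NODE_POWER_W
]

for node_w, compute_w, remainder_w in budget:
    print(f"node {node_w / 1000} kW -> compute trays {compute_w / 1000} kW, "
          f"about {remainder_w / 1000} kW left for NVSwitch trays, "
          "management switches and conversion losses")
```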

NVSwitches

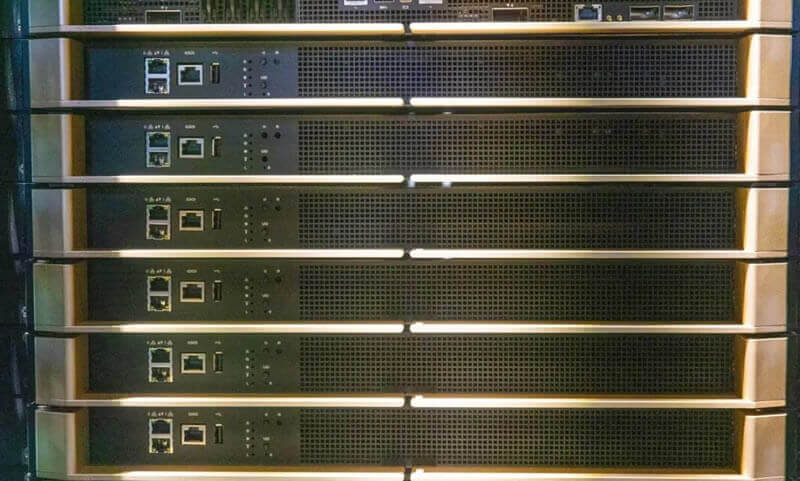

Below the ten compute nodes are nine NVSwitch trays. The gold components on the front panel are handles for inserting and removing the trays.

Each NVLink switch tray contains two NVLink switch chips, which are also liquid-cooled.

At the bottom of the cabinet, below the nine NVSwitches, are eight 1U compute nodes.

At the back of the cabinet, a blind-mate bus bar supplies power, and blind-mate connectors deliver coolant and NVLink connections to each device. Each component needs some freedom of movement so that the blind-mate connections seat reliably.

According to Jensen Huang, coolant enters the rack at 2L/s with an inlet temperature of 25°C and leaves about 20°C warmer, at roughly 45°C.
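The flow rate and temperature rise determine how much heat the loop can carry, via Q = ṁ × c_p × ΔT. The sketch assumes plain water (density about 1kg/L, specific heat 4186 J/(kg·K)) and reads the quoted figure as a 20°C rise; both are assumptions, and the function names are illustrative.

```python
WATER_DENSITY_KG_PER_L = 1.0        # approximate, near 25 °C
WATER_CP_J_PER_KG_K = 4186          # specific heat of liquid water


def heat_removed_kw(flow_l_per_s: float, delta_t_k: float) -> float:
    """Q = m_dot * c_p * dT for a water loop, returned in kW."""
    mass_flow_kg_per_s = flow_l_per_s * WATER_DENSITY_KG_PER_L
    return mass_flow_kg_per_s * WATER_CP_J_PER_KG_K * delta_t_k / 1000


def delta_t_for_load(load_kw: float, flow_l_per_s: float) -> float:
    """Temperature rise (K) needed to carry a given heat load at a given flow."""
    mass_flow_kg_per_s = flow_l_per_s * WATER_DENSITY_KG_PER_L
    return load_kw * 1000 / (mass_flow_kg_per_s * WATER_CP_J_PER_KG_K)


print(f"2 L/s with a 20 K rise: {heat_removed_kw(2, 20):.0f} kW")
print(f"rise needed for 120 kW at 2 L/s: {delta_t_for_load(120, 2):.1f} K")
```

At the stated flow and rise, a water loop could carry roughly 167kW, comfortably above the 120kW rack load; carrying exactly 120kW at 2L/s needs a rise of only about 14°C. Glycol mixtures have a lower specific heat than water, so a glycol loop would have less margin.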

NVIDIA states that using copper NVLink cabling at the back of the cabinet, instead of fiber optics and their optical transceivers, saves approximately 20kW of power per cabinet. The total length of the copper cables is estimated at more than 2 miles (3.2 kilometers), which explains why the NVLink switches sit in the middle of the cabinet: that position minimizes cable length.

Supermicro

Supermicro NVIDIA MGX™ Systems

1U NVIDIA GH200 Grace Hopper™ Superchip Systems

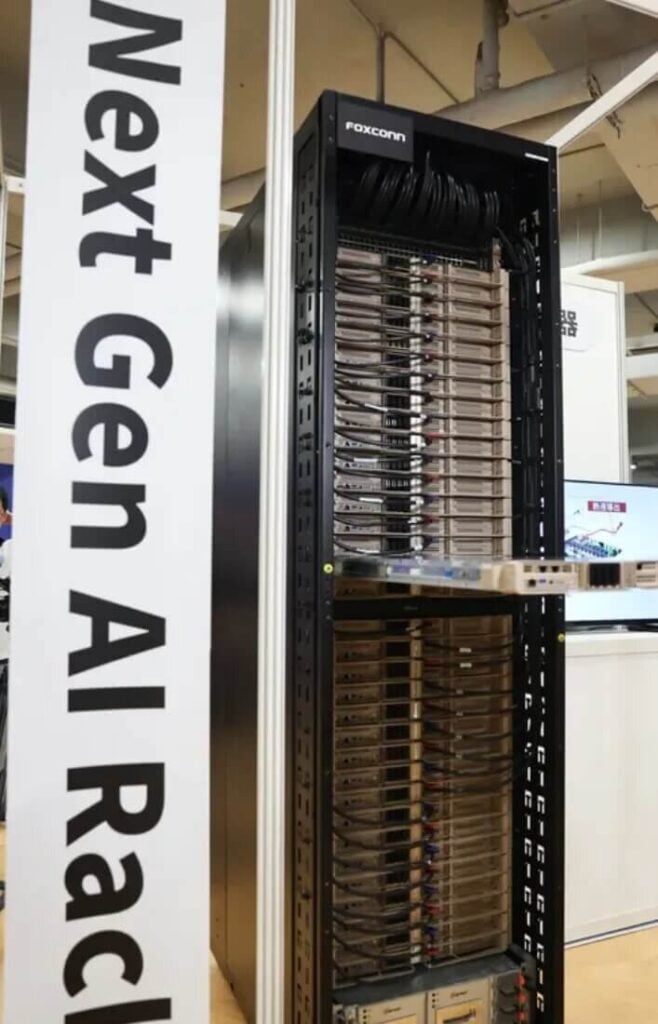

Foxconn

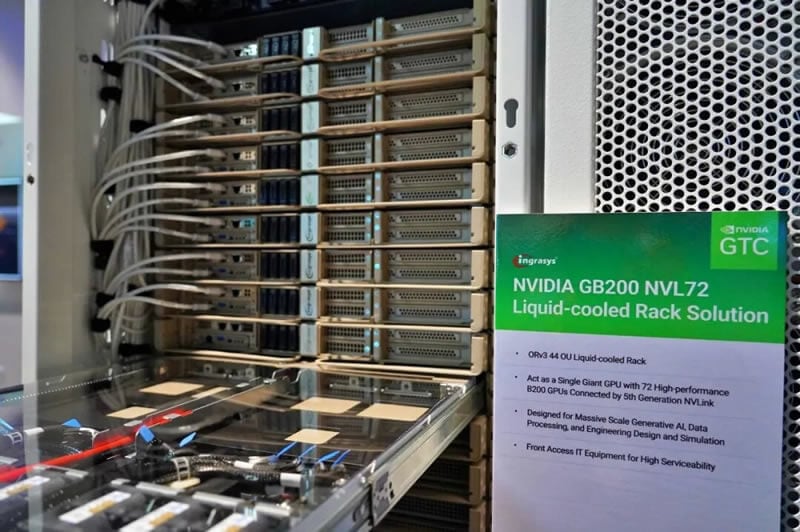

On March 18, 2024, at NVIDIA’s GTC conference, Foxconn subsidiary Ingrasys unveiled the NVL72 liquid-cooled server, which uses NVIDIA’s GB200 chip. This server integrates 72 NVIDIA Blackwell GPUs and 36 NVIDIA Grace CPUs.

Jensen Huang has a close relationship with Foxconn, and the two companies have collaborated on servers and other products many times. Foxconn's latest AI server, the DGX GB200, is scheduled to enter mass production in the second half of the year. GB200 series products will ship as complete racks, with estimated orders of up to 50,000 cabinets. Foxconn currently has three major new products in the DGX GB200 system cabinet series (DGX NVL72, NVL32, and HGX B200), making it a big winner of this platform transition.

NVIDIA GB200 NVL72, the new generation of AI liquid-cooled rack solution, combines 36 NVIDIA GB200 Grace Blackwell Superchips, comprising 72 Blackwell GPUs and 36 Grace CPUs. They are interconnected via fifth-generation NVIDIA NVLink so that they act as a single large GPU.

Quanta Cloud Technology (QCT)

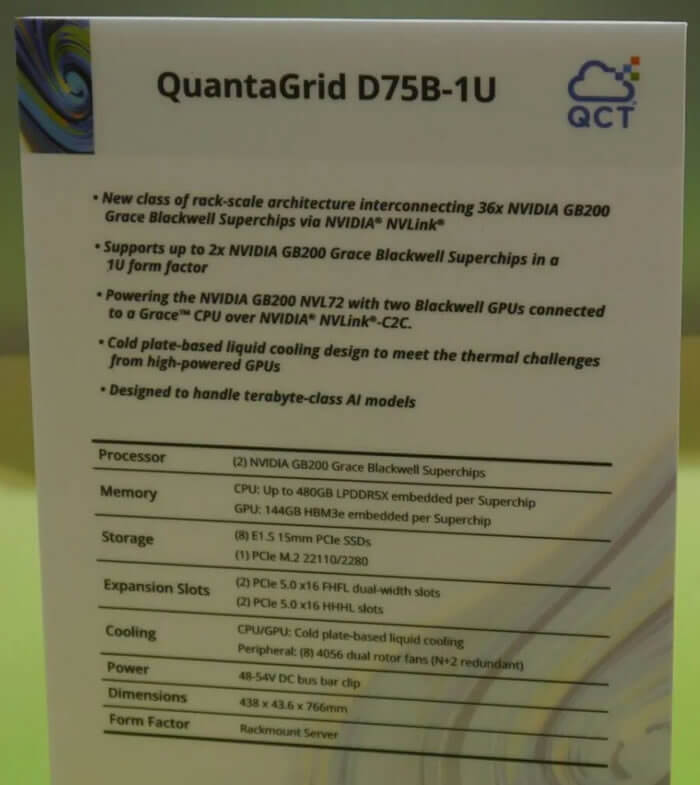

At the event, QCT showcased its 1U model, the QuantaGrid D75B-1U. Within the NVIDIA GB200 NVL72 system framework, this model allows a single cabinet to hold 72 GPUs. The D75B-1U is equipped with two GB200 Grace Blackwell Superchips. QCT highlighted that the CPU can access 480GB of LPDDR5X memory and the GPU is equipped with 144GB of HBM3e high-bandwidth memory, both cooled by cold plates. For storage, this 1U server holds eight 15mm E1.S PCIe SSDs and one M.2 2280 PCIe SSD. For PCIe expansion, the D75B-1U accommodates two dual-width, full-height, full-length cards and two half-height, half-length cards, all PCIe 5.0 x16.
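Taking QCT's figures at face value, and assuming the 480GB applies to each Grace CPU and the 144GB to each Blackwell GPU, the memory in one D75B-1U node and in a full rack of 18 such nodes works out as follows. This is arithmetic on the quoted numbers, not a QCT specification.

```python
SUPERCHIPS_PER_NODE = 2
CPUS_PER_SUPERCHIP = 1
GPUS_PER_SUPERCHIP = 2
CPU_LPDDR5X_GB = 480      # QCT figure, assumed to be per Grace CPU
GPU_HBM3E_GB = 144        # QCT figure, assumed to be per Blackwell GPU
NODES_PER_RACK = 18       # 1U compute nodes in an NVL72 rack

node_lpddr = SUPERCHIPS_PER_NODE * CPUS_PER_SUPERCHIP * CPU_LPDDR5X_GB
node_hbm = SUPERCHIPS_PER_NODE * GPUS_PER_SUPERCHIP * GPU_HBM3E_GB

print(f"per node: {node_lpddr} GB LPDDR5X, {node_hbm} GB HBM3e")
print(f"per rack: {NODES_PER_RACK * node_lpddr} GB LPDDR5X, "
      f"{NODES_PER_RACK * node_hbm} GB HBM3e")
```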

Wiwynn

As an important NVIDIA partner, Wiwynn is one of the first companies to comply with the NVIDIA GB200 NVL72 standard. At GTC 2024, Wiwynn showcased its latest AI computing solutions, built around the newly released NVIDIA GB200 Grace Blackwell Superchip, which supports NVIDIA's latest Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms. The lineup includes a new rack-level, liquid-cooled AI server rack powered by the NVIDIA GB200 NVL72 system. Wiwynn draws on its strengths in high-speed data transmission, energy efficiency, system integration, and advanced cooling to meet emerging performance, scalability, and diversity demands in the data center ecosystem.

Wiwynn also launched the UMS100 (Universal Liquid-Cooling Management System), an advanced rack-level liquid cooling management system designed to meet the growing demand for high computing power and efficient cooling mechanisms in the emerging generative AI (GenAI) era. This innovative system offers a range of functions, including real-time monitoring, cooling energy optimization, rapid leak detection, and containment. It is also designed to integrate smoothly with existing data center management systems through the Redfish interface. It supports industry-standard protocols and is compatible with various Cooling Distribution Units (CDUs) and side cabinets.
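The article does not say which Redfish resources UMS100 exposes. As a hedged illustration of what consuming Redfish data looks like, the sketch below parses a payload shaped like the standard DMTF Redfish `Thermal` resource (a `Temperatures` array with `ReadingCelsius` and `UpperThresholdCritical`) and flags sensors at or above their critical threshold. The payload, sensor names, and the function `critical_sensors` are hypothetical; a real integration would fetch JSON from the device's Redfish service over HTTPS, and newer Redfish releases also define dedicated schemas for liquid-cooling equipment such as CDUs.

```python
import json
from typing import Any

# Hypothetical payload shaped like a Redfish Chassis Thermal resource.
# Sensor names and values are made up for this example.
SAMPLE = """
{
  "@odata.id": "/redfish/v1/Chassis/1/Thermal",
  "Temperatures": [
    {"Name": "Coolant Inlet", "ReadingCelsius": 25, "UpperThresholdCritical": 35},
    {"Name": "Coolant Outlet", "ReadingCelsius": 47, "UpperThresholdCritical": 45},
    {"Name": "GPU0 Cold Plate", "ReadingCelsius": null, "UpperThresholdCritical": 85}
  ]
}
"""


def critical_sensors(thermal: dict[str, Any]) -> list[str]:
    """Names of sensors whose reading meets or exceeds UpperThresholdCritical."""
    alerts = []
    for sensor in thermal.get("Temperatures", []):
        reading = sensor.get("ReadingCelsius")
        limit = sensor.get("UpperThresholdCritical")
        # Skip sensors that report no reading or no threshold.
        if reading is not None and limit is not None and reading >= limit:
            alerts.append(sensor.get("Name", "unknown"))
    return alerts


print(critical_sensors(json.loads(SAMPLE)))
```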

ASUS

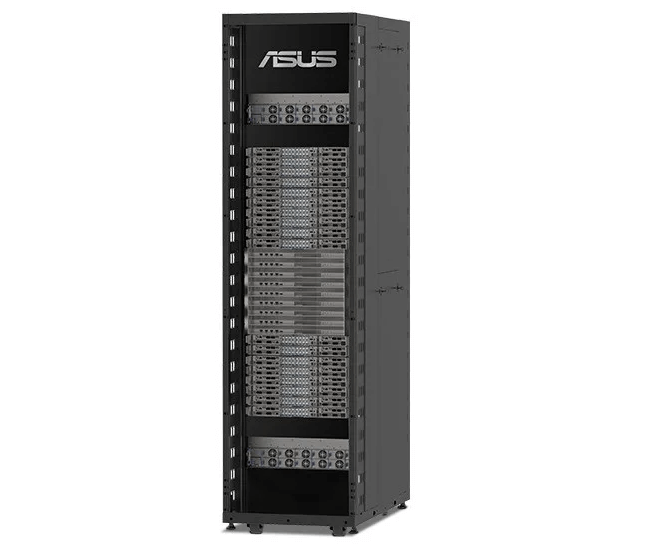

At Computex 2024 in Taipei, ASUS unveiled several AI servers: new NVIDIA Blackwell servers (B100, B200, and GB200 systems), AMD MI300X servers, Intel Xeon 6 servers, and AMD EPYC Turin servers with CPU TDPs of up to 500W.

The highlight is the ASUS ESC AI POD, which features the NVIDIA GB200 NVL72 version.

ASUS also showed one of the compute nodes. The 1U chassis contains the bus bar power connection and two liquid-cooled GB200 boards carrying two GB200 Grace Blackwell Superchips, both covered by cold plates. In the middle of the chassis is a Power Distribution Board (PDB) that converts 48V DC to 12V DC to power the Blackwell GPUs. The compute tray also includes a storage module for E1.S SSDs and two BlueField-3 B3240 DPU cards in a dual-width, full-height, half-length form factor.
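The 48V-to-12V conversion on the PDB reflects a general trade-off: for the same power, current scales as 1/V, and resistive loss in conductors grows with the square of the current, so distributing at 48V and converting close to the load keeps currents in the chassis wiring lower. The sketch below uses the 5.4kW low end of the per-node figure from the NVIDIA section purely as an illustrative load; it is not an ASUS specification.

```python
def current_a(power_w: float, volts: float) -> float:
    """I = P / V for a DC rail."""
    return power_w / volts


NODE_POWER_W = 5_400   # illustrative load: low end of the NVL72 per-node range

for rail_v in (48, 12):
    print(f"{NODE_POWER_W} W at {rail_v} V -> {current_a(NODE_POWER_W, rail_v):.1f} A")
```

Quartering the voltage quadruples the current (112.5A versus 450A here), which would multiply resistive loss in the same conductor by sixteen.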

For users seeking lower-cost Arm computing with NVIDIA GPUs, there is a dual NVIDIA Grace Hopper GH200 platform, the ASUS ESC NM2-E1, which combines two GH200 Grace Hopper Superchips in one system.

Inventec

At the event, Inventec showcased the cabinet-level GB200 NVL72 alongside the Artemis 1U and 2U servers. These servers are equipped with two GB200 Grace Blackwell Superchips, ConnectX-7 400Gb/s InfiniBand network cards, and BlueField-3 400Gb/s data processors.

- 120kW per cabinet

- Power bus bar: 1400A

- 8 × 33kW power shelves with 1+1 redundancy

- Blind-mate connectors for liquid cooling, bus bar power, and communications

- Adjacent cooling cabinet known as the “Side Car”

The “Side Car” is a liquid cooling cabinet that sits alongside the server cabinet, like a sidecar on a motorcycle, and supplies the rack's cooling.
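The Inventec power figures can be checked against the 120kW rack load. The sketch assumes "1+1" means N+N redundancy, so half the installed shelf capacity must be able to carry the whole rack; that interpretation is an assumption, not something the specification list states.

```python
SHELVES = 8
SHELF_KW = 33
RACK_LOAD_KW = 120

installed_kw = SHELVES * SHELF_KW
# Assumption: "1+1" means N+N, i.e. half of the shelves can fail
# and the remaining half must still carry the full rack load.
usable_kw = installed_kw / 2

print(f"installed: {installed_kw} kW, usable with N+N: {usable_kw} kW, "
      f"covers {RACK_LOAD_KW} kW load: {usable_kw >= RACK_LOAD_KW}")
```

Under that reading, 132kW of protected capacity covers the 120kW cabinet with about 10% margin.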
