What is IB-InfiniBand

IB, short for InfiniBand (literally "infinite bandwidth"), is a computer network communication standard for high-performance computing. Characterized by very high throughput and very low latency, it is used for data interconnection between computers. InfiniBand also serves as a direct or switched interconnect between servers and storage systems, and between storage systems themselves. With the rise of AI, IB has grown in popularity and is currently the preferred networking option for high-end GPU server clusters.

Here is the development history of InfiniBand:

- In 1999, the InfiniBand Trade Association (IBTA) released the InfiniBand architecture, which was originally intended to replace the PCI bus.

- In 2000, version 1.0 of the InfiniBand architecture specification was officially released. Then in 2001, the first batch of InfiniBand products came out, and many manufacturers began to launch products that support InfiniBand, including servers, storage systems, and network equipment.

- In 2003, thanks to its high throughput and low latency, InfiniBand moved into a new application area, HPC cluster interconnection, and was widely used in the TOP500 supercomputers of the time.

- In 2004, another important InfiniBand non-profit organization was founded: the OpenFabrics Alliance (OFA).

- In 2005, InfiniBand found a new scenario – the connection of storage devices, and has been continuously updated and iterated since then.

- In 2015, InfiniBand technology accounted for more than 50% of TOP500 supercomputers for the first time, reaching 51.4%. This marks the first time that InfiniBand technology has overtaken Ethernet technology to become the most popular internal connection technology in supercomputers.

- Since 2023, large AI model training has depended heavily on high-performance computing clusters, for which InfiniBand networks are a natural fit.

Core advantages of Mellanox and IB Network

Relationship between Mellanox and InfiniBand

Today, the first name that comes to mind when people mention IB is Mellanox. In 2019, NVIDIA announced the acquisition of Mellanox for US$6.9 billion (the deal closed in 2020), making it an NVIDIA sub-brand. Jensen Huang said publicly that this was a combination of two of the world's leading high-performance computing companies: NVIDIA focuses on accelerated computing, and Mellanox focuses on interconnection and storage.

According to forecasts by industry organizations, the market size of InfiniBand will reach US$98.37 billion in 2029, about 14.8 times the US$6.66 billion of 2021. Driven by high-performance computing and AI, InfiniBand has a bright future.

InfiniBand network architecture and features

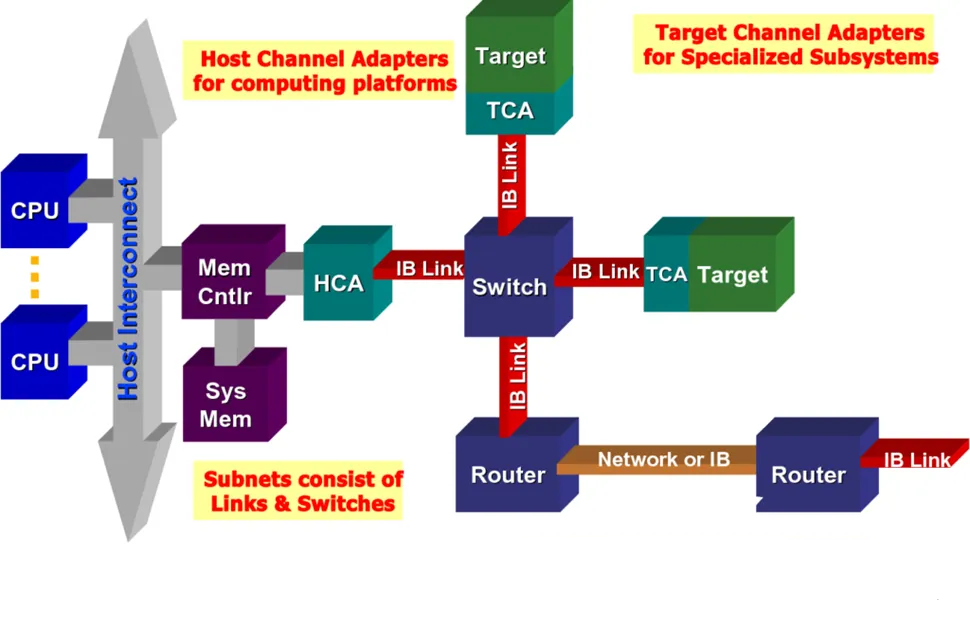

An InfiniBand system consists of channel adapters, switches, routers, cables, and connectors, as shown in the figure.

InfiniBand System

The core features are summarized as follows:

- Low latency: extremely low latency and native support for RDMA

- High bandwidth: 400Gb/s data transmission capability per port

- Ease of use: Suitable for building large-scale data center clusters

IB Network and RDMA

No discussion of IB networks is complete without RDMA. RDMA (Remote Direct Memory Access) was created to reduce the delay that server-side data processing adds to network transmission. It lets one host or server read and write the memory of another directly, without involving the CPU, which frees the CPU for other work. InfiniBand is a network technology designed specifically for RDMA, so IB networks support RDMA natively.

RDMA is so effective because of its kernel bypass mechanism, which lets applications read and write data directly through the network card and cuts data transmission latency within the server to nearly 1 µs. RoCE (RDMA over Converged Ethernet) brings RDMA to Ethernet.

Comparison of two mainstream RDMA solutions (IB and RoCEv2)

- Compared with traditional data centers, new intelligent computing centers place higher demands on the communication network: low latency, high bandwidth, stability, and large scale.

- RDMA-based InfiniBand and RoCEv2 can both meet the needs of intelligent computing center communication networks.

- InfiniBand currently has the edge over RoCEv2 in performance, while RoCEv2 currently has the edge over InfiniBand in cost and versatility.

Development and Trend of InfiniBand Link Rate

Taking the early SDR (single data rate) specification as an example, the raw signaling bandwidth of a 1X link is 2.5 Gbps, of a 4X link 10 Gbps, and of a 12X link 30 Gbps. The actual data bandwidth of a 1X link is 2.0 Gbps, because 8b/10b encoding carries 8 data bits in every 10 bits on the wire. Since the link is bidirectional, the total data bandwidth of a 1X link counted in both directions is 4 Gbps. Over time, InfiniBand network bandwidth has continued to increase.
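The SDR arithmetic above can be reproduced in a few lines. This is only a sketch of the calculation described in the text; the function and constant names are made up for illustration.

```python
# Worked example of the SDR figures quoted in the text.
SDR_LANE_SIGNAL_GBPS = 2.5   # raw signaling rate of one SDR lane
DATA_BITS, CODE_BITS = 8, 10  # 8b/10b: 8 data bits per 10 bits on the wire


def sdr_link_rates(lanes: int) -> dict:
    """Return raw, data, and two-way data rates (Gb/s) for an SDR link."""
    raw = SDR_LANE_SIGNAL_GBPS * lanes
    data = raw * DATA_BITS / CODE_BITS
    return {"raw": raw, "data": data, "bidirectional_data": 2 * data}


if __name__ == "__main__":
    for width in (1, 4, 12):
        r = sdr_link_rates(width)
        print(f"{width}X: raw {r['raw']:g} Gb/s, data {r['data']:g} Gb/s, "
              f"both directions {r['bidirectional_data']:g} Gb/s")
```

For a 1X link this gives 2.5 Gb/s raw, 2 Gb/s of data, and 4 Gb/s when both directions are counted, matching the figures above.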

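What does the "DR" in HDR and NDR mean? DR stands for data rate, and the letter in front identifies the generation of IB technology: SDR (single), DDR (double), QDR (quad), FDR (fourteen), EDR (enhanced), HDR (high), and NDR (next). Four-lane (4x) links are the mainstream configuration.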

The following figure shows the network bandwidth of InfiniBand from SDR, DDR, QDR, FDR, and EDR to HDR and NDR. The speeds are based on 4x links. Currently, EDR, HDR, and NDR are mainstream, corresponding to PCIe 3.0, 4.0, and 5.0 server platforms.
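One way to see why these generations pair with these PCIe platforms is to compare the nominal 4x link rate with what an x16 slot can carry. The sketch below assumes the commonly quoted 4x rates of 100, 200, and 400 Gb/s for EDR, HDR, and NDR, and the standard PCIe per-lane rates of 8, 16, and 32 GT/s with 128b/130b encoding. It ignores PCIe protocol overhead, so the real usable figure is somewhat lower.

```python
# PCIe per-lane transfer rates in GT/s; generations 3.0 to 5.0 use 128b/130b encoding.
PCIE_GT_PER_LANE = {"3.0": 8, "4.0": 16, "5.0": 32}
# Nominal 4x InfiniBand link rates in Gb/s (assumed, as commonly quoted).
IB_4X_GBPS = {"EDR": 100, "HDR": 200, "NDR": 400}
# Pairing stated in the text.
PAIRING = {"EDR": "3.0", "HDR": "4.0", "NDR": "5.0"}


def pcie_x16_gbps(gen: str) -> float:
    """x16 slot bandwidth per direction in Gb/s, before protocol overhead."""
    return PCIE_GT_PER_LANE[gen] * 16 * 128 / 130


if __name__ == "__main__":
    for ib, gen in PAIRING.items():
        slot = pcie_x16_gbps(gen)
        fits = slot >= IB_4X_GBPS[ib]
        print(f"{ib} {IB_4X_GBPS[ib]} Gb/s vs PCIe {gen} x16 "
              f"~{slot:.0f} Gb/s, fits: {fits}")
```

Under these assumptions each generation fits its paired slot, while an HDR port would be bottlenecked by a PCIe 3.0 x16 slot and an NDR port by a PCIe 4.0 x16 slot.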

NVIDIA InfiniBand mainstream products – the latest NDR network cards

The ConnectX-7 IB card (HCA) comes in a variety of form factors, including single-port and dual-port versions, supports OSFP and QSFP112 interfaces, and runs at 200Gbps and 400Gbps. The CX-7 network card supports x16 PCIe 5.0 or PCIe 4.0 and complies with the CEM specification. Up to 16 lanes can be connected directly, and an optional auxiliary card enables 32 lanes of PCIe 4.0 using NVIDIA Socket Direct® technology.

Other form factors include Open Compute Project (OCP) 3.0 with an OSFP connector, OCP 3.0 with a QSFP112 connector, and CEM PCIe x16 with a QSFP112 connector.

Mellanox’s latest NDR switch

Mellanox’s IB switches are divided into two types: fixed configuration switches and modular switches. It is understood that the latest NDR series switches no longer sell modular configuration switches (although the official website shows that they are available, they are no longer on sale).

NDR's fixed-configuration MQM9700 series switch has 32 physical OSFP connectors and supports 64 400Gb/s ports (which can be split into up to 128 200Gb/s ports). The series provides a total of 51.2Tb/s of bidirectional throughput (backplane bandwidth) and a packet forwarding rate of 66.5 billion packets per second.
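The port counts and throughput figure are consistent with each other, as a quick check shows. The sketch assumes that each OSFP connector carries two 400Gb/s ports (64 ports over 32 connectors) and that each 400Gb/s port splits into two 200Gb/s ports. The function name is illustrative only.

```python
def switch_capacity(osfp_connectors: int = 32,
                    ports_per_connector: int = 2,   # assumption: 64 ports / 32 connectors
                    port_gbps: int = 400) -> dict:
    """Derive port counts and aggregate throughput from the quoted figures."""
    ports_400g = osfp_connectors * ports_per_connector
    ports_200g = ports_400g * 2                     # each 400G port split in two
    one_way_tbps = ports_400g * port_gbps / 1000    # all ports sending at line rate
    return {
        "ports_400g": ports_400g,
        "ports_200g": ports_200g,
        "one_way_tbps": one_way_tbps,
        "bidirectional_tbps": 2 * one_way_tbps,
    }


if __name__ == "__main__":
    for name, value in switch_capacity().items():
        print(f"{name}: {value}")
```

With the default values this yields 64 ports at 400Gb/s, 128 ports at 200Gb/s, 25.6Tb/s in one direction, and 51.2Tb/s when both directions are counted.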

The sub-models have the same number of interfaces and the same speeds. They differ in whether management functions are supported, in the power supply method, and in the heat dissipation method. Usually one switch with management functions is sufficient.

Mellanox’s latest interconnect cables and modules

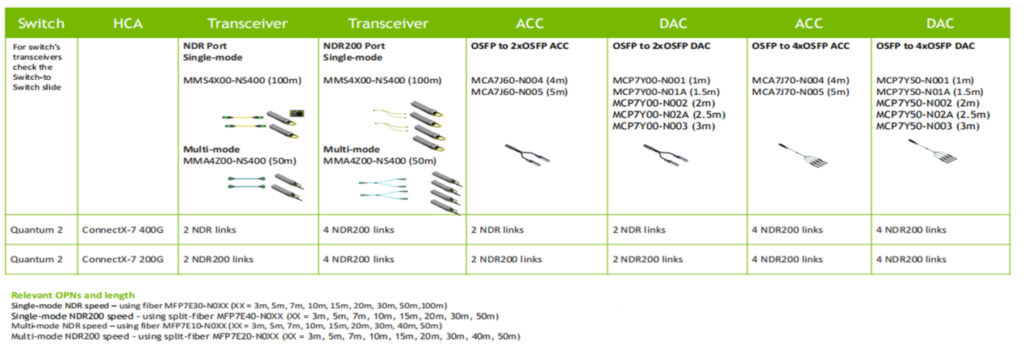

Mellanox’s LinkX cables and transceivers are typically used to link ToR switches downward to NVIDIA GPU and CPU server network cards and storage devices, and/or upward in switch-to-switch interconnect applications throughout the network infrastructure.

The cable options are the Active Optical Cable (AOC), the Direct Attach Copper Cable (DAC), and the new active DAC called ACC, which includes a signal enhancement integrated circuit (IC) at the end of the cable.

Mellanox Latest Interconnect Cables and Modules

Mellanox Typical Interconnect Links

Switch-to-switch and switch-to-network-card links can use different cables, and a switch-to-network-card link can be a 1-to-2 or 4-to-1 interconnection.

Mellanox NIC topology in H100

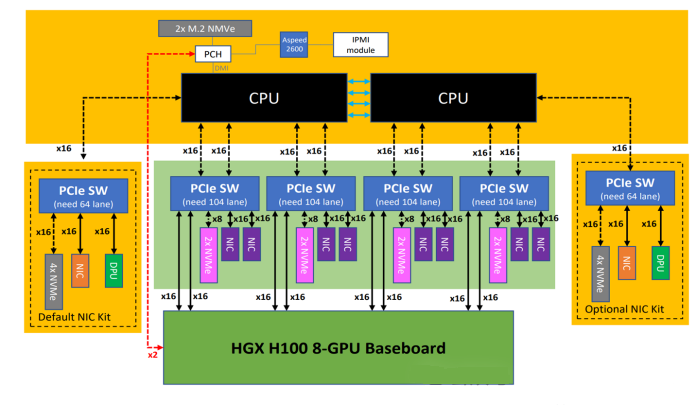

- Inside the H100 machine, the HGX module is logically connected to the head through 4 or 8 PCIe switch chips.

- Each PCIe switch corresponds to two GPU cards and two network cards, and the eight 400G IB cards are designed to correspond one-to-one with the eight H100 cards.

- If the machine is fully populated with eight 400G IB cards, adding further network cards requires additional PCIe switch connections from the CPU.
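The layout in the list above can be written down as a small data structure. This is a sketch that assumes the 4-switch variant, with two GPUs and two IB cards per PCIe switch. The device names and numbering (`pcie_sw0`, `gpu0`, `ib_nic0`) are hypothetical and chosen only for illustration.

```python
def build_topology(num_switches: int = 4, pairs_per_switch: int = 2) -> dict:
    """Map each PCIe switch to the (GPU, IB card) pairs behind it.

    Assumes GPU k is paired one-to-one with IB card k; all names are hypothetical.
    """
    topology = {}
    for sw in range(num_switches):
        first = sw * pairs_per_switch
        ids = range(first, first + pairs_per_switch)
        topology[f"pcie_sw{sw}"] = [(f"gpu{i}", f"ib_nic{i}") for i in ids]
    return topology


if __name__ == "__main__":
    for switch, pairs in build_topology().items():
        print(switch, pairs)
```

Keeping each GPU and its paired IB card behind the same PCIe switch means traffic between them does not need to cross the CPU, which is the point of the one-to-one design.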

Mellanox NIC topology in H100
