Mellanox InfiniBand technology has become a key building block of high-performance computing (HPC) and advanced data centers. InfiniBand is a high-throughput, low-latency networking technology that delivers the data transfer speeds needed for complex calculations and big data analytics. It improves processing efficiency and reduces CPU overhead through Remote Direct Memory Access (RDMA), which lets computers read and write directly into each other's memory without involving the remote operating system. Its architecture can scale up or down, making it suitable for workloads ranging from scientific research to artificial intelligence and machine learning. Understanding how Mellanox InfiniBand works and how it is deployed can help any organization that wants to optimize its computing infrastructure achieve significant performance gains.

What is InfiniBand and How Does It Work?

Understanding InfiniBand Technology

InfiniBand is a communications standard that high-performance computing systems and data centers rely on heavily. It connects servers to one another and to storage systems through a fast switched network, providing high bandwidth and low latency between endpoints. Its key advantage over many other protocols is support for Remote Direct Memory Access (RDMA): data moves directly from one computer's memory to another's, handled by the network adapters rather than by the remote CPU and operating system, which significantly cuts overhead and increases efficiency. InfiniBand networks can connect many devices simultaneously and scale out as needed, which makes them suitable for demanding workloads such as scientific research, AI development, and big data analytics.

Differences Between InfiniBand and Ethernet

Comparing InfiniBand with Ethernet reveals several differences, especially in how each is used in data centers and high-performance computing (HPC).

Speed and Latency:

- InfiniBand: Known for high throughput and low latency, it delivers up to 200 Gb/s (HDR) or 400 Gb/s (NDR) per port, with end-to-end latencies of around a microsecond.

- Ethernet: Ethernet typically has higher latency than InfiniBand. Modern Ethernet technologies such as 100 GbE have narrowed the gap and operate at 100 Gb/s, but latency through a conventional TCP/IP stack is often still in the tens of microseconds.

Protocol Efficiency:

- InfiniBand: Uses Remote Direct Memory Access (RDMA), which transfers data between systems' memory without involving the remote CPU, significantly reducing overhead.

- Ethernet: Ethernet also supports RDMA through RoCE (RDMA over Converged Ethernet), but it usually carries more overhead because of additional protocol processing, and it generally works best on an Ethernet fabric configured for lossless operation.

Scalability:

- InfiniBand: Scales very well, supporting thousands of nodes with minimal performance degradation thanks to a switched-fabric architecture that handles traffic in large networks efficiently.

- Ethernet: Ethernet is also scalable, but as the number of nodes grows, especially in high-density data center environments, its performance may degrade more noticeably.

Cost and Adoption:

- InfiniBand: InfiniBand solutions are generally more expensive, so they tend to be used in specialized areas that demand maximum performance, such as HPC clusters.

- Ethernet: Ethernet is cost-effective and widely adopted across industries because it is a versatile standard protocol that integrates easily into existing networks.

Use Cases:

- InfiniBand: Typically deployed where ultra-high performance is needed, such as scientific research facilities, AI development labs, and big data analytics.

- Ethernet: The preferred choice for general data center networking, enterprise LANs, and mixed-use environments because of its broader compatibility and lower cost.

In conclusion, both InfiniBand and Ethernet play important roles in networked computing. InfiniBand's speed, low latency, and protocol efficiency make it better suited to high-performance computing, while Ethernet's flexibility, cost-effectiveness, and widespread use make it appropriate for a wider variety of applications.
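Speed and latency interact: for small messages the fixed latency dominates, while for large ones the link bandwidth does. A common first-order way to reason about this is the latency-bandwidth ("alpha-beta") model, time = latency + size / bandwidth. The sketch below applies that model to two hypothetical links. The latency and bandwidth figures are illustrative assumptions and are not measurements of any InfiniBand or Ethernet product.

```python
# First-order latency-bandwidth ("alpha-beta") model of a single message transfer.
# The link parameters below are illustrative assumptions, not measurements.

def transfer_time_us(message_bytes: int, latency_us: float, bandwidth_gbps: float) -> float:
    """Estimated delivery time in microseconds: fixed latency + serialization time."""
    bits = message_bytes * 8
    bits_per_us = bandwidth_gbps * 1_000  # 1 Gb/s = 1,000 bits per microsecond
    return latency_us + bits / bits_per_us


# Hypothetical links: (latency in microseconds, bandwidth in Gb/s).
LINKS = {
    "low-latency 200 Gb/s link": (1.0, 200.0),
    "higher-latency 100 Gb/s link": (10.0, 100.0),
}

if __name__ == "__main__":
    for size in (64, 4_096, 1_048_576):  # 64 B, 4 KiB, 1 MiB
        for name, (lat, bw) in LINKS.items():
            print(f"{size:>9} B over {name}: {transfer_time_us(size, lat, bw):10.2f} us")
```

With these assumed numbers, a 64-byte message takes about 1 µs on the first link and about 10 µs on the second. That tenfold gap comes almost entirely from latency. For a 1 MiB message the gap shrinks to roughly 2x, because serialization time dominates.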

Applications of InfiniBand in High-Performance Computing

Because of its high speed, very low latency, and large capacity, InfiniBand is vital in high-performance computing (HPC) environments. One primary use is in HPC clusters, where many compute nodes must communicate quickly. In these clusters, InfiniBand interconnects greatly improve computational efficiency and performance.

Scientific research institutions working on climate modeling, molecular dynamics, or genome sequencing also rely heavily on InfiniBand, because these data-intensive workloads need fast transfers and minimal latency when handling large datasets. InfiniBand is likewise valuable in AI and machine learning environments, where training deep learning models involves frequent data exchange between GPUs.

Big data analytics also benefits significantly from InfiniBand's low latency and high bandwidth. Processing huge volumes of information requires accessing and moving data at high rates, which makes InfiniBand an important tool for reducing processing times and improving overall system performance.

How Do Mellanox InfiniBand Switches Improve Network Performance?

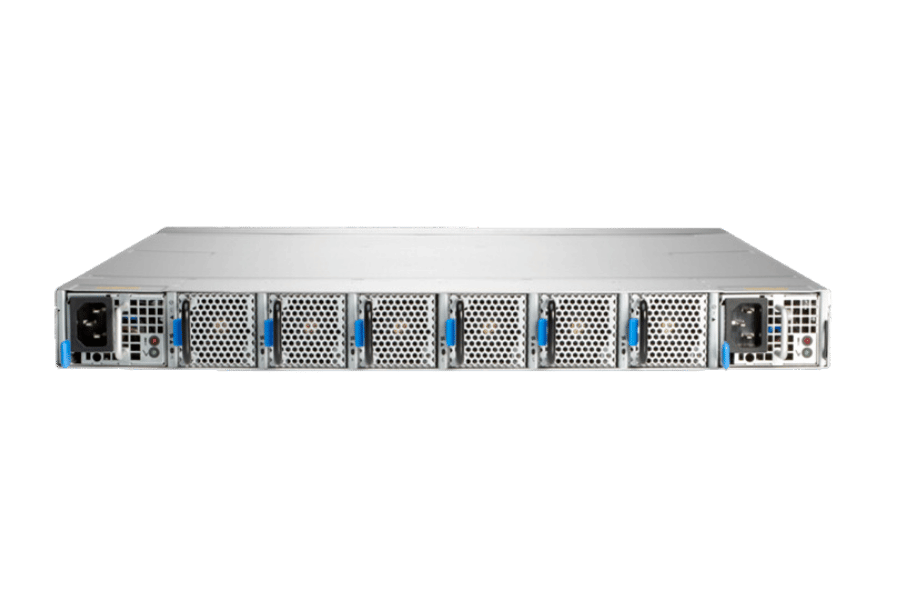

Features of Mellanox InfiniBand Switches

Mellanox InfiniBand switches include a range of features designed for high network performance:

- High Bandwidth: Each port on current-generation (NDR) Mellanox InfiniBand switches supports up to 400 Gb/s. This matters for high-performance computing because it enables faster data transmission.

- Low Latency: Port-to-port switch latency is very low, on the order of 100 nanoseconds. Ultra-low latency benefits latency-sensitive workloads such as distributed AI model training and real-time processing of live data.

- Scalability: Data centers can grow without a loss in performance. Adaptive routing and congestion control help maintain peak performance even as the network expands significantly.

- Energy Efficiency: The switches use power-efficient designs intended to reduce energy consumption in large data centers without compromising performance.

- Quality of Service (QoS): QoS capabilities are built into each Mellanox InfiniBand switch. Traffic is assigned to service levels (SLs), which are mapped onto virtual lanes (VLs) with configurable arbitration, so critical packets can be prioritized over others. This reduces delays and improves the reliability of critical applications.

- Integrated Management Tools: Management software supplied for the switches simplifies configuration, monitoring, and maintenance. This saves administrative time and helps restore service quickly after an outage, minimizing downtime for users.
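QoS prioritization can be pictured as two steps. First, each packet's service level (SL) selects a virtual lane (VL). Second, the port's arbiter shares transmit opportunities among the VLs according to configured weights. The following is a simplified weighted round-robin model of that idea. The SL-to-VL table and the weights are hypothetical. The model counts packets rather than bytes and omits the separate high- and low-priority arbitration tables that real InfiniBand ports use, so it is an illustration, not how Mellanox switches are actually programmed.

```python
from collections import deque

# Hypothetical SL-to-VL table: service levels 0-15 mapped onto two data VLs.
SL_TO_VL = {sl: (1 if sl >= 8 else 0) for sl in range(16)}

# Hypothetical arbitration weights: VL1 (latency-critical traffic) gets three
# transmit slots for every one slot given to VL0 (bulk traffic).
VL_WEIGHTS = {0: 1, 1: 3}


def enqueue(queues, packet, sl):
    """Place a packet on the virtual-lane queue selected by its service level."""
    queues[SL_TO_VL[sl]].append(packet)


def arbitrate(queues, weights):
    """Weighted round-robin over VL queues; yields packets in transmit order.

    Simplification: weights count packets, not bytes, and a single table is used.
    """
    while any(queues.values()):
        for vl, weight in weights.items():
            for _ in range(weight):
                if not queues[vl]:
                    break  # this VL is empty; move on to the next one
                yield queues[vl].popleft()


if __name__ == "__main__":
    queues = {vl: deque() for vl in VL_WEIGHTS}
    for i in range(4):
        enqueue(queues, f"bulk-{i}", sl=0)
        enqueue(queues, f"mpi-{i}", sl=8)
    print(list(arbitrate(queues, VL_WEIGHTS)))
```

With a 3:1 weight, latency-critical packets on VL1 go out three at a time between bulk packets. Bulk traffic on VL0 still makes progress instead of being starved.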

Together, these features allow Mellanox InfiniBand switches to significantly improve network performance in high-performance computing (HPC) and big data analytics environments, where large volumes of data must move quickly between points on the network.

The Role of Mellanox InfiniBand in Low-Latency and High-Throughput Systems

Mellanox InfiniBand provides state-of-the-art interconnects that form an important part of low-latency, high-throughput systems, enabling fast data transmission with minimal delay. Switch latency is ultra-low, on the order of a hundred nanoseconds. This matters for workloads such as AI model training, high-frequency trading, and real-time analytics, where every microsecond counts. Support for up to 400 Gb/s per port lets these systems move very large amounts of data efficiently, making InfiniBand a strong choice for environments that require fast processing and data transfer. Congestion control and adaptive routing further improve performance by keeping traffic flowing smoothly across large data center fabrics. Combined with energy-efficient designs and strong management tools, Mellanox InfiniBand improves operational efficiency and reduces power consumption in modern data centers, in addition to boosting performance.
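Adaptive routing is easiest to understand by contrasting it with static routing. Under static routing, a destination always maps to the same output port. Under adaptive routing, the switch chooses among several equally good output ports based on current load. The sketch below is a toy model with assumed inputs: the port numbers, the queue depths, and the destination local identifier (LID) are all hypothetical. Real switches make this decision in hardware using their own congestion signals, and this is not NVIDIA's algorithm.

```python
import random


def static_output_port(candidate_ports, dest_lid):
    """Static routing: a given destination always maps to the same port."""
    return candidate_ports[dest_lid % len(candidate_ports)]


def adaptive_output_port(candidate_ports, queue_depth, rng=random):
    """Adaptive routing: pick the least-loaded port among equal-cost candidates.

    Ties are broken randomly so traffic spreads over equally idle ports.
    """
    least = min(queue_depth[p] for p in candidate_ports)
    best = [p for p in candidate_ports if queue_depth[p] == least]
    return rng.choice(best)


if __name__ == "__main__":
    uplinks = [17, 18, 19, 20]             # hypothetical uplink port numbers
    depth = {17: 12, 18: 0, 19: 7, 20: 3}  # hypothetical queued packets per port
    dest_lid = 42                          # hypothetical destination LID
    print("static:", static_output_port(uplinks, dest_lid))
    print("adaptive:", adaptive_output_port(uplinks, depth))
```

In this example, static routing sends the flow to port 19 even though 7 packets are already queued there. The adaptive choice picks the idle port 18.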

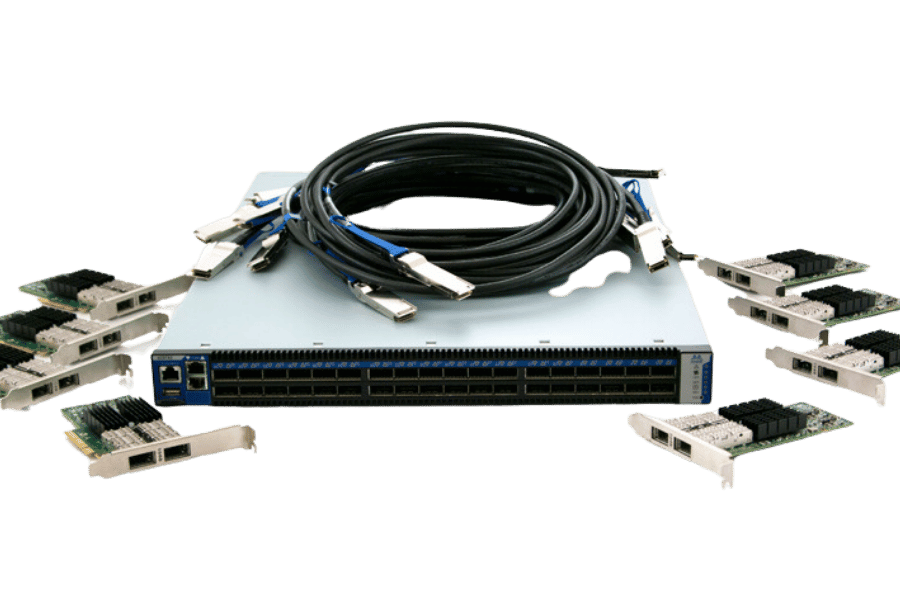

Deployment Scenarios for Mellanox InfiniBand Switches

Mellanox InfiniBand switches are widely used in high-performance computing (HPC) and data center environments. HPC clusters rely on them for ultra-low-latency, high-throughput communication, which is necessary to run complex simulations and computational tasks efficiently. In enterprise data centers, InfiniBand connects large-scale storage to compute resources so that users can access their data quickly while keeping overall costs down. Cloud platforms also use Mellanox InfiniBand to improve the performance of virtualized infrastructure through fast data transfer and reliable service delivery. The same switches are used in AI and machine learning platforms, where training large models depends on high bandwidth and low latency for inter-node communication.

What Are the Key Components of an InfiniBand Network?

Types of InfiniBand Adapters and Adapter Cards

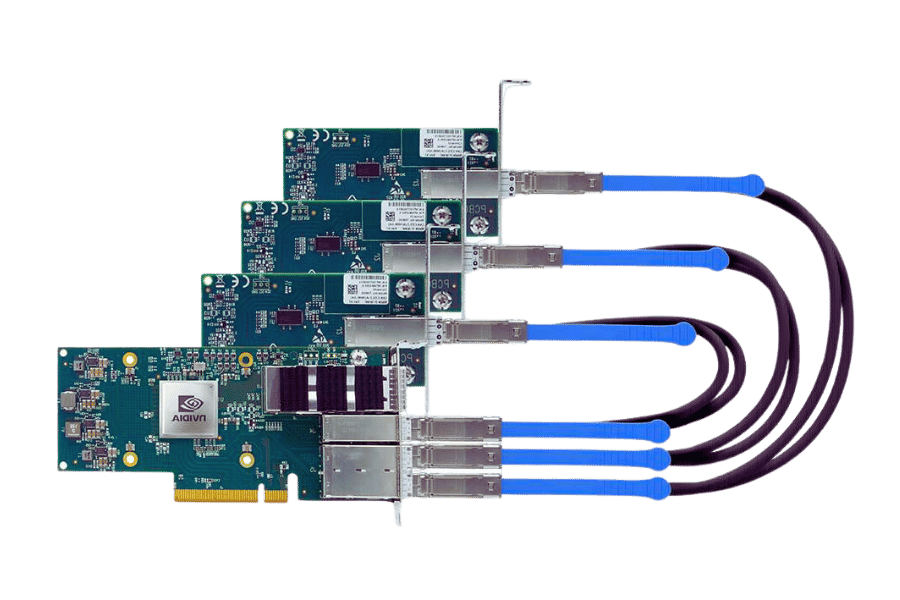

InfiniBand adapters and adapter cards are crucial for fast communication in high-performance computing (HPC) and data center settings. Several types of hardware exist, each designed for specific infrastructure requirements. The main ones are:

- Host Channel Adapters (HCAs): HCAs connect servers to an InfiniBand network and deliver low latency and high bandwidth, so they suit systems whose performance depends on speed. The HCA is the device that performs RDMA transfers between compute nodes.

- Active Optical Cables (AOCs) and Direct Attach Copper (DAC) Cables: These cables physically connect InfiniBand switches to servers or storage devices. AOCs are preferred for longer runs because they maintain signal integrity over greater distances. DACs suit short distances and have a lower cost per link, which makes them easy to deploy.

- Smart Adapters: These offer more advanced capabilities, such as offloading network processing from the CPU onto the adapter itself. This improves overall system efficiency, especially for heavy data processing workloads such as AI and machine learning.

Together, these components provide the high-speed connectivity that modern computer systems need to perform complex operations on data reliably.

Importance of QSFP56 Connectors and OSFP

QSFP56 (Quad Small Form-factor Pluggable 56) and OSFP (Octal Small Form-factor Pluggable) connectors are fundamental to today's high-speed networking, particularly in HPC and data centers. QSFP56 supports data rates of up to 200 Gb/s, making it well suited to applications that require high bandwidth and low latency. The QSFP form factor is designed to be backward compatible with earlier QSFP generations, which adds flexibility when integrating into existing infrastructure.

OSFP, by contrast, uses eight electrical lanes instead of QSFP56's four and is engineered for much higher data rates, up to four times the 200 Gb/s of QSFP56. OSFP modules are also designed to dissipate more heat, so next-generation network equipment can run them without thermal problems. Both form factors support scalable, dependable high-speed data transfer between networking devices. Their adoption is driven by growing demand for processing power and data movement, especially in AI and machine learning, where very large datasets are used.
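The bandwidth of a pluggable module is roughly its number of electrical lanes multiplied by the per-lane data rate. The arithmetic below assumes 50 Gb/s PAM4 lanes for QSFP56 and 100 Gb/s PAM4 lanes for an 800 Gb/s OSFP module. Under those assumptions, the "four times" figure comes from twice as many lanes, each running at twice the per-lane rate.

```python
# (electrical lanes, per-lane data rate in Gb/s); the lane rates are assumptions
# chosen to match a 200G QSFP56 module and an 800G OSFP module.
MODULES = {
    "QSFP56": (4, 50),
    "OSFP (800G)": (8, 100),
}


def module_rate_gbps(lanes, lane_gbps):
    """Aggregate module bandwidth: number of lanes multiplied by per-lane rate."""
    return lanes * lane_gbps


if __name__ == "__main__":
    rates = {name: module_rate_gbps(*spec) for name, spec in MODULES.items()}
    for name, rate in rates.items():
        print(f"{name}: {rate} Gb/s")
    print("ratio:", rates["OSFP (800G)"] // rates["QSFP56"])
```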

Choosing Between Optical and Active Optical Cables

Choosing between optical fiber (OF) and active optical cables (AOCs) depends on the specific needs of your network environment. Each has its own strengths and use cases.

Optical Fiber:

- Distance: Optical Fiber cables can transmit data over long distances without much signal loss, making them ideal for large-scale data centers and backbone networks.

- Bandwidth: Optical fiber supports high-speed data transfer, which helps keep the network running efficiently.

- Durability: Optical fiber is not affected by electromagnetic interference (EMI), so it works well in many different environments.

Active Optical Cables (AOC):

- Ease of Use: An AOC integrates the optical transceivers into the cable ends, so it installs without additional parts.

- Cost-Effective for Short Distances: AOCs tend to be cheaper than separate fiber and transceivers over shorter distances, such as within a rack or between neighboring racks.

- Flexibility and Weight: Compared with copper cables of similar reach, AOCs are lightweight and flexible, so they route easily through tight spaces.

Ultimately, choose between OF and AOC based on distance requirements, budget constraints, and specific network needs. In general, optical fiber is preferred for long-distance, high-speed links, while AOCs are chosen for short-range links where ease of installation and flexibility matter most.
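This rule of thumb can be written as a small decision helper that also covers passive copper DAC for the shortest runs. The reach limits in it are hypothetical placeholders. Real limits depend on the data rate and on the specific cable or transceiver, so check the product datasheet before relying on them.

```python
# Hypothetical reach limits in meters; real limits depend on the data rate
# and on the specific cable or transceiver, so check its datasheet.
PASSIVE_DAC_MAX_M = 3.0
AOC_MAX_M = 100.0


def suggest_link_medium(distance_m):
    """Suggest a cabling option for a point-to-point link of the given length."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    if distance_m <= PASSIVE_DAC_MAX_M:
        return "passive copper DAC"
    if distance_m <= AOC_MAX_M:
        return "active optical cable (AOC)"
    return "optical transceivers with separate fiber"


if __name__ == "__main__":
    for d in (1.5, 15, 300):
        print(f"{d} m -> {suggest_link_medium(d)}")
```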

How Does NVIDIA Leverage Mellanox InfiniBand Technology?

Overview of NVIDIA Mellanox Integration

NVIDIA uses Mellanox InfiniBand technology to strengthen its high-performance computing (HPC), artificial intelligence (AI), and data center solutions. Mellanox InfiniBand provides ultra-low latency, high throughput, and efficient scalability for data-intensive applications and workloads. NVIDIA builds on these capabilities by integrating its GPUs and software stack with Mellanox InfiniBand. For example, GPUDirect RDMA lets network adapters move data directly to and from GPU memory. This integration speeds up both data exchange and processing. It supports workloads from scientific research to AI training, in which resources across many compute nodes are pooled so that more calculations run concurrently, reducing time to solution.

Benefits of NVIDIA InfiniBand in Data Centers

Data centers benefit significantly from NVIDIA InfiniBand, which can substantially improve their operational capabilities and efficiency. First, InfiniBand combines very low latency with high throughput. Both are necessary to process large amounts of data as quickly as AI, machine learning, and other high-performance computing (HPC) systems demand. This makes InfiniBand ideal for workloads that move large amounts of information rapidly between nodes.

Second, InfiniBand scales exceptionally well. When a data center grows or its requirements increase, the organization can add more powerful equipment to its existing infrastructure and connect the new systems into the same InfiniBand fabric. Such fabrics can support many thousands of connected nodes while maintaining performance and system reliability, ensuring continuous operation under heavy load.

Third, deploying InfiniBand improves resource utilization and energy efficiency. Mechanisms such as adaptive routing spread traffic across available paths, reducing the congestion caused by oversubscription during peak periods when traffic exceeds available bandwidth. Because InfiniBand uses credit-based link-level flow control, congestion shows up as stalled links rather than dropped packets. Relieving that congestion avoids wasted link time and power, which improves overall system performance and lowers operating costs.

Active Optical vs. Optical Adapters from NVIDIA

Data centers rely on NVIDIA's Active Optical Cables (AOCs) and optical adapters for connectivity. Each has benefits suited to different uses.

Active Optical Cables (AOCs): These cables have built-in active components that convert electrical signals to optical signals and back again at the cable ends. This conversion lets AOCs maintain signal quality over much longer distances than copper cables, making them well suited to large data centers. They are also light and flexible, so they are easy to manage and install even in tight spaces.

Optical Adapters: These devices are needed to connect a network device directly to an optical fiber cable, and they act as a bridge between the two media. Compatibility matters here. An optical adapter must support the standards and protocols used by the equipment on both ends for the network architecture to work well.

In short, AOCs are integrated solutions that simplify installation and deliver strong performance over longer distances. Optical adapters offer more flexible ways to connect various types of equipment to fiber-optic infrastructure in data centers. Both contribute to the data rates that today's networks need for efficient transmission between locations.

How Do You Select the Right InfiniBand Switch for Your Needs?

Evaluating Port and Data Rate Requirements

To ensure the best performance and scalability, evaluate both port count and data rate requirements when choosing an InfiniBand switch. Start by determining how many ports you need, based on the size of your data center, the number of devices to connect, and both current and expected future workloads. Next, evaluate the required data rate, which depends on your applications and their network bandwidth demands. Different models support different speeds, such as 40 Gb/s (QDR), 56 Gb/s (FDR), 100 Gb/s (EDR), 200 Gb/s (HDR), and 400 Gb/s (NDR). Match the switch's capability to the expected throughput so that the switch does not become a bottleneck. Balance port count against data rate: the switch you select should meet your network's performance requirements today while leaving room for growth.
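The two checks described above, port count and data rate, can be sketched as follows. The growth margin and the per-node bandwidth requirement are hypothetical inputs. The rates are nominal 4x port rates, which for some generations are slightly higher than the usable data rate (FDR's usable rate, for example, is about 54.5 Gb/s).

```python
import math

# Nominal 4x port rates in Gb/s, from slowest to fastest generation.
GENERATIONS = [("QDR", 40), ("FDR", 56), ("EDR", 100), ("HDR", 200), ("NDR", 400)]


def lowest_sufficient_generation(required_gbps):
    """Return the first (slowest) generation whose nominal port rate meets the need."""
    for name, rate in GENERATIONS:
        if rate >= required_gbps:
            return name
    raise ValueError(f"no listed generation provides {required_gbps} Gb/s per port")


def host_ports_needed(nodes, ports_per_node=1, growth=0.0):
    """Host-facing ports needed now, plus a fractional margin for growth."""
    return math.ceil(nodes * ports_per_node * (1 + growth))


if __name__ == "__main__":
    # Hypothetical cluster: 48 nodes needing 150 Gb/s each, 25% growth headroom.
    print(lowest_sufficient_generation(150), host_ports_needed(48, growth=0.25))
```

For 48 nodes that each need 150 Gb/s, with 25% headroom for growth, the sketch suggests HDR and 60 host-facing ports.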

Understanding 1U and 64-Port Configurations

Another important factor is the switch's physical form factor and port configuration, for example 1U and 64-port models. "1U" refers to the form factor: the switch occupies one rack unit of height (1.75 inches) in a standard server rack. This compact size saves space in crowded data centers where many machines must fit close together, yet such switches still deliver high performance and can accommodate many ports.

A 64-port configuration describes how many ports the switch provides, which directly determines its connectivity options. With 64 ports, more server nodes or other devices can connect directly to a single switch. This is especially advantageous for large-scale deployments and high-performance computing environments that require extensive connectivity with low latency.
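Port count matters even more at scale, because fabric size grows quadratically or cubically with switch radix. Consider an idealized non-blocking fat tree built from identical k-port switches. A two-level leaf/spine design connects at most k^2/2 hosts, and the standard three-level construction connects at most k^3/4. The sketch below assumes every switch port is available to the fabric, with no ports reserved for management or storage.

```python
def max_hosts_two_level(radix):
    """Non-blocking leaf/spine: each leaf uses half its ports for hosts and half
    for uplinks; each spine port serves one leaf, so there are `radix` leaves
    with radix/2 hosts each."""
    if radix < 2 or radix % 2:
        raise ValueError("radix must be an even number >= 2")
    return radix * (radix // 2)


def max_hosts_three_level(radix):
    """Standard three-level fat tree built from identical k-port switches: k^3/4."""
    if radix < 2 or radix % 2:
        raise ValueError("radix must be an even number >= 2")
    return radix ** 3 // 4


if __name__ == "__main__":
    for k in (40, 64):
        print(f"{k}-port switches: {max_hosts_two_level(k)} hosts (2 levels), "
              f"{max_hosts_three_level(k)} hosts (3 levels)")
```

With 64-port switches, this gives 2,048 hosts in two levels and 65,536 in three. With 40-port switches, the figures are 800 and 16,000.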

Together, these configurations save space while providing the connectivity and performance that growing data centers demand. By balancing port capacity against form factor, organizations can expand their network infrastructure without sacrificing performance.

Considering Non-Blocking and Unmanaged Options

Non-blocking and unmanaged describe two different properties of an InfiniBand switch. Non-blocking refers to internal bandwidth, while unmanaged refers to the absence of on-board management features. Understanding the benefits and uses of each in different environments is crucial.

Non-Blocking Switches: A non-blocking switch has enough internal capacity for every port to send and receive at full line rate simultaneously, as long as no two inputs target the same output. Any input can therefore be connected to any output without loss of bandwidth. This is important for high-performance computing (HPC) and data-intensive applications that need a constant flow of data packets. By reducing bottlenecks, non-blocking switches improve overall network efficiency, making them ideal where high-speed data transfer and real-time processing are needed.

Unmanaged Switches: Unmanaged switches offer plug-and-play simplicity at lower cost, without configuration options. They are generally easier to deploy and maintain than managed switches, so they work well in smaller networks or less demanding environments where traffic management and monitoring are not critical. Because an unmanaged InfiniBand switch does not run its own subnet manager, the fabric still needs one running elsewhere, for example OpenSM on a host or a managed switch. Although unmanaged switches lack some advanced capabilities of managed switches, they offer reliable performance for small and medium-sized enterprises (SMEs) and for scenarios where network simplicity is the priority.

To choose, evaluate your network needs, budget, and tolerance for infrastructure complexity. Non-blocking switches are best for environments that require maximum performance with minimal delay, while unmanaged switches are an affordable, straightforward option for less demanding deployments. Because the two properties are independent, a single switch can be both non-blocking and unmanaged.
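Whether a multi-switch fabric behaves as non-blocking depends on more than each individual switch. It also depends on how leaf switch ports are split between hosts and uplinks. A common measure is the oversubscription ratio: host-facing bandwidth divided by uplink bandwidth. A ratio of 1:1 or lower means non-blocking. The port splits in this sketch are hypothetical examples.

```python
def oversubscription_ratio(host_ports, host_gbps, uplink_ports, uplink_gbps):
    """Host-facing bandwidth divided by uplink bandwidth for one leaf switch."""
    if uplink_ports <= 0:
        raise ValueError("a leaf switch needs at least one uplink")
    return (host_ports * host_gbps) / (uplink_ports * uplink_gbps)


def is_non_blocking(ratio):
    """A ratio of 1.0 or less means the uplinks can carry all host traffic at once."""
    return ratio <= 1.0


if __name__ == "__main__":
    # Hypothetical 40-port leaf switches, all ports running at 200 Gb/s.
    for hosts, uplinks in ((20, 20), (32, 8)):
        r = oversubscription_ratio(hosts, 200, uplinks, 200)
        print(f"{hosts} host ports / {uplinks} uplinks -> {r:g}:1, "
              f"non-blocking={is_non_blocking(r)}")
```

A 40-port leaf with 20 host ports and 20 uplinks at the same speed is 1:1 and therefore non-blocking. A leaf with 32 host ports and 8 uplinks is 4:1 oversubscribed, even if the switch itself is non-blocking internally.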

Frequently Asked Questions (FAQs)

Q: What is Mellanox InfiniBand and why is it important?

A: Mellanox InfiniBand is a fast interconnect technology designed for high-performance computing (HPC) and data centers. It provides the low latency and high bandwidth that applications with intensive data transfer and processing requirements need.

Q: What are the key features of Mellanox InfiniBand?

A: Key features of Mellanox InfiniBand include low latency, high bandwidth and throughput, scalability, quality of service (QoS), and support for advanced technologies such as RDMA and in-network computing.

Q: How does Mellanox InfiniBand achieve low latency and high bandwidth?

A: It combines a switched-fabric interconnect architecture, efficient resource use, and features such as RDMA, which lets one computer access another computer's memory directly without involving the remote CPU.

Q: What are the differences between SDR, DDR, QDR, FDR, EDR, HDR, and NDR in InfiniBand?

A: These abbreviations denote successive generations of InfiniBand link speeds: Single Data Rate (SDR), Double Data Rate (DDR), Quad Data Rate (QDR), Fourteen Data Rate (FDR), Enhanced Data Rate (EDR), High Data Rate (HDR), and Next Data Rate (NDR). Each generation offers a higher data rate, measured in gigabits per second (Gb/s).
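The difference between a generation's signaling rate and its usable data rate comes from line encoding. SDR, DDR, and QDR use 8b/10b encoding, while FDR and EDR use the more efficient 64b/66b. The sketch below computes both rates for a standard 4x (four-lane) port from per-lane signaling rates. HDR and NDR are omitted because their encoding and FEC overheads are harder to summarize in one line; their nominal 4x rates are 200 and 400 Gb/s.

```python
# Per-lane signaling rate in Gb/s and line code as (payload bits, coded bits).
# HDR and NDR are omitted; their nominal 4x rates are 200 and 400 Gb/s.
LANE_SIGNALING = {
    "SDR": (2.5, (8, 10)),
    "DDR": (5.0, (8, 10)),
    "QDR": (10.0, (8, 10)),
    "FDR": (14.0625, (64, 66)),
    "EDR": (25.78125, (64, 66)),
}


def link_rates_gbps(gen, lanes=4):
    """Return (signaling rate, usable data rate) in Gb/s for a link of `lanes` lanes."""
    lane_gbps, (payload_bits, coded_bits) = LANE_SIGNALING[gen]
    signaling = lanes * lane_gbps
    return signaling, signaling * payload_bits / coded_bits


if __name__ == "__main__":
    for gen in LANE_SIGNALING:
        signaling, data = link_rates_gbps(gen)
        print(f"{gen} 4x: {signaling:g} Gb/s signaling, {data:.2f} Gb/s data")
```

For example, QDR signals at 40 Gb/s but carries 32 Gb/s of data. EDR's 64b/66b encoding turns 103.125 Gb/s of signaling into 100 Gb/s of data.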

Q: What role does QoS play in Mellanox InfiniBand networks?

A: Quality of Service (QoS) prioritizes packets according to their importance, so demanding applications receive consistent, reliable network performance.

Q: How can Mellanox InfiniBand be used to improve data center performance?

A: Data center efficiency depends on accelerating applications and keeping servers busy, which requires interconnects with low latency and high bandwidth. Mellanox InfiniBand provides both.

Q: What does RDMA do, and how does it help Mellanox InfiniBand users?

A: RDMA (Remote Direct Memory Access) transfers data directly from one computer's memory to another's without involving the remote CPU or operating system. This reduces latency and increases throughput, which benefits applications that need high-speed data transfer.

Q: What role does PCIe x16 play in Mellanox InfiniBand?

A: PCIe x16 is the host interface that InfiniBand adapters use to connect to the server. More lanes (x16) provide more bandwidth between the adapter and the host, which the adapter needs to reach its full InfiniBand data rate. The PCIe generation matters as well, because PCIe 4.0 and 5.0 each double the per-lane transfer rate of the previous generation.
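A quick way to check whether a slot can keep up with an adapter is to compare PCIe bandwidth with the InfiniBand port rate. PCIe 3.0, 4.0, and 5.0 run at 8, 16, and 32 GT/s per lane, respectively, and all three use 128b/130b encoding. The sketch below computes an upper bound per direction that ignores packet-level overhead, so real throughput is somewhat lower. Under that simplification, it suggests that a single 200 Gb/s HDR port needs at least a PCIe 4.0 x16 link, and a 400 Gb/s NDR port needs PCIe 5.0 x16.

```python
# Per-lane transfer rates in GT/s; PCIe 3.0, 4.0 and 5.0 all use 128b/130b encoding.
PCIE_GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}


def pcie_raw_gbps(gen, lanes=16):
    """Upper bound on one-direction data rate after line encoding, in Gb/s.

    Ignores packet (TLP/DLLP) overhead, so real throughput is lower.
    """
    return PCIE_GT_PER_S[gen] * lanes * 128 / 130


if __name__ == "__main__":
    for gen in (3, 4, 5):
        print(f"PCIe {gen}.0 x16: ~{pcie_raw_gbps(gen):.0f} Gb/s per direction")
    for ib_name, ib_gbps in (("EDR", 100), ("HDR", 200), ("NDR", 400)):
        fits = [g for g in (3, 4, 5) if pcie_raw_gbps(g) >= ib_gbps]
        print(f"{ib_name} ({ib_gbps} Gb/s) needs at least PCIe {fits[0]}.0 x16")
```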

Q: How does Mellanox InfiniBand handle quality of service (QoS)?

A: Traffic is assigned to service levels, which are mapped to virtual lanes, each with its own priority and bandwidth allocation. This ensures that critical applications get enough resources to maintain optimal performance.

Q: What does in-network computing mean, and how does it relate to Mellanox InfiniBand?

A: In-network computing means processing data inside the network, for example in switches, rather than only at endpoint devices. Mellanox InfiniBand supports this capability through NVIDIA's SHARP technology, which performs collective operations such as reductions in the switches. This can greatly reduce data movement and improve overall system performance.
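The idea behind in-network reduction can be shown with a toy model of hierarchical aggregation. Each switch adds up the vectors arriving from below and forwards a single combined vector upward. As a result, the root switch receives one vector per child rather than one per host, which is what a naive gather-then-reduce at a single endpoint would require. This is a simplified illustration of the concept behind SHARP, not the SHARP API. The fabric layout and the host values are hypothetical.

```python
def reduce_in_network(node):
    """Sum host vectors the way an aggregation tree would.

    A node is ("host", [values...]) or ("switch", [child, ...]). Each switch
    adds up the vectors it receives and forwards one combined vector upward.
    """
    kind, payload = node
    if kind == "host":
        return list(payload)
    vectors = [reduce_in_network(child) for child in payload]
    return [sum(column) for column in zip(*vectors)]


def count_hosts(node):
    """Number of hosts below a node."""
    kind, payload = node
    return 1 if kind == "host" else sum(count_hosts(child) for child in payload)


# Hypothetical two-level fabric: one root switch above two leaf switches.
FABRIC = ("switch", [
    ("switch", [("host", [1, 2]), ("host", [3, 4])]),
    ("switch", [("host", [5, 6]), ("host", [7, 8])]),
])

if __name__ == "__main__":
    _, children = FABRIC
    print("reduced vector:", reduce_in_network(FABRIC))
    print("vectors reaching the root with in-network aggregation:", len(children))
    print("vectors reaching one endpoint with naive gather-then-reduce:", count_hosts(FABRIC))
```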
