Mellanox adapter cards sit at the leading edge of networking technology at a time when data transfer speed and connectivity take precedence. This guide analyzes the capabilities and applications of Mellanox adapter cards, concentrating on Ethernet and InfiniBand solutions. As businesses rely more heavily on these cards for high-performance computing, understanding their specifications, advantages, and deployment strategies becomes crucial. We will look at architecture, performance, and how Mellanox adapters integrate into various IT environments, so that readers can get the most out of their systems while improving network efficiency. Whether you are a network engineer, an IT consultant, or a technology enthusiast, this guide covers what you need to know to use Mellanox adapter technologies effectively.

What Are Mellanox Adapter Cards?

Understanding Mellanox Technologies

Mellanox Technologies, now part of NVIDIA, focuses on high-speed networking and connectivity solutions based on Ethernet and InfiniBand. The company is known for increasing data throughput while reducing latency in data centers, cloud environments, and high-performance computing applications. Its portfolio includes adapter cards, switches, and software that move data efficiently across varied infrastructures. These products use technologies such as RDMA (Remote Direct Memory Access) and intelligent hardware offloads, which improve application performance and integrate smoothly with other systems. Understanding these technologies is the first step toward taking full advantage of Mellanox adapters under modern networking demands.

The Role of Network Interface Cards

Network Interface Cards (NICs) link computers and other devices to a network. A NIC is the interface through which data packets are sent and received over a Local Area Network (LAN) or Wide Area Network (WAN). It converts the data a host sends into the electrical or optical signals used on the network medium, and back again on receipt, enabling devices to communicate.

Mellanox Technologies produces modern NICs that support high bandwidth, low-latency communication, and hardware acceleration for a variety of offload tasks. These capabilities improve network performance while reducing CPU overhead, which matters for the high-throughput applications found in cloud computing and big data analytics. NICs also support network virtualization: several virtual machines can share one set of physical network resources while remaining isolated from each other (for example through SR-IOV virtual functions), and SmartNIC features can take on additional virtualization work.

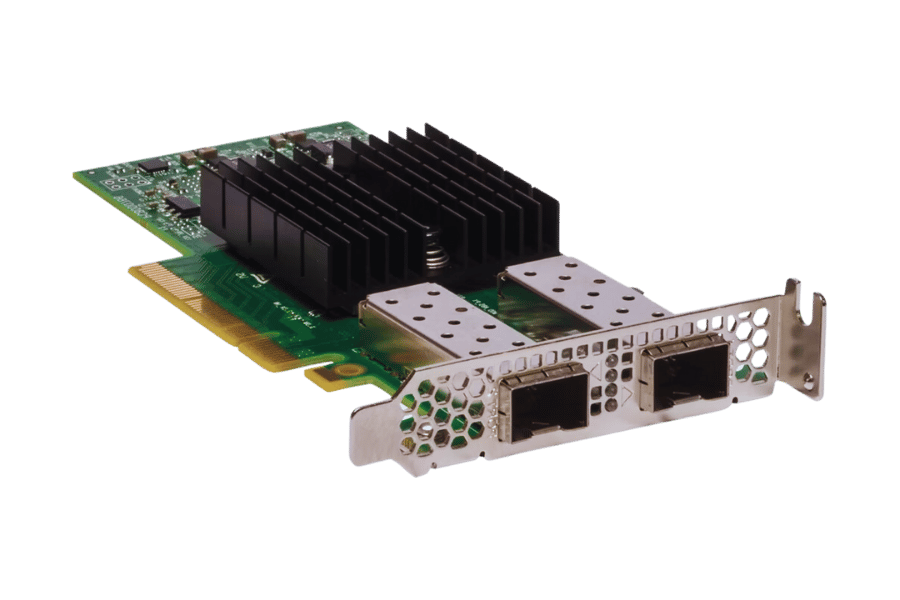

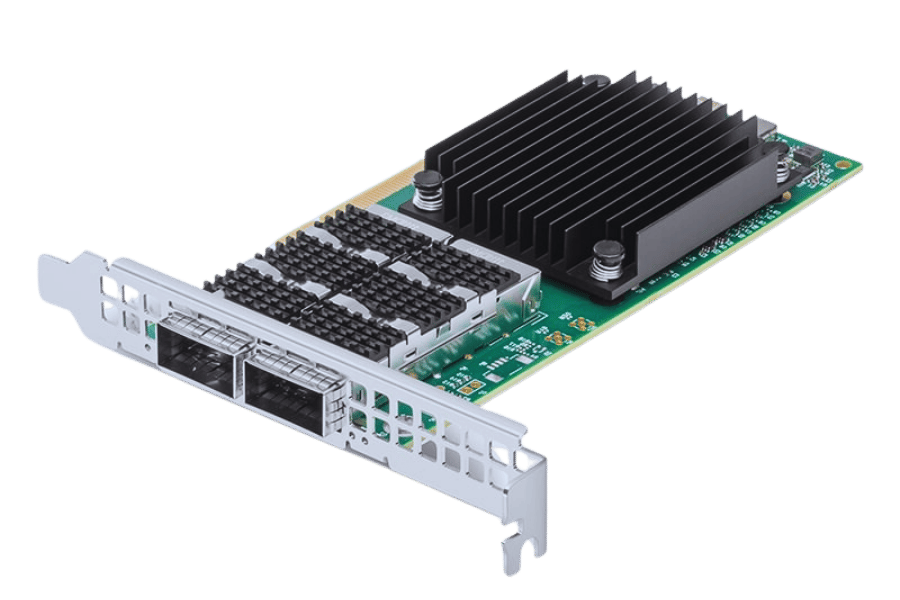

Types of Mellanox Adapter Cards

Mellanox provides a range of adapter cards, each designed for different networking needs and with unique features and capabilities. The main types are:

- Ethernet Adapter Cards: These cards provide high-speed Ethernet connectivity from 10GbE to 100GbE, and up to 200GbE on newer models such as ConnectX-6. They suit environments that require heavy data transfer and low-latency communication, such as data centers and enterprise networks. Advanced features such as hardware offloads and support for RoCE (RDMA over Converged Ethernet) make them well suited to storage and database applications.

- InfiniBand Adapter Cards: These cards are designed specifically for high-performance computing (HPC) and data-intensive workloads that need higher bandwidth at lower latency. InfiniBand solutions fit large-scale data environments such as scientific research, financial services, and machine learning. The architecture also supports a range of network topologies, which helps maintain high throughput as deployments grow.

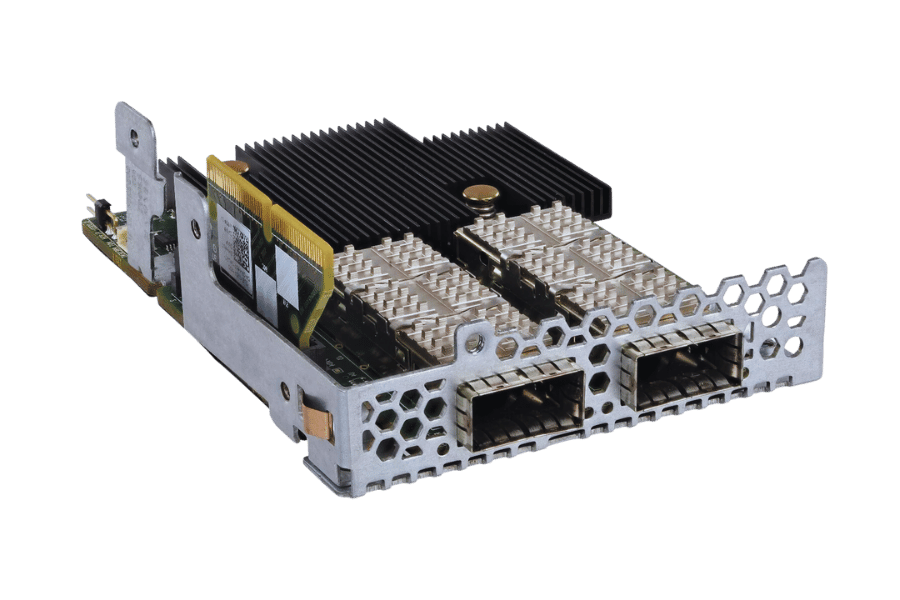

- SmartNICs (Smart Network Interface Cards): Mellanox SmartNICs combine traditional NIC functions with on-card processing, so network processing tasks can be offloaded from the CPU to hardware accelerators. This improves performance for specific workloads, especially in cloud environments where resource efficiency is key.

The right adapter card depends on the specific use case, workload requirements, and existing infrastructure; matching the card to these factors lets organizations achieve optimal performance for their networking needs.

How to Choose the Right Ethernet Adapter Card?

Evaluating Ethernet Connectivity and Throughput

Connectivity options and throughput are key criteria when choosing an Ethernet adapter. Connectivity refers to the Ethernet standards the adapter supports, for instance Fast Ethernet (100 Mb/s), Gigabit Ethernet (1 Gb/s), or faster standards. In high-demand environments, look for higher-bandwidth standards such as 10GbE, 25GbE, or 100GbE, which are critical for large data transfers and real-time processing applications.

Throughput, on the other hand, is the rate at which data is actually delivered across the network interface under load. It is usually lower than the nominal link speed and depends on factors such as adapter architecture, cable quality, and overall system configuration. High throughput keeps data moving efficiently and helps avoid bottlenecks and queuing delays. Offload capabilities and other advanced features can improve throughput, so the adapter should be selected based on specific data workload requirements and infrastructure needs.

To sum up, organizations must prioritize both connectivity options and throughput performance to achieve optimal network efficiency that is reliable and tailored to their operational demands.
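To make the difference between nominal link speed and throughput concrete, here is a minimal Python sketch that estimates the best-case TCP payload throughput of an Ethernet link from its line rate and MTU. It assumes untagged frames carrying IPv4 and TCP headers without options, and counts the standard per-frame overhead on the wire (preamble and start-of-frame delimiter, Ethernet header, frame check sequence, and minimum inter-frame gap). Retransmissions, acknowledgements, and host limits are ignored, so real throughput will be lower.

```python
# Per-frame Ethernet overhead on the wire, in bytes.
# Assumption: untagged frames (no 802.1Q VLAN tag).
PREAMBLE_SFD = 8      # 7-byte preamble + 1-byte start-of-frame delimiter
ETH_HEADER = 14       # destination MAC, source MAC, EtherType
FCS = 4               # frame check sequence
INTER_FRAME_GAP = 12  # minimum idle time between frames, expressed in bytes

# Assumption: IPv4 and TCP headers without options (e.g. no TCP timestamps).
IPV4_HEADER = 20
TCP_HEADER = 20


def tcp_goodput_gbps(line_rate_gbps: float, mtu: int = 1500) -> float:
    """Best-case TCP payload throughput for a link at the given IP MTU."""
    payload = mtu - IPV4_HEADER - TCP_HEADER
    bytes_on_wire = mtu + ETH_HEADER + FCS + PREAMBLE_SFD + INTER_FRAME_GAP
    return line_rate_gbps * payload / bytes_on_wire


if __name__ == "__main__":
    for rate in (10, 25, 100):
        for mtu in (1500, 9000):
            print(f"{rate:>3} GbE, MTU {mtu}: {tcp_goodput_gbps(rate, mtu):.2f} Gb/s")
```

Larger (jumbo) frames spread the fixed per-frame overhead over more payload, which is one reason high-throughput deployments often raise the MTU.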

Considerations for 100GbE and 25GbE Dual-Port Options

When choosing between 100GbE and 25GbE dual-port Ethernet adapter cards, consider the following factors:

- Bandwidth Requirements: Applications differ in their bandwidth demands. 100GbE suits data-hungry applications, while 25GbE is often sufficient for less demanding ones.

- Redundancy and Load Balancing: In a dual-port configuration, if one port fails the second can take over, and during normal operation traffic can be distributed across both ports.

- Compatibility and Integration: Before buying, check that the adapter works with the existing network infrastructure, such as the switches and routers already in use. Also confirm support for features such as RDMA (Remote Direct Memory Access), which can greatly improve performance within the data center.

- Cost Considerations: Evaluate the total cost of ownership, including the initial purchase price and recurring operating expenses, so that the choice meets strategic goals without compromising service quality.

Careful consideration of these factors underpins a successful deployment of dual-port 100GbE and 25GbE solutions in enterprise networks.
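The bandwidth and redundancy factors can be combined into a simple sizing rule: if a dual-port card must keep carrying the full load after one port fails, a single port has to handle peak demand on its own. The helper below is a hypothetical sketch of that rule; the function name, the candidate speeds, and the 70% headroom factor are illustrative assumptions, not vendor guidance.

```python
from typing import Optional, Sequence


def smallest_port_speed(
    peak_demand_gbps: float,
    candidate_speeds_gbps: Sequence[int] = (25, 100),
    headroom: float = 0.7,  # assumption: run ports at no more than 70% of capacity
    survive_port_failure: bool = True,
) -> Optional[int]:
    """Return the slowest per-port speed that fits the demand, or None.

    With survive_port_failure=True a single port must carry the whole load;
    otherwise the load is assumed to be spread evenly over both ports.
    """
    ports_in_service = 1 if survive_port_failure else 2
    for speed in sorted(candidate_speeds_gbps):
        if speed * ports_in_service * headroom >= peak_demand_gbps:
            return speed
    return None  # no candidate is fast enough; consider more cards or links


if __name__ == "__main__":
    # Hypothetical workload: 30 Gb/s of peak traffic.
    print(smallest_port_speed(30))                              # 100
    print(smallest_port_speed(30, survive_port_failure=False))  # 25
```

Requiring one port to carry the whole load is the conservative choice; if reduced capacity after a port failure is acceptable, sizing for both ports (survive_port_failure=False) allows the cheaper 25GbE option in this example.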

Compatibility with PCIe and Other Systems

When checking the compatibility of dual-port 100GbE and 25GbE Ethernet adapter cards with PCIe and other system components, keep the following considerations in mind.

- PCIe Version and Lanes: An Ethernet adapter's performance depends on the PCIe version supported by the host system. Newer adapters generally use PCIe 3.0 or PCIe 4.0, and each generation roughly doubles the usable per-lane bandwidth of the previous one. The number of lanes available in the slot (for example x8 or x16) also limits the data transfer rate, so the slot must provide at least as many lanes as the card needs to use its full capabilities.

- Operating System Compatibility: Check that your operating system supports the adapter. Manufacturer websites usually list supported platforms; popular operating systems such as Windows and major Linux distributions generally work well as long as the appropriate drivers are installed.

- Server/Workstation Specifications: To prevent bottlenecks, make sure the server or workstation meets the adapter's hardware requirements, including CPU and memory. Also check that the chassis has enough physical room for the card and adequate cooling, since restricted airflow can cause components to overheat over time.

Together, these compatibility checks help ensure that dual-port Ethernet adapters integrate smoothly into new or existing infrastructure.
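Because both ports of a dual-port card share one PCIe link, it is worth checking that the slot can carry the card's aggregate line rate. The sketch below uses the per-lane transfer rates and line encodings of each PCIe generation; it deliberately ignores transaction- and link-layer protocol overhead, so treat its results as upper bounds. The function names are illustrative.

```python
# Per-lane signalling rate (GT/s) and line-encoding efficiency by PCIe generation.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),     # 8b/10b encoding
    2: (5.0, 8 / 10),     # 8b/10b encoding
    3: (8.0, 128 / 130),  # 128b/130b encoding
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def pcie_bandwidth_gbps(gen: int, lanes: int) -> float:
    """Approximate one-direction PCIe bandwidth in Gb/s after line encoding.

    Protocol overhead (packet headers, flow control) is ignored, so the
    throughput actually achievable is somewhat lower than this figure.
    """
    rate_gt_s, efficiency = PCIE_GENERATIONS[gen]
    return rate_gt_s * efficiency * lanes


def slot_can_feed(gen: int, lanes: int, port_speed_gbps: float, ports: int) -> bool:
    """True if the slot covers all ports running at line rate simultaneously."""
    return pcie_bandwidth_gbps(gen, lanes) >= port_speed_gbps * ports


if __name__ == "__main__":
    print(f"PCIe 3.0 x16: {pcie_bandwidth_gbps(3, 16):.1f} Gb/s")  # 126.0 Gb/s
    print(f"PCIe 4.0 x16: {pcie_bandwidth_gbps(4, 16):.1f} Gb/s")  # 252.1 Gb/s
    print(slot_can_feed(3, 16, 100, 2))  # False: dual 100GbE exceeds PCIe 3.0 x16
    print(slot_can_feed(4, 16, 100, 2))  # True
    print(slot_can_feed(3, 8, 25, 2))    # True: dual 25GbE fits in PCIe 3.0 x8
```

Comparing against the sum of all ports assumes both run at full speed at once; for an active/standby setup, a single port's rate is the relevant figure.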

Why is InfiniBand Important in Data Centers?

Exploring InfiniBand and Ethernet Connectivity

InfiniBand is an important interconnect technology in data centers, offering benefits that traditional Ethernet infrastructures do not. First, it provides high throughput and low latency, which makes it well suited to fast data transfer applications such as high-performance computing (HPC) and real-time analytics. InfiniBand's architecture also supports Remote Direct Memory Access (RDMA) natively. RDMA lets one computer read from or write to another computer's memory directly, without involving the remote CPU or operating system in the data path, which increases efficiency.

Ethernet, on the other hand, remains the most common choice because it is ubiquitous in general networking environments and makes it easy to integrate different types of systems. Ethernet has also evolved, adding 25G and 100G technologies to overcome bandwidth constraints in large-scale data centers. Many organizations have adopted hybrid approaches that combine InfiniBand and Ethernet, using each technology where it performs best.

In conclusion, the choice between InfiniBand and Ethernet should depend on the specific use case, the existing infrastructure, and the performance levels required.

Benefits of InfiniBand for High-Performance Computing

InfiniBand enhances high-performance computing (HPC) environments in several ways. Here are a few of the significant advantages it offers:

- Higher bandwidth and lower latency: With adapters such as Mellanox ConnectX-6 VPI cards, InfiniBand links run at up to 200 Gbps (HDR), enabling the fast data transfer that HPC workloads depend on. Its low-latency architecture benefits applications that rely on rapid communication between nodes, such as simulations and data analysis.

- Efficient data transfer using RDMA: A major feature of InfiniBand is its support for Remote Direct Memory Access (RDMA). RDMA bypasses the operating system, allowing direct memory-to-memory transfers between computers. The result is lower CPU overhead, higher throughput, and better overall system efficiency, which makes it ideal for compute-intensive applications.

- Scalability and Network Topology: InfiniBand supports scalable topologies such as fat-tree and dragonfly for large compute clusters. These topologies allow clusters to grow to thousands of nodes while limiting performance degradation as HPC environments expand.

InfiniBand’s features make it an attractive option for organizations looking to improve their high-performance computing infrastructure’s capability.
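To illustrate how a fat tree lets a fabric grow to thousands of nodes, the sketch below computes the size of a non-blocking (full bisection bandwidth) fat tree built from identical switches, where every switch below the top level splits its ports evenly between down-links and up-links. The 40-port switch radix in the example is an assumption chosen for illustration; substitute the port count of the switches actually in use. Real fabrics are often built with some oversubscription, which changes these numbers.

```python
def fat_tree_hosts(radix: int, levels: int) -> int:
    """Maximum end nodes in a non-blocking fat tree: 2 * (radix / 2) ** levels."""
    if radix % 2:
        raise ValueError("switch radix must be even")
    return 2 * (radix // 2) ** levels


def fat_tree_switches(radix: int, levels: int) -> int:
    """Switches needed for the same topology: (2L - 1) * (radix / 2) ** (L - 1)."""
    if radix % 2:
        raise ValueError("switch radix must be even")
    return (2 * levels - 1) * (radix // 2) ** (levels - 1)


if __name__ == "__main__":
    radix = 40  # assumption: hypothetical 40-port switches
    for levels in (2, 3):
        print(levels, fat_tree_hosts(radix, levels), fat_tree_switches(radix, levels))
    # 2 800 60
    # 3 16000 2000
```

Adding a third switch tier multiplies the node count by radix/2, which is why fat trees scale from hundreds to tens of thousands of nodes without reducing per-node bandwidth.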

Utilizing EDR IB for Maximum Data Rate

EDR InfiniBand can significantly boost data throughput and efficiency, making it well suited to high-performance computing workloads. At 100 Gbps per port, it offers a substantial improvement over its predecessor, FDR (56 Gbps), to meet the growing data requirements of modern workloads. To get the most out of EDR InfiniBand, organizations should consider:

- Network Configuration Optimization: Every network infrastructure component in the data path, including switches and cables, must support and be configured for EDR rates. A single component running at a lower rate becomes a bottleneck for the whole path.

- Using RDMA Efficiently: Using RDMA well can significantly reduce latency and CPU load. Applications should be optimized to take full advantage of it, and the middleware and data protocols in use (for example, MPI libraries in HPC clusters) must support RDMA over EDR InfiniBand.

- Load Balancing and Resource Management: Implementing load balancing across nodes can improve resource utilization. This ensures an equitable distribution of workloads, preventing network congestion while maximizing overall system throughput.

Focusing on these strategies allows organizations to realize the full benefits of EDR InfiniBand, achieving maximum data rates and better performance in HPC environments.
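The arithmetic behind the 100 Gbps figure, and the reason a single slower component limits a whole path, can be sketched as follows. The per-lane signalling rates and encodings are those of the QDR, FDR, and EDR InfiniBand generations with the common 4x port width; the path model is a deliberate simplification (an assumption) that ignores congestion and protocol overhead.

```python
# InfiniBand per-lane signalling rate (Gb/s) and encoding efficiency.
IB_GENERATIONS = {
    "QDR": (10.0, 8 / 10),      # 8b/10b encoding
    "FDR": (14.0625, 64 / 66),  # 64b/66b encoding
    "EDR": (25.78125, 64 / 66),
}


def ib_data_rate_gbps(generation: str, lanes: int = 4) -> float:
    """Effective data rate of an InfiniBand link (4x is the common port width)."""
    signalling, efficiency = IB_GENERATIONS[generation]
    return signalling * efficiency * lanes


def path_rate_gbps(hop_rates_gbps):
    """Simplified model: the end-to-end rate is capped by the slowest hop."""
    return min(hop_rates_gbps)


if __name__ == "__main__":
    for gen in IB_GENERATIONS:
        print(f"{gen} 4x: {ib_data_rate_gbps(gen):.2f} Gb/s")
    # QDR 4x: 32.00 Gb/s, FDR 4x: 54.55 Gb/s, EDR 4x: 100.00 Gb/s

    # One FDR link in an otherwise EDR path caps the whole path at FDR speed.
    edr, fdr = ib_data_rate_gbps("EDR"), ib_data_rate_gbps("FDR")
    print(f"{path_rate_gbps([edr, fdr, edr]):.2f} Gb/s")  # 54.55 Gb/s
```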

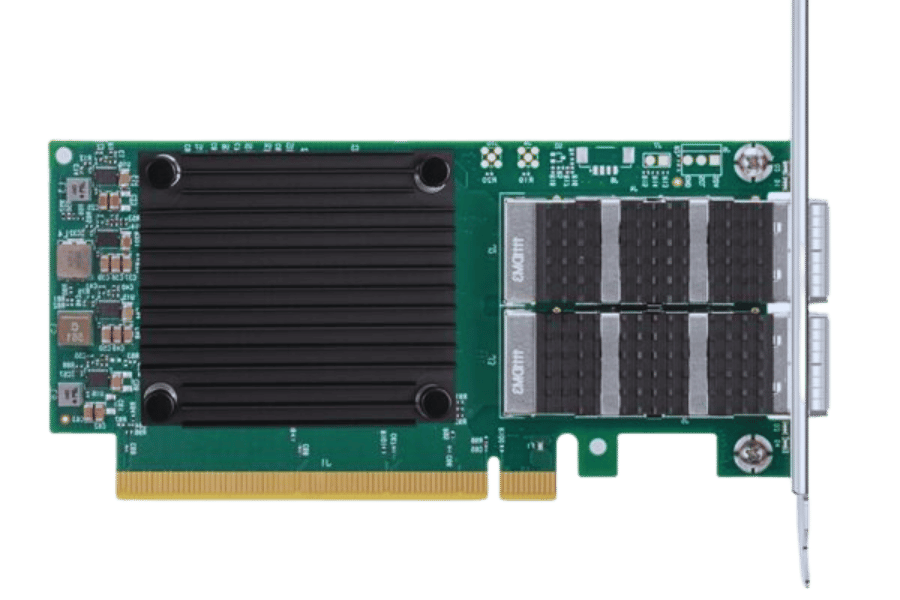

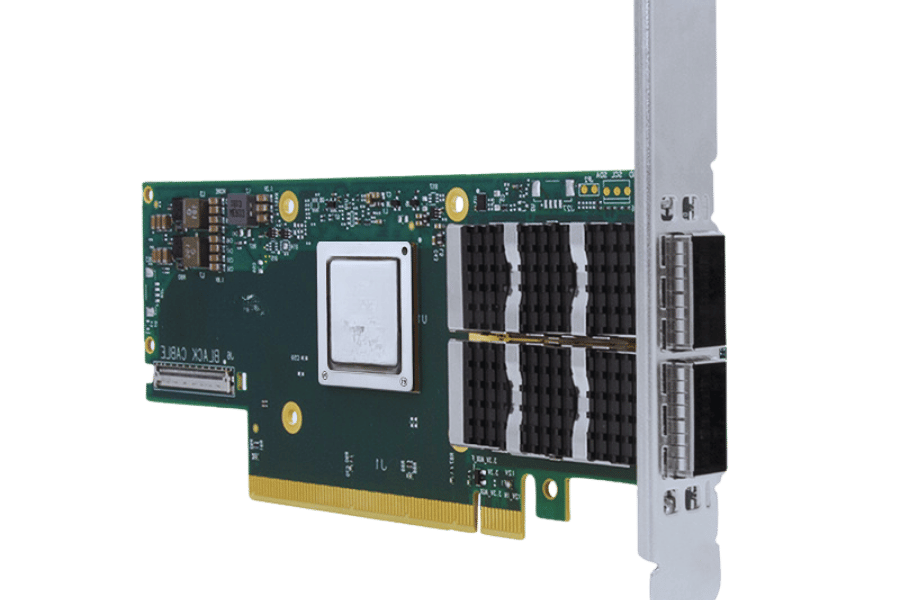

What are the Key Features of ConnectX-6 Adapter Cards?

Performance Metrics of Mellanox ConnectX-6

Mellanox ConnectX-6 cards are designed for high-speed networks. They support data rates of up to 200 Gbps, twice that of the previous generation. Here are some of their key features:

- Low Latency and High Bandwidth: ConnectX-6 provides ultra-low latency of less than 0.5 microseconds, making it ideal for delay-sensitive applications.

- RDMA Capabilities: The adapter supports RDMA natively over InfiniBand and over Ethernet through RoCE (RDMA over Converged Ethernet), enabling direct memory access while reducing CPU overhead.

- Multi-Protocol Support: ConnectX-6 VPI models work with both Ethernet and InfiniBand, making them very flexible across deployment scenarios.

- Offload Capabilities: The adapter offers many offload features, such as NVMe over Fabrics (NVMe-oF) support and hardware-based encryption, which improve system efficiency and security.

- Scalability: The card integrates easily into existing infrastructures and can accommodate changing workloads, and its ports can also run at lower Ethernet speeds when connected to older equipment.

With these performance metrics, organizations can optimize their networking solutions for current or future demands of high-performance computing.

ConnectX-6 VPI and its Versatility

The ConnectX-6 VPI adapter card works with both Ethernet and InfiniBand. This dual functionality lets organizations provide high-performance connectivity while accommodating different types of networks. The card also includes advanced features such as adaptive routing and dynamic load balancing, which optimize data transmission paths for better resource utilization. For cloud data centers and artificial intelligence workloads, ConnectX-6 VPI delivers the high bandwidth and low latency these demanding applications require. Its compatibility with existing infrastructure makes upgrades less disruptive and leaves room to adopt new technologies. This makes it a strong choice for modern environments where large volumes of data move between systems and low latency is critical, such as real-time big data analytics.

Important ConnectX-6 Specifications

- Form Factor and Host Interface: The card is a PCIe adapter compatible with PCIe Gen 3.0 and Gen 4.0, so it can be installed in many types of servers.

- Data Rate: A maximum throughput of 200 Gbps allows data to be sent and received at very high speeds.

- Memory: Up to 16 GB of onboard memory is cited for buffering large data sets in intensive applications; confirm this figure against the datasheet of the specific model, as memory configurations vary between products.

- Protocols Supported: The device supports Ethernet (50G, 100G, 200G) and InfiniBand (FDR, EDR, HDR), giving flexibility in network design.

- Security Features: Hardware-based encryption helps protect sensitive data, which is especially important for financial records or personal health information, where privacy concerns are paramount.

- Latency: Low-latency operation supports the real-time processing required by high-performance workloads, such as AI algorithms running on GPUs.

- Power Consumption: Consider the card's power draw and its effect on cooling, particularly when many cards are installed in dense blade or rack servers, so that performance can be maintained during peak usage without exceeding the power and cooling budget.

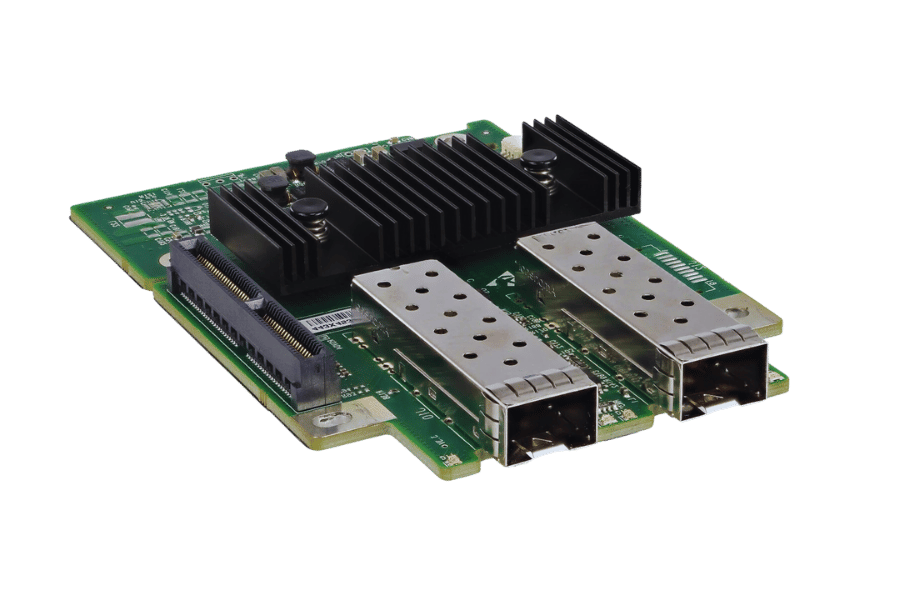

How to Optimize Network Utilization with SmartNICs?

Introduction to Smart Network Interface Cards

Smart Network Interface Cards (SmartNICs) are sophisticated network adapters that integrate computing resources directly on the network interface. This allows various networking tasks to be offloaded from the host CPU, improving performance and reducing latency. SmartNICs can handle packet processing, encryption, and storage protocols independently of the host, which leads to better resource usage. Organizations can use these features to maximize network efficiency, streamline data movement, and improve overall infrastructure performance. SmartNICs are also programmable, so network functions can be tailored to application requirements and workload demands, making them a flexible answer to changing networking needs.

Leveraging the Benefits of Dual-Port SmartNICs

A dual-port SmartNIC offers several benefits for network efficiency and reliability. Using both ports for load balancing increases throughput, and using them for redundancy increases fault tolerance. This is important in high-availability environments, where traffic must be spread across both ports to avoid bottlenecks and service must continue if one port fails.

Having two ports also allows network traffic to be organized more effectively, improving performance and security. For instance, management traffic can be separated from data traffic by assigning each to its own port, which ensures efficient use of resources and simplifies IT infrastructure. Overall, deploying dual-port SmartNICs helps build more resilient networks that can meet the growing demands of data-intensive applications.
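The fault-tolerance benefit of a second port can be quantified with a simple availability model. The sketch assumes the two ports fail independently, which is optimistic: both ports of a dual-port card share the same card, slot, and often the same upstream switch, so the real gain is smaller unless those are redundant too. The 0.99 per-port availability in the example is a hypothetical input.

```python
def dual_port_availability(port_availability: float) -> float:
    """Probability that at least one of two ports is up.

    Assumption: the ports fail independently. Shared failure points such as
    the card itself, the PCIe slot, or a single upstream switch violate this.
    """
    if not 0.0 <= port_availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return 1.0 - (1.0 - port_availability) ** 2


if __name__ == "__main__":
    single = 0.99  # hypothetical per-port availability
    print(f"one port: {single:.4%}, two ports: {dual_port_availability(single):.4%}")
```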

Achieving Efficiency with RDMA and RoCE

Remote Direct Memory Access (RDMA) allows one computer to access another computer's memory directly, without involving the remote CPU or operating system in the data transfer. This reduces latency and increases efficiency: applications transfer data directly over the network, avoiding intermediate copies and significantly optimizing resource use.

RDMA over Converged Ethernet (RoCE) brings RDMA to Ethernet networks, allowing data centers to use their existing infrastructure while keeping the low latency and high throughput associated with RDMA. RoCE deployments generally require a lossless or congestion-managed Ethernet fabric, typically configured with Priority Flow Control (PFC) and Explicit Congestion Notification (ECN), because packet loss severely degrades RDMA performance in high-performance computing tasks. Combined, these technologies can deliver substantial improvements for data-intensive applications: lower latency, higher bandwidth, and better overall system efficiency.

What Should You Look for When Installing a Mellanox Adapter Card?

Step-by-Step Installation Process

- Preparation: Gather all the required tools and parts. Check if the Mellanox adapter is compatible with the server’s hardware.

- Power Down: Completely shut down the server and unplug it from power to avoid any electrical hazards.

- Open Case: Following the manufacturer's instructions, remove the server case cover to access the motherboard. If the card ships with more than one bracket, fit the one (tall or low-profile) that matches the chassis.

- Locate PCIe Slot: Find an appropriate PCIe slot for a Mellanox adapter card. Ensure that it is compatible and unobstructed.

- Insert Adapter Card: Carefully align the adapter card with the PCIe slot, then press it firmly into place until it is securely seated.

- Secure Card: Fasten the card's bracket to the server chassis with a screw or retaining clip so that the card cannot move.

- Reconnect Cables: Reattach any cables you disconnected, and connect the network cables or transceivers to the adapter's ports.

- Close Case: Replace the server case cover and secure it according to the manufacturer's guidelines.

- Power On: Reconnect the power supply and switch the server on.

- Install Driver(s): Once the system has booted, install the appropriate driver for the Mellanox adapter from the manufacturer's website.

- Adjust Configuration Settings: Use the manufacturer's software tools to adjust configuration settings to your operational requirements.

- Run Tests: Confirm that the adapter works properly and that network performance meets the expected benchmarks.
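After installing the driver on a Linux host, one quick check is to see which kernel driver is bound to the new interface. The sketch below reads the standard sysfs symlink /sys/class/net/<interface>/device/driver; for ConnectX-4 and later adapters the in-kernel driver is typically mlx5_core. The interface name ens1f0 in the example is hypothetical; list /sys/class/net to find the real one.

```python
import os
from typing import Optional


def bound_driver(iface: str, sysfs_net: str = "/sys/class/net") -> Optional[str]:
    """Return the name of the kernel driver bound to a network interface.

    Returns None if the interface does not exist or has no backing device
    (virtual interfaces such as loopback or bridges have no device/driver link).
    """
    link = os.path.join(sysfs_net, iface, "device", "driver")
    if not os.path.islink(link):
        return None
    # The symlink resolves to .../drivers/<driver name>.
    return os.path.basename(os.path.realpath(link))


if __name__ == "__main__":
    iface = "ens1f0"  # hypothetical interface name
    driver = bound_driver(iface)
    if driver is None:
        print(f"{iface}: no driver bound (or interface not found)")
    else:
        print(f"{iface}: bound to {driver}")
```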

Ensuring Compatibility with PCIe Slots

When placing an adapter card into PCIe slots, it is essential to check the following for compatibility:

- Slot Type: Check that the PCIe slot width (for example x1, x4, x8, or x16) matches what the adapter card requires. Each card, such as a Mellanox ConnectX-6 VPI, specifies the slot width it needs (for example x16), which determines its available bandwidth and performance. Note that some slots are physically x16 but electrically wired for fewer lanes.

- Motherboard Support: Review the server motherboard specifications to confirm support for the desired PCIe version, for example PCIe 3.0 or 4.0. PCIe is backward compatible, so a PCIe 4.0 card works in a PCIe 3.0 slot, but the link then runs at PCIe 3.0 speed, which may limit the card's performance.

- Physical Space: Make sure there is enough space around the PCIe slot inside the server case so that the card does not block other components or obstruct airflow.

- Power Requirements: Find out how much power the new card requires and make sure the server's power supply can handle the additional load, since some high-performance cards draw considerably more power than others.

Addressing these factors ensures seamless integration of your new add-in-board into your data center environment, thus maximizing reliability and performance.
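A card seated in a physically x16 slot can still train at a lower width or speed, for example because the slot is electrically x8 or the card is not fully seated. On Linux, the negotiated and maximum link parameters are exposed in sysfs as current_link_speed, current_link_width, max_link_speed, and max_link_width under the PCI device directory. The sketch below reads them through /sys/class/net/<interface>/device. The interface name is hypothetical, and because the speed string format varies between kernel versions (for example "8.0 GT/s PCIe" or "8 GT/s"), the parser relies only on the leading number.

```python
import os
from typing import Dict, Optional, Tuple

LinkInfo = Tuple[Optional[float], Optional[int]]  # (speed in GT/s, lane count)


def _read(path: str) -> Optional[str]:
    """Return the stripped contents of a sysfs file, or None if unreadable."""
    try:
        with open(path) as f:
            return f.read().strip()
    except OSError:
        return None


def _parse_speed(text: Optional[str]) -> Optional[float]:
    """Parse '16.0 GT/s PCIe' or '8 GT/s' into GT/s; None for 'Unknown'."""
    if not text:
        return None
    try:
        return float(text.split()[0])
    except ValueError:
        return None


def _parse_width(text: Optional[str]) -> Optional[int]:
    return int(text) if text and text.isdigit() else None


def pcie_link_status(iface: str, sysfs_net: str = "/sys/class/net") -> Dict[str, LinkInfo]:
    """Read negotiated ('current') and maximum PCIe link parameters for a NIC."""
    device_dir = os.path.join(sysfs_net, iface, "device")
    status = {}
    for kind in ("current", "max"):
        speed = _parse_speed(_read(os.path.join(device_dir, f"{kind}_link_speed")))
        width = _parse_width(_read(os.path.join(device_dir, f"{kind}_link_width")))
        status[kind] = (speed, width)
    return status


def link_degraded(status: Dict[str, LinkInfo]) -> Optional[bool]:
    """True if the link trained below the device's maximum; None if unknown."""
    (cur_speed, cur_width), (max_speed, max_width) = status["current"], status["max"]
    if None in (cur_speed, cur_width, max_speed, max_width):
        return None
    return cur_speed < max_speed or cur_width < max_width


if __name__ == "__main__":
    iface = "ens1f0"  # hypothetical interface name; see /sys/class/net
    status = pcie_link_status(iface)
    print(iface, status, "degraded:", link_degraded(status))
```

A lower current speed is not always a fault: if the slot itself only supports an older PCIe generation, the link will legitimately train at that generation's speed.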

Common Troubleshooting Tips

When you’re having trouble with an adapter card, try these troubleshooting steps:

- Check Physical Connections: Make sure the adapter card is firmly seated in the PCIe slot and not loose. A loose connection can cause card recognition problems or performance issues.

- Update Drivers: Check whether you have installed the latest drivers for your adapter card. Problems with functionality can arise from outdated or incompatible drivers.

- Inspect System Logs: Any error messages related to the adapter should be checked in system logs. These logs often indicate operational abnormalities.

- Test with Different Slots: If there is no recognition of the adapter card, install it into another PCIe slot to eliminate potential issues specific to one slot.

- Examine Power Supply: Confirm that the power supply unit (PSU) works properly and delivers sufficient power, especially if the card or other installed components have significant power requirements.

- Conduct a Benchmark Test: Once the network interface controller (NIC) is working, run a benchmark to confirm that it meets expected performance metrics. This is particularly useful when integrating an NVIDIA SmartNIC, to assess the performance gains for critical applications that need high throughput, for example between virtual machines (VMs) hosted on the same physical server.

Users can efficiently isolate and resolve issues related to PCIe adapter card installations by following these troubleshooting tips.
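While a benchmark runs, a simple way to observe the rate an interface actually achieves on Linux is to sample its byte counters twice. The sketch below reads /sys/class/net/<interface>/statistics/rx_bytes and tx_bytes; it does not generate traffic itself, so run it alongside a load generator such as iperf3 or the application under test. The counters report bytes as accounted by the driver, so they will not exactly match line-rate figures, and traffic that bypasses the kernel, such as RDMA, may not be reflected in them depending on the driver.

```python
import os
import time


def read_counter(iface: str, name: str, sysfs_net: str = "/sys/class/net") -> int:
    """Read an interface statistics counter such as rx_bytes or tx_bytes."""
    with open(os.path.join(sysfs_net, iface, "statistics", name)) as f:
        return int(f.read())


def rate_gbps(bytes_before: int, bytes_after: int, seconds: float) -> float:
    """Convert a byte-counter delta over an interval into Gb/s."""
    if seconds <= 0:
        raise ValueError("interval must be positive")
    return (bytes_after - bytes_before) * 8 / seconds / 1e9


def measure(iface: str, interval: float = 1.0, sysfs_net: str = "/sys/class/net") -> dict:
    """Sample rx/tx byte counters twice and return the observed rates in Gb/s."""
    names = ("rx_bytes", "tx_bytes")
    start = {n: read_counter(iface, n, sysfs_net) for n in names}
    t0 = time.monotonic()
    time.sleep(interval)
    end = {n: read_counter(iface, n, sysfs_net) for n in names}
    elapsed = time.monotonic() - t0
    return {n: rate_gbps(start[n], end[n], elapsed) for n in names}


if __name__ == "__main__":
    iface = "ens1f0"  # hypothetical interface name
    print(measure(iface))
```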


Frequently Asked Questions (FAQs)

Q: What are the key characteristics of Mellanox ConnectX-6 Dx adapter cards?

A: ConnectX-6 Dx adapter cards are Ethernet SmartNICs. The models referred to here have a dual-port network interface with QSFP56 ports and a PCIe 4.0 host interface. They are designed for data centers and high-performance computing environments and offer advanced networking capabilities such as 100GbE support and low-latency connectivity.

Q: How do Mellanox network cards perform compared to other brands?

A: The Mellanox ConnectX series is known for strong performance, particularly low latency and high throughput. In demanding environments, these cards are often chosen for their low CPU utilization, network efficiency, and scalable architecture, though how they compare with other brands depends on the workload.

Q: What distinguishes a ConnectX-5 EN NIC from a ConnectX-5 VPI adapter card?

A: The EN NIC supports Ethernet only, while the VPI adapter is more flexible because it supports both Ethernet and InfiniBand. Both support high-speed networking; ConnectX-5 EN, for example, is available in 25GbE dual-port SFP28 configurations.

Q: Can I use a QSFP28 transceiver with my Mellanox Ethernet adapters?

A: Yes. QSFP28 transceivers work with Mellanox Ethernet adapters that have QSFP28 ports, such as 100GbE dual-port QSFP28 models, providing fast and reliable connections. Check that the specific transceiver is supported by your adapter model.

Q: What benefits does PCIe 4.0 bring to Mellanox networking devices?

A: PCIe 4.0 doubles the per-lane transfer rate of PCIe 3.0 (16 GT/s versus 8 GT/s). A ConnectX-6 Dx card running on PCIe 4.0 therefore has more host bandwidth available, which helps it sustain high aggregate throughput, such as two 100GbE ports at full rate, and reduces the risk of the PCIe link becoming a bottleneck when large volumes of data are processed.

Q: How do Mellanox network adapters enhance the scalability and efficiency of a network?

A: Mellanox network adapters such as the ConnectX series offer low latency, high throughput, and efficient CPU utilization. These qualities enable high-performance data transfer and reduce bottlenecks, which is critical for growing network infrastructures and improves both scalability and efficiency.

Q: Is there anything special to consider when using Mellanox smart NICs in a network?

A: Dual-port SmartNICs such as those made by Mellanox include built-in processing capabilities that offload tasks from the CPU, improving overall network performance. To take full advantage of these features, ensure compatibility with the other components in the system and optimize its configuration.

Q: What type of cables work with Mellanox network adapters?

A: Mellanox adapters support cables and modules in form factors such as QSFP28 and QSFP56, including passive copper direct-attach cables (twinax), active optical cables, and optical transceivers. Twinax cables are cost-effective for short distances, typically within a rack, while still performing well at high speeds; longer runs use active optical cables or optical transceivers with fiber. QSFP56 supports higher per-port speeds (up to 200 Gb/s) than QSFP28 (up to 100 Gb/s).

Q: About Mellanox adapter cards, what does “dual-port” mean?

A: “Dual-port” means the card has two network ports, which increases connectivity options and redundancy. The ConnectX-6 Dx, for instance, is available with two 100GbE ports, allowing traffic to fail over from one port to the other and load to be shared between them, which a single-port card cannot do.

Q: How does Mellanox Technologies use different protocols to improve networking performance?

A: Mellanox provides high-performance networking solutions for both InfiniBand and Ethernet. With either protocol, low latency combined with high throughput improves performance and reliability across the infrastructure, so systems can run smoothly without communication delays becoming a bottleneck.