The need for better high-speed networking is pressing today, as data-driven applications and cloud computing continue to gain popularity. Mellanox 100Gb Ethernet technology is one of the most advanced systems in this space, delivering high performance with low latency, which is critical for modern enterprises. In this blog post, we focus on the most important aspects of the 100G Ethernet solutions from Mellanox Technologies: their design, their use, and the performance gains they make possible. With these features in view, readers will be able to see how Mellanox technologies meet the high demands of massive data transmission and the large connectivity requirements of the future.

What Are the Key Features of Mellanox 100Gb Adapters?

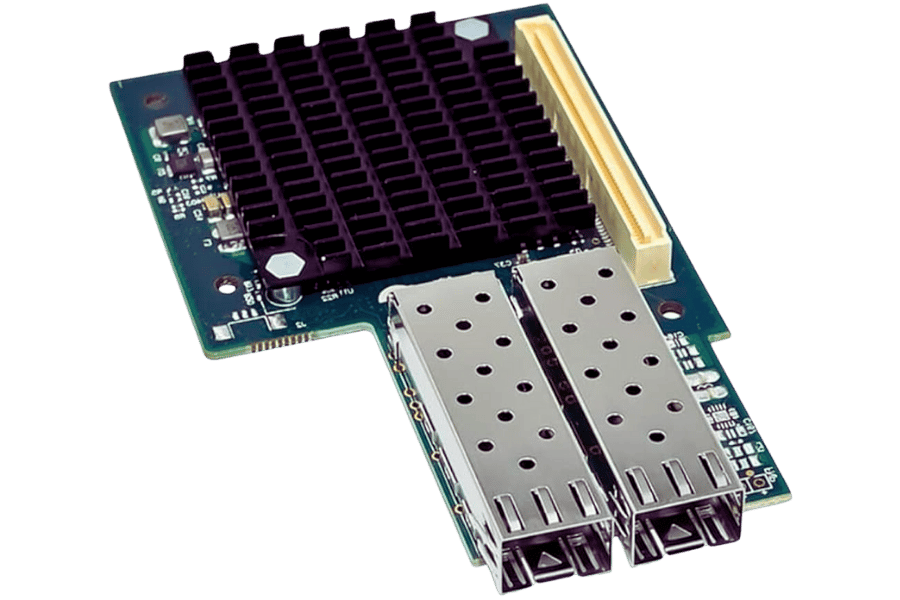

Understanding the ConnectX-6 Technology

The Mellanox ConnectX-6 series is a major development in network adapters and raises 100G Ethernet performance. A key feature is support for multiple protocols, both Ethernet and InfiniBand, which broadens the range of networks the adapters can serve. The adapters provide advanced hardware offloads, both stateless and stateful, that improve efficiency and reduce the load on the CPU. ConnectX-6 devices also support InfiniBand EDR (100Gb/s) and HDR (200Gb/s), reaching line rates of up to 200Gb/s. The technology has built-in support for RDMA over Converged Ethernet (RoCE), which enables efficient, high-performance, low-latency data transport. In addition, practical hardware management functions help optimize system performance and reliability even under heavy data loads. In summary, ConnectX-6 technology meets the demanding network performance, scalability, and flexibility requirements of modern data centers.

The Benefits of Using Dual-Port QSFP56 Adapters

Dual-port QSFP56 adapters offer several advantages for network performance and resilience. First, they expand bandwidth, with data rates of up to 200Gb/s per port, supporting applications with heavy data processing requirements. Second, the dual-port configuration provides redundancy and failover, improving network reliability and uptime. These adapters also support more than one form of connectivity, Ethernet or InfiniBand, which adds flexibility for organizations. The compact QSFP56 form factor saves space in the data center, and short cables (for example, 3 ft) keep cabling tidy, which improves airflow and can lower cooling costs. Altogether, dual-port QSFP56 adapters help organizations build data centers that can absorb future data growth and provide better disaster recovery.
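In practice, the failover described above is usually handled by link aggregation or active-backup bonding in the operating system (for example, Linux bonding in active-backup mode). The sketch below is a hypothetical, simplified model of active-backup port selection. `Port` and `select_active_port` are illustrative names, not part of any Mellanox, NVIDIA, or Linux API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Port:
    """Hypothetical view of one NIC port and its link state."""
    name: str
    link_up: bool


def select_active_port(ports: list[Port], current: str | None = None) -> str | None:
    """Active-backup selection.

    Keep the current port while its link is up (avoids needless switching);
    otherwise fail over to the first port whose link is up.
    Returns None when every link is down.
    """
    by_name = {p.name: p for p in ports}
    if current in by_name and by_name[current].link_up:
        return current
    for port in ports:
        if port.link_up:
            return port.name
    return None


# Port 0 has lost its link, so traffic moves to port 1.
ports = [Port("port0", link_up=False), Port("port1", link_up=True)]
print(select_active_port(ports, current="port0"))
```

Keeping the current port while it is healthy, rather than always preferring port 0, avoids moving traffic back and forth when a flapping link briefly recovers.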

How NVIDIA Mellanox Enhances Performance

NVIDIA Mellanox combines sophisticated enhancements and intelligent design to increase the efficiency of multi-purpose networks. By making full use of hardware offloads (for instance, RDMA and TCP/IP offload), NVIDIA Mellanox adapters reduce CPU load, leaving more computational resources for data processing tasks. High-speed interconnects paired with appropriate data transport protocols also minimize the overhead that would otherwise cap application performance, a benefit that applies even to lower-speed links such as 10Gb SFP connections. In addition, growing support for software-defined networking (SDN) across the company's offerings lets customers automate processes and reduce coordination overhead, so network configuration can be adjusted quickly to match workload demands. These improvements make NVIDIA Mellanox solutions a central player in achieving speed, practicality, and manageable performance in modern data center environments.
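On Linux, the offloads a NIC driver exposes can be listed with `ethtool -k <interface>`, which prints one `feature: on|off` line per feature. The sketch below parses text in that format. The sample is an illustrative excerpt, not output captured from a specific ConnectX card, and `parse_ethtool_features` is a hypothetical helper.

```python
from __future__ import annotations

# Illustrative excerpt in the format printed by `ethtool -k <interface>`;
# not captured from a specific ConnectX card.
SAMPLE = """Features for eth0:
rx-checksumming: on
tx-checksumming: on
\ttx-checksum-ipv4: off [fixed]
tcp-segmentation-offload: on
generic-receive-offload: on
large-receive-offload: off
"""


def parse_ethtool_features(text: str) -> dict[str, bool]:
    """Map each feature name to True (on) or False (off)."""
    features = {}
    for raw in text.splitlines():
        line = raw.strip()  # sub-features are indented with a tab
        if not line or line.startswith("Features for"):
            continue
        name, sep, rest = line.partition(":")
        tokens = rest.split()
        if not sep or not tokens:
            continue
        # The first token is the state; suffixes such as "[fixed]" mark
        # features the driver does not let you change.
        features[name] = tokens[0] == "on"
    return features


feats = parse_ethtool_features(SAMPLE)
print(sorted(name for name, on in feats.items() if on))
```

On a live system, the same text could be obtained with `subprocess.run(["ethtool", "-k", "eth0"], capture_output=True, text=True).stdout`, where `eth0` stands in for the actual interface name.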

How to Choose the Right 100GbE NIC for Your Data Center?

Comparing Different Adapter Cards

Picking the right 100GbE Network Interface Card (NIC) is fundamental to improving a data center’s efficiency. When comparing different adapter cards, examining a few crucial factors, such as integration with existing hardware, supported protocols, and performance characteristics, including throughput, latency, and buffer size, is important.

- Compatibility and Form Factor: Ensure the NIC works with your server architecture and fits the available slots, including PCIe generation, lane width, and bracket height. Such compatibility directly affects integration and overall operational efficiency.

- Performance Metrics: Compare maximum throughput, latency, and packet-processing capability. Cards from vendors such as NVIDIA Mellanox and Intel commonly include hardware offloads that move protocol processing from the CPU to the NIC, freeing CPU cycles for data processing.

- Vendor Support and Warranty: What is the manufacturer’s reputation regarding the technical support provided? Always look for vendors who provide extensive warranty coverage and readily available customer support, as this will greatly help in the long-term operational stability and reliability.

Such careful consideration of the above factors will enable data center managers to choose the best 100GbE NIC with assurance that network systems are sufficiently upgraded to handle further expansions in data traffic.
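One compatibility check implied by these criteria is whether the PCIe slot can feed every NIC port at line rate. The sketch below assumes PCIe 3.0 runs at 8 GT/s per lane and PCIe 4.0 at 16 GT/s, both with 128b/130b encoding. It ignores packet-level protocol overhead, so real usable bandwidth is somewhat lower. The card data comes from the Related Products list at the end of this post, and `check_nic` is a hypothetical helper.

```python
# Assumed per-lane transfer rates (GT/s); PCIe 3.0 and 4.0 both use 128b/130b encoding.
PCIE_GT_PER_LANE = {3: 8.0, 4: 16.0}
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gbps(gen: int, lanes: int) -> float:
    """Approximate per-direction PCIe bandwidth after line encoding.

    Ignores TLP/DLLP protocol overhead, so real throughput is lower.
    """
    return PCIE_GT_PER_LANE[gen] * lanes * ENCODING_EFFICIENCY


def check_nic(model: str, ports: int, port_gbps: float, gen: int, lanes: int):
    """Hypothetical helper: can the slot feed every port at line rate at once?"""
    needed = ports * port_gbps
    available = pcie_bandwidth_gbps(gen, lanes)
    return model, needed, round(available, 1), available >= needed


# (model, ports, Gb/s per port, PCIe generation, lanes) from the product list
CARDS = [
    ("MCX516A-CCAT", 2, 100, 3, 16),
    ("MCX623106AN-CDAT", 2, 100, 4, 16),
    ("MCX515A-CCAT", 1, 100, 3, 16),
]

results = [check_nic(*card) for card in CARDS]
for model, needed, available, ok in results:
    print(f"{model}: needs {needed} Gb/s, slot ~{available} Gb/s, full line rate: {ok}")
```

Under these assumptions, a PCIe 3.0 x16 slot provides about 126 Gb/s, so a dual-port 100GbE card in such a slot cannot run both ports at full rate simultaneously. That is acceptable when the second port is used mainly for redundancy. The PCIe 4.0 x16 card has roughly 252 Gb/s available.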

Factors to Consider for Low Latency and High Throughput

To achieve low latency and high throughput in a typical data center environment, the following factors must be taken into account:

- Network Topology: Network topology affects both latency and throughput. Designing the topology so traffic can follow short paths reduces signal travel time and latency. For instance, a Clos (leaf-spine) architecture provides ample bandwidth with a small, predictable number of hops between any two switches.

- Quality of Network Cables: Cable choice is critical to link quality. Fiber optic cabling is immune to electromagnetic interference and supports much longer reach than copper, while over the short distances within a rack, passive copper DACs typically add the least latency because no electrical-to-optical conversion is needed. Using a suitable multimode fiber grade, such as OM4 or OM5, can improve performance with Mellanox products.

- Switching Technology: High-speed switches that support cut-through switching can reduce latency. Store-and-forward switches wait until a complete frame has been received before forwarding it, whereas cut-through switches begin forwarding as soon as the destination address has been read.

- Congestion Management: Congestion can be mitigated with techniques such as Quality of Service (QoS) and traffic shaping, which prioritize latency-sensitive traffic. Load balancing can also distribute traffic evenly across multiple paths to increase throughput.

- Hardware Offloads: NICs with offload capabilities, such as TCP offload, take over some of the CPU's processing tasks. This frees the CPU for more important application work, which can raise overall throughput and improve latency during busy periods on the network.

- Buffer Size: A NIC with adequately sized buffers can absorb bursts of traffic without packet loss. Smaller buffers keep queuing delay low, while larger buffers are needed to handle higher volumes of bursty traffic. The right tradeoff between the two depends on the network in question.

By carefully examining these elements, companies can increase network performance and achieve the low latency and high throughput that modern data processing workloads demand.
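The switching and buffer-size points come down to simple arithmetic. This is a minimal sketch under stated assumptions: frames are 1500 bytes, a cut-through switch can start forwarding after an assumed 64 bytes, the path crosses three switches, and the round-trip time is a hypothetical 10 µs. It ignores propagation delay and switch pipeline latency. The function names are illustrative.

```python
def serialization_ns(num_bytes: int, rate_gbps: float) -> float:
    """Time to clock num_bytes onto a link; 1 Gb/s carries 1 bit per ns."""
    return num_bytes * 8 / rate_gbps


def forwarding_delay_ns(frame_bytes: int, rate_gbps: float, cut_through: bool,
                        header_bytes: int = 64) -> float:
    """Simplified per-hop wait before a switch can start transmitting.

    Store-and-forward waits for the whole frame; cut-through waits only for
    the first header_bytes (64 is an assumed value, real ASICs differ).
    """
    wait_bytes = header_bytes if cut_through else frame_bytes
    return serialization_ns(wait_bytes, rate_gbps)


def bdp_bytes(rate_gbps: float, rtt_us: float) -> float:
    """Bandwidth-delay product: bytes in flight needed to keep the link busy.

    1 Gb/s sustained for 1 us is 1000 bits.
    """
    return rate_gbps * rtt_us * 1000 / 8


hops = 3  # e.g. leaf -> spine -> leaf in a Clos fabric
sf = hops * forwarding_delay_ns(1500, 100, cut_through=False)
ct = hops * forwarding_delay_ns(1500, 100, cut_through=True)
print(f"store-and-forward: {sf:.2f} ns, cut-through: {ct:.2f} ns")
print(f"BDP at 100 Gb/s, 10 us RTT: {bdp_bytes(100, 10):.0f} bytes")
```

At 100 Gb/s, a 1500-byte frame takes 120 ns to serialize. Over three hops, store-and-forward therefore adds about 360 ns, versus about 15 ns for cut-through in this model. A 10 µs round trip needs roughly 125 KB in flight to keep the link full.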

The Role of Scalability in Modern Data Centers

Scalability is key in modern data centers because it determines how well they respond to changing workloads and user requirements. A scalable data center can add resources, such as processing units, storage, or network bandwidth, both vertically (more capacity per system) and horizontally (more systems). This lets the organization use resources effectively, maximizing performance when demand is high and keeping costs low when demand is light.

Cloud technology gives companies additional ways to scale through pay-as-you-go resource usage. Automation and orchestration also speed up and simplify how resources are deployed and managed, improving operational effectiveness and lowering costs. Well-designed scalable data centers, for example those built on high-bandwidth interconnects such as InfiniBand HDR, position companies to grow and capitalize on new demand.

What Types of Cables Are Compatible with Mellanox 100Gb Adapters?

Understanding QSFP28 to QSFP28 Connections

QSFP28 (Quad Small Form-factor Pluggable 28) connections support data rates of up to 100 Gbps by carrying four lanes of 25 Gbps each, while maintaining good signal integrity and low latency. The cable types used with QSFP28 adapters include passive and active optical cables (AOCs) and copper direct attach cables (DACs).

- Passive Optical Cables (POC): An economical option suited to shorter runs of about 100 meters, depending on the construction and use of the particular cable.

- Active Optical Cables (AOC): AOCs are a significant improvement over traditional copper cables. They can reach lengths of up to 300 meters and suit data centers that need longer runs without degradation in signal quality.

- Direct Attach Copper (DAC) Cables: DACs are simple, inexpensive, and dependable, which makes them widely used for distances of up to 7 meters. They are especially useful for short connections within a rack or between adjacent racks.

When selecting cables for QSFP28-to-QSFP28 connections, organizations must consider length limitations, performance levels, and the design of the whole network.

Choosing Between Active Fiber and Passive Direct Attach Copper

When choosing between Active Optical Cables (AOCs) and passive Direct Attach Copper cables (DACs) for QSFP28, several factors must be considered. AOCs perform better over greater distances because they convert signals to light at each end, allowing reliable data transmission over distances of up to 300 meters. They are the better choice when distance is important and cable routing must be flexible. DACs, on the other hand, are usually used for shorter runs that do not exceed 7 meters; they cost less and, being passive, need no power. They are ideal within high-density racks where cost is a key concern. Ultimately, the choice between AOCs and DACs depends on the distance involved, the available budget, and the role of each link in the network infrastructure. Organizations should analyze their specific situations to find the optimal interconnect.
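The AOC versus DAC tradeoff can be expressed as a small decision function. This is a hypothetical sketch: the reach limits (7 m for DAC, 300 m for AOC) are the figures quoted in this post, and actual limits depend on the specific cable's datasheet and data rate. `choose_cable` is an illustrative name.

```python
from __future__ import annotations

# Reach limits quoted in this post; confirm against the specific cable datasheet.
DAC_MAX_M = 7.0
AOC_MAX_M = 300.0


def choose_cable(distance_m: float, prefer_low_cost: bool = True) -> str | None:
    """Hypothetical helper: pick a QSFP28 interconnect type for a link length.

    Returns "DAC", "AOC", or None if the run exceeds both quoted reaches.
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    if distance_m <= DAC_MAX_M and prefer_low_cost:
        return "DAC"  # cheapest, passive, no power draw
    if distance_m <= AOC_MAX_M:
        return "AOC"  # longer reach, more flexible routing
    return None


for d in (2, 5, 30, 500):
    print(d, "m ->", choose_cable(d))
```

Setting `prefer_low_cost=False` models the case where routing flexibility matters more than cost, so even a short run gets an AOC.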

The Advantages of Using Direct Attach Copper Twinax Cable

Direct Attach Copper (DAC) Twinax cables offer several benefits that make them well suited to data centers and networking environments. First, DAC cables are inexpensive compared with optical cabling, especially over short distances. Second, their low latency and minimal signal loss provide high-quality data transmission that meets the requirements of high-speed applications. Third, DAC cabling is easy to deploy and maintain because it needs no extra power source, making it well suited to connecting servers and switches in high-density racks. Additionally, their rugged construction makes them durable. Overall, incorporating DAC Twinax cables can improve network performance, simplify management, and reduce costs.

Understanding Optical Transceivers for Mellanox 100Gb Ethernet

Differences Between QSFP28 and QSFP56 Modules

QSFP28 and QSFP56 modules work on similar principles and are both used in high-speed networks, but they suit different bandwidth requirements. The main difference is the data rate: up to 100 Gbps for QSFP28, and up to 200 Gbps for QSFP56. The modulation schemes also differ. QSFP28 typically uses NRZ signaling at 25 Gbps per lane, while QSFP56 uses PAM4 (four-level Pulse Amplitude Modulation), which carries two bits per symbol and so doubles the per-lane rate to 50 Gbps without adding lanes or cables. Power characteristics differ as well; QSFP56 modules typically draw more power to support the higher bandwidth. In summary, the choice between QSFP28 and QSFP56 depends on the organization's network requirements and its expectations for future growth and performance.
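The rate difference between the two modules follows from lanes × symbol rate × bits per symbol. The sketch below uses a rounded nominal 25 GBd per lane. Actual signaling rates are slightly higher (about 25.78 GBd for 25G NRZ and 26.56 GBd for 50G PAM4) to make room for encoding and FEC overhead. The function names are illustrative.

```python
def bits_per_symbol(levels: int) -> int:
    """NRZ has 2 signal levels (1 bit/symbol); PAM4 has 4 (2 bits/symbol)."""
    if levels < 2 or levels & (levels - 1):
        raise ValueError("levels must be a power of two >= 2")
    return levels.bit_length() - 1


def module_rate_gbps(lanes: int, gbaud_per_lane: float, levels: int) -> float:
    """Nominal module data rate = lanes x symbol rate x bits per symbol."""
    return lanes * gbaud_per_lane * bits_per_symbol(levels)


qsfp28 = module_rate_gbps(lanes=4, gbaud_per_lane=25, levels=2)  # 4 x 25G NRZ
qsfp56 = module_rate_gbps(lanes=4, gbaud_per_lane=25, levels=4)  # 4 x 50G PAM4
print(qsfp28, qsfp56)
```

Both modules use four lanes at roughly the same symbol rate. The doubling comes entirely from PAM4 encoding two bits per symbol.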

How Infiniband EDR Transceivers Improve Efficiency

InfiniBand Enhanced Data Rate (EDR) transceivers improve network efficiency by offering high bandwidth and low latency, particularly in data centers and high-performance computing facilities. EDR transceivers transmit data at 100 Gbps, speeding up data processing and transfer for resource-intensive applications. Their compact design supports high port density, allowing more connections in crowded rack spaces and enabling better resource management.

EDR InfiniBand networks also support data-flow optimization technologies such as adaptive routing and congestion control, which spread traffic more evenly across the network. This reduces congestion hotspots and keeps performance consistent even under heavy load. Finally, EDR transceivers offer low power consumption per Gbps, which reduces costs and supports the sustainability goals of organizations seeking advanced networking capabilities. These characteristics make InfiniBand EDR transceivers an integral component of efficient network infrastructure.
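To illustrate the idea behind adaptive routing, the sketch below has a switch send each packet to whichever candidate output port currently has the shortest queue. It compares this with a static scheme that always uses the same port. This is a simplified toy model: queues never drain, and ties go to the lowest port number. It is not the InfiniBand adaptive routing algorithm implemented in Mellanox switches, and `pick_output_port` and `route_burst` are hypothetical names.

```python
from __future__ import annotations


def pick_output_port(candidates: list[int], queue_depth: dict[int, int]) -> int:
    """Choose the candidate port with the shortest queue; ties go to the lowest port number."""
    return min(candidates, key=lambda port: (queue_depth[port], port))


def route_burst(num_packets: int, candidates: list[int],
                adaptive: bool = True) -> dict[int, int]:
    """Toy model: queues only grow, so the result shows where packets piled up."""
    queue_depth = {port: 0 for port in candidates}
    for _ in range(num_packets):
        if adaptive:
            port = pick_output_port(candidates, queue_depth)
        else:
            port = candidates[0]  # static route: every packet takes the same path
        queue_depth[port] += 1
    return queue_depth


print(route_burst(8, [1, 2, 3, 4]))
print(route_burst(8, [1, 2, 3, 4], adaptive=False))
```

The burst uses eight packets and four equal-cost ports. The adaptive version ends with two packets queued per port, while the static version queues all eight on port 1. That pile-up is the kind of hotspot adaptive routing aims to avoid.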

Troubleshooting Common Optical Transmission Issues

When troubleshooting common optical transmission problems, it is advisable to work through the checks in a fixed order. The following steps reflect common best practices:

- Check Physical Connections: Start by inspecting all connectors and cables for damage or dirt. Loose or dirty connections are among the most common causes of transmission problems.

- Measure Signal Levels: Use a light source and an optical power meter at various points in the system to measure signal strength. Verify that received power levels fall within the manufacturer's specified range.

- Assess Compatibility: Check that all optical network components, such as transceivers, cables, and connectors, match one another. Mismatched components are a common source of signal degradation.

- Review Network Configuration: Verify that settings are consistent across the devices on each link (for instance, wavelength, framing, and bit rate). Mismatched settings can degrade transmission or prevent the link from coming up, including on lower-speed links such as 10Gb SFP.

- Evaluate Environmental Factors: Assess environmental conditions that affect transmission, such as temperature, humidity, and obstructed airflow or cooling. These factors can explain some performance problems.

By addressing these various areas in a systematic manner, many reasons for poor optical transmission can often be identified and corrected to enhance network performance.
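The signal-level check can be framed as a simple link budget. Expected received power is transmit power minus fiber, connector, and splice losses. A reading well below that estimate points to dirty or damaged connectors. All numbers below are illustrative assumptions, not specifications of any Mellanox transceiver: Tx −2.0 dBm, 3.0 dB/km fiber attenuation, 0.5 dB per connector, and a receiver range of −8.0 to 2.4 dBm. The function names are hypothetical. On Linux, module diagnostic data can often be read with `ethtool -m <interface>`.

```python
def expected_rx_dbm(tx_dbm: float, length_km: float, atten_db_per_km: float,
                    connectors: int, connector_loss_db: float = 0.5,
                    splices: int = 0, splice_loss_db: float = 0.1) -> float:
    """Received power = transmit power minus fiber, connector, and splice losses."""
    loss_db = (length_km * atten_db_per_km
               + connectors * connector_loss_db
               + splices * splice_loss_db)
    return tx_dbm - loss_db


def diagnose(measured_dbm: float, expected_dbm: float,
             rx_min_dbm: float, rx_max_dbm: float, margin_db: float = 1.0) -> str:
    """Hypothetical triage of a power-meter reading."""
    if not rx_min_dbm <= measured_dbm <= rx_max_dbm:
        return "out of receiver range"
    if measured_dbm < expected_dbm - margin_db:
        return "in range but lower than expected: inspect and clean connectors"
    return "ok"


# Illustrative values only; use the transceiver datasheet in practice.
expected = expected_rx_dbm(tx_dbm=-2.0, length_km=0.1, atten_db_per_km=3.0, connectors=2)
print(round(expected, 2))
print(diagnose(-3.5, expected, -8.0, 2.4))
print(diagnose(-5.0, expected, -8.0, 2.4))
print(diagnose(-9.5, expected, -8.0, 2.4))
```

With these assumptions, the 100 m link should deliver about −3.3 dBm. A −5.0 dBm reading is still within the receiver range, but it is more than 1 dB below the estimate, which suggests cleaning connectors before the margin erodes further.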

What Do Customer Reviews Say About Mellanox 100Gb Products?

Analyzing Feedback on Performance and Reliability

Across customer reviews of Mellanox 100Gb products on leading websites, three themes come up repeatedly: performance, reliability, and customer support.

- Performance: Many users report excellent network efficiency and exceptionally low latency, especially in high-density data center installations. Reviews frequently note how well Mellanox products handle their workloads even at peak load.

- Reliability: Reviewers commend Mellanox 100Gb products in particular for their reliability. Frequently cited advantages include a low failure rate and stable long-term operation, which improve the efficiency of the entire network and reduce downtime.

- Customer Support: Customers are also satisfied with customer support, and many comments are positive. They appreciate the promptness and knowledge of the support staff and note that timely help and effective issue resolution improved their experience with the products.

To sum up, the customers indicate that Mellanox 100Gb products are high-performing, reliable, and offer excellent customer support services, which foster customer satisfaction over a long period of time.

User Experiences with Deployment and Support

Reviews on today's leading platforms suggest several themes in user experiences with deploying and maintaining Mellanox® 100Gb products:

- Seamless Integration: Users often report that installation and configuration are straightforward, allowing units to go live quickly within existing network systems. This ease of integration is often attributed to clear documentation and well-written guides from Mellanox.

- Ongoing Technical Support: According to reviewers, support technicians are available during and after deployment, which makes the process easier for clients. Several users mention that issues are usually resolved promptly, which keeps operations running and shortens the organization's transition to Mellanox systems.

- Training and Resources: Users often cite comprehensive training sessions and resources as helpful in getting the most out of Mellanox products, and they value the availability of both online and on-site training that makes their operations more efficient.

In conclusion, user experiences deploying Mellanox 100Gb products have been encouraging, mainly due to ease of installation, responsive technical support, and adequate training resources, all of which contribute to customer satisfaction.

Case Studies Highlighting Real-World Applications

Real-world deployments of Mellanox® 100Gb products show how flexible and effective these networking products are. Reported deployments include the following applications:

- Financial Services: One of the largest US banks deployed Mellanox 100Gb Ethernet switches to process large volumes of data with low latency. The deployment increased data processing speed by 30%, enabling real-time analytics and decision-making, which are critical in the highly competitive financial sector.

- Healthcare: A leading healthcare provider installed Mellanox solutions to improve connectivity between its data centers. By moving to a 100Gb infrastructure, the provider reduced the latency of file transfers, especially of electronic medical records. The transition improved both patient care and operations while maintaining compliance with data-protection regulations.

- Cloud Computing: A leading cloud service provider upgraded its data center infrastructure with Mellanox 100Gb technology. The upgrade provided more bandwidth and elasticity, allowing the company to capture more of the growing demand for cloud services. Service performance and customer satisfaction reportedly rose by fifty percent, making the company more competitive in the cloud market.

These case studies illustrate the practical value of Mellanox 100Gb products in transforming a range of industries.

Frequently Asked Questions (FAQs)

Q: What is Mellanox 100Gb Ethernet connectivity?

A: Mellanox 100Gb Ethernet connectivity refers to the mature high-speed interconnect products Mellanox offers for 100 gigabit-per-second Ethernet, including NICs, QSFP28 transceivers, AOCs, and DAC cables, designed to improve the performance of data center and enterprise networks.

Q: What kinds of cables are supported for Mellanox 100Gb Ethernet?

A: Mellanox 100Gb Ethernet supports active optical cables (AOCs), direct attach copper (DAC) cables, and fiber optic cables with transceivers, chosen according to the required distance and application.

Q: What makes active optical cable (AOC) distinct from DAC?

A: An active optical cable (AOC) uses optical fiber with transceivers built into each end, carrying data over longer distances than copper interconnects. A direct attach copper (DAC) cable uses copper and is mainly used for short distances, offering an energy-efficient interconnect within a rack or between adjacent racks.

Q: What role does QSFP28 play in Mellanox 100G Ethernet?

A: QSFP28 is a compact, hot-swappable transceiver form factor for high-speed communication. It supports 100Gb Ethernet and plugs into Mellanox switches or NICs to connect to a wide range of network equipment.

Q: Are Mellanox 100Gb Ethernet products interoperable with other vendors' equipment?

A: Generally, yes. Mellanox 100Gb Ethernet products such as 100G QSFP28 modules and ConnectX-6 adapters are standards-compliant and designed to work with equipment from leading manufacturers such as Cisco, HPE, Fortinet, and Supermicro.

Q: What is the significance of LINKX in Mellanox 100Gb Ethernet solutions?

A: LINKX is Mellanox's line of high-speed interconnect products, which includes active optical cables, DAC cables, fiber optic cables, and transceivers. These products are designed for data center applications and offer high reliability and performance.

Q: How do I choose the right product for Mellanox 100Gb Ethernet connectivity?

A: When choosing a product, consider the required data rate and distance, the environment (for example, office, data center, or enterprise), and compatibility with existing equipment. Mellanox offers products for diverse networking requirements, from dual-port NICs to active optical cables and QSFP28 DAC cables.

Q: Why would you recommend Mellanox ConnectX-6 adapters?

A: Mellanox ConnectX-6 adapters are optimized for bandwidth and networking performance, offering dual-port 100Gb Ethernet, a PCIe x16 interface (with dual-x16 options on some models), and multi-protocol support (Ethernet, InfiniBand EDR 100Gb/s over QSFP28, and HDR 200Gb/s), which helps with data center optimization and expansion.

Q: What is the delivery time for Mellanox 100Gb Ethernet products?

A: Delivery times for Mellanox 100Gb Ethernet products, such as the MCP1600-E001E30 1m compatible cable and related accessories, range from one to several working days, depending on stock levels and the shipping method.

Q: Is it possible to work with the Mellanox 100Gb Ethernet equipment in an HPC environment?

A: Yes. Mellanox 100Gb Ethernet equipment, including active optical cables, fiber optic solutions, and QSFP28 modules, offers the high bandwidth, low latency, and high reliability that HPC environments require.

Related Products:

-

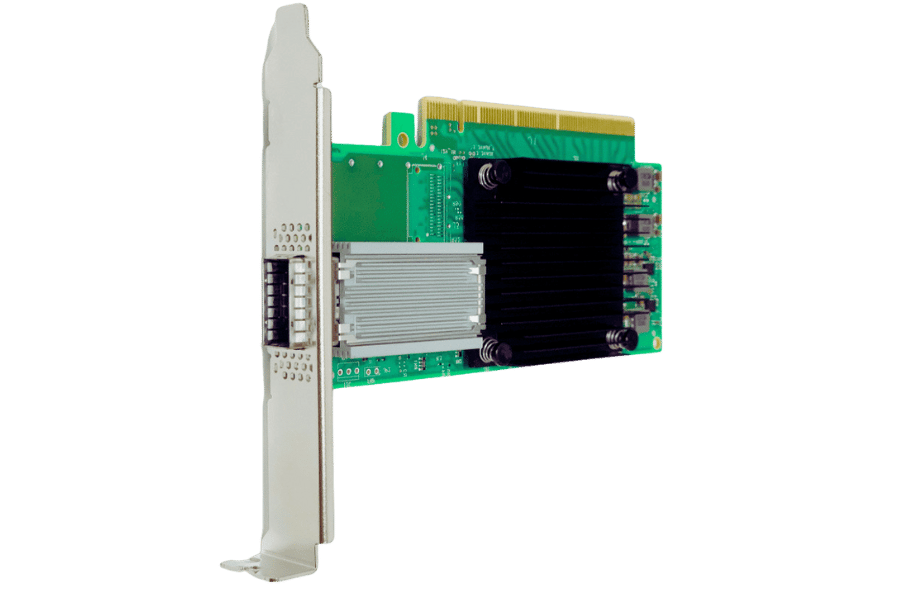

NVIDIA (Mellanox) MCX516A-CCAT SmartNIC ConnectX®-5 EN Network Interface Card, 100GbE Dual-Port QSFP28, PCIe3.0 x16, Tall & Short Bracket

$985.00

-

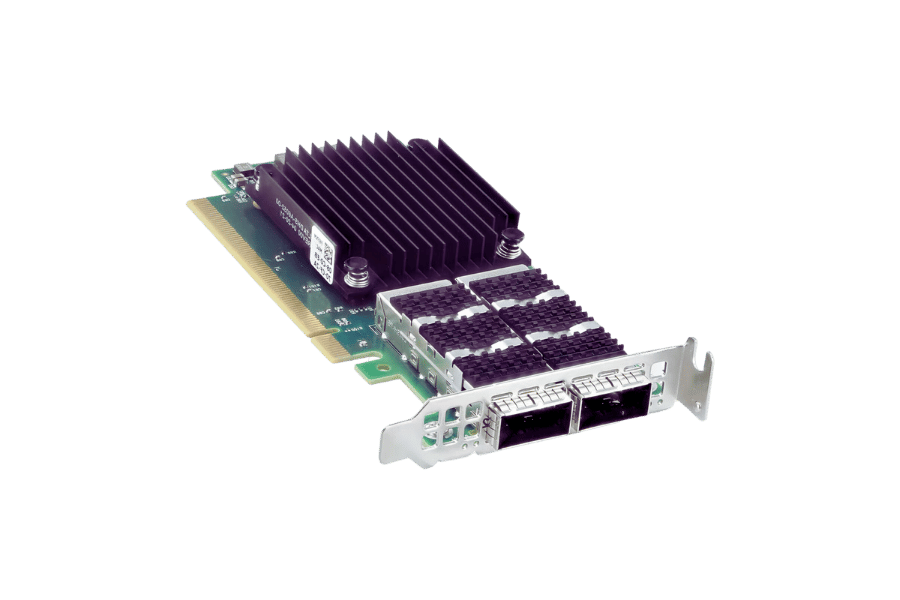

NVIDIA MCX623106AN-CDAT SmartNIC ConnectX®-6 Dx EN Network Interface Card, 100GbE Dual-Port QSFP56, PCIe4.0 x 16, Tall&Short Bracket

$1200.00

-

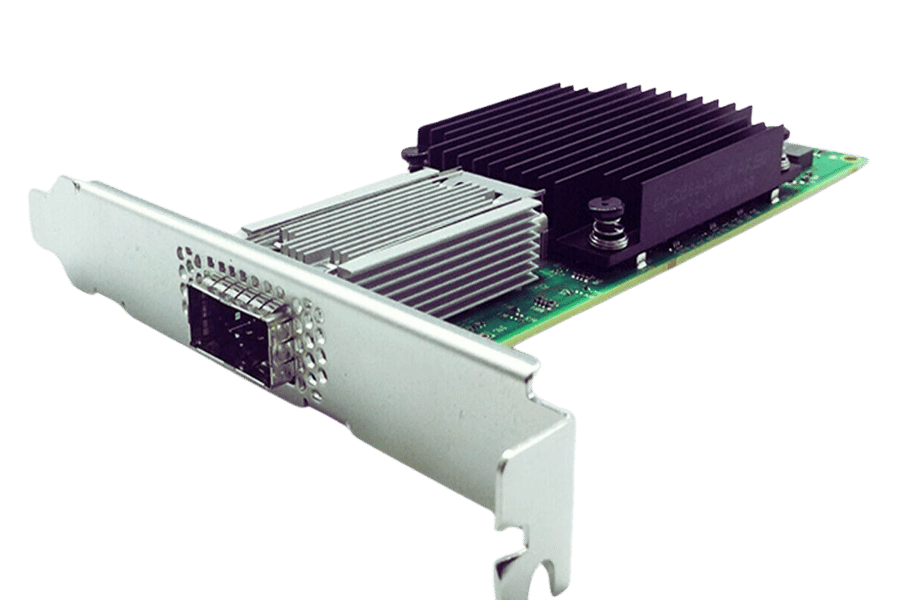

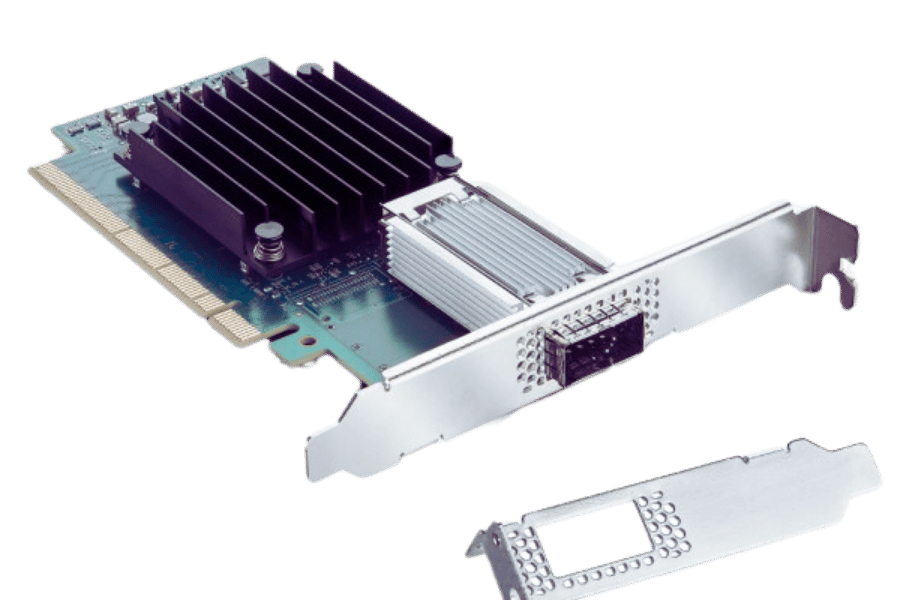

NVIDIA (Mellanox) MCX515A-CCAT SmartNIC ConnectX®-5 EN Network Interface Card, 100GbE Single-Port QSFP28, PCIe3.0 x16, Tall & Short Bracket

$715.00
