A promotional video of Musk’s xAI 100,000 GPU cluster was recently released online. Sponsored by Supermicro, the video features an onsite introduction by a foreign expert at a data center, taking 15 minutes to discuss various aspects of the 100,000 GPU cluster. These aspects include deployment density, cabinet distribution, liquid cooling solution, maintenance methods, network card configuration, switch specifications, and power supply. However, the video did not reveal much about the network design, storage system, or training model progress. Let’s explore the ten key insights!

Table of Contents

ToggleLarge Cluster Scale

In contrast to the more common clusters in our country, typically composed of 1,000 GPUs (equivalent to 128 H100 systems), the 100,000 GPU cluster is 100 times larger, requiring approximately 12,800 H100 systems. The promotional video claims that the deployment was completed in just 122 days, showcasing a significant disparity between domestic and international GPU cluster capabilities.

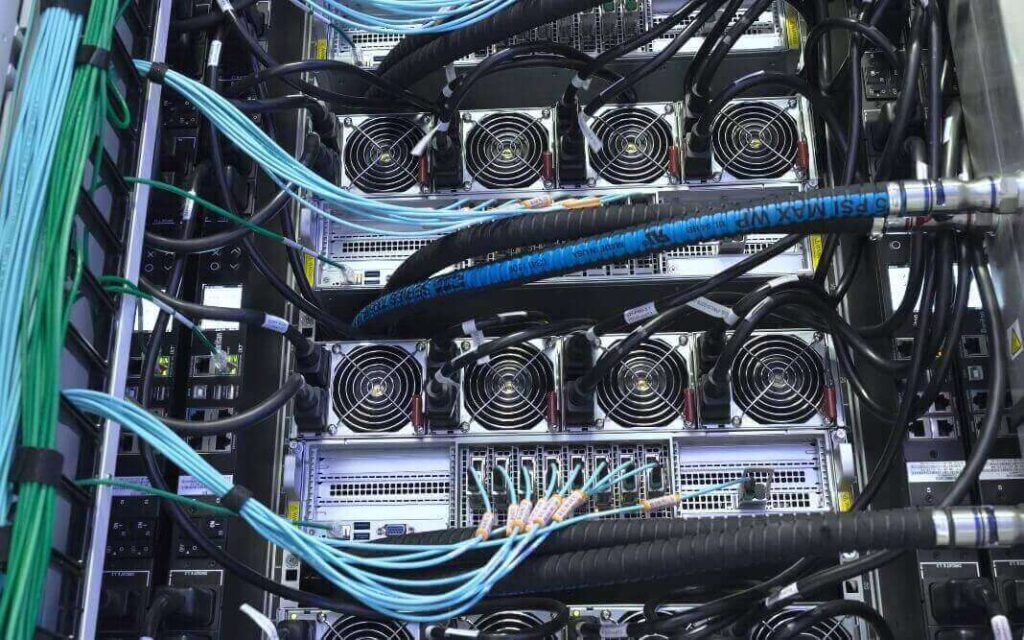

High Computational Density

The video shows that the H100 uses a 4U rack design, with each cabinet deploying 8 systems, equating to 64 GPUs per cabinet. A row of cabinets contains 8 cabinets, making up 512 GPUs per row. The 100,000 GPU cluster comprises roughly 200 rows of cabinets. Domestically, it’s more common to place 1-2 H100 systems per cabinet, each H100 system consuming 10.2 kW. Deploying 8 systems exceeds 80 kW, providing a reference for future high-density cluster deployments.

Large-scale adoption of Cold Plate Liquid Cooling

Although liquid cooling technology has been developed for many years domestically, its large-scale delivery is rare. The video demonstrates that the 100,000 GPU cluster employs the current mainstream cold plate liquid cooling solution, covering GPU and CPU chips (while other components like memory and hard drives still require air cooling). Each cabinet has a CDU (Cooling Distribution Unit) at the bottom, configured in a distributed manner, with redundant pumps to prevent system interruptions due to single failures.

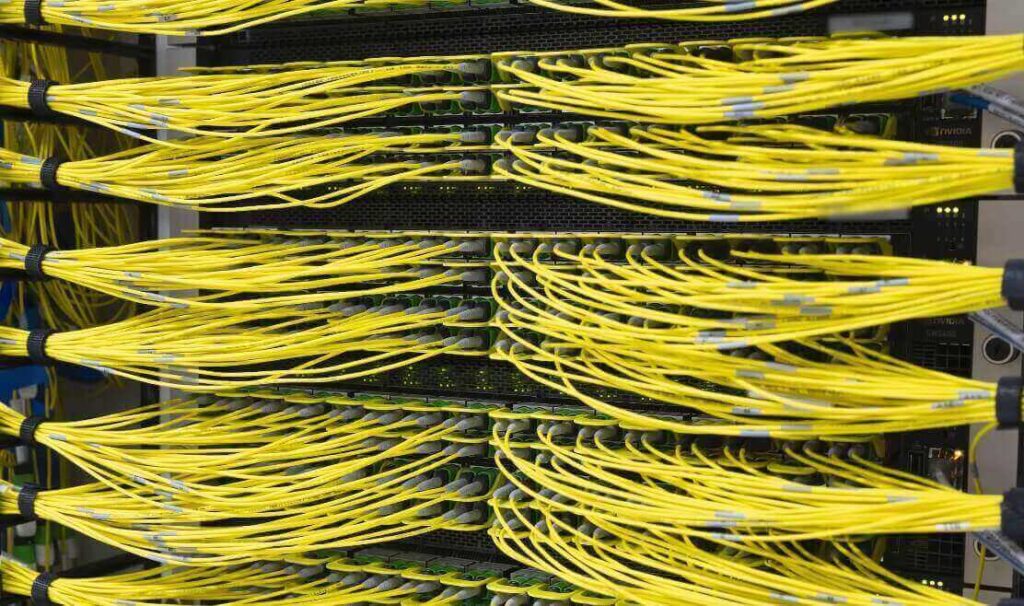

Network Card and Networking Solution – RoCE

While the video does not detail the network topology, it mentions that each H100 device is equipped with 8 Mellanox BFD-3 cards (one for each GPU and corresponding BFD-3 card) and one CX7 400G network card. This differs from the current domestic configurations, and the video does not provide an explanation for this setup. Additionally, the network solution uses RoCE instead of the more prevalent IB networking domestically, likely due to RoCE’s cost-effectiveness and its maturity in handling large-scale clusters. Mellanox remains the switch brand of choice.

Switch Model and Specifications

The video introduces the switch model as the NVIDIA Spectrum-x SN5600 Ethernet switch, which has 64 800G physical interfaces that can be converted into 128 400G interfaces. This configuration significantly reduces the number of switches required, potentially becoming a future trend in network design.

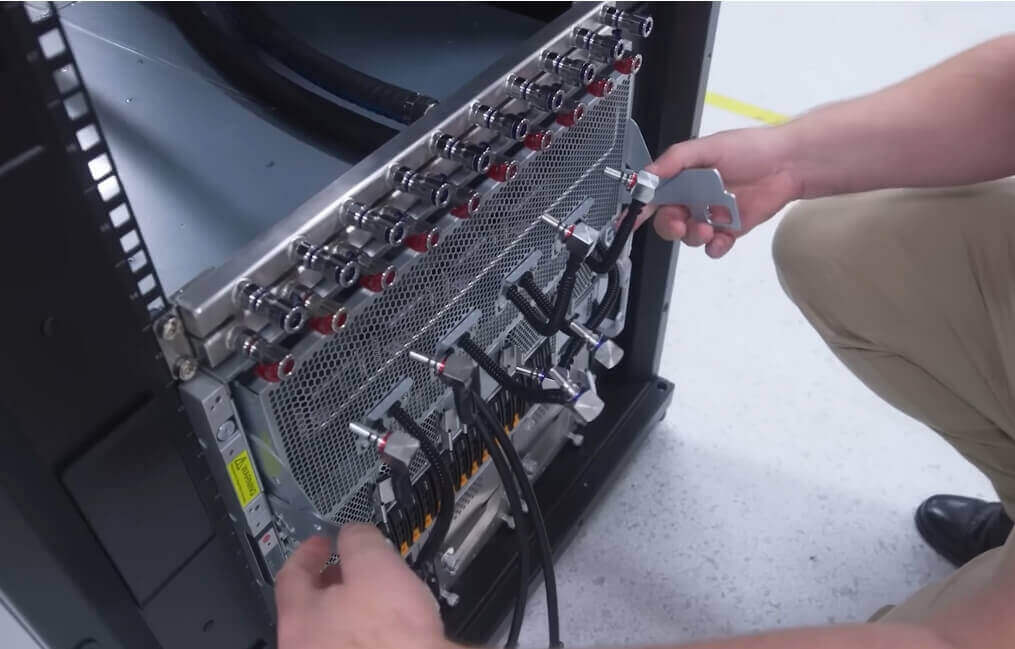

Modular Maintenance for GPU Servers

We all know that the failure rate of H100 GPUs is significantly higher than that of general-purpose servers, making replacement and repair quite challenging. The video showcased Supermicro’s 4U H100 platform, which supports drawer-style maintenance for GPU and CPU modules. As shown in the image, there is a handle that allows for easy removal and maintenance without having to disassemble the entire server, greatly enhancing maintenance efficiency.

Cabinet Color Indicator Lights

As shown in the image, the blue effect provides a strong technological feel while indicating that the equipment is operating normally. If a cabinet experiences an issue, the change in the color of the indicator lights allows maintenance personnel to quickly identify the faulty cabinet. Although not cutting-edge technology, it is quite interesting and practical.

Continued Need for General-Purpose Servers

In the design of intelligent computing center solutions, many often overlook general-purpose servers. Although GPU servers are the core, many auxiliary management tasks still require support from general-purpose servers. The video demonstrated high-density 1U servers providing CPU computing power, coexisting with GPU nodes without conflict. CPU nodes predominantly support management-related business systems.

Importance of Storage Systems

Although the video did not detail the design of the storage system, it briefly showcased this essential module for intelligent computing centers. Storage is critical for supporting data storage in training systems, directly affecting training efficiency. Therefore, intelligent computing centers typically choose high-performance GPFS storage to build distributed file systems.

Power Supply Guarantee System

The video displayed a large battery pack prepared specifically for the 100,000 GPU cluster. The power system connects to the battery pack, which then supplies power to the cluster, effectively mitigating risks associated with unstable power supply. While not much information was disclosed, it underscores the importance of a reliable power supply for intelligent computing center systems.

To Be Continued: Ongoing Expansion of the Cluster

The video concluded by stating that the 100,000 GPU cluster is just a phase, and system engineering is still ongoing.

Related Products:

-

OSFP-800G-FR4 800G OSFP FR4 (200G per line) PAM4 CWDM Duplex LC 2km SMF Optical Transceiver Module

$3500.00

OSFP-800G-FR4 800G OSFP FR4 (200G per line) PAM4 CWDM Duplex LC 2km SMF Optical Transceiver Module

$3500.00

-

OSFP-800G-2FR2L 800G OSFP 2FR2 (200G per line) PAM4 1291/1311nm 2km DOM Duplex LC SMF Optical Transceiver Module

$3000.00

OSFP-800G-2FR2L 800G OSFP 2FR2 (200G per line) PAM4 1291/1311nm 2km DOM Duplex LC SMF Optical Transceiver Module

$3000.00

-

OSFP-800G-2FR2 800G OSFP 2FR2 (200G per line) PAM4 1291/1311nm 2km DOM Dual CS SMF Optical Transceiver Module

$3000.00

OSFP-800G-2FR2 800G OSFP 2FR2 (200G per line) PAM4 1291/1311nm 2km DOM Dual CS SMF Optical Transceiver Module

$3000.00

-

OSFP-800G-DR4 800G OSFP DR4 (200G per line) PAM4 1311nm MPO-12 500m SMF DDM Optical Transceiver Module

$3000.00

OSFP-800G-DR4 800G OSFP DR4 (200G per line) PAM4 1311nm MPO-12 500m SMF DDM Optical Transceiver Module

$3000.00

-

NVIDIA MMS4X00-NM-FLT Compatible 800G Twin-port OSFP 2x400G Flat Top PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$1199.00

NVIDIA MMS4X00-NM-FLT Compatible 800G Twin-port OSFP 2x400G Flat Top PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$1199.00

-

NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

-

NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$900.00

NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$900.00

-

NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

-

NVIDIA MMS1Z00-NS400 Compatible 400G NDR QSFP112 DR4 PAM4 1310nm 500m MPO-12 with FEC Optical Transceiver Module

$850.00

NVIDIA MMS1Z00-NS400 Compatible 400G NDR QSFP112 DR4 PAM4 1310nm 500m MPO-12 with FEC Optical Transceiver Module

$850.00

-

NVIDIA MMS4X00-NS400 Compatible 400G OSFP DR4 Flat Top PAM4 1310nm MTP/MPO-12 500m SMF FEC Optical Transceiver Module

$700.00

NVIDIA MMS4X00-NS400 Compatible 400G OSFP DR4 Flat Top PAM4 1310nm MTP/MPO-12 500m SMF FEC Optical Transceiver Module

$700.00

-

NVIDIA MMA1Z00-NS400 Compatible 400G QSFP112 VR4 PAM4 850nm 50m MTP/MPO-12 OM4 FEC Optical Transceiver Module

$550.00

NVIDIA MMA1Z00-NS400 Compatible 400G QSFP112 VR4 PAM4 850nm 50m MTP/MPO-12 OM4 FEC Optical Transceiver Module

$550.00

-

NVIDIA MMA4Z00-NS400 Compatible 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module

$550.00

NVIDIA MMA4Z00-NS400 Compatible 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module

$550.00

-

NVIDIA MMS4X50-NM Compatible OSFP 2x400G FR4 PAM4 1310nm 2km DOM Dual Duplex LC SMF Optical Transceiver Module

$1200.00

NVIDIA MMS4X50-NM Compatible OSFP 2x400G FR4 PAM4 1310nm 2km DOM Dual Duplex LC SMF Optical Transceiver Module

$1200.00

-

OSFP-XD-1.6T-4FR2 1.6T OSFP-XD 4xFR2 PAM4 1291/1311nm 2km SN SMF Optical Transceiver Module

$15000.00

OSFP-XD-1.6T-4FR2 1.6T OSFP-XD 4xFR2 PAM4 1291/1311nm 2km SN SMF Optical Transceiver Module

$15000.00

-

OSFP-XD-1.6T-2FR4 1.6T OSFP-XD 2xFR4 PAM4 2x CWDM4 2km Dual Duplex LC SMF Optical Transceiver Module

$20000.00

OSFP-XD-1.6T-2FR4 1.6T OSFP-XD 2xFR4 PAM4 2x CWDM4 2km Dual Duplex LC SMF Optical Transceiver Module

$20000.00

-

OSFP-XD-1.6T-DR8 1.6T OSFP-XD DR8 PAM4 1311nm 2km MPO-16 SMF Optical Transceiver Module

$12000.00

OSFP-XD-1.6T-DR8 1.6T OSFP-XD DR8 PAM4 1311nm 2km MPO-16 SMF Optical Transceiver Module

$12000.00