Rapid advances in data centers and high-performance computing (HPC) have sharply increased the demand for faster data transfer and better interconnect solutions. InfiniBand optical cables help fill this gap by providing very high data rates, scalability, and reliability. This article introduces InfiniBand optical cables: their technical characteristics, where they are used, and their advantages over common alternatives. It also looks at how these cables are built, which lets them perform efficiently in data-intensive environments and serve as the backbone of data processing and storage systems. You may be a professional looking to upgrade your infrastructure or a researcher interested in modern communication technology. Either way, InfiniBand optical cables are likely to sit at the heart of high-performance computing architectures.

What Is an Infiniband Cable and How Does It Work?

InfiniBand cables are designed for high-performance computing and data centers, where they carry data quickly between servers, storage, and switches. They provide low-latency, high-bandwidth connections suited to workloads that need fast transfers with minimal delay. InfiniBand uses serial point-to-point links. Each link aggregates several serial lanes that carry data in parallel, which multiplies throughput. In many deployments this gives InfiniBand higher throughput and lower latency than comparable Ethernet setups. InfiniBand cables come in both copper and optical versions. At the same speed, the optical versions reach much longer distances than copper, so devices spread across a data center can exchange large amounts of data efficiently.
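The parallel-lane idea comes down to simple arithmetic: a link's nominal rate is the per-lane rate multiplied by the number of lanes. The minimal sketch below makes that concrete. It assumes the commonly quoted nominal per-lane data rates, which exclude encoding overhead. The names `PER_LANE_GBPS` and `link_rate_gbps` are hypothetical, chosen only for illustration.

```python
# Commonly quoted nominal per-lane data rates in Gb/s (assumption: headline
# figures, not raw signaling rates, which are slightly higher).
PER_LANE_GBPS = {"FDR": 14, "EDR": 25, "HDR": 50, "NDR": 100}

# Link widths (lanes per link) accepted by this sketch; 4x is the most common.
SUPPORTED_WIDTHS = (1, 2, 4, 8, 12)


def link_rate_gbps(generation: str, lanes: int = 4) -> int:
    """Return the nominal link rate: per-lane rate multiplied by lane count."""
    if generation not in PER_LANE_GBPS:
        raise ValueError(f"unknown generation: {generation}")
    if lanes not in SUPPORTED_WIDTHS:
        raise ValueError(f"unsupported link width: {lanes}x")
    return PER_LANE_GBPS[generation] * lanes


if __name__ == "__main__":
    for gen in PER_LANE_GBPS:
        print(f"4x {gen}: {link_rate_gbps(gen)} Gb/s")
```

For a 4x link this reproduces the familiar 56, 100, 200, and 400 Gb/s figures. A 2x HDR link gives 100 Gb/s, which is how HDR100 ports reach that rate.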

Understanding the Infiniband Protocol

The InfiniBand protocol is a high-speed communication standard for links between and within computer systems in high-performance computing. It runs over the low-latency, high-bandwidth channels that InfiniBand cables provide, enabling the rapid data exchange these environments depend on. It uses a switched fabric topology and supports Remote Direct Memory Access (RDMA). RDMA moves data directly between the memory of two machines without involving the remote CPU or either operating system kernel in the data path, which reduces both latency and CPU overhead. The architecture also scales well, so growing data centers can keep operating efficiently and reliably under heavy data loads with minimal downtime.
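To make the scalability claim concrete, the sketch below sizes a non-blocking fat tree (folded Clos), a topology commonly built with InfiniBand switches. It rests on two assumptions. First, every switch has the same port count (radix). Second, each non-top tier splits its ports evenly between host-facing and uplink ports. The function name is hypothetical.

```python
def fat_tree_max_hosts(radix: int, tiers: int) -> int:
    """Maximum hosts in a non-blocking fat tree built from identical switches.

    Uses the folded-Clos result 2 * (radix / 2) ** tiers: one tier is a
    single switch with every port facing hosts, two tiers give radix**2 / 2,
    and three tiers give radix**3 / 4.
    """
    if radix < 2 or radix % 2:
        raise ValueError("radix must be an even number >= 2")
    if tiers < 1:
        raise ValueError("tiers must be >= 1")
    return 2 * (radix // 2) ** tiers


if __name__ == "__main__":
    # Hypothetical 40-port switch, for illustration only.
    for t in (1, 2, 3):
        print(f"{t} tier(s): {fat_tree_max_hosts(40, t)} hosts")
```

With 40-port switches, the host count grows from 40 (one switch) to 800 (two tiers) and 16,000 (three tiers). That growth is why a switched fabric scales far beyond what a single switch can connect.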

Role of Active Optical Cable in Infiniband

Active optical cables (AOCs) play a key role in the InfiniBand ecosystem because they combine long reach, low latency, and high bandwidth. An AOC converts the electrical signal into an optical one at the transmitting end, sends it through optical fiber, and converts it back to an electrical signal at the receiving end. Compared with copper, this yields less signal degradation over distance and immunity to electromagnetic interference. That makes AOCs well suited to longer links within a data center. In high-performance computing environments, AOCs let InfiniBand networks span greater distances while preserving the low latency and high throughput that RDMA traffic relies on.

Comparing Infiniband and Ethernet Cables

InfiniBand and Ethernet cables are both essential for networking, but they are designed for different goals. InfiniBand supports RDMA natively and delivers low latency and high throughput. This makes it best suited to high-performance computing and other environments that demand very high speed and minimal transfer delay. Ethernet cables, on the other hand, are used for everyday networking because Ethernet is lower in cost, widely available, and compatible with a broad range of devices and applications. New Ethernet standards keep raising speeds, but Ethernet usually still has higher latency than InfiniBand. The choice between them therefore depends on the network's requirements, such as speed, distance, and application type.

How to Choose the Right Active Optical Cable for Your Setup?

Evaluating Bandwidth Requirements

To assess bandwidth requirements and select the most appropriate Active Optical Cable (AOC), examine both the current and the expected future data load. Start by estimating the peak throughput the system's workloads generate, then calculate the total bandwidth needed across all attached devices. Review the current network topology and identify points that could become congested at peak load. Also plan for growth, so the AOCs can handle higher data rates as your organization expands or as higher-bandwidth equipment such as 200G QSFP56 links is deployed. Then compare the technical specifications of candidate AOCs: data rate, maximum reach, and supported interfaces. These steps help you choose, install, and configure AOCs that keep the network viable well into the future.
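The planning steps above can be reduced to a small calculation. First, compound today's peak throughput over a planning horizon. Then add headroom. Finally, pick the smallest link speed that covers the result. This is a minimal sketch under stated assumptions. The growth rate, the 20% default headroom, the menu of link speeds, and the function names are all hypothetical inputs for illustration, not vendor guidance.

```python
# Hypothetical menu of link speeds (Gb/s) to choose from.
LINK_SPEEDS_GBPS = (100, 200, 400)


def projected_peak_gbps(current_peak_gbps: float, annual_growth: float,
                        years: int, headroom: float = 0.2) -> float:
    """Compound today's peak over the planning horizon, then add headroom."""
    return current_peak_gbps * (1 + annual_growth) ** years * (1 + headroom)


def pick_link_speed(current_peak_gbps: float, annual_growth: float, years: int,
                    headroom: float = 0.2,
                    speeds: tuple = LINK_SPEEDS_GBPS) -> int:
    """Return the smallest link speed that covers the projected peak."""
    need = projected_peak_gbps(current_peak_gbps, annual_growth, years, headroom)
    for speed in sorted(speeds):
        if speed >= need:
            return speed
    raise ValueError(f"projected {need:.1f} Gb/s exceeds every option")


if __name__ == "__main__":
    print(pick_link_speed(80, 0.30, 3, headroom=0.0))
    print(pick_link_speed(80, 0.30, 3))
```

Take a node that peaks at 80 Gb/s today and grows 30% a year for three years. It needs about 176 Gb/s before headroom, so a 200G link fits. Adding 20% headroom (about 211 Gb/s) pushes the choice to 400G.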

Exploring Mellanox and Other Infiniband Products

A brief review of the leading product lines helps put Mellanox and the wider InfiniBand market in context. Mellanox, now part of NVIDIA, produces a range of high-performance networking products, including ConnectX network adapters and BlueField data processing units (DPUs). These products provide fast data movement and low latency, which benefits data centers and AI workloads. Alternatives to InfiniBand also exist. Intel's Omni-Path Architecture delivers solid performance and was aimed mainly at Intel-based clusters. Cray, a subsidiary of Hewlett Packard Enterprise, offers Slingshot, an Ethernet-based interconnect designed for the low-latency networking requirements of high-performance computing. Each of these product lines targets scalable, high-performance data center deployments.

Connector Types: QSFP28, QSFP56, and More

Connector and module form factors such as QSFP28 and QSFP56 enable fast data exchange over high-bandwidth channels and provide a robust physical interface for deployed networks. QSFP28 supports 100 Gb/s by combining four lanes running at 25 Gb/s each. It is widely used in large data centers that move large volumes of data, in both 100G Ethernet and InfiniBand EDR applications.

QSFP56 is the next step. It keeps the same four-lane layout but doubles the per-lane rate to 50 Gb/s using PAM4 modulation, for a total of 200 Gb/s. This makes it suitable for high-performance and next-generation computing and for linking high-end routers and switches. QSFP56 ports are generally backward compatible with QSFP28 modules, which simplifies field deployment. QSFP56 is increasingly preferred where both high bandwidth and low latency are required.
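The jump from QSFP28 to QSFP56 comes from modulation rather than extra lanes. NRZ carries one bit per symbol, while PAM4 carries two, so a lane at a similar symbol rate moves twice the data. The sketch below computes raw line rates from symbol rates. It assumes the commonly cited lane symbol rates of 25.78125 GBd (NRZ) and 26.5625 GBd (PAM4). The function names are hypothetical.

```python
# Bits carried per symbol for the two modulation schemes.
BITS_PER_SYMBOL = {"NRZ": 1, "PAM4": 2}


def lane_rate_gbps(baud_gbd: float, modulation: str) -> float:
    """Raw line rate of one lane: symbol rate times bits per symbol."""
    return baud_gbd * BITS_PER_SYMBOL[modulation]


def module_rate_gbps(baud_gbd: float, modulation: str, lanes: int = 4) -> float:
    """Raw line rate of a multi-lane module such as QSFP28 or QSFP56."""
    return lanes * lane_rate_gbps(baud_gbd, modulation)


if __name__ == "__main__":
    # QSFP28 (100G / EDR): 4 lanes of NRZ.
    print("QSFP28:", module_rate_gbps(25.78125, "NRZ"), "Gb/s raw")
    # QSFP56 (200G / HDR): 4 lanes of PAM4.
    print("QSFP56:", module_rate_gbps(26.5625, "PAM4"), "Gb/s raw")
```

The raw totals (103.125 and 212.5 Gb/s) include line-coding and error-correction overhead. That is why the usable rates are quoted as 100 and 200 Gb/s.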

The evolution of QSFP and similar connectors reflects the broader progress of networking technology. Such products must meet today's requirements while also anticipating future growth in data rates.

What Makes Mellanox a Leader in Infiniband Products?

History and Development of Infiniband Technologies

Mellanox has been at the forefront of InfiniBand technology thanks to its deep involvement in the design and expansion of high-speed networking. InfiniBand debuted in 2000, the result of an alliance of leading technology companies that set out to build an efficient, scalable data fabric. Since then, Mellanox has driven InfiniBand forward by improving its performance, bandwidth, latency, and reliability. Its leadership rests on sustained investment in technology and research, which lets it design next-generation interconnects for AI, big data, and cloud workloads. These products reduce data center complexity, making Mellanox InfiniBand equipment a favorite among organizations with demanding performance and computational requirements. This commitment to performance and innovation has made Mellanox a top player in the InfiniBand networking market.

Future Prospects: Infiniband HDR and 800G

With HDR and the move toward 800G-class bandwidth, InfiniBand will substantially improve data center performance. HDR delivers up to 200 Gb/s per link, which is crucial for meeting the demands of growing AI workloads and large-scale data processing. 800G links aim to meet the even higher bandwidth and low-latency needs that follow. Digital transformation is advancing: legacy systems are being modernized, and IoT devices and edge computing are proliferating. All of this requires robust infrastructure that can handle data at any scale. By innovating in these areas, Mellanox aims both to meet current requirements and to prepare for the future of computing. Next-generation InfiniBand products are designed for seamless data flow, high bandwidth, and low power consumption. These features should keep InfiniBand central to high-performance computing.

Integration with Data Center Infrastructure

Building data center infrastructure on InfiniBand improves scalability, efficiency, and performance. It connects large numbers of servers and storage devices, enabling fast data exchange with low delay and high transfer rates. InfiniBand's architecture also lets data centers scale quickly and efficiently as data volumes grow. Its low-latency communication speeds the flow of information, which is essential for high-performance computing and enterprise applications. InfiniBand is therefore a strong fit for modern data centers that aim to cut operational costs, improve energy efficiency, and support emerging technologies.
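When servers and storage are attached to an InfiniBand fabric, a common sizing check is the oversubscription ratio on each leaf switch. This is the host-facing bandwidth divided by the uplink bandwidth toward the rest of the fabric. The sketch below computes it. The port splits in the example are hypothetical, and the function name is illustrative only.

```python
from fractions import Fraction


def oversubscription(down_ports: int, down_gbps: int,
                     up_ports: int, up_gbps: int) -> Fraction:
    """Ratio of host-facing to fabric-facing bandwidth on one leaf switch.

    A result of 1 means non-blocking; 3 means hosts can offer three times
    more traffic than the uplinks can carry at the same moment.
    """
    if up_ports <= 0 or up_gbps <= 0:
        raise ValueError("uplink capacity must be positive")
    return Fraction(down_ports * down_gbps, up_ports * up_gbps)


if __name__ == "__main__":
    # Hypothetical 40-port, 200G leaf: even split vs. 30 down / 10 up.
    for down, up in ((20, 20), (30, 10)):
        ratio = oversubscription(down, 200, up, 200)
        print(f"{down} down / {up} up -> {ratio}:1")
```

A 1:1 design keeps full bandwidth between any pair of hosts. A higher ratio trades some peak cross-fabric bandwidth for more host ports per switch.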

Why Is High Bandwidth Crucial for Compute and Data Operations?

The Role of Active Optical Cable in HPC

Active optical cables (AOCs) are important in high-performance computing because data-driven applications need high-bandwidth connections. AOCs support longer link lengths, so they suit large HPC installations where equipment is spread across many racks. Compared with traditional copper cables, AOCs, including FDR active optical cables, sustain high data rates over longer distances and are immune to electromagnetic interference. Both properties matter for HPC traffic. By providing high bandwidth and low latency, AOCs meet the demands of complex simulations, scientific research, and data analysis, while their thin, light cables reduce cabling bulk. They also make it easier for systems to scale as more computational power is required.

Benefits of 200G and 100G Solutions

200G and 100G solutions both boost network performance and efficiency. They provide more bandwidth and faster data transfer, which today's data centers and HPC environments require. 200G options such as 200G QSFP56 offer twice the capacity of 100G, helping organizations keep pace with growing data volumes without sacrificing performance. Both also help future-proof networks, since capacity can be expanded as demand grows. Deployed well, these solutions keep latency low and raise system throughput. This supports demanding uses such as high-frequency trading, large-scale cloud architectures, and real-time analytics with high reliability and speed.
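A quick way to see what doubling capacity means in practice is to estimate how long a bulk transfer takes on each link. This minimal sketch assumes that a fixed fraction of the nominal rate is usable after protocol overhead. The 0.9 default efficiency is a placeholder assumption, not a measured figure, and the function name is hypothetical.

```python
def transfer_seconds(size_bytes: float, link_gbps: float,
                     efficiency: float = 0.9) -> float:
    """Estimated time to move size_bytes over a single link.

    efficiency is an assumed fraction of the nominal rate left after
    protocol overhead; replace it with measured throughput when available.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    return size_bytes * 8 / (link_gbps * 1e9 * efficiency)


if __name__ == "__main__":
    one_tb = 1e12  # 1 TB, decimal
    for rate in (100, 200):
        print(f"{rate}G: {transfer_seconds(one_tb, rate):.1f} s")
```

At any fixed efficiency, moving from 100G to 200G halves the transfer time. At the ideal limit, 1 TB takes 80 seconds on a 100G link and 40 seconds on a 200G link.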

How Do Infiniband Cables and Ethernet Cables Differ in Performance?

Data Rate Capabilities: FDR, EDR, HDR

An important distinction when comparing InfiniBand cables is the data rate each standard supports: FDR, EDR, or HDR. The figures below are for the common 4x link. FDR reaches up to 56 Gb/s, a major step up from earlier standards for data-intensive tasks. EDR raises this to 100 Gb/s, meeting the needs of modern computing environments with high data-transfer demands and strict latency requirements. HDR, the next step, supports up to 200 Gb/s. This is essential for contemporary data centers, where bandwidth is the primary requirement for efficient AI and machine learning applications. Each step from FDR to HDR has brought a substantial increase in the bandwidth InfiniBand can deliver.
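The headline figures are nominal, and the payload rate depends on line coding. FDR and EDR both use 64b/66b encoding, so 64 of every 66 transmitted bits carry data. The sketch below applies that ratio to their per-lane signaling rates (14.0625 and 25.78125 Gb/s). HDR is left out because it uses PAM4 with forward error correction, which this simple ratio does not model. Exact fractions avoid rounding noise. The function name is hypothetical.

```python
from fractions import Fraction

# Per-lane signaling rates in Gb/s for the NRZ generations covered here.
SIGNALING_GBPS = {"FDR": Fraction("14.0625"), "EDR": Fraction("25.78125")}

# FDR and EDR use 64b/66b line coding: 64 payload bits per 66 bits sent.
ENCODING_EFFICIENCY = Fraction(64, 66)


def effective_rate_gbps(generation: str, lanes: int = 4) -> Fraction:
    """Payload rate after line-coding overhead for a multi-lane link."""
    return lanes * SIGNALING_GBPS[generation] * ENCODING_EFFICIENCY


if __name__ == "__main__":
    for gen in SIGNALING_GBPS:
        print(f"4x {gen}: {float(effective_rate_gbps(gen)):.2f} Gb/s")
```

The result shows that FDR's "56 Gb/s" corresponds to about 54.55 Gb/s of payload on a 4x link, while EDR's 100 Gb/s works out exactly.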

Application Suitability: Enterprise vs. Consumer

For enterprise versus consumer applications, the choice between InfiniBand and Ethernet cables depends on each application's needs. Demanding enterprise settings require high-performance, low-latency networking. There, InfiniBand cabling is the most appropriate thanks to its higher throughput and better scalability. It is commonly used in data centers, high-frequency trading, and large cloud infrastructures, all of which need robust and reliable performance. Consumer applications, by contrast, typically use Ethernet cables for local area networking because they are inexpensive, easy to install, and fast enough for homes and small offices. Ethernet's low cost and ubiquity make it the standard choice for ordinary users, offering a sensible balance between performance and cost.

Cost Efficiency: AOC vs. Copper Cable

A cost comparison between Active Optical Cables (AOCs) and copper cables involves several factors. AOCs support much longer link lengths at high data rates. They are also lighter, thinner, and immune to electromagnetic interference, which makes them valuable for enterprises that move large amounts of data across a data center. However, they usually cost more than copper cables. Copper cables are the cheaper option and perform well over short distances, so they suit in-rack connections, smaller networks, or tight budgets. Copper direct attach cables also contain no optical components, so over the short runs they support they typically draw less power and add slightly less latency than AOCs. The choice is therefore a trade-off between capital cost and application requirements: network size, the amount of data to be transferred, and room for future expansion.
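The trade-off above can be framed as a rule: use the cheapest cable type whose maximum reach covers the link. The sketch below encodes that rule. The reach limits and relative cost indices are hypothetical placeholders; real limits depend on data rate and vendor (for example, passive copper reach shrinks as speeds rise). Check datasheets before relying on any threshold.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CableOption:
    name: str
    max_reach_m: float    # hypothetical maximum supported length
    relative_cost: float  # hypothetical cost index (passive DAC = 1.0)


# Illustrative figures only; not taken from any vendor specification.
OPTIONS = (
    CableOption("passive DAC", 3.0, 1.0),
    CableOption("active copper", 5.0, 2.5),
    CableOption("AOC", 100.0, 4.0),
)


def cheapest_cable(distance_m: float, options=OPTIONS) -> CableOption:
    """Return the lowest-cost option whose reach covers distance_m."""
    candidates = [o for o in options if o.max_reach_m >= distance_m]
    if not candidates:
        raise ValueError(f"no option reaches {distance_m} m")
    return min(candidates, key=lambda o: o.relative_cost)


if __name__ == "__main__":
    for d in (2, 4, 30):
        print(f"{d} m -> {cheapest_cable(d).name}")
```

Under these placeholder figures, in-rack links fall to passive copper, adjacent-rack links to active copper, and row-scale links to AOCs.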


Frequently Asked Questions (FAQs)

Q: What is an Infiniband optical connector, and how is it different from other connectors?

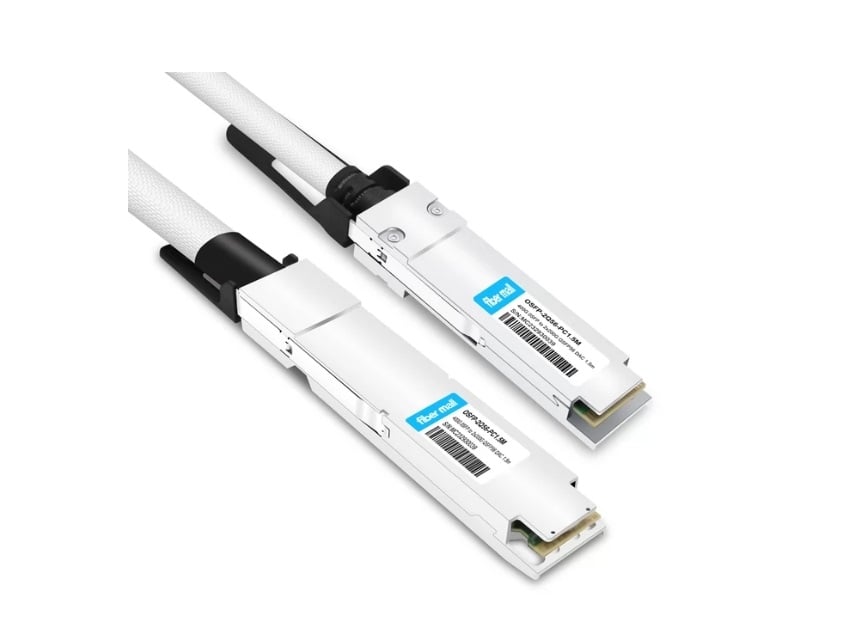

A: An InfiniBand optical connector terminates an optical cable used to carry data at high speed in data centers, high-performance computers, and networks. It differs from a direct attach copper (DAC) connection in that the cable carries light rather than electrical signals. This allows high bandwidth to be maintained over much longer distances, as FDR active optical cables demonstrate. These connectors come in several form factors matched to InfiniBand data rates, such as QSFP28 for EDR, QSFP56 for HDR, and OSFP for NDR.

Q: What are the benefits of using QSFP28 AOC in Infiniband systems?

A: A QSFP28 AOC supports up to 100 Gb/s (InfiniBand EDR), reaches longer distances than a DAC, and maintains better signal quality over those distances. AOCs are lightweight and flexible, so they are easier to install, route, and manage in dense data center racks. They are also available in a range of lengths, so devices at different distances can be connected without interrupting communication.

Q: How does the QSFP56 InfiniBand HDR AOC Gen-3 improve network performance?

A: The QSFP56 InfiniBand HDR active optical cable improves network performance by supporting data rates of 200 Gb/s. It incorporates newer-generation optics and signal processing, which help keep latency low while raising bandwidth. Thanks to this design, QSFP56 HDR AOCs suit HPC and AI/ML workloads that move large volumes of data over low-latency connections.

Q: How do AOCs and optical transceivers differ in InfiniBand networks?

A: Active optical cables (AOCs), such as QSFP56-to-QSFP56 models, and optical transceivers are different devices used for similar applications. An AOC is a ready-made cable with the optics and signal processing built into each end, giving a fast, plug-and-play solution. An optical transceiver, by contrast, is a module that plugs into a network device and connects to a separate fiber optic cable. AOCs are usually cheaper and simpler to deploy. Transceivers offer more flexibility, since link length and fiber type depend on the cable chosen.

Q: What is the advantage of Infiniband optical cables over direct attach copper (DAC) cables?

A: InfiniBand optical cables outperform DAC cables in several ways. They offer longer reach, sustain high bandwidth over that reach, and are immune to electromagnetic interference. They are also lighter and more flexible, which makes them easier to route within data centers. DAC cables cost less and work well when distances are short, but at high speeds they cannot match optical cables in reach. For data-intensive and high-performance computing, InfiniBand optical cables are strongly preferred for their superior performance.

Q: What type of applications would Infiniband EDR and HDR AOC cables be required for? What are their parameters?

A: InfiniBand EDR (Enhanced Data Rate) and HDR (High Data Rate) AOCs are used where reliable, high-performance connections are needed, such as HPC clusters, AI/ML workloads, and large data centers. EDR supports up to 100 Gbps and HDR up to 200 Gbps. Thanks to integrated signal processing and advanced optics, both EDR and HDR AOCs maintain signal integrity over extended cable lengths.

Q: How do AOCs provide high-speed interconnects economically within a data center?

A: AOCs are cost-effective optical interconnect cables suited to high-speed data transfer within a data center. They integrate the transceivers and fiber into a single assembly, which lowers costs compared with buying transceivers and fiber separately. AOCs are also simpler to install and maintain, reducing operational costs. In addition, they consume less power than some transceiver-based solutions. Over long periods of use this saves money, making them suitable for large-scale deployments.

Q: What are the highest available speeds and their protocols under the Infiniband umbrella, and how do optical cables facilitate these features?

A: InfiniBand covers a wide range of speeds, including FDR (56 Gbps), EDR (100 Gbps), HDR (200 Gbps), and the more recent NDR (400 Gbps). Active optical cables (AOCs) make these high speeds practical over distance because they combine optics with advanced signal processing. Optical fiber offers broad bandwidth, low latency, and longer reach than copper. As a result, InfiniBand optical cables can keep up with the rising performance demands of modern data centers and HPC systems.

Related Products:

- NVIDIA MCA4J80-N004 Compatible 4m (13ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable: $650.00
- NVIDIA MCA4J80-N005 Compatible 5m (16ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable: $700.00
- NVIDIA MCP7Y60-H002 Compatible 2m (7ft) 400G OSFP to 2x200G QSFP56 Passive Direct Attach Cable: $125.00
- NVIDIA MCP4Y10-N00A Compatible 0.5m (1.6ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Passive Direct Attach Copper Cable: $105.00
- NVIDIA MCP7Y60-H001 Compatible 1m (3ft) 400G OSFP to 2x200G QSFP56 Passive Direct Attach Cable: $99.00
- NVIDIA MFS1S00-H003V Compatible 3m (10ft) 200G InfiniBand HDR QSFP56 to QSFP56 Active Optical Cable: $350.00
- NVIDIA(Mellanox) MCP1650-H003E26 Compatible 3m (10ft) Infiniband HDR 200G QSFP56 to QSFP56 PAM4 Passive Direct Attach Copper Twinax Cable: $80.00
- NVIDIA MFA7U10-H003 Compatible 3m (10ft) 400G OSFP to 2x200G QSFP56 twin port HDR Breakout Active Optical Cable: $750.00
- NVIDIA MCP7Y60-H01A Compatible 1.5m (5ft) 400G OSFP to 2x200G QSFP56 Passive Direct Attach Cable: $116.00