High-performance computing environments rely on Infiniband cables because they deliver high data rates and high availability, both vital for today's workloads. They are designed for straightforward connection and serve a role in fields ranging from data storage systems to advanced research facilities. This article examines Infiniband technology: its architecture, the benefits of deploying it, and how it outperforms conventional interconnects. Looking at the specifics of Infiniband cables shows how they increase data throughput and strengthen networks, two of the most critical requirements in modern computing.

What is Infiniband and How Does it Compare to Other Cable Types?

Infiniband is a high-speed computer networking standard used mainly in environments that require low latency and high bandwidth, such as supercomputers and enterprise data centers. Compared with Ethernet, Infiniband offers superior performance, with per-port data rates ranging from 10 to 400 Gbps across its generations. It also supports remote direct memory access (RDMA), which moves data between servers without involving the central processing unit (CPU) in each transfer, making it more efficient than its counterparts. While many organizations choose Ethernet for its versatility and lower cost, Infiniband is best suited to more specialized scenarios that demand top-tier performance and stability.

Defining Infiniband: A High-Performance Cable Solution

Because it combines high bandwidth with ultra-low latency, Infiniband, including the common 4x (four-lane) link configuration, is considered a high-performance solution for data-intensive applications. The interconnect standard is tailored to the requirements of complex computing environments, providing the scale and robustness needed for maximum throughput. It uses Remote Direct Memory Access (RDMA), which lets data bypass the CPU and operating system on the data path, reducing latency while increasing transfer rates. These attributes put Infiniband ahead wherever performance metrics and data reliability are crucial, making it more advantageous than conventional cabling in select applications.

Comparing Infiniband to Ethernet Cables

Infiniband is widely regarded as an ideal interconnect architecture for high-performance computing, even though it was first developed as a general interconnect solution for data centers. The standard continues to evolve, with each generation bringing higher bandwidth and easier integration with other networking systems. In my opinion, Infiniband represents an improvement over Ethernet because of its higher data throughput and transmission rates. Bandwidth scaling up to 400 Gbps is a serious advantage when large volumes of data must move from one location to another quickly and reliably. Furthermore, Infiniband supports RDMA (Remote Direct Memory Access), which copies memory regions directly from one computer's memory to another's. This minimizes delays and removes the need for the CPU to handle the transfer. As a result, Infiniband is often the preferred choice for HPC applications that need low latency. On cost, Ethernet is generally favored for broader applications because it is cheaper and more economically feasible. However, Infiniband interconnects perform excellently in purpose-built, performance-centric machines, which makes them an ideal option where high performance per unit cost matters.
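To make the bandwidth argument concrete, the following Python sketch computes the idealized time to move a dataset at nominal EDR, HDR, and NDR port rates. It assumes the full nominal rate is usable and ignores protocol overhead and congestion; the 1 TB dataset size is a hypothetical example.

```python
# Idealized transfer-time arithmetic. Assumes the full nominal link rate is
# usable; real throughput is lower because of protocol overhead and congestion.
LINK_RATES_GBPS = {"EDR": 100, "HDR": 200, "NDR": 400}


def transfer_seconds(data_bytes: float, link_gbps: float) -> float:
    """Seconds needed to move data_bytes over a link of link_gbps (gigabits/s)."""
    bits = data_bytes * 8
    return bits / (link_gbps * 1e9)


if __name__ == "__main__":
    dataset_bytes = 1e12  # hypothetical 1 TB dataset
    for name, rate in LINK_RATES_GBPS.items():
        print(f"{name} ({rate} Gb/s): {transfer_seconds(dataset_bytes, rate):.0f} s")
```

Under these assumptions, 1 TB takes about 80 s at EDR, 40 s at HDR, and 20 s at NDR, so each generation halves the idealized transfer time.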

Exploring Different Cable Types in Infiniband Networks

In Infiniband networks, different cable types suit different performance and distance requirements. The most common are copper cables, which are economical and offer adequate signal quality within data centers, but only over short distances. For longer distances, optical fiber cables become essential: they cover greater distances at high bandwidth without the signal attenuation that limits copper wiring. As a result, optical cables can sustain high data rates over runs where copper cannot, which is particularly useful in high-performance computing, where speed and data integrity are essential. The choice between copper and optical cables is usually determined by the network topology, the intended cost-performance balance, and the deployment distance.

How Do You Select the Right Infiniband Cable for Your Needs?

Factors to Consider When Choosing an Infiniband Cable

When buying an Infiniband cable, several aspects deserve attention to maximize performance and ensure the system's requirements are met. The first is distance and deployment: copper cables are preferable for short runs because of their lower cost and ease of deployment, while optical cables are preferred for longer distances because of their low signal attenuation. The second is the required data rate, since the cable must be rated for the Infiniband generation in use (typically over a 4x link) for the network to run efficiently; optical cables maintain high data rates over longer runs, which makes them better suited to demanding environments. Budget is the third factor, as the price difference between copper and optical cables can be significant and may shape the final choice. Finally, match the connector to the existing equipment; for example, QSFP28 active optical cables fit EDR ports and allow smooth integration into the current infrastructure.
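The distance rule above can be expressed as a small decision function. This is a sketch only: the reach limits (3 m for passive copper, 5 m for active copper, 100 m for AOCs) are illustrative assumptions loosely based on typical HDR and NDR cable lengths, and real limits vary by data rate and vendor, so the cable datasheet should always take precedence. It also assumes cost rises from passive copper to active copper to optical, so it returns the first option whose reach covers the run.

```python
# Illustrative reach limits in meters (assumptions, not specification values),
# ordered from cheapest to most expensive cable family (also an assumption).
REACH_LIMITS_M = [
    ("passive copper (DAC)", 3.0),
    ("active copper (ACC)", 5.0),
    ("active optical cable (AOC)", 100.0),
]


def suggest_cable(distance_m: float) -> str:
    """Return the lowest-cost cable family whose assumed reach covers distance_m."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    for cable, max_reach in REACH_LIMITS_M:
        if distance_m <= max_reach:
            return cable
    return "beyond assumed AOC reach: consider separate transceivers and fiber"


if __name__ == "__main__":
    for d in (1.0, 4.0, 30.0, 250.0):
        print(f"{d:>6.1f} m -> {suggest_cable(d)}")
```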

Understanding Infiniband HDR and NDR Options

Infiniband High Data Rate (HDR) and Next Data Rate (NDR), the more recent generations of the technology, were developed to meet current data throughput requirements. Infiniband HDR arrived around 2019 and delivers 200 Gb/s per 4x link, significantly boosting the capacity for complex, data-intensive tasks and other high-performance applications. It uses PAM4 signaling, which carries 50 Gb/s per lane compared with 25 Gb/s per lane for the previous EDR generation, raising throughput in data centers and supercomputers. The next step, NDR Infiniband, doubles this to 400 Gb/s per link and is used to interconnect even more demanding computational workloads. The choice between HDR and NDR should be based on the performance required, budget, and the degree of integration with existing infrastructure, as both technologies offer significant improvements over past generations.
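The per-link figures follow from multiplying the per-lane rate by the four lanes of a standard 4x link. The sketch below uses nominal per-lane rates (25 Gb/s for EDR, 50 Gb/s for HDR, 100 Gb/s for NDR) and ignores encoding overhead; the function name is hypothetical.

```python
# Nominal per-lane data rates in Gb/s. EDR uses NRZ signaling; HDR and NDR use PAM4.
LANE_GBPS = {"EDR": 25, "HDR": 50, "NDR": 100}


def link_rate_gbps(generation: str, lanes: int = 4) -> int:
    """Nominal link rate = per-lane rate x number of lanes (4x by default)."""
    return LANE_GBPS[generation] * lanes


if __name__ == "__main__":
    for gen in LANE_GBPS:
        print(f"{gen}: {link_rate_gbps(gen)} Gb/s per 4x link")
```

Passing `lanes=2` gives the 100 Gb/s HDR100 rate used when an HDR port is split in two.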

Matching Data Rate and Computer Requirements

The first step in matching data rate to computing requirements is to determine the data transfer workload of the application or target network environment. Start by setting performance goals for the entire system so that the chosen data rate is cost-effective given the number and capacity of the computers involved. Check how much load the installed components can handle, in particular the specifications of the network cards and their interfaces. Also estimate future data needs so the network does not become dependent on outdated systems. After comparing what is available with what is required, users can choose the Infiniband generation that suits them best, HDR or NDR, maximizing efficiency with their existing technical solutions and staying within budget.
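The steps above can be sketched as a simple planning check. Every input is an assumption the reader supplies: current peak traffic per node, an annual growth rate, a planning horizon, and a headroom factor (the share of the nominal link rate treated as usable, set to 70% purely for illustration). The hypothetical `pick_generation` function returns the slower generation that still covers projected demand, which favors the lower-cost option.

```python
# Nominal 4x port rates in Gb/s.
GENERATION_GBPS = {"HDR": 200, "NDR": 400}


def pick_generation(peak_gbps: float, annual_growth: float, years: int,
                    headroom: float = 0.7) -> str:
    """Return the slowest generation whose usable rate covers projected demand.

    peak_gbps: current peak traffic per node (assumed, measured by the user).
    annual_growth: expected yearly growth, e.g. 0.2 for 20% (assumption).
    headroom: fraction of the nominal rate treated as usable (assumption).
    """
    projected = peak_gbps * (1 + annual_growth) ** years
    for name, rate in sorted(GENERATION_GBPS.items(), key=lambda kv: kv[1]):
        if projected <= rate * headroom:
            return name
    return "exceeds one NDR port: consider multiple ports per node"


if __name__ == "__main__":
    print(pick_generation(80, 0.1, 1))   # projected ~88 Gb/s -> HDR
    print(pick_generation(100, 0.2, 2))  # projected ~144 Gb/s -> NDR
```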

What are the Applications of Infiniband in High-Performance Computing?

The Role of Infiniband in Data Centers

As an expert in the field, I endorse using Infiniband in data centers because its high bandwidth and low latency are valuable for data processing and storage. Integrating high-speed networking technology such as Infiniband into a data center creates good conditions for complex operations and real-time analysis with minimal delay. Together with its architectural design, these properties make Infiniband especially efficient in large-scale virtualized and distributed computing environments. Its continued development alongside other high-performance networking technologies eases adoption in existing data center frameworks, improving resource utilization and reducing congestion.

Leveraging Infiniband for Artificial Intelligence Workloads

I appreciate how Infiniband's high throughput and low latency accelerate both artificial intelligence model training and inference. Infiniband enables fast communication among distributed AI components, which matters because AI frameworks process enormous volumes of data with sophisticated, compute-intensive algorithms. It allows computing work to scale out in parallel quickly and shortens model training time, which in turn shortens AI development cycles. In addition, Infiniband's performance gains and relatively straightforward integration with existing AI systems are noteworthy benefits.
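One way to see why bandwidth shortens training is a bandwidth-only estimate of gradient synchronization. The sketch below assumes data-parallel training with a ring all-reduce, in which each node sends roughly 2(N - 1)/N times the gradient buffer per step. It ignores latency, overlap with computation, and in-network offloads, and the 1 GB gradient size and 8-node cluster are hypothetical.

```python
def ring_allreduce_seconds(gradient_bytes: float, num_nodes: int,
                           link_gbps: float) -> float:
    """Bandwidth-only time estimate for one ring all-reduce.

    Each node sends 2 * (N - 1) / N * gradient_bytes in total (reduce-scatter
    followed by all-gather). Latency and compute overlap are ignored.
    """
    if num_nodes < 2:
        return 0.0  # nothing to synchronize on a single node
    bytes_sent = 2 * (num_nodes - 1) / num_nodes * gradient_bytes
    return bytes_sent * 8 / (link_gbps * 1e9)


if __name__ == "__main__":
    grads = 1e9  # hypothetical 1 GB of gradients per step
    for rate in (200, 400):
        t = ring_allreduce_seconds(grads, num_nodes=8, link_gbps=rate)
        print(f"{rate} Gb/s: {t * 1000:.0f} ms per all-reduce")
```

Under these assumptions, moving from 200 to 400 Gb/s roughly halves the communication time per step, from about 70 ms to about 35 ms.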

Infiniband’s Contribution to Advanced HPC Systems

When looking into Infiniband's role in advanced high-performance computing (HPC) systems, I noticed that its very high bandwidth and low latency are key factors in how well HPC applications run. By speeding up data communication, Infiniband increases the throughput of the compute resources it connects. This allows the most complicated simulations and other massive computations in scientific research and engineering to run efficiently. Notably, its support for scalable, parallel data processing architectures makes Infiniband a strong choice for expanding an HPC system's capacity while keeping the performance of the fabric intact. Deploying it reduces performance variability within existing HPC frameworks, resulting in smooth, high-bandwidth communication between compute nodes.

How do Active Optical and Copper Cable Technologies Differ in Infiniband?

Understanding Active Optical Cable (AOC) in Infiniband Networks

Active optical cables (AOCs) are fiber cables with optical transceivers built into the connectors at each end, which use standard small-form-factor pluggable housings such as QSFP56. Because the optics are integrated, an AOC plugs directly into a switch or adapter port like a copper cable but carries the signal over fiber. These attributes make AOCs especially well suited to large data centers and HPC installations, where signal integrity must be maintained over long distances. In short, AOCs deliver high-speed links over long runs, making them well suited to advanced network deployments in Infiniband architecture.

Exploring Direct Attach Copper and Active Copper Solutions

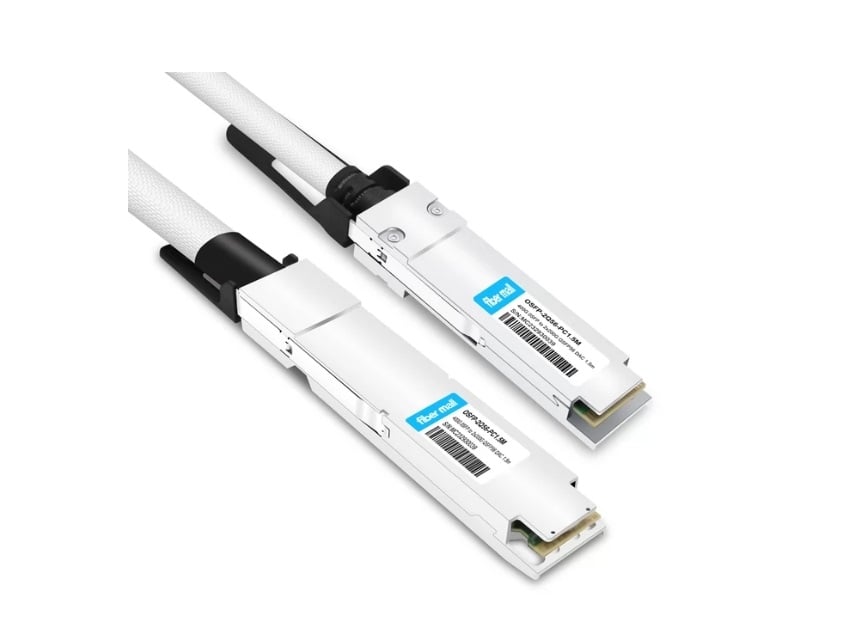

While researching Direct Attach Copper (DAC) and active copper solutions in InfiniBand networks, I found several useful insights, alongside the advantages of QSFP56 InfiniBand HDR active optical cables for longer runs. Thanks to their low cost and energy efficiency, passive DAC cables are well suited to low-latency Infiniband links over short reaches. They transfer data quickly with very little latency and are easy to deploy, since they contain no signal-processing electronics and need no extra power. Active copper cables, by contrast, perform better over medium distances because they include active electronics that amplify and equalize the signal to compensate for loss along the cable. Each has distinct strengths, and the choice between DAC and active copper comes down to which one meets the performance specifications and the topology constraints of the data center or HPC system.

The Advantages of Active Fiber in Data Transmission

From my understanding, active fiber cables have impressive capabilities, and their data transfer performance over distance exceeds that of traditional copper solutions. Another advantage is their immunity to electromagnetic interference, which keeps signals clean and reliable over long distances, a critical requirement in today's data centers and communication networks. Their light weight and flexibility also make installation and cable management easier than with bulkier copper cables. In conclusion, active fiber is the better choice where high data throughput and reliable long-range transmission are required.

How Do NVIDIA and Mellanox Enhance Infiniband Solutions?

Integrating NVIDIA Technologies with Infiniband

Combining NVIDIA technologies with Infiniband brings major improvements to high-performance computing (HPC) and artificial intelligence (AI) workloads. Pairing NVIDIA's latest GPUs with Mellanox's advanced Infiniband solutions enables very low-latency data movement; for example, GPUDirect RDMA lets an Infiniband adapter read and write GPU memory directly without staging data through host memory. Combining NVIDIA's parallel processing with Infiniband's high-speed networking makes it possible to build efficient, scalable data centers that can respond to complex computing requirements. This integration improves efficiency in data-intensive tasks and opens the door to new achievements.

The Impact of Mellanox Innovations on Infiniband Performance

Mellanox has steadily advanced Infiniband technology, strengthening its performance and capabilities in the high-speed networking market. Its developments include adaptive routing, which steers traffic along less congested paths and thereby increases overall throughput. Mellanox also developed advanced offloading techniques that move network processing tasks into hardware so the CPU carries less of the workload. Its quality-of-service (QoS) mechanisms let data centers allocate bandwidth among traffic classes, so critical traffic keeps predictable transmission rates. Taken together, these advances significantly improve the performance of Infiniband networks across the high-end computing workloads they serve.
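The idea behind adaptive routing can be illustrated with a toy model: when several output ports all lead toward the destination, the switch forwards on the least congested one instead of a fixed port. The sketch below is a hypothetical simplification that uses queue depth as the congestion signal; it is not Mellanox's actual algorithm, which runs in switch hardware.

```python
from typing import Dict, List


def static_port(candidate_ports: List[int]) -> int:
    """Static routing: always use the first configured path."""
    return candidate_ports[0]


def adaptive_port(candidate_ports: List[int], queue_depth: Dict[int, int]) -> int:
    """Toy adaptive routing: pick the candidate port with the shortest output queue.

    Ties are broken by the lower port number so the choice is deterministic.
    """
    if not candidate_ports:
        raise ValueError("no route to destination")
    return min(candidate_ports, key=lambda p: (queue_depth.get(p, 0), p))


if __name__ == "__main__":
    ports = [1, 2, 3]  # hypothetical equal-cost ports toward one destination
    queues = {1: 12, 2: 3, 3: 5}  # hypothetical queued packets per port
    print("static:", static_port(ports))              # always port 1
    print("adaptive:", adaptive_port(ports, queues))  # port 2, least loaded
```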

Future Trends in Infiniband with NVIDIA and Mellanox

Since NVIDIA acquired Mellanox in 2020, the two have continued to integrate Infiniband more deeply into their platforms, working to close gaps in HPC and AI infrastructure as AI workloads keep growing. Their combined expertise aims at tighter integration and greater scalability. Another promising trend is applying machine learning to network management in a controlled, dynamic way to improve resilience and give data a more robust path through the network with minimal congestion. These developments, built on generations such as Infiniband HDR and NDR, are likely to underpin future computing.

Frequently Asked Questions (FAQs)

Q: What is Infiniband NDR, and what features set it apart from other Infiniband standards?

A: NDR, the latest generation covered here, increases bandwidth while keeping latency low compared with its predecessors. It transfers data at up to 400 Gbps per port, double HDR's 200 Gbps and four times EDR's 100 Gbps. NDR ports typically use OSFP connectors (switches often use twin-port OSFP cages carrying 2x400G), and some adapters use QSFP112, built for the extreme efficiency and speed demands of high-performance computing networks.

Q: Why utilize active copper cables in Infiniband networks?

A: Active copper cables, for example QSFP28-to-QSFP28 assemblies for EDR, are an effective way to connect equipment in Infiniband networks. They are cheaper than fiber optic cables while still meeting high-bandwidth, low-latency requirements. They suit runs of up to roughly seven meters, depending on data rate, and consume less power than optical transceivers. Active copper cables are available for several Infiniband generations, including EDR, HDR, and NDR, so they can be used across many networks.

Q: What should I know about a splitter cable, and what scenarios does it apply to Infiniband networks?

A: A splitter cable, also called a breakout cable, divides a single high-speed port into several lower-speed ports. In Infiniband networks, a common example is a 400G OSFP port split into two 200G QSFP56 HDR ports, or an HDR QSFP56 port split into two 100G (HDR100) links using QSFP56-to-QSFP56 cabling. Breakouts make it easier to distribute connections around the network and help use the available bandwidth more fully in high-performance computing systems.
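Breakout bandwidth follows from dividing a port's lanes evenly among the legs. The sketch below assumes nominal per-lane rates and an even split; the function name is hypothetical. For example, a 400G OSFP port running eight 50 Gb/s HDR lanes splits into two 4-lane 200G QSFP56 legs, and a 4-lane HDR port splits into two 2-lane 100G legs.

```python
def breakout_legs(lanes: int, lane_gbps: int, legs: int) -> list:
    """Split a multi-lane port evenly into `legs` connectors; return Gb/s per leg."""
    if legs <= 0 or lanes % legs:
        raise ValueError("lanes must divide evenly among the legs")
    lanes_per_leg = lanes // legs
    return [lanes_per_leg * lane_gbps] * legs


if __name__ == "__main__":
    # 400G OSFP running eight 50 Gb/s HDR lanes -> two 200G QSFP56 legs
    print(breakout_legs(lanes=8, lane_gbps=50, legs=2))  # [200, 200]
    # 200G HDR QSFP56 (four 50 Gb/s lanes) -> two HDR100 legs
    print(breakout_legs(lanes=4, lane_gbps=50, legs=2))  # [100, 100]
```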

Q: How do QSFP56 connectors differ from QSFP28 connectors within the Infiniband cables family?

A: QSFP56 and QSFP28 both belong to the Quad Small Form-factor Pluggable (QSFP) family used on Infiniband cables. The main difference is data rate: QSFP56 supports up to 200 Gbps (HDR), while QSFP28 tops out at 100 Gbps (EDR). QSFP56 is therefore used in current HDR networks, whereas QSFP28 is most common in Infiniband EDR deployments. NDR's 400 Gbps ports use OSFP or QSFP112 rather than QSFP56.

Q: Can you explain precisely what Infiniband AOC cables are and how they differ from other types of cables?

A: Infiniband AOC (Active Optical Cable) assemblies use active fiber optic technology for transmission. Each end has an integrated optical transceiver, so no separate transceivers are needed. Compared with copper cables, they offer much longer reach and are thinner and lighter, although they draw more power than passive copper. For instance, Infiniband HDR active optical cables can carry 200 Gbps over lengths of up to 100 meters.

Q: What are the types of connectors associated with Infiniband Cables?

A: The most common connectors on Infiniband cables are QSFP (Quad Small Form-factor Pluggable) variants: QSFP28 for EDR (100 Gbps) and QSFP56 for HDR (200 Gbps). NDR (400 Gbps) uses OSFP, and some NDR adapters use QSFP112. Breakout (splitter) cables often combine form factors, for example an OSFP end splitting into two QSFP56 ends. Each Infiniband standard specifies connectors matched to its data rate.
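A simple lookup table can capture generation-to-connector pairings and flag obvious mismatches when planning a purchase. The table below records common pairings only (QSFP28 for EDR, QSFP56 for HDR, OSFP or QSFP112 for NDR) and is not an exhaustive compatibility matrix; the helper names are hypothetical, and vendor compatibility lists remain the authority.

```python
# Common connector pairings per Infiniband generation (not exhaustive).
CONNECTORS = {
    "EDR": {"rate_gbps": 100, "forms": {"QSFP28"}},
    "HDR": {"rate_gbps": 200, "forms": {"QSFP56"}},
    "NDR": {"rate_gbps": 400, "forms": {"OSFP", "QSFP112"}},
}


def connector_matches(generation: str, form_factor: str) -> bool:
    """True if form_factor is a common connector for the given generation."""
    return form_factor in CONNECTORS[generation]["forms"]


def port_rate(generation: str) -> int:
    """Nominal per-port rate in Gb/s for the generation."""
    return CONNECTORS[generation]["rate_gbps"]


if __name__ == "__main__":
    print(connector_matches("HDR", "QSFP56"))  # True
    print(connector_matches("NDR", "QSFP56"))  # False
```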

Q: What is the role of Infiniband Cables in the HPC network?

A: Because they transfer data at very high rates with very low latency, Infiniband cables are essential components of high-performance computing networks. They enable fast data transfer between servers, storage systems, and other network hardware, which is critical for scientific simulations, big data analytics, and artificial intelligence, among many other applications. Whether copper or fiber optic, Infiniband cables provide the bandwidth needed in computing environments with intensive data flows, especially supercomputers.

Related Products:

-

NVIDIA MCA4J80-N004 Compatible 4m (13ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable

$650.00

-

NVIDIA MCA4J80-N005 Compatible 5m (16ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable

$700.00

-

NVIDIA MCP7Y60-H002 Compatible 2m (7ft) 400G OSFP to 2x200G QSFP56 Passive Direct Attach Cable

$125.00

-

NVIDIA MCP4Y10-N00A Compatible 0.5m (1.6ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Passive Direct Attach Copper Cable

$105.00

-

NVIDIA MCP7Y60-H001 Compatible 1m (3ft) 400G OSFP to 2x200G QSFP56 Passive Direct Attach Cable

$99.00

-

NVIDIA MFS1S00-H003V Compatible 3m (10ft) 200G InfiniBand HDR QSFP56 to QSFP56 Active Optical Cable

$350.00

-

NVIDIA(Mellanox) MCP1650-H003E26 Compatible 3m (10ft) Infiniband HDR 200G QSFP56 to QSFP56 PAM4 Passive Direct Attach Copper Twinax Cable

$80.00

-

NVIDIA MFA7U10-H003 Compatible 3m (10ft) 400G OSFP to 2x200G QSFP56 twin port HDR Breakout Active Optical Cable

$750.00

-

NVIDIA MCP7Y60-H01A Compatible 1.5m (5ft) 400G OSFP to 2x200G QSFP56 Passive Direct Attach Cable

$116.00
