In recent decades, technologies for efficient data transfer have matured, and InfiniBand stands out as an effective interconnect. Its evolution has made high-bandwidth, low-latency performance widely available. InfiniBand interconnects processors and I/O devices in settings where high-speed data communication is essential. This blog focuses on copper cables and direct-attach applications of InfiniBand, and on how to get the most out of these components in today's demanding computing infrastructures. We explain how InfiniBand works over copper twinax cabling, its benefits, and the economic and practical factors to weigh before implementing it. Overall, this article aims to give a complete picture of InfiniBand cabling, with reference to relevant industry standards and best practices, so that readers can apply the technology in their own work and achieve better performance.

What is InfiniBand and How Does It Work?

InfiniBand stands out for delivering high data transfer rates with low latency and high bandwidth. It is commonly used in high-performance computing systems and enterprise architectures with demanding workloads. To achieve this, InfiniBand builds a switched fabric: adapters in each node connect to switches through copper and optical cables, and routers can join separate subnets. Data travels as packets over multiple lanes, and Remote Direct Memory Access (RDMA) cuts communication time by moving data directly between the memories of different systems instead of routing it through each CPU. This frees CPU cycles for computation and preserves overall system performance.

Understanding the Basics of Infiniband Technology

To understand InfiniBand in full, it helps to look at its essential elements and design concepts. InfiniBand uses a layered architecture, including physical, link, network, and transport layers, which keeps data moving efficiently through the infrastructure. The physical layer consists of high-capacity copper or fiber-optic cables that carry the signal. The link layer handles flow control and error detection within a subnet. The network layer routes packets between subnets to their final destinations, and the transport layer provides reliable delivery between endpoints. A key InfiniBand feature is RDMA, which transfers data directly from the memory of one node to the memory of another without passing through the CPU, lowering latency. These properties make InfiniBand a well-suited interconnect for data centers and large-scale computing clusters that require fast data retrieval and transfer.
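To make the layering concrete, here is a small Python sketch that estimates how much of each packet is payload once the per-layer headers are added. It assumes the commonly documented header sizes (an 8-byte Local Route Header, an optional 40-byte Global Route Header for traffic that crosses subnets, a 12-byte Base Transport Header, a 4-byte invariant CRC, and a 2-byte variant CRC) and ignores extended transport headers, line encoding, and FEC, so treat the numbers as an approximation rather than a measured efficiency.

```python
# Approximate wire efficiency of one InfiniBand packet, counting only the
# headers and CRCs listed below. Extended transport headers, line encoding
# (8b/10b, 64b/66b) and FEC are deliberately ignored.
LRH_BYTES = 8    # Local Route Header (link layer)
GRH_BYTES = 40   # Global Route Header (network layer, only between subnets)
BTH_BYTES = 12   # Base Transport Header (transport layer)
ICRC_BYTES = 4   # Invariant CRC, checked end to end
VCRC_BYTES = 2   # Variant CRC, recomputed on every hop


def packet_efficiency(payload_bytes: int, crosses_subnet: bool = False) -> float:
    """Return payload / (payload + header and CRC bytes) for a single packet."""
    overhead = LRH_BYTES + BTH_BYTES + ICRC_BYTES + VCRC_BYTES
    if crosses_subnet:
        overhead += GRH_BYTES  # the GRH is added only for routed traffic
    return payload_bytes / (payload_bytes + overhead)


if __name__ == "__main__":
    for mtu in (256, 1024, 4096):
        local = packet_efficiency(mtu)
        routed = packet_efficiency(mtu, crosses_subnet=True)
        print(f"MTU {mtu:5d}: local {local:.4f}, routed {routed:.4f}")
```

The takeaway is that larger MTUs amortize the fixed per-packet overhead, which is one reason bulk RDMA transfers get close to the nominal link rate.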

How Does Infiniband Compare to Ethernet?

InfiniBand and Ethernet are both popular data transmission technologies, but they serve different requirements and standards. InfiniBand is known for high throughput and low latency, which makes it suitable for high-performance computing (HPC) and data centers focused on high-speed data processing. Current generations run at 100 Gb/s per port and multiples of that, and native RDMA lets InfiniBand transfer data without burdening the CPU.

Ethernet, by contrast, is more widely available and cheaper, so it dominates general-purpose networking. Ethernet speeds have risen over the years, with widely deployed links reaching 400 Gb/s, but Ethernet generally still trails InfiniBand in latency. RDMA over Converged Ethernet (RoCE) aims to narrow this gap, yet InfiniBand remains preferred where every microsecond matters. In practice, performance requirements and cost determine the choice between the two.

Exploring the Role of Infiniband Cable in High-speed Data Transfer

InfiniBand cables provide the high-speed physical links between computing nodes in the network infrastructure. They are built to carry the high bandwidth and low communication latency for which InfiniBand is known, transporting packets between nodes and switches in both directions. Because InfiniBand supports RDMA (Remote Direct Memory Access), these links avoid much of the overhead of conventional networking approaches: applications can access remote memory directly without going through the CPU, which makes data retrieval across a cluster considerably more efficient. In HPC systems, where performance is paramount, choosing the right cables, such as 30AWG passive direct attach copper twinax for short runs, helps realize the full benefits of InfiniBand in data centers and HPC clusters.

Why Choose Direct Attach Copper for Your Network?

Benefits of Using Direct Attach Copper Cables

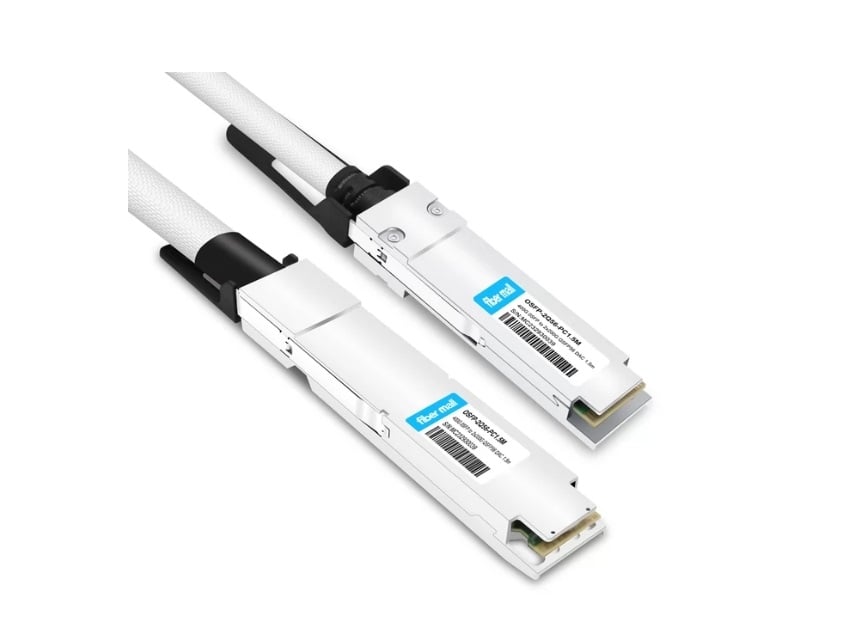

Direct Attach Copper (DAC) cables are increasingly popular in data centers because of their low cost and strong performance. First, passive DACs consume less power and add less latency than optical alternatives, since they need no electrical-to-optical conversion, which makes them well suited to in-rack connections where speed and power consumption are critical. Second, DAC cables are robust: their sturdy construction tolerates frequent plugging and unplugging, which extends their service life. Third, they are cost-effective to deploy because they do not require separate transceivers, which also simplifies installation, especially with passive direct attach copper twinax cables. These characteristics make DAC cables a practical choice for densely packed networks and HPC environments.

Comparing Direct Attach Copper and Active Optical Cables

Comparing Direct Attach Copper (DAC) cables with Active Optical Cables (AOC) reveals a few key differences that determine which one suits a given networking requirement. DAC cables, including thin 30AWG versions, serve short-range connections within a rack or between adjacent racks in a data center; they are reliable, inexpensive, and consume little power. Their sturdy construction withstands frequent plugging and unplugging, and because the signal stays electrical end to end, latency is very low and connectivity is highly reliable.
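Wire gauge matters because thinner conductors carry the signal with more loss, which shortens the usable reach of a passive cable. The standard AWG definition, d = 0.127 mm × 92^((36 - n)/39), lets us compare 30AWG with a thicker 26AWG conductor. This sketch only compares geometry: at multi-gigabit rates, skin effect and dielectric loss dominate, so DC cross-section is a rough proxy at best, and the actual reach of any cable must come from its datasheet.

```python
import math


def awg_diameter_mm(gauge: int) -> float:
    """Nominal solid-conductor diameter for an AWG size (standard definition)."""
    return 0.127 * 92 ** ((36 - gauge) / 39)


def area_mm2(gauge: int) -> float:
    """Conductor cross-sectional area, derived from the nominal diameter."""
    return math.pi * (awg_diameter_mm(gauge) / 2) ** 2


if __name__ == "__main__":
    for g in (26, 28, 30):
        print(f"{g} AWG: {awg_diameter_mm(g):.4f} mm diameter")
    # DC resistance scales inversely with area, so this ratio is also the
    # approximate DC resistance ratio of 30 AWG to 26 AWG.
    ratio = area_mm2(26) / area_mm2(30)
    print(f"26 AWG has {ratio:.2f}x the cross-section of 30 AWG")
```

The roughly two-and-a-half-fold difference in cross-section is why thin 30AWG cables are lighter and easier to route, while thicker gauges are typically used to reach longer lengths.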

On the other hand, while DAC cabling provides good bandwidth, AOC cabling can span much greater distances, so AOCs are typically used alongside DACs wherever links must reach beyond the copper limit. AOCs are also thinner and lighter than copper cables, which makes them easier to route. Their main drawback is cost: AOCs are more expensive than DACs because each end integrates optical transceiver components. Each option has trade-offs in distance, installation design, complexity, power consumption, and budget.

Choosing the Right Direct Attach Copper Cable for Your Needs

The right Direct Attach Copper (DAC) cable depends on the specifics of your network. First, determine the data rate the application requires; DAC cables are available for data rates from 1 Gb/s to 400 Gb/s. Next, evaluate the distance between the connection points. DAC cables are optimized for short-range connections (usually less than 10 m, and shorter at higher data rates), so they are preferred for intra-rack applications. Also confirm compatibility with the hardware already in use. For cost-sensitive deployments that also aim for low power draw, DAC cables are a better fit than optical cables. Finally, consider your environment and how often cables are plugged and unplugged; DAC cables tolerate a fair amount of wear, which keeps them reliable in frequently changing network setups.
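The distance step of this selection can be sketched as a simple function that prefers the cheapest, lowest-power option whose reach covers the run. The `ReachLimits` structure, the cost ordering (passive DAC, then active copper, then AOC, then transceivers with fiber), and the reach values in the usage example are all assumptions for illustration; real limits differ by data rate, wire gauge, and vendor, so take them from the datasheets of the cables you are considering.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReachLimits:
    """Hypothetical maximum supported lengths in metres for one data rate.

    Fill these in from vendor datasheets; no defaults are assumed.
    """
    passive_dac_m: float
    active_copper_m: float
    aoc_m: float


def choose_cable_type(run_m: float, limits: ReachLimits) -> str:
    """Return the first (assumed cheapest) cable type whose reach covers the run."""
    if run_m <= 0:
        raise ValueError("run length must be positive")
    if run_m <= limits.passive_dac_m:
        return "passive DAC"
    if run_m <= limits.active_copper_m:
        return "active copper cable"
    if run_m <= limits.aoc_m:
        return "AOC"
    return "transceivers + fiber"


if __name__ == "__main__":
    # Placeholder limits for illustration only, not vendor specifications.
    limits = ReachLimits(passive_dac_m=3, active_copper_m=5, aoc_m=100)
    for run in (1.5, 4, 30, 150):
        print(f"{run} m -> {choose_cable_type(run, limits)}")
```

Keeping the limits as data rather than hard-coding them makes it easy to load one `ReachLimits` per data rate (EDR, HDR, NDR) from a parts catalog.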

How do you select the right copper cable for InfiniBand EDR?

Key Features to Look for in a Copper Cable Assembly

Several key characteristics should be examined in a copper cable assembly built for InfiniBand EDR (Enhanced Data Rate). First, confirm that the assembly is rated for EDR's 100 Gb/s bandwidth so it can carry high-volume traffic with low latency. Also look at conductor materials and construction, such as shielding that improves EMI protection. Compatibility with the relevant InfiniBand connector and protocol standards is essential so the cables can be reused as existing systems are upgraded. Other important features are durability, reliability, and flexibility: a well-designed jacket should withstand bending and handling in active environments. Finally, consider thermal performance and energy efficiency, which contribute to the reliability and long-term sustainability of the network.

Understanding Data Rate and Bandwidth Capabilities

When evaluating a copper cable for InfiniBand EDR, the main factors behind data rate and bandwidth are signal frequency, modulation, and channel capacity. Higher signalling frequencies allow more data to be transmitted; an EDR QSFP28 link, for example, runs four lanes at roughly 25 Gb/s each. The modulation scheme determines how many bits each symbol carries and therefore how efficiently each hertz of bandwidth is used. Channel capacity is the maximum bit rate a channel can support; the Shannon-Hartley theorem gives it as a function of the channel's bandwidth and signal-to-noise ratio. Copper cables perform optimally only when all these factors are balanced to make full use of the available bandwidth.
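The two ideas in this paragraph can be sketched in a few lines of Python. The Shannon-Hartley theorem gives the capacity limit C = B · log2(1 + S/N), and the symbol rate a lane needs is its bit rate divided by the bits carried per symbol (1 for NRZ, 2 for PAM4). The channel bandwidths and SNR values in the usage example are illustrative inputs, not measurements of any real cable; the lane rates used are the commonly cited EDR (25.78125 Gb/s NRZ) and HDR (53.125 Gb/s PAM4) figures.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Shannon-Hartley limit C = B * log2(1 + S/N), with S/N supplied in dB."""
    snr_linear = 10 ** (snr_db / 10)
    return bandwidth_hz * math.log2(1 + snr_linear)


def symbol_rate_gbaud(bit_rate_gbps: float, bits_per_symbol: int) -> float:
    """Symbols per second needed for a bit rate: NRZ carries 1 bit, PAM4 carries 2."""
    return bit_rate_gbps / bits_per_symbol


if __name__ == "__main__":
    # Illustrative channel values only.
    for snr_db in (10, 20, 30):
        c = shannon_capacity_bps(10e9, snr_db)
        print(f"10 GHz channel at {snr_db} dB SNR: at most {c / 1e9:.1f} Gb/s")
    print("EDR lane (NRZ) GBd:", symbol_rate_gbaud(25.78125, 1))
    print("HDR lane (PAM4) GBd:", symbol_rate_gbaud(53.125, 2))
```

The comparison shows why PAM4 was adopted for HDR: it roughly doubles the bit rate per lane while keeping the symbol rate, and thus the required channel bandwidth, close to that of EDR, at the cost of needing a better signal-to-noise ratio.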

Ensuring Compatibility with Infiniband EDR Systems

Both hardware and software need attention to ensure interoperability with InfiniBand EDR systems. Use adapters, switches, and cables that explicitly support InfiniBand EDR. Converged network adapters can further extend the network over existing data center infrastructure. Choosing components that conform to the specifications of the InfiniBand Trade Association (IBTA) helps ensure they work together. Regularly updating drivers and firmware is also critical for maintaining performance and security. Attention to these interoperability requirements prevents significant disruptions and keeps data moving efficiently between systems.
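One way to reason about compatibility is that a link can only run at a speed every component supports. In practice, speed negotiation is carried out by the port hardware and firmware during link training; the sketch below merely models the idea by intersecting the speed sets of the two ports and, as a simplification, the speeds a cable is rated for. The function name and the example speed sets are hypothetical.

```python
from __future__ import annotations

# InfiniBand speed generations, ordered from slowest to fastest.
RATE_ORDER = ["SDR", "DDR", "QDR", "FDR", "EDR", "HDR", "NDR"]


def highest_common_rate(*supported: set[str]) -> str | None:
    """Return the fastest generation present in every supported-rate set.

    Each argument models one component of the link: the two ports and,
    as a simplification, the speeds the cable is rated for.
    """
    if not supported:
        raise ValueError("pass at least one set of supported rates")
    common = set.intersection(*supported)
    if not common:
        return None  # no shared speed, so the link cannot come up
    return max(common, key=RATE_ORDER.index)


if __name__ == "__main__":
    adapter = {"QDR", "FDR", "EDR"}          # hypothetical EDR adapter
    switch_port = {"QDR", "FDR", "EDR", "HDR"}  # hypothetical HDR switch port
    cable = {"QDR", "FDR", "EDR"}            # hypothetical EDR-rated cable
    print(highest_common_rate(adapter, switch_port, cable))  # EDR
```

A result lower than expected, or `None`, is a prompt to check the datasheets of each component before deployment rather than after a link refuses to come up.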

What Makes NVIDIA Infiniband Cables Stand Out?

Exploring the Advantages of NVIDIA Infiniband Solutions

NVIDIA InfiniBand solutions have many advantages for high-performance computer networking. Above all, they provide high bandwidth, and high data transfer rates improve application performance so that even big-data workloads run smoothly. Whereas traditional networking technologies can introduce noticeable latency, InfiniBand's low latency helps keep data flowing throughout the analysis cycle.

Moreover, as network requirements grow, NVIDIA InfiniBand continues to deliver better network performance. This scalability matters as data centers expand, enabling seamless integration into environments with larger clusters.

Furthermore, NVIDIA InfiniBand products include advanced network management capabilities, such as adaptive routing, congestion control, and advanced error detection and correction mechanisms. These features are central to the purpose of InfiniBand solutions: delivering greater efficiency and stability so that servers can handle strenuous workloads without losing performance.

Comparing Mellanox and NVIDIA Infiniband Offerings

The first thing to remember about NVIDIA's and Mellanox's networking technologies is that NVIDIA acquired Mellanox Technologies in 2020, so both are now part of the same organization. Before the acquisition they were separate companies with distinct histories. Mellanox was known for innovations that shaped the industry, and its products were crucial for building massive server and storage infrastructures that scale without being overwhelmed.

NVIDIA's InfiniBand portfolio continues to build on Mellanox's rich heritage. These solutions are now integrated into broader NVIDIA infrastructure, including platforms optimized for AI workloads. Combining Mellanox's core technology with NVIDIA's own developments delivers seamless, high-throughput data movement across large networks. NVIDIA markets InfiniBand solutions today on the strength of decades of accumulated networking expertise.

How do you install and maintain copper cables efficiently?

Best Practices for Installing Copper Cable Assemblies

To ensure an efficient installation of copper cable assemblies, plan cable routes and endpoints in detail to minimize interference and make future maintenance easier. Use high-quality materials that meet the relevant standards to guarantee durability and performance. Respect the manufacturer's minimum bend radius so cables do not kink during installation, which causes signal degradation. Secure cables with appropriate hardware to prevent strain and damage over time, particularly in high-density QSFP deployments. Label each cable clearly to simplify future maintenance and troubleshooting. Inspect connectors on EDR and FDR links during routine checks to confirm the installation still meets performance requirements. Following these practices supports good performance and a long service life for the cabling infrastructure.
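Bend-radius limits are often stated as a multiple of the cable's outer diameter, which makes a planned route easy to check before installation. In this minimal sketch, both the multiplier and the example route are hypothetical: the multiplier must come from the specific cable's datasheet, and no default is assumed.

```python
from __future__ import annotations


def min_bend_radius_mm(outer_diameter_mm: float, multiplier: float) -> float:
    """Minimum bend radius as a multiple of outer diameter (multiplier from the datasheet)."""
    return outer_diameter_mm * multiplier


def tight_bends(outer_diameter_mm: float, multiplier: float,
                planned_radii_mm: list[float]) -> list[tuple[int, float]]:
    """Return (index, radius) for every planned bend tighter than the minimum."""
    limit = min_bend_radius_mm(outer_diameter_mm, multiplier)
    return [(i, r) for i, r in enumerate(planned_radii_mm) if r < limit]


if __name__ == "__main__":
    # Hypothetical route: an 8 mm cable whose datasheet is assumed to specify 10x OD.
    print(tight_bends(8.0, 10, [120.0, 60.0, 80.0]))  # [(1, 60.0)]
```

A bend exactly at the limit passes; anything tighter is flagged so the route can be changed before the cable is pulled.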

Maintenance Tips for Prolonging the Life of Copper and Twinax Cables

To maximize the lifespan of copper and twinax cables, proper maintenance, including regular cleaning, is crucial. Inspect the cables routinely for any damage that could reduce their performance. Monitor environmental conditions and keep temperature and humidity at appropriate levels so that repeated thermal expansion and contraction do not degrade the cables and connectors. Never bend cables beyond their minimum bend radius, and do not overtighten cable ties, which can crush or deform the cable. Route auxiliary wiring so that it does not introduce electromagnetic interference into the data cables. Following these principles and maintaining cables regularly will help ensure maximum reliability and efficiency.

Dealing with Common Issues in Direct Attach Copper Installations

Direct attach copper (DAC) installations can suffer from connection dropouts, crosstalk, and damage from mishandling. Seating connectors fully and securely in the connected devices is essential to avoid disconnections. Where strong electromagnetic fields surround the installation, proper grounding is necessary to prevent interference with the signal. Shielded DAC cables also provide the necessary protection with minimal performance degradation, especially in 4x configurations. Route cables carefully: poor routing and tight bends place mechanical stress on the conductors and are likely to shorten the cables' life. Finally, plan upgrades within budget and over time, so that new technology is deployed only on the links or devices that actually need replacing.
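When a DAC link drops out or misbehaves, a quick first check is the port status reported by `ibstat` from the infiniband-diags tools, which lists each port's State, Physical state, and Rate. A port stuck in Polling, or one that trained below the expected rate, often points to a poorly seated connector or a marginal or incompatible cable. The sketch below parses an abridged, hard-coded sample shaped like `ibstat` output; the sample values and helper names are hypothetical, and the exact fields printed can vary between tool versions, so check them against your own output.

```python
import re

# Abridged, hypothetical text in the shape of `ibstat` output. On a real host
# this would be captured from the command instead of hard-coded.
SAMPLE_IBSTAT = """\
CA 'mlx5_0'
\tCA type: MT4119
\tNumber of ports: 1
\tPort 1:
\t\tState: Active
\t\tPhysical state: LinkUp
\t\tRate: 56
\t\tLink layer: InfiniBand
CA 'mlx5_1'
\tCA type: MT4119
\tNumber of ports: 1
\tPort 1:
\t\tState: Down
\t\tPhysical state: Polling
\t\tRate: 10
\t\tLink layer: InfiniBand
"""


def parse_ibstat(text: str) -> list:
    """Collect the key/value fields of every port, tagged with its adapter name."""
    ports = []
    adapter = None
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        ca_match = re.match(r"CA '([^']+)'$", line)
        if ca_match:
            adapter, current = ca_match.group(1), None
            continue
        port_match = re.match(r"Port (\d+):$", line)
        if port_match:
            current = {"ca": adapter, "port": int(port_match.group(1))}
            ports.append(current)
        elif current is not None and ":" in line:
            key, value = line.split(":", 1)
            current[key.strip()] = value.strip()
    return ports


def flag_problem_ports(ports: list, expected_rate: float) -> list:
    """Report ports that are not up, or that trained below the expected rate."""
    problems = []
    for p in ports:
        name = f"{p['ca']} port {p['port']}"
        state, phys = p.get("State"), p.get("Physical state")
        if state != "Active" or phys != "LinkUp":
            problems.append(f"{name}: {state}/{phys}")
        elif float(p.get("Rate", 0)) < expected_rate:
            problems.append(f"{name}: running at {p['Rate']} Gb/s, expected {expected_rate:g}")
    return problems


if __name__ == "__main__":
    for problem in flag_problem_ports(parse_ibstat(SAMPLE_IBSTAT), expected_rate=100):
        print(problem)
```

For the sample, the first port is flagged as running at 56 Gb/s instead of the expected 100 Gb/s, and the second as Down/Polling; both are good candidates for reseating or swapping the cable.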

Frequently Asked Questions (FAQs)

Q: What are the benefits of using QSFP28 100G EDR Infiniband cables?

A: QSFP28 100G InfiniBand EDR cables are designed for speeds up to 100 Gb/s, providing high bandwidth, low latency, and strong overall performance in data centers that require them. They are well suited to high-performance computing and storage networks. They are available as DAC (Direct Attach Copper) twinax cables for short runs and as AOC (Active Optical Cable) for distances that copper cannot cover.

Q: How do DAC and AOC cables compare in reach for InfiniBand connections?

A: DAC (Direct Attach Copper) cables are inexpensive and suit short connections; passive copper twinax cables reach up to about 5 to 7 meters, depending on the data rate. AOC (Active Optical Cable) uses fiber optics to span much longer distances, up to 100 meters or more, without degrading performance. AOCs are also lighter and easier to route; their main drawback is that they cost more than copper cables.

Q: What lengths are available for QSFP28 to QSFP28 InfiniBand cables?

A: InfiniBand cables come in a range of lengths to suit different data center requirements. Standard DAC lengths range from 1 m to 7 m, typically 1 m, 2 m, 3 m, 5 m, and 7 m. AOC versions are available in lengths such as 10 m, 15 m, and 20 m, and beyond 30 m. Choosing the correct length helps performance by avoiding unnecessary signal loss.
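Picking the shortest standard length that still covers the route keeps loss and cable clutter down. This sketch uses the standard DAC lengths listed in this answer; the 0.5 m slack allowance for routing and service loops is an assumption to adjust for your racks, and the function name is hypothetical.

```python
from __future__ import annotations

# Standard DAC lengths in metres, as listed in this FAQ answer.
STANDARD_DAC_LENGTHS_M = (1, 2, 3, 5, 7)


def pick_dac_length(path_m: float, slack_m: float = 0.5,
                    lengths: tuple = STANDARD_DAC_LENGTHS_M) -> float | None:
    """Return the shortest standard length covering the route plus slack.

    Returns None when no standard DAC length is long enough, which suggests
    looking at an active cable or an AOC instead.
    """
    needed = path_m + slack_m  # slack_m is an assumed allowance, not a standard
    for length in sorted(lengths):
        if length >= needed:
            return length
    return None


if __name__ == "__main__":
    print(pick_dac_length(2.2))  # 3
    print(pick_dac_length(6.8))  # None
```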

Q: What parameters should I consider when choosing InfiniBand products?

A: Account for the required data rate (EDR, HDR, NDR), the distance between devices, the connector types (QSFP28, QSFP56), and your budget to make the InfiniBand network work efficiently. Twinax DAC cables are affordable when the devices are close together. AOCs are advisable for long runs or where future expansion is likely. In addition, confirm that your network devices are compatible, and make sure you have any breakout cables or transceivers you need.

Q: What advantages do passive copper twinax cables have when used in Infiniband networks?

A: Passive copper twinax cables, or DACs, have several advantages in InfiniBand networks. They are inexpensive and, being passive, use very little energy, although they are effective only over short distances. They offer low latency and are well suited to in-rack interconnects and top-of-rack (ToR) links. They are also simple to deploy because they do not need separate transceivers, which makes them popular in many data center scenarios.

Q: Do 100G EDR QSFP28 cables work on platforms other than InfiniBand?

A: QSFP28 InfiniBand EDR cables are often compatible with other 100G networking standards, such as 100GBASE-CR4 Ethernet. However, it is essential to test equipment compatibility. Cables designed for broader use may support both Ethernet and InfiniBand, while others support only one protocol. Always verify the specifications and contact the manufacturer or vendor for compatibility details.

Q: What are the newer InfiniBand standards, and how do they compare with EDR?

A: InfiniBand generations have progressed from SDR (Single Data Rate), DDR (Double Data Rate), and QDR (Quad Data Rate) through FDR (Fourteen Data Rate) to EDR (Enhanced Data Rate). The newer standards are HDR (High Data Rate), which supports up to 200 Gb/s per port using QSFP56 connectors, and NDR (Next Data Rate), which raises this to 400 Gb/s. Both offer more bandwidth and better performance than EDR at 100 Gb/s. However, EDR remains widespread and is sufficient for most existing systems. When upgrading, weigh your network's expansion plans against the cost and benefit of adopting the newer standards.
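The headline port speeds follow from a simple rule: nominal per-lane rate times the number of lanes, with 4x links being the common case. This sketch uses the per-lane figures as they are commonly quoted; for SDR through QDR these are signalling rates, and 8b/10b encoding leaves 80% for data (a 4x QDR link carries about 32 Gb/s of payload), while later generations use more efficient encodings, so their quoted figures sit close to the usable data rate. The table and function names are our own.

```python
# Nominal per-lane rates in Gb/s, as commonly quoted for each generation.
PER_LANE_GBPS = {
    "SDR": 2.5, "DDR": 5, "QDR": 10, "FDR": 14,
    "EDR": 25, "HDR": 50, "NDR": 100,
}


def link_rate_gbps(generation: str, lanes: int = 4) -> float:
    """Nominal link rate: per-lane rate times the number of lanes (4x by default)."""
    return PER_LANE_GBPS[generation] * lanes


if __name__ == "__main__":
    for gen in PER_LANE_GBPS:
        print(f"{gen}: {link_rate_gbps(gen):g} Gb/s over a 4x link")
    # Splitting an HDR port into two-lane links gives 100 Gb/s each.
    print(f"HDR over 2 lanes: {link_rate_gbps('HDR', lanes=2):g} Gb/s")
```

The two-lane case is the arithmetic behind splitting one HDR port into two 100 Gb/s links with a breakout cable.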

Related Products:

-

NVIDIA MCA4J80-N004 Compatible 4m (13ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable

$650.00


-

NVIDIA MCA4J80-N005 Compatible 5m (16ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable

$700.00


-

NVIDIA MCP7Y60-H002 Compatible 2m (7ft) 400G OSFP to 2x200G QSFP56 Passive Direct Attach Cable

$125.00


-

NVIDIA MCP4Y10-N00A Compatible 0.5m (1.6ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Passive Direct Attach Copper Cable

$105.00


-

NVIDIA MCP7Y60-H001 Compatible 1m (3ft) 400G OSFP to 2x200G QSFP56 Passive Direct Attach Cable

$99.00


-

NVIDIA MFS1S00-H003V Compatible 3m (10ft) 200G InfiniBand HDR QSFP56 to QSFP56 Active Optical Cable

$350.00


-

NVIDIA(Mellanox) MCP1650-H003E26 Compatible 3m (10ft) Infiniband HDR 200G QSFP56 to QSFP56 PAM4 Passive Direct Attach Copper Twinax Cable

$80.00


-

NVIDIA MFA7U10-H003 Compatible 3m (10ft) 400G OSFP to 2x200G QSFP56 twin port HDR Breakout Active Optical Cable

$750.00


-

NVIDIA MCP7Y60-H01A Compatible 1.5m (5ft) 400G OSFP to 2x200G QSFP56 Passive Direct Attach Cable

$116.00
