In high-performance computing (HPC) and data-intensive applications, network infrastructure is vital to performance and efficiency. NVIDIA Mellanox InfiniBand adapter cards are at the forefront here, with advanced features designed to raise data transfer rates and cut latency, improving overall system throughput. This article examines these adapter cards in detail: their architecture, their performance, and their use in areas such as artificial intelligence (AI), machine learning (ML), and big data analytics. The aim is to show how much NVIDIA Mellanox InfiniBand adapters can improve network performance while supporting the demands of current computational workloads.

What Is an InfiniBand Adapter Card, and How Does It Work?

Understanding InfiniBand Technology

InfiniBand is a high-speed network technology used in data centers and high-performance computing (HPC) environments. It is built on a switched fabric architecture, which lets many devices communicate concurrently without contending for a shared medium, making data transmission more efficient. InfiniBand links are point-to-point and full duplex, carrying traffic in both directions at once, which keeps latency low and throughput high. Depending on the generation, link speeds range from 10 Gbps to more than 200 Gbps, so different performance levels can be accommodated. A key capability is Remote Direct Memory Access (RDMA), which lets one computer read from or write to another computer's memory without involving the remote operating system or CPU in the transfer. This further reduces latency and CPU consumption, which benefits scientific research, financial modeling, machine learning, and other workloads where data must move quickly.

Key Features of Mellanox InfiniBand Network Cards

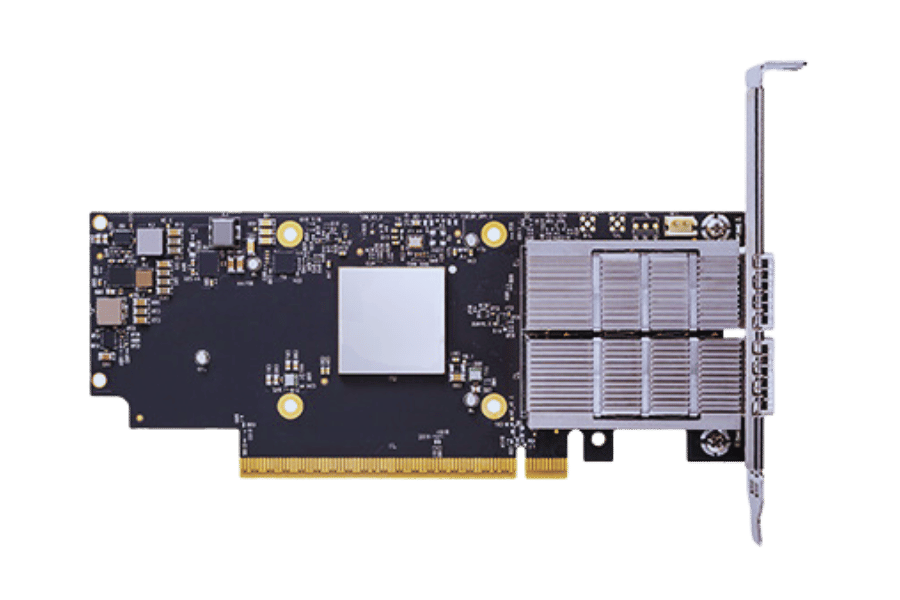

Mellanox InfiniBand adapter cards are designed to deliver high throughput with low latency in data center and HPC environments. Key features include:

- High speed: Depending on the model, the cards support up to 200 Gbps (ConnectX-6) or 400 Gbps (ConnectX-7) per port, allowing large volumes of data to move during large-scale computations.

- Low latency: The adapters use RDMA, which keeps response times minimal. This matters for applications that need fast data analysis or real-time processing.

- Flexible scalability: The switched fabric architecture lets a network grow to many more nodes than shared-medium designs can accommodate, without sacrificing performance as it scales.

- Reliability: The adapters are designed for continuous operation, with integrated error correction and management capabilities, so they can be relied upon in mission-critical deployments.

- Broad compatibility: They work with a range of operating systems and programming models, making them easy to integrate into different infrastructure setups.

- Security: Built-in security functions, including encryption of data in transit on supported models, help protect sensitive information exchanged between systems.

These attributes make Mellanox InfiniBand network cards a strong choice for boosting performance in demanding compute environments.

Comparing InfiniBand and Ethernet Adapter Cards

InfiniBand and Ethernet adapter cards differ in performance, scalability, cost, and suitability for different applications.

- Performance: InfiniBand offers high bandwidth (200 Gbps per port with HDR, and more on newer generations) and lower latency than Ethernet, making it well suited to high-performance computing (HPC) and data-intensive environments. Ethernet has also moved to faster standards, such as 100 Gbps, but it generally does not match InfiniBand's low latency.

- Scalability: InfiniBand's switched fabric lets dense environments interconnect many nodes without degrading performance. Ethernet can face difficulties at large scale, where contention increases latency.

- Cost and complexity: InfiniBand usually has higher upfront costs because it requires specialized hardware, although its data throughput efficiency can offset operating expenses over time. Ethernet is more widely adopted and can reuse existing infrastructure, which often means lower deployment costs and simpler integration.

- Use cases: Data centers use InfiniBand heavily for HPC workloads, deep learning training clusters, and large-scale analytics where speed is critical. Ethernet remains the most common choice for general networking, such as connecting computers within a building or office (LAN) and handling mixed workloads.

In short, the choice between an InfiniBand and an Ethernet adapter card depends on the use case: weigh performance needs, required scalability, and cost-effectiveness for the target application.
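To see why both latency and bandwidth matter in this comparison, a simple first-order model treats the time to deliver a message as a fixed per-message latency plus serialization time (message size divided by link bandwidth). The sketch below assumes that model and uses hypothetical link parameters chosen for illustration, not measurements of any InfiniBand or Ethernet product; it ignores protocol overhead, congestion, and software stack costs.

```python
def transfer_time_us(size_bytes: int, bandwidth_gbps: float, latency_us: float) -> float:
    """First-order estimate: fixed latency plus serialization time, in microseconds."""
    bits = size_bytes * 8
    # 1 Gbit/s moves 1e9 bits per second, i.e. 1e3 bits per microsecond.
    serialization_us = bits / (bandwidth_gbps * 1e3)
    return latency_us + serialization_us


# Hypothetical link parameters for illustration only; replace with measured values.
LINKS = {
    "link A (200 Gbps, 1 us)": (200.0, 1.0),
    "link B (100 Gbps, 5 us)": (100.0, 5.0),
}

if __name__ == "__main__":
    for size in (64, 4096, 1 << 20, 1 << 30):
        times = ", ".join(
            f"{name}: {transfer_time_us(size, bw, lat):,.2f} us"
            for name, (bw, lat) in LINKS.items()
        )
        print(f"{size:>13,} bytes -> {times}")
```

Under this model the latency term dominates for small messages and the bandwidth term dominates for large transfers, which is why both figures matter when comparing fabrics.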

How to Choose the Best InfiniBand Adapter for Your Needs?

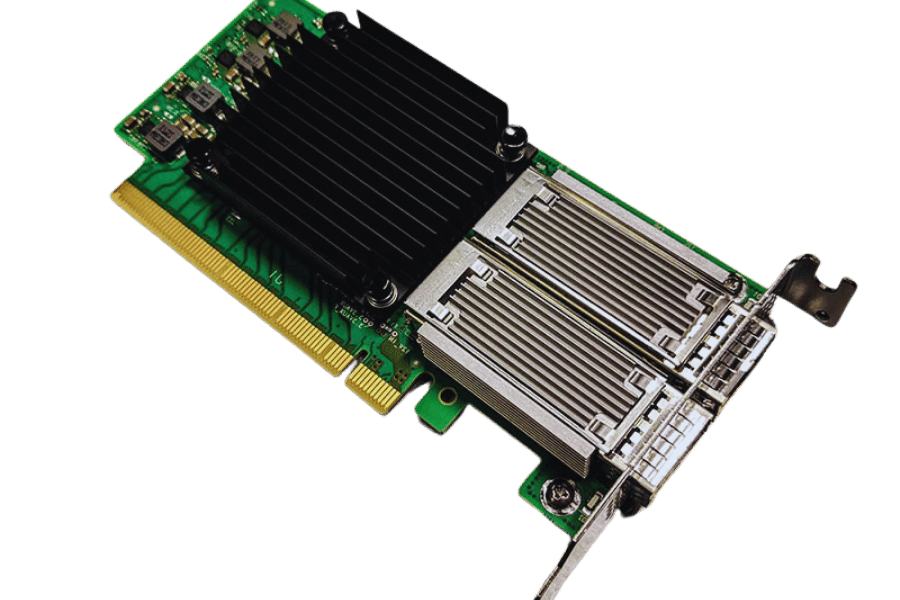

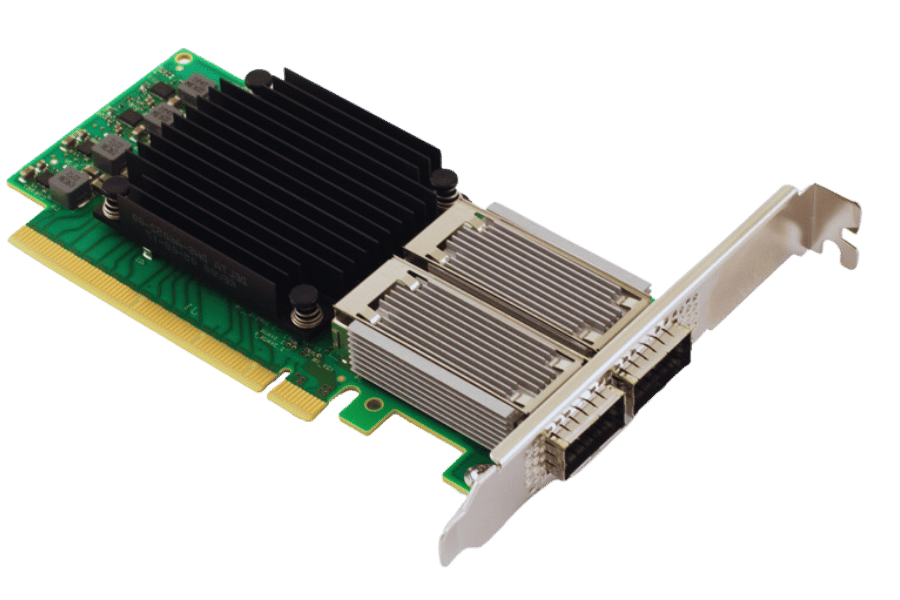

Single-Port vs. Dual-Port InfiniBand Adapters

When choosing between single-port and dual-port InfiniBand adapters, consider your networking and performance requirements. Single-port adapters are usually cheaper and suit smaller systems where maximum bandwidth is not a priority. They handle typical workloads while keeping cabling, switch ports, and management overhead down.

Dual-port adapters add a second port, which provides redundancy and can raise throughput for data-intensive applications. Traffic can be balanced across both paths, reducing the chance of bottlenecks between network components, and if one link fails, traffic can fail over to the other. This makes dual-port setups suitable for mission-critical environments.

In conclusion, the choice should reflect an organization's scalability plans, budget, and above all its performance requirements. Environments with high current or expected data loads may need dual-port adapters for their throughput and reliability, while single-port adapters can serve less demanding situations well.
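One practical check when sizing a dual-port card is whether the host slot can feed both ports at line rate at the same time, since the combined traffic of both ports passes through a single PCIe interface. The sketch below compares aggregate port bandwidth with the usable per-direction bandwidth of a PCIe slot. It assumes 128b/130b encoding (PCIe 3.0 and later) and ignores transaction-layer overhead, so real headroom is somewhat lower; the function names and example configurations are illustrative.

```python
# Per-lane transfer rates (GT/s) for PCIe generations that use 128b/130b encoding.
PCIE_GT_PER_LANE = {3: 8.0, 4: 16.0, 5: 32.0}


def pcie_gbps_per_direction(gen: int, lanes: int = 16) -> float:
    """Usable bandwidth in one direction after 128b/130b encoding, in Gbit/s."""
    return PCIE_GT_PER_LANE[gen] * lanes * 128 / 130


def slot_can_feed_ports(ports: int, port_gbps: float, gen: int, lanes: int = 16) -> bool:
    """True if the slot can carry every port at full line rate at the same time."""
    return ports * port_gbps <= pcie_gbps_per_direction(gen, lanes)


if __name__ == "__main__":
    for ports, rate, gen in [(1, 200, 4), (2, 100, 4), (2, 200, 4), (2, 200, 5)]:
        verdict = "fits" if slot_can_feed_ports(ports, rate, gen) else "slot-limited"
        print(f"{ports} x {rate} Gbps on PCIe {gen}.0 x16: {verdict}")
```

Under these assumptions, two 200 Gbps ports exceed what a PCIe 4.0 x16 slot carries in one direction (about 252 Gbps), so in such a slot the second port adds redundancy and path diversity rather than doubled throughput.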

Understanding HDR, EDR, and FDR InfiniBand Speeds

InfiniBand has several speed generations, including FDR (Fourteen Data Rate), EDR (Enhanced Data Rate), and HDR (High Data Rate). FDR runs at 56 Gbps per link, a significant step up from earlier generations that raised data transfer speeds in HPC environments. EDR raised throughput again to 100 Gbps per link, improving data handling efficiency and lowering latency for demanding applications. HDR offers 200 Gbps per link, suiting present-day HPC and modern data center needs, and the newer NDR generation, used by the ConnectX-7 adapters discussed below, doubles that to 400 Gbps. Choose among them based on use-case requirements, overall network architecture, and future scalability plans. Understanding these differences helps organizations decide where to invest in their InfiniBand infrastructure to achieve optimal performance while keeping pace with evolving technology.
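Each of these link rates is the product of the lane count and the per-lane rate of the generation. Standard links use four lanes (4x), while HDR100 uses two HDR lanes. The sketch below uses the nominal, marketed per-lane rates (actual signaling rates differ slightly because of line encoding) to reproduce the figures above.

```python
# Nominal per-lane data rates in Gbit/s for each InfiniBand generation.
LANE_GBPS = {"FDR": 14, "EDR": 25, "HDR": 50, "NDR": 100}


def link_gbps(generation: str, lanes: int = 4) -> int:
    """Nominal link rate: number of lanes times the per-lane rate."""
    return LANE_GBPS[generation] * lanes


if __name__ == "__main__":
    for gen in LANE_GBPS:
        print(f"{gen} 4x: {link_gbps(gen)} Gbps")
    print(f"HDR100 (HDR 2x): {link_gbps('HDR', lanes=2)} Gbps")
```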

Considering PCIe x16 Interfaces: PCIe 4.0 vs PCIe 5.0

When evaluating PCIe x16 slots, it helps to know how PCIe 4.0 and 5.0 differ in bandwidth and compatibility. A full x16 PCIe 4.0 link has a theoretical maximum of about 64 GB/s (gigabytes per second) counting both directions, twice the 32 GB/s of PCIe 3.0. This extra headroom benefits bandwidth-hungry applications such as data analytics and high-speed networking.

PCIe 5.0 doubles the per-lane rate again, giving about 128 GB/s for an x16 link, which leaves room for faster data-driven work as workloads grow. PCIe 5.0 is also backward compatible: a PCIe 5.0 card still works in a PCIe 4.0 slot at the lower speed, which makes staged upgrades easier. For network adapters the difference is concrete: a 200 Gbps port fits within a PCIe 4.0 x16 link, while a 400 Gbps port needs PCIe 5.0 x16 to run at full rate. Choose PCIe 5.0 if you need maximum scalability and performance; PCIe 4.0 remains a good fit for many environments with high data transfer rates and low-latency demands.
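The bandwidth figures above follow from the per-lane transfer rate, the lane count, and the line encoding. The sketch below assumes 128b/130b encoding (used from PCIe 3.0 onward), ignores packet overhead, and compares each x16 link with the bandwidth a 200 Gbps or 400 Gbps network port needs in one direction.

```python
# Per-lane transfer rates (GT/s); PCIe 3.0 and later use 128b/130b encoding.
GT_PER_LANE = {3: 8.0, 4: 16.0, 5: 32.0}


def x16_gbytes_per_direction(gen: int) -> float:
    """Usable GB/s in one direction for an x16 link after line encoding."""
    bits_per_second = GT_PER_LANE[gen] * 1e9 * 16 * 128 / 130
    return bits_per_second / 8 / 1e9


def port_gbytes(port_gbps: float) -> float:
    """Convert a network port rate from Gbit/s to GB/s."""
    return port_gbps / 8


if __name__ == "__main__":
    for gen in GT_PER_LANE:
        one_way = x16_gbytes_per_direction(gen)
        print(f"PCIe {gen}.0 x16: {one_way:.2f} GB/s each way, {2 * one_way:.2f} GB/s total")
    for rate in (200, 400):
        print(f"{rate} Gbps port: needs {port_gbytes(rate):.1f} GB/s each way")
```

The rounded 32, 64, and 128 GB/s figures correspond to the bidirectional totals before encoding overhead; a 200 Gbps port (25 GB/s) fits in PCIe 4.0 x16 (about 31.5 GB/s each way), while a 400 Gbps port (50 GB/s) needs PCIe 5.0.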

What Are the Benefits of the NVIDIA Mellanox ConnectX-6 VPI Adapter Card?

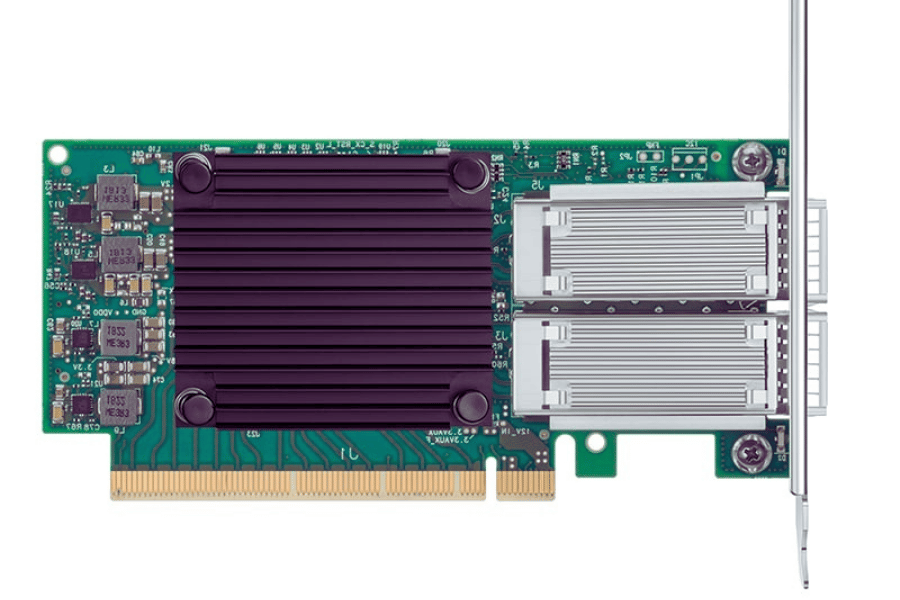

Performance and Flexibility of ConnectX-6 VPI

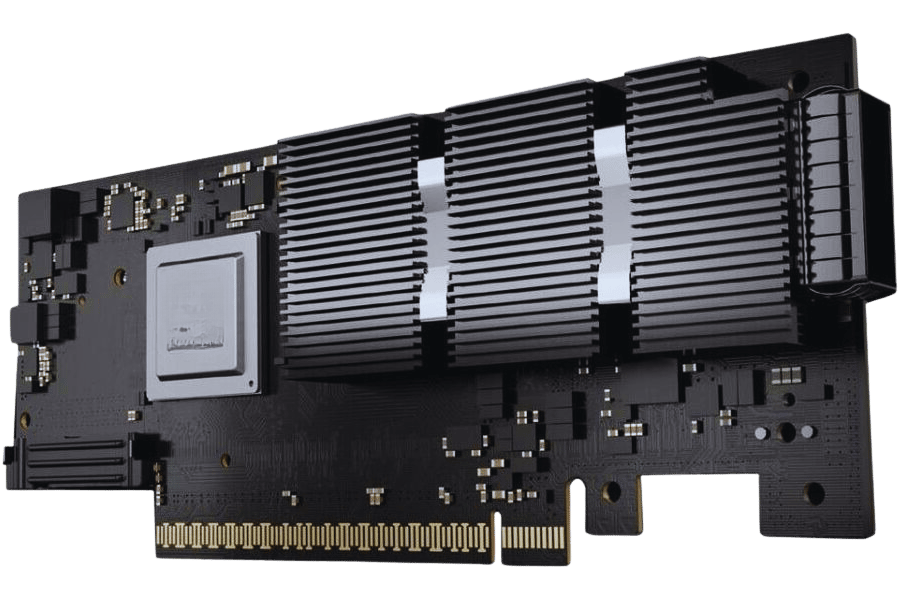

The NVIDIA Mellanox ConnectX-6 VPI network adapter card is designed for data centers with heavy processing needs. It supports both the InfiniBand and Ethernet protocols, so it can operate in different network environments, which makes it a flexible option for organizations looking to improve their infrastructure.

The card runs at up to 200 Gb/s, raising data transfer rates and significantly reducing latency, which is especially important for HPC, AI, and ML applications. ConnectX-6 VPI also provides hardware acceleration for many protocols, so operations such as RDMA (Remote Direct Memory Access) complete faster and with less CPU involvement.

The card also gives IT teams detailed visibility into network behavior, supporting reliability through proactive troubleshooting. Together, these capabilities make ConnectX-6 VPI a compelling networking solution that can adapt to the changing workloads of modern data centers.

Integrating ConnectX-6 VPI in Data Center Applications

Integrating the NVIDIA Mellanox ConnectX-6 VPI adapter card into data center applications calls for a systematic approach. First, evaluate the current network architecture to find the bottlenecks that deploying ConnectX-6 VPI can remove. In supercomputing clusters, for example, its RDMA support greatly improves data transfer efficiency and speeds up computational tasks.

Second, because the card supports both Ethernet and InfiniBand, IT teams can configure ports for whichever protocol suits a particular workload without major infrastructure changes. Its built-in management capabilities enable real-time monitoring and automated network adjustments, which improves resource allocation while keeping performance consistent across applications. In environments that must scale, ConnectX-6 VPI deployments can grow in proportion to data traffic, making the card a strong building block for contemporary data center design.
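On Linux, one way to confirm which protocol each VPI port is currently running is to read the RDMA subsystem's sysfs attributes, where each port exposes `link_layer` (`InfiniBand` or `Ethernet`) and `state`. The sketch below assumes the standard `/sys/class/infiniband/<device>/ports/<port>/` layout; the `root` parameter exists only so the function can be pointed at a test directory. Changing a port's protocol is done separately with NVIDIA's firmware tools and is not shown here.

```python
from pathlib import Path


def _read_attr(path: Path) -> str:
    """Read a sysfs attribute, returning 'unknown' if it is absent."""
    return path.read_text().strip() if path.exists() else "unknown"


def port_link_layers(root: str = "/sys/class/infiniband") -> dict[str, str]:
    """Map 'device/port' to its link layer and state, e.g. 'InfiniBand (4: ACTIVE)'."""
    result = {}
    for port_dir in sorted(Path(root).glob("*/ports/*")):
        device = port_dir.parent.parent.name
        layer = _read_attr(port_dir / "link_layer")
        state = _read_attr(port_dir / "state")
        result[f"{device}/{port_dir.name}"] = f"{layer} ({state})"
    return result


if __name__ == "__main__":
    ports = port_link_layers()
    for port, info in ports.items():
        print(port, info)
    if not ports:
        print("no RDMA devices found")
```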

Using ConnectX-6 VPI with HDR100 and QSFP56

The NVIDIA Mellanox ConnectX-6 VPI adapter supports HDR at 200 Gbps and HDR100, which runs at 100 Gbps per port over two HDR lanes. Both use QSFP56 connectors, so taking advantage of these rates requires QSFP56 cables or transceivers rated for them. QSFP56 modules were designed for higher data throughput and low latency, making them well suited to high-performance network setups.

When combining ConnectX-6 VPI with HDR100 and QSFP56, also consider the cables and the rest of the infrastructure. Confirm compatibility with existing hardware, and check that the data center's cabling can meet HDR100 transmission requirements. Network performance and reliability in bandwidth-intensive scenarios can be improved further with the QoS features and advanced Ethernet capabilities that ConnectX-6 VPI supports. Aligning these components lets organizations substantially improve system performance and data transfer efficiency.
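After cabling, it is worth confirming that each port negotiated the expected rate, since a link can come up at a lower speed than planned. The Linux RDMA stack reports each port's rate in `/sys/class/infiniband/<device>/ports/<port>/rate` as a string such as `200 Gb/sec (4X HDR)`. The sketch below parses that format (assumed here from the kernel's sysfs output, where the speed name is omitted for SDR) and compares the result with an expected rate; `check_rate` is a hypothetical helper name.

```python
import re

# Matches strings like "100 Gb/sec (2X HDR)" or "10 Gb/sec (4X)".
RATE_RE = re.compile(r"^(\d+(?:\.\d+)?) Gb/sec \((\d+)X ?([A-Z0-9]*)\)")


def parse_rate(text: str) -> tuple[float, int, str]:
    """Parse a sysfs rate string into (Gbit/s, lane count, speed name)."""
    match = RATE_RE.match(text.strip())
    if match is None:
        raise ValueError(f"unrecognized rate string: {text!r}")
    gbps, lanes, speed = match.groups()
    return float(gbps), int(lanes), speed or "SDR"


def check_rate(text: str, expected_gbps: float) -> str:
    """Report the negotiated rate and whether it meets the expected rate."""
    gbps, lanes, speed = parse_rate(text)
    status = "OK" if gbps >= expected_gbps else "BELOW EXPECTED"
    return f"{gbps:g} Gb/s over {lanes} lanes ({speed}): {status}"


if __name__ == "__main__":
    print(check_rate("100 Gb/sec (2X HDR)", expected_gbps=100))
    print(check_rate("56 Gb/sec (4X FDR)", expected_gbps=100))
```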

How Do You Install and Configure InfiniBand Adapter Cards?

Step-by-Step Installation Process

- Preparation: Before installation, gather the necessary tools and components, such as the InfiniBand adapter card, screws, and the installation manual. Shut down the server and disconnect it from power.

- Open the server chassis: Use a screwdriver to remove the cover or side panel and reach the motherboard and expansion slots. Take proper anti-static precautions to avoid damaging any hardware.

- Locate a PCIe slot: Identify the PCI Express slot that will hold the InfiniBand adapter card and confirm that it meets the card's requirements.

- Install the adapter card: Align the card's edge connector with the selected slot, preferably one with the lane width the card is designed for (such as x16) so it can reach its full bandwidth, then press gently but firmly until the card is fully seated. Secure the bracket with a screw if the server's design requires it.

- Connect cables: Connect any required power cables and the network cables. Verify that the cables support the desired data rates, and replace any that do not.

- Close the server chassis: Reattach the cover or side panel and fasten its screws so nothing comes loose during later transport or maintenance.

- Power up and configure: Reconnect power, switch the server on, and check in the BIOS/UEFI settings that the card is detected. Then install the drivers and configure networking with the operating system's software tools.

- Testing: Confirm that the device works correctly by running connectivity tests and performance benchmarks, which also verify that each earlier step was done properly.

Following these steps results in a correctly installed and configured InfiniBand adapter card that can deliver its full performance in a high-speed network environment.
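A common installation problem is a card that is detected but trained at fewer lanes or a lower speed than it supports, for example because it sits in a slot that is physically x16 but electrically narrower. On Linux, each PCI device exposes `current_link_speed`, `current_link_width`, `max_link_speed`, and `max_link_width` in sysfs. The sketch below compares them; the PCI address in the usage block is hypothetical, so substitute the one `lspci` shows for your adapter. A lower speed can also simply reflect an older slot generation rather than a fault.

```python
from pathlib import Path


def _read(dev: Path, name: str) -> str:
    return (dev / name).read_text().strip()


def _gts(speed: str) -> float:
    """Extract GT/s from strings such as '16.0 GT/s PCIe' or '8 GT/s'."""
    return float(speed.split()[0])


def check_pcie_link(pci_addr: str, root: str = "/sys/bus/pci/devices") -> list[str]:
    """Return warnings if the link trained below the device's maximum speed or width."""
    dev = Path(root) / pci_addr
    warnings = []
    cur_speed, max_speed = _gts(_read(dev, "current_link_speed")), _gts(_read(dev, "max_link_speed"))
    cur_width, max_width = int(_read(dev, "current_link_width")), int(_read(dev, "max_link_width"))
    if cur_speed < max_speed:
        warnings.append(f"link speed {cur_speed:g} GT/s below maximum {max_speed:g} GT/s")
    if cur_width < max_width:
        warnings.append(f"link width x{cur_width} below maximum x{max_width}")
    return warnings


if __name__ == "__main__":
    addr = "0000:3b:00.0"  # hypothetical PCI address; use the one lspci reports
    try:
        for msg in check_pcie_link(addr) or ["link is at full speed and width"]:
            print(msg)
    except (OSError, ValueError) as exc:
        print(f"could not read link attributes for {addr}: {exc}")
```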

Configuring InfiniBand Network Settings

Several key steps help ensure maximum performance and connectivity when setting up an InfiniBand network. First, make sure a subnet manager is running on the fabric, either on a host (for example, OpenSM) or on a managed switch; the subnet manager assigns addresses and computes routes, which is essential for controlling traffic flow. Use the OpenFabrics Enterprise Distribution (OFED) for drivers and software management, since it includes the required configuration tools.

When IP-based networking is needed, configure IP over InfiniBand (IPoIB). This means binding IP addresses to the InfiniBand interfaces, either with your distribution's network management tools or in a configuration file such as /etc/network/interfaces. Also set the MTU (Maximum Transmission Unit) to suit the application: in IPoIB connected mode the MTU can be raised to 65520 bytes for high-throughput transfers, while in datagram mode it is limited by the InfiniBand link MTU (for example, 2044 bytes with a 2048-byte link MTU).

Finally, use command-line utilities such as ibstat to check link status and ibping to test connectivity between nodes, which confirms whether InfiniBand is configured correctly. In dynamic network environments, monitor performance and adjust InfiniBand settings periodically to keep it optimal.
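The maximum IPoIB MTU depends on the interface mode: up to 65520 bytes in connected mode, and the InfiniBand link MTU minus the 4-byte IPoIB header in datagram mode. Linux exposes an IPoIB interface's mode in `/sys/class/net/<iface>/mode` (`datagram` or `connected`) and its MTU in `/sys/class/net/<iface>/mtu`. The sketch below reports whether the configured MTU leaves headroom; it assumes a 4096-byte InfiniBand link MTU unless told otherwise, and `ib0` is only an example interface name.

```python
from pathlib import Path

CONNECTED_MODE_MAX_MTU = 65520  # IPoIB connected-mode maximum, in bytes
IPOIB_HEADER_BYTES = 4          # IPoIB encapsulation header


def max_ipoib_mtu(mode: str, ib_link_mtu: int = 4096) -> int:
    """Largest IPoIB MTU for the interface mode ('connected' or 'datagram')."""
    if mode == "connected":
        return CONNECTED_MODE_MAX_MTU
    return ib_link_mtu - IPOIB_HEADER_BYTES


def describe_ipoib(iface: str = "ib0", root: str = "/sys/class/net", ib_link_mtu: int = 4096) -> str:
    """Report the interface's mode and MTU against the mode's maximum."""
    base = Path(root) / iface
    mode = (base / "mode").read_text().strip()
    mtu = int((base / "mtu").read_text())
    limit = max_ipoib_mtu(mode, ib_link_mtu)
    if mtu < limit:
        return f"{iface}: {mode} mode, MTU {mtu} (up to {limit} possible)"
    return f"{iface}: {mode} mode, MTU {mtu} (at maximum)"


if __name__ == "__main__":
    try:
        print(describe_ipoib())
    except OSError as exc:
        print(f"could not read IPoIB settings: {exc}")
```

Whether raising the MTU helps depends on the workload; larger MTUs mainly benefit bulk, throughput-oriented transfers.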

Troubleshooting Common Issues

Several common issues can affect performance and connectivity in an InfiniBand network. Troubleshooting steps for each are below:

- Link status and connectivity problems: Check the link status of the InfiniBand interfaces with the ibstat command. If a link is down, inspect the physical connections, making sure cables are properly seated and undamaged, then restart the subnet manager or the affected nodes to re-establish communication.

- Configuration errors: Check the /etc/network/interfaces file and other network management settings for mistakes, particularly in the IPoIB configuration. Confirm that the subnet manager is configured correctly and running, and verify that MTU settings match application requirements.

- Performance degradation: When throughput drops, monitor traffic (for example, by reading port counters with perfquery) to identify bottlenecks. Inspect switches, adapters, and other hardware to rule out failures or overloads, and consider adjusting Quality of Service (QoS) settings to optimize performance.

Working through these steps systematically with the appropriate commands and configuration restores functionality and performance to an InfiniBand environment. Updating drivers and firmware regularly also prevents many common problems in advance.
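Port error counters are often the quickest way to tell a bad cable or port apart from a configuration problem. The Linux RDMA stack exposes them under `/sys/class/infiniband/<device>/ports/<port>/counters/`. The sketch below reads a few standard counters and reports the non-zero ones; `mlx5_0` is an example device name, and which counters matter (and what counts as a worrying value) depends on your environment, so treat this as a starting point rather than a diagnosis.

```python
from pathlib import Path

ERROR_COUNTERS = (
    "symbol_error",
    "link_error_recovery",
    "link_downed",
    "port_rcv_errors",
    "port_xmit_discards",
)


def nonzero_error_counters(device: str, port: int = 1, root: str = "/sys/class/infiniband") -> dict[str, int]:
    """Return the error counters for one port that are above zero."""
    counters_dir = Path(root) / device / "ports" / str(port) / "counters"
    found = {}
    for name in ERROR_COUNTERS:
        path = counters_dir / name
        if path.exists():
            value = int(path.read_text())
            if value > 0:
                found[name] = value
    return found


if __name__ == "__main__":
    errors = nonzero_error_counters("mlx5_0")  # example device name
    print(errors or "no error counts found for mlx5_0 port 1")
```

Comparing two readings taken a few minutes apart shows whether errors are still accumulating, which is more informative than a single snapshot.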

What to Expect from the Latest NVIDIA Mellanox MCX75510AAS-NEAT ConnectX®-7 InfiniBand Adapter Card?

Advanced Features and Performance Upgrades

The NVIDIA Mellanox ConnectX®-7 InfiniBand adapter card delivers higher data rates with greater efficiency and throughput, along with several distinctive features. One is enhanced adaptive routing (EAR), which improves fault tolerance and efficiency by dynamically shifting the paths that data takes. The card also supports hardware-accelerated RDMA (Remote Direct Memory Access) and congestion control, which significantly reduce latency and CPU overhead, benefiting high-performance computing and other data-intensive applications.

ConnectX-7 also includes telemetry capabilities for monitoring network performance metrics in real time. Used with NVIDIA Quantum InfiniBand switches, the adapter provides end-to-end visibility that helps make full use of available bandwidth across large-scale data center environments. Other improvements, such as larger buffer memory for absorbing bursts of traffic, contribute to better system responsiveness and throughput.

Comparing ConnectX-7 with Previous Generations

Comparing ConnectX-7 with earlier generations such as ConnectX-6 and ConnectX-5 reveals several key advances. ConnectX-7 supports up to 400 Gbps of data throughput, double the 200 Gbps of ConnectX-6. This jump matters for systems that move large amounts of data, because more information can be sent at once, easing congestion in high-performance environments.

Its enhanced adaptive routing also improves fault tolerance and dynamic path adjustment compared with older generations. Hardware-accelerated RDMA and congestion control in ConnectX-7 further optimize performance by reducing latency and CPU load, which suits areas such as AI and big data processing.

In addition, ConnectX-7's telemetry enhancements enable more complete real-time monitoring of network health and performance than earlier generations offered. Overall, the card is a significant step forward from its predecessors, providing the capabilities modern data centers require.

Optimal Use Cases for ConnectX-7 InfiniBand Adapters

ConnectX-7 InfiniBand adapters suit many critical applications in modern computing. They are particularly strong in high-performance computing (HPC) environments, where data must move quickly and with minimal delay. With 400 Gbps of throughput, they are an excellent choice for simulations and complex computations over large amounts of data.

ConnectX-7 also significantly improves performance in big data and analytics applications. Hardware-accelerated RDMA combined with congestion control lets these adapters handle large data volumes efficiently, which suits analytics workloads that need real-time insights.

The adapters are also valuable for machine learning (ML) and artificial intelligence (AI) workloads. Enhanced adaptive routing and low latency speed the transfer of data between nodes in large clusters, which helps train models on vast datasets quickly. Because these workloads demand extensive resources, ConnectX-7 adapters help provide the network infrastructure they require.

Frequently Asked Questions (FAQs)

Q: What is an NVIDIA Mellanox InfiniBand Adapter Card?

A: An NVIDIA Mellanox InfiniBand adapter card is a high-performance network adapter designed to deliver very fast, reliable data transfer in data center, cloud, and high-performance computing environments. These cards are known for high throughput and low latency.

Q: How does the ConnectX series enhance performance and scalability?

A: Each ConnectX generation adds capabilities that support advanced protocols and has typically doubled the maximum data rate of its predecessor, from 200 Gbps on ConnectX-6 to 400 Gbps on ConnectX-7. ConnectX-6 models, for example, offer dual-port QSFP56 with PCIe 4.0 x16 interfaces for efficient, flexible networking.

Q: What is the difference between dual-port and single-port Infiniband cards?

A: A dual-port InfiniBand card has two ports for network cables, while a single-port card has one. The second port adds redundancy and can raise aggregate data transfer rates, which makes dual-port cards suitable for demanding applications where flexibility matters.

Q: What does “Virtual Protocol Interconnect” (VPI) mean?

A: Virtual Protocol Interconnect (VPI) is a feature of some network adapter cards that lets their ports run either InfiniBand or Ethernet, giving more flexibility in how networks are configured for different needs.

Q: Can NVIDIA Mellanox InfiniBand Adapter Cards work with PCIe 5.0 x16?

A: Yes. Some NVIDIA Mellanox InfiniBand adapter cards, such as ConnectX-7 models, use a PCIe 5.0 x16 interface, which offers higher data rates than earlier versions such as PCIe 4.0.

Q: What does it mean to have 215 million messages per second?

A: A rate of 215 million messages per second indicates how quickly the adapter can send messages, which is very important for applications that need rapid, efficient communication, such as high-frequency trading or real-time simulations.

Q: What are some key features of the ConnectX-6 VPI Card (MCX653106A-ECAT-SP)?

A: The ConnectX-6 VPI card (MCX653106A-ECAT-SP) combines high performance with flexibility. It offers dual-port QSFP56 connectivity, PCIe 4.0 x16 support, hardware acceleration, enhanced security features, and optimized power consumption, making it well suited to demanding networking tasks.

Q: What speeds can NVIDIA Mellanox InfiniBand Adapter Cards handle?

A: Depending on the model, these adapter cards support a range of speeds, including SDR, DDR, and EDR InfiniBand as well as 100GbE Ethernet, ensuring wide compatibility with different network infrastructures and performance levels.

Q: How do these cards meet the continuously increasing requirements of modern networks?

A: They are designed to adapt to the changing needs of modern networks. Besides high throughput, they offer low latency, advanced protocol support, and scalability, so they can handle growing data traffic across larger networks.

Q: Why is there a tall bracket on a network adapter card?

A: A tall (full-height) bracket secures the card in servers and other systems whose chassis have full-height expansion-slot openings, keeping the card firmly attached to its host so it can operate reliably in the data center. Low-profile systems need a short bracket instead.
