NVIDIA has released the latest GB200 series compute systems, with significantly improved performance. These systems utilize both copper and optical interconnects, leading to much discussion in the market about the evolution of “copper” and “optical” technologies.

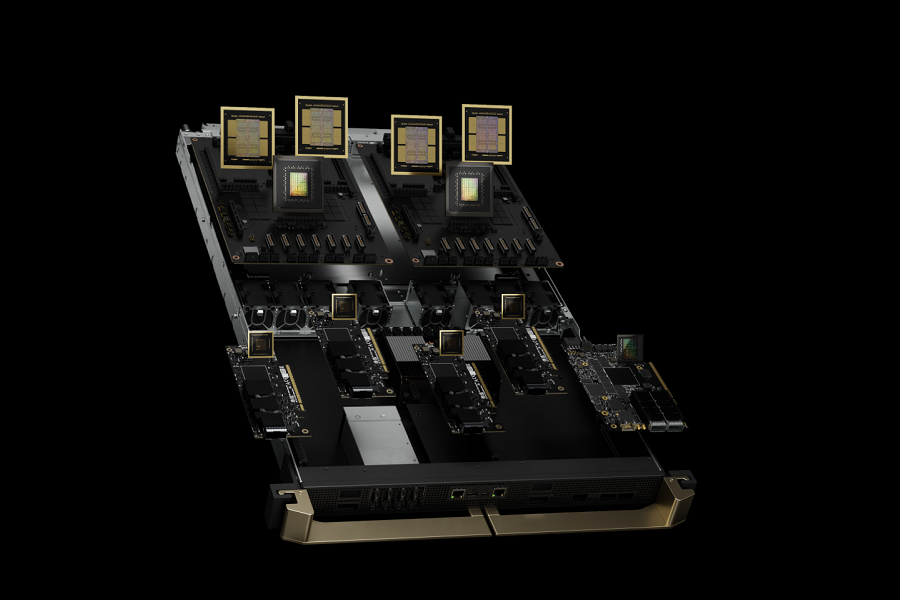

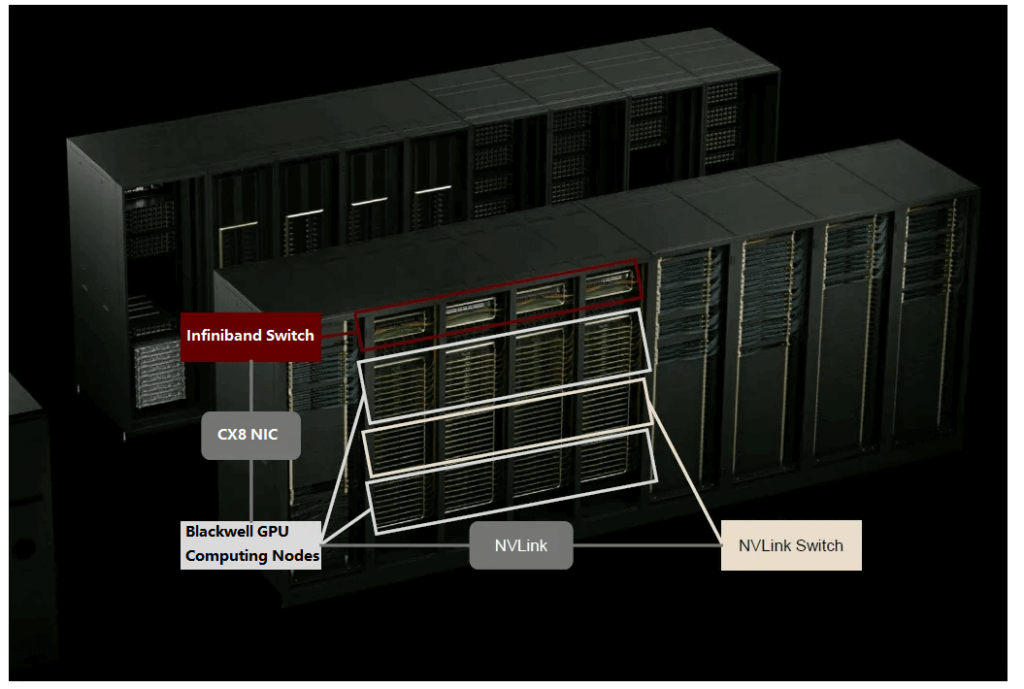

Current Situation: The GB200 (including the previous GH200) series is NVIDIA’s “superchip” system. Compared to traditional servers, the system has a larger granularity, with 36 or 72 GPUs connected primarily using electrical signals within the cabinet. Externally, they employ both NVLink and InfiniBand networks.

The choice between copper and optical is essentially a tradeoff between distance and speed.

Why 800G/1600G DAC and ACC Are Critical for AI Workloads

The NVIDIA GB200 NVL72’s reliance on 800G and 1600G Direct Attach Copper (DAC) and Active Copper Cable (ACC) solutions is a game-changer for AI data centers. These high-speed interconnects address the massive bandwidth and low-latency demands of large-scale AI models, such as trillion-parameter large language models (LLMs). Here’s why these cables are essential:

- High Bandwidth for AI Inference: Each Blackwell GPU in the GB200 NVL72 supports up to 1.8TB/s of bidirectional bandwidth via NVLink 5.0, requiring robust interconnects like 800G/1600G DAC/ACC to handle data transfers efficiently.

- Cost and Power Efficiency: Copper-based DAC and ACC cables consume less power than optical modules (typically under 16W) and are more cost-effective, saving up to 20 kW per cabinet. This is critical for energy-conscious AI factories.

- Short-Range Reliability: Within a single GB200 NVL72 cabinet, copper cables replace optical modules for internal connections, reducing latency and simplifying infrastructure for distances up to 3 meters.

- Scalability for Large Clusters: While copper excels within cabinets, 800G/1600G optical modules support inter-cabinet connections, enabling scalability for clusters of up to 576 GPUs.

By balancing copper and optical solutions, the GB200 optimizes performance and cost, making it ideal for AI Cloud deployments.
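The copper-versus-optical split described above can be sketched as a simple selection rule. This is only an illustrative simplification: the 3-meter limit is the figure quoted in this article, the function and constant names are hypothetical, and real reach depends on cable type (passive DAC, ACC, AEC) and data rate.

```python
# Illustrative only: threshold taken from the article's "up to 3 meters" figure.
COPPER_MAX_REACH_M = 3.0


def choose_medium(distance_m: float, same_cabinet: bool) -> str:
    """Return "copper" or "optical" for a link, using the article's rule of thumb."""
    if distance_m <= 0:
        raise ValueError("distance_m must be positive")
    # Copper is assumed only for short links that stay inside one cabinet.
    if same_cabinet and distance_m <= COPPER_MAX_REACH_M:
        return "copper"
    # Everything else (longer runs, inter-cabinet links) is assumed to be optical.
    return "optical"


if __name__ == "__main__":
    print(choose_medium(1.5, same_cabinet=True))    # short intra-cabinet link
    print(choose_medium(30.0, same_cabinet=False))  # inter-cabinet link
```

A real deployment would also weigh power, cost, and signal integrity at the target data rate, which this sketch ignores.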

The NVIDIA GB200 NVL72 represents a leap forward in AI supercomputing, combining cutting-edge GPUs with advanced networking solutions.

The GB200 NVL72’s innovative design enables unparalleled performance for AI workloads. With this foundation in mind, let’s explore how its high-speed connectivity, particularly through 800G and 1600G DAC/ACC cables, drives its efficiency.

Does GB200 Reduce the Importance of Optical Communication?

Building on the GH200, the GB200 NVL72 system targets large clusters, clouds, and platform-type customers, which NVIDIA defines as the “AI Cloud/AI Factory”. The expected form is a multi-cabinet cluster, where inter-cabinet links of 800G or more would suffer heavy signal loss over copper, making optical communication a necessity.

However, for small to medium-sized customers who may only adopt a single GB200 system, the feasibility is questionable, and traditional servers or cloud-based solutions may be better options (also part of NVIDIA’s differentiation strategy).

The design goal of GB200 is: a single cabinet can handle AI inference, which is beneficial for cloud virtualization deployment.

The minimum unit of the GB200 system is a cabinet. Its inference performance is greatly improved, so it can better handle very large parameter counts, cross-modal workloads, massive token volumes, and highly concurrent inference, without resorting to large distributed systems built from individual GPUs. In the cloud IDC scenario, it can better cope with future large-scale inference demand (see the evaluations by AWS and MSFT).

The market’s view that copper interconnects are increasing rests on a comparison between generations:

Previously, H100 series clusters had no intra-cabinet interconnects. Networking sat in a separate network cabinet and the link rates were relatively high, so there was almost no short-distance copper cabling.

The GB200 series, by contrast, has many copper cables within the cabinet. Even so, demand for optical interconnects in large clusters remains very large, for example to extend the NVLink domain and to scale out IB, and the future path toward silicon photonics and chip-to-chip optical I/O is already very clear.

Q: How did NVIDIA’s product launch at the GTC conference differ from expectations?

A: At this GTC conference, GB200 became the main product, while the B100 and B200 products were not launched as expected. The GB200 chip contains 2 GPUs and 1 CPU, and the main product showcased is a single cabinet composed of 36 GB200 chips, for server use. Compared to the GH200 released last year, this GB200 did not showcase a standard cluster product, but only a single 36-card cabinet product.

Q: What is the role of the IB architecture in the GB200 series?

A: For some large customers who need cross-cabinet connections, the IB (InfiniBand) architecture is used for external connections. However, the difference between GH200 and GB200 lies in the internal connections. GB200 uses NVLink electrical connections (i.e., copper interconnects between GPU and switch), rather than the backplane connection method of last year. Huang particularly emphasized the advantages of copper interconnects in cost reduction and performance at the conference.

Q: At the GTC conference, Jensen Huang gave a special explanation of the copper interconnect solution, what are its advantages?

A: This is the first time that the copper interconnect solution has been specifically explained at such an important conference, which is why it has drawn so much attention. The GPUs are connected through NVLink, confirming the use of copper interconnects, and the product solution may be similar to last year’s backplane connection method. Huang emphasized the advantages of copper interconnects in cost reduction and performance.

Q: What is the impact of the launch of the GB200 series on the market?

A: As NVIDIA’s new generation of server-level GPU chips, the GB200 series’ performance and efficiency improvements will have a significant impact on the market. Particularly, its adoption of the copper interconnect solution may change the internal connection method of GPU clusters, reducing costs and improving performance. Furthermore, the GB200 may change the design and deployment of data centers, further driving the development of artificial intelligence and cloud computing.

Beyond the GB200 NVL72’s technical specifications, its impact extends to the broader landscape of AI data centers, where NVIDIA’s advancements are redefining AI data center capabilities.

Q: What are the advantages of the GB200 copper interconnect?

A: Highlights of the GB200 launch: The GB200 single chip contains 2 GPUs and 1 CPU, and the main product launched is a single-cabinet product composed of 36 GB200 chips. This shows that NVIDIA is focusing on electrical interconnects (NVLink over copper) rather than optical interconnect technology. Market promotion and expectations: GB200 is expected to be widely applied, in contrast to the GH200, which has not been widely adopted. Huang’s speech at the conference pointed to multiple potential major customers, which suggests high volume expectations for GB200. The market generally believes that the promotion of GB200 may lead to a gradual increase in the ratio of GPUs to optical modules from 1:2.5 to 1:9, and the sales volume of GB200 next year may have great growth potential.

Q: What is the industry trend for backplane connectors?

A: The use of backplane connectors is gradually increasing in AI servers, large switches, and routers. The technology is also evolving towards orthogonal zero-backplane and cable-backplane modes. Backplane cables have the advantages of longer transmission distance and more flexible wiring but are relatively higher in cost.

Q: What are the demand and price trends for backplane connectors?

A: The increasing demand for AI servers is driving the demand for backplane connectors. The shift to the cable-backplane mode has also increased the value, with the total value accounting for 3-5% of the cost of a single server. Therefore, the industry is showing a trend of both volume and price growth.

Q: How does the launch of GB200 impact the optical module industry?

A: The launch of GB200 is positive for the optical module industry, because most customers still need cross-cabinet connections. The current GB200 has a bidirectional bandwidth of 1.8 TB/s (1,800 GB/s) per GPU, and with 1.6T modules the ratio of GPUs to optical modules is about 1:9. With the 800G solution, the ratio could reach 1:18. The value difference is not significant, but since 1.6T OSFP-XD optical modules are expected to be mass-produced in Q4, customers are more inclined to use the more cost-effective 1.6T solution. Therefore, optical modules will be upgraded, moving the ratio from the current 1:2.5 toward 1:9.
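The 1:9 and 1:18 figures can be reproduced with a back-of-the-envelope calculation. The sketch below assumes the 1.8 TB/s NVLink figure quoted earlier is read as 1,800 GB/s per GPU, that all of this bandwidth is carried over optical modules, and that the ratio is a plain division of GPU bandwidth by module line rate. The function name is hypothetical, and this is one plausible reading of the arithmetic rather than a confirmed sizing method.

```python
def modules_per_gpu(gpu_bandwidth_gbytes_per_s: int, module_rate_gbits_per_s: int) -> float:
    """Optical modules needed to carry one GPU's bandwidth (simple division)."""
    # Convert gigabytes per second to gigabits per second.
    gpu_bandwidth_gbits_per_s = gpu_bandwidth_gbytes_per_s * 8
    return gpu_bandwidth_gbits_per_s / module_rate_gbits_per_s


# Assumption: 1.8 TB/s bidirectional NVLink bandwidth per GPU, as quoted in the article.
NVLINK_BIDIR_GBYTES_PER_S = 1800

for rate in (1600, 800):  # 1.6T and 800G module line rates in Gb/s
    ratio = modules_per_gpu(NVLINK_BIDIR_GBYTES_PER_S, rate)
    print(f"{rate}G modules per GPU: {ratio:.0f}")
# prints:
# 1600G modules per GPU: 9
# 800G modules per GPU: 18
```

Actual module counts depend on network topology and on how much of the NVLink domain is extended optically, so these numbers are an upper-bound style estimate.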

Q: What are the main differences between GB200 and GH200?

A: The main difference is that the GH series is not a high-volume product, while GB200 has a wide range of potential customers, including Google, Meta, OpenAI, Microsoft, Oracle, and Tesla. This means GB200 will see widespread application and high-volume adoption.

Q: What are the market expectations for 1.6T OSFP-XD optical module demand next year?

A: The market expectation for 1.6T OSFP-XD optical module demand next year is between 2-3 million units, with our model predicting 2.5 million. This does not yet consider the incremental demand from the standalone B100 or B200 series.

Q: What type of interconnect solution did GB200 adopt?

A: GB200 adopted a copper interconnect solution, which reduces costs. The high-volume adoption of GB200 in particular will become an important factor driving demand for optical modules.

Q: What industry trend did Huang’s presentation at the conference reflect?

A: His presentation at the conference can be seen as a reflection of an industry trend, not just a short-term hot topic. Over time, the industry will observe the performance delivery of the relevant companies. The market previously had debates on copper vs. optical interconnect solutions, but now it has been confirmed that GB200 adopted the copper interconnect solution.

Q: What are the core investment targets in the optical module industry?

A: With the high-volume adoption of GB200 and the rising demand for 1.6T OSFP-XD optical modules, FiberMall will receive more attention.

Q: What is the market prospect for the copper interconnect solution?

A: The market’s attitude towards the copper interconnect solution is changing. The fact that GB200 adopted the copper interconnect solution indicates that this solution may see wider application in the future. With the performance delivery of the relevant companies, the market prospect of the copper interconnect solution is worth looking forward to.

Q: What are the main differences in design and application between GB200 and GH200?

A: The GB200 design integrates the switch, server, and GPU into the same cabinet, using a blade server-like connection method. The switch’s I/O interfaces are visible on the front, while the connection between the switch and GPU is most likely through a copper backplane cable. In comparison, GH200 uses a separate connection design with DAC (Direct Attach Cable).

Q: What role does the copper backplane cable play in the design of GB200?

A: The copper backplane cable plays a critical role in the GB200 design, connecting the backplane connectors and supporting the interconnection between the switch and GPU boards. This design makes the signal transmission between the server and switch more convenient.

Q: What are the main application areas of backplane connectors?

A: Backplane connectors are mainly used in large switches, routers, and AI servers. Especially in modular-designed servers and large switches/routers, this sub-board and backplane architecture will be more common.

Q: What is the industry development trend of backplane connectors?

A: With the progress in server and switch design, the demand for backplane connectors is expected to continue growing. Especially in the areas of large switches, routers, and AI servers, the application prospects of these connectors are broad.

Q: What is the competitive landscape on the supply side?

A: The competitive landscape on the backplane connector supply side is relatively stable. FiberMall, with its rich production experience and strong supply chain management capabilities, provides the market with stable and high-quality backplane connector products.

Q: How does the design of GB200 impact the demand for backplane connectors?

A: The design of GB200 has driven the growth in demand for AI servers and large switches/routers, which in turn has boosted the demand for backplane connectors. In this design paradigm of servers and switches, the demand for backplane connectors will see a significant increase.

Q: How are backplane connectors evolving in their technology development path?

A: Backplane connectors are evolving in two directions: one is an orthogonal zero-backplane, and the other is a cable-backplane. GB200 adopts the cable-backplane mode, whose advantages include better heat dissipation, lower transmission loss, longer transmission distance, and more flexible wiring. But the downside is higher cost.

The 800G and 1600G DAC/ACC cables are pivotal to the GB200 NVL72’s high-speed connectivity, offering low latency and high bandwidth for AI-driven workloads.

Q: What is the demand-side logic for backplane connectors?

A: The demand for backplane connectors is mainly driven by AI servers, and the cable-backplane mode has a significantly higher value than the traditional backplane PCB mode. Currently, the value of backplane connectors accounts for about 3-5% of the cost of a single server.

Q: What is the future market prospect for backplane connectors?

A: With the rapid development of technologies like artificial intelligence and big data, the market demand for backplane connectors will continue to grow. In the future, the backplane connector market will face more opportunities and challenges, and domestic and foreign companies need to continuously strengthen their technology R&D and product innovation to meet market demand and maintain a competitive edge.

Q: Will the cable-backplane mode used in AI servers become a mainstream trend?

A: Yes, the cable-backplane mode used in AI servers is expected to become a mainstream trend.

Related Products:

- NVIDIA MMS4A00 (980-9IAH1-00XM00) Compatible 1.6T OSFP DR8D PAM4 1311nm 500m IHS/Finned Top Dual MPO-12 SMF Optical Transceiver Module: $2600.00

- NVIDIA Compatible 1.6T 2xFR4/FR8 OSFP224 PAM4 1310nm 2km IHS/Finned Top Dual Duplex LC SMF Optical Transceiver Module: $3100.00

- NVIDIA MMS4A00 (980-9IAH0-00XM00) Compatible 1.6T 2xDR4/DR8 OSFP224 PAM4 1311nm 500m RHS/Flat Top Dual MPO-12/APC InfiniBand XDR SMF Optical Transceiver Module: $3600.00

- 0.5m (1.6ft) NVIDIA Compatible 1.6T OSFP-RHS to OSFP-RHS Passive Direct Attached Cable: $465.00

- NVIDIA MCA4J80-N003 Compatible 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable: $600.00

- NVIDIA MCP4Y10-N002-FLT Compatible 2m (7ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Passive DAC, Flat top on one end and Flat top on the other: $300.00

- NVIDIA MCA7J65-N004 Compatible 4m (13ft) 800G Twin-port OSFP to 2x400G QSFP112 InfiniBand NDR Breakout Active Copper Cable: $800.00

- OSFP-1.6T-PC1.5M-RHS 1.5m (5ft) 1.6T OSFP-RHS to OSFP-RHS Passive Direct Attached Cable: $525.00

- OSFP-800G-AEC5M 5m (16ft) 800G OSFP to OSFP PAM4 Active Electrical Copper Cable: $1055.00

- OSFP-800G-FR4 800G OSFP FR4 (200G per line) PAM4 CWDM Duplex LC 2km SMF Optical Transceiver Module: $3500.00

- OSFP-800G-2FR2L 800G OSFP 2FR2 (200G per line) PAM4 1291/1311nm 2km DOM Duplex LC SMF Optical Transceiver Module: $3000.00

- OSFP-800G-2FR2 800G OSFP 2FR2 (200G per line) PAM4 1291/1311nm 2km DOM Dual CS SMF Optical Transceiver Module: $3000.00

- OSFP-800G-DR4 800G OSFP DR4 (200G per line) PAM4 1311nm MPO-12 500m SMF DDM Optical Transceiver Module: $3000.00

- NVIDIA MMS4X00-NM-FLT Compatible 800G Twin-port OSFP 2x400G Flat Top PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module: $1199.00

- NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module: $650.00

- NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module: $900.00

- NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module: $650.00

- NVIDIA MMS1Z00-NS400 Compatible 400G NDR QSFP112 DR4 PAM4 1310nm 500m MPO-12 with FEC Optical Transceiver Module: $700.00

- NVIDIA MMS4X00-NS400 Compatible 400G OSFP DR4 Flat Top PAM4 1310nm MTP/MPO-12 500m SMF FEC Optical Transceiver Module: $700.00

- NVIDIA MMS4X50-NM Compatible OSFP 2x400G FR4 PAM4 1310nm 2km DOM Dual Duplex LC SMF Optical Transceiver Module: $1200.00