High Data Rate (HDR) InfiniBand is one of the fastest interconnect technologies in wide use, offering 200 Gbps links and very low latency. This tutorial explains how HDR InfiniBand works, covering its structure, performance characteristics, and real-world uses. By looking at both the technical details and the advantages seen in practice, it aims to give you the information you need to deploy HDR InfiniBand in your network infrastructure so that it performs well and scales properly. Whether you are an IT specialist, a network engineer, or simply interested in technology, keep reading for an overview of one of today’s most advanced networking solutions.

What is HDR Infiniband and How Does it Work?

Essentials of HDR Infiniband

HDR InfiniBand, or High Data Rate InfiniBand, is a networking technology that provides up to 200 Gbps of data transfer speed per link. It achieves this through faster signaling combined with efficient encoding and low-latency switching. HDR follows the basic InfiniBand design: a point-to-point, bidirectional serial link architecture organized into physical, link, network, transport, and upper (application) layers. It also protects packets at these higher speeds with stronger error detection and correction. Together, these features let networks perform well under heavy load and grow easily, which makes HDR well suited to enterprise environments that need a lot of computational power and to data centers that handle large amounts of information.

The Role of Mellanox and NVIDIA in HDR Infiniband

Mellanox Technologies, now a division of NVIDIA, is a key contributor to the development and deployment of HDR InfiniBand. Under NVIDIA, Mellanox continues to build high-performance networks around HDR InfiniBand’s capabilities. Its HDR adapters and switches support data transfer of up to 200 Gbps per link and are designed to minimize latency while maximizing throughput.

Some key technical parameters of Mellanox’s HDR InfiniBand solutions are as follows:

- Data Transfer Rate: Up to 200 Gbps per link, enabled by faster signaling.

- Latency: Ultra-low end-to-end latency, which high-performance computing and data center applications depend on.

- Switching Capacity: Mellanox HDR switches provide the high switching capacity that scalable, high-throughput network configurations require.

- Error Detection & Correction: Error detection and correction during transmission protect data integrity and improve reliability.

- Energy Efficiency: Mellanox HDR InfiniBand devices are designed to optimize power consumption, which benefits large-scale network operations.

These technologies matter in modern data-intensive environments where reliability and high performance are the priority. Backed by NVIDIA’s resources and Mellanox’s expertise, HDR InfiniBand remains one of the most advanced networking solutions available.

How HDR Infiniband Differs from Other Versions

HDR (High Data Rate) InfiniBand substantially improves on the previous versions, EDR (Enhanced Data Rate) and FDR (Fourteen Data Rate). The generations differ in data transfer rate, latency, and overall network efficiency. HDR supports up to 200 Gbps per link, twice the 100 Gbps offered by EDR and well above the 56 Gbps provided by FDR. This increase comes from more advanced signaling methods that allow greater bandwidth while maintaining signal integrity.
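The per-link figures above can be reproduced from per-lane rates. The sketch below assumes the common 4-lane (4x) link width and rounded nominal per-lane rates of 14, 25, and 50 Gbps; neither is stated in this article, and the names `LANE_RATE_GBPS` and `link_rate_gbps` are illustrative only.

```python
# Nominal per-lane data rates in Gbps (rounded figures, assumed for illustration).
LANE_RATE_GBPS = {"FDR": 14, "EDR": 25, "HDR": 50}


def link_rate_gbps(generation: str, lanes: int = 4) -> int:
    """Return the nominal link rate for a generation and link width."""
    return LANE_RATE_GBPS[generation] * lanes


for gen in ("FDR", "EDR", "HDR"):
    print(f"{gen}: {link_rate_gbps(gen)} Gbps on a 4x link")

# A 2-lane HDR link corresponds to the 100 Gbps "HDR100" adapters listed later.
print(f"HDR100: {link_rate_gbps('HDR', lanes=2)} Gbps on a 2x link")
```

Keeping the lane count as a parameter makes it easy to see why the same generation can appear at both 200 Gbps and 100 Gbps.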

Another important distinction is latency. HDR InfiniBand’s low latency makes it a strong fit for high-performance computing (HPC) systems and data centers, where even small delays can noticeably reduce operational efficiency. This low latency results from hardware design combined with protocols optimized for moving data.

Compared with its predecessors, HDR InfiniBand also features better error detection and correction. These improvements guard against loss or corruption during transmission, which is essential for applications that need accurate delivery of information.

Energy efficiency and switching capacity are two other areas where HDR outperforms earlier generations. Mellanox HDR switches offer very high switching capacity, so networks can scale up easily and sustain the throughput that growing data center operations need. HDR devices are also designed to optimize power usage, which contributes to more sustainable and less expensive networks.

In short, compared with EDR and FDR, HDR offers a higher data transfer rate, lower latency, stronger error correction, and better energy efficiency, which makes it a better choice for modern data-intensive environments.

What are the Advantages of HDR Infiniband?

Speed and Bandwidth Benefits

HDR InfiniBand has a 200 Gbps data transfer rate, twice EDR InfiniBand’s 100 Gbps and roughly 3.6 times FDR InfiniBand’s 56 Gbps. This boost speeds up the movement and processing of data, which is important in high-performance computing environments. More advanced signaling gives HDR greater bandwidth without compromising signal quality, making data transmission both faster and more reliable. As a result, larger networks can be built more efficiently, and applications that must process large amounts of data quickly perform better.
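To make the speed difference concrete, the following sketch computes the ideal time to move a data set at each link rate. The 1 TB data set is a hypothetical example, and the calculation ignores protocol overhead, so real transfers will take somewhat longer.

```python
def transfer_seconds(size_bytes: float, rate_gbps: float) -> float:
    """Ideal time to move size_bytes at rate_gbps, ignoring protocol overhead."""
    return size_bytes * 8 / (rate_gbps * 1e9)


DATASET_BYTES = 1e12  # hypothetical 1 TB data set

for name, rate in (("FDR", 56), ("EDR", 100), ("HDR", 200)):
    print(f"{name}: {transfer_seconds(DATASET_BYTES, rate):.1f} s")
# FDR: 142.9 s
# EDR: 80.0 s
# HDR: 40.0 s
```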

Low Latency and High Throughput

HDR InfiniBand suits computationally intensive work because of its low latency and high throughput. It can achieve latency as low as about 90 nanoseconds, which matters for latency-sensitive applications in scientific research, financial services, and machine learning. It can also process up to 200 million messages per second (Mmsgs/s), allowing large amounts of information to be transmitted without significant delay.

Technical Parameters:

- Latency: ~90 nanoseconds.

- Throughput: Up to 200 Mmsgs/s.

- Data Transfer Rate: 200 Gbps.

These figures rest on architectural enhancements such as new buffer designs, better routing algorithms, and faster data paths. Together they shorten the time data spends in transit and keep traffic flowing without interruption, which meets the demands of today’s high-performance data center infrastructure.
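A simple way to see how the latency and data-rate parameters interact is to model the time for one message as fixed latency plus serialization time. This is a deliberately simplified model, not a measurement: it uses the ~90 ns and 200 Gbps figures quoted above as defaults and ignores headers, congestion, and software overhead.

```python
def message_time_ns(size_bytes: int, latency_ns: float = 90.0,
                    rate_gbps: float = 200.0) -> float:
    """Fixed latency plus serialization time, in nanoseconds."""
    # bits divided by Gbit/s yields nanoseconds
    serialization_ns = size_bytes * 8 / rate_gbps
    return latency_ns + serialization_ns


for size in (64, 4096, 1024 * 1024):
    print(f"{size:>8} bytes: {message_time_ns(size):.2f} ns")
```

Under this model a 64-byte message is dominated by latency, while a 1 MiB message is dominated by serialization time, which is why both parameters matter.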

Reliable Data Transmission

HDR InfiniBand delivers data reliably through its error detection and correction techniques. By using Forward Error Correction (FEC) and robust packet retransmission, it preserves the correctness of data despite noise or interference. HDR InfiniBand also implements end-to-end Quality of Service (QoS) rules that prioritize critical data streams to reduce packet drops and keep performance steady. Together, these functions improve the dependability and efficiency of data transmission, making HDR InfiniBand a highly reliable option for mission-critical applications in high-speed computing environments.
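The detect-and-retransmit idea can be illustrated in software. The sketch below is not how InfiniBand hardware implements its checks and it does not implement FEC; it uses Python’s `zlib.crc32` as a stand-in checksum, and `make_packet`, `check_packet`, `deliver`, and the `channel` callable are hypothetical names introduced for illustration.

```python
import zlib


def make_packet(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 so the receiver can detect corruption."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def check_packet(packet: bytes) -> bool:
    """Return True when the trailing CRC matches the payload."""
    payload, received = packet[:-4], packet[-4:]
    return zlib.crc32(payload).to_bytes(4, "big") == received


def deliver(payload: bytes, channel, max_attempts: int = 3) -> bytes:
    """Resend until a copy arrives intact or the attempts run out."""
    for _ in range(max_attempts):
        packet = channel(make_packet(payload))
        if check_packet(packet):
            return packet[:-4]
    raise IOError("packet could not be delivered intact")


# Usage: a perfect channel returns the packet unchanged.
print(deliver(b"simulation step 42", lambda p: p))
```

FEC goes one step further than this sketch by repairing some errors at the receiver, so that retransmission is needed less often.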

How Does HDR Infiniband Compare to Ethernet?

Infiniband vs Ethernet: Key Differences

Several important distinctions between Ethernet and HDR InfiniBand make HDR InfiniBand the stronger choice for high-performance computing (HPC) environments:

- Latency: Ethernet latency is typically measured in microseconds, while HDR InfiniBand has significantly lower latency (~90 nanoseconds).

- Throughput: HDR InfiniBand offers data transfer rates of 200 Gbps and up to 200 million messages per second (Mmsgs/s), which is higher than the throughput of typical Ethernet deployments.

- Efficiency: Ethernet is designed for general-purpose networking, whereas InfiniBand is optimized for minimal delay and efficient communication.

- Quality of Service (QoS): Ethernet QoS implementations may lack the robustness or granularity that HPC environments need for reliable prioritized transmission; HDR InfiniBand builds more advanced QoS into the standard.

- Error Handling: InfiniBand maintains data integrity through forward error correction (FEC), packet retransmission, and other mechanisms. Ethernet also includes error handling, but it may be less sophisticated.

- Cost and Complexity: For general networking needs, Ethernet is cheaper and easier to deploy. InfiniBand costs more because of its specialized high-performance features, and it is more complex to set up; that trade-off mainly makes sense for mission-critical and scientific computing applications.

Case Studies: When to Choose Infiniband Over Ethernet

HPC Clusters

In High-Performance Computing (HPC) environments, throughput and low latency are key. For instance, a research facility running climate models needs to execute complex simulations. One such facility settled on HDR InfiniBand, which offers ultra-low latency (just above 100 nanoseconds) and high speed (up to 200 Gbps). These characteristics helped cut simulation run times and reduced the opportunities for data corruption during transmission, both of which matter for accurate climate predictions.

Data Centers for Financial Trading

Financial institutions involved in high-frequency trading need almost instant data transfer. One leading finance firm replaced Ethernet with InfiniBand when it upgraded its data centers. Ethernet’s microsecond-scale latency cannot compete with the slightly over 100 nanoseconds achieved with InfiniBand, so the change enabled faster transaction times and gave the firm an edge over its rivals in the market.

Scientific Research and Development

Genomics institutes and groups running molecular dynamics simulations handle large amounts of data that must be processed efficiently. In one example, a genomics research institute switched from Ethernet to InfiniBand because it considered InfiniBand’s QoS protocols and error-handling mechanisms more advanced, and it saw Ethernet as lacking the capabilities needed to maintain data integrity during extensive genome sequencing runs.

Artificial Intelligence & Machine Learning

Artificial intelligence (AI) training clusters depend heavily on the bandwidth between nodes during machine learning workloads. One company in this field adopted HDR InfiniBand when it migrated from its previous AI training cluster. The higher throughput of 200 Gbps links meant faster completion times, and stronger error correction reduced the overall time needed to train large neural networks, which improved the efficiency of model development.

Virtualized Data Centers

Virtualization infrastructures often struggle to deliver consistent performance. A cloud service provider operating such environments addressed this by incorporating HDR InfiniBand into its network backbone. InfiniBand offers the consistently high throughput and strong QoS measures the provider needs to meet SLA commitments to clients who entrust it with critical data storage and processing on shared servers.

These examples show how organizations choose between Ethernet and InfiniBand based on the performance, reliability, and efficiency requirements of particular applications. For mission-critical systems where every second counts, such as High-Performance Computing (HPC) clusters, low latency is essential, and InfiniBand is the better option because Ethernet latency generally stays in the microsecond range.

What Products and Adapters are Available for HDR Infiniband?

Overview of Mellanox HDR Infiniband Products

Mellanox, now owned by NVIDIA, provides a range of HDR InfiniBand products for high-performance computing environments. The most important are:

- ConnectX-6 Adapters: These cards provide up to 200 Gbps, with advanced congestion control and scalability features suited to AI and ML workloads.

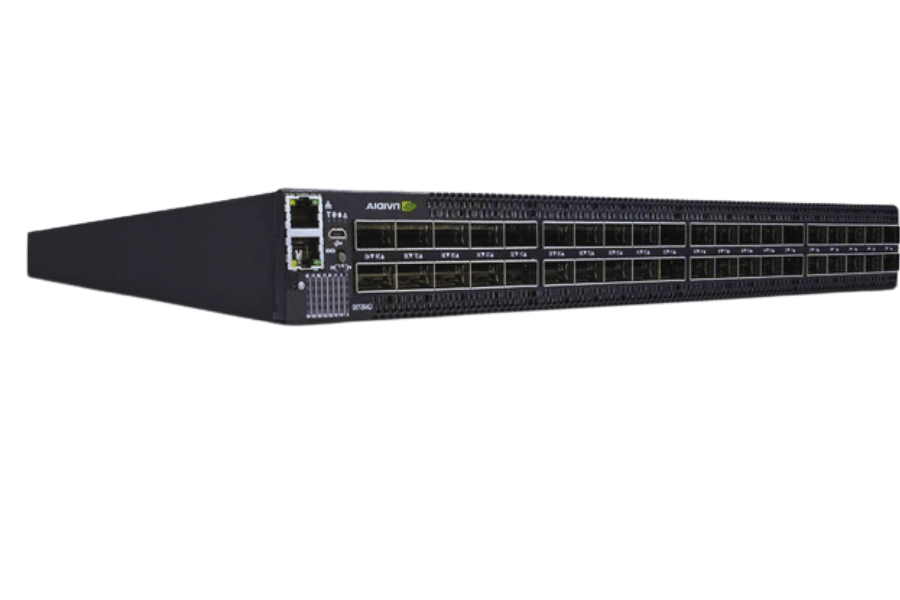

- Quantum Switches: Quantum HDR InfiniBand switches provide either 40 ports at 200 Gbps each or 80 ports at 100 Gbps each, delivering low latency and very high capacity for data-intensive applications.

- LinkX Cables & Transceivers: LinkX offers both copper and optical solutions that preserve signal integrity over different distances, which makes it easier to scale interconnections within data centers.

Together, these products improve network performance and provide the dependable, efficient data transfer needed in areas such as artificial intelligence, machine learning, and virtualized environments.
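The port counts quoted for the Quantum switches translate directly into capacity figures. The sketch below computes the aggregate bidirectional capacity of one switch and, as an assumption not discussed in this article, the number of hosts a non-blocking two-tier fat tree of equal-radix switches could connect. Both function names are illustrative.

```python
def aggregate_tbps(ports: int, port_gbps: int) -> float:
    """Bidirectional switching capacity in Tbps (each port sends and receives)."""
    return ports * port_gbps * 2 / 1000


def two_tier_hosts(radix: int) -> int:
    """Hosts in a non-blocking two-tier fat tree: half of each leaf's ports
    face hosts, and the spine layer can connect `radix` leaf switches."""
    return radix * radix // 2


print(aggregate_tbps(40, 200))   # 40 ports at 200 Gbps
print(aggregate_tbps(80, 100))   # 80 ports at 100 Gbps
print(two_tier_hosts(40))        # hosts reachable with 40-port switches
```

Both port configurations give the same aggregate capacity, so the choice between them is about how many endpoints each switch serves rather than total bandwidth.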

Popular Adapters and NICs for HDR Infiniband

HDR InfiniBand adapters and network interface cards (NICs) are essential for achieving high throughput and low latency. Some of the options are:

Mellanox ConnectX-6 HDR100 Adapter

- Throughput: 100 Gbps.

- Latency: Sub-600 nanoseconds.

Key Features:

- In-Network Computing offloads.

- Adaptive routing for congestion control.

- Hardware-based security features.

Mellanox ConnectX-6 HDR200 Adapter

- Throughput: 200 Gbps.

- Latency: Sub-600 nanoseconds.

Key Features:

- Advanced virtualization accelerations.

- Multi-host and SR-IOV for efficient resource usage.

- High scalability tailored for AI and machine learning workloads.

Mellanox BlueField SmartNIC

- Throughput: 200 Gbps.

- Latency: Sub-600 nanoseconds.

Key Features:

- Integrated Arm cores for flexible software programmability.

- Ideal for data center security, storage, and networking functions.

- In-network computing for reducing application runtime.

Technical Justification

These NICs were selected because they provide the high bandwidth and low latency needed by HPC (high-performance computing) workloads that move massive amounts of data. The ConnectX-6 offers strong congestion control and advanced virtualization, which makes it versatile across many settings. The BlueField SmartNIC, on the other hand, integrates Arm processing cores, which add the flexibility and programmability needed to handle complex tasks in a data center while also strengthening security.

They are critical to faster data processing, rapid transfers between systems, and reduced computational delays, which makes them a good fit for mission-critical applications.
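When choosing between a 100 Gbps and a 200 Gbps adapter, it helps to check whether the host’s PCIe slot can actually feed the port. The sketch below uses the standard PCIe 3.0 and 4.0 signaling rates (8 and 16 GT/s per lane with 128b/130b encoding) and ignores packet overhead, so usable bandwidth is somewhat lower than the result. The function names are illustrative.

```python
# Per-lane signaling rate in GT/s; PCIe 3.0 and 4.0 both use 128b/130b encoding.
PCIE_GT_PER_LANE = {"3.0": 8.0, "4.0": 16.0}


def pcie_gbps(generation: str, lanes: int = 16) -> float:
    """Raw slot bandwidth in Gbps after line encoding, per direction."""
    return PCIE_GT_PER_LANE[generation] * lanes * 128 / 130


def slot_can_feed(generation: str, port_gbps: float, lanes: int = 16) -> bool:
    """True when the slot's raw bandwidth is at least the port rate."""
    return pcie_gbps(generation, lanes) >= port_gbps


for gen in ("3.0", "4.0"):
    print(f"PCIe {gen} x16: {pcie_gbps(gen):.1f} Gbps, "
          f"feeds 200 Gbps port: {slot_can_feed(gen, 200)}")
```

Under these assumptions a PCIe 3.0 x16 slot can keep up with a 100 Gbps port but not a single 200 Gbps port, which is worth confirming before buying a 200 Gbps adapter for an older server.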

Options for QSFP56 and HDR Cables

QSFP56 Fiber Optic Cable

- Summary: QSFP56 is a compact, hot-pluggable form factor for high-speed data communication. It is designed to provide more bandwidth by supporting speeds of up to 200 Gbps.

Merits:

- Low insertion loss, high return loss.

- Superior signal integrity for long-distance data transmission.

- Well suited to data centers and high-performance computing environments.

Application:

- Ideal for 200G Ethernet and InfiniBand HDR interconnections.

- Used where both low latency and high data rates are required.

HDR Copper Cable

- Summary: High Data Rate (HDR) copper cables support fast network systems while remaining cost-effective, because they provide short-distance connectivity.

Benefits:

- Cheaper than optical cables.

- Lower power consumption.

- Robust and dependable over short transmission distances.

Application:

- Optimized for short connections within a rack or between adjacent racks in data centers.

- Suited to links where efficient operation depends on low latency and high bandwidth.

Active Optical Cables (AOCs)

- Summary: Active Optical Cables (AOCs) use active electronic components in the connectors to convert electrical signals into optical ones and back again. Because the signal travels over fiber, AOCs reach farther than copper cables without comparable signal degradation.

Advantages:

- Lighter and thinner than copper cables.

- Lower signal attenuation.

- Higher reliability over longer distances.

Application:

- Suitable for high-performance computing (HPC) and data center interconnect (DCI).

- Common where lightweight, flexible cabling simplifies cable management.

These cable options work with Mellanox ConnectX-6 and BlueField SmartNIC solutions to deliver high-capacity transmission efficiently across large networks.
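The three cable options above mainly differ in the distance they cover, so the choice can be sketched as a distance check. The thresholds used here (2 m for passive copper, 100 m for AOC) are placeholder assumptions, not values from this article; replace them with the reach figures from the datasheets of the cables you are considering.

```python
def suggest_cable(distance_m: float, copper_max_m: float = 2.0,
                  aoc_max_m: float = 100.0) -> str:
    """Pick a cable type by link distance. Thresholds are assumed placeholders."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    if distance_m <= copper_max_m:
        return "HDR copper cable"
    if distance_m <= aoc_max_m:
        return "active optical cable (AOC)"
    return "QSFP56 optical transceivers with separate fiber"


for distance in (1, 15, 300):
    print(f"{distance} m: {suggest_cable(distance)}")
```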

How do you set up HDR Infiniband in your network?

Steps to Install HDR Infiniband Adapters

Confirm Compatibility:

- Check whether the server and switch hardware can work with the HDR Infiniband adapters.

Firmware and Driver Confirmation:

- Cross-check that the firmware and driver versions are the ones recommended for your hardware.

Adapter Installation:

- Power off the server.

- Insert the HDR InfiniBand adapter into an available PCIe slot.

- Secure the adapter with screws.

Cable Connection:

- Use a suitable HDR copper cable or AOC.

- Connect the cable to the adapter and then to the corresponding switch port.

Power Up and Boot Server:

- Power on the server and enter the BIOS/UEFI settings.

- Confirm that the server has detected the new adapter.

Driver/Firmware Installation:

- Boot into the operating system (OS).

- Download the latest drivers and firmware from the manufacturer’s website and install them.

Network Configuration:

- Use network management tools to configure IP addresses and other related network settings.

- Set up subnetting and network segmentation correctly to achieve optimum performance (InfiniBand fabrics segment traffic with partitions rather than Ethernet VLANs).

Test Connectivity:

- Run diagnostics to confirm that the adapter is functioning properly.

- Run bandwidth and latency tests to confirm the expected performance.

These guidelines are designed to help you install HDR InfiniBand adapters successfully and build a high-performance network that delivers consistently high speeds and low latency.
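The final connectivity check can be partly automated. The sketch below parses the text printed by the `ibstat` utility (part of the infiniband-diags package) and reports whether each port is active at 200 Gbps. It assumes the usual `ibstat` layout of `Port N:` blocks containing `State`, `Physical state`, and `Rate` lines; the sample text is illustrative rather than captured from real hardware, and the function names are hypothetical.

```python
import re
import subprocess

# Illustrative sample in the usual ibstat layout (values are made up).
SAMPLE_OUTPUT = """\
CA 'mlx5_0'
    Number of ports: 1
    Port 1:
        State: Active
        Physical state: LinkUp
        Rate: 200
        Link layer: InfiniBand
"""


def parse_ibstat(text: str) -> list[dict]:
    """Extract state, physical state, and rate for every port in ibstat output."""
    ports, current, ca_name = [], None, None
    for raw in text.splitlines():
        line = raw.strip()
        ca_match = re.fullmatch(r"CA '(.+)'", line)
        port_match = re.fullmatch(r"Port (\d+):", line)
        if ca_match:
            ca_name, current = ca_match.group(1), None
        elif port_match:
            current = {"ca": ca_name, "port": int(port_match.group(1))}
            ports.append(current)
        elif current is not None and ":" in line:
            key, value = (part.strip() for part in line.split(":", 1))
            if key in ("State", "Physical state", "Rate"):
                current[key] = value
    return ports


def port_is_hdr(port: dict) -> bool:
    """True when the port is up and running at the 200 Gbps HDR rate."""
    return (port.get("State") == "Active"
            and port.get("Physical state") == "LinkUp"
            and port.get("Rate") == "200")


def read_ibstat() -> str:
    """Run ibstat on the local host and return its output."""
    return subprocess.run(["ibstat"], capture_output=True,
                          text=True, check=True).stdout


for port in parse_ibstat(SAMPLE_OUTPUT):
    print(port["ca"], port["port"], "HDR link up:", port_is_hdr(port))
```

On a real host, pass `read_ibstat()` to `parse_ibstat` instead of the sample text. A port that is active but reports a lower rate usually points to a cable, switch port, or firmware mismatch.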

Configuration of HDR Switches and Routers

Confirm Compatibility of Hardware and Firmware

- Switch Model: Verify that the switch model supports HDR InfiniBand.

- Firmware Version: Check that the switches and routers run the firmware versions recommended for HDR support.

Initial Setup and Connection

- Initial Configuration: Access the switch’s command-line interface (CLI) through its console port.

- Cabling: Connect the appropriate ports on the switches and routers with HDR InfiniBand cables.

Configuring Network Parameters

- IP Address Assignment: Assign IP addresses within the management subnet.

- Subnets and VLANs: Ensure each port on every switch or router has the correct subnet and VLAN (or InfiniBand partition) settings.

Enable HDR Features

- Link Speed Configuration: Set Infiniband link speed to 200 Gbps (HDR).

- Port Configuration: Configure ports for HDR mode using CLI or network management software.

Implement Quality of Service (QoS)

- Priority Settings: Define priority levels for different types of traffic so that each receives the service level its SLA requires across the network.

- Bandwidth Allocation: Allocate sufficient bandwidth to latency-sensitive flows while accounting for competing demands. For example, voice calls may need higher priority than background tasks such as data backups, so that congestion along the path does not delay them.
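The arithmetic behind weighted bandwidth allocation can be sketched as follows. On a real fabric the allocation is enforced in hardware and configured through the switch or subnet manager, so this only shows how weights translate into shares of a 200 Gbps link. The traffic class names and weights are hypothetical.

```python
def allocate_bandwidth(weights: dict[str, int],
                       link_gbps: float = 200.0) -> dict[str, float]:
    """Split link bandwidth between traffic classes in proportion to weight."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    return {name: link_gbps * weight / total
            for name, weight in weights.items()}


# Hypothetical traffic classes: latency-sensitive compute traffic gets the
# largest share, background backups the smallest.
shares = allocate_bandwidth({"compute": 6, "storage": 3, "backup": 1})
for name, gbps in shares.items():
    print(f"{name}: {gbps:.0f} Gbps guaranteed under contention")
```

These shares describe the guaranteed minimum when every class is busy; an idle class normally leaves its bandwidth available to the others.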

Monitoring and Diagnostics

- Performance Metrics: Continuously monitor metrics such as bandwidth utilization, latency variation, and packet drops caused by congestion. These are key indicators of whether a high-speed InfiniBand link is working as expected.

- Diagnostic Tools: Use the built-in diagnostic tools to troubleshoot connectivity problems and to check the health of the links in the setup.
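Bandwidth utilization can be derived from two samples of a port’s transmit-data counter. The sketch below assumes the counter follows the InfiniBand `PortXmitData` convention of counting 4-byte words, and it ignores counter wrap-around; check how your monitoring tool reports the value before relying on it. The function name is illustrative.

```python
def utilization_percent(xmit_data_start: int, xmit_data_end: int,
                        interval_s: float, link_gbps: float = 200.0) -> float:
    """Link utilization from two PortXmitData-style samples.

    The counter is assumed to count 4-byte words, so each unit is 32 bits.
    """
    if interval_s <= 0:
        raise ValueError("interval must be positive")
    bits_sent = (xmit_data_end - xmit_data_start) * 32
    return 100.0 * bits_sent / (link_gbps * 1e9 * interval_s)


# 3.125e9 words in one second is 100 Gbit, half of a 200 Gbps link.
print(f"{utilization_percent(0, 3_125_000_000, 1.0):.1f} %")
```

Sampling at a fixed interval and alerting when utilization stays near 100 % is a simple way to spot the congestion points mentioned above.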

Security Configurations

- Access Control: Implement Access Control Lists (ACLs) so that only authorized users or groups can access your network.

- Encryption: Encrypt all data transmitted over the Infiniband network using appropriate encryption protocols.

Justification of Technical Parameters

- Link Speed: High-performance applications call for HDR InfiniBand’s support for up to 200 Gbps.

- Firmware Version: Running the recommended firmware ensures support for HDR features and stability.

- Bandwidth and Latency Tests: These confirm whether the network meets the bandwidth and latency standards expected of InfiniBand.

Following these configuration steps optimizes your HDR switches and routers for high-performance networking, with low-latency, high-bandwidth connections suitable for even demanding applications.

Troubleshooting Common Issues with HDR Infiniband

Connection Failures

- Physical Connections: Ensure that all cables are connected properly and show no visible signs of damage.

- Firmware and Driver Updates: Ensure all InfiniBand devices run the latest firmware and drivers from the manufacturer’s website.

- Configuration Settings: Confirm that the HDR switch and router configurations, including IP configuration and port settings, match the manufacturer’s specifications.

Problems with Performance and Latency

- Quality of Service (QoS) Settings: Check whether QoS settings need adjusting so that important data streams are prioritized; incorrect configuration leads to underperformance.

- Network Congestion: Check for congestion by looking for parts of the network with high utilization; redistribute traffic or upgrade infrastructure as required.

- Diagnostic Tools: Use the system’s built-in diagnostic utilities to test bandwidth and latency; this helps pinpoint bottlenecks.

Intermittent Connectivity

- Interference & Physical Environment: Minimize electromagnetic interference around cables, and make sure the cables are properly shielded.

- Equipment Compatibility: Verify that every device used with HDR InfiniBand is compatible with it, because incompatible devices can cause intermittent connection errors.

- Error Logs: Review error logs for patterns or recurring issues, since these may indicate hardware faults or configuration mistakes that should be corrected promptly.

Working through these steps systematically should resolve most problems commonly encountered on an HDR InfiniBand network and improve both reliability and performance.
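Looking for recurring issues in error logs is easy to script. The sketch below counts how often each port and message pair appears and reports those that repeat. The log format (`... port <id>: <message>`) is hypothetical, so the regular expression will need adjusting to match whatever your switches or hosts actually write.

```python
import re
from collections import Counter

# Hypothetical log format: "<timestamp> port <id>: <message>"
LOG_PATTERN = re.compile(r"port (?P<port>\S+): (?P<message>.+)$")


def recurring_errors(lines, min_count: int = 2):
    """Return ((port, message), count) pairs seen at least min_count times."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.search(line)
        if match:
            counts[(match["port"], match["message"].strip())] += 1
    return [(key, n) for key, n in counts.most_common() if n >= min_count]


sample_log = [
    "10:00:01 port 1/3: symbol error",
    "10:00:09 port 1/3: symbol error",
    "10:02:30 port 2/1: link down",
    "10:04:12 port 1/3: symbol error",
]
for (port, message), count in recurring_errors(sample_log):
    print(f"port {port}: '{message}' seen {count} times")
```

A message that keeps recurring on one port suggests a faulty cable or transceiver on that link, while the same message across many ports points more toward configuration or firmware.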

Frequently Asked Questions (FAQs)

Q: What advantages does InfiniBand HDR have over other network technologies?

A: The main benefits of InfiniBand HDR are faster data transfer rates than traditional Ethernet solutions, lower latency, and better scalability. It also supports both copper and optical cables, which offers flexibility based on specific network needs.

Q: What can Mellanox InfiniBand HDR Quantum QM87XX Switches do for my network?

A: Mellanox InfiniBand HDR Quantum QM87XX switches bring ultra-low-latency, high-throughput switching to your network. They are designed for large-scale cluster computing systems and data centers, where they improve the overall efficiency and performance of the network.

Q: How fast is InfiniBand HDR compared to Gigabit Ethernet?

A: Gigabit Ethernet falls well behind InfiniBand HDR in both speed and latency. Gigabit Ethernet tops out at 1 Gbps (gigabit per second), while InfiniBand HDR can reach up to 200 Gbps. Beyond this difference in throughput, InfiniBand HDR also has much lower latency than Gigabit Ethernet, which makes it more suitable for high-performance computing systems.

Q: Can InfiniBand HDR work with existing Ethernet networks?

A: InfiniBand HDR and Ethernet can coexist in the same data center, but they are distinct technologies. Some network adapters and switches support both Ethernet and InfiniBand. For maximum performance, however, dedicated InfiniBand HDR products should be used.

Q: What is the purpose of 200G QSFP56 modules in InfiniBand HDR networks?

A: 200G QSFP56 modules carry data at very high speeds in InfiniBand HDR networks. They can transfer up to 200 Gbps and are typically used in active optical cables and other optical solutions to improve network efficiency and reliability.

Q: How does the InfiniBand Trade Association contribute towards the advancement of InfiniBand HDR technology?

A: The InfiniBand Trade Association (IBTA) develops and promotes the industry standards for InfiniBand. It plays a critical role in driving innovations such as InfiniBand HDR while ensuring interoperability between different vendors’ products, which supports growth for businesses that build their networks on InfiniBand.

Q: What are Active Optical Cables, and how do they function in an InfiniBand HDR network?

A: Active Optical Cables (AOCs) are one of the cable types used for high-speed transmission over longer distances in InfiniBand HDR networks. They convert electrical signals into light at one end so the signal can travel farther, then convert it back into electrical form at the destination. This provides the performance of optical cabling while keeping the plug-in convenience of traditional copper cables.

Q: Where can one buy InfiniBand HDR products?

A: InfiniBand HDR products are available from many vendors and online stores; the HPE Store US is one example. Companies such as Mellanox and HPE produce switches, network interface cards, and cables for InfiniBand HDR to meet various networking needs.

Q: How does InfiniBand HDR compare with Omni-Path in terms of performance?

A: Both Omni-Path and InfiniBand HDR are high-performance networks, but InfiniBand HDR offers higher data transfer speeds, up to 200 Gbps, with lower latency than Omni-Path. InfiniBand HDR also enjoys wider adoption across multiple vendors, which makes it more versatile in many data center environments.

Recommended reading: https://www.fibermall.com/blog/what-is-infiniband-network-and-difference-with-ethernet.htm
