In the rapidly evolving world of AI, HPC, and data analytics, NVIDIA H100 servers are redefining performance benchmarks for data centers. Powered by the NVIDIA H100 GPU, based on the Hopper architecture, these servers deliver unmatched computational power, scalability, and efficiency for demanding workloads like AI training, scientific simulations, and real-time analytics. This guide explores the capabilities of NVIDIA H100 servers, their technical specifications, benefits, and applications, providing IT professionals and data center architects with insights to optimize their infrastructure. Whether you’re building an AI supercomputer or upgrading your enterprise data center, understanding NVIDIA H100 servers is essential for staying ahead in high-performance computing.

The high-pressure landscape of contemporary computing that is characterized by increasing data volume and rising computational requirements, has witnessed the emergence of the NVIDIA H100 GPU, a trailblazer in high-performance servers. The article seeks to unveil the revolutionary features as well as novel technologies behind the NVIDIA H100 GPU, which sets a new milestone in enhancing speed for data analytics, scientific computations, and AI-driven applications. By incorporating NVIDIA H100 GPUs into their server infrastructures, organizations can benefit from the unmatched processing power, efficacy, and scalability necessary for major computational acceleration and intelligence breakthroughs. We will elaborate on technical specifications, performance metrics, and use cases that demonstrate the role of H100 in shaping the future of supercomputing.

Table of Contents

ToggleWhy NVIDIA H100 GPU Servers are Revolutionizing AI and Computing

Understanding the NVIDIA H100 GPU’s Role in Accelerating AI

A vital role in the acceleration of artificial intelligence (AI) is played by the NVIDIA H100 GPU, which dramatically shortens the time needed for training and inference stages when developing AI. The design of its structure is meant to enhance throughput and efficiency under the extreme data requirements of AI algorithms. The ability of H100 to offer support on some of the most recent AI-specific technologies like “Transformer Engine,” developed for large language models that are basic requirements for improvement in Natural Language Processing (NLP), sets it apart as a leader in this field.

NVIDIA H100 servers are high-performance computing systems equipped with NVIDIA’s H100 GPUs, built on the Hopper architecture. Launched in 2022, the NVIDIA H100 GPU features up to 80 billion transistors, 141 GB of HBM3 memory, and support for FP8 precision, delivering up to 3x the performance of the A100. These servers leverage NVLink 4.0 and NVSwitch for high-speed, scalable GPU interconnects, enabling seamless data transfer for AI, HPC, and data analytics workloads. Systems like the NVIDIA DGX H100 and HGX H100 integrate multiple NVIDIA H100 GPUs, offering massive computational power for data centers and supercomputers.

Technical Specifications of NVIDIA H100 Servers

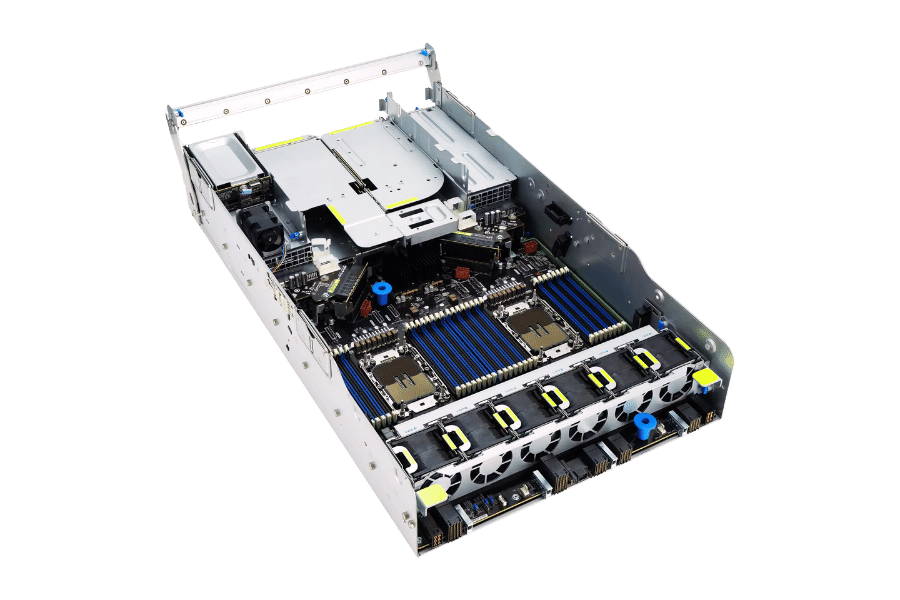

NVIDIA H100 servers are designed for maximum performance, leveraging the Hopper architecture and advanced interconnects. Below are the key specifications of systems like the NVIDIA DGX H100, which integrates eight NVIDIA H100 GPUs:

- GPU Architecture: Hopper (H100) with 80 billion transistors.

- Performance: Up to 30 petaflops (FP8) or 3 petaflops (FP64) per NVIDIA H100 GPU.

- Memory: 141 GB HBM3 per GPU at 3 TB/s bandwidth.

- Interconnects: NVLink 4.0 (900 GB/s bidirectional) and third-gen NVSwitch (57.6 TB/s fabric).

- AI Features: Transformer Engine for optimized AI workloads, Multi-Instance GPU (MIG) for partitioning.

- System Configuration: Up to 8 NVIDIA H100 GPUs (DGX H100) or 4 GPUs (HGX H100).

- Power Efficiency: Advanced 4nm process for reduced power consumption per teraflop.

These specifications make NVIDIA H100 servers ideal for tackling the most demanding computational tasks in AI and HPC.

The Impact of NVIDIA H100 on Deep Learning and Machine Learning Workflows

There is a great deal of influence on the integration of NVIDIA HG0O GPUs in deep learning and machine learning workflows. This starts with accelerated computing, wherein H100’s Tensor Cores are engaged due to their superior floating-point arithmetic and tensor operation. These Tensor Cores, for instance, are designed to accelerate deep learning tasks, thus remarkably decreasing training time for complicated neural networks. Furthermore, the memory bandwidth (AI-tuned) and architecture of H100, coupled with its unique features, help in enhancing fast data handling and processing that minimize bottlenecks during data-intensive workloads.

NVIDIA H100 Tensor Core GPUs: Unprecedented Performance for AI Development

The unprecedented performance of NVIDIA H100 Tensor Core GPUs for AI development can be attributed to several critical specifications and features:

- Scalability: The scalability of the H100 GPU is an NVIDIA innovation. This allows a single H100 GPU to be divided into smaller, fully isolated GPU instances through its Multi-Instance GPU (MIG) feature, thereby enabling multiple users or tasks with independently tailored GPU resources without interfering with other processes.

- Memory Bandwidth: With an innovative HBM3 memory, the H100 delivers the highest bandwidth available in the market, which is crucial for handling the vast datasets typical of AI and ML applications.

- Energy Efficiency: It was not only designed for performance but also efficiency. Its architecture optimizes power consumption so that more computations can be done using less energy which is required for sustainable large-scale AI operations.

- Software Compatibility: Having a comprehensive software suite including CUDA-X AI libraries and the AI Enterprise software stack, NVIDIA ensures easy optimization of developer applications to leverage on capabilities offered by H100 GPUs

By integrating NVIDIA H100 GPUs into their infrastructure, organizations can accelerate their AI, deep learning, and machine learning projects from concept to deployment, thus pushing the frontiers of possible in artificial intelligence research and application development.

Benefits of NVIDIA H100 Servers

NVIDIA H100 servers offer transformative advantages for data centers and enterprise environments:

- Unprecedented Performance: Up to 30 petaflops per NVIDIA H100 GPU accelerates AI training, HPC, and analytics.

- Scalability: NVSwitch enables up to 256 NVIDIA H100 GPUs in a single fabric, ideal for supercomputers.

- Ultra-Low Latency: NVLink 4.0’s 900 GB/s bandwidth ensures sub-microsecond communication for real-time workloads.

- AI Optimization: The Transformer Engine in NVIDIA H100 accelerates large language models and generative AI.

- Energy Efficiency: 4nm process and high-density designs reduce power consumption per teraflop.

- Flexibility: Supports diverse workloads, from AI inference to scientific simulations, with MIG partitioning.

- Future-Proofing: NVIDIA H100 servers support emerging technologies like 5G and edge AI.

These benefits position NVIDIA H100 servers as a cornerstone for next-generation computing infrastructure.

Applications of NVIDIA H100 Servers

NVIDIA H100 servers are deployed across industries requiring high-performance computing:

- Artificial Intelligence and Machine Learning: NVIDIA H100 servers accelerate training and inference for large language models, powering generative AI and deep learning.

- High-Performance Computing (HPC): Support complex simulations in physics, genomics, and climate modeling with NVIDIA H100’s massive compute power.

- Data Analytics: Enable real-time processing of large datasets in GPU-accelerated databases using NVIDIA H100 servers.

- Scientific Research: Power supercomputers like NVIDIA Selene, leveraging NVIDIA H100 for computational breakthroughs.

- Enterprise AI Workloads: Scale AI deployments in data centers with NVIDIA H100 servers for efficient inference and training.

These applications demonstrate the NVIDIA H100 server’s versatility in addressing modern computational challenges.

Configuring Your Server with NVIDIA H100 GPUs for Optimal Performance

NVIDIA H100 Servers vs. A100 ServersComparing NVIDIA H100 servers to A100-based servers clarifies their advancements:

| Feature | NVIDIA H100 Servers | NVIDIA A100 Servers |

|---|---|---|

| GPU Architecture | Hopper (2022) | Ampere (2020) |

| Performance | 30 petaflops (FP8) | 9.7 petaflops (FP16) |

| Memory | 141 GB HBM3 (3 TB/s) | 80 GB HBM2e (2 TB/s) |

| NVLink Version | NVLink 4.0 (900 GB/s) | NVLink 3.0 (600 GB/s) |

| NVSwitch | 3rd-Gen (57.6 TB/s) | 2nd-Gen (4.8 TB/s) |

| AI Features | Transformer Engine, FP8 precision | Tensor Cores, FP16 precision |

| Use Case | Large-scale AI, HPC, analytics | AI, HPC, data analytics |

NVIDIA H100 servers offer 3x the performance and higher bandwidth than A100 servers, making them ideal for next-gen AI and HPC workloads.

Optimizing GPU Densities: How to Scale Your NVIDIA H100 GPU Server

When you are trying to scale your NVIDIA H100 GPU server efficiently, it is important that you optimize the GPU densities for full utilization of resources and top-notch output when running AI and ML loads. Below are the steps required to scale a computer effectively.

- Evaluate job requirements: You must first know what your artificial intelligence or machine learning tasks require. Consider things such as computation intensity, memory needs, and parallelism. This will inform your approach towards scaling up GPUs.

- Maximize Multi-Instance GPU (MIG) Capability: The MIG feature of the H100 GPU can be used in dividing each GPU into up to seven distinct partitions. These instances can be customized to meet varied task or user requirements thereby increasing overall usage efficiency of every individual GPU involved.

- Use Effective Cooling Systems: Areas with high-density graphics card configurations produce intense heat. Make use of innovative cooling methods so as to keep temperatures within optimum limits; this way, sustained performance and reliability are assured.

- Plan for Scalability: As part of designing your infrastructure, incorporate scalability features. Choose a server architecture that allows for easy integration of additional GPUs or other hardware components. By doing so, you would save considerable amounts of time and money in future scaling due to computational growth.

The Importance of PCIe Gen5 in Maximizing H100 GPU Server Performance

PCIe Gen5 improves NVIDIA H100 GPU servers’ performance by empowering this significant element in the following ways:

- Increased Data Transfer Speeds: Rapid communication within a system on chip (SoC) and between two chips mounted on a multichip module (MCM). PCIe Gen 5.0 doubles the transfer rate of PCIe Gen4.0, improving communication between H100 GPUs and other parts of the system, which is crucial for AI/ML applications that require high-speed data processing and transfer.

- Lower Latency: Lower latency reduces the time spent delivering data and receiving responses from remote access clients, thus increasing the overall efficiency of data-intensive tasks such as real-time AI applications running on H100 GPU servers.

- Enhanced Bandwidth: The increase in bandwidth allows more channels for data to flow through as well as faster connections, making it ideal for activities with huge amounts of information, like training complex AI models.

- Future-Proofing: By moving to PCIe Gen5 today, you can ensure your server infrastructure is ready for future GPU technology advancements, protecting your investment and enabling straightforward transitions to next-generation GPUs.

Through understanding and applying these principles organizations can dramatically improve the performance and efficiency of their NVIDIA H100 GPU servers hence driving forward AI innovation at an accelerated pace.

Exploring the Capabilities of NVIDIA AI Enterprise Software on H100 GPU Servers

Among the most important reasons why the NVIDIA AI Enterprise software suite on H100 GPU servers is a must-have, it serves as a critical driver for accelerating and enhancing the performance of AI endeavors. In order to take advantage of the powerful NVIDIA H100 GPUs, it has been designed to ensure projects scale in an efficient and effective manner. This is what NVIDIA AI Enterprise brings to AI initiatives with respect to these servers:

- Optimized AI Frameworks and Libraries: Inclusive in NVIDIA AI Enterprise are fully optimized and supported AI frameworks and libraries that guarantee reduced time taken while training intricate models by allowing the AI applications to leverage complete computational capacity from the H100 GPUs.

- Simplified Management and Deployment: There are tools within this suite that facilitate easy management and deployment of artificial intelligence applications across any infrastructure scale; this eases processes associated with project workflows besides reducing complexities surrounding deploying as well as managing such workloads.

- Enhanced Security and Support: To make sure that their AI projects are not only efficient but also secure, organizations can rely on enterprise-ready security features combined with exclusive tech support by NVIDIA. Furthermore, this assistance may extend into helping solve issues as well as optimizing AI workloads towards improved output.

- Compatibility and Performance with NVIDIA Certified Systems: When running NVIDIA AI Enterprise over H100 GPU servers, compatibility guarantees along with optimal performance through use of Nvidia certified systems. Complete testing processes have been run on them hence there is no doubt about their meeting all relevant criteria set for them by Nvidia regarding reliability or even performance. As such, certification like this provides confidence to companies about their robustly built foundations supporting contemporary demands in terms of artificial intelligence loads.

With support from NVIDIA through utilizing its technology including tools like those offered under its product portfolio such as those covered herein, organizations will be able to advance their respective Ai projects henceforth confidently knowing that they would probably fetch better results when put together correctly than ever before.

Assessing the Scalability and Versatility of H100 GPU Servers for Diverse Workloads

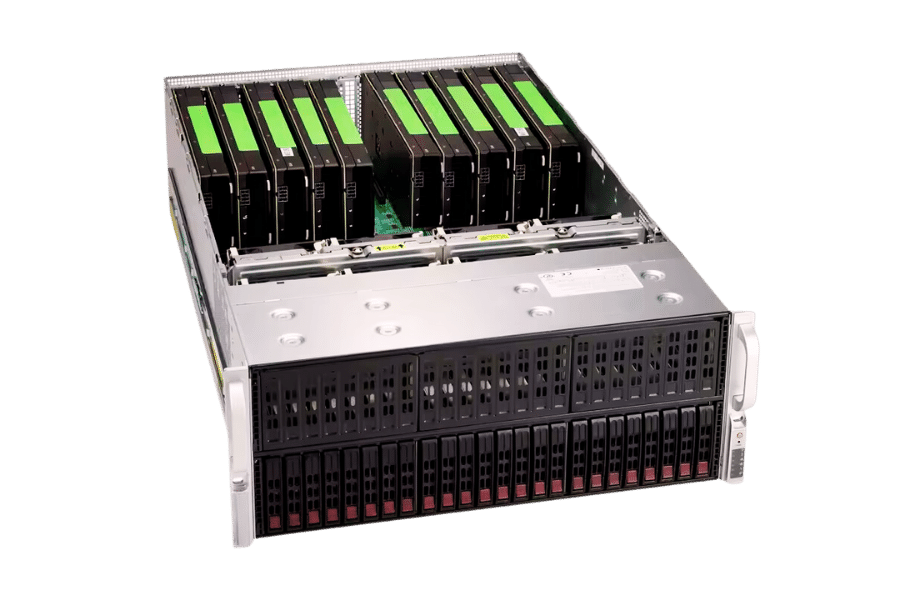

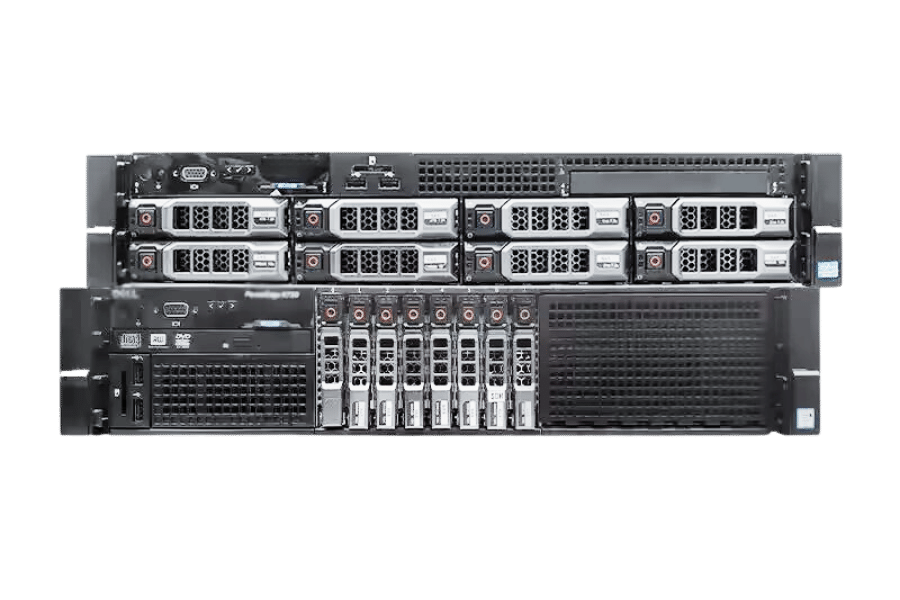

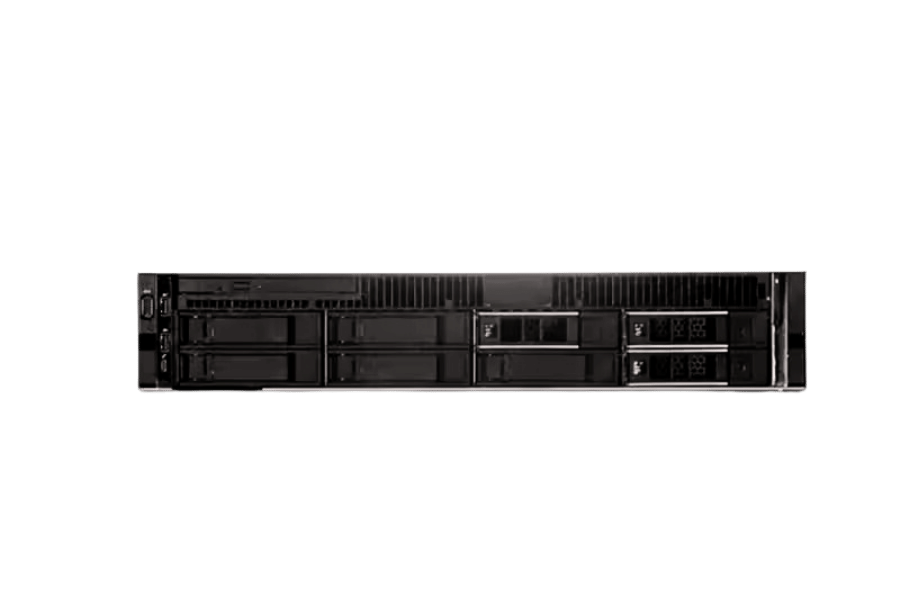

Comparing Form Factors: Finding the Right NVIDIA H100 Server for Your Needs

It is important to choose the right form factor when selecting an NVIDIA H100 GPU server to maximize the performance of your AI and High-Performance Computing (HPC) workloads. But what should you consider in making this choice?

- Space Constraints: On one hand, rack servers are made for easy scalability and can fit into standardized data center configurations, making them more suitable for organizations with space constraints.

- Cooling Capacity: For instance, a server’s thermal design is important, particularly for high-end GPUs such as NVIDIA H100; blade servers may offer optimized cooling solutions in dense configurations.

- Expansion Needs: You also need to consider whether the server will allow you to add more GPUs or other hardware in the future. For example, tower servers generally provide more room for physical expansion due to their larger chassis.

How 8x NVIDIA H100 Tensor Core GPUs Server Handles Intensive AI Workloads

“Synaptic” or AI computer system with 8x NVIDIA H100 Tensor Core GPUs is designed for leading AI workloads. It has the following advantages:

Tremendous Parallel Processing Power: The H100 GPUs are built-for-purpose, hence excellent in parallel processing tasks that lead to quicker training and model inference.

Increased Memory Bandwidth: High-bandwidth memory (HBM3) speeds up GPU-memory data transfer important in handling heavy datasets and complex models.

AI-Specific Architectural Features: Such features include tensor cores as well as the transformer engine, which are purposely created to drive down deep learning and AI workloads.

Maximizing Throughput for HPC and AI with High-Bandwidth NVLink Connectivity

There are several factors to consider when evaluating the best NVIDIA H100 server configuration to meet your requirements based on specific workloads, infrastructure needs and future growth plans. Taking this customized approach enables you take advantage of all the capabilities of NVIDIA H100 GPUs so that your AI and HPC tasks yield peak efficiency and performance.

- Enabling Faster Data Sharing: The NVLink technology transfers data faster between GPUs, in contrast to traditional PCIe connections, which often lead to delays.

- Increasing Scalability: NVLink links numerous GPUs together, hence making it easier for systems to scale up computing abilities simulated by more intricate models or applications.

- Improving Multi-GPU Efficiency: It’s essential for parallel processing applications involving sharing or synchronization of data among different GPU cards that NVLINK provide a uniform way of memory access on all these GPU cards.

The Role of NVIDIA H100 GPUs in Advancing High-Performance Computing (HPC)

NVIDIA H100 GPUs as a Catalyst for Scientific Discovery and Computational Research

This is a significant milestone in the world of high-performance computing (HPC): NVIDIA H100 GPUs have the most powerful computational capacity, serving as a catalyst for scientific discovery and computational research. The ability of these GPUs to handle GPU-accelerated HPC workloads is critical to breaking down large data sets, enabling advancements across different fields such as climate modeling or genomics. This section covers how the H100 GPUs contribute to this shift.

- Better Computational Efficiency: The architecture that characterizes NVIDIA H100 GPUs maximizes their processing efficiency, thus allowing researchers to tackle computationally intensive simulations and data analysis at faster speeds than before. This efficiency is crucial for conducting real-time data processing and complex simulations that characterize contemporary scientific research.

- Advanced AI Integration: H100’s integration of AI-specific architectural features, like Tensor Cores and the Transformer Engine, bridges the gap between high-performance computing (HPC) and artificial intelligence (AI). As a result, deep learning applications in science could possibly discover new phenomena by analyzing patterns and predictions that could not be made via computational means due to limitations.

- Scalability for Complex Models: The H100 enhances its capacity for handling big-scale simulations and complex models through high-bandwidth NVLink connectivity, which allows efficient data sharing and synchronization among multiple GPUs. This characteristic becomes important if researchers are working on projects needing tremendous computational resources because it enables the exploration of higher-order, more detailed models.

- Accelerated Data Analysis: In scientific research where vast amounts of information need fast analysis timeframes; NVIDIA H100 GPU is particularly efficient in this process. Thus, researchers can get answers more quickly. Such an acceleration becomes valuable especially in areas like genomics where analyzing massive genomic sequences requires huge computing capacity.

- Energy Efficiency: Despite their tremendous computation capabilities, NVIDIA H100 GPUs are designed with maximum energy efficiency in mind. Therefore, minimizing their carbon footprint while optimizing computation output remains absolutely vital for sustainable scientific computing endeavors.

With the help of NVIDIA H100 GPUs’ advanced capabilities, researchers and scientists are able to extend the boundaries of what is possible in computational research and scientific discovery. The integration of these GPUs into HPC systems is a breakthrough in the capability to analyze, model, and understand complex phenomena, thus leading to faster rates of innovation or knowledge production.

Integrating NVIDIA H100 GPU Servers into Enterprise and Data Center Environments

Security Considerations for Deploying NVIDIA H100 GPU Servers in the Enterprise

To deploy NVIDIA H100 GPU servers in an enterprise, several security concerns must be taken into account to safeguard personal information and maintain the integrity of the system. First of all, make sure all data in motion and at rest is encrypted; this should also be done with strong encryption standards such as AES-256. Implement hardware security modules (HSMs) for secure generation, storage, and management of cryptographic keys used in encryption. Secure boot and trusted platform module TPM should also be used so that only authorized personnel can access the servers running on approved software. Configuring firewalls, intrusion detection systems (IDS), and intrusion prevention systems (IPS) are key to network security too. Keep all software up to date for patching vulnerabilities while regular security audits and penetration tests help identify any weaknesses that attackers may exploit.

Optimizing Data Center Infrastructure with NVIDIA H100 Rackmount Servers

To optimize the data center infrastructure of NVIDIA’s H100 rackmount servers, first evaluate the existing power and cooling capabilities for compatibility with high-performance H100 servers. Employing efficient air management and cooling mechanisms such as liquid coolers would be beneficial in avoiding overheating. Use sophisticated power controls to minimize electricity usage. Deploy virtualization techniques to improve server utilization while reducing physical server count, thus reducing energy consumption and costs. Embrace software-defined storage and networking that provide ease of scalability and flexibility when required. Lastly, employ NVIDIA’s performance, power, and thermal metrics real-time monitoring and management tools through their management software solutions for consistent optimal operation.

Custom Configuration Options for NVIDIA H100 Servers via Online System Configurators

Online system configurators have various custom configuration options for NVIDIA H100 servers that are designed to meet specific workloads and performance needs. The main configurable parameters for the same include;

- CPU Selection: Depending on whether the workload is more CPU-intensive or requires higher parallel processing capabilities, choose from a range of CPUs to balance between core count and clock speed.

- Memory Configuration: Strike a balance between capacity and speed by adjusting the amount and type of RAM according to your specific computational requirements.

- Storage Options: The trade-off among storage capacity, speed, and cost must be considered when selecting SSDs, HDDs, or hybrid configurations.

- Networking Hardware: Bandwidth requirements as well as latency sensitivity should determine network interface cards (NICs) options.

- Power Supply Units (PSUs): Go for highly energy-efficient PSU types since Nvidia H100 servers’ power consumption is quite high.

- Cooling Solutions: Based on the deployment environment, select appropriate cooling solutions so as to maintain optimal thermal performance levels.

The above-mentioned aspects need to be taken into account in order to configure Nvidia’s H100 servers correctly; this way, businesses can adjust their systems to achieve the required trade-off between performance, efficiency, and cost-effectiveness.

Reference sources

- NVIDIA Official Website – Introduction to NVIDIA H100 GPU for Data CentersOn the official NVIDIA website, one can obtain an elaborate analysis of the H100 GPU without any problem, exposing its architecture and its features as well as what it does in data centers. It also goes into detail about all the technical specifications that this GPU has such as tensor cores, memory bandwidth, and AI acceleration capabilities that make it an authoritative source for comprehending the place that H100 occupies among top-performing servers.

- Journal of Supercomputing Innovations – Performance Analysis of NVIDIA H100 GPUs in Advanced Computing EnvironmentsThis journal article presents a full-performance evaluation of NVIDIA H100 GPUs in advanced computing environments. It compares the H100 to previous generations, concentrating on the speed of processing, power consumption and AI and machine learning work. In-depth information about how the H100 can boost computational power in high-performance servers is provided in this article.

- Tech Analysis Group – Benchmarking the NVIDIA H100 for Enterprise ApplicationsTech Analysis Group provides comprehensive review and benchmark testing of the NVIDIA H100 GPU, focusing on its application in server environments at an enterprise level. The report compares H100 with various other GPUs in different scenarios such as database management, deep learning, and graphical processing tasks. This resource is very useful to IT experts who are evaluating the H100 GPU for their high-performance server requirements.

Frequently Asked Questions (FAQs)

Q: Why is NVIDIA H100 Tensor Core GPU a game changer for high-performance servers?

A: The NVIDIA H100 Tensor Core GPU seeks to completely change high-performance servers through unmatched AI and HPC workflow acceleration. It contains advanced tensor cores that allow faster computations, and therefore, it is best suited for generative AI, deep learning, and complex scientific calculations. By being part of the server platforms, its inclusions significantly increase their processing capabilities, making data handling more efficient apart from being time-saving.

Q: How does the NVIDIA HGX platform enhance servers equipped with NVIDIA H100 GPUs?

A: The NVIDIA HGX platform has been made specifically to optimize the performance of servers fitted with NVIDIA H100 GPUs. This technology has fast links called NVLink which ensure seamless data transfer and prevent lags that are typical with multiple graphics processors. This means that it is now possible to create extensive, high-performance computing environments capable of meeting the computational requirements necessary for intensive AI and HPC tasks.

Q: Can NVIDIA H100 GPU-accelerated servers be customized for specific hardware needs?

A: Indeed! Customization options are available for NVIDIA H100 GPU-accelerated servers as per unique hardware requirements. Depending on their computational functionality demands, these can be purchased in various shapes and sizes while choosing different processor options, such as Intel Xeon with up to 112 cores. Moreover, one can use an online system configurator that allows defining all the desired specifications about your future server regarding size, so it will not be oversized or undersized for certain project multi-instance GPU configurations.

Q: What security features are included in servers powered by NVIDIA H100 GPUs?

A: Servers powered by the Nvidia h100 have “security at every layer,” which provides strong security measures ensuring data protection and smooth operations. They include security attributes aimed at protecting these systems from cyber-attacks, especially those involving Artificial intelligence (AI) and High-performance computing (HPC), which makes them the best options for several businesses that consider happening in their computing environment.

Q: What is the role played by NVIDIA H100 GPUs in energy-efficient server platforms?

A: To remain energy efficient, NVIDIA H100 GPUs have been designed to provide unparalleled computational power. By achieving higher performance per watt, they enable server platforms to reduce their overall energy consumption associated with intensive computational processes. Therefore, H100 GPU-accelerated servers are not only powerful but also cost-effective and environment-friendly for running AI and HPC workflows.

Q: What kind of AI and HPC workflows are most suitable for NVIDIA H100 GPU-accelerated servers?

A: NVIDIA H100 GPU-accelerated servers can be used for various AI and HPC workflows, especially those that involve generative AI, deep learning, machine learning, data analytics, and complex scientific simulations. These highly advanced computing capabilities combined with acceleration features make them perfect solutions for anything that requires high throughput and low-latency processing, e.g., large neural network training, elaborate algorithms running, or extensive data analytics.

Q: How does the NVIDIA NVLink technology improve communication and data transfer among H100 GPUs in a server?

A: Communication speeds between multiple GPUs within a server, including the data transfer rates present in such servers, would drastically change following the adoption of Nvidia NVLink technology from traditional PCIe contacts of these devices. The use of this program results in a significant improvement in data-transfer capability as well as a reduction in latency, thus increasing the efficacy of programs that require frequent exchange of information between Gpus housed within one system. NVLink enhances Multi-GPU configurations through good scalability and performance optimization.

Q: Is it possible to scale out an Nvidia h100 gpu accelerated server when it is no longer fit for purpose due to increased computational demand?

A: Yes, there is an option available to you where one can easily scale out their computing resources because the Nvidia h100 GPU accelerated servers were built with scalability concerns at hand, enabling organizations to meet their growing demands. Starting with a single GPU, organizations can build their infrastructure up to a cluster of 256 GPUs, depending on needs. Flexibility is important in managing increased computational requirements without heavy upfront investments.

Related Products:

-

NVIDIA MMS4X00-NM-FLT Compatible 800G Twin-port OSFP 2x400G Flat Top PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$1199.00

NVIDIA MMS4X00-NM-FLT Compatible 800G Twin-port OSFP 2x400G Flat Top PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$1199.00

-

NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

NVIDIA MMA4Z00-NS-FLT Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

-

NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$900.00

NVIDIA MMS4X00-NM Compatible 800Gb/s Twin-port OSFP 2x400G PAM4 1310nm 500m DOM Dual MTP/MPO-12 SMF Optical Transceiver Module

$900.00

-

NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

NVIDIA MMA4Z00-NS Compatible 800Gb/s Twin-port OSFP 2x400G SR8 PAM4 850nm 100m DOM Dual MPO-12 MMF Optical Transceiver Module

$650.00

-

NVIDIA MMS1Z00-NS400 Compatible 400G NDR QSFP112 DR4 PAM4 1310nm 500m MPO-12 with FEC Optical Transceiver Module

$700.00

NVIDIA MMS1Z00-NS400 Compatible 400G NDR QSFP112 DR4 PAM4 1310nm 500m MPO-12 with FEC Optical Transceiver Module

$700.00

-

NVIDIA MMS4X00-NS400 Compatible 400G OSFP DR4 Flat Top PAM4 1310nm MTP/MPO-12 500m SMF FEC Optical Transceiver Module

$700.00

NVIDIA MMS4X00-NS400 Compatible 400G OSFP DR4 Flat Top PAM4 1310nm MTP/MPO-12 500m SMF FEC Optical Transceiver Module

$700.00

-

NVIDIA MMA1Z00-NS400 Compatible 400G QSFP112 VR4 PAM4 850nm 50m MTP/MPO-12 OM4 FEC Optical Transceiver Module

$550.00

NVIDIA MMA1Z00-NS400 Compatible 400G QSFP112 VR4 PAM4 850nm 50m MTP/MPO-12 OM4 FEC Optical Transceiver Module

$550.00

-

NVIDIA MMA4Z00-NS400 Compatible 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module

$550.00

NVIDIA MMA4Z00-NS400 Compatible 400G OSFP SR4 Flat Top PAM4 850nm 30m on OM3/50m on OM4 MTP/MPO-12 Multimode FEC Optical Transceiver Module

$550.00

-

OSFP-FLT-800G-PC2M 2m (7ft) 2x400G OSFP to 2x400G OSFP PAM4 InfiniBand NDR Passive Direct Attached Cable, Flat top on one end and Flat top on the other

$300.00

OSFP-FLT-800G-PC2M 2m (7ft) 2x400G OSFP to 2x400G OSFP PAM4 InfiniBand NDR Passive Direct Attached Cable, Flat top on one end and Flat top on the other

$300.00

-

OSFP-800G-PC50CM 0.5m (1.6ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Passive Direct Attach Copper Cable

$105.00

OSFP-800G-PC50CM 0.5m (1.6ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Passive Direct Attach Copper Cable

$105.00

-

OSFP-800G-AC3M 3m (10ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable

$600.00

OSFP-800G-AC3M 3m (10ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable

$600.00

-

OSFP-FLT-800G-AC3M 3m (10ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable, Flat top on one end and Flat top on the other

$600.00

OSFP-FLT-800G-AC3M 3m (10ft) 800G Twin-port 2x400G OSFP to 2x400G OSFP InfiniBand NDR Active Copper Cable, Flat top on one end and Flat top on the other

$600.00