Machine learning (ML) and deep learning (DL) have grown so quickly that they now demand enormous amounts of computing power. Graphics Processing Units (GPUs), and clusters of GPUs in particular, have become the standard way to meet this demand. Unlike traditional Central Processing Units (CPUs), GPUs execute many operations in parallel, which makes them well suited to the heavy workloads typical of ML and DL applications.

This article explains how GPU clusters speed up ML and DL workloads. It covers the architecture behind these systems, including their design principles, how they operate, and where their performance advantages come from. It also discusses hardware requirements, software compatibility, and scalability options for deploying GPU clusters. By the end, you should have a clear picture of what it takes to use GPU clustering for advanced machine learning and deep learning.

What is a GPU Cluster?

Understanding the Components of GPU Clusters

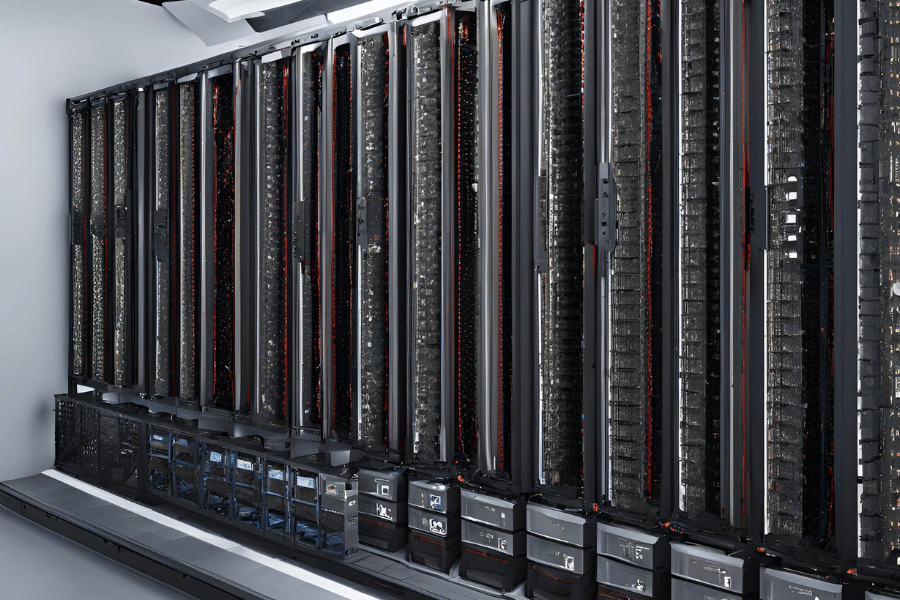

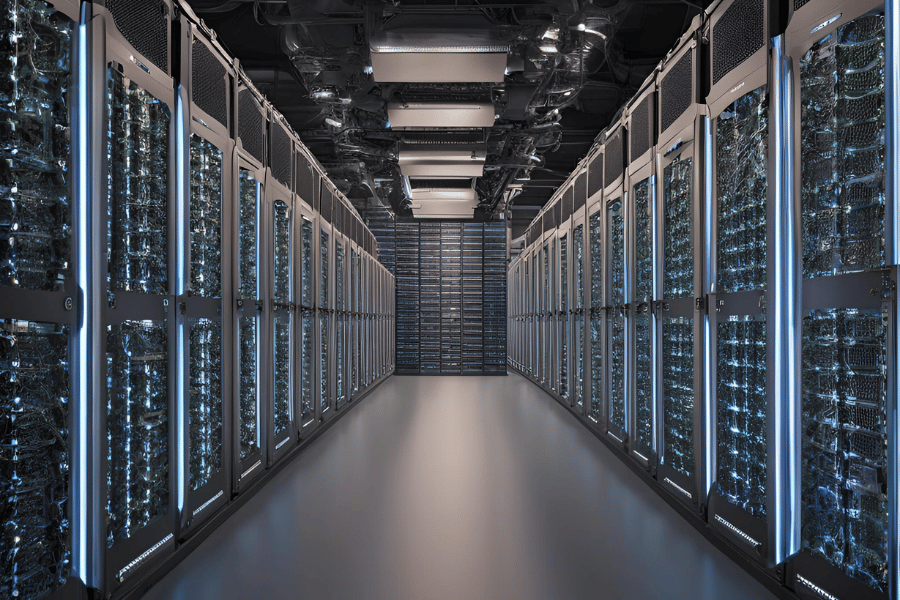

A GPU cluster is made up of many GPUs connected by high-speed interconnects, such as NVLink within a node and InfiniBand between nodes, so that they can be used together as a single computing resource. Its key components are:

- Graphics Processing Units (GPUs): The main compute units. Each can run thousands of threads in parallel, which lets it work through large datasets and computationally heavy algorithms.

- Nodes: A node contains several GPUs alongside one or more CPUs responsible for managing operations on those GPUs and handling tasks not suitable for them.

- Interconnects: High-speed links that move data quickly between GPUs and between nodes, reducing latency and increasing throughput.

- Storage Systems: High-performance storage solutions help in dealing with large amounts of data produced and processed within the cluster.

- Networking: Strong network infrastructure is needed to enable rapid communication between different nodes as well as efficient load balancing and distribution of information across the system.

- Software Stack: The operating system, drivers, GPU computing platforms such as CUDA, and frameworks such as TensorFlow that are needed to use the GPU hardware effectively for ML and DL tasks.

Each of these components contributes to keeping the cluster running at peak performance and meeting the computational demands of advanced machine learning (ML) and deep learning (DL) applications.
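To make the component list concrete, the sketch below models a cluster as nodes that each hold some CPU cores and a set of GPUs, assuming a homogeneous layout. The class and function names and the example figures (4 nodes, 8 GPUs per node, 80 GB per GPU) are hypothetical illustrations rather than a particular product configuration.

```python
from dataclasses import dataclass, field


@dataclass
class GPU:
    model: str
    memory_gb: int


@dataclass
class Node:
    hostname: str
    cpu_cores: int  # CPUs coordinate the node and run serial work
    gpus: list[GPU] = field(default_factory=list)


@dataclass
class Cluster:
    interconnect: str  # fabric linking the nodes, e.g. "InfiniBand"
    nodes: list[Node] = field(default_factory=list)

    def gpu_count(self) -> int:
        # Total number of GPUs across every node.
        return sum(len(node.gpus) for node in self.nodes)

    def total_gpu_memory_gb(self) -> int:
        # Aggregate GPU memory. A single job only benefits from all of it
        # if its work is actually split across the GPUs.
        return sum(gpu.memory_gb for node in self.nodes for gpu in node.gpus)


def build_homogeneous_cluster(num_nodes: int, gpus_per_node: int,
                              gpu_model: str, gpu_memory_gb: int,
                              cpu_cores: int, interconnect: str) -> Cluster:
    """Build a cluster in which every node has identical hardware."""
    nodes = [
        Node(
            hostname=f"node{i:02d}",
            cpu_cores=cpu_cores,
            gpus=[GPU(gpu_model, gpu_memory_gb) for _ in range(gpus_per_node)],
        )
        for i in range(num_nodes)
    ]
    return Cluster(interconnect=interconnect, nodes=nodes)


if __name__ == "__main__":
    # Hypothetical layout: 4 nodes x 8 GPUs with 80 GB each.
    cluster = build_homogeneous_cluster(4, 8, "A100", 80, 64, "InfiniBand")
    print(cluster.gpu_count(), "GPUs,", cluster.total_gpu_memory_gb(), "GB of GPU memory")
```

Keeping the node description separate from the cluster makes it easy to extend the model later, for example to a heterogeneous cluster whose nodes differ.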

Role of GPUs and CPUs in a GPU Cluster

In a GPU cluster, CPUs and GPUs play distinct but complementary roles. A GPU's thousands of small cores execute many threads simultaneously, which makes GPUs well suited to parallel work and more energy-efficient than CPUs for that kind of work. This is exactly what ML and DL algorithms need, since they process large amounts of data with complex mathematical operations. CPUs, by contrast, act as the control points of the cluster: they manage general system operations, distribute work among components, and run serial code that would not run efficiently on a GPU. Combining the two simplifies workflow management and lets the cluster scale to the levels required by advanced ML and DL applications.

How Interconnect and Infiniband Enhance GPU Cluster Performance

High-speed interconnects such as InfiniBand play a central role in GPU cluster efficiency. They provide fast, low-latency communication between nodes, which is essential for coordinating work across many GPUs. InfiniBand in particular is known for high throughput and low latency, so data-intensive applications are not held back by slow communication between parts of the system. Efficient data exchange keeps GPUs from sitting idle while they wait for data, and it also improves scalability, allowing the cluster to take on heavier workloads such as training large AI models on huge datasets.
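Why bandwidth and latency both matter can be made concrete with a back-of-the-envelope estimate. The sketch below uses the standard alpha-beta cost model for a ring all-reduce, a common way to synchronize gradients: over 2(N-1) steps, each of N GPUs sends and receives 1/N of the message per step. The gradient size, link bandwidths, and latencies in the example are hypothetical values chosen for illustration, not measurements of any InfiniBand or NVLink product.

```python
def ring_allreduce_time_s(message_bytes: float, num_gpus: int,
                          bandwidth_bytes_per_s: float, latency_s: float) -> float:
    """Estimate ring all-reduce time with a simple alpha-beta cost model.

    A ring all-reduce is a reduce-scatter followed by an all-gather, each
    taking (N - 1) steps in which every GPU transfers message_bytes / N.
    Each step pays one latency plus the time to move its chunk.
    """
    if num_gpus < 2:
        return 0.0  # a single GPU has nothing to exchange
    steps = 2 * (num_gpus - 1)
    bytes_per_step = message_bytes / num_gpus
    return steps * (latency_s + bytes_per_step / bandwidth_bytes_per_s)


if __name__ == "__main__":
    grad_bytes = 1e9 * 2  # hypothetical: 1 billion parameters in 16-bit precision
    # Hypothetical links: 25 GB/s with 5 us latency vs 3 GB/s with 20 us latency.
    fast = ring_allreduce_time_s(grad_bytes, 16, 25e9, 5e-6)
    slow = ring_allreduce_time_s(grad_bytes, 16, 3e9, 20e-6)
    print(f"fast link: {fast:.3f} s per sync, slow link: {slow:.3f} s per sync")
```

Because this cost is paid on every training step, an interconnect that is several times slower adds directly to every iteration unless communication is overlapped with computation.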

How to Build a GPU Cluster?

Essential Hardware for a GPU Cluster

- GPUs: Powerful GPUs are essential; NVIDIA and AMD are the most widely used vendors.

- CPUs: Multi-core CPUs that can handle parallel processing and task management effectively.

- Motherboard: A robust motherboard with enough PCIe slots for the GPUs and room for expansion.

- Memory: Sufficient RAM, preferably ECC (Error-Correcting Code) memory, to handle large datasets while keeping the system stable, particularly in GPU nodes.

- Storage: Fast, high-capacity SSDs, supplemented by large HDDs for bulk data.

- Power Supply: Reliable power supplies that deliver enough wattage for all components.

- Cooling System: Efficient cooling, such as liquid cooling, to remove the heat the hardware produces.

- Networking: High-speed interconnects such as InfiniBand or high-speed Ethernet, so that data transfer between nodes is fast enough.

- Chassis: A chassis with good airflow that fits all components and leaves room for expansion.
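The power-supply item is easy to underestimate in a multi-GPU node. The sketch below sizes a node's PSU from component power ratings; the wattages and the 20% headroom default are hypothetical placeholders, so substitute the TDP or maximum board power from your components' datasheets.

```python
import math


def required_psu_watts(component_watts: dict[str, int], headroom_percent: int = 20,
                       round_to: int = 50) -> int:
    """Estimate PSU capacity: total draw plus headroom, rounded up.

    Integer percentages keep the arithmetic exact, so a 1750 W load with
    20% headroom gives exactly 2100 W rather than a float rounding artifact.
    """
    if headroom_percent < 0:
        raise ValueError("headroom_percent must be non-negative")
    total = sum(component_watts.values())
    with_headroom = total * (100 + headroom_percent) / 100
    # Round up to the next PSU size step.
    return math.ceil(with_headroom / round_to) * round_to


if __name__ == "__main__":
    # Hypothetical node: wattages are placeholders, not datasheet values.
    node = {
        "gpus": 4 * 300,
        "cpus": 2 * 200,
        "memory_storage_fans": 150,
    }
    print(required_psu_watts(node), "W")  # 1750 W load -> 2100 W with 20% headroom
```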

Steps to Build a GPU Cluster from Scratch

- Define Requirements: Determine the specific use case, the performance targets, and the budget.

- Select Hardware: Choose GPUs, CPUs, motherboards, RAM, storage, power supplies, cooling (such as fans), networking, and chassis to match those requirements.

- Assemble Hardware: Install the CPUs and memory on each motherboard, mount the motherboard in the chassis, and seat the GPUs. Then connect storage devices such as SSDs (Solid-State Drives), power supply units (PSUs), and cooling components such as fans or heatsinks. Make sure all components are securely fastened.

- Configure Networking: Connect the nodes with high-speed InfiniBand or Ethernet links so they can communicate efficiently.

- Install Operating System: Choose a suitable Linux distribution, such as Ubuntu or CentOS, and install it, preferably on an SSD for I/O-intensive applications. Tune the OS for performance at this stage as well.

- Install Software: Install the GPU drivers, the CUDA toolkit and libraries such as cuDNN, and machine learning frameworks such as TensorFlow or PyTorch. Keep the software up to date so the cluster can use the GPUs' full computational power.

- System Configuration: Fine-tune BIOS settings, configure networking (for example, using DHCP to assign IP addresses automatically), and set up power management to keep the system stable while maximizing throughput.

- Testing/Validation: Run stress tests and benchmarks to confirm that the system meets its requirements and the manufacturers' specifications.

- Deploy Applications: Install the required applications and ML models, then start processing data for the intended use case.
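For the Testing/Validation step, a simple first check is that every node actually sees the GPUs you installed. The sketch below runs `nvidia-smi --query-gpu=index,name,memory.total --format=csv,noheader,nounits` (a standard nvidia-smi query mode) and compares the result with an expected count and model name. The function names and expected values are hypothetical, and this check complements, rather than replaces, stress tests and benchmarks.

```python
import subprocess

QUERY = ["nvidia-smi", "--query-gpu=index,name,memory.total",
         "--format=csv,noheader,nounits"]


def parse_gpu_inventory(csv_text: str) -> list[dict]:
    """Parse nvidia-smi lines of the form '<index>, <name>, <memory MiB>'."""
    gpus = []
    for line in csv_text.strip().splitlines():
        index, name, memory_mib = (part.strip() for part in line.split(","))
        gpus.append({"index": int(index), "name": name,
                     "memory_mib": int(memory_mib)})
    return gpus


def validate_node(gpus: list[dict], expected_count: int,
                  expected_name_substring: str) -> list[str]:
    """Return a list of problems; an empty list means the node passed."""
    problems = []
    if len(gpus) != expected_count:
        problems.append(f"expected {expected_count} GPUs, found {len(gpus)}")
    for gpu in gpus:
        if expected_name_substring not in gpu["name"]:
            problems.append(f"GPU {gpu['index']} is {gpu['name']!r}")
    return problems


def check_local_node(expected_count: int, expected_name_substring: str) -> list[str]:
    # Requires the NVIDIA driver, which provides nvidia-smi, on this node.
    output = subprocess.run(QUERY, capture_output=True, text=True,
                            check=True).stdout
    return validate_node(parse_gpu_inventory(output), expected_count,
                         expected_name_substring)
```

Running `check_local_node(8, "A100")` on each node, for example through the cluster scheduler, would return an empty list wherever eight A100-class GPUs are visible.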

Choosing the Right Nvidia GPUs and Components

Choosing the right Nvidia GPUs and supporting components calls for a systematic approach, so that each part suits its intended use. Consider the following:

- Recognize Workload Requirements: Different workloads place different demands on GPUs. For machine learning, for instance, the Nvidia A100, with its high compute performance and memory bandwidth, is a strong choice, whereas graphics-intensive tasks such as video editing may be better served by professional cards like the Nvidia Quadro series.

- Calculate Your Budget: High-performance GPUs can be very expensive. Set your budget early so that cost is factored into the search from the start instead of becoming a limiting factor later.

- Compatibility with the Current System: Make sure the chosen GPU works with the rest of the system, especially the motherboard and the power supply unit (PSU). Check that the card physically fits in the PCI Express (PCIe) slot with enough clearance around it, and that the PSU can deliver the power it requires.

- Memory Requirements: Depending on the complexity of the workload, you may need far more VRAM than a typical GPU offers. Deep learning models trained on large datasets, for example, call for GPUs with large memory capacities, such as the 24 GB Nvidia RTX 3090.

- Cooling Solutions: High-end GPUs generate more heat than low-end ones, so cooling must be planned for. Choose air cooling, liquid cooling, or a custom solution based on each card's thermal behavior under maximum load [2].

- Future Expansion: If you plan to upgrade or expand later, make sure today's choices leave room to scale. As a rule of thumb, keep at least two spare slots below the main PEG (PCI Express Graphics) x16 slot and use a PSU rated at no less than 850 W.

By weighing these points carefully, you can choose Nvidia GPUs and components that best fit your performance needs and budget.
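The Memory Requirements point can be turned into a rough estimate. A widely cited rule of thumb for mixed-precision training with the Adam optimizer is about 16 bytes per parameter: 2 for FP16 weights, 2 for FP16 gradients, and 12 for FP32 master weights plus Adam's two moment estimates. The sketch below applies that rule; it ignores activations, temporary buffers, and framework overhead, so its output is a lower bound. The 80% usable-memory fraction and the 7-billion-parameter example are hypothetical, and the GPU count assumes the model state is fully sharded across devices (as ZeRO-style or FSDP approaches do).

```python
import math


def training_memory_gb(num_params: float, bytes_per_param: int = 16) -> float:
    """Lower-bound GPU memory (GB) for weights, gradients and optimizer state.

    The default of 16 bytes/parameter assumes mixed precision with Adam:
    2 (FP16 weights) + 2 (FP16 gradients) + 4 (FP32 master weights)
    + 4 + 4 (Adam first and second moments). Activations are not included.
    """
    return num_params * bytes_per_param / 1e9


def min_gpus_needed(num_params: float, gpu_memory_gb: float,
                    usable_fraction: float = 0.8) -> int:
    """GPUs required if model state is evenly sharded across devices.

    usable_fraction is a hypothetical allowance for activations and overhead.
    """
    per_gpu = gpu_memory_gb * usable_fraction
    needed = training_memory_gb(num_params) / per_gpu
    return max(1, math.ceil(needed))


if __name__ == "__main__":
    params = 7e9  # hypothetical 7-billion-parameter model
    print(f"~{training_memory_gb(params):.0f} GB of model state")
    for name, mem in [("24 GB GPU", 24), ("80 GB GPU", 80)]:
        print(name, "->", min_gpus_needed(params, mem), "GPUs (lower bound)")
```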

Why Use GPU Clusters?

Benefits for AI and Machine Learning Workloads

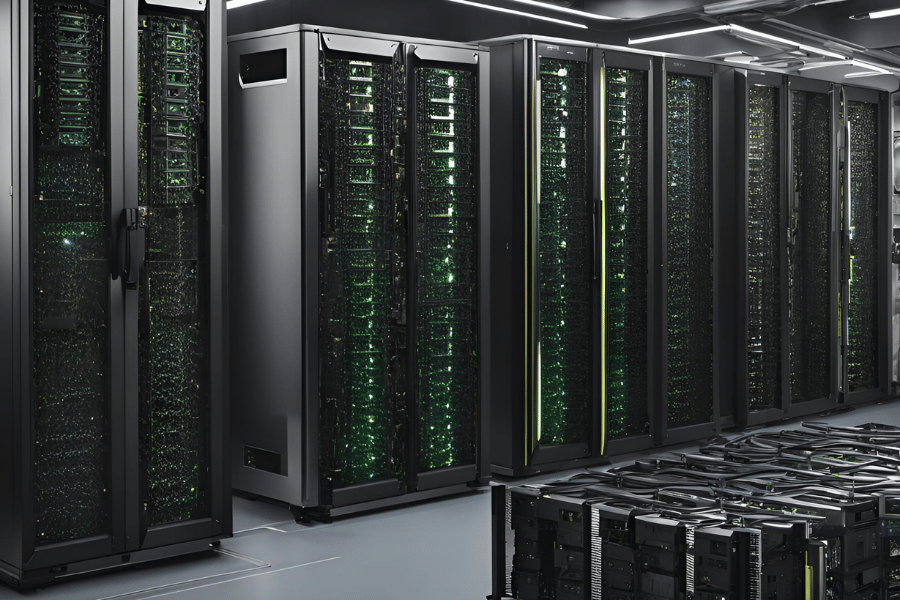

GPU clusters offer several notable advantages for AI and machine learning workloads. First, their parallel design lets them outperform CPUs on the large-scale computations at the heart of AI and machine learning, which significantly reduces the time needed to train complex models. Second, they scale: multiple GPUs can work simultaneously on larger datasets and more complex algorithms. This combined compute power raises throughput and makes it practical to train larger models on more data, which can in turn improve model accuracy. Finally, the same clusters can run many kinds of tasks, from deep learning to data analytics, which makes them versatile across many areas of AI research.

Performance Advantages in High-Performance Computing (HPC)

GPU clusters bring significant performance advantages to high-performance computing (HPC) environments. Their parallel design greatly speeds up complex simulations, modeling, and data analysis, whether for scientific experiments or engineering projects, resulting in shorter run times and higher throughput for large-scale computations. GPUs also have high memory bandwidth, which allows the fast data movement that data-heavy HPC workloads require. Finally, GPU clusters can make an HPC system more efficient and cost-effective by delivering more performance per watt than CPU-only configurations.

Scaling Deep Learning Models with GPU Clusters

To scale deep learning models with GPU clusters, you need to distribute the workload across all the GPUs, which speeds up training. With model parallelism, different parts of a neural network are processed simultaneously on different GPUs. With data parallelism, each GPU holds a complete copy of the model and trains it on a different subset of the data, and the GPUs periodically synchronize their gradients or weights so that learning stays consistent. Hybrid parallelism combines both approaches to use the available resources more effectively. A properly configured GPU cluster can therefore handle larger and more complex datasets, cutting training times while improving the overall performance and accuracy of deep learning models.
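Data parallelism is the easiest of the three strategies to illustrate. The pure-Python sketch below simulates it for a one-variable linear regression: each simulated worker computes the mean-squared-error gradient on its own shard of the data, the gradients are averaged (the role an all-reduce plays on a real cluster), and every replica applies the same update. With equal-sized shards, the averaged gradient equals the full-batch gradient, which is why the replicas stay in sync. The function names and synthetic data are hypothetical; a real cluster would use a framework facility such as PyTorch's `torch.nn.parallel.DistributedDataParallel` instead of code like this.

```python
def shard(data: list, num_workers: int) -> list[list]:
    """Split data into equal contiguous shards (any remainder is dropped)."""
    size = len(data) // num_workers
    return [data[i * size:(i + 1) * size] for i in range(num_workers)]


def local_gradient(w: float, b: float,
                   batch: list[tuple[float, float]]) -> tuple[float, float]:
    """Gradient of mean squared error for y_hat = w * x + b on one shard."""
    n = len(batch)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in batch) / n
    return grad_w, grad_b


def all_reduce_mean(grads: list[tuple[float, float]]) -> tuple[float, float]:
    """Average the workers' gradients, as an all-reduce would."""
    k = len(grads)
    return sum(g[0] for g in grads) / k, sum(g[1] for g in grads) / k


def train_data_parallel(data, num_workers: int, lr: float = 0.1,
                        steps: int = 500) -> tuple[float, float]:
    w, b = 0.0, 0.0  # every replica starts from identical parameters
    shards = shard(data, num_workers)
    for _ in range(steps):
        # On a real cluster each local_gradient call runs on its own GPU.
        grads = [local_gradient(w, b, s) for s in shards]
        gw, gb = all_reduce_mean(grads)
        # Identical averaged gradients keep all replicas identical.
        w -= lr * gw
        b -= lr * gb
    return w, b


if __name__ == "__main__":
    # Synthetic data from y = 3x + 1 (hypothetical example).
    data = [(x / 10, 3 * (x / 10) + 1) for x in range(40)]
    print(train_data_parallel(data, num_workers=4))  # close to (3.0, 1.0)
```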

How to Optimize a GPU Cluster?

Best Practices for Cluster Management

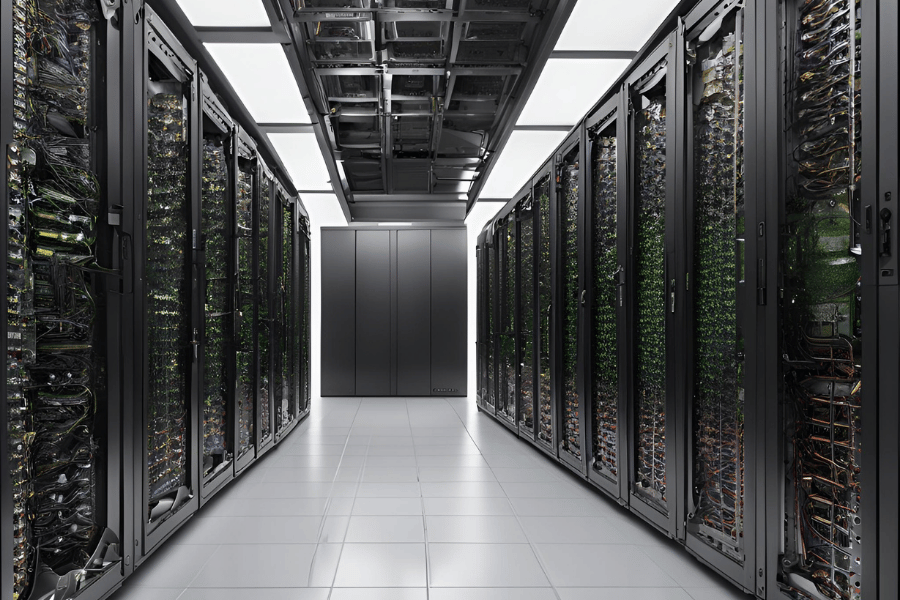

Effective management is key to getting the best performance and efficiency out of a GPU cluster. Some best practices follow:

- Resource Monitoring and Allocation: Use robust monitoring tools that track GPU utilization, memory consumption, and system health. They should also support reallocating resources dynamically so that bottlenecks do not form.

- Load Balancing: Distribute workloads evenly across the cluster so that some GPUs are not overworked while others sit idle. Advanced scheduling policies can take current load, resource availability, and other factors into account.

- Routine Maintenance: Regularly check hardware health, install software updates, and review the cooling systems. This keeps the cluster running at its best and reduces the risk of failures that cause downtime.

- Communication Overhead Optimization: Make data transfer efficient by reducing communication overhead during large-scale parallel processing across many nodes, and make full use of high-speed interconnects such as InfiniBand where they are available.

- Adaptive Scaling: Implementing a real-time demand-based auto-scaling policy helps control costs while ensuring enough resources are available during peak loads.

- Security Measures: Prevent unauthorized access with strong authentication and with encryption of data in transit and at rest, and run periodic security audits, since cyber threats keep evolving.

Following these guidelines helps organizations manage their GPU clusters more effectively, improving both performance and cost-effectiveness.
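The Load Balancing practice can be illustrated with a classic greedy heuristic, "longest processing time first": sort jobs by estimated run time, longest first, and always give the next job to the currently least-loaded GPU. The sketch below assumes run-time estimates are known in advance, which real schedulers can only approximate; the job names and durations are hypothetical.

```python
import heapq


def assign_jobs(job_hours: dict[str, float], num_gpus: int) -> dict[int, list[str]]:
    """Greedy longest-first assignment of jobs to GPUs, balancing total load."""
    # Min-heap of (accumulated hours, gpu_id): the root is the least-loaded GPU.
    heap = [(0.0, gpu) for gpu in range(num_gpus)]
    heapq.heapify(heap)
    assignment: dict[int, list[str]] = {gpu: [] for gpu in range(num_gpus)}
    for job, hours in sorted(job_hours.items(), key=lambda kv: kv[1], reverse=True):
        load, gpu = heapq.heappop(heap)
        assignment[gpu].append(job)
        heapq.heappush(heap, (load + hours, gpu))
    return assignment


def gpu_loads(job_hours: dict[str, float],
              assignment: dict[int, list[str]]) -> dict[int, float]:
    """Total estimated hours assigned to each GPU."""
    return {gpu: sum(job_hours[j] for j in jobs) for gpu, jobs in assignment.items()}


if __name__ == "__main__":
    jobs = {"a": 7, "b": 5, "c": 4, "d": 3, "e": 3, "f": 2}  # hypothetical hours
    plan = assign_jobs(jobs, num_gpus=3)
    print(plan)
    print(gpu_loads(jobs, plan))
```

Placing the longest jobs first matters: if they arrived last, one GPU could end up with a long job on top of an already full queue.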

Utilizing Cluster Management Software like Slurm and Open Source Options

Cluster management software is vital for monitoring and controlling GPUs and getting the most out of them. Slurm, an open-source workload manager for Linux, is scalable, fault-tolerant, and designed for clusters of all sizes. It provides a solid foundation for resource allocation, job scheduling, and monitoring, so that computational resources are used effectively. Because it supports both simple and complex scheduling policies, it suits applications ranging from high-performance computing (HPC) to artificial intelligence.

Other noteworthy open-source options include Apache Mesos, which abstracts CPU, memory, storage, and other compute resources so they can be shared efficiently among distributed applications and frameworks, and Kubernetes. Although Kubernetes is best known for container orchestration, it is increasingly used to manage GPU workloads in clustered environments. It automates deployment, scaling, and operations, which makes it a flexible management solution for many kinds of workloads.

Slurm, like these other open-source options, has extensive documentation and community support, which makes all of them convenient choices for organizations that need scalable, affordable cluster management.
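As an illustration of how a GPU job reaches Slurm, the sketch below builds and submits an `sbatch` command from Python. It uses standard sbatch options (`--job-name`, `--nodes`, `--ntasks-per-node`, `--gres=gpu:N`, `--time`, `--wrap`); the job name, resource amounts, and the `train.py` script are hypothetical, and many sites also require options such as `--partition` or `--account`, so adjust it to your cluster's configuration.

```python
import shlex
import subprocess


def build_sbatch_command(job_name: str, nodes: int, gpus_per_node: int,
                         time_limit: str, command: str) -> list[str]:
    """Return an sbatch argument list for a multi-node GPU job."""
    return [
        "sbatch",
        f"--job-name={job_name}",
        f"--nodes={nodes}",
        f"--ntasks-per-node={gpus_per_node}",  # one task per GPU
        f"--gres=gpu:{gpus_per_node}",         # GPUs requested on each node
        f"--time={time_limit}",                # wall-clock limit, e.g. "04:00:00"
        f"--wrap={command}",                   # shell command used as the job script
    ]


def submit(cmd: list[str]) -> str:
    # Requires Slurm's sbatch on PATH; it prints "Submitted batch job <id>".
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout.strip()


if __name__ == "__main__":
    cmd = build_sbatch_command("resnet-train", 2, 4, "04:00:00", "srun python train.py")
    print(shlex.join(cmd))  # inspect the command before calling submit(cmd)
```

Building the argument list separately from submitting it makes the request easy to log and review before it consumes cluster time.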

Optimizing GPU Driver and CUDA Configurations

Tuning GPU driver and CUDA configurations is essential for getting the best performance out of computational workloads. The first step is to make sure the latest GPU drivers are installed: manufacturers such as NVIDIA frequently release updates that bring performance improvements, bug fixes, and new features needed by modern applications.

Besides updating drivers, setting up the Compute Unified Device Architecture (CUDA) toolkit correctly is key to using the full computing capacity of a GPU cluster. The toolkit includes compilers, libraries, and optimized runtimes that can greatly speed up parallel applications by harnessing the GPUs for general-purpose computation. Specific settings, such as targeting the right compute capability for your GPUs when compiling and optimizing memory usage, need to be configured correctly for top performance.

Profiling tools such as NVIDIA Nsight and the CUDA profiler help fine-tune GPU and CUDA settings further by revealing where bottlenecks occur during execution and how to eliminate them. They report detailed information on kernel execution, memory transfer efficiency, hardware utilization, and more, which lets developers pinpoint the causes of poor performance and fix them.

In short, keeping drivers up to date, configuring the CUDA toolkit properly, and using profiling tools are the key steps toward better computational efficiency at the driver and CUDA configuration level.
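Part of keeping drivers and CUDA aligned is confirming that the installed driver is new enough for the CUDA toolkit you deploy. The sketch below reads the driver version with `nvidia-smi --query-gpu=driver_version --format=csv,noheader` and compares it numerically with a minimum. The example minimum, 525.60.13, is the Linux driver NVIDIA lists for CUDA 12.x minor-version compatibility; treat it as an assumption and check NVIDIA's CUDA compatibility documentation for the toolkit you actually use.

```python
import subprocess


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '535.104.05' into (535, 104, 5) so versions compare numerically."""
    return tuple(int(part) for part in text.strip().split("."))


def driver_meets_minimum(installed: str, minimum: str) -> bool:
    # Tuple comparison avoids string-ordering bugs: as strings,
    # '525.105.17' sorts before '525.60.13' even though it is newer.
    return parse_version(installed) >= parse_version(minimum)


def installed_driver_version() -> str:
    # Requires nvidia-smi; all GPUs in a node share one driver, so take line 1.
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    ).stdout
    return out.strip().splitlines()[0]


if __name__ == "__main__":
    MIN_DRIVER_CUDA_12 = "525.60.13"  # assumption: verify against NVIDIA's docs
    print(driver_meets_minimum("535.104.05", MIN_DRIVER_CUDA_12))  # example version string
```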

Challenges and Solutions in GPU Cluster Deployment

Handling Latency and Bandwidth Issues

Several methods help address latency and bandwidth limits when deploying a GPU cluster. First, use fast interconnects such as InfiniBand or NVLink to reduce delays and raise transfer speeds between nodes and GPUs. Second, compress data to reduce its size in transit and make better use of the available bandwidth. Third, optimize how data is distributed among processors to minimize cross-node communication during parallel tasks. Finally, use asynchronous communication so that data transfers overlap with computation, which hides much of the latency.
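Compression can take several forms; one widely studied option for gradients is top-k sparsification, where each worker sends only the k largest-magnitude values and their indices. The pure-Python sketch below shows the idea with hypothetical gradient values. It is illustrative only: practical schemes usually also keep the dropped residual locally and add it back on the next step (error feedback), which the `residual` helper hints at.

```python
import heapq


def topk_compress(grad: list[float], k: int) -> list[tuple[int, float]]:
    """Keep the k entries with the largest magnitude as (index, value) pairs."""
    largest = heapq.nlargest(k, enumerate(grad), key=lambda iv: abs(iv[1]))
    return sorted(largest)  # order by index for a deterministic wire format


def decompress(pairs: list[tuple[int, float]], length: int) -> list[float]:
    """Rebuild a dense gradient, filling dropped positions with zeros."""
    dense = [0.0] * length
    for index, value in pairs:
        dense[index] = value
    return dense


def residual(grad: list[float], restored: list[float]) -> list[float]:
    # The part that was not sent; error feedback would carry it forward.
    return [g - r for g, r in zip(grad, restored)]


if __name__ == "__main__":
    grad = [0.01, -0.9, 0.05, 0.3, -0.02, 0.0, 0.7, -0.04]  # hypothetical values
    pairs = topk_compress(grad, k=3)
    print(pairs)  # indices 1, 3 and 6 carry the largest magnitudes
    print(f"sent {len(pairs)} of {len(grad)} values")
```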

Managing Power Supply and Cooling in Large Clusters

Large GPU clusters require a careful strategy for power delivery and cooling. Reliable power means using backup power sources and UPS (Uninterruptible Power Supply) systems to ride out outages. Cooling calls for precision air conditioning together with liquid cooling to keep operating temperatures at their optimum and prevent overheating. Closely tracking power and thermal metrics makes it possible to identify and fix inefficiencies quickly. Enforcing power limits based on each component's Thermal Design Power (TDP) rating and favoring energy-efficient hardware further improve the stability and performance of large-scale GPU clusters.
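Tracking power and thermal metrics can start with nvidia-smi, which reports per-GPU temperature, power draw, and power limit via `--query-gpu=index,temperature.gpu,power.draw,power.limit --format=csv,noheader,nounits`. The sketch below parses that output and flags GPUs that run hotter than a threshold or close to their power limit. The 85 C and 95% thresholds are hypothetical and should come from your hardware vendor's guidance; in production you would poll on a schedule and export readings to a monitoring system.

```python
import subprocess

QUERY = ["nvidia-smi", "--query-gpu=index,temperature.gpu,power.draw,power.limit",
         "--format=csv,noheader,nounits"]


def parse_telemetry(csv_text: str) -> list[dict]:
    """Parse lines of the form '<index>, <temp C>, <power W>, <limit W>'."""
    readings = []
    for line in csv_text.strip().splitlines():
        parts = [p.strip() for p in line.split(",")]
        try:
            index, temp, draw, limit = (int(parts[0]), float(parts[1]),
                                        float(parts[2]), float(parts[3]))
        except (ValueError, IndexError):
            continue  # skip lines with '[N/A]' or missing fields
        readings.append({"index": index, "temp_c": temp,
                         "draw_w": draw, "limit_w": limit})
    return readings


def find_alerts(readings: list[dict], max_temp_c: float = 85.0,
                max_power_fraction: float = 0.95) -> list[str]:
    """Flag GPUs that run too hot or too close to their power limit."""
    alerts = []
    for r in readings:
        if r["temp_c"] > max_temp_c:
            alerts.append(f"GPU {r['index']}: {r['temp_c']:.0f} C exceeds {max_temp_c:.0f} C")
        if r["limit_w"] > 0 and r["draw_w"] / r["limit_w"] > max_power_fraction:
            alerts.append(f"GPU {r['index']}: {r['draw_w']:.0f} W of {r['limit_w']:.0f} W limit")
    return alerts


def check_local_gpus() -> list[str]:
    # Requires nvidia-smi on this node.
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return find_alerts(parse_telemetry(out))
```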

Navigating Scheduler and Workload Management

Using resources efficiently and meeting performance goals are crucial when working with large GPU clusters. Fair-share or priority-based scheduling algorithms distribute work evenly across the available resources and reduce bottlenecks within the cluster. Workload managers such as Slurm and orchestrators such as Kubernetes handle submitting, tracking, and executing jobs across a group of machines, allocating different types of resources concurrently and adapting dynamically to varied workloads. Predictive analytics can also be used to anticipate resource requirements, enabling early intervention and improving overall system efficiency.
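Fair-share scheduling can be sketched in a few lines. The example below uses a simplified form of the classic fair-share factor described in Slurm's multifactor priority documentation, F = 2^(-U/S), where U is a user's normalized usage and S their normalized share: users who have consumed less than their share get a higher factor and are scheduled first. It is a toy model under stated assumptions; the user names, shares, and usage figures are hypothetical, and real schedulers combine this factor with job age, size, and other terms and decay usage over time.

```python
def fair_share_factor(usage: float, share: float) -> float:
    """Classic fair-share factor: 1.0 with no usage, 0.5 when usage == share."""
    if share <= 0:
        return 0.0  # users without a share get the lowest priority
    return 2 ** (-usage / share)


def order_queue(pending: list[tuple[str, str]], shares: dict[str, float],
                usage: dict[str, float]) -> list[str]:
    """Order pending (job_id, user) pairs by the submitting user's factor."""
    total_shares = sum(shares.values())
    total_usage = sum(usage.values()) or 1.0  # avoid division by zero

    def factor(user: str) -> float:
        s = shares.get(user, 0.0) / total_shares  # normalized share
        u = usage.get(user, 0.0) / total_usage    # normalized usage
        return fair_share_factor(u, s)

    # sorted() is stable, so jobs from the same user keep submission order.
    ranked = sorted(pending, key=lambda job_user: factor(job_user[1]), reverse=True)
    return [job for job, _user in ranked]


if __name__ == "__main__":
    pending = [("job1", "alice"), ("job2", "bob"), ("job3", "alice")]
    # Hypothetical: equal shares, but alice has used 90 of the last 100 GPU-hours.
    print(order_queue(pending, {"alice": 1, "bob": 1}, {"alice": 90, "bob": 10}))
```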

Future Trends in GPU Cluster Technology

Emerging Nvidia Tesla and Tensor Core Innovations

Nvidia continues to push GPU technology forward with its Tesla and Tensor Core architectures. The A100 Tensor Core GPU delivers a substantial performance improvement over previous generations. It uses third-generation Tensor Cores to accelerate both AI training and inference, making it well suited to high-performance computing (HPC) and data center applications. The A100 also supports Multi-Instance GPU (MIG), which can partition a single GPU into up to seven isolated instances so that its resources are used more efficiently. These advances make it possible to build stronger, more scalable GPU clusters that increase computational power while keeping the flexibility to serve different use cases.

Impact of AI Infrastructure Development

The growth of AI infrastructure affects computational efficiency, scalability, and access to advanced analytics. Better infrastructure speeds up AI model training and inference deployment across industries. Improved scalability means resources can be expanded as needed to handle more data and processing. Lower barriers to entry let organizations with limited resources adopt these technologies, which fosters innovation. Moreover, more capable AI systems support larger-scale projects that can lead to breakthroughs in healthcare, finance, autonomous vehicles, and other fields.

Prospects for Research Clusters and Academic Applications

Research clusters and academic institutions benefit greatly from these advances in AI infrastructure. The performance and versatility of the Nvidia A100 Tensor Core GPU let researchers solve harder problems faster, and its Multi-Instance GPU (MIG) capability lets institutions run several projects at once by making better use of their resources. This makes collaborative research more effective and encourages breakthroughs in areas such as genomics, climate modeling, and computational physics. Furthermore, the scalability and robustness of today's AI infrastructure help academic institutions attract funding and top talent, pushing the frontiers of knowledge further.

Reference Sources

Frequently Asked Questions (FAQs)

Q: What is a GPU cluster and how is it used in advanced machine learning and deep learning?

A: A GPU cluster is a group of several nodes, each having one or more GPUs, that are set up to collaborate on high-performance computing tasks. These clusters work well for advanced machine learning and deep learning because such applications need large amounts of computing power to train huge neural networks and process immense datasets.

Q: How does a GPU cluster differ from a traditional CPU-based cluster?

A: Traditional CPU-based clusters use central processing units designed for general-purpose computation, whereas a GPU cluster relies on graphics processing units built for parallel computing, which makes it faster and more efficient for certain workloads. CPUs are good at handling many different kinds of tasks, while GPUs excel at the massive parallelism involved in machine learning and deep learning applications.

Q: What are the main components of GPU cluster hardware?

A: GPU cluster hardware typically consists of high-performance GPUs; compute nodes; interconnects, such as NVLink or PCIe for fast data transfer between devices within a node and networks such as InfiniBand between nodes; storage; and the surrounding data center infrastructure, including the cooling needed to safely dissipate the heat these machines generate. This architecture makes it easy to scale large computational tasks across multiple devices.

Q: Why is NVLink important in a GPU cluster?

A: NVLink, a technology developed by NVIDIA, provides high-speed connections between GPUs within a multi-GPU system. GPUs can exchange data over NVLink much faster than over traditional PCIe connections, which relieves the bottlenecks caused by slow transfers between processors. As a result, more of the combined processing power of all installed GPUs can be put to use, greatly increasing overall cluster performance.

Q: What role does a compute node play in a GPU cluster?

A: Compute nodes are the basic building blocks of a GPU cluster. Each node contains one or more CPUs, one or more GPUs, memory, and the storage needed for large-scale computation. Nodes work together to distribute workloads across many GPUs at once, while communicating efficiently with the other components involved in executing high-performance computing tasks.

Q: Are there various kinds of GPU clusters?

A: Yes. GPU clusters are often classified by the number of GPUs per node, the type of GPU (for example, specific NVIDIA models), and the architecture of the cluster itself: homogeneous (all nodes have similar hardware) or heterogeneous (different types of nodes and GPUs).

Q: Which applications benefit most from using GPU clusters?

A: Applications in fields such as artificial intelligence, machine learning, deep learning, computer vision, and data analytics greatly gain from the computational power offered by GPU clusters. Such applications demand significant parallel processing capabilities, thus making GPU clusters an ideal solution for improving performance and efficiency.

Q: Can I use Linux on a GPU cluster for machine learning applications?

A: Certainly. Linux is widely used as the operating system in GPU clusters thanks to its stability, flexibility, and support for high-performance computing. Many AI software frameworks are optimized for Linux, which makes it a preferred choice for managing and deploying machine learning (ML) and deep neural network (DNN) applications on GPU clusters.

Q: How does the form factor of GPUs influence GPU cluster design?

A: The form factor of GPUs affects many aspects of cluster hardware design, including cooling, power consumption, and space utilization. Taking form factors into account helps optimize deployment within data centers and ensures efficient thermal management, which is necessary for high performance across the cluster.

Q: What are some benefits associated with using a homogeneous cluster?

A: In a homogeneous cluster, all nodes have identical hardware configurations, which simplifies management tasks such as scheduling and optimizing computation jobs. This uniformity leads to more predictable performance, easier software deployment, and less complexity when maintaining the cluster.
