Demand for high-performance networking keeps growing. NVIDIA ConnectX-6 dual-port QSFP56 network adapters meet that demand with high speed, low latency, and the scalability that cloud infrastructures and modern data centers require. This post introduces the ConnectX-6 adapters by covering their main features, performance benefits, and real-world use cases. Whether you work as a network engineer or IT professional, or simply love tinkering with hardware, it should give you the information you need to understand what these adapters can do. Let’s look at the functionality that makes ConnectX-6 an important component of contemporary networking solutions.

What is the NVIDIA ConnectX-6 Adapter?

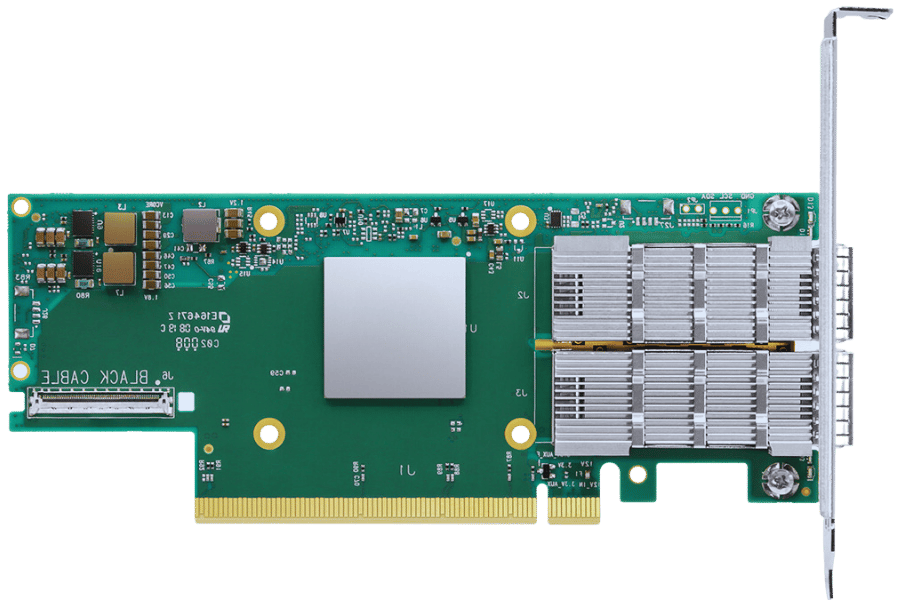

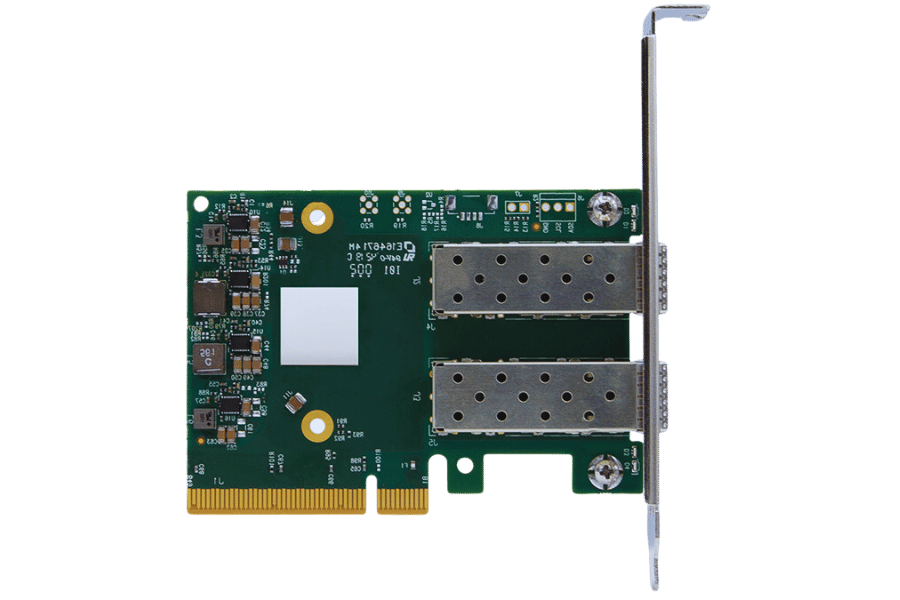

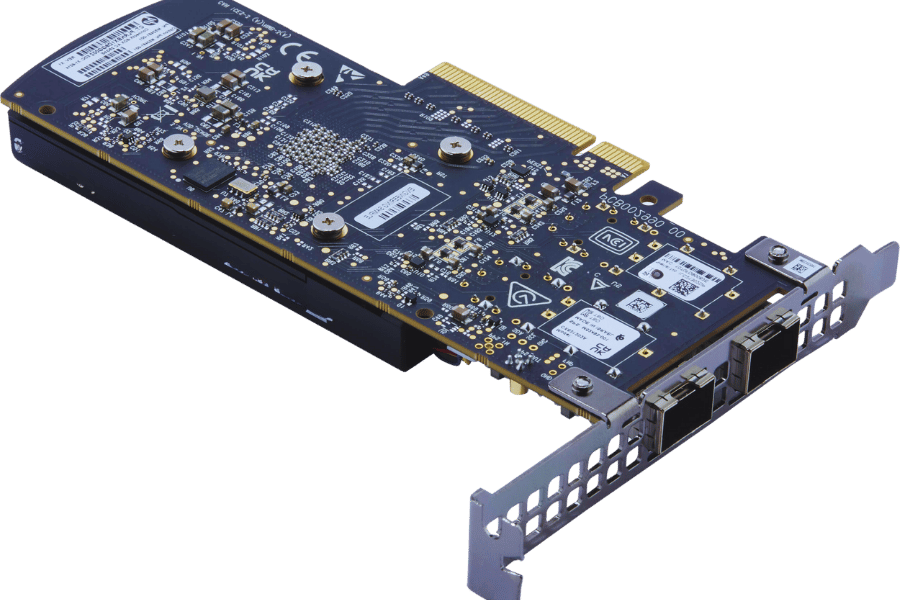

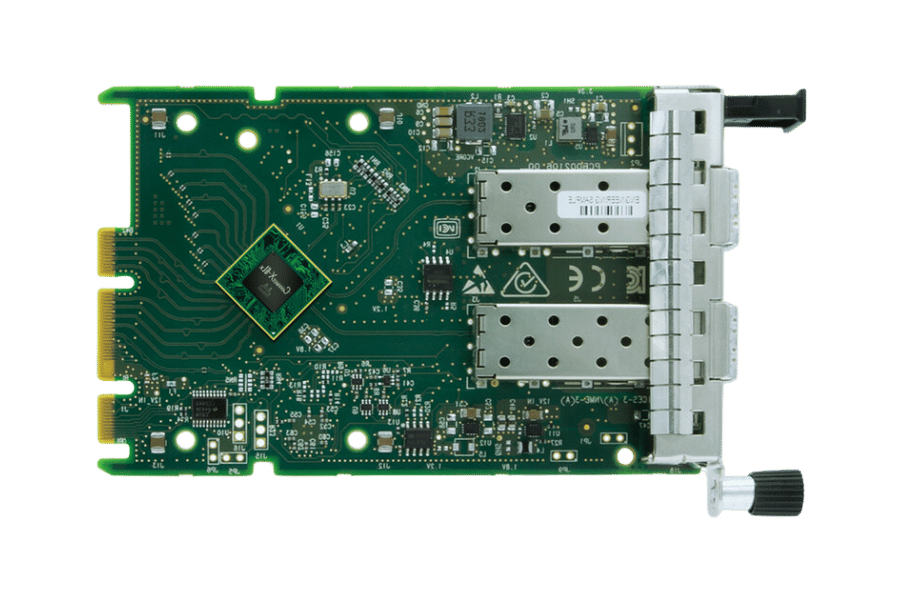

Understanding the NVIDIA ConnectX-6 Technology

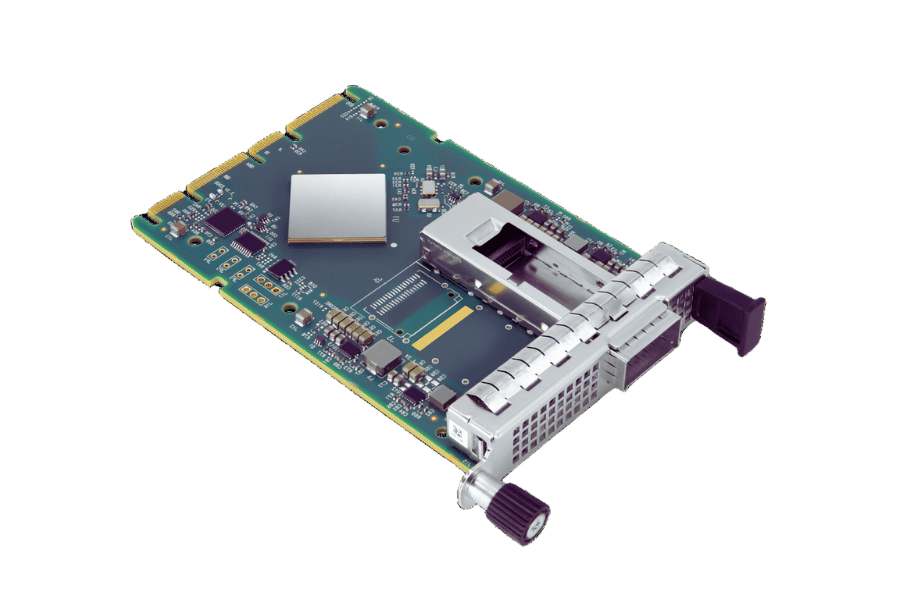

The NVIDIA ConnectX-6 is a high-speed network interface card (NIC) with dual-port QSFP56 connectivity that supports data rates of up to 200Gbps. It uses technologies such as GPUDirect and RDMA over Converged Ethernet (RoCE) to lower latency and reduce CPU overhead, which speeds up both computation and data transfer. Its hardware architecture includes built-in encryption and flexible programmability, so it can secure traffic across different applications while optimizing network performance. Because it scales connections at low latency, it is best suited to environments with heavy workloads, such as cloud service providers, HPC clusters, and other demanding data center settings.

Key Features of the ConnectX-6 Adapter

- High Capacity: The ConnectX-6 adapter handles up to 200Gbps of data, providing the fast transfers and low-latency connections that high-performance systems need.

- Advanced Congestion Control: It uses congestion control mechanisms that manage the flow of network traffic as load varies.

- Improved Security: Built-in encryption functions, including TLS offload and IPsec, help protect data in transit without slowing down performance.

- Programmability: Support for eBPF (extended Berkeley Packet Filter) and other network programming interfaces allows customization and optimization for specific workloads.

- GPU Acceleration: GPUDirect lets data move directly between GPUs and the network, so compute-heavy tasks run faster, with lower latency and less CPU overhead.

- RoCE Support: RDMA over Converged Ethernet provides the low-latency, high-volume data transfer that HPC and AI workloads need.

- Scalability: The card was designed with scalability in mind, which makes it suitable for demanding environments such as data centers and clouds, including deployments that also use 25GbE connections.

Comparison with Other Network Adapters

When comparing the ConnectX-6 adapter with other network adapters, we see some key differences:

- Data Rate: Most regular network adapters have data rates that range from 1Gbps to 100Gbps; however, the ConnectX-6 has a data rate of 200Gbps, which puts it ahead in terms of throughput capacity.

- Security Capabilities: Basic network adapters may lack integrated security features, but this is not the case for ConnectX-6; it has advanced encryption capabilities, such as IPsec and TLS offload, that ensure strong data protection without sacrificing performance.

- Congestion Control: ConnectX-6’s advanced congestion control mechanisms are better than those found on average adapters because they enable efficient and reliable transmission under varying network loads.

- Programmability: Unlike standard adapters that offer little or no programmability, the ConnectX-6 supports eBPF, among other interfaces, which allows optimization for specific use cases.

- GPU Integration: Ordinary adapters do not work directly with GPUs, whereas the ConnectX-6 does so through GPUDirect, which allows direct transfers between them, leading to better use of compute resources and lower latency for processing tasks.

- RoCE Support: RoCE gives the ConnectX-6 fast, low-latency, high-throughput data transfer capabilities that are usually absent in general-purpose network cards.

- Scalability: Many traditional adapters struggle to meet the demands of data centers and cloud services, let alone sophisticated HPC clusters. The ConnectX-6 was designed explicitly with these scenarios in mind, which makes it highly scalable across applications.

In conclusion, what sets the ConnectX-6 apart from ordinary adapters is its higher data rate, stronger security measures, more capable congestion control, programmability, GPU acceleration, RoCE support, and scalability.

How to Install and Configure a ConnectX-6 Adapter Card?

Step-by-Step Installation Guide for ConnectX-6 VPI Adapter Card

Step 1: Getting Ready

- Make sure you have the right tools like a screwdriver.

- Ensure that your system is switched off and disconnected from all power sources.

- Collect the ConnectX-6 VPI adapter card and any cables or parts that come with it.

Step 2: Opening the Chassis

- Take out the screws that hold the case of the system together.

- Open up the chassis carefully following instructions given by its manufacturer.

Step 3: Installing The Adapter Card

- Find an available PCIe x16 slot that supports the ConnectX-6 VPI card.

- Unscrew the cover of the selected slot and remove it.

- Line up the card’s edge connector with the PCI Express x16 slot, then push down firmly until the card is fully seated, taking care not to damage the contacts.

- Secure the card’s bracket to the chassis with the screw you removed from the slot cover.

Step 4: Connecting the Cables

- Connect the necessary cables to the adapter card, making sure they are firmly plugged in.

- Route the cables so that they do not block or disturb other components.

Step 5: Closing the Chassis and Powering Up

- Replace the cover of the case and fasten it with the screws you removed earlier.

- Reconnect the system to mains power and switch it on.

Step 6: Installing the Driver

- Boot the operating system and log in.

- Download the latest drivers for the ConnectX-6 VPI adapter card from the manufacturer’s site.

- Install the drivers by following the steps provided, then confirm that the installation succeeded.

Step 7: Configuration

- On your machine, open network settings.

- Depending on your network requirements, configure the network adapter, which may involve IP address assignment, among other parameters.

- Once done, save the changes, then check that the adapter works as expected.

Follow these steps carefully to get your ConnectX-6 VPI adapter card running smoothly with your network setup. Treat them as a guide to what has worked for us in the past rather than an exact procedure, and adapt them to your system where needed.
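The final check in Step 7 can be partly automated. The following is a minimal sketch, assuming a Linux host, where the kernel exposes each interface's state and negotiated speed under `/sys/class/net`. The function name `read_link_status` and the interface name `ens1f0` are placeholders chosen for illustration; they do not come from the adapter documentation.

```python
from pathlib import Path
from typing import Optional


def read_link_status(iface: str, sysfs_root: str = "/sys/class/net") -> dict:
    """Return the operational state and link speed (Mb/s) of one interface."""
    base = Path(sysfs_root) / iface

    def read(name: str) -> Optional[str]:
        try:
            return (base / name).read_text().strip()
        except OSError:
            # Attribute missing, or the kernel rejects the read (link down).
            return None

    raw_speed = read("speed")
    speed = None
    if raw_speed is not None and raw_speed.lstrip("-").isdigit():
        value = int(raw_speed)
        # The kernel reports -1 when the speed is unknown.
        speed = value if value > 0 else None
    return {"iface": iface, "state": read("operstate"), "speed_mbps": speed}


if __name__ == "__main__":
    # "ens1f0" is a placeholder; use the name your OS assigned to the port.
    print(read_link_status("ens1f0"))
```

On a correctly linked 200Gbps port you would expect a state of `up` and a speed of `200000`; a missing interface simply yields `None` values, which usually points back to the driver installation step.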

Configuring Dual-Port QSFP56 on ConnectX-6

To set up dual-port QSFP56 on a ConnectX-6 adapter, proceed as follows:

- Access Network Interface Settings: Open your system’s network interface settings to find the ConnectX-6 adapter.

- Select Port Allocation: Choose which of the two ports (Port 1 or Port 2) to configure for the QSFP56 setup.

- Set Up Network Profiles: Each port should be assigned a network profile. The profile can include IP addresses, subnet masks, and gateway settings required by your network infrastructure.

- Configure Link Speed and Duplex: Set link speed and duplex mode so that they correspond with QSFP56 standards (200Gbps full-duplex).

- Enable Advanced Features: If necessary, enable any advanced networking features like RDMA or RoCE, or Quality of Service (QoS) through the management software/interface provided by the driver.

- Verify Connectivity: Test that each port functions correctly and check that data transfer rates meet expected benchmarks.

- Save and Apply Settings: Save all configurations, then restart the system or the network interface so that the changes take effect.

These steps will help you configure dual-port QSFP56 on your ConnectX-6 adapter for better networking performance.
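Before applying per-port network profiles, it can help to sanity-check them. The sketch below uses only Python's standard `ipaddress` module. The `PortProfile` type, the `validate_profiles` helper, and the example addresses (taken from the documentation ranges 192.0.2.0/24 and 198.51.100.0/24) are illustrative assumptions, not part of any NVIDIA tool. It flags a gateway that lies outside the port's subnet, and two ports placed on overlapping subnets, which often calls for bonding or policy routing rather than two independent profiles.

```python
import ipaddress
from typing import List, NamedTuple


class PortProfile(NamedTuple):
    port: int       # 1 or 2 on a dual-port card
    address: str    # interface address in CIDR form, e.g. "192.0.2.10/24"
    gateway: str    # default gateway for this port


def validate_profiles(profiles: List[PortProfile]) -> List[str]:
    """Return human-readable problems; an empty list means none were found."""
    problems = []
    seen = []  # (port, network) pairs that were already checked
    for p in profiles:
        iface = ipaddress.ip_interface(p.address)
        gateway = ipaddress.ip_address(p.gateway)
        if gateway not in iface.network:
            problems.append(
                f"port {p.port}: gateway {gateway} is outside {iface.network}"
            )
        for other_port, other_net in seen:
            if iface.network.overlaps(other_net):
                problems.append(
                    f"ports {other_port} and {p.port}: subnets overlap ({iface.network})"
                )
        seen.append((p.port, iface.network))
    return problems


if __name__ == "__main__":
    profiles = [
        PortProfile(1, "192.0.2.10/24", "192.0.2.1"),
        PortProfile(2, "198.51.100.10/24", "198.51.100.1"),
    ]
    print(validate_profiles(profiles) or "profiles look consistent")
```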

Troubleshooting Common Installation Issues

Some common problems can occur when you install and configure the ConnectX-6 VPI adapter. Here are a few troubleshooting steps to help resolve these issues:

The system doesn’t recognize the Adapter:

- Check if the hardware is properly installed: Ensure that the adapter is seated firmly into the PCIe slot and that all power connectors are attached correctly.

- Confirm firmware and driver versions: Install the latest firmware and drivers that match your OS version and are compatible with the ConnectX-6 adapter, so that the card can perform optimally at its rated network speed (100Gb or 200Gb, depending on the model).

Connectivity problems between ports:

- Inspect cable connections: Ensure QSFP56 cables are securely connected to respective ports.

- Verify port configuration: You should review the network interface settings to confirm whether ports were configured correctly and ensure that network profiles were set accurately.

Link speed or performance below expectations:

- Check Speed and Duplex Settings: Confirm that the link speed is set to 200Gbps full-duplex in both the system settings and the network profiles.

- Enable Advanced Features: Depending on your application requirements and network architecture, enable advanced features such as RDMA, RoCE, or QoS.

Working through these issues step by step resolves most installation problems with ConnectX-6 adapters and helps ensure reliable connectivity across high-performance networks.
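The first troubleshooting question, whether the system sees the card at all, can be sketched in a few lines on Linux. This example assumes the standard sysfs layout under `/sys/bus/pci/devices` and relies on the PCI vendor ID `0x15b3`, which is assigned to Mellanox Technologies. The helper names and the default thresholds (16 GT/s, x16, in other words a PCIe 4.0 x16 slot) are assumptions for illustration; adjust them to your platform.

```python
from pathlib import Path
from typing import Optional

MELLANOX_VENDOR_ID = "0x15b3"  # PCI vendor ID assigned to Mellanox Technologies


def _read(path: Path) -> Optional[str]:
    """Return a sysfs attribute as stripped text, or None if unreadable."""
    try:
        return path.read_text().strip()
    except OSError:
        return None


def find_mellanox_devices(sysfs_root: str = "/sys/bus/pci/devices") -> list:
    """List PCI functions whose vendor ID matches Mellanox."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return []
    found = []
    for dev in sorted(root.iterdir()):
        vendor = _read(dev / "vendor")
        if vendor is None or vendor.lower() != MELLANOX_VENDOR_ID:
            continue
        found.append({
            "address": dev.name,
            "link_speed": _read(dev / "current_link_speed"),  # e.g. "16.0 GT/s PCIe"
            "link_width": _read(dev / "current_link_width"),  # e.g. "16"
        })
    return found


def link_below_expected(dev: dict, min_gts: float = 16.0, min_width: int = 16) -> bool:
    """True if the negotiated PCIe link is slower or narrower than expected.

    Unreadable or unparseable values are treated as a problem.
    """
    try:
        gts = float(dev["link_speed"].split()[0])
        width = int(dev["link_width"])
    except (AttributeError, IndexError, ValueError, TypeError):
        return True
    return gts < min_gts or width < min_width


if __name__ == "__main__":
    devices = find_mellanox_devices()
    if not devices:
        print("No Mellanox/NVIDIA network device found on the PCI bus")
    for d in devices:
        status = "CHECK SLOT" if link_below_expected(d) else "ok"
        print(d["address"], d["link_speed"], "x" + str(d["link_width"]), status)
```

An empty result suggests a seating or slot problem, while a device that negotiated a slower or narrower link than expected is a common reason for performance below expectations.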

What are the Performance Benefits of ConnectX-6 Dual-Port QSFP56?

High-Performance Ethernet Connectivity

The ConnectX-6 Dual-Port QSFP56 adapter offers clear performance advantages, built on low-latency, high-speed data transfer. These include:

- Throughput of 200Gbps: Through a dual-port configuration, this adapter delivers an aggregate bandwidth of up to 200Gbps, which suits demanding data center applications, while remaining compatible with lower-speed networks such as 25GbE.

- Scalability Improvement: The ConnectX-6 supports advanced networking protocols such as RDMA over Converged Ethernet (RoCE) and EVPN, enabling efficient scalability within data centers.

- Latency Reduction: The adapter’s design is optimized for low latency, which matters in areas like high-frequency trading and telecommunications, where every microsecond counts.

- Advanced Offloading: Offload features such as GPUDirect and NVMe over Fabrics reduce CPU load and improve overall system performance.

- Quality of Service (QoS): QoS capabilities make sure critical applications receive prioritized bandwidth, which promotes stable, predictable performance, including in environments cabled with passive DACs.

The ConnectX-6 Dual Port QSFP56 adapter brings excellent Ethernet connectivity; it is designed for modern enterprise environments and data centers with higher performance demands.

Offload Capabilities and Reduced CPU Overhead

The ConnectX-6 Dual-Port QSFP56 adapter uses offload technologies to lighten the CPU’s workload, improving the performance and efficiency of the system. The main offload capabilities are:

- RoCE (RDMA over Converged Ethernet): RoCE enables direct data transfer between the memory of networked systems, such as storage and server memory, without involving the CPU in each transfer. This frees CPU cycles, reduces latency, and increases throughput for data-driven applications like machine learning and AI.

- NVMe-oF (NVMe over Fabrics): This offload moves storage protocol handling off the host CPU, shortening storage access times by minimizing processing latency and maximizing IOPS.

- GPUDirect: By keeping the CPU out of the data path between GPUs and the network, it speeds up the transfers needed for compute-intensive tasks such as big data analytics and deep learning, where large amounts of data have to be processed quickly.

The card also supports TCP/UDP/IP stateless offloads, which shift protocol stack processing from the host CPU onto the network hardware. This results in lower processor utilization and better overall network performance. Together, these offloads make for more efficient data centers with higher performance levels.
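To give a feel for the per-packet work that a stateless offload takes away from the CPU, here is a small sketch of the Internet checksum (the one's-complement sum described in RFC 1071) that a host would otherwise compute in software for IPv4 headers. It illustrates the calculation only and is not a description of how the adapter implements it; the sample header bytes are an arbitrary example.

```python
def internet_checksum(data: bytes) -> int:
    """One's-complement checksum over 16-bit big-endian words (RFC 1071)."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]
    # Fold any carries above 16 bits back into the low 16 bits.
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


if __name__ == "__main__":
    # 20-byte IPv4 header with the checksum field (bytes 10-11) set to zero.
    header = bytes.fromhex("450000730000400040110000c0a80001c0a800c7")
    checksum = internet_checksum(header)
    print(hex(checksum))  # 0xb861

    # A receiver verifies by summing the header including the checksum:
    # a valid header yields zero.
    filled = header[:10] + checksum.to_bytes(2, "big") + header[12:]
    print(internet_checksum(filled))  # 0
```

Doing this for every packet at 200Gbps line rate would consume a noticeable share of CPU time, which is why moving it onto the network hardware lowers processor utilization.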

Impact on Data Center Efficiency

The advanced offload capabilities of the ConnectX-6 Dual-Port QSFP56 adapter significantly improve data center efficiency. By reducing CPU overhead and increasing data transfer speeds, these offloads make the processing of large datasets more efficient. For instance, RDMA over Converged Ethernet (RoCE) reduces latency and maximizes throughput by enabling the direct memory access that fast data processing applications like AI and machine learning need. NVMe over Fabrics (NVMe-oF) accelerates I/O operations by bypassing host CPUs, which leads to faster storage access and higher IOPS. GPUDirect also helps optimize data transfers between GPUs and network adapters, which compute-intensive operations require.

These improvements can, in turn, reduce power consumption and operational costs in the data center. The energy savings come from lower CPU utilization, which also reduces heat stress on server hardware and can help extend its lifespan. Overall efficiency and reliability both improve, making the ConnectX-6 Dual-Port QSFP56 adapter a valuable component for modern high-performance computing facilities.

How to Choose the Right ConnectX-6 Adapter for Your Needs?

Comparing ConnectX-6 DX and ConnectX-6 VPI

To choose between the ConnectX-6 DX and the ConnectX-6 VPI, look at what each is best suited for. The ConnectX-6 DX centers on security: it is built with features such as a hardware root of trust and a secure boot process that help prevent firmware attacks. It also supports IPsec and TLS cryptographic acceleration, making it well suited to environments where data security cannot be compromised.

The ConnectX-6 VPI, on the other hand, provides wider connectivity options because it supports both InfiniBand and Ethernet interfaces. This dual-protocol ability gives organizations flexibility when designing their network architectures, especially in high-performance computing (HPC) or data centers where either protocol may be needed. The VPI version also supports RDMA over Converged Ethernet (RoCE), which makes data transfer more efficient and lowers latency, both critical for applications that require high throughput and low response times.

Base the decision between the ConnectX-6 DX and the ConnectX-6 VPI on the specific requirements of your data center. If you want maximum security, go for the ConnectX-6 DX; if connectivity flexibility and fast data transfer matter most, choose the ConnectX-6 VPI instead.

Optical vs. Ethernet Ports: Which is Better?

The choice between optical and copper Ethernet ports is determined by specific bandwidth, distance, and cost requirements.

Bandwidth and Speed: Optical ports usually offer more bandwidth and speed than copper Ethernet ports. Optical fiber can carry data at rates of up to several terabits per second, which makes it a good fit for high-performance applications like data centers and large enterprise networks.

Distance: Direct-attach copper twinax cables can carry data over short distances, but unlike optical fiber they cannot carry signals over long distances without signal loss. Fiber is therefore the appropriate choice for campus or inter-building connections that cover significant lengths.

Cost and Installation: For shorter distances and day-to-day networking, copper Ethernet cabling is cheaper because its installation costs are lower than those of fiber optics, which is more expensive even though it performs better. Copper Ethernet ports and cabling are also easier to manage.

Interference: Optical ports are not affected by electromagnetic interference, so they should be used in places with a lot of electronic noise. Copper cables are susceptible to such interference, although shielded versions help reduce the effect.

In short, choose optical ports for high bandwidth requirements, long-range links, or areas with strong electromagnetic fields that could interfere with signal transmission. Conversely, if the link covers only short distances and installation cost matters, a copper Ethernet port suffices for general network usage.

Understanding PCIe 4.0 x16 Compatibility

PCI Express (Peripheral Component Interconnect Express, PCIe) 4.0 is the fourth generation of the interface and a significant improvement over earlier generations. It provides a data transfer rate of 16 GT/s per lane, double that of PCIe 3.0, which amounts to roughly 32 GB/s of bandwidth in each direction over an x16 configuration. This makes it well suited to applications that require high bandwidth, such as gaming, 3D rendering, and data analysis.

PCIe 4.0 x16 compatibility depends on the motherboard chipset and the CPU. Most newer boards, especially those based on AMD’s X570 and B550 chipsets, support PCIe 4.0 natively. On Intel platforms, support starts with Z490 boards, but only when paired with Rocket Lake-S series CPUs.

PCIe 4.0 is also backward compatible: PCIe 4.0 devices and slots work with older versions such as PCIe 3.0 or PCIe 2.0, although at the reduced speeds set by the older standard. This interoperability lets users upgrade their systems in stages, for example by adding a PCIe 4.0 card to an older motherboard or putting older PCIe cards into a PCIe 4.0 system.
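The bandwidth figures above explain why the slot generation matters for a 200Gbps adapter, and the arithmetic is easy to work through. The sketch below is a rough estimate: it accounts for the 128b/130b line encoding used by PCIe 3.0 and 4.0 but ignores packet and protocol overhead, so real-world throughput is somewhat lower. The function name `pcie_bandwidth_gbps` is an illustrative helper.

```python
def pcie_bandwidth_gbps(gt_per_s: float, lanes: int, encoding: float = 128 / 130) -> float:
    """Approximate one-direction PCIe bandwidth in Gb/s after line encoding."""
    return gt_per_s * lanes * encoding


if __name__ == "__main__":
    port_speed_gbps = 200  # one ConnectX-6 port at full rate
    for name, rate in (("PCIe 3.0 x16", 8.0), ("PCIe 4.0 x16", 16.0)):
        gbps = pcie_bandwidth_gbps(rate, 16)
        verdict = "enough" if gbps >= port_speed_gbps else "not enough"
        print(f"{name}: {gbps:.0f} Gb/s ({gbps / 8:.1f} GB/s), {verdict} for {port_speed_gbps} Gb/s")
```

Under these assumptions a PCIe 3.0 x16 slot tops out at about 126 Gb/s, below a single 200Gbps port, while PCIe 4.0 x16 offers about 252 Gb/s. In an older slot the card still works thanks to backward compatibility, but the host interface becomes the bottleneck.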

What Are the Use Cases for NVIDIA ConnectX-6 in Modern Data Centers?

Enhancing Machine Learning Applications

NVIDIA ConnectX-6 adapters improve machine learning applications by offering strong scalability, low latency, and high throughput. With bandwidth of up to 200Gb/s, they quickly move the large datasets needed to train complex machine learning models. RDMA (Remote Direct Memory Access) and GPUDirect reduce CPU overhead by allowing direct data transfers between GPUs, which is essential for accelerating AI workflows and reducing training time. In addition, virtualization capabilities help ensure that resources are used efficiently, so that many different ML jobs can run simultaneously on one piece of hardware, maximizing data center utilization.
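As a back-of-the-envelope illustration of what link speed means for moving training data, the sketch below estimates the ideal transfer time for a dataset at different line rates. It is deliberately simplified: the `efficiency` parameter is a hypothetical knob for protocol overhead and defaults to a perfectly utilized link, and the 1 TB dataset size is an arbitrary example.

```python
def transfer_seconds(dataset_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Ideal time in seconds to move a dataset over a link of the given speed."""
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be positive and efficiency in (0, 1]")
    bits = dataset_bytes * 8
    return bits / (link_gbps * 1e9 * efficiency)


if __name__ == "__main__":
    one_terabyte = 1e12  # bytes
    for gbps in (25, 100, 200):
        print(f"{gbps:>3} Gb/s: {transfer_seconds(one_terabyte, gbps):.0f} s")
```

Under these idealized assumptions, 1 TB takes 320 seconds at 25 Gb/s, 80 seconds at 100 Gb/s, and 40 seconds at 200 Gb/s.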

Supporting High-Frequency Trading and Financial Services

Ultra-low latency and high throughput are the key features that make NVIDIA ConnectX-6 adapters useful in high-frequency trading (HFT) and financial services. The RoCE (RDMA over Converged Ethernet) support in the adapters sharply decreases data transfer latency, which accelerates trade execution. This is crucial for HFT, where profit can be decided by nanoseconds. Additionally, the hardware offloads and accelerated data path of ConnectX-6 take packet processing off the CPU, enabling servers to handle more transactions per second. The adapters are also flexible enough to accommodate complex financial algorithms and support real-time data analytics, helping financial institutions execute trades quickly and reliably.

Optimizing Cloud and Enterprise Networking

NVIDIA ConnectX-6 adapters are central to optimizing cloud and enterprise networking: they increase data throughput and decrease latency while keeping resource use efficient. Advanced features of the adapter, such as RoCE (RDMA over Converged Ethernet) and GPUDirect RDMA, are important for providing high-speed, low-latency data transfer in cloud environments. Virtual machines can communicate directly with storage systems, which improves performance for distributed applications. Moreover, the large number of virtual ports supported per adapter, coupled with hardware offloads for VXLAN and other virtualization-centric protocols, leads to better resource management and isolation. Service providers can therefore attain higher density with lower overhead, in line with the dynamic needs of enterprise networks. This combination of speed, efficiency, and advanced networking capabilities makes the NVIDIA ConnectX-6 adapter a strong foundation for cloud or enterprise network infrastructure optimization.

Frequently Asked Questions (FAQs)

Q: What is NVIDIA ConnectX-6 VPI?

A: The NVIDIA ConnectX-6 VPI is a network interface card that can connect to both InfiniBand and Ethernet networks. Its flexibility and its dual-port QSFP56 connectivity at 100GbE make it suitable for data centers and cloud networks.

Q: What features does the NVIDIA Mellanox ConnectX-6 offer?

A: Features of the NVIDIA Mellanox ConnectX-6 include PCIe 4.0 x16 support, low latency, and high throughput at 100GbE, along with RDMA over Converged Ethernet (RoCE) and advanced security capabilities.

Q: What bracket sizes are available in the ConnectX Series?

A: Tall brackets and short brackets are available for the ConnectX Series cards, including the NVIDIA ConnectX-6, so that they can fit into different servers.

Q: What contributions does the ConnectX-6 DX EN Adapter Card make to data centers?

A: This card improves data centers through high speed, enhanced security functions, and smart network interface capabilities, including RoCE support and reduced latency.

Q: Why is NVIDIA Mellanox ConnectX-6 appropriate for 100GbE dual-port QSFP56 interconnect?

A: Support for 100GbE dual-port QSFP56 makes the NVIDIA Mellanox ConnectX-6 an ideal solution for fast interconnects, because it delivers high performance while remaining adaptable in how it is configured within networks.

Q: What can take advantage of ConnectX®-6 DX capabilities?

A: Workloads that require high throughput and low latency, such as those in data centers, high-performance computing, and financial services, benefit a lot from what ConnectX®-6 DX can offer.

Q: Can ConnectX-6 work with PCIe4.0 x16 slots?

A: Yes. It is compatible with PCIe 4.0 x16 slots, which allows faster transfers between the adapter and the host and improves overall network performance.

Q: What type of NIC is NVIDIA® ConnectX-6 VPI?

A: It is an intelligent two-port InfiniBand or Ethernet network interface card designed by NVIDIA that supports different networking needs.

Q: Does NVIDIA Mellanox ConnectX-6 have any security features?

A: Yes. Hardware-based encryption and isolation are included in its design to help keep data secure at all times.

Q: Where does ConnectX®-6 fit among FS.com United Kingdom’s data center technology offerings?

A: The ConnectX®-6 by NVIDIA Mellanox seamlessly integrates into various data center technologies provided by FS.com United Kingdom, thus improving network infrastructure through superior performance, flexibility, and security.

Related Products:

- NVIDIA (Mellanox) MCX653105A-ECAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR100/EDR/100G, Single-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket: $965.00

- NVIDIA (Mellanox) MCX653105A-HDAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR/200GbE, Single-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket: $1400.00

- NVIDIA (Mellanox) MCX653106A-ECAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR100/EDR/100G, Dual-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket: $828.00

- NVIDIA (Mellanox) MCX653106A-HDAT-SP ConnectX-6 InfiniBand/VPI Adapter Card, HDR/200GbE, Dual-Port QSFP56, PCIe3.0/4.0 x16, Tall Bracket: $1600.00