- Catherine

Harper Ross

Answered on 8:46 am

Unified Fabric Manager (UFM) is NVIDIA's management platform for InfiniBand fabrics, widely used in high-performance computing to monitor, manage, and optimize the network. There is no single recommended cluster size for UFM; whether it is worthwhile depends on several factors:

- Management requirements: As a cluster grows, manual management and maintenance become increasingly difficult. UFM automates many routine operations and provides in-depth monitoring and analysis, which improves operational efficiency. Smaller clusters can also benefit from its management and tuning features.

- Economic considerations: For a small cluster, the cost of a full management platform like UFM may not be justified. For medium or larger clusters (roughly 50-100 nodes or more), the investment is more likely to pay off, because UFM can save substantial management and maintenance labor.

- Performance requirements: UFM can help optimize network communication, which can improve application performance. If your applications have demanding performance requirements, UFM may be worthwhile regardless of cluster size.

- Error diagnosis and firmware upgrades: In large clusters, diagnosing errors and upgrading firmware can be complicated. UFM provides automated tools that help diagnose and fix problems and handle firmware upgrades, which is especially valuable at scale.
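
To make the economic point concrete, here is a rough break-even sketch. It compares a yearly platform cost against the admin labor saved per node. Every figure and function name is a hypothetical placeholder chosen for illustration, not UFM pricing or measured savings; substitute your own quotes and staffing estimates.

```python
import math


def annual_labor_saving(nodes, hours_per_node_per_year, saved_fraction, hourly_rate):
    """Estimated yearly admin labor cost avoided by automating fabric management."""
    return nodes * hours_per_node_per_year * saved_fraction * hourly_rate


def break_even_nodes(annual_platform_cost, hours_per_node_per_year, saved_fraction, hourly_rate):
    """Smallest node count at which yearly labor savings cover the yearly platform cost."""
    saving_per_node = hours_per_node_per_year * saved_fraction * hourly_rate
    if saving_per_node <= 0:
        raise ValueError("per-node saving must be positive")
    return math.ceil(annual_platform_cost / saving_per_node)


# Hypothetical inputs (assumptions, not vendor data):
#   20,000 per year for the platform, 10 admin hours per node per year,
#   half of that time saved by automation, 60 per admin hour.
print(break_even_nodes(20_000, 10, 0.5, 60))       # → 67
print(annual_labor_saving(100, 10, 0.5, 60))       # → 30000.0
```

With these made-up inputs the break-even lands inside the 50-100 node range mentioned above, but the result is very sensitive to the assumed labor hours and platform cost, and it ignores the performance and diagnostic benefits.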

People Also Ask

Related Articles

800G SR8 and 400G SR4 Optical Transceiver Modules Compatibility and Interconnection Test Report

Version Change Log Writer V0 Sample Test Cassie Test Purpose Test Objects:800G OSFP SR8/400G OSFP SR4/400G Q112 SR4. By conducting corresponding tests, the test parameters meet the relevant industry standards,

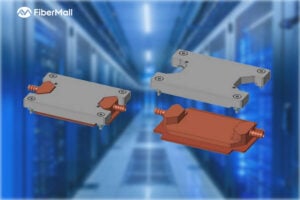

Key Design Constraints for Stack-OSFP Optical Transceiver Cold Plate Liquid Cooling

Foreword The data center industry has already adopted 800G/1.6T optical modules on a large scale, and the demand for cold plate liquid cooling of optical modules has increased significantly. To

NVIDIA DGX Spark Quick Start Guide: Your Personal AI Supercomputer on the Desk

NVIDIA DGX Spark — the world’s smallest AI supercomputer powered by the NVIDIA GB10 Grace Blackwell Superchip — brings data-center-class AI performance to your desktop. With up to 1 PFLOP of FP4 AI

RoCEv2 Explained: The Ultimate Guide to Low-Latency, High-Throughput Networking in AI Data Centers

In the fast-evolving world of AI training, high-performance computing (HPC), and cloud infrastructure, network performance is no longer just a supporting role—it’s the bottleneck breaker. RoCEv2 (RDMA over Converged Ethernet version

Comprehensive Guide to AI Server Liquid Cooling Cold Plate Development, Manufacturing, Assembly, and Testing

In the rapidly evolving world of AI servers and high-performance computing, effective thermal management is critical. Liquid cooling cold plates have emerged as a superior solution for dissipating heat from high-power processors

Unveiling Google’s TPU Architecture: OCS Optical Circuit Switching – The Evolution Engine from 4x4x4 Cube to 9216-Chip Ironwood

What makes Google’s TPU clusters stand out in the AI supercomputing race? How has the combination of 3D Torus topology and OCS (Optical Circuit Switching) technology enabled massive scaling while

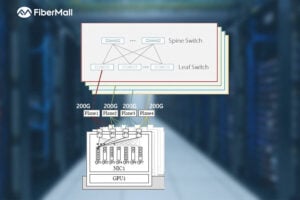

Dual-Plane and Multi-Plane Networking in AI Computing Centers

In the previous article, we discussed the differences between Scale-Out and Scale-Up. Scale-Up refers to vertical scaling by increasing the number of GPU/NPU cards within a single node to enhance individual node
