- Catherine

FiberMall

Answered on 10:18 am

Yes. You can plug an NDR cable into one port and an NDR200 splitter cable into another port on the same module of an NDR switch. NVIDIA's port-split technology divides one quad-lane NDR port (400Gb/s) into two dual-lane NDR200 ports, each running at 200Gb/s. The split port therefore gives you two NDR200 ports within a single OSFP cage, which raises port density and lowers network design cost.
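To make the lane arithmetic concrete, here is a minimal Python sketch of the mixed configuration described above. It is only an illustration, not NVIDIA tooling: the `Port` class, the `split_port` helper, and the port names `p1` and `p2` are hypothetical, and the 100 Gb/s per-lane rate is inferred from a dual-lane port carrying 200Gb/s.

```python
from __future__ import annotations
from dataclasses import dataclass

# Assumption: 100 Gb/s per lane, inferred from dual-lane = 200 Gb/s.
LANE_GBPS = 100


@dataclass
class Port:
    """Hypothetical model of a switch port as a name plus a lane count."""
    name: str
    lanes: int

    @property
    def gbps(self) -> int:
        return self.lanes * LANE_GBPS


def split_port(port: Port) -> list[Port]:
    """Split one quad-lane (NDR) port into two dual-lane (NDR200) ports."""
    if port.lanes != 4:
        raise ValueError("only quad-lane ports can be split in this sketch")
    return [Port(f"{port.name}/{i}", 2) for i in (1, 2)]


# One module with two NDR ports: p1 stays unsplit, p2 takes the splitter cable.
module = [Port("p1", 4), Port("p2", 4)]
mixed = [module[0]] + split_port(module[1])

speeds = [(p.name, p.gbps) for p in mixed]
total_gbps = sum(p.gbps for p in mixed)
print(speeds, total_gbps)
```

In this sketch the module ends up with one 400Gb/s port and two 200Gb/s ports, and the total bandwidth is unchanged by the split.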

People Also Ask

Related Articles

800G SR8 and 400G SR4 Optical Transceiver Modules Compatibility and Interconnection Test Report

Version Change Log Writer V0 Sample Test Cassie Test Purpose Test Objects:800G OSFP SR8/400G OSFP SR4/400G Q112 SR4. By conducting corresponding tests, the test parameters meet the relevant industry standards,

Unveiling Google’s TPU Architecture: OCS Optical Circuit Switching – The Evolution Engine from 4x4x4 Cube to 9216-Chip Ironwood

What makes Google’s TPU clusters stand out in the AI supercomputing race? How has the combination of 3D Torus topology and OCS (Optical Circuit Switching) technology enabled massive scaling while

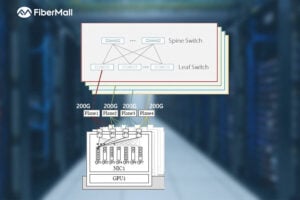

Dual-Plane and Multi-Plane Networking in AI Computing Centers

In the previous article, we discussed the differences between Scale-Out and Scale-Up. Scale-Up refers to vertical scaling by increasing the number of GPU/NPU cards within a single node to enhance individual node

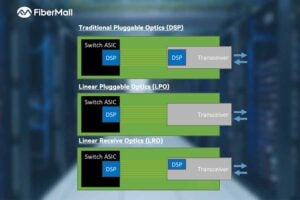

OCP 2025: FiberMall Showcases Advances in 1.6T and Higher DSP, LPO/LRO, and CPO Technologies

The rapid advancement of artificial intelligence (AI) and machine learning is driving an urgent demand for higher bandwidth in data centers. At OCP 2025, FiberMall delivered multiple presentations highlighting its

What is a Silicon Photonics Optical Module?

In the rapidly evolving world of data communication and high-performance computing, silicon photonics optical modules are emerging as a groundbreaking technology. Combining the maturity of silicon semiconductor processes with advanced photonics,

Key Design Principles for AI Clusters: Scale, Efficiency, and Flexibility

In the era of trillion-parameter AI models, building high-performance AI clusters has become a core competitive advantage for cloud providers and AI enterprises. This article deeply analyzes the unique network

Google TPU vs NVIDIA GPU: The Ultimate Showdown in AI Hardware

In the world of AI acceleration, the battle between Google’s Tensor Processing Unit (TPU) and NVIDIA’s GPU is far more than a spec-sheet war — it’s a philosophical clash between custom-designed ASIC (Application-Specific