Classification and comparison of mainstream DCN SDN solutions

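| | HW Network | Cisco | ZTE | Juniper | Arista / HP (resale) | Brocade | HW IT | Nokia | VMware NSX |
|---|---|---|---|---|---|---|---|---|---|
| Category of manufacturer | Switch manufacturer | Switch manufacturer | Switch manufacturer | Switch manufacturer | Switch manufacturer | Switch manufacturer | Software manufacturer | Software manufacturer | Software manufacturer |
| Category of SDN solution | Switch-based overlay (pure hard overlay or hybrid overlay) | Switch-based overlay (pure hard overlay or hybrid overlay) | Switch-based overlay (pure hard overlay or hybrid overlay) | Switch-based overlay (pure hard overlay or hybrid overlay) | Switch-based overlay (pure hard overlay or hybrid overlay) | Switch-based overlay (pure hard overlay or hybrid overlay) | Server-based overlay (pure soft overlay) | Server-based overlay (pure soft overlay) | Server-based overlay (pure soft overlay) |
| SDN products | AC | ACI | ZENIC | Juniper Contrail or VMware NSX | Nokia Nuage VSP or VMware NSX | VMware NSX | Neutron+ | Nuage VSP | NSX |
| Forwarder products | CE series switches, VSG/Eudemon series firewalls | Nexus series switches | Hardware: M6000, BigMatrix 9900, 5960 TOR; software: ZXDVR, ZXDVS, vFW | QFX series switches, MX series routers | 75/73/72/71/70 series switches | VDX series switches | Software: EVS (Enhanced OVS) | Software: VRS; hardware: VSG 7850 | Software: NSX suite |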

Note:

- Cisco also offers VTS, a server-based overlay solution, but it is not Cisco's main offering. It is mainly used in legacy scenarios (non-Nexus 9K).

- Although Contrail, VSP, and NSX are primarily software overlays, they can also manage some hardware switches, with limited management functions that vary by manufacturer.

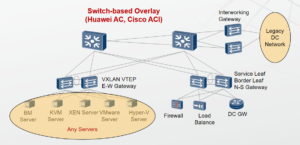

Switch-based Overlay Solution

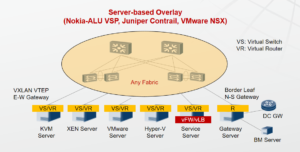

Server-based Overlay Solution

Comparison of Two Overlay Schemes

Switch-based Overlay Solution

- All Overlay functions are on the switch

- Easier to support various hypervisors and physical servers

- VXLAN forwarding at wire speed with low latency; supports SR-IOV-based NFV applications

- The O&M tools and protocols of physical switches are mature and standardized, and traffic mirroring and monitoring work well

- The server and the network are operated and maintained separately, which makes network faults easier to locate

- More suitable for newly built or partially reused scenarios (Note: an interworking gateway can be used to access traditional VLAN networks)

Server-based Overlay Solution

- All Overlay functions are on the server

- Relies on the hypervisor, so it is difficult to support all hypervisors and physical servers

- Enabling VXLAN on the vSwitch greatly degrades forwarding performance

- vSwitch O&M is less mature; few tools or protocols comparable to those of physical devices (SNMP, OAM, BFD, xFlow, mirroring) are available

- Network faults are difficult to locate because the server and the network are coupled

- More suitable for full-reuse or OTT (Over The Top) scenarios, where the data center fabric is owned by a third party such as AWS
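The VXLAN encapsulation that both schemes perform, on the switch in one case and on the vSwitch in the other, can be sketched as follows. The header layout and UDP port follow RFC 7348; the function names are illustrative only, and the overhead figure assumes an IPv4 underlay with no outer VLAN tag.

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned destination port (RFC 7348)
VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field carries a valid value


def build_vxlan_header(vni: int) -> bytes:
    """Return the 8-byte VXLAN header for a 24-bit VNI (illustrative helper)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) followed by 24 reserved bits.
    # Word 2: VNI (24 bits) followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header (illustrative helper)."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag is not set")
    return vni_word >> 8


# Bytes added to every tenant frame (assumption: IPv4 underlay, untagged).
OUTER_ETHERNET = 14
OUTER_IPV4 = 20
OUTER_UDP = 8
VXLAN_HEADER = 8
ENCAP_OVERHEAD = OUTER_ETHERNET + OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER

if __name__ == "__main__":
    hdr = build_vxlan_header(5000)
    print(hdr.hex(), parse_vni(hdr), ENCAP_OVERHEAD)
```

In the switch-based scheme this per-packet work is done in switch hardware, while in the server-based scheme it consumes host CPU cycles unless it is offloaded or accelerated, which is one reason the two schemes differ in forwarding performance.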

Comparisons between the HW Soft SDN/Hard SDN

HW’s two SDN Region control planes:

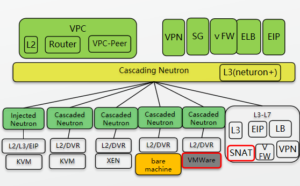

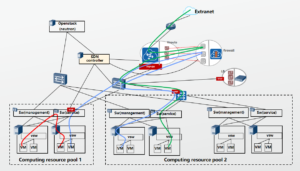

Soft SDN Region Control Plane

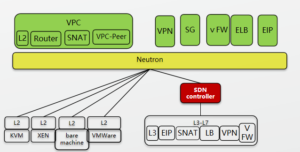

Hard SDN Region Control Plane

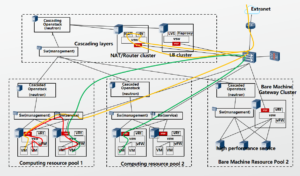

HW Soft SDN (Model)

HW Hard SDN (Model)

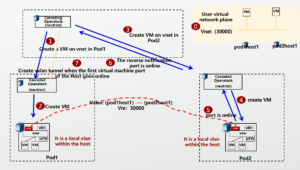

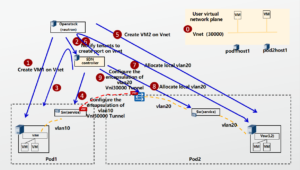

Soft SDN (Control Plane Process)

Hard SDN (Control Plane Process)

Soft SDN (Service Traffic)

Hard SDN (Service Traffic)

Comparison and summary of HW’s two SDNs

Conclusion:

Both soft SDN and hard SDN provide the basic network capabilities that cloud data centers need, and both support Layer 2 interworking across DCs. They differ as follows:

Soft SDN:

- Advantages

  - Decoupled from hardware; no VXLAN switch is required. Router, LB, and vFW network functions run as software clusters.

  - Multi-level OpenStack architecture that is flexible and easy to expand, suitable for large-scale scenarios.

- Disadvantages

  - In small-scale scenarios, the management nodes occupy more x86 devices.

Hard SDN:

- Advantages

  - VXLAN is encapsulated and decapsulated on hardware network devices, giving high forwarding performance (high throughput and low latency).

- Disadvantages

  - Coupled with network hardware: it relies on hardware devices that support VXLAN and is currently commercialized only with HW hardware devices.

  - Suited to small-scale scenarios (2000 nodes).

Note:

Soft SDN forwarding performance is lower than that of hard SDN. In practice, improving virtual switch performance (for example with DPDK) and using bare metal services can bring it level with hard SDN.

HW Soft SDN has been deployed commercially at large scale on public cloud and can support up to 100,000 hosts and one million tenants.
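The selection criteria summarized above can be expressed as a small heuristic. This is a minimal sketch, not an HW tool: the `Deployment` fields and the `suggest_sdn` function are hypothetical names, and the only thresholds used are the two scale figures quoted in this section.

```python
from dataclasses import dataclass

HARD_SDN_MAX_NODES = 2000     # scale quoted in the text for hard SDN
SOFT_SDN_MAX_HOSTS = 100_000  # scale quoted in the text for soft SDN


@dataclass
class Deployment:
    nodes: int                # number of hosts to serve
    owns_fabric: bool         # False for OTT, where a third party owns the fabric
    has_vxlan_switches: bool  # VXLAN-capable hardware switches available


def suggest_sdn(d: Deployment) -> str:
    """Return 'hard', 'soft', or 'out of quoted range' (simplified heuristic)."""
    if d.nodes > SOFT_SDN_MAX_HOSTS:
        return "out of quoted range"
    # Hard SDN is coupled to VXLAN-capable hardware in a fabric you control.
    if not d.owns_fabric or not d.has_vxlan_switches:
        return "soft"
    # Beyond the quoted hard SDN scale, the soft SDN architecture scales further.
    if d.nodes > HARD_SDN_MAX_NODES:
        return "soft"
    # Small scale with suitable hardware: hard SDN avoids extra x86 management nodes.
    return "hard"


if __name__ == "__main__":
    print(suggest_sdn(Deployment(nodes=500, owns_fabric=True, has_vxlan_switches=True)))
    print(suggest_sdn(Deployment(nodes=50_000, owns_fabric=True, has_vxlan_switches=True)))
```

A real decision would also weigh forwarding performance requirements, hypervisor mix, and O&M tooling, which this sketch deliberately leaves out.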