The AI industry’s “Super Bowl” has begun, and today’s star is Jensen Huang.

Tech entrepreneurs, developers, scientists, investors, NVIDIA clients, partners, and media from around the world have flocked to San Jose for the man in the black leather jacket.

Huang’s GTC 2025 keynote began at 10:00 AM local time on March 18, but by 6:00 AM, Doges AI founder Abraham Gomez had already secured the second spot in line at the SAP Center, hoping to “grab a front-row seat.” By 8:00 AM, the queue outside stretched over a kilometer.

Bill, CEO of music-generation startup Wondera, sat in the front row wearing his own black leather jacket “as a tribute to Jensen.” While the crowd was enthusiastic, Huang struck a more measured tone than last year’s rockstar energy. This time, he aimed to reaffirm NVIDIA’s strategy, repeatedly emphasizing “scale up” throughout his speech.

Last year, Huang declared “the future is generative”; this year, he asserted that “AI is at a tipping point.” His keynote focused on three key announcements:

1. Blackwell GPU Enters Full Production

“Demand is incredible, and for good reason—AI is at an inflection point,” Huang stated. He highlighted the growing need for computing power driven by AI inference systems and agentic training workloads.

2. Blackwell NVLink 72 with Dynamo AI Software

The new platform delivers 40x the AI factory performance of NVIDIA Hopper. “As we scale AI, inference will dominate workloads over the next decade,” Huang explained. Introducing Blackwell Ultra, he revived a classic line: “The more you buy, the more you save. Actually, even better—the more you buy, the more you earn.”

3. NVIDIA’s Annual Roadmap for AI Infrastructure

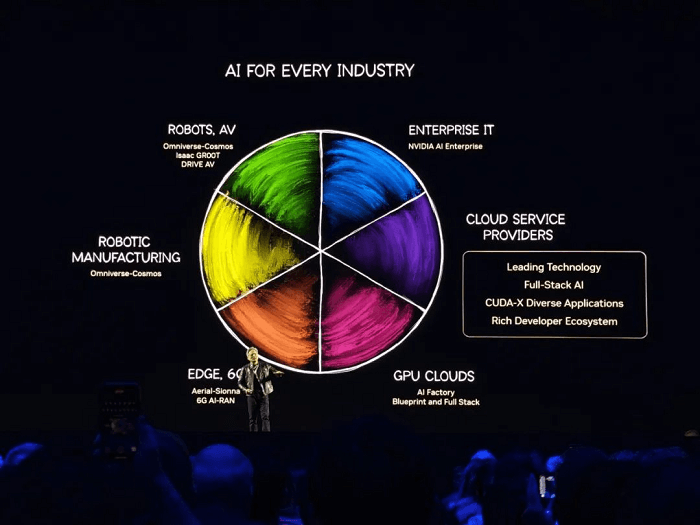

The company outlined three AI infrastructure pillars: cloud, enterprise, and robotics.

Huang also unveiled two new GPUs: the Blackwell Ultra GB300 (an upgraded Blackwell) and the next-gen Vera Rubin architecture with Rubin Ultra.

Jensen Huang’s unwavering belief in the Scaling Law is rooted in the advancements achieved through several generations of chip architectures.

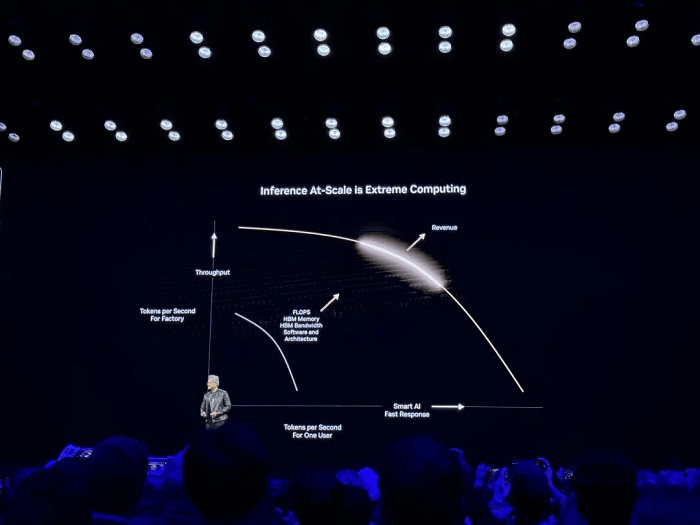

His keynote speech primarily focused on “Extreme Computation for Large-Scale AI Inference.”

In AI inference, scaling from individual users to large-scale deployments requires finding the optimal balance between performance and cost-efficiency. Systems must not only ensure rapid responses for users but also maximize overall throughput (tokens per second) by enhancing hardware capabilities (e.g., FLOPS, HBM bandwidth) and optimizing software (e.g., architecture, algorithms), ultimately unlocking the economic value of large-scale inference.

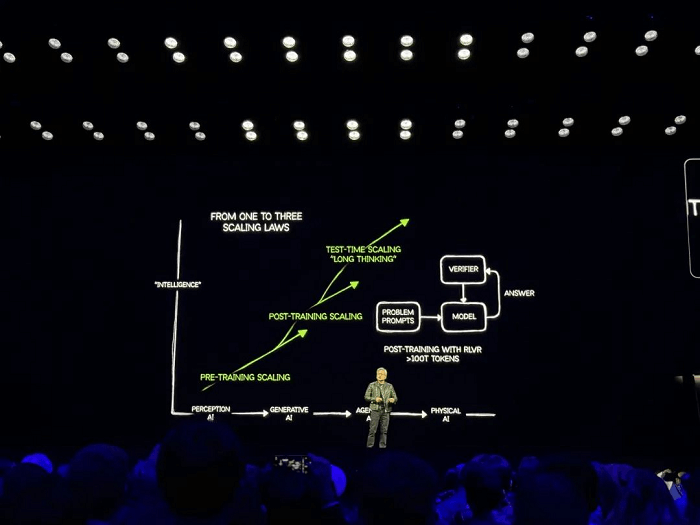

Addressing concerns about the slowing down of the Scaling Law, Jensen Huang expressed a contrasting viewpoint, claiming that “emerging expansion methods and technologies are accelerating AI improvement at an unprecedented pace.”

Facing considerable pressure, Huang appeared visibly strained during the live broadcast, frequently sipping water during breaks and sounding slightly hoarse by the end of his presentation.

As the AI market transitions from “training” to “inference,” competitors such as AMD, Intel, Google, and Amazon are introducing specialized inference chips to reduce reliance on NVIDIA. Meanwhile, startups like Cerebras, Groq, and Tenstorrent are accelerating the development of AI accelerators, and companies like DeepSeek aim to minimize dependence on costly GPUs by optimizing their models. These dynamics add to the challenges Huang faces. While NVIDIA holds over 90% of the training market, Huang is determined not to cede the inference market amid intensifying competition. The event’s entry banner boldly declared: “What’s Next in AI Starts Here.”

Key highlights of Jensen Huang’s keynote, as summarized on-site by “FiberMall,” include:

The World Has Misunderstood the Scaling Law

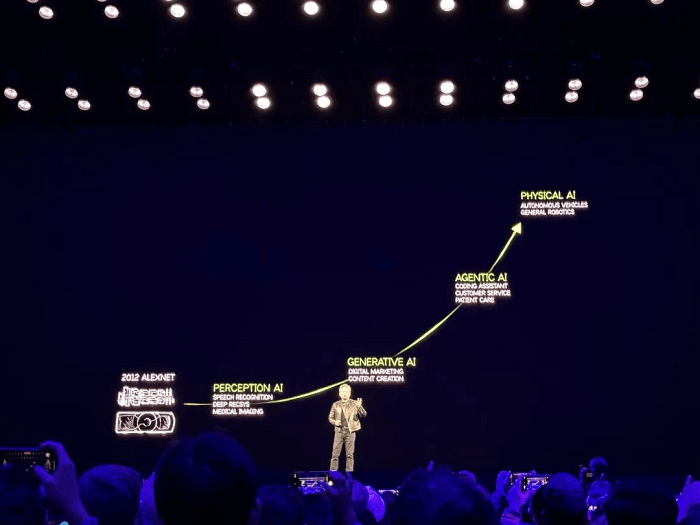

AI has represented a transformative opportunity for NVIDIA over the past decade, and Huang remains deeply confident in its potential. At this GTC, he revisited two slides from his January CES keynote:

The first slide outlined the stages of AI development: Perception AI, Generative AI, Agentic AI, and Physical AI.

The second slide depicted the three phases of the Scaling Law: Pre-training Scaling, Post-training Scaling, and Test-time Scaling (Long Thinking).

Huang offered a perspective that sharply contrasts with mainstream views, asserting that concerns about the Scaling Law’s slowing are misplaced. In his view, emerging expansion methods and technologies are driving AI progress at an unprecedented pace.

Huang is a firm believer in the Scaling Law, and his conviction stems from the fact that global AI advancements are closely tied to NVIDIA’s GPU business. He went on to describe the evolution of AI that can “reason step by step,” emphasizing the role of inference and reinforcement learning in driving computational demands. As AI reaches an “inflection point,” cloud service providers are demanding more and more GPUs, and Huang estimated that the value of data center construction will reach $1 trillion.

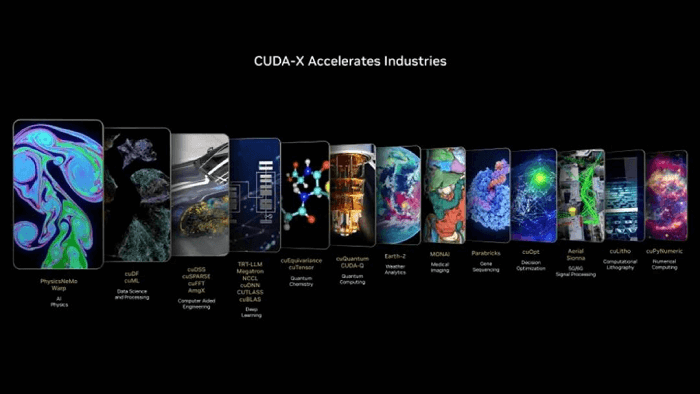

Huang elaborated that NVIDIA CUDA-X GPU acceleration libraries and microservices now serve nearly every industry. In his vision, every company will operate two factories in the future: one for producing goods and another for generating AI.

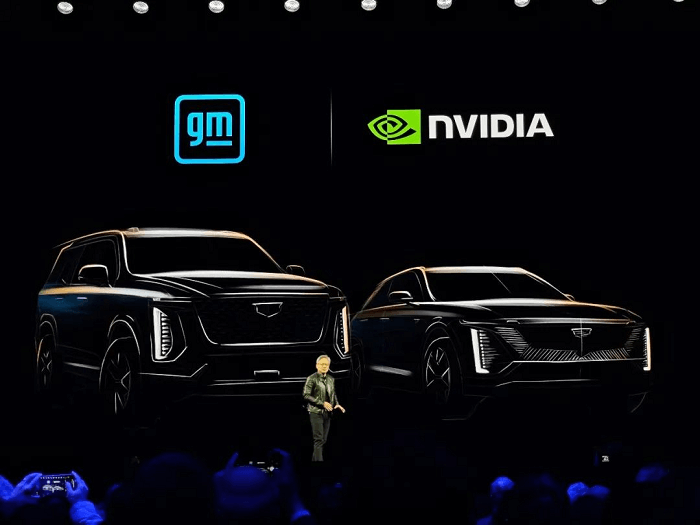

AI is expanding into various fields worldwide, including robotics, autonomous vehicles, factories, and wireless networks. Huang noted that one of AI’s earliest applications was in autonomous vehicles. “The technologies we’ve developed are used by almost every autonomous vehicle company,” he said, referring to use in both the data center and the vehicle itself.

Huang announced a significant milestone in autonomous driving: General Motors, the largest automaker in the United States, is adopting NVIDIA AI, simulation, and accelerated computing to develop its next-generation vehicles, factories, and robots. He also introduced NVIDIA Halos, an integrated safety system combining NVIDIA’s automotive hardware and software safety solutions with cutting-edge AI research in autonomous vehicle safety.

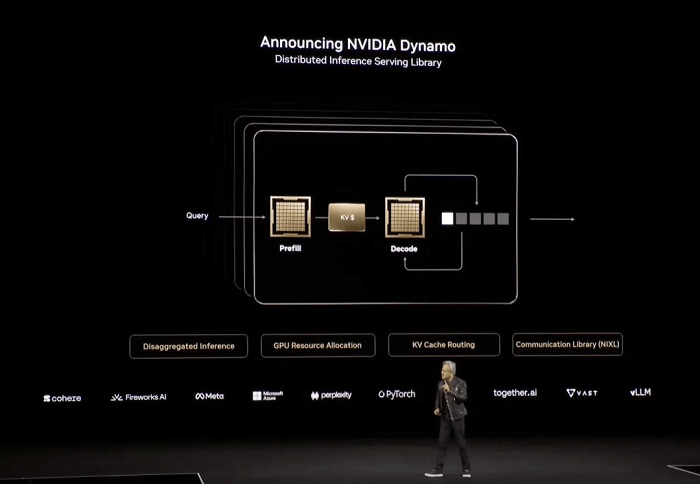

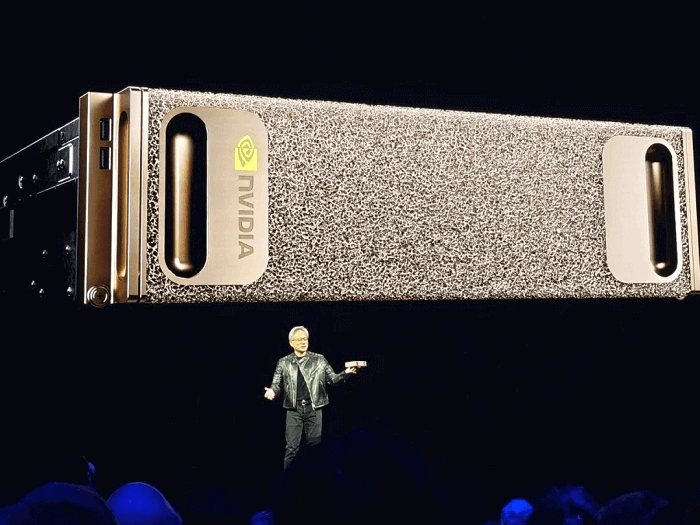

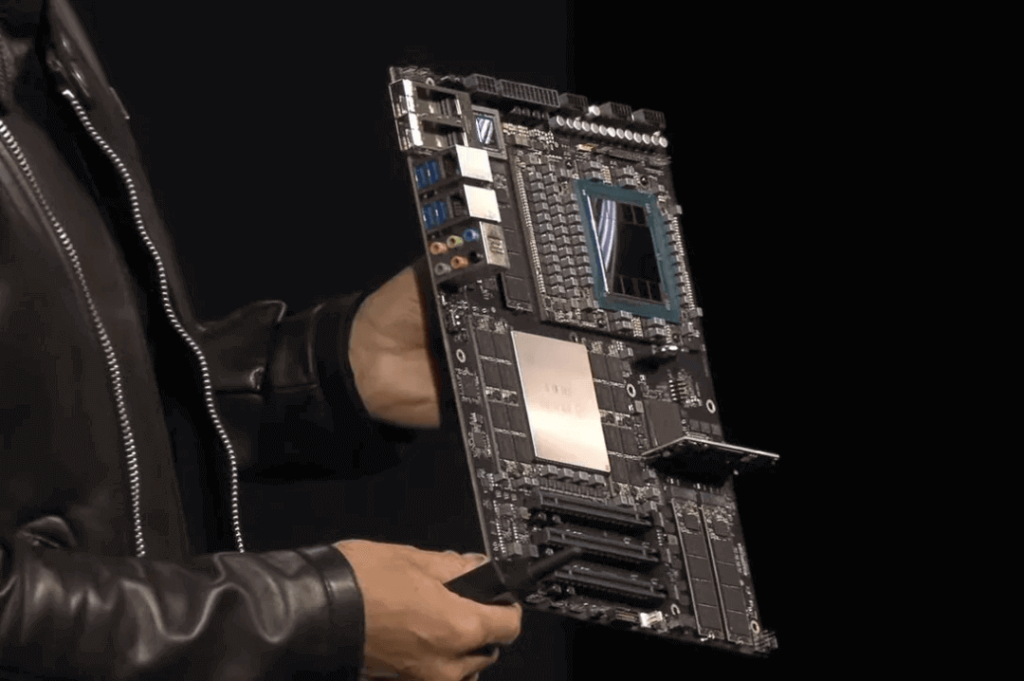

Moving to data centers and inference, Huang shared that NVIDIA Blackwell has entered full-scale production, presenting systems from numerous industry partners. Pleased with Blackwell’s potential, he elaborated on how it supports extreme scalability, explaining, “We aim to address a critical challenge, and this is what we call inference.”

Huang emphasized that inference is, at its core, the generation of tokens, a process essential for businesses. The AI factories that generate these tokens must be built with exceptional efficiency and performance. With the latest reasoning models capable of solving increasingly complex problems, the demand for tokens will continue to rise.

To accelerate large-scale inference further, Huang announced NVIDIA Dynamo, an open-source software platform designed to optimize and scale inference models in AI factories. Describing it as “essentially the operating system for AI factories,” he underscored its transformative potential.

“Buy More, Save More, Earn More”

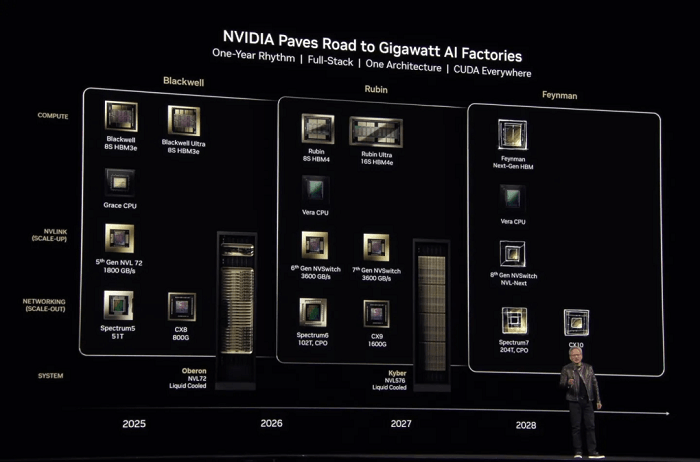

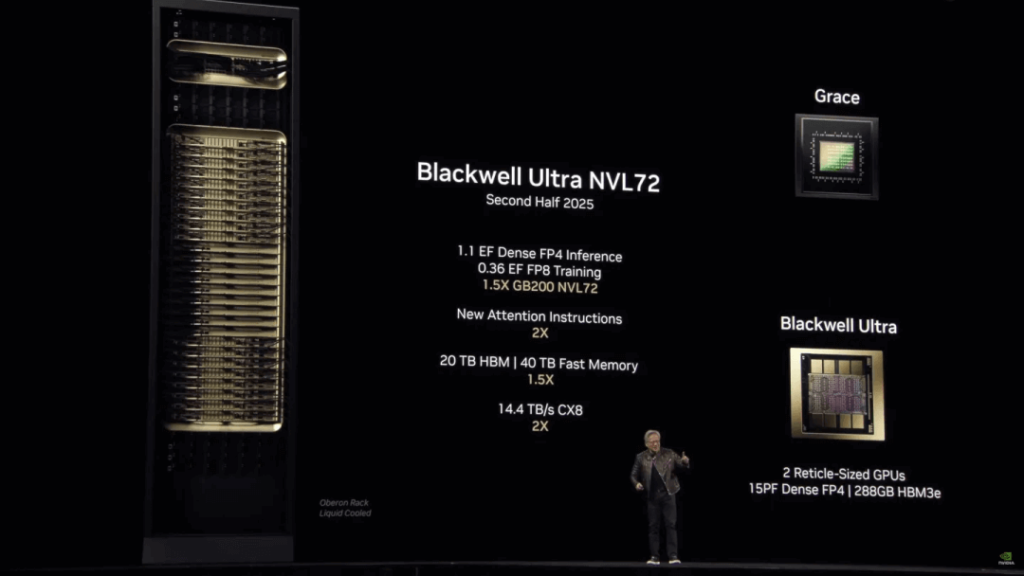

NVIDIA also revealed two new GPUs: the Blackwell Ultra GB300, an upgraded version of last year’s Blackwell, and the next-generation Vera Rubin and Rubin Ultra chip architectures.

Blackwell Ultra GB300 will be available in the second half of this year.

Vera Rubin is set for release in the second half of next year.

Rubin Ultra is expected in late 2027.

Additionally, Huang unveiled the roadmap for upcoming chips. The architecture for the generation beyond Rubin has been named Feynman, anticipated in 2028. The name likely honors the renowned theoretical physicist Richard Feynman.

Continuing NVIDIA’s tradition, each GPU architecture is named after a prominent scientist: Blackwell after statistician David Harold Blackwell, and Rubin after Vera Rubin, the pioneering astronomer whose galaxy rotation measurements provided key evidence for dark matter.

Over the past decade, NVIDIA has released 13 generations of GPU architectures, averaging over one new generation per year. These include well-known names such as Tesla, Fermi, Kepler, Maxwell, Pascal, Turing, Ampere, Hopper, and Blackwell, with Rubin next on the roadmap. Huang’s commitment to the Scaling Law has been a driving force behind these innovations.

Regarding performance, Blackwell Ultra offers substantial upgrades compared to Blackwell, including an increase in HBM3e memory capacity from 192GB to 288GB. NVIDIA has also compared Blackwell Ultra to the H100 chip released in 2022, noting its ability to deliver 1.5 times the FP4 inference performance. This translates to a significant advantage: an NVL72 cluster running the DeepSeek-R1 671B model can provide interactive responses in 10 seconds, compared to 1.5 minutes with the H100. Blackwell Ultra processes 1,000 tokens per second, 10 times that of the H100.
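The latency and throughput figures quoted above can be cross-checked with simple arithmetic. The sketch below uses only the numbers in the text. The H100 throughput of 100 tokens per second is not stated directly; it is inferred here from the “10 times” claim, and all variable names are illustrative.

```python
# Figures quoted in the text; the H100 throughput is inferred, not stated.
h100_response_s = 1.5 * 60          # 1.5 minutes per interactive response
ultra_response_s = 10               # 10 seconds on a Blackwell Ultra NVL72
ultra_tokens_per_s = 1_000          # quoted Blackwell Ultra throughput
h100_tokens_per_s = ultra_tokens_per_s / 10  # assumption: "10 times that of the H100"

# How much faster the response arrives, and how much faster tokens are produced.
latency_speedup = h100_response_s / ultra_response_s
throughput_ratio = ultra_tokens_per_s / h100_tokens_per_s

# Tokens each system would emit during its quoted response time.
ultra_tokens = ultra_tokens_per_s * ultra_response_s
h100_tokens = h100_tokens_per_s * h100_response_s

print(f"latency speedup:  {latency_speedup:.1f}x")
print(f"throughput ratio: {throughput_ratio:.1f}x")
print(f"tokens per response: {ultra_tokens:.0f} vs {h100_tokens:.0f}")
```

Under these assumptions the response arrives 9 times sooner while tokens are generated 10 times faster, which is consistent once the rounding of “10 seconds” and “1.5 minutes” is taken into account: both systems would be producing a response on the order of 9,000 to 10,000 tokens.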

NVIDIA will also offer the GB300 NVL72 single-rack system, featuring 1.1 exaflops of FP4, 20TB of HBM memory, 40TB of “fast memory,” 130TB/s of NVLink bandwidth, and 14.4TB/s of network speed.
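As a rough sanity check, the rack-level figures can be related to the per-GPU numbers quoted earlier. The sketch below assumes that “NVL72” denotes 72 GPUs per rack and that terabytes are decimal (1 TB = 1,000 GB); the constant names are illustrative and the per-GPU values are plain divisions of the rack totals, not published per-chip specifications.

```python
GPUS_PER_RACK = 72          # assumption: "NVL72" means 72 GPUs in one rack
HBM_PER_GPU_GB = 288        # Blackwell Ultra HBM3e capacity quoted in the text
RACK_NVLINK_TBPS = 130      # aggregate NVLink bandwidth quoted in the text
RACK_FP4_EXAFLOPS = 1.1     # rack-level FP4 figure quoted in the text

# Total HBM in the rack, in decimal terabytes.
rack_hbm_tb = GPUS_PER_RACK * HBM_PER_GPU_GB / 1000

# Even share of the rack totals per GPU.
nvlink_per_gpu_tbps = RACK_NVLINK_TBPS / GPUS_PER_RACK
fp4_per_gpu_pflops = RACK_FP4_EXAFLOPS * 1000 / GPUS_PER_RACK

print(f"HBM per rack:    {rack_hbm_tb:.1f} TB")
print(f"NVLink per GPU:  {nvlink_per_gpu_tbps:.1f} TB/s")
print(f"FP4 per GPU:     {fp4_per_gpu_pflops:.1f} petaflops")
```

With these inputs, 72 GPUs at 288GB each come to about 20.7TB, in line with the quoted 20TB of HBM. The per-GPU FP4 share of roughly 15 petaflops is only an even split of the rack figure and may not match per-chip numbers that are quoted on a different basis.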

Acknowledging the overwhelming performance of Blackwell Ultra, Huang joked about his concerns that customers might skip purchasing the H100. He humorously referred to himself as the “chief revenue destroyer,” admitting that, in limited cases, Hopper chips are “okay,” but such scenarios are rare. Concluding with his classic line, he declared, “Buy more, save more. It’s even better than that. Now, the more you buy, the more you earn.”

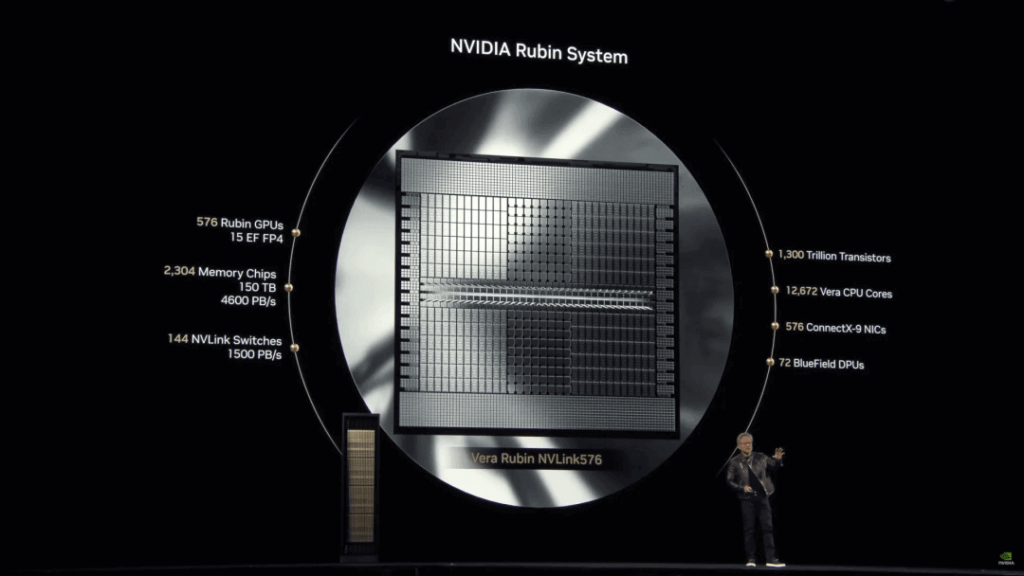

The Rubin architecture represents a groundbreaking step for NVIDIA. Jensen Huang emphasized, “Essentially, everything except the rack is brand new.”

Enhanced FP4 Performance: Rubin GPUs achieve 50 petaflops, surpassing Blackwell’s 20 petaflops. Rubin Ultra comprises a single chip with two interconnected Rubin GPUs, delivering 100 petaflops of FP4 performance—twice that of Rubin—and nearly quadrupling memory to 1TB.

NVL576 Rubin Ultra Rack: Offers 15 exaflops of FP4 inference and 5 exaflops of FP8 training, boasting performance 14 times greater than Blackwell Ultra racks.
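The generational multipliers quoted in this section follow directly from the raw figures. The sketch below recomputes them as a check. It assumes that the “nearly quadrupling” of memory is measured against Blackwell Ultra’s 288GB, that 1TB means 1,000GB, and that the Blackwell Ultra rack baseline is the 1.1 exaflops FP4 figure given for the GB300 NVL72; the variable names are illustrative.

```python
# Per-GPU FP4 figures quoted in the text, in petaflops.
blackwell_fp4_pf = 20
rubin_fp4_pf = 50
rubin_ultra_fp4_pf = 100

# Memory figures in GB (assumption: baseline is Blackwell Ultra, 1 TB = 1000 GB).
blackwell_ultra_hbm_gb = 288
rubin_ultra_hbm_gb = 1000

# Rack-level FP4 inference figures, in exaflops.
gb300_nvl72_fp4_ef = 1.1
rubin_ultra_nvl576_fp4_ef = 15

ratios = {
    "Rubin vs Blackwell (FP4 per GPU)": rubin_fp4_pf / blackwell_fp4_pf,
    "Rubin Ultra vs Rubin (FP4 per GPU)": rubin_ultra_fp4_pf / rubin_fp4_pf,
    "Rubin Ultra vs Blackwell Ultra (memory)": rubin_ultra_hbm_gb / blackwell_ultra_hbm_gb,
    "NVL576 vs GB300 NVL72 (rack FP4)": rubin_ultra_nvl576_fp4_ef / gb300_nvl72_fp4_ef,
}

for label, value in ratios.items():
    print(f"{label}: {value:.2f}x")
```

Under these assumptions the ratios come out to 2.5x, 2x, about 3.5x, and about 13.6x, which lines up with the “twice that of Rubin,” “nearly quadrupling,” and “14 times” descriptions once rounding is allowed for.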

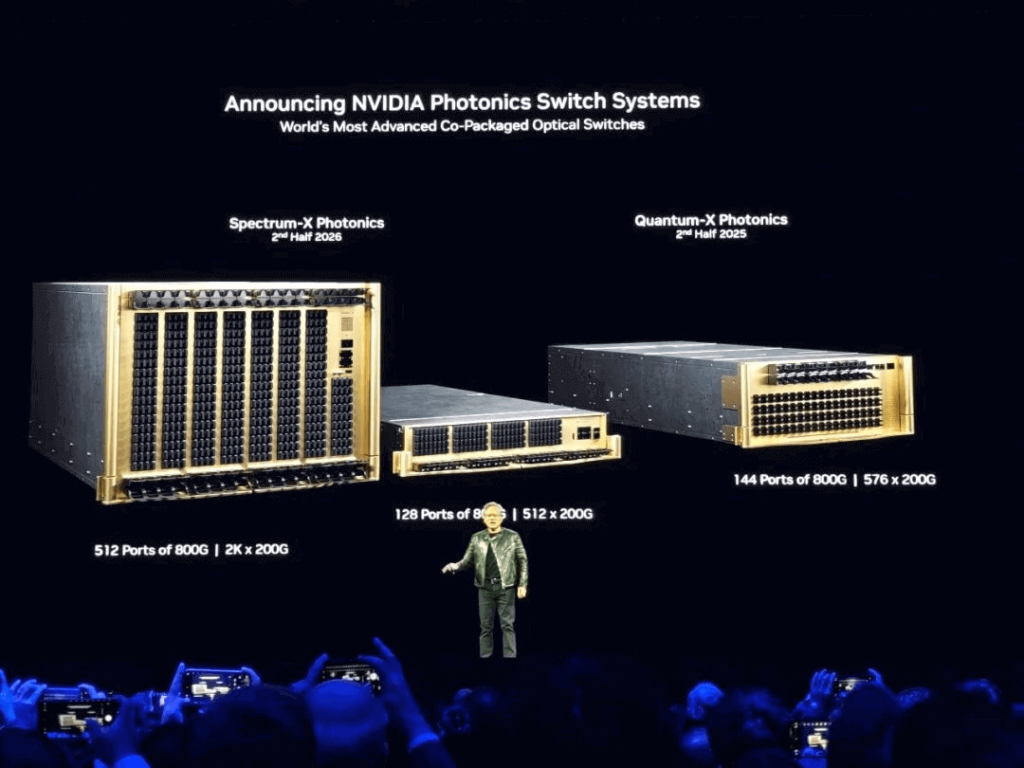

Huang also explained the integration of photonics technology to scale systems, incorporating it into NVIDIA’s Spectrum-X and Quantum-X silicon photonic network switches. These innovations blend electronic and optical communication, enabling AI factories to interconnect millions of GPUs across sites while reducing energy consumption and costs.

According to NVIDIA, the switches deliver 3.5 times the power efficiency, 63 times the signal integrity, 10 times the network resilience, and faster deployment compared to traditional methods.

Computers for the AI Era

Expanding beyond cloud chips and data centers, NVIDIA launched desktop AI supercomputers powered by the NVIDIA Grace Blackwell platform. Designed for AI developers, researchers, data scientists, and students, these devices enable prototyping, fine-tuning, and inference of large models at the desktop level.

Key products include:

DGX Supercomputers: Featuring the NVIDIA Grace Blackwell platform for unmatched local or cloud deployment capabilities.

DGX Station: A high-performance workstation equipped with Blackwell Ultra.

Llama Nemotron Reasoning Series: An open-source AI model family offering improved multi-step reasoning, coding, and decision-making. NVIDIA’s enhancements boost accuracy by 20% and inference speed by 5x while improving operational cost efficiency. Leading companies such as Microsoft, SAP, and Accenture are partnering with NVIDIA to develop new reasoning models.

The Era of General-Purpose Robotics

Jensen Huang described robotics as the next $10 trillion industry and pointed to a global labor shortage expected to reach 50 million workers by the end of the decade. NVIDIA unveiled Isaac GR00T N1, which it describes as the world’s first open, fully customizable foundation model for generalized humanoid reasoning and skills, along with a new data generation and robotics learning framework. This paves the way for the next frontier in AI.

In addition, NVIDIA released the Cosmos Foundation Model for physical AI development. This open, customizable model gives developers fine-grained control over world generation and, through integration with Omniverse, can produce vast, effectively unlimited synthetic datasets.

Huang also introduced Newton, an open-source physics engine for robotics simulation co-developed with Google DeepMind and Disney Research. In a memorable moment, a miniature robot named “Blue,” which appeared at last year’s GTC, returned to the stage, delighting the audience.

NVIDIA’s ongoing journey has been about finding applications for its GPUs, from AI breakthroughs with AlexNet over a decade ago to today’s focus on robotics and physical AI. Will NVIDIA’s aspirations for the next decade bear fruit? Time will tell.
