Modern data centers are evolving rapidly as data usage surges and the demand for efficiency, scalability, and speed grows, and network switching is at least as important to that evolution as any other component. Top-of-Rack (ToR) switching is an approach that can optimize network architecture, reduce latency, and improve operational performance. This article examines the essentials of ToR switching, compares it with conventional network designs, and offers practical tips for putting it into practice. It is intended for data center strategists, IT professionals, and network architects who want to prepare their connectivity infrastructure for the future.


What is a ToR Switch and How Does It Work?

Understanding the ToR Switch

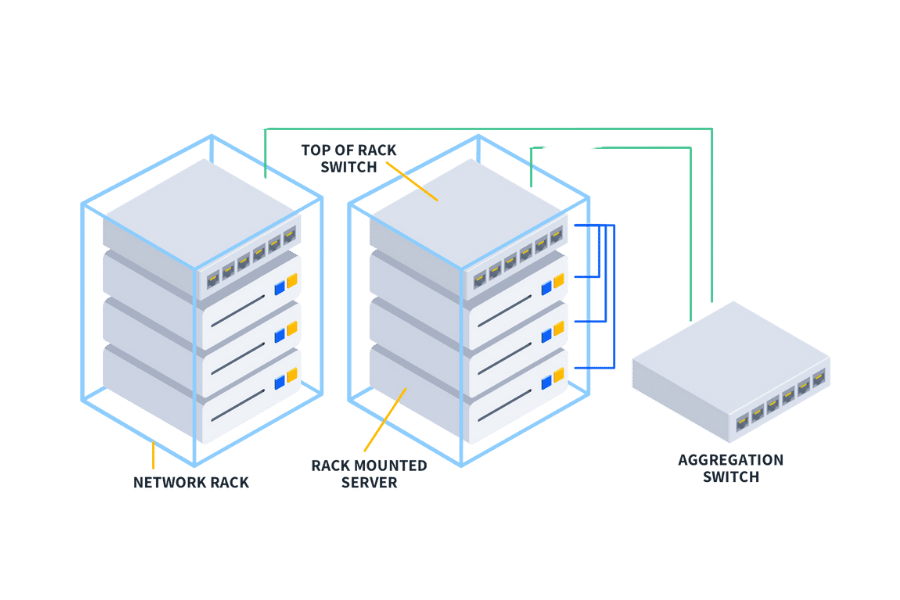

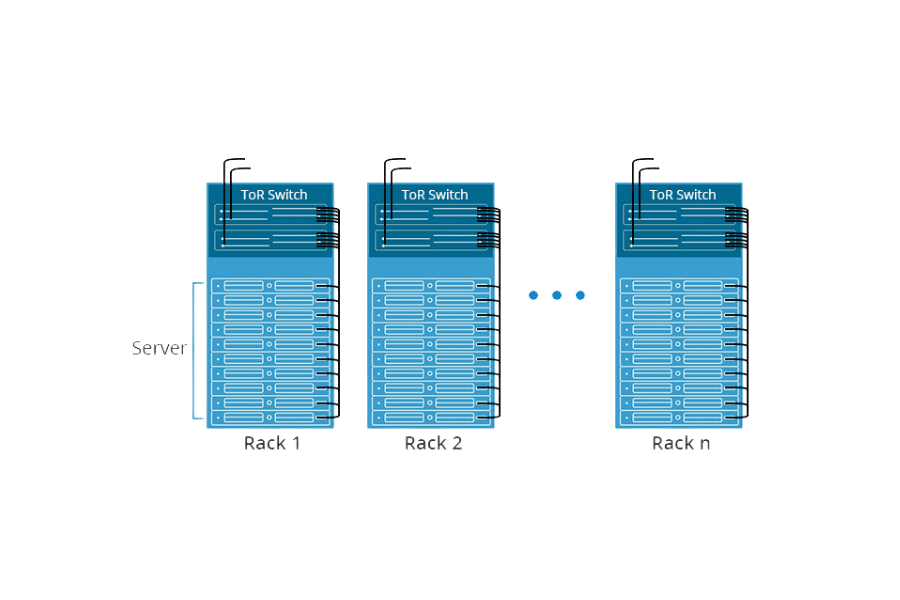

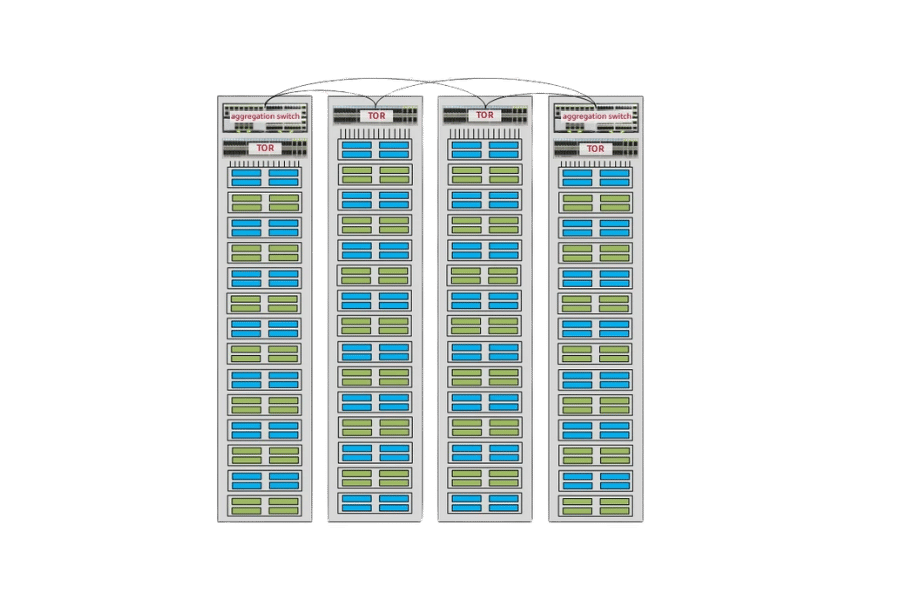

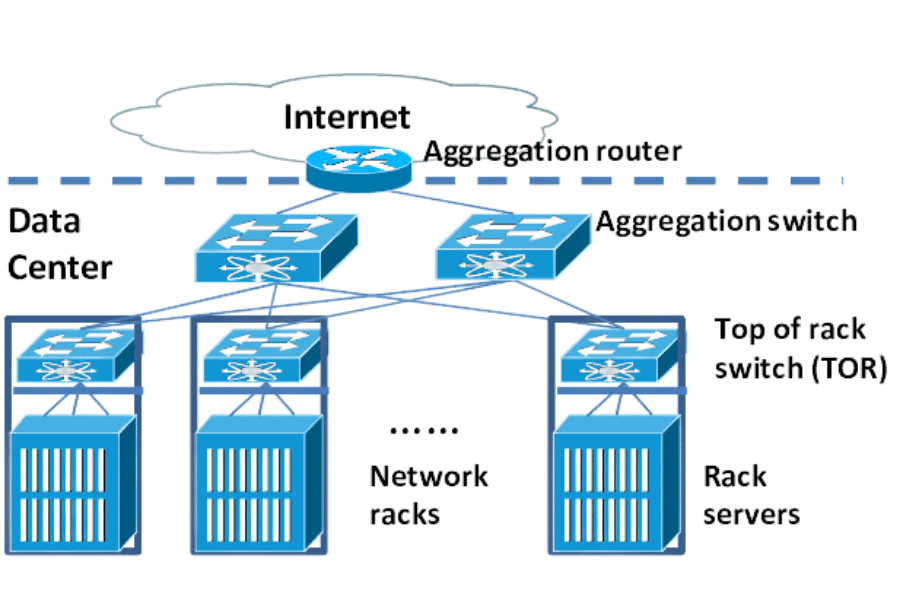

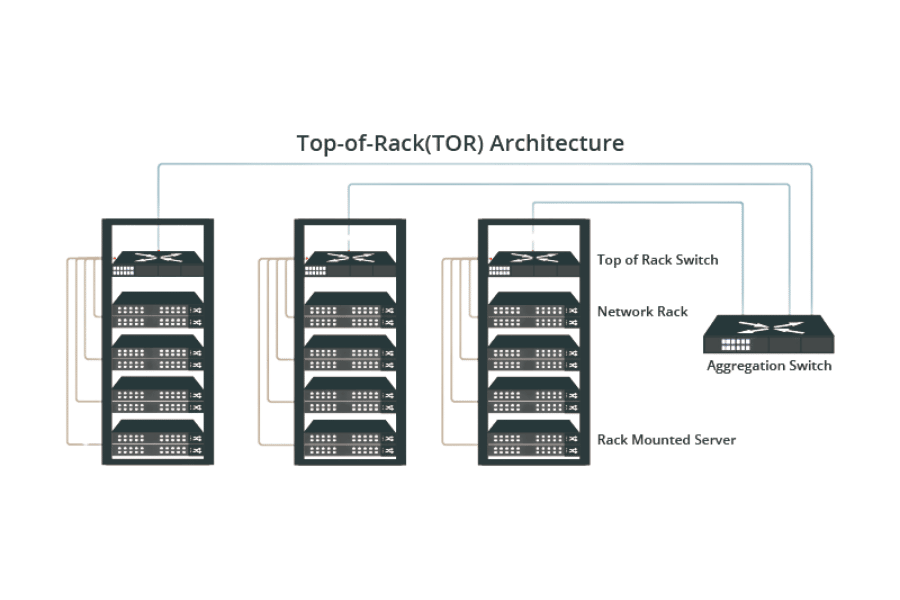

A Top-of-Rack (ToR) switch is a switch installed at the top of a server rack in a data center. Its primary task is to connect all the servers in that rack to the rest of the data center network. Because the switch sits close to the servers, cable runs are short, which reduces cabling complexity and improves efficiency. Concentrating connectivity at the rack level also simplifies maintenance and makes the network more flexible, which is why the design is common in modern data centers.

Key Components of a ToR Switch

- Ports: ToR switches provide multiple high-speed Ethernet ports. Downlink ports connect the servers in the rack, while uplink ports connect the switch to the aggregation or core network. Port speeds are chosen to match the data rates the servers need.

- Power Supply Units (PSUs): Redundant PSUs improve reliability and reduce the risk of downtime in critical environments if one power supply fails.

- Switching Fabric: The internal forwarding architecture that moves data packets between ports, designed to deliver low latency and high throughput.

- Cooling System: Built-in fans or more advanced cooling systems prevent overheating and keep the switch operating at optimal performance.

- Management Interface: A user-friendly interface that lets administrators configure, monitor, and troubleshoot the switch efficiently.

Together, these components keep a ToR switch running efficiently in a busy data center.

ToR Switch in Data Center Networks

In a data center network, a Top-of-Rack (ToR) switch is the essential link between the servers and the rest of the network. Mounted at the top of the server rack, it aggregates traffic from the devices in the rack and forwards it to aggregation or core switches. This configuration reduces the amount of cabling required, improves bandwidth utilization, and lowers latency. ToR switches are especially popular in modern data center designs because they are easy to scale and support the high-speed data transfer that growing workloads demand.
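Because a ToR switch aggregates rack traffic onto a smaller number of uplinks, a common sizing check is the oversubscription ratio: total server-facing (downlink) bandwidth divided by total uplink bandwidth. The sketch below computes it; the port counts and speeds are illustrative assumptions, not figures from a particular product.

```python
def oversubscription_ratio(downlinks: int, downlink_gbps: float,
                           uplinks: int, uplink_gbps: float) -> float:
    """Return total downlink bandwidth divided by total uplink bandwidth.

    1.0 means the uplinks can carry every server port at line rate
    (non-blocking); 3.0 means the servers can offer three times more
    traffic than the uplinks can forward.
    """
    if uplinks <= 0 or uplink_gbps <= 0:
        raise ValueError("at least one uplink with a positive speed is required")
    return (downlinks * downlink_gbps) / (uplinks * uplink_gbps)


# Hypothetical rack: 48 servers on 10G ports, four 40G uplinks.
ratio = oversubscription_ratio(48, 10, 4, 40)
print(f"{ratio:.1f}:1")  # 480 Gb/s / 160 Gb/s -> prints 3.0:1
```

How much oversubscription is acceptable is a design decision that depends on how much of the rack's traffic actually leaves the rack; adding uplinks (six 40G uplinks would give 2.0:1 here) is the usual lever.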

Benefits of Top-of-Rack Switching

Enhanced Network Efficiency

Top-of-Rack (ToR) switching improves network performance by shortening cable runs and keeping traffic between servers in the same rack on a single switch hop, which reduces latency. Because each ToR switch serves only the servers in its own rack, bandwidth is used more efficiently and the risk of bottlenecks is reduced. The design also supports the high-speed connections that demanding modern workloads require.

Increasing Redundancy and Scalability

Top-of-Rack (ToR) switching helps data center networks achieve both scalability and redundancy. New racks can be added easily, so resources can scale as business requirements grow without disrupting the underlying network infrastructure. Because each rack has its own dedicated switches, each rack functions as a self-contained unit, which helps confine failures to that rack.

Redundancy in ToR designs commonly relies on multipath routing, for example Equal-Cost Multi-Path (ECMP) routing, which distributes traffic across several equal-cost paths so that no single link or switch is a point of failure; this also simplifies traffic management. A study of contemporary data center architectures indicates that ToR designs combined with redundancy strategies substantially improve operational uptime, often reaching 99.99% reliability. Fault tolerance can be improved further with network virtualization overlays such as Virtual Extensible LAN (VXLAN) and with software-defined networking (SDN), which can automate recovery and help keep services running when hardware or links fail.
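ECMP implementations typically choose among equal-cost next hops by hashing packet header fields, so all packets of one flow follow the same path (avoiding reordering) while different flows spread across paths. The following is a simplified sketch that hashes the 5-tuple with CRC32; real switch ASICs use their own vendor-specific hash functions and field selections, and the uplink names here are hypothetical.

```python
import zlib
from typing import List, Tuple

# (source IP, destination IP, IP protocol number, source port, destination port)
FiveTuple = Tuple[str, str, int, int, int]


def pick_uplink(flow: FiveTuple, uplinks: List[str]) -> str:
    """Pick an equal-cost uplink by hashing the flow's 5-tuple (simplified ECMP)."""
    if not uplinks:
        raise ValueError("no uplinks available")
    key = "|".join(str(field) for field in flow).encode()
    return uplinks[zlib.crc32(key) % len(uplinks)]


uplinks = ["spine1", "spine2", "spine3", "spine4"]  # hypothetical next hops
flow = ("10.0.1.15", "10.0.9.20", 6, 49152, 443)    # a TCP flow

first = pick_uplink(flow, uplinks)
# Every packet of the same flow hashes to the same uplink, so packets stay in order.
assert pick_uplink(flow, uplinks) == first

# When an uplink fails it is withdrawn, and flows are re-hashed over the survivors.
survivors = [u for u in uplinks if u != first]
print(first, "->", pick_uplink(flow, survivors))
```

Plain modulo hashing remaps many flows whenever the set of uplinks changes, not only those on the failed link; some switches offer "resilient hashing" to limit that disruption.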

ToR switching lets enterprises respond efficiently to growing demand from cloud services, IoT devices, and AI-powered workloads by distributing traffic intelligently across the network. This combination of scalability and redundancy makes ToR switching a key element in the design of next-generation data centers.

Minimizing Network Infrastructure Latency

Minimizing latency in network infrastructure means making data transmission faster and more efficient. Important methods include using edge computing to process data closer to where it originates, deploying low-latency switches and fiber-optic cabling, and reducing the number of hops along each path. Traffic management techniques such as Quality of Service (QoS) prioritization also ensure that latency-sensitive traffic is forwarded ahead of less critical traffic. For lasting improvement, network performance must be monitored and tuned continuously. Together, these measures reduce network delays and produce a faster, more reliable network.
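A rough one-way latency estimate shows why reducing the number of hops matters: each switch hop adds its own forwarding latency plus the time to serialize the frame onto the next link, while distance adds propagation delay (roughly 5 ns per meter in optical fiber). The sketch below assumes store-and-forward switching and a placeholder per-switch latency of 1 µs; real values vary by platform and forwarding mode.

```python
def one_way_latency_us(hops: int, frame_bytes: int, link_gbps: float,
                       switch_latency_us: float, cable_m: float) -> float:
    """Rough one-way latency (microseconds) between two hosts.

    Assumes store-and-forward switches, identical link speeds, and hops + 1
    links on the path, so the frame is serialized once per link.
    """
    serialization_us = frame_bytes * 8 / (link_gbps * 1e3)  # 1 Gb/s = 1,000 bits per µs
    propagation_us = cable_m * 0.005                         # ~5 ns per meter of fiber
    return (hops + 1) * serialization_us + hops * switch_latency_us + propagation_us


# Assumed 1 µs per switch; 1,500-byte frames on 10G links.
same_rack = one_way_latency_us(hops=1, frame_bytes=1500, link_gbps=10,
                               switch_latency_us=1.0, cable_m=4)
cross_fabric = one_way_latency_us(hops=3, frame_bytes=1500, link_gbps=10,
                                  switch_latency_us=1.0, cable_m=100)
print(f"same rack: {same_rack:.2f} µs, leaf-spine-leaf: {cross_fabric:.2f} µs")
```

Under these assumptions the same-rack path comes to about 3.4 µs and the three-switch path to about 8.3 µs, with hop count rather than cable length dominating. Cut-through switches begin forwarding before the whole frame arrives, so this store-and-forward estimate is conservative.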

Deploying a ToR Switch in Your Network Infrastructure

Step-By-Step Deployment Instructions

- Evaluate Network Needs. Analyze your network’s scope, traffic types, and growth projections to determine whether a ToR switch deployment will meet your requirements.

- Choose the Right ToR Switch Model. Select a ToR switch that meets your infrastructure requirements for port density and throughput and is compatible with your other hardware.

- Prepare for the Physical Installation. Choose a rack position in your data center that accounts for cooling and leaves room for maintenance access.

- Connect to Other Network Layers. Connect the switch to the aggregation and core layers, following your cabling plan and network topology so that every link is connected as the design specifies.

- Set Up the Switch. Perform the initial configuration your environment requires, such as IP address allocation, VLAN assignment, and enabling the relevant protocols.

- Validate Functionality and Efficiency. Run tests to confirm that the switch works correctly and forwards traffic properly under both normal and peak load.

- Monitor and Maintain. Review the switch’s performance regularly and apply firmware updates promptly to keep your network infrastructure reliable and secure.
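Choosing the right model often comes down to port arithmetic. The sketch below checks whether a candidate switch has enough server-facing ports for a rack, including a spare-capacity margin for growth, and enough uplinks. The rack layout, the 20% growth margin, and the switch figures are hypothetical inputs to replace with your own.

```python
from dataclasses import dataclass


@dataclass
class SwitchModel:
    name: str            # hypothetical model label
    downlink_ports: int  # server-facing ports
    uplink_ports: int    # ports toward aggregation/core


def required_downlinks(servers: int, ports_per_server: int, growth_pct: int = 20) -> int:
    """Server-facing ports needed now, plus a growth margin, rounded up."""
    base = servers * ports_per_server
    return (base * (100 + growth_pct) + 99) // 100  # integer ceiling division


def fits(model: SwitchModel, servers: int, ports_per_server: int,
         uplinks_needed: int, growth_pct: int = 20) -> bool:
    """True if the model has enough downlink and uplink ports for the rack."""
    return (model.downlink_ports >= required_downlinks(servers, ports_per_server, growth_pct)
            and model.uplink_ports >= uplinks_needed)


candidate = SwitchModel("example-48-port", downlink_ports=48, uplink_ports=6)
print(required_downlinks(20, 2))                  # 40 ports + 20% -> 48
print(fits(candidate, 20, 2, uplinks_needed=4))   # True
print(fits(candidate, 24, 2, uplinks_needed=4))   # False: needs 58 downlinks
```

Integer arithmetic is used for the rounding so that a margin such as 20% never produces an off-by-one from floating-point error.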

Common Difficulties and Their Resolutions

In my experience, the most frequent difficulties are configuration issues at the setup stage, such as incorrect VLAN assignments or IP address conflicts. To avoid them, I validate configurations against the documentation before deploying them. Outdated firmware is another common challenge: it can leave security gaps and cause compatibility issues, so I make sure updates are applied regularly as part of scheduled maintenance. Finally, it can be difficult to keep performance from degrading under heavy traffic. In such cases, monitoring network performance and tuning QoS settings are necessary to prevent bottlenecks.
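Part of this pre-deployment validation can be automated. The sketch below checks a hypothetical port plan (a list of entries with a port name, VLAN ID, and IP address) for invalid VLAN IDs, addresses outside the subnet assigned to their VLAN, and duplicate IP addresses. The plan format is an assumption for illustration, not a vendor configuration format.

```python
import ipaddress
from collections import Counter
from typing import Dict, List


def validate_plan(entries: List[dict], vlan_subnets: Dict[int, str]) -> List[str]:
    """Return human-readable problems found in a planned port configuration."""
    problems = []
    subnets = {vid: ipaddress.ip_network(net) for vid, net in vlan_subnets.items()}

    for e in entries:
        vid = e["vlan"]
        # 802.1Q VLAN IDs 0 and 4095 are reserved, leaving 1-4094 usable.
        if not 1 <= vid <= 4094:
            problems.append(f"{e['port']}: VLAN {vid} is outside 1-4094")
        elif vid not in subnets:
            problems.append(f"{e['port']}: VLAN {vid} has no subnet defined")
        elif ipaddress.ip_address(e["ip"]) not in subnets[vid]:
            problems.append(f"{e['port']}: {e['ip']} is not in {subnets[vid]} (VLAN {vid})")

    # An address that appears more than once in the plan is a conflict.
    for ip, count in Counter(e["ip"] for e in entries).items():
        if count > 1:
            problems.append(f"{ip} is assigned {count} times")
    return problems


# Hypothetical plan with three deliberate mistakes.
plan = [
    {"port": "eth1", "vlan": 10, "ip": "10.10.0.11"},
    {"port": "eth2", "vlan": 10, "ip": "10.10.0.11"},    # duplicate address
    {"port": "eth3", "vlan": 20, "ip": "10.10.0.12"},    # wrong subnet for VLAN 20
    {"port": "eth4", "vlan": 5000, "ip": "10.20.0.5"},   # invalid VLAN ID
]
for problem in validate_plan(plan, {10: "10.10.0.0/24", 20: "10.20.0.0/24"}):
    print(problem)
```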

Best Configuration Practices

To enhance the reliability and efficiency of the configurations, consider the following best practices:

- Documentation and Planning: Plan your approach and prepare all the required documentation before starting any setup; this keeps implementation consistent and prevents errors.

- Updates: Keep all firmware and software updated to the latest stable versions to close security holes and improve compatibility.

- Testing in a Controlled Environment: Try configuration changes in a test environment first so that risks can be identified and fixed before they reach production.

- Monitoring and Optimization: Use monitoring tools to track performance metrics so that anomalies are detected and corrected quickly. Techniques such as load balancing or bandwidth throttling can also be configured to optimize system performance.

Following these practices helps protect system integrity while keeping performance consistently high.
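As an example of the monitoring practice described above, interface utilization is commonly derived from two samples of a switch’s octet counter, such as the 64-bit ifHCInOctets object in the IF-MIB polled over SNMP. The sketch below only does the arithmetic; how the samples are collected, the port name, and the 80% alert threshold are assumptions.

```python
COUNTER64_MODULUS = 2 ** 64  # 64-bit octet counters wrap back to 0 after this


def utilization_pct(octets_t0: int, octets_t1: int, interval_s: float,
                    link_bps: float) -> float:
    """Percent of link capacity used between two octet-counter samples.

    The modulo handles a single counter wrap between the two samples.
    """
    delta_octets = (octets_t1 - octets_t0) % COUNTER64_MODULUS
    return 100.0 * (delta_octets * 8 / interval_s) / link_bps


def check_port(port: str, octets_t0: int, octets_t1: int, interval_s: float,
               link_bps: float, threshold_pct: float = 80.0) -> str:
    """Format a one-line status, flagging ports at or above the threshold."""
    pct = utilization_pct(octets_t0, octets_t1, interval_s, link_bps)
    status = "ALERT" if pct >= threshold_pct else "ok"
    return f"{port}: {pct:.1f}% {status}"


# Hypothetical 10G port polled 30 s apart: 33.75 GB moved = 9 Gb/s = 90%.
print(check_port("Ethernet1/1", 1_000_000_000, 34_750_000_000, 30, 10e9))
```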

How Top-of-Rack Switching Can Improve Network Efficiency

Enhancing Data Transmission and Network Interconnectivity

Top-of-Rack (ToR) switching improves network efficiency by increasing data transfer rates and reducing latency. Placing a switch at the top of each server rack shortens the distance data must travel and speeds up communication between devices. Shorter, rack-local cable runs are also easier to manage, and handling server traffic within the rack reduces the chance of bottlenecks and interruptions. ToR configurations are scalable as well, so the network can absorb higher traffic volumes without major changes to the infrastructure. Together, these advantages make ToR switching a valuable way to advance and optimize network performance.

How Virtualization Plays a Role

Virtualization boosts network efficiency by consolidating multiple virtual network functions onto a single physical server, which reduces hardware and resource consumption. It makes better use of the existing infrastructure, shortens deployment time, reduces downtime, and simplifies network maintenance. Virtualization also adds flexibility by allocating resources dynamically to match changing traffic volumes, which improves the network’s overall responsiveness and scalability.

Managing Multiple Switches

Using multiple switches in a network infrastructure makes traffic easier to manage and the overall system more dependable. Unlike a hub, each switch forwards frames only toward their destination ports, so data moves efficiently between devices and bottlenecks are avoided. Modern network management approaches use Software-Defined Networking (SDN) to control many switches flexibly from a central point, making it possible to automate configuration and traffic flow across the network.

Several reports suggest that deploying multiple switches improves fault tolerance through redundancy. For example, if one switch fails, the network can reroute traffic through other switches, significantly reducing downtime. Multiple switches also enable network segmentation, which improves security by confining sensitive data flows to particular segments and limiting exposure in the event of a breach.
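Rerouting around a failed switch is only possible if the topology still contains an alternative path. The sketch below models a small, hypothetical leaf-spine fabric as an adjacency list and uses breadth-first search to check whether two ToR (leaf) switches can still reach each other after given switches fail. It checks reachability only; it does not model routing protocols or convergence time.

```python
from collections import deque
from typing import AbstractSet, Dict, Set

Topology = Dict[str, Set[str]]  # switch -> directly connected switches


def reachable(topo: Topology, src: str, dst: str,
              failed: AbstractSet[str] = frozenset()) -> bool:
    """Breadth-first search from src to dst that skips failed switches."""
    if src in failed or dst in failed:
        return False
    seen = {src}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return True
        for neighbor in topo.get(node, set()):
            if neighbor not in seen and neighbor not in failed:
                seen.add(neighbor)
                queue.append(neighbor)
    return False


# Hypothetical two-spine fabric in which every leaf (ToR) connects to both spines.
fabric: Topology = {
    "leaf1": {"spine1", "spine2"},
    "leaf2": {"spine1", "spine2"},
    "spine1": {"leaf1", "leaf2"},
    "spine2": {"leaf1", "leaf2"},
}
print(reachable(fabric, "leaf1", "leaf2", failed={"spine1"}))            # True: via spine2
print(reachable(fabric, "leaf1", "leaf2", failed={"spine1", "spine2"}))  # False: no path left
```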

Industry analyses indicate that networks with advanced switch management can approach 99.999% uptime, which matters for mission-critical applications. The Spanning Tree Protocol (STP) also helps by preventing Layer 2 loops: it blocks redundant paths so that frames cannot circulate endlessly and degrade performance. Managing multiple switches through a management system that can control advanced parameters therefore improves the user experience and is essential for large-scale operations.
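Uptime percentages translate into concrete downtime budgets: 99.99% allows roughly 52.6 minutes of downtime per year and 99.999% roughly 5.3 minutes. The worked example below performs that conversion and also shows the textbook estimate for redundant components, A = 1 - (1 - a)^n, which assumes failures are independent (a shared power feed or a common software bug breaks that assumption). The 99.9% per-switch figure is illustrative only.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; ignores leap years


def downtime_minutes_per_year(availability: float) -> float:
    """Expected downtime per year for a given availability (0-1)."""
    return (1 - availability) * MINUTES_PER_YEAR


def parallel_availability(component: float, n: int) -> float:
    """Availability of n redundant components where any one is enough.

    Assumes component failures are independent of each other.
    """
    return 1 - (1 - component) ** n


for a in (0.9999, 0.99999):
    print(f"{a:.3%} uptime -> {downtime_minutes_per_year(a):.1f} min/year of downtime")

# Illustrative: two switches that are each 99.9% available, deployed as a redundant pair.
print(f"redundant pair: {parallel_availability(0.999, 2):.4%}")
```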

Analyzing the Top-of-Rack Switching Ecosystem

Key Players and Their Innovations

Cisco, Arista Networks, and Juniper Networks are currently the major vendors in the Top-of-Rack (ToR) switching ecosystem, and all three are known for dependable, effective solutions for enterprises and data centers. For example, many users of Cisco’s Nexus series value its scalability and advanced features such as virtualization and automation. Arista Networks emphasizes software-defined networking and strong cloud integration in its switching technology. Juniper Networks offers the QFX series, designed to deliver high performance and integrate across multiple network systems. These products reduce latency, increase throughput, and simplify management, which suits the day-to-day operation of modern data centers.

Future Developments in Top-of-Rack Technologies

The future of Top-of-Rack (ToR) technologies is likely to be shaped by a combination of network automation, AI-managed services, and the adoption of 400G Ethernet. As modern data centers grow more complex, automation becomes necessary to reduce operational overhead and keep configurations consistent across large networks. Intent-based networking (IBN) is emerging as a key enabling technology: administrators declare the state they want the network to be in, and automated systems implement it and make the necessary real-time adjustments.
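The core IBN idea of comparing a declared desired state with the observed state and computing the changes needed can be sketched in a few lines. The example below reconciles per-port access VLAN assignments; the port names and data shapes are hypothetical, and a real IBN system would also validate, apply, and verify the changes on the devices.

```python
from typing import Dict, List, Tuple

State = Dict[str, int]  # port -> access VLAN


def plan_changes(desired: State, actual: State) -> List[Tuple[str, str, int]]:
    """Return (action, port, vlan) steps that move the actual state toward the desired one."""
    changes = []
    for port, vlan in sorted(desired.items()):
        if actual.get(port) != vlan:
            changes.append(("set", port, vlan))      # missing or wrong VLAN
    for port, vlan in sorted(actual.items()):
        if port not in desired:
            changes.append(("remove", port, vlan))   # configured but not intended
    return changes


desired = {"eth1": 10, "eth2": 20, "eth3": 30}
actual = {"eth1": 10, "eth2": 99, "eth4": 40}

for step in plan_changes(desired, actual):
    print(step)
```

Once the actual state matches the intent, the plan is empty, so the reconciliation can be rerun safely at any time; that idempotence is what allows an automated system to keep reapplying the declared intent.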

AI and machine learning (ML) are transforming ToR switching through prediction, anomaly detection, and intelligent routing. For example, AI-enhanced diagnostics can reduce downtime and maximize performance by predicting hardware failures or network bottlenecks before they occur. This matters more and more as AI training clusters and high-frequency trading applications increase the demand for low-latency, high-speed connections.
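Production systems use far richer models, but a simple statistical baseline illustrates the anomaly-detection idea: flag a sample that lies more than k standard deviations from the mean of recent history. This z-score rule is a stand-in for the ML techniques mentioned above, and the latency samples and threshold k = 3 are assumptions for illustration.

```python
import statistics
from typing import List


def is_anomalous(history: List[float], sample: float, k: float = 3.0) -> bool:
    """Flag sample if it is more than k standard deviations from the history mean."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return sample != mean
    return abs(sample - mean) / stdev > k


# Hypothetical port-to-port latency samples in microseconds.
baseline = [4.1, 4.3, 4.0, 4.2, 4.4, 4.1, 4.2, 4.3]
print(is_anomalous(baseline, 4.5))   # False: within normal variation
print(is_anomalous(baseline, 12.0))  # True: far outside the baseline
```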

The growing adoption of 400G Ethernet is also driving the evolution of ToR architecture. Data-driven workloads from cloud computing and IoT continue to grow and require higher-bandwidth solutions. Analysts predict strong growth in 400G port adoption over the next five years, reflecting the industry’s shift to more advanced networking standards. Advances in high-speed optical transceivers and cabling technologies are also making high-performance networking more affordable and widespread.

Sustainability is another driver of new ToR architectures. Manufacturers are adopting energy-efficient components and materials, partly to comply with environmental regulations. This aligns with the broader industry goal of lowering carbon footprints while meeting the needs of next-generation infrastructure.

In summary, automation, AI, high-speed Ethernet, and energy-efficient technologies will together produce ToR switches that are smart, agile, and scalable, ready for the demands of future data centers.

Maximizing Value From FS Blog and FS.com Europe

FS Blog and FS.com Europe both provide up-to-date information on emerging products and trends in networking and data center solutions. FS Blog offers professionally written articles, expert opinions, and detailed guides for professionals and businesses, making it easier to choose the right solution. FS.com Europe offers regional access to networking products, along with prompt delivery and support services. Together, these platforms give users essential information, innovative solutions, and professional help for modern network infrastructures.

Frequently Asked Questions (FAQ)

Q: What is Top-of-Rack (ToR) switching and what does it do for network infrastructure?

A: Top-of-Rack (ToR) switching is a network layout in which a switch is installed at the top of each server rack in a data center. Compared with designs in which a single switch serves many racks, ToR switching avoids that shared bottleneck, scales more easily, improves network performance, and simplifies connection management within the rack. It also changes the structure of the network infrastructure: cabling is simpler, airflow is better, and the network can be upgraded without extensive rewiring or investment.

Q: What are the primary benefits of utilizing ToR switching in enterprise environments?

A: In enterprise environments, ToR switching improves network availability by reducing cabling and the number of failure points. Its high-speed connections within the rack and efficient uplinks to the core network also improve reliability. In addition, some modern ToR switches integrate with Software-Defined Networking (SDN), so they are easier to manage and can be programmed to respond to changing network needs.

Q: How does ToR switching integrate with SDN controllers?

A: ToR switches can integrate closely with SDN controllers, which adds flexibility and automates network management tasks. A single SDN controller can manage multiple ToR switches at once, handling automated configuration, traffic engineering, and policy deployment across its domain. This makes the network more responsive and lets resources be reallocated more efficiently as application needs change.

Q: Can ToR switching be implemented in industrial networks?

A: Yes, ToR switching can be adopted in industrial networks. Benefits include segmenting the network for individual business processes, lower latency for critical tasks, and simpler management of Industrial IoT (IIoT) devices. In industrial deployments, switches are often ruggedized for harsh environments and integrated with industrial controller and automation software.

Q: How does ToR switching contribute to advancing efficient R&D in network infrastructure?

A: ToR switching supports efficient R&D in network infrastructure because its flexible, scalable architecture speeds up the deployment of new networks. ToR-based setups are easy to deploy and use for testing new protocols, software-defined networking concepts, and emerging high-speed networking technologies. This flexibility accelerates the development and adoption of new network solutions.

Q: What impact does ToR switch failure have on a data center network?

A: When a ToR switch fails, only the servers connected to that switch are affected, which limits the impact on the rest of the network. To mitigate even this, many data centers use redundancy strategies such as deploying multiple ToR switches per rack or designing the network with alternative paths. These measures improve network resiliency and help keep operations running even when a single switch fails.

Q: What role do ToR switches play in supporting high-speed connections, for example, Gigabit Ethernet?

A: ToR switches are designed to support high-speed connections, including Gigabit Ethernet as well as 10, 40, and 100 Gigabit Ethernet. They typically provide high-speed uplinks to the core network and low-latency, high-bandwidth connections within the rack. These capabilities help ToR switching meet the requirements of modern data center applications and services.

Q: Where can I learn about Top-of-Rack switching and keep up with news on the subject?

A: To learn about Top-of-Rack switching and stay current with developments in the field, you can follow specialists such as Orhan Ergun, read featured articles on networking websites, and read publications that specialize in data center infrastructure. Attending networking conferences and webinars can also deepen your understanding of current trends in ToR switching and network infrastructure.

